Long-Term Future Fund: August 2019 grant recommendations

By Habryka @ 2019-10-03T18:46

Note: The Q4 deadline for applications to the Long-Term Future Fund is Friday 11th October. Apply here.

We opened up an application for grant requests earlier this year, and it was open for about one month. This post contains the list of grant recipients for Q3 2019, as well as some of the reasoning behind the grants. Most of the funding for these grants has already been distributed to the recipients.

In the writeups below, we explain the purpose of each grant and summarize the reasoning behind each recommendation. Each summary is written by the fund manager who was most excited about recommending the relevant grant (with a few exceptions that we've noted below). The writeups vary considerably in length, depending on how much time each fund member had available to explain their reasoning.

When we’ve shared excerpts from an application, those excerpts may have been lightly edited for context or clarity.

Grant Recipients

Grants Made By the Long-Term Future Fund

Each grant recipient is followed by the size of the grant and their one-sentence description of their project. All of these grants have been made.

- Samuel Hilton, on behalf of the HIPE team ($60,000): Placing a staff member within the government, to support civil servants to do the most good they can.

- Stag Lynn ($23,000): To spend the next year leveling up various technical skills with the goal of becoming more impactful in AI safety.

- Roam Research ($10,000): Workflowy, but with much more power to organize your thoughts and collaborate with others.

- Alexander Gietelink Oldenziel ($30,000): Independent AI Safety thinking, doing research on aspects of self-reference using techniques from type theory, topos theory and category theory more generally.

- Alexander Siegenfeld ($20,000): Characterizing the properties and constraints of complex systems and their external interactions.

- Sören M. ($36,982): Additional funding for an AI strategy PhD at Oxford / FHI to improve my research productivity.

- AI Safety Camp ($41,000): A research experience program for prospective AI safety researchers.

- Miranda Dixon-Luinenburg ($13,500): Writing EA-themed fiction that addresses X-risk topics.

- David Manheim ($30,000): Multi-model approach to corporate and state actors relevant to existential risk mitigation.

- Joar Skalse ($10,000): Upskilling in ML in order to be able to do productive AI safety research sooner than otherwise.

- Chris Chambers ($36,635): Combat publication bias in science by promoting and supporting the Registered Reports journal format.

- Jess Whittlestone ($75,080): Research on the links between short- and long-term AI policy while skilling up in technical ML.

- Lynette Bye ($23,000): Productivity coaching for effective altruists to increase their impact.

Total distributed: $439,197
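As a quick arithmetic check, the short Python sketch below sums the thirteen amounts exactly as they are listed above. On those figures, the itemized grants come to $409,197, which is $30,000 less than the stated total; the source does not say whether this reflects a typo in one figure or an amount not itemized in the list.

```python
# Grant amounts (USD) exactly as itemized under "Grants Made By the Long-Term Future Fund".
grants = {
    "Samuel Hilton / HIPE": 60_000,
    "Stag Lynn": 23_000,
    "Roam Research": 10_000,
    "Alexander Gietelink Oldenziel": 30_000,
    "Alexander Siegenfeld": 20_000,
    "Sören M.": 36_982,
    "AI Safety Camp": 41_000,
    "Miranda Dixon-Luinenburg": 13_500,
    "David Manheim": 30_000,
    "Joar Skalse": 10_000,
    "Chris Chambers": 36_635,
    "Jess Whittlestone": 75_080,
    "Lynette Bye": 23_000,
}

listed_total = sum(grants.values())  # sum of the itemized amounts
stated_total = 439_197               # the "Total distributed" figure as given

print(f"{len(grants)} grants, itemized sum: ${listed_total:,}")
print(f"Stated total minus itemized sum: ${stated_total - listed_total:,}")
```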

Other Recommendations

The following people and organizations were applicants who got alternative sources of funding, or decided to work on a different project. The Long-Term Future Fund recommended grants to them, but did not end up funding them.

The following recommendation still has a writeup below:

- Center for Applied Rationality ($150,000): Help promising people to reason more effectively and find high-impact work, such as reducing x-risk.

We did not write up the following recommendations:

- Jake Coble, who requested $10,000 to conduct research alongside Simon Beard of CSER. This grant request came with an early deadline, so we made the recommendation earlier in the grant cycle. However, after our recommendation went out, Jake found a different project he preferred, and no longer required funding.

- We recommended another individual for a grant, but they wound up accepting funding from another source. (They requested that we not share their name; we would have shared this information had they received funding from us.)

Writeups by Helen Toner

Samuel Hilton, on behalf of the HIPE team ($60,000)

Placing a staff member within the government, to support civil servants to do the most good they can.

This grant supports HIPE (https://hipe.org.uk), a UK-based organization that helps civil servants to have high-impact careers. HIPE’s primary activities are researching how to have a positive impact in the UK government; disseminating their findings via workshops, blog posts, etc.; and providing one-on-one support to interested individuals.

HIPE has so far been entirely volunteer-run. This grant funds part of the cost of a full-time staff member for two years, plus some office and travel costs.

Our reasoning for making this grant is based on our impression that HIPE has already been able to gain some traction as a volunteer organization, and on the fact that they now have the opportunity to place a full-time staff member within the Cabinet Office. We see this both as a promising opportunity in its own right, and also as a positive signal about the engagement HIPE has been able to create so far. The fact that the Cabinet Office is willing to provide desk space and cover part of the overhead cost for the staff member suggests that HIPE is engaging successfully with its core audiences.

HIPE does not yet have robust ways of tracking its impact, but they expressed strong interest in improving their impact tracking over time. We would hope to see a more fleshed-out impact evaluation if we were asked to renew this grant in the future.

I’ll add that I (Helen) personally see promise in the idea of services that offer career discussion, coaching, and mentoring in more specialized settings. (Other fund members may agree with this, but it was not part of our discussion when deciding whether to make this grant, so I’m not sure.)

Writeups by Alex Zhu

Stag Lynn ($23,000)

To spend the next year leveling up various technical skills with the goal of becoming more impactful in AI safety.

Stag’s current intention is to spend the next year improving his skills in a variety of areas (e.g. programming, theoretical neuroscience, and game theory) with the goal of contributing to AI safety research, meeting relevant people in the x-risk community, and helping out in EA/rationality-related contexts wherever he can (e.g. at rationality summer camps like SPARC and ESPR).

Two projects he may pursue during the year:

- Working to implement certificates of impact in the EA/X-risk community, in the hope of encouraging coordination between funders with different values and increasing transparency around the contributions of different people to impactful projects.

- Working as an unpaid personal assistant to someone in EA who is sufficiently busy for this form of assistance to be useful, and sufficiently productive for the assistance to be valuable.

I recommended funding Stag because I think he is smart, productive, and altruistic, has a track record of doing useful work, and will contribute more usefully to reducing existential risk by directly developing his capabilities and embedding himself in the EA community than he would by finishing his undergraduate degree or working a full-time job. While I’m not yet clear on what projects he will pursue, I think it’s likely that the end result will be very valuable — projects like impact certificates require substantial work from someone with technical and executional skills, and Stag seems to me to fit the bill.

More on Stag’s background: In high school, Stag had top finishes in various Latvian and European Olympiads, including a gold medal in the 2015 Latvian Olympiad in Mathematics. Stag has also previously taken the initiative to work on EA causes. For example, he joined two other people in Latvia in attempting to create the Latvian chapter of Effective Altruism (which reached the point of creating a Latvian-language website), and he has volunteered to take on major responsibilities in future iterations of the European Summer Program in Rationality (which introduces promising high-school students to effective altruism).

Potential conflict of interest: at the time of making the grant, Stag was living with me and helping me with various odd jobs, as part of his plan to meet people in the EA community and help out where he could. This arrangement lasted for about 1.5 months. To compensate for this potential issue, I’ve included notes on Stag from Oliver Habryka, another fund manager.

Oliver Habryka’s comments on Stag Lynn

I’ve interacted with Stag in the past and have broadly positive impressions of him, in particular his capacity for independent strategic thinking.

Stag has achieved a high level of success in Latvian and Galois Mathematical Olympiads. I generally think that success in these competitions is one of the best predictors we have of a person’s future performance on making intellectual progress on core issues in AI safety. See also my comments and discussion on the grant to Misha Yagudin last round.

Stag has also contributed significantly to improving ESPR and SPARC, both of which introduce talented pre-college students to core ideas in EA and AI safety. In particular, he’s helped the programs find and select strong participants, while suggesting curriculum changes that gave them more opportunities to think independently about important issues. This gives me a positive impression of Stag’s ability to contribute to other projects in the space. (I also consider ESPR and SPARC to be among the most cost-effective ways to get more excellent people interested in working on topics of relevance to the long-term future, and take this as another signal of Stag’s talent at selecting and/or improving projects.)

Roam Research ($10,000)

Workflowy, but with much more power to organize your thoughts and collaborate with others.

Roam is a web application which automates the Zettelkasten method, a note-taking / document-drafting process based on physical index cards. While it is difficult to start using the system, those who do often find it extremely helpful, including a researcher at MIRI who claims that the method doubled his research productivity.

On my inside view, if Roam succeeds, an experienced user of the note-taking app Workflowy will get at least as much value switching to Roam as they got from using Workflowy in the first place. (Many EAs, myself included, see Workflowy as an integral part of our intellectual process, and I think Roam might become even more integral than Workflowy. See also Sarah Constantin’s review of Roam, which describes Roam as being potentially as “profound a mental prosthetic as hypertext”, and her more recent endorsement of Roam.)

Over the course of the last year, I’ve had intermittent conversations with Conor White-Sullivan, Roam’s CEO, about the app. I started out in a position of skepticism: I doubted that Roam would ever have active users, let alone succeed at its stated mission. After a recent update call with Conor about his LTF Fund application, I was encouraged enough by Roam’s most recent progress, and sufficiently convinced of the upside if it succeeded, that I decided to recommend a grant to Roam.

Since then, Roam has developed enough as a product that I’ve personally switched from Workflowy to Roam and now recommend Roam to my friends. Roam’s progress on its product, combined with its growing base of active users, has led me to feel significantly more optimistic about Roam succeeding at its mission.

(This funding will support Roam’s general operating costs, including expenses for Conor, one employee, and several contractors.)

Potential conflict of interest: Conor is a friend of mine, and I was once his housemate for a few months.

Alexander Gietelink Oldenziel ($30,000)

Independent AI Safety thinking, doing research on aspects of self-reference using techniques from type theory, topos theory and category theory more generally.

In our previous round of grants, we funded MIRI as an organization: see our April report for a detailed explanation of why we chose to support their work. I think Alexander’s research directions could lead to significant progress on MIRI’s research agenda; in fact, MIRI was sufficiently impressed by his work that they offered him an internship. I have also spoken to him in some depth, and was impressed both by his research taste and clarity of thought.

After the internship ends, I think it will be valuable for Alexander to have additional funding to dig deeper into these topics; I expect this grant to support roughly 1.5 years of research. During this time, he will have regular contact with researchers at MIRI, reporting on his research progress and receiving feedback.

Alexander Siegenfeld ($20,000)

Characterizing the properties and constraints of complex systems and their external interactions.

Alexander is a 5th-year graduate student in physics at MIT, and he wants to conduct independent deconfusion research for AI safety. His goal is to get a better conceptual understanding of multi-level world models by coming up with better formalisms for analyzing complex systems at differing levels of scale, building off of the work of Yaneer Bar-Yam. (Yaneer is Alexander’s advisor, and the president of the New England Complex Systems Institute.)

I decided to recommend funding to Alexander because I think his research directions are promising, and because I was personally impressed by his technical abilities and his clarity of thought. Tsvi Benson-Tilsen, a MIRI researcher, was also impressed enough by Alexander to recommend that the Fund support him. Alexander plans to publish a paper on his research; it will be evaluated by researchers at MIRI, helping him decide how best to pursue further work in this area.

Potential conflict of interest: Alexander and I have been friends since our undergraduate years at MIT.

Writeups by Oliver Habryka

I have a sense that funders in EA, usually due to time constraints, tend to give little feedback to organizations they fund (or decide not to fund). In my writeups below, I tried to be as transparent as possible in explaining the reasons why I came to believe that each grant was a good idea, my greatest uncertainties and/or concerns with each grant, and some background models I use to evaluate grants. (I hope this last item will help others better understand my future decisions in this space.)

I think that there exist more publicly defensible (or easier to understand) arguments for some of the grants that I recommended. However, I tried to explain the actual models that drove my decisions for these grants, which are often hard to summarize in a few paragraphs. I apologize in advance that some of the explanations below are probably difficult to understand.

Thoughts on grant selection and grant incentives

Some higher-level points on many of the grants below, as well as many grants from last round:

For almost every grant we make, I have a lot of opinions and thoughts about how the applicant(s) could achieve their aims better. I also have a lot of ideas for projects that I would prefer to fund over the grants we are actually making.

However, in the current structure of the LTFF, I primarily have the ability to select potential grantees from an established pool, rather than encouraging the creation of new projects. Alongside my time constraints, this means that I have a very limited ability to contribute to the projects with my own thoughts and models.

Additionally, I spend a lot of time thinking independently about these areas, and have a broad view of “ideal projects that could be made to exist.” This means that, for many of the grants I recommend, I don't think the projects are very good on all the relevant dimensions; I can see how they fall short of my “ideal” projects. More often, the projects I fund are among the only available projects in a reference class I believe to be important, and I recommend them because I want projects of that type to receive more resources (and because they pass a moderate bar for quality).

Some examples:

- Our grant to the Kocherga community space club last round. I see Kocherga as the only promising project trying to build infrastructure that helps people pursue projects related to x-risk and rationality in Russia.

- I recommended this round’s grant to Miranda partly because I think Miranda's plans are good and I think her past work in this domain and others is of high quality, but also because she is the only person who applied with a project in a domain that seems promising and neglected (using fiction to communicate otherwise hard-to-explain ideas relating to x-risk and how to work on difficult problems).

- In the November 2018 grant round, I recommended a grant to Orpheus Lummis to run an AI safety unconference in Montreal. This is because I think he had a great idea, and would create a lot of value even if he ran the events only moderately well. This isn’t the same as believing Orpheus has excellent skills in the relevant domain; I can imagine other applicants who I’d have been more excited to fund, had they applied.

I am, overall, still very excited about the grants below, and I think they are a much better use of resources than what I think of as the most common counterfactuals to donating to the LTFF (e.g. donating to the largest organizations in the space, or donating based on time-limited personal research).

However, related to the points I made above, I will have many criticisms of almost all the projects that receive funding from us. I think that my criticisms are valid, but readers shouldn't interpret them to mean that I have a negative impression of the grants we are making — which are strong despite their flaws. Aggregating my individual (and frequently critical) recommendations will not give readers an accurate impression of my overall (highly positive) view of the grant round.

(If I ever come to think that the pool of valuable grants has dried up, I will say so in a high-level note like this one.)

I can imagine that in the future I might want to invest more resources into writing up lists of potential projects that I would be excited about. However, it is also not clear to me that I want people to optimize too much for what I am excited about, and I think the current balance of "things that I think are exciting, and that people feel internally motivated to do and generated their own plans for" seems pretty decent.

To follow up the above with a high-level assessment, I am slightly less excited about this round’s grants than I am about last round’s, and I’d estimate (very roughly) that this round is about 25% less cost-effective than the previous round.

Acknowledgements

For both this round and the last round, I wrote the writeups in collaboration with Ben Pace, who works with me on LessWrong and the Alignment Forum. After an extensive discussion about the grants and the Fund's reasoning for them, we split the grants between us and independently wrote initial drafts. We then iterated on those drafts until they accurately described my thinking about them and the relevant domains.

I am also grateful for Aaron Gertler’s help with editing and refining these writeups, which has substantially increased their clarity.

Sören M. ($36,982)

Additional funding to improve my research productivity during an AI strategy PhD program at Oxford / FHI.

I'm looking for additional funding to supplement my £15k/year PhD stipend for 3-4 years from September 2019. I am hoping to roughly double this. My PhD is at Oxford in machine learning, but co-supervised by Allan Dafoe from FHI so that I can focus on AI strategy. We will have multiple joint meetings each month, and I will have a desk at FHI.

The purpose is to increase my productivity and happiness. Given my expected financial situation, I currently have to make compromises on e.g. Ubers, Soylent, eating out with colleagues, accommodation, quality and waiting times for health care, spending time comparing prices, travel durations and stress, and eating less healthily.

I expect that more financial security would increase my own productivity and the effectiveness of the time invested by my supervisors.

I think that when FHI or other organizations in that reference class have trouble doing certain things due to logistical obstacles, we should usually step in and fill those gaps (e.g. see Jacob L.’s grant from last round). My sense is that FHI has trouble with providing funding in situations like this (due to budgetary constraints imposed by Oxford University).

I’ve interacted with Sören in the past (during my work at CEA), and generally have positive impressions of him in a variety of domains, like his basic thinking about AI Alignment, and his general competence from running projects like the EA Newsletter.

I have a lot of trust in the judgment of Nick Bostrom and several other researchers at FHI. I am not currently very excited about the work at GovAI (the team that Allan Dafoe leads), but still have enough trust in many of the relevant decision makers to think that it is very likely that Sören should be supported in his work.

In general, I think many of the salaries for people working on existential risk are low enough that they have to make major tradeoffs in order to deal with the resulting financial constraints. I think that increasing salaries in situations like this is a good idea (though I am hesitant about increasing salaries for other types of jobs, for a variety of reasons I won’t go into here, but am happy to expand on).

This funding should last for about 2 years of Sören’s time at Oxford.

AI Safety Camp ($41,000)

A research experience program for prospective AI safety researchers.

We want to organize the 4th AI Safety Camp (AISC) - a research retreat and program for prospective AI safety researchers. Compared to past iterations, we plan to change the format to include a 3 to 4-day project generation period and team formation workshop, followed by a several-week period of online team collaboration on concrete research questions, a 6 to 7-day intensive research retreat, and ongoing mentoring after the camp. The target capacity is 25-30 participants, with projects that range from technical AI safety (majority) to policy and strategy research. More information about past camps is at https://aisafetycamp.com/

[...]

The early-career entry stage seems to be a less well-covered part of the talent pipeline, especially in Europe. Individual mentoring is costly from the standpoint of expert advisors (esp. compared to guided team work), while internships and e.g. MSFP have limited capacity and are US-centric. After the camp, we advise and encourage participants on future career steps and help connect them to other organizations, or direct them to further individual work and learning if they are pursuing an academic track.

Overviews of previous research projects from the first 2 camps can be found here:

- First camp: http://bit.ly/2FFFcK1

- Second camp: http://bit.ly/2KKjPLB

Projects from AISC3 are still in progress and there is no public summary.

To evaluate the camp, we send out an evaluation form directly after the camp has concluded and then informally follow the career decisions, publications, and other AI safety/EA involvement of the participants. We plan to conduct a larger survey of past AISC participants later in 2019 to evaluate our mid-term impact. We expect to get a more comprehensive picture of the impact, but it is difficult to evaluate counterfactuals and indirect effects (e.g. networking effects). The (anecdotal) positive examples we attribute to past camps include accelerating several people's entry into the field, and research outputs that include 2 conference papers, several software projects, and about 10 blog posts.

The main direct costs of the camp are the opportunity costs of participants, organizers and advisors. There are also downside risks associated with personal conflicts at multi-day retreats and discouraging capable people from the field if the camp is run poorly. We actively work to prevent this by providing both on-site and external anonymous contact points, as well as actively attending to participant well-being, including during the online phases.

This grant is for the AI Safety Camp, to which we made a grant in the last round. Of the grants I recommended this round, I am most uncertain about this one. The primary reason is that I have not received much evidence about the performance of either of the last two camps [1], and I assign at least some probability that the camps are not facilitating very much good work. (This is mostly because I have low expectations for the quality of most work of this kind and haven’t looked closely enough at the camp to override these — not because I have positive evidence that they produce low-quality work.)

My biggest concern is that the camps do not provide a sufficient level of feedback and mentorship for the attendees. When I try to predict how well I’d expect a research retreat like the AI Safety Camp to go, much of the impact hinges on putting attendees into contact with more experienced researchers and having a good mentoring setup. Some of the problems I have with the output from the AI Safety Camp seem like they could be explained by a lack of mentorship.

From the evidence I observe on their website, I see that the attendees of the second camp all produced an artifact of their research (e.g. an academic writeup or code repository). I think this is a very positive sign. That said, it doesn’t look like any alignment researchers have commented on any of this work (this may in part have been because most of it was presented in formats that require a lot of time to engage with, such as GitHub repositories), so I’m not sure the output actually led the participants to get any feedback on their research directions, which is one of the most important things for people new to the field.

After some followup discussion with the organizers, I heard about changes to the upcoming camp (the target of this grant) that address some of the above concerns (independent of my feedback). In particular, the camp is being renamed to “AI Safety Research Program”, and is now split into two parts — a topic selection workshop and a research retreat, with experienced AI Alignment researchers attending the workshop. The format change seems likely to be a good idea, and makes me more optimistic about this grant.

I generally think hackathons and retreats for researchers can be very valuable, allowing for focused thinking in a new environment. I think the AI Safety Camp is held at a relatively low cost, in a part of the world (Europe) where there exist few other opportunities for potential new researchers to spend time thinking about these topics, and some promising people have attended. I hope that the camps are going well, but I will not fund another one without spending significantly more time investigating the program.

Footnotes

[1] After signing off on this grant, I found out that, due to overlap between the organizers of the events, some feedback I got about this camp was actually feedback about the Human Aligned AI Summer School, which means that I had even less information than I thought. In April I said I wanted to talk with the organizers before renewing this grant, and I expected to have at least six months between applications from them, but we received another application this round and I ended up not having time for that conversation.

Miranda Dixon-Luinenburg ($13,500)

Writing EA-themed fiction that addresses X-risk topics.

I want to spend three months evaluating my ability to produce an original work that explores existential risk, rationality, EA, and related themes such as coordination between people with different beliefs and backgrounds, handling burnout, planning on long timescales, growth mindset, etc. I predict that completing a high-quality novel of this type would take ~12 months, so 3 months is just an initial test.

In 3 months, I would hope to produce a detailed outline of an original work plus several completed chapters. Simultaneously, I would be evaluating whether writing full-time is a good fit for me in terms of motivation and personal wellbeing.

[...]

I have spent the last 2 years writing an EA-themed fanfiction of The Last Herald-Mage trilogy by Mercedes Lackey (online at https://archiveofourown.org/series/936480). In this period I have completed 9 “books” of the series, totalling 1.2M words (average of 60K words/month), mostly while I was also working full-time. (I am currently writing the final arc, and when I finish, hope to create a shorter abridged/edited version with a more solid beginning and better pacing overall.)

In the writing process, I researched key background topics, in particular AI safety work (I read a number of Arbital articles and most of this MIRI paper on decision theory: https://arxiv.org/pdf/1710.05060v1.pdf), as well as ethics, mental health, organizational best practices, medieval history and economics, etc. I have accumulated a very dedicated group of around 10 beta readers, all EAs, who read early drafts of each section and give feedback on how well it addresses various topics, which gives me more confidence that I am portraying these concepts accurately.

One natural decomposition of whether this grant is a good idea is to first ask whether writing fiction of this type is valuable, then whether Miranda is capable of actually creating that type of fiction, and last whether funding Miranda will make a significant difference in the amount/quality of her fiction.

I think that many people reading this will be surprised or confused about this grant. I feel fairly confident that grants of this type are well worth considering, and I am interested in funding more projects like this in the future, so I’ve tried my best to summarize my reasoning. I do think there are some good arguments for why we should be hesitant to do so (partly summarized by the section below that lists things that I think fiction doesn’t do as well as non-fiction), so while I think that grants like this are quite important, and have the potential to do a significant amount of good, I can imagine changing my mind about this in the future.

The track record of fiction

In a general sense, I think that fiction has a pretty strong track record of both being successful at conveying important ideas, and being a good attractor of talent and other resources. I also think that good fiction is often necessary to establish shared norms and shared language.

Here are some examples of communities and institutions that I think used fiction very centrally in their function. Note that after the first example, I am making no claim that the effect was good; I’m just establishing the potential effect size.

- Harry Potter and the Methods of Rationality (HPMOR) was instrumental in the growth and development of both the EA and Rationality communities. It is very likely the single most important recruitment mechanism for productive AI alignment researchers, and has also drawn many other people to work on the broader aims of the EA and Rationality communities.

- Fiction was a core part of the strategy of the neoliberal movement; fiction writers were among the groups Hayek referred to as “secondhand dealers in ideas.” Ayn Rand is an example of someone whose fiction played a large role both in the rise of neoliberalism and in its eventual spread.

- Almost every major religion, culture and nation-state is built on shared myths and stories, usually fictional (though the stories are often held to be true by the groups in question, making this data point a bit more confusing).

- Francis Bacon’s (unfinished) utopian novel “The New Atlantis” is often cited as the primary inspiration for the founding of the Royal Society, which may have been the single institution with the greatest influence on the progress of the scientific revolution.

On a more conceptual level, I think fiction tends to be particularly good at achieving the following aims (compared to non-fiction writing):

- Teaching low-level cognitive patterns by displaying characters who follow those patterns, allowing the reader to learn from very concrete examples set in a fictional world. (Compare Aesop’s Fables to a nonfiction book of moral precepts: it can be much easier to remember good habits when we attach them to characters.)

- Establishing norms, by having stories that display the consequences of not following certain norms, and the rewards of following them in the right way

- Establishing a common language, by not only explaining concepts, but also showing concepts as they are used, and how they are brought up in conversational context

- Establishing common goals, by creating concrete utopian visions of possible futures that motivate people to work towards them together

- Reaching a broader audience, since we naturally find stories more exciting than abstract descriptions of concepts

(I wrote in more detail about how this works for HPMOR in the last grant round.)

In contrast, here are some things that fiction is generally worse at (though a lot of these depend on context; since fiction often contains embedded non-fiction explanations, some of these can be overcome):

- Carefully evaluating ideas, in particular when evaluating them requires empirical data. There is a norm against showing graphs or tables in fiction books, making any explanation that rests on that kind of data difficult to access in fiction.

- Conveying precise technical definitions

- Engaging in dialogue with other writers and researchers

- Dealing with topics in which readers tend to come to better conclusions by mentally distancing themselves from the problem at hand, instead of engaging with concrete visceral examples (I think some ethical topics like the trolley problem qualify here, as well as problems that require mathematical concepts that don’t neatly correspond to easy real-world examples)

Overall, I think current writing about existential risk, rationality, and effective altruism skews too much towards non-fiction, so I’m excited about experimenting with funding fiction writing.

Miranda’s writing

The second question is whether I trust Miranda to actually be able to write fiction that leverages these opportunities and provides value. This is why I think Miranda can do a good job:

- Her current fiction project is read by a few people whose taste I trust, and many of them describe having developed valuable skills or insights as a result (for example, better skills for crisis management, a better conception of moral philosophy, an improved moral compass, and some insights about decision theory)

- She wrote frequently on LessWrong and her blog for a few years, producing content of consistently high quality that, while not fictional, often displayed some of the same useful properties as fiction writing.

- I’ve seen her execute a large variety of difficult projects outside of her writing, which makes me a lot more optimistic about things like her ability to motivate herself on this project and to excel in the non-writing aspects of the work (e.g. promoting her fiction to audiences beyond the EA and rationality communities)

- She worked in operations at CEA and received strong reviews from her coworkers

- She helped CFAR run the operations for SPARC in two consecutive years and performed well as a logistics volunteer for 11 of their other workshops

- I’ve seen her organize various events and provide useful help with logistics and general problem-solving on a large number of occasions

My two biggest concerns are:

- Miranda losing motivation to work on this project, because writing fiction with a specific goal requires a significantly different motivation than doing it for personal enjoyment

- The fiction being well-written and engaging, but failing to actually help people better understand the important issues it tries to cover.

I like the fact that this grant is for an exploratory 3 months rather than a longer period of time; this allows Miranda to pivot if it doesn’t work out, rather than being tied to a project that isn’t going well.

The counterfactual value of funding

It would be reasonable to ask whether a grant is really necessary, given that Miranda has produced a huge amount of fiction in the last two years without receiving funding explicitly dedicated to that. I have two thoughts here:

- I generally think that we should avoid declining to pay people just because they’d be willing to do valuable work for free. It seems good to reward people for work even if this doesn’t make much of a difference in the quality/consistency of the work, because I expect this promise of reward to help people build long-term motivation and encourage exploration.

- To explain this a bit more, I think this grant will help other people build motivation towards pursuing similar projects in the future, by setting a precedent for potential funding in this space. For example, I think the possibility of funding (and recognition) was also a motivator for Miranda in starting to work on this project.

- I expect this grant to have a significant effect on Miranda’s productivity, because I think that there is often a qualitative difference between work someone produces in their spare time and work that someone can focus full-time on. In particular, I expect this grant to cause Miranda’s work to improve in the dimensions that she doesn’t naturally find very stimulating, which I expect will include editing, restructuring, and other forms of “polish”.

David Manheim ($30,000)

Multi-model approach to corporate and state actors relevant to existential risk mitigation.

Work for 2-3 months on continuing to build out a multi-model approach to understanding international relations and multi-stakeholder dynamics as they relate to risks of strong(er) AI systems development, based on and extending similar work on biological weapons risks done on behalf of FHI's Biorisk group and supporting Open Philanthropy Project planning.

This work is likely to help policy and decision analysis for effective altruism related to the deeply uncertain and complex issues in international relations and long term planning that need to be considered for many existential risk mitigation activities. While the project is focused on understanding actors and motivations in the short term, the decisions being supported are exactly those that are critical for existential risk mitigation, with long term implications for the future.

I feel a lot of skepticism toward much of the work done in the academic study of international relations. Judging from my models of political influence and its effects on the quality of intellectual contributions, and my models of research fields with little ability to perform experiments, I have high priors that work in international relations is of significantly lower quality than in most scientific fields. However, I have engaged relatively little with actual research on the topic of international relations (outside of unusual scholars like Nick Bostrom) and so am hesitant in my judgement here.

I also have a fair bit of worry around biorisk. I haven’t really had the opportunity to engage with a good case for it, and neither have many of the people I would trust most in this space, in large part due to secrecy concerns from people who work on it (more on that below). Due to this, I am worried about information cascades. (An information cascade is a situation where people primarily share what they believe but not why, and because people update on each other's beliefs you end up with a lot of people all believing the same thing precisely because everyone else does.)

I think it is valuable to work on biorisk, but this view is mostly based on individual conversations that are hard to summarize, and I feel uncomfortable with my level of understanding of possible interventions, or even just conceptual frameworks I could use to approach the problem. I don’t know how most people who work in this space came to decide it was important, and those I’ve spoken to have usually been reluctant to share details in conversation (e.g. about specific discoveries they think created risk, or types of arguments that convinced them to focus on biorisk over other threats).

I’m broadly supportive of work done at places like FHI and by the people at OpenPhil who care about x-risks, so I am in favor of funding their work (e.g. Sören’s grant above). But I don’t feel as though I can defer to the people working in this domain on the object level when there is so much secrecy around their epistemic process, because I and others cannot evaluate their reasoning.

However, I am excited about this grant, because I have a good amount of trust in David’s judgment. To be more specific, he has a track record of identifying important ideas and institutions and then working on/with them. Some concrete examples include:

- Wrote up a paper on Goodhart’s Law with Scott Garrabrant (after seeing Scott’s very terse post on it)

- Works with the biorisk teams at FHI and OpenPhil

- Completed his PhD in public policy and decision theory at the RAND Corporation, which is an unusually innovative institution (e.g. this study)

- Writes interesting comments and blog posts on the internet (e.g. LessWrong)

- Has offered mentoring in his fields of expertise to other people working or preparing to work on projects in the x-risk space; I’ve heard positive feedback from his mentees

Another major factor for me is the degree to which David shares his thinking openly and transparently on the internet, and participates in public discourse, so that other people interested in these topics can engage with his ideas. (He’s also a superforecaster, which I think is predictive of broadly good judgment.) If David didn’t have this track record of public discourse, I likely wouldn’t be recommending this grant, and if he suddenly stopped participating, I’d be fairly hesitant to recommend such a grant in the future.

As I said, I’m not excited about the specific project he is proposing, but have trust in his sense of which projects might be good to work on, and I have emphasized to him that I think he should feel comfortable working on the projects he thinks are best. I strongly prefer a world where David has the freedom to work on the projects he judges to be most valuable, compared to the world where he has to take unrelated jobs (e.g. teaching at university).

Joar Skalse ($10,000)

Upskilling in ML in order to be able to do productive AI safety research sooner than otherwise.

I am requesting grant money to upskill in machine learning (ML).

Background: I am an undergraduate student in Computer Science and Philosophy at Oxford University, about to start the 4th year of a 4-year degree. I plan to do research in AI safety after I graduate, as I deem this to be the most promising way of having a significant positive impact on the long-term future.

[...]

What I’d like to do:

I would like to improve my skills in ML by reading literature and research, replicating research papers, building ML-based systems, and so on.

To do this effectively, I need access to the compute that is required to train large models and run lengthy reinforcement learning experiments and similar.

It would also likely be very beneficial if I could live in Oxford during the vacations, as I would then be in an environment in which it is easier to be productive. It would also make it easier for me to speak with the researchers there, and give me access to the facilities of the university (including libraries, etc.).

It would also be useful to be able to attend conferences and similar events.

Joar was one of the co-authors on the Mesa-Optimisers paper, which I found surprisingly useful and clearly written, especially considering that its authors had relatively little background in alignment research or research in general. I think it is probably the second most important piece of writing on AI alignment that came out in the last 12 months, after the Embedded Agency sequence. My current best guess is that this type of conceptual clarification / deconfusion is the most important type of research in AI alignment, and the type of work I’m most interested in funding. While I don’t know exactly how Joar contributed to the paper, my sense is that all the authors put in a significant effort (bar Scott Garrabrant, who played a supervising role).

This grant is for projects during and in between terms at Oxford. I want to support Joar producing more of this kind of research, which I expect this grant to help with. He’s also been writing further thoughts online (example), which I think has many positive effects (personally and as externalities).

My brief thoughts on the paper (nontechnical):

- The paper introduced me to a lot of terminology that I’ve continued to use over the past few months (which is not true for most terminology introduced in this space)

- It helped me deconfuse my thinking on a bunch of concrete problems (in particular on the question of whether things like AlphaGo can be dangerous when “scaled up”)

- I’ve seen multiple other researchers and thinkers I respect refer to it positively

- In addition to being published as a paper, it was written up as a series of blogposts in a way that made it a lot more accessible

More of my thoughts on the paper (technical):

Note: If you haven’t read the paper, or you don’t have other background in the subject, this section will likely be unclear. It’s not essential to the case for the grant, but I wanted to share it in case people with the requisite background are interested in more details about the research.

I was surprised by how helpful the conceptual work in the paper was: helping me think about where the optimization was happening in a system like AlphaGo Zero improved my understanding of that system and how to connect it to other systems that do optimization in the world. The primary formalism in the paper was clarifying rather than obscuring (and the ratio of insight to formalism was very high; see my addendum below for more thoughts on that).

Once the basic concepts were in place, the paper clarified different basic tools that would encourage optimization to happen in either the base optimizer or the mesa optimizer (e.g. constraining and expanding the space/time offered to the base or mesa optimizers has interesting effects), as well as the types of alignment / pseudo-alignment / internalizing of the base objective; all of this helped me think about the issue very clearly. It largely used basic technical language I already knew, and put it together in ways that would’ve taken me many months to achieve on my own: a very helpful conceptual piece of work.

Note on the writeups for Chris, Jess, and Lynette

The following three grants were more exciting to one or more other fund managers than they were to me (Oliver). I think that for all three, if it had just been me on the grant committee, we might not have made them. However, I had more resources available to invest in these writeups, so I ended up summarizing my view on them instead of someone else on the fund doing so. As such, they are probably less representative of the reasons we made these grants than the writeups above.

In the course of thinking through these grants, I formed (and wrote out below) more detailed, explicit models of the topics. Although these models were not decisive in the Fund’s decision to make the grants, I think they are fairly predictive of my future grant recommendations.

Chris Chambers ($36,635)

Note: Application sent in by Jacob Hilton.

Combat publication bias in science by promoting and supporting the Registered Reports journal format

I'm suggesting a grant to fund a teaching buyout for Professor Chris Chambers, an academic at the University of Cardiff working to promote and support Registered Reports. This funding opportunity was originally identified and researched by Hauke Hillebrandt, who published a full analysis here. In brief, a Registered Report is a format for journal articles where peer review and acceptance decisions happen before data is collected, so that the results are much less susceptible to publication bias. The grant would free Chris of teaching duties so that he can work full-time on trying to get Registered Reports to become part of mainstream science, which includes outreach to journal editors and supporting them through the process of adopting the format for their journal. More details of Chris's plans can be found here.

I think the main reason for funding this is from a worldview diversification perspective: I would expect it to broadly improve the efficiency of scientific research by improving the communication of negative results, and to enable people to make better-informed use of scientific research by reducing publication bias. I would expect these effects to be primarily within fields where empirical tests tend to be useful but not always definitive, such as clinical trials (one of Chris's focus areas), which would have knock-on effects on health.

From an X-risk perspective, the key question to answer seems to be which technologies differentially benefit from this grant. I do not have a strong opinion on this, but to quote Brian Wang from a Facebook thread:

In terms of [...] bio-risk, my initial thoughts are that reproducibility concerns in biology are strongest when it comes to biomedicine, a field that can be broadly viewed as defense-enabling. By contrast, I'm not sure that reproducibility concerns hinder the more fundamental, offense-enabling developments in biology all that much (e.g., the falling costs of gene synthesis, the discovery of CRISPR).

As for why this particular intervention strikes me as a cost-effective way to improve science, it is shovel-ready, it may be the sort of thing that traditional funding sources would miss, it has been carefully vetted by Hauke, and I thought that Chris seemed thoughtful and intelligent from his videoed talk.

The Let’s Fund report linked in the application played a major role in my assessment of the grant, and I probably would not have been comfortable recommending this grant without access to that report.

Thoughts on Registered Reports

The replication crisis in psychology, and the broad spread of “career science,” have made it quite clear to me that the methodological foundations of at least psychology, and possibly of the broader life sciences, are producing a very large volume of false and likely unreproducible claims.

This is in large part caused by problematic incentives for individual scientists to engage in highly biased reporting and statistically dubious practices.

I think preregistration has the potential to fix a small but significant part of this problem, primarily by reducing file-drawer effects. To borrow an explanation from the Let’s Fund report (lightly edited for clarity):

[Pre-registration] was introduced to address two problems: publication bias and analytical flexibility (in particular outcome switching in the case of clinical medicine).

Publication bias, also known as the file drawer problem, refers to the fact that many more studies are conducted than published. Studies that obtain positive and novel results are more likely to be published than studies that obtain negative results or report replications of prior results. The consequence is that the published literature indicates stronger evidence for findings than exists in reality.

Outcome switching refers to the possibility of changing the outcomes of interest in the study depending on the observed results. A researcher may include ten variables that could be considered outcomes of the research, and, once the results are known, intentionally or unintentionally select the subset of outcomes that show statistically significant results as the outcomes of interest. The consequence is an increase in the likelihood that reported results are spurious by leveraging chance, while negative evidence gets ignored.

This is one of several related research practices that can inflate spurious findings when analysis decisions are made with knowledge of the observed data, such as selection of models, exclusion rules and covariates. Such data-contingent analysis decisions constitute what has become known as p-hacking, and pre-registration can protect against all of these.

[...]

It also effectively blinds the researcher to the outcome because the data are not collected yet and the outcomes are not yet known. This way the researcher’s unconscious biases cannot influence the analysis strategy.
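The two mechanisms in the quoted passage can be made concrete with a small simulation. This is my own illustrative sketch, not something from the Let’s Fund report, and every parameter in it (number of outcomes, significance threshold, number of studies, noise level) is hypothetical. It assumes each outcome is truly null, so its p-value is uniformly distributed, and that outcomes are independent; real outcomes within one study are usually correlated, which would shrink the inflation somewhat.

```python
import random
import statistics

# Hypothetical parameters, chosen only for illustration.
ALPHA = 0.05       # conventional significance threshold
Z_CRITICAL = 1.96  # two-sided 5% cutoff; below, only significantly *positive* results get published


def familywise_false_positive_rate(k_outcomes: int, alpha: float = ALPHA) -> float:
    """Chance that at least one of k independent, truly null outcomes reaches p < alpha."""
    return 1 - (1 - alpha) ** k_outcomes


def simulate_outcome_switching(k_outcomes: int, n_studies: int = 20_000,
                               alpha: float = ALPHA, seed: int = 0) -> float:
    """Monte Carlo version of outcome switching.

    Under the null each p-value is Uniform(0, 1). A study 'finds an effect'
    if the researcher reports whichever outcome has the smallest p-value
    and that p-value is below alpha.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        best_p = min(rng.random() for _ in range(k_outcomes))
        if best_p < alpha:
            hits += 1
    return hits / n_studies


def simulate_file_drawer(n_studies: int = 20_000, true_effect: float = 0.0,
                         standard_error: float = 1.0, seed: int = 0):
    """Publication bias: every study estimates the same true effect with normal noise.

    Only estimates that are significantly positive get published; the rest
    stay in the file drawer. Returns (mean of all estimates, mean of
    published estimates, number published).
    """
    rng = random.Random(seed)
    estimates = [rng.gauss(true_effect, standard_error) for _ in range(n_studies)]
    published = [e for e in estimates if e / standard_error > Z_CRITICAL]
    return statistics.mean(estimates), statistics.mean(published), len(published)


if __name__ == "__main__":
    print(f"Analytic false-positive rate, 10 outcomes: {familywise_false_positive_rate(10):.3f}")
    print(f"Simulated false-positive rate, 10 outcomes: {simulate_outcome_switching(10):.3f}")
    all_mean, published_mean, n_published = simulate_file_drawer()
    print(f"Mean of all estimates: {all_mean:.3f}")
    print(f"Mean of {n_published} published estimates: {published_mean:.3f}")
```

With ten independent null outcomes, the chance that at least one clears p < 0.05 is 1 - 0.95^10, roughly 40%, rather than the nominal 5%. In the file-drawer simulation the true effect is zero, yet the published estimates average well above 2 standard errors, which is the "stronger evidence for findings than exists in reality" that the report describes.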

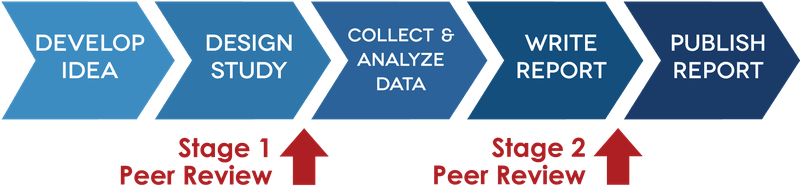

“Registered reports” refers to a specific protocol that journals are encouraged to adopt, which integrates preregistration into the journal acceptance process. Illustrated by this picture (borrowed from the Let’s Fund report):

Of the many ways to implement preregistration practices, I don’t think the one Chambers proposes is ideal, and I can see some flaws with it, but I still think that the quality of clinical science (and potentially other fields) will significantly improve if more journals adopt the registered reports protocol. (Please keep this in mind as you read my concerns in the next section.)

The importance of bandwidth constraints for journals

Chambers has the explicit goal of making all clinical trials require the use of registered reports. That outcome seems potentially quite harmful, and possibly worse than the current state of clinical science. (However, since that current state is very far from “universal registered reports,” I am not very worried about this grant contributing to that scenario.)

The Let’s Fund report covers the benefits of preregistration pretty well, so I won’t go into much detail here. Instead, I will mention some of my specific concerns with the protocol that Chambers is trying to promote.

From the registered reports website:

Manuscripts that pass peer review will be issued an in principle acceptance (IPA), indicating that the article will be published pending successful completion of the study according to the exact methods and analytic procedures outlined, as well as a defensible and evidence-bound interpretation of the results.

This seems unlikely to be the best course of action. I don’t think that the most widely-read journals should only publish replications. The key reason is that many scientific journals are solving a bandwidth constraint: sharing papers that are worth reading, not merely papers that say true things, to help researchers keep up to date with new findings in their field. A math journal could have papers for every true mathematical statement, including trivial ones, but it instead needs to focus on true statements that are useful to signal-boost to the mathematics community. (Related concepts are the tradeoff between bias and variance in machine learning, or between accuracy and calibration in forecasting.)

Ultimately, from a value of information perspective, it is totally possible for a study to only be interesting if it finds a positive result, and to be uninteresting when analyzed pre-publication from the perspective of the editor. It seems better to encourage pre-publication, but still take into account the information value of a paper’s experimental results, even if this doesn’t fully prevent publication bias.

To give a concrete (and highly simplified) example, imagine a world where you are trying to find an effective treatment for a disease. You don’t have great theory in this space, so you basically have to test 100 plausible treatments. On their own, none of these have a high likelihood of being effective, but you expect that at least one of them will work reasonably well.

Currently, you would preregister those trials (as is required for clinical trials), and then start performing the studies one by one. Each failure provides relatively little information (since the prior probability was low anyway), so you are unlikely to be able to publish it in a prestigious journal, but you can probably still publish it somewhere. Not many people would hear about it, but it would be findable if someone is looking specifically for evidence about the specific disease you are trying to treat, or the treatment that you tried out. By contrast, a successful treatment is highly valuable information that will likely get published in a journal with a lot of readers, causing lots of people to hear about the potential new treatment.

In a world with mandatory registered reports, none of these studies will be published in a high-readership journal, since journals will be forced to make a decision before they know the outcome of a treatment. Because all 100 studies are equally unpromising, none are likely to pass the high bar of such a journal, and they’ll wind up in obscure publications (if they are published at all) [1]. Thus, even if one of them finds a successful result, few people will hear about it. High-readership journals exist in large part to spread news about valuable results in a limited bandwidth environment; this no longer happens in scenarios of this kind.
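The arithmetic behind this example can be sketched in a few lines. All numbers here are hypothetical and mine, not drawn from any real trial data: each of the 100 treatments is assumed to have an independent 2% chance of working, a positive result is assumed to be worth 100 (arbitrary) units of reader attention and a negative result 1 unit, and the high-readership journal is assumed to accept only papers worth at least 10 units. Under results-blind acceptance the journal must judge each study by its expected value before the outcome is known; under conventional review it judges the realized result.

```python
# Hypothetical parameters for the 100-treatment example; none come from real data.
N_TREATMENTS = 100
P_SUCCESS = 0.02          # prior chance that any single treatment works
VALUE_POSITIVE = 100.0    # arbitrary "worth reading" units for a successful trial
VALUE_NEGATIVE = 1.0      # ... and for a failed one
JOURNAL_THRESHOLD = 10.0  # minimum value for a slot in the high-readership journal


def p_at_least_one_success(n: int = N_TREATMENTS, p: float = P_SUCCESS) -> float:
    """Probability that at least one of n independent treatments works."""
    return 1 - (1 - p) ** n


def ex_ante_value(p: float = P_SUCCESS) -> float:
    """Expected value of a study judged before its outcome is known."""
    return p * VALUE_POSITIVE + (1 - p) * VALUE_NEGATIVE


def results_blind_accepts(outcomes: list[bool]) -> int:
    """Registered-reports-style selection: every study looks identical ex ante,
    so either all of them clear the bar or none do, whatever they later find."""
    return len(outcomes) if ex_ante_value() >= JOURNAL_THRESHOLD else 0


def results_aware_accepts(outcomes: list[bool]) -> int:
    """Conventional selection: the journal sees each realized result."""
    return sum(
        1 for worked in outcomes
        if (VALUE_POSITIVE if worked else VALUE_NEGATIVE) >= JOURNAL_THRESHOLD
    )


if __name__ == "__main__":
    # One illustrative world in which exactly one treatment turns out to work.
    outcomes = [False] * (N_TREATMENTS - 1) + [True]
    print(f"P(at least one works): {p_at_least_one_success():.3f}")
    print(f"Ex-ante value per study: {ex_ante_value():.2f}")
    print(f"Accepted, results-blind: {results_blind_accepts(outcomes)}")
    print(f"Accepted, results-aware: {results_aware_accepts(outcomes)}")
```

Under these assumptions there is roughly an 87% chance that at least one treatment works, yet each study is worth only about 2.98 units ex ante, so the results-blind journal accepts none of them while the results-aware journal publishes the one success. Different hypothetical numbers shift the figures, but the gap persists whenever a study's ex-ante value falls below the journal's bar while a positive result would clear it.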

Because of dynamics like this, I think it is very unlikely that any major journals will ever switch towards only publishing registered report-based studies, even within clinical trials, since no journal would want to pass up the opportunity to publish a study that has the potential to revolutionize the field.

Importance of selecting for clarity

Here is the full set of criteria by which papers are evaluated in Stage 2 of the registered reports process:

1. Whether the data are able to test the authors’ proposed hypotheses by satisfying the approved outcome-neutral conditions (such as quality checks or positive controls)

2. Whether the Introduction, rationale and stated hypotheses are the same as the approved Stage 1 submission (required)

3. Whether the authors adhered precisely to the registered experimental procedures

4. Whether any unregistered post hoc analyses added by the authors are justified, methodologically sound, and informative

5. Whether the authors’ conclusions are justified given the data

The above list is comprehensive, and does not include any mention of the clarity of the authors’ writing, the quality/rigor of the explanation provided by the paper’s methodology, or the implications of the paper’s findings on underlying theory. (All of these are very important to how journals currently evaluate papers.) This means that journals can only filter for those characteristics in the first stage of the registered reports process, when large parts of the paper haven’t yet been written. As a result, large parts of the paper basically have no selection applied to them for conceptual clarity, as well as thoughtful analysis of implications for future theory, likely resulting in those qualities getting worse.

I think the goal of registered reports is to split research into two halves, published as two separate papers: one that is empirical, and another that is purely theoretical, which takes the results of the first paper as given and explores their consequences. We already see this split a good amount in physics, where there is a pretty significant divide between experimental and theoretical physics, and the latter rarely performs experiments. I don’t know whether encouraging this split in a given field is a net improvement, since I generally think that a lot of good science comes from combining the gathering of good empirical data with careful analysis and explanation. I am particularly worried that the analysis of results in papers published via registered reports will be of low quality, which encourages the spread of bad explanations and misconceptions that can cause a lot of damage (though some of that is definitely offset by preregistration reducing the degree to which scientists can fit hypotheses post hoc). The costs here seem related to Chris Olah’s article on research debt.

Again, I think both of these problems are unlikely to become serious issues, because at most I can imagine getting to a world where something between 10% and 30% of top journal publications in a given field have gone through registered reports-based preregistration. I would be deeply surprised if there weren’t alternative outlets for papers that do try to combine the gathering of empirical data with high-quality explanations and analysis.

Failures due to bureaucracy

I should also note that, although clinical science is not something I have spent large amounts of time thinking about, I am quite concerned about adding more red tape and logistical hurdles to jump through when registering clinical trials. I have high uncertainty about the effect of registered reports on the costs of doing small-scale clinical experiments, but it seems more likely than not that they will lengthen the review process and add methodological constraints.

(There is also a chance that it will reduce these burdens by giving scientists feedback earlier in the process and letting them be more certain of the value of running a particular study. However, this effect seems slightly weaker to me than the additional costs, though I am very uncertain about this.)

In the current scientific environment, running even a simple clinical study may require millions of dollars of overhead (a related example is detailed in Scott Alexander’s “My IRB nightmare”). I believe this barrier is a substantial drag on progress in medical science. In this context, I think that requiring even more mandatory documentation, and adding even more upfront costs, seems very costly. (Though again, it seems highly unlikely for the registered reports format to ever become mandatory on a large scale, and giving more researchers the option to publish a study via the registered reports protocol, depending on their local tradeoffs, seems likely net-positive.)

To summarize these three points:

- If journals have to commit to publishing studies, it’s not obvious to me that this is good, given that they would have to do so without access to important information (e.g. whether a surprising result was found) and only a limited number of slots for publishing papers.

- It seems quite important for journals to be able to select papers based on the clarity of their explanations, both for ease of communication and for conceptual refinement.

- Excessive red tape in clinical research seems like one of the main problems with medical science today, so adding more is worrying, though the sign of the registered reports protocol's effect on this is a bit ambiguous

Differential technological progress

Let’s Fund covers differential technological progress concerns in their writeup. Key quote:

One might worry that funding meta-research indiscriminately speeds up all research, including research which carries a lot of risks. However, for the above reasons, we believe that meta-research improves predominantly social science and applied clinical science (“p-value science”) and so has a strong differential technological development element, that hopefully makes society wiser before more risks from technology emerge through innovation. However, there are some reproducibility concerns in harder sciences such as basic biological research and high energy physics that might be sped up by meta-research and thus carry risks from emerging technologies[110].

My sense is that further progress in sociology and psychology seems net positive from a global catastrophic risk reduction perspective. The case for clinical science seems a bit weaker, but still positive.

In general, I am more excited about this grant in worlds in which global catastrophes are less immediate and less likely than my usual models suggest, and I’m thinking of this grant in some sense as a hedging bet, in case we live in one of those worlds.

Overall, a reasonable summary of my position on this grant would be "I think preregistration helps, but is probably not really attacking the core issues in science. I think this grant is good, because I think it actually makes preregistration a possibility in a large number of journals, though I disagree with Chris Chambers on whether it would be good for all clinical trials to require preregistration, which I think would be quite bad. On the margin, I support his efforts, but if I ever come to change my mind about this, it’s likely for one or more of the above reasons."

Footnotes

[1] The journal could also publish a random subset, though at scale that gives rise to the same dynamics, so I’ll ignore that case. It could also batch a large number of the experiments until the expected value of information is above the relevant threshold, though that significantly increases costs.

Jess Whittlestone ($75,080)

Note: Funding from this grant will go to the Leverhulme Centre for the Future of Intelligence, which will fund Jess in turn. The LTF Fund is not replacing funding that CFI would have supplied instead; without this grant, Jess would need to pursue grants from sources outside CFI.

Research on the links between short- and long-term AI policy while skilling up in technical ML.

I’m applying for funding to cover my salary for a year as a postdoc at the Leverhulme CFI, enabling me to do two things:

-- Research the links between short- and long-term AI policy. My plan is to start broad: thinking about how to approach, frame and prioritise work on ‘short-term’ issues from a long-term perspective, and then focusing in on a more specific issue. I envision two main outputs (papers/reports): (1) reframing various aspects of ‘short-term’ AI policy from a long-term perspective (e.g. highlighting ways that ‘short-term’ issues could have long-term consequences, and ways of working on AI policy today most likely to have a long-run impact); (2) tackling a specific issue in ‘short-term’ AI policy with possible long-term consequences (tbd, but an example might be the possible impact of microtargeting on democracy and epistemic security as AI capabilities advance).

-- Skill up in technical ML by taking courses from the Cambridge ML masters.

Most work on long-term impacts of AI focuses on issues arising in the future from AGI. But issues arising in the short term may have long-term consequences: either by directly leading to extreme scenarios (e.g. automated surveillance leading to authoritarianism), or by undermining our capability to deal with other threats (e.g. disinformation undermining collective decision-making). Policy work today will also shape how AI gets developed, deployed and governed, and what issues will arise in the future. We’re at a particularly good time to influence the focus of AI policy, with many countries developing AI strategies and new research centres emerging.

There’s very little rigorous thinking about the best way to do short-term AI policy from a long-term perspective. My aim is to change that, and in doing so improve the quality of discourse in current AI policy. I would start with a focus on influencing UK AI policy, as I have experience and a strong network here (e.g. the CDEI and Office for AI). Since DeepMind is in the UK, I think it is worth at least some people focusing on UK institutions. I would also ensure this research was broadly relevant, by collaborating with groups working on US AI policy (e.g. FHI, CSET and OpenAI).

I’m also asking for a time buyout to skill up in ML (~30%). This would improve my own ability to do high-quality research, by helping me to think clearly about how issues might evolve as capabilities advance, and how technical and policy approaches can best combine to influence the future impacts of AI.

The main work I know of Jess’s is her early involvement in 80,000 Hours. In the first 1-2 years of their existence, she wrote dozens of articles for them, and contributed to their culture and development. Since then I’ve seen her make positive contributions to a number of projects: she has helped in some form with every EA Global conference I’ve organized (two in 2015 and one in 2016), and she’s continued to write publicly in places like the EA Forum, the EA Handbook, and news sites like Quartz and Vox. This background means that members of the fund have had many opportunities to judge her output. My sense is that this is the main reason that the other members of the fund were excited about this grant: they generally trust Jess’s judgment and value her experience (while being more hesitant about CFI’s work).

There are three things I looked into for this grant writeup: Jess’s policy research output, Jess’s blog, and the institutional quality of Leverhulme CFI. The section on Leverhulme CFI became longer than the section on Jess and was mostly unrelated to her work, so I’ve taken it out and included it as an addendum.

Impressions of Policy Papers

First is her policy research. I read the following papers, both linked from her blog:

- The Role and Limits of Principles in AI Ethics: Towards a Focus on Tensions by Jess Whittlestone, Rune Nyrup, Anna Alexandrova and Stephen Cave

- Reducing Malicious Use of Synthetic Media Research: Considerations and Potential Release Practices for Machine Learning by Aviv Ovadya and Jess Whittlestone

On the first paper, about focusing on tensions: the paper said that many “principles of AI ethics” that people publicly talk about in industry, non-profit, government and academia are substantively meaningless, because they don’t come with the sort of concrete advice that actually tells you how to apply them, and specifically, how to trade them off against each other. The part of the paper I found most interesting was a set of four paragraphs pointing to specific tensions between principles of AI ethics. They were:

- Using data to improve the quality and efficiency of services vs. respecting the privacy and autonomy of individuals

- Using algorithms to make decisions and predictions more accurate vs. ensuring fair and equal treatment

- Reaping the benefits of increased personalization in the digital sphere vs. enhancing solidarity and citizenship

- Using automation to make people’s lives more convenient and empowered vs. promoting self-actualization and dignity

My sense is that while there is some good public discussion about AI and policy (e.g. OpenAI’s work on release practices seems quite positive to me), much conversation that brands itself as ‘ethics’ is often not motivated by the desire to ensure this novel technology improves society in accordance with our deepest values, but instead by factors like reputation, PR and politics.

There are many notions, like Peter Thiel’s “At its core, artificial intelligence is a military technology” or the common question “Who should control the AI?”, which don’t fully account for the details of how machine learning and artificial intelligence systems work, or for the ways in which we need to think about them very differently from other technologies; in particular, that we will need to build new concepts and abstractions to talk about them. I think this is also true of most conversations around making AI fair, inclusive, democratic, safe, beneficial, respectful of privacy, etc.; they seldom consider how these values can be grounded in modern ML systems or future AGI systems. My sense is that much of the best conversation around AI is about how to correctly conceptualize it. This is something that (I was surprised to find) Henry Kissinger’s article on AI did well; he spends most of the essay trying to figure out which abstractions to use, as opposed to using already existing ones.

The reason I liked that bit of Jess’s paper is that I felt the paper used mainstream language around AI ethics (in a way that could appeal to a broad audience), but then:

- Correctly pointed out that AI is a sufficiently novel technology that we’re going to have to rethink what these values actually mean, because the technology causes a host of fundamentally novel ways for them to come into tension

- Provided concrete examples of these tensions

In the context of a public conversation that I feel is often substantially motivated by politics and PR rather than truth, seeing someone point clearly at important conceptual problems felt like a breath of fresh air.

That said, given all of the political incentives around public discussion of AI and ethics, I don’t know how papers like this can improve the conversation. For example, companies are worried about losing in the court of Twitter’s public opinion, and also are worried about things like governmental regulation, which are strong forces pushing them to primarily take popular but ineffectual steps to be more "ethical". I’m not saying papers like this can’t improve this situation in principle, only that I don’t personally feel like I have much of a clue about how to do it or how to evaluate whether someone else is doing it well, in advance of their having successfully done it.

Personally, I feel much more able to evaluate the conceptual work of figuring out how to think about AI and its strategic implications (two standout examples are this paper by Bostrom and this LessWrong post by Christiano), rather than work on revising popular views about AI. I’d be excited to see Jess continue with the conceptual side of her work, but if she instead primarily aims to influence public conversation (the other goal of that paper), I personally don’t think I’ll be able to evaluate and recommend grants on that basis.

From the second paper I read sections 3 and 4, which list many safety and security practices in the fields of biosafety, computer information security, and institutional review boards (IRBs), then outline variables for analysing release practices in ML. I found it useful, even if it was shallow (i.e. it did not go into much depth in the fields it covered). Overall, the paper felt like a fine first step in thinking about this space.

In both papers, I was concerned with the level of inspiration drawn from bioethics, which seems to me to be a terribly broken field (cf. Scott Alexander talking about his IRB nightmare or medicine’s ‘culture of life’). My understanding is that bioethics coordinated a successful power grab (cf. OpenPhil’s writeup) from the field of medicine, creating hundreds of dysfunctional and impractical ethics boards that have formed a highly adversarial relationship with doctors (whose practical involvement with patients often makes them better than ethicists at making tradeoffs between treatment, pain/suffering, and dignity). The formation of an “AI ethics” community that has this sort of adversarial, unhealthy relationship with machine learning researchers would be an incredible catastrophe.

Overall, it seems like Jess is still at the beginning of her research career (she’s only been in this field for ~1.5 years). And while she’s spent a lot of effort on areas that don’t personally excite me, both of her papers include interesting ideas, and I’m curious to see her future work.

Impressions of Other Writing

Jess also writes a blog, and this is one of the main things that makes me excited about this grant. On the topic of AI, she wrote three posts (1, 2, 3), all of which made good points on at least one important issue. I also thought the post on confirmation bias and her PhD was quite thoughtful. It correctly identified a lot of problems with discussions of confirmation bias in psychology, and came to a much more nuanced view of the trade-off between being open-minded versus committing to your plans and beliefs. Overall, the posts show independent thinking, written with the intent to actually convey understanding to the reader, and they do a good job of it. They share the vibe I associate with much of Julia Galef’s work: they’re noticing true observations and conceptual clarifications, successfully moving the conversation forward one or two steps, and avoiding political conflict.

I do have some significant concerns with the work above, including the positive portrayal of bioethics and the absence of any criticism toward the AAAI safety conference talks, many of which seem to me to have major flaws.

While I’m not excited about Leverhulme CFI’s work (see the addendum for details), I think it will be good for Jess to have free rein to follow her own research initiatives within CFI. And while she might be able to obtain funding elsewhere, this alternative seems considerably worse, as I expect other funding options would substantially constrain the types of research she’d be able to conduct.

Lynette Bye ($23,000)

Productivity coaching for effective altruists to increase their impact.

I plan to continue coaching high-impact EAs on productivity. I expect to have 600+ sessions with about 100 clients over the next year, focusing on people working in AI safety and EA orgs. I’ve worked with people at FHI, Open Phil, CEA, MIRI, CHAI, DeepMind, the Forethought Foundation, and ACE, and will probably continue to do so. Half of my current clients (and a third of all clients I’ve worked with) are people at these orgs. I aim to increase my clients’ output by improving prioritization and increasing focused work time.

I would use the funding to: offer a subsidized rate to people at EA orgs (e.g. between $10 and $50 instead of $125 per call), offer free coaching for select coachees referred by 80,000 Hours, and hire contractors to help me create materials to scale coaching.

You can view my impact evaluation (linked below) for how I’m measuring my impact so far.

(Lynette’s public self-evaluation is here.)

I generally think it's pretty hard to do "productivity coaching" as your primary activity, especially when you are young, due to a lack of work experience. This means I have a high bar for deciding that someone should go full-time into the "help other people be more productive" business.