Therapy without a therapist: Why unguided self-help might be a better intervention than guided

By huw @ 2024-04-08T06:01 (+75)

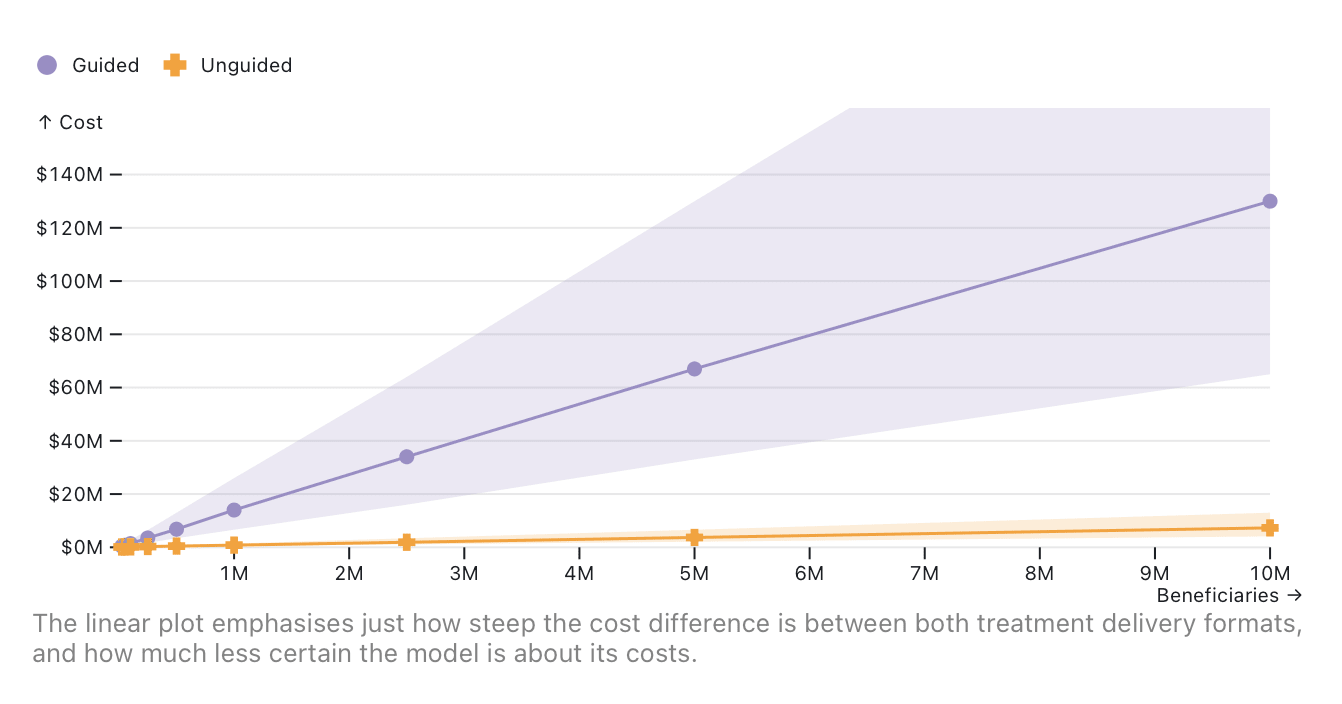

Correction: I identified some errors in my cost model that caused me to slightly overstate the difference between the cost of guided and unguided self-help. I've corrected a lot of this post (you'll see strikethroughs where I have), but not the charts. See the comment for more details.

Summary

- Guided self-help involves self-directed learning of a psychotherapy, plus regular, short contact with an advisor (e.g. Kaya Guides, AbleTo). Unguided self-help removes the advisor (e.g. Headspace, Waking Up, stress relief apps).

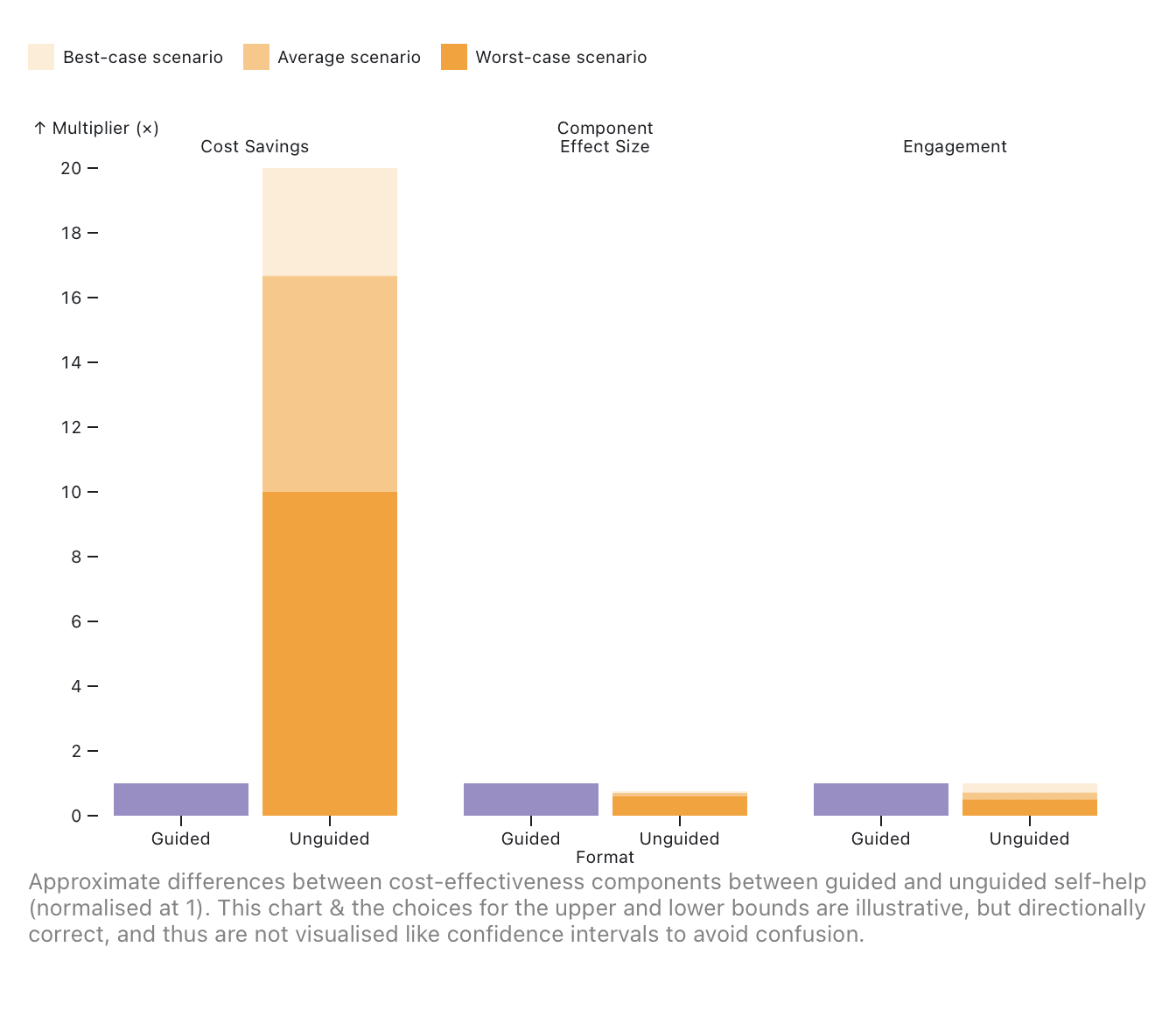

- It's probably 6× (2.5–9×) more cost-effective

- Behavioural activation is a great fit for it (that's where you think about things that make you happy and make structured plans to do them)

- It’s less risky, mostly because it scales better

How much worse is therapy without a therapist?

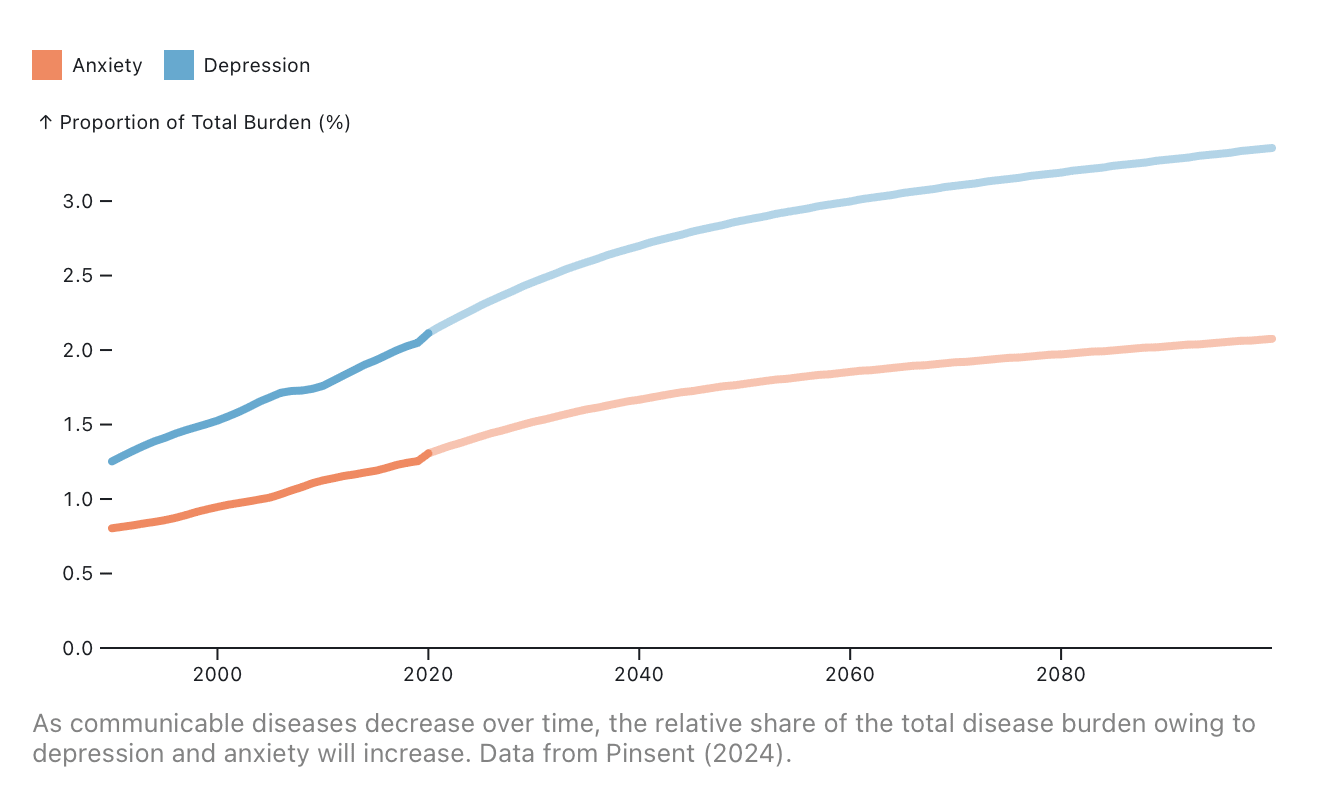

A lot of work has already been done in EA to emphasise mental health as a cause area. It seems important, tractable, neglected relative to other interventions, and is at least in the conversation for cost-effectiveness[1]. And unlike many other health issues, we can only expect it to get worse over time[2].

Guided self-help is an intervention which incorporates self-directed learning of a psychotherapy, and brief, regular contact with a lightly-trained healthcare worker. It can be deployed in highly cost-sensitive environments, and flagship programmes have been developed with the WHO and deployed across Europe, Asia, and Africa[3][4][5][6]. Off the back of their own research (easily the most comprehensive cost-effectiveness analysis)[7], Charity Entrepreneurship recently incubated Kaya Guides, who are cost-effectively scaling the same programme in India[8].

But the same report also notes that removing the guided component might be even more cost-effective. This is called unguided (a.k.a. pure) self-help, and it’s usually defined as any self-learned psychotherapy (regardless of whether that psychotherapy is evidence-based). The early examples involved reading books, such as the Overcoming series, but modern interventions are usually apps, such as Headspace, Waking Up, Clarity CBT Journal, Thought Saver, UpLift or just versions of Step-By-Step and Kaya Guides without the guides. This report’s definition is deliberately broad to keep in line with the cited literature, but when talking about potential interventions I’m generally thinking about apps based on evidence-based techniques.

This report is purely comparative; it's only valuable if you already believe that guided self-help might be a promising, cost-effective intervention. I'll discuss dollar-for-dollar cost-effectiveness, make some arguments for behavioural activation as a uniquely well-suited psychotherapy to apply, and finish up by arguing that since it scales so much better, it's much less risky to try. (Note: that last part is a little self-serving, since I'm applying to AIM with this idea.)

Finally, I'll limit the analysis to depression since it's the most burdensome mental health problem, but many of the included studies also find similar results for anxiety.

Unguided self-help is probably 6× (2.5–9×) more cost-effective than guided

Let's start with cost-effectiveness. Here's my chain of reasoning:

- It’s about 70% as effective against depression

  - The effect shouldn’t decay differently

    - If therapy effects decay exponentially, we’d see little to no difference between guided and unguided at follow-up

    - We find no evidence of a difference at 6–12 months follow-up (but there isn’t much data)

- Beneficiaries use it no less than half as much

- It's at least 10–15× cheaper, and might scale sub-linearly

  - In Charity Entrepreneurship's CEAs, removing guidance reduces costs by ~93% at scale

  - Using Kaya Guides' estimates, removing guidance reduces costs by ~90% at scale

  - An effective intervention should be able to scale development costs sub-linearly

  - Marginal costs might scale to zero due to organic recruitment

In the worst case, the effect size might be around half, with half the engagement, and only 10% of the cost. This would still generate a 2.5× improvement. And in the best case, we might achieve 9× (with 75% engagement and 7% of the cost).
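The arithmetic behind these bounds can be sketched as below. It assumes, as the estimates above implicitly do, that cost-effectiveness scales multiplicatively with the relative effect size and relative engagement, and inversely with relative cost; the function names are hypothetical. The best-case effect-size ratio isn't stated explicitly, so the sketch backs out the value that the 9× figure implies rather than asserting one.

```python
def relative_cost_effectiveness(effect_ratio: float, engagement_ratio: float, cost_ratio: float) -> float:
    """Unguided vs guided cost-effectiveness multiplier (hypothetical helper).

    Each argument is unguided's value as a fraction of guided's. Assumes
    impact = effect size x engagement, and cost-effectiveness = impact / cost.
    """
    return effect_ratio * engagement_ratio / cost_ratio


def implied_effect_ratio(multiplier: float, engagement_ratio: float, cost_ratio: float) -> float:
    """Invert the formula above: the effect-size ratio a given multiplier implies."""
    return multiplier * cost_ratio / engagement_ratio


# Worst case from the text: half the effect, half the engagement, 10% of the cost.
worst = relative_cost_effectiveness(0.5, 0.5, 0.10)
print(f"worst case: {worst:.1f}x")

# Best case from the text: 9x with 75% engagement and 7% of the cost.
best_effect = implied_effect_ratio(9, 0.75, 0.07)
print(f"effect-size ratio implied by the 9x best case: {best_effect:.2f}")
```

With these inputs the worst case reproduces the 2.5× figure, and the 9× best case implies unguided self-help retaining roughly 84% of guided's effect size.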

I don't think you need to believe in my exact estimates to agree with me—the lowest bar here would probably be to agree that it's unlikely that the differences between the two delivery forms are an order of magnitude or more, and as we'll see, there isn't strong evidence for this.

It’s about 70% as effective against depression

We should compare against waitlist controls, despite possible bias

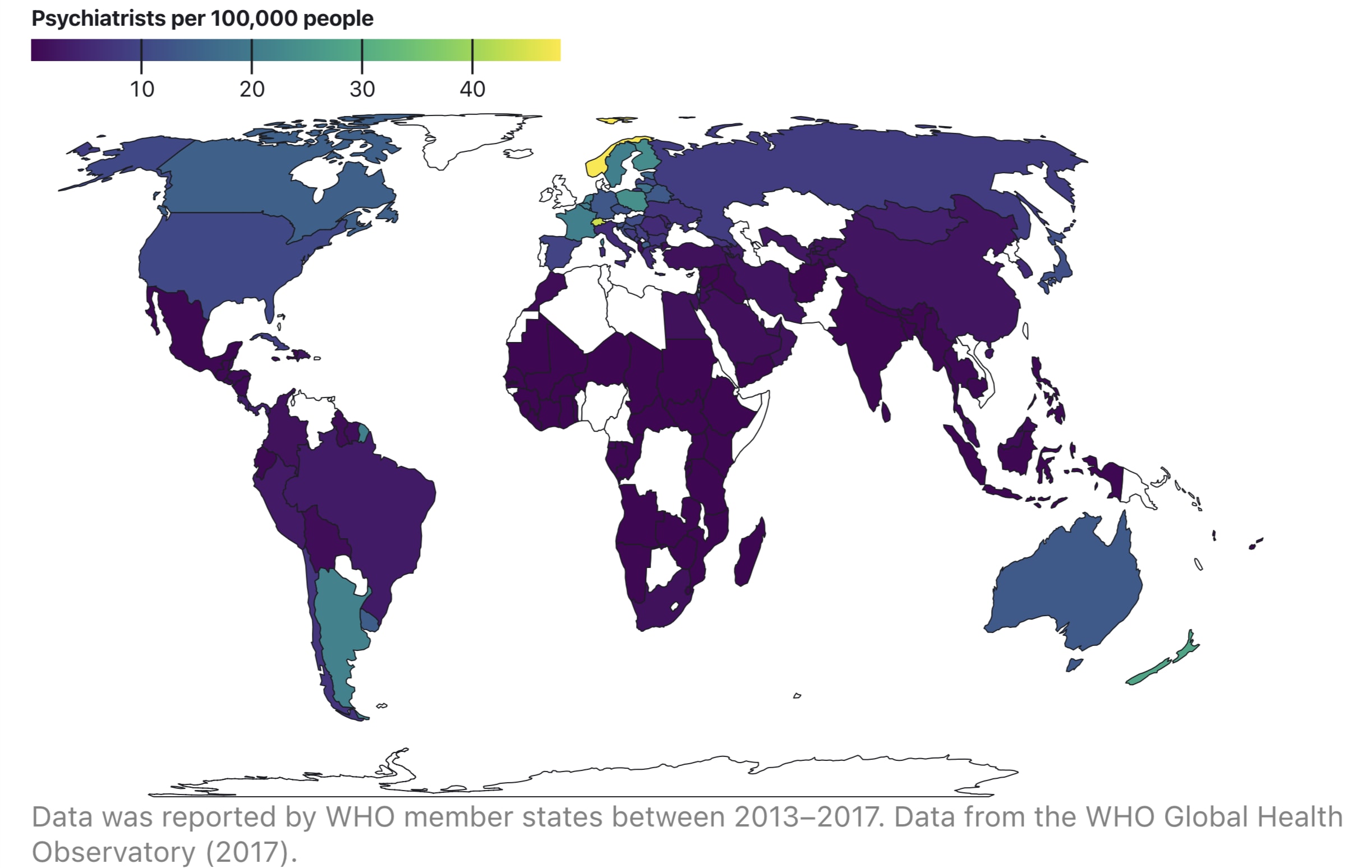

86% of active mental health cases in LMICs receive no treatment, compared to 63% in HICs[9]. The median number of mental health workers per 100,000 population in LMICs is 2, compared with 60 in HICs[10].

We should expect almost all recipients of a mental health charity, wherever it operates, to be otherwise untreated. Furthermore, most psychological trials are run in HIC contexts, where treatment-as-usual (TAU) means much more care than in LMICs. This means that we should focus on trials that compare the intervention to an inactive control, typically a waitlist. However, there is considerable debate about whether waitlist controls inflate effect sizes[11][12][13][14] (by approx. 25%[14:1]), so we should still remain mindful of TAU control results.

We should compare against studies which recruited depressed people

More severely depressed people have more room to improve, so we shouldn't be surprised that baseline severity moderates the effect sizes of internet-based psychotherapies[15][16][17][18][19]. We should be careful not to include studies of general populations, and should at least require that participants screen positive for depression, since a new effective intervention should expect to treat people presenting with a problem, or at least to spend donor money in a targeted way.

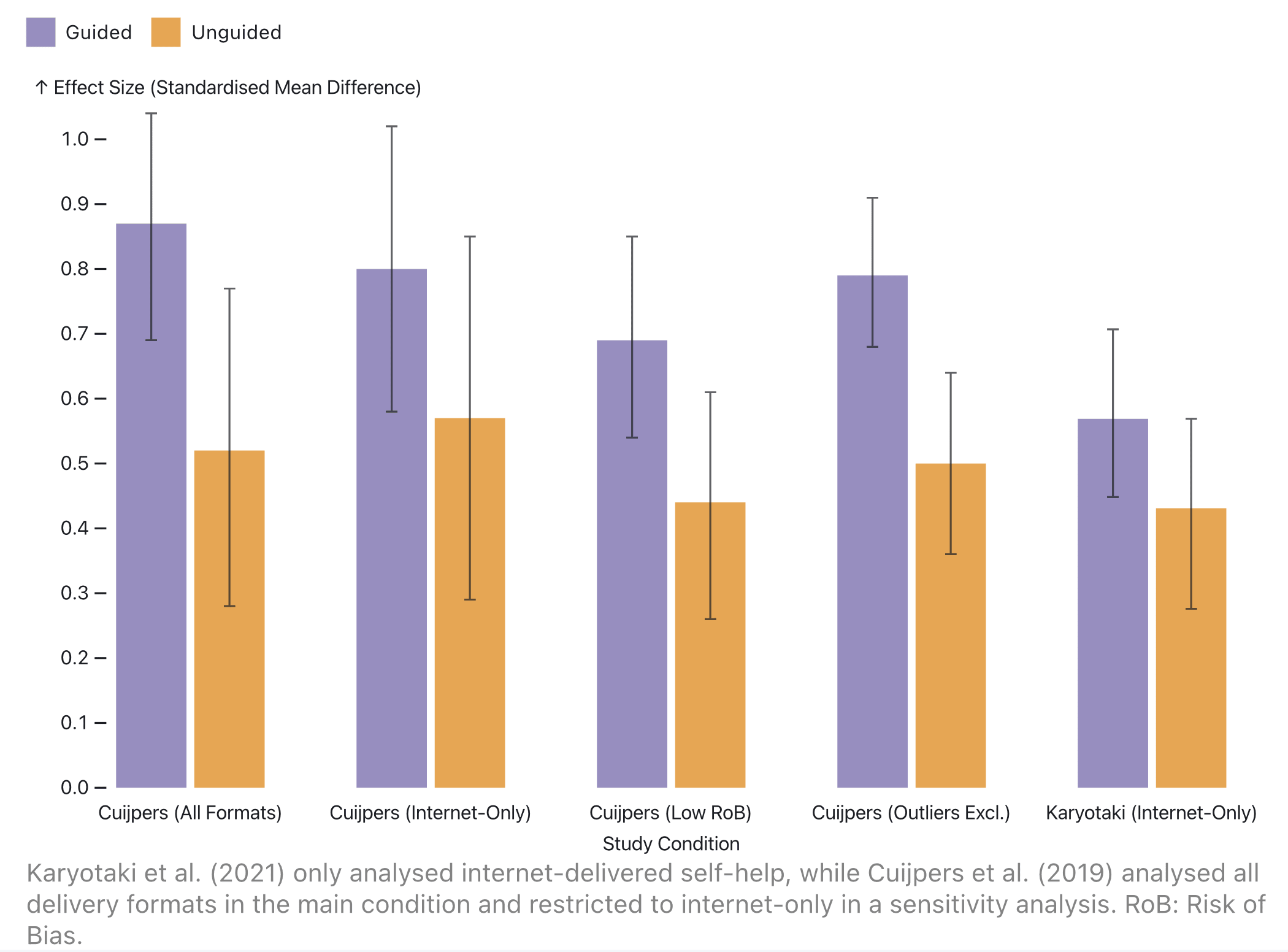

The best meta-analyses show ~70% effectiveness for these conditions

A very large network meta-analysis (155 studies, 15,191 participants) found unguided self-help has 60% of the effect size of guided (0.52 vs 0.87) for patients with depression against waitlist[20]. Restricting to just low risk of bias studies (71 studies) narrowed the gap, raising the ratio to 64%; restricting to just internet-delivered self-help (148 studies) raised it to 70% (0.57 vs 0.8), at which point the difference was no longer statistically significant. However, they could not find a significant advantage of unguided self-help against TAU in any condition.

Another very large study of individual data for depressed patients (39 studies, 9,751 participants; 8,107 aggregated) found unguided had 76% of the effect size of guided against waitlist (0.43 vs 0.57), and found a significant advantage of unguided against TAU[16:1]. They only found weak evidence of publication bias, and risk of bias in their included studies was low, so they didn't split out their analyses in the same way.
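The percentages above are simple ratios of pooled effect sizes (unguided ÷ guided). A quick check using the rounded values as printed gives about 60%, 71% and 75%; the small differences from the quoted 70% and 76% are presumably down to rounding of the underlying estimates.

```python
# Pooled effect sizes against waitlist, as quoted above: (unguided, guided).
comparisons = {
    "network meta-analysis, all studies": (0.52, 0.87),
    "network meta-analysis, internet-delivered only": (0.57, 0.80),
    "IPD network meta-analysis": (0.43, 0.57),
}

# Relative effectiveness = unguided effect size / guided effect size.
ratios = {label: unguided / guided for label, (unguided, guided) in comparisons.items()}

for label, ratio in ratios.items():
    print(f"{label}: unguided retains {ratio:.0%} of guided's effect")
```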

The effect shouldn’t decay differently

The decay characteristics of psychotherapy are not well understood[21], and few studies provide long-term follow-up at real-world intervals[22]. Assuming a null hypothesis of exponential decay, we'd expect the difference to disappear over time (at a shared decay rate, a larger effect loses more in absolute terms). Neither of the two very large meta-analyses above found evidence of a difference between guided and unguided self-help at ≥3 months follow-up[16:2][20:1], although neither had much data.
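To see why a shared exponential decay would erase the difference, consider the sketch below. It assumes both arms decay at the same rate, with an illustrative six-month half-life (a hypothetical value, not one from the literature), starting from the waitlist effect sizes quoted above. The absolute gap, which is what a follow-up comparison detects, shrinks over time even though the ratio between arms stays fixed.

```python
import math

# Hypothetical half-life: the real decay characteristics of psychotherapy are not well understood.
HALF_LIFE_MONTHS = 6.0
DECAY_RATE = math.log(2) / HALF_LIFE_MONTHS  # per month


def effect_at(initial_effect: float, months: float) -> float:
    """Effect size remaining after `months` under exponential decay."""
    return initial_effect * math.exp(-DECAY_RATE * months)


# Post-treatment effect sizes vs waitlist quoted above (guided, unguided).
GUIDED, UNGUIDED = 0.87, 0.52

for months in (0, 3, 6, 12):
    guided, unguided = effect_at(GUIDED, months), effect_at(UNGUIDED, months)
    print(
        f"{months:>2} months: guided {guided:.2f}, unguided {unguided:.2f}, "
        f"gap {guided - unguided:.2f}, ratio {unguided / guided:.2f}"
    )
```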

Beneficiaries use it no less than half as much

We suspect that there might be a difference between engagement in guided and unguided self-help. In trial contexts, we can measure the amount of content engaged with (adherence), and how many people drop out of treatment (acceptability/dropout/attrition), but extrapolating these to real-world interventions is hard because they don't have upper bounds on engagement or duration. We’ll reason that it’s valid to extrapolate the relative guided-unguided difference to real-world contexts, because the primary factors of that difference hold under both conditions & real-world unguided engagement isn’t terribly low.

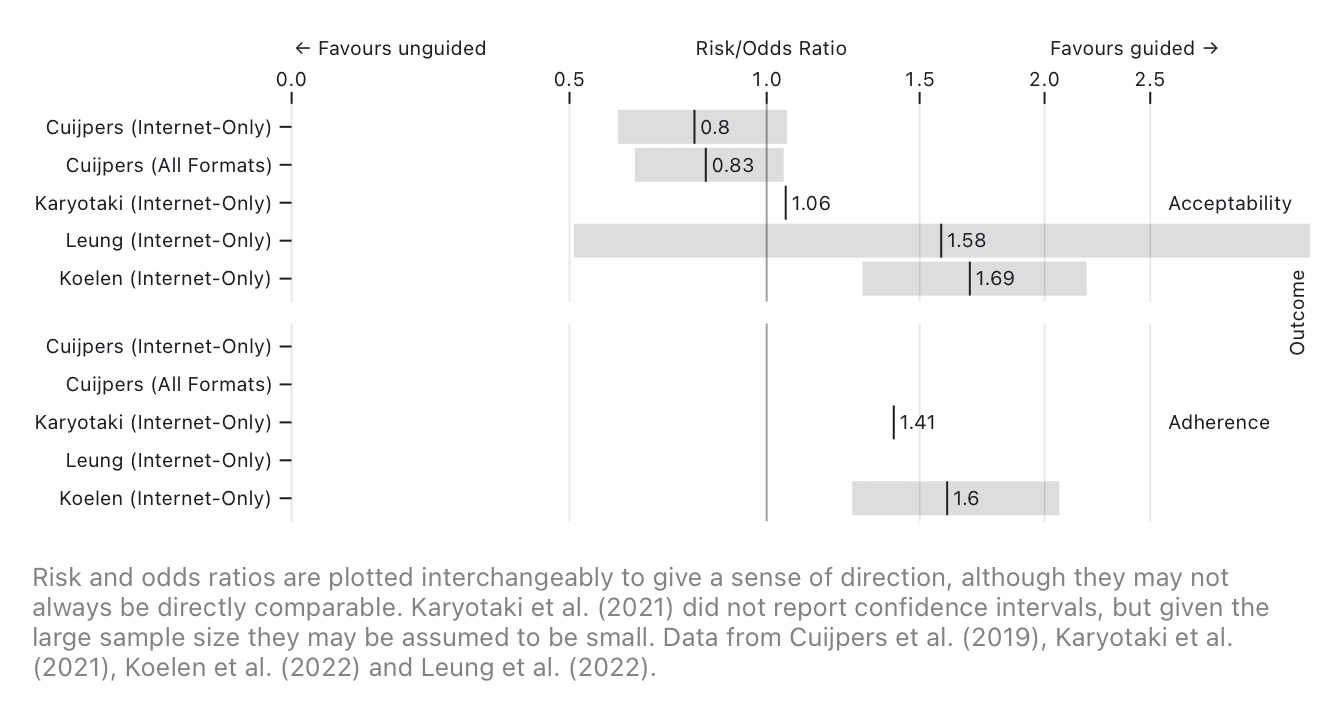

The engagement difference is no worse than half in trials

No meta-analysis found a relative risk or odds ratio greater than 2 for the improvement of guided self-help on adherence and acceptability[20:2][16:3][23][24], and one very large meta-analysis found that guided self-help decreased acceptability[20:3]. Beyond this, the studies disagreed about the strength of the association; heterogeneity even in direct comparisons was high (I² = 42% for adherence and 52% for acceptability[23:1], where the latter was statistically significant). We can only conclude that the engagement difference is probably no worse than half (and is likely small), and is driven more by adherence than acceptability.

The difference might not be due to expertise

There is no evidence that stronger qualifications improve effectiveness[24:1][23:2][25] or adherence[23:3]; lay mental health workers are highly effective[26][3:1][4:1], and even non-inferior to accredited psychotherapists in large trials[25:1]. Going further, a very large individual participant data (IPD) meta-analysis (76 studies, 17,521 participants; 11,704 aggregated) deconstructed factors relevant to adherence across guided and unguided internet-based self-help[27]. Neither initial face-to-face contact nor treatment-relevant guidance improved effectiveness or adherence; rather, where human guidance improved those outcomes, it was likely due to encouragement and did not depend on expertise.

The difference might really be due to encouragement

As mentioned, the above very large IPD meta-analysis found stronger evidence for both human and automated encouragement on adherence, although neither was quite statistically significant[27:1]. Automated encouragement also improves acceptability[28] and effectiveness[29] (although the latter study only reached significance for anxiety symptoms, not depression). Additionally, making human guidance on-demand reduces adherence and acceptability[23:4]. Finally, in real-world settings, user encouragement features such as calls to action, adaptivity, and feedback improve 30-day retention[30], unique sessions[31], and usage time[30:1][31:1].

Real-world unguided engagement isn't drastically low

Acceptability rates for mental health phone app RCTs average around 74%[18:1], while real-world 30th-day retention is 3.3% (median; IQR 6.2%; this should heavily underestimate trial acceptability because it only measures usage on a single day, while trials collect follow-up over many weeks)[32]. In the worst case, when directly comparing trial data of publicly available apps to real-world usage, adherence is only 4 times lower[33].

Since real-world conditions are similar, we can extrapolate from trial contexts

In trial contexts, we see a solid gap between the engagement of unguided and guided self-help. Although precise numbers are hard to narrow down, it's not worse than a 50% reduction. We know that the gap is more likely to be driven by encouragement than expertise, which, if anything, is likely to improve for real-world interventions with more development work, or at least isn't likely to get worse. When we look at real-world engagement, we don't have comparative data for guided and unguided self-help, but we know that unguided self-help's engagement doesn't fall by an order of magnitude relative to trials. Therefore, I think a conservative read of the evidence puts the worst case at a 50% reduction in engagement, but with potential for a smaller gap.

It's at least 10–15× cheaper, and might scale sub-linearly

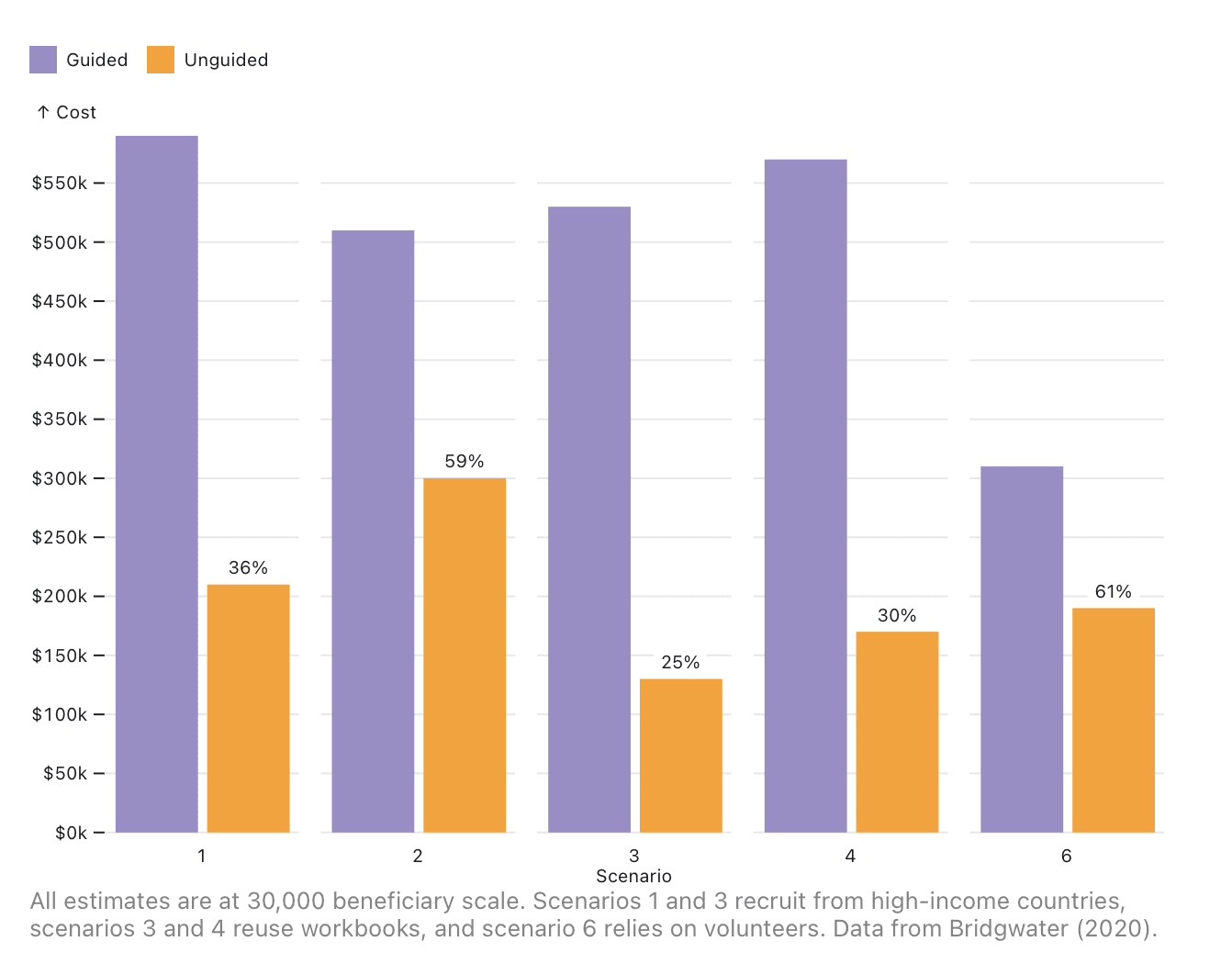

In Charity Entrepreneurship's CEAs, removing guidance reduces costs by ~93% at scale

Charity Entrepreneurship modelled 6 cost-effectiveness analyses (CEAs) assessing different forms of self-help, including one for unguided self-help, which outperformed the others in cost-effectiveness[7:1].

When we remove guidance costs without altering the cost structure or scale, we lower costs by up to 75%.

| Scenario | Cost with guidance | Cost without guidance | Proportion |
|---|---|---|---|
| 1 | $590,000 | $210,000 | 36% |
| 2 | $510,000 | $300,000 | 59% |
| 3 | $530,000 | $130,000 | 25% |
| 4 | $570,000 | $170,000 | 30% |
| 6 | $310,000 | $190,000 | 61% |
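The Proportion column is the cost without guidance divided by the cost with guidance. The sketch below recomputes it from the table and picks out the scenario with the largest saving, which matches the "up to 75%" figure above.

```python
# Charity Entrepreneurship scenarios from the table above, in USD:
# scenario -> (cost with guidance, cost without guidance).
scenarios = {
    1: (590_000, 210_000),
    2: (510_000, 300_000),
    3: (530_000, 130_000),
    4: (570_000, 170_000),
    6: (310_000, 190_000),
}

# Proportion = cost without guidance / cost with guidance.
proportions = {s: without / with_guidance for s, (with_guidance, without) in scenarios.items()}

for scenario, proportion in proportions.items():
    print(f"Scenario {scenario}: {proportion:.0%} of the guided cost")

# The largest saving comes from the scenario with the smallest proportion.
cheapest = min(proportions, key=proportions.get)
print(f"Largest reduction: Scenario {cheapest}, {1 - proportions[cheapest]:.0%}")
```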

We must acknowledge that Scenario 6 uses volunteer labour, so treats staff time as free, but real-world Step-By-Step implementers believe this is unlikely to scale[5:1]. Furthermore, Scenarios 1 and 3 recruit guides from high-income countries, which would inflate costs. Scenarios 3 and 4 use reused workbooks, which accounts for their reduced costs. Scenario 4 is the most representative of those provided.

However, in practice, the cost structure of a Step-By-Step-style programme would be very different, since it doesn't distribute workbooks. We can modify the scenarios as follows:

- Reduce workbook, delivery, and travel costs to $0

- Add 1 additional staff member per 250k beneficiaries for app development

  - The approximate number of downloads per employee across top self-guided mental health apps is 85–650k (central estimate 250k; Appendix 1). A self-guided mental health app developer reported reaching 8,000,000 users with 2 developers[34].

- Reduce recruitment costs to $0.02–0.10 per install

  - This was reported as $0.02–0.10 by a self-guided mental health app developer as the cost per install for low-income countries[34:1].

  - Multiply recruitment costs by 2 in the unguided case to account for lower engagement rates.

  - Divide recruitment costs by 0.10–0.15 to get the cost per first starter (~12.34% of Kaya Guides installers actually started the programme[8:1])

- Break out staff time per participant

  - Each participant has 1 to 10 calls

    - We set the minimum to 1 since the 12.34% of installers above all made it to a call

    - We set the maximum to 10 since a typical programme has about 8 weeks of calls

  - Staff spend 15 to 40 minutes on each call

    - Some programmes do 15-minute calls[8:2], others do half-hour calls

    - There's some leeway time per call for notes, chat review, etc.

- Split office space costs to account for different numbers of staff in each condition.
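The recruitment and staff-time adjustments above combine by simple interval arithmetic. The sketch below uses only the listed bounds (not the Guesstimate model's Monte Carlo sampling in Appendix 2), reads the "multiply by 2" step as applying to unguided recruitment costs, and uses hypothetical helper names.

```python
from typing import Tuple

Interval = Tuple[float, float]  # (low, high)

# Bounds taken from the list above.
COST_PER_INSTALL: Interval = (0.02, 0.10)  # USD per install, low-income countries
START_RATE: Interval = (0.10, 0.15)  # share of installers who start the programme
UNGUIDED_RECRUITMENT_MULTIPLIER = 2  # doubles recruitment costs for lower engagement
CALLS_PER_PARTICIPANT: Interval = (1, 10)
MINUTES_PER_CALL: Interval = (15, 40)  # includes leeway for notes, chat review, etc.


def recruitment_cost_per_starter(unguided: bool) -> Interval:
    """Cost per first starter = cost per install / start rate (x2 in the unguided case)."""
    multiplier = UNGUIDED_RECRUITMENT_MULTIPLIER if unguided else 1
    # Cheapest case pairs the cheapest install with the highest start rate; dearest is the reverse.
    low = COST_PER_INSTALL[0] / START_RATE[1] * multiplier
    high = COST_PER_INSTALL[1] / START_RATE[0] * multiplier
    return low, high


def guide_minutes_per_participant() -> Interval:
    """Staff minutes per guided participant = calls x minutes per call."""
    return (
        CALLS_PER_PARTICIPANT[0] * MINUTES_PER_CALL[0],
        CALLS_PER_PARTICIPANT[1] * MINUTES_PER_CALL[1],
    )


for label, unguided in (("guided", False), ("unguided", True)):
    low, high = recruitment_cost_per_starter(unguided)
    print(f"{label} recruitment cost per starter: ${low:.2f} to ${high:.2f}")

low_minutes, high_minutes = guide_minutes_per_participant()
print(f"guide time per participant: {low_minutes} to {high_minutes} minutes")
```

Under these bounds, recruitment runs to roughly $0.13–1.00 per guided starter and $0.27–2.00 per unguided starter, while guides spend 15–400 minutes per participant.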

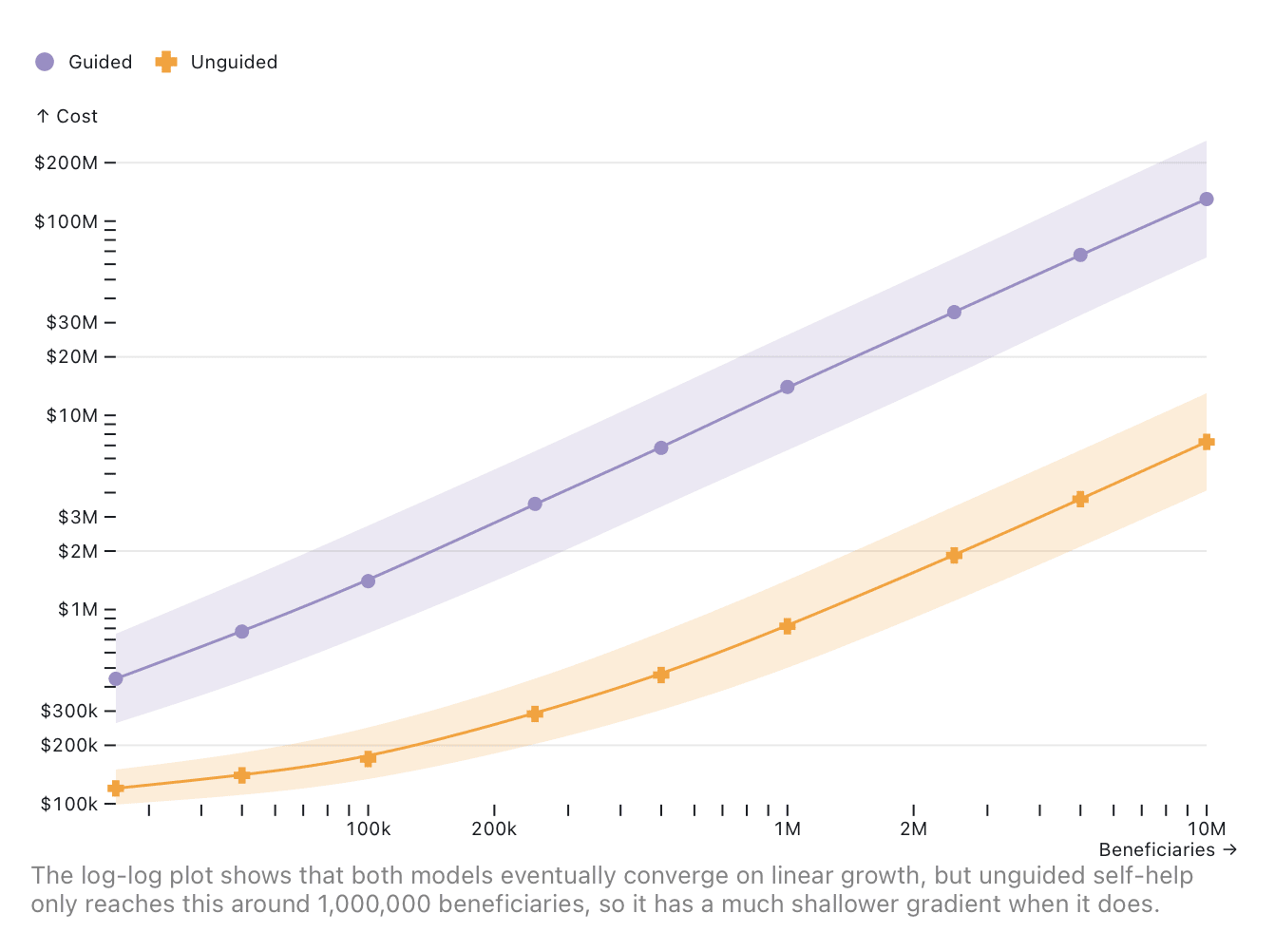

The modified Guesstimate model is in Appendix 2. In it, unguided self-help scales extremely well.

| Beneficiaries | Cost with guidance | Cost without guidance | Proportion |
|---|---|---|---|
| 25,000 | $450,000 | $120,000 | 35% |
| 50,000 | $810,000 | $130,000 | 25% |
| 100,000 | $1,500,000 | $160,000 | 17% |
| 500,000 | $7,100,000 | $400,000 | 9.4% |
| 1,000,000 | $14,000,000 | $710,000 | 8.2% |
| 5,000,000 | $70,000,000 | $3,100,000 | 7.2% |
| 10,000,000 | $140,000,000 | $6,200,000 | 7.1% |

The following plots include the original numbers before I updated the model, but are close enough to the new estimates that it's not worth changing them.

Under this cost structure, the proportion approaches a limiting value of around 7% (credible interval 1.5–22%) at modest scale—a reduction of 93%. At this scale, the costs begin to linearise and beneficiary recruitment becomes the main cost. Even if we assume a fully volunteer-based model is possible, managerial staff & administration dominate guided self-help, leaving the limiting proportion at 14%.
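A simple way to see why a limiting value appears, assuming costs become roughly linear at scale: write each condition's total cost for $N$ beneficiaries as a fixed component $F$ (development, management, administration) plus a marginal cost $m$ per beneficiary, with subscripts $u$ and $g$ for unguided and guided. These symbols are illustrative, not variables from the Guesstimate model.

$$
p(N) = \frac{F_u + m_u N}{F_g + m_g N} \;\longrightarrow\; \frac{m_u}{m_g} \quad \text{as } N \to \infty
$$

Fixed costs wash out as $N$ grows, so the limiting proportion is set by the ratio of per-beneficiary marginal costs, which is where recruitment dominates.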

The proportion is sensitive to the number of staff hours per participant. Reducing the minimum time to 15 minutes (a single Kaya Guides session, excluding buffer time to prepare & review) increases the limiting proportion to 8.5%. However, increasing the maximum to 780 minutes (a 15-minute session per week for a year, excluding buffer time) reduces it to 4.8%. The catch is that decreasing staff hours per participant is likely to reduce effectiveness proportionally; followed to its conclusion, this reasoning suggests that the most cost-effective intervention eliminates guidance entirely.

Using Kaya Guides' estimates, removing guidance reduces costs by ~90% at scale

At 15,000-person scale, Kaya Guides estimate a total cost of $9.32 per beneficiary, and a marginal cost of $3.93 for new beneficiaries[8:3]. The modified Charity Entrepreneurship model is close to agreement, predicting a total cost of $13–35 per beneficiary and a marginal cost of $3.40–15 per beneficiary. If we divide the marginal cost of treating a guided participant by 2, to agree with Kaya Guides, it predicts a cost reduction of 44% for unguided self-help.

However, this occurs at Kaya Guides' predicted 3-year scale. At their predicted 5-year scale of 100,000 beneficiaries, the reduction increases to 78%, and it approaches around 90% in the limit.

An effective intervention should be able to scale development costs sub-linearly

It's not clear how to account for development costs. Although major software companies tend to scale their headcount linearly with the number of users, an unguided self-help intervention only needs to be developed once and delivered to multiple contexts, only incurring adaptation costs for new target populations (as Step-By-Step did[35][6:1][36]). Once an intervention reliably produces an effect size, additional development isn't necessary and a smaller group of engineers could handle maintenance and support, which tends to scale sub-linearly as bugs are fixed without new ones being introduced.

Marginal costs might scale to zero due to organic recruitment

As the intervention proliferates, it will appear higher in searches and app store rankings, and spread through media and word of mouth. As such, at higher scale the cost of recruiting new beneficiaries should decrease, and these beneficiaries should be less likely to drop out. A self-guided mental health app developer reported an organic install rate of roughly two-thirds, and speculated that popular apps would reach close to 100%[37]. Practically, the marginal cost of recruitment may even approach zero.
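One way to see the effect is to spread recruitment spend over both paid and organic installs. The sketch assumes organic installs cost nothing and the paid cost per install stays fixed (both simplifications); it uses the $0.10 upper bound on cost per install and the roughly two-thirds organic rate reported above, and the function name is hypothetical.

```python
def effective_cost_per_install(paid_cost_per_install: float, organic_share: float) -> float:
    """Recruitment spend divided by all installs, assuming organic installs are free."""
    if not 0.0 <= organic_share <= 1.0:
        raise ValueError("organic_share must be between 0 and 1")
    # Only the paid fraction of installs incurs the per-install cost.
    return paid_cost_per_install * (1.0 - organic_share)


for share in (0.0, 2 / 3, 0.9, 0.99):
    cost = effective_cost_per_install(0.10, share)
    print(f"{share:.0%} organic: ${cost:.4f} per install")
```

As the organic share approaches 100%, the effective recruitment cost per install approaches zero.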

Behavioural activation is a great fit for unguided self-help

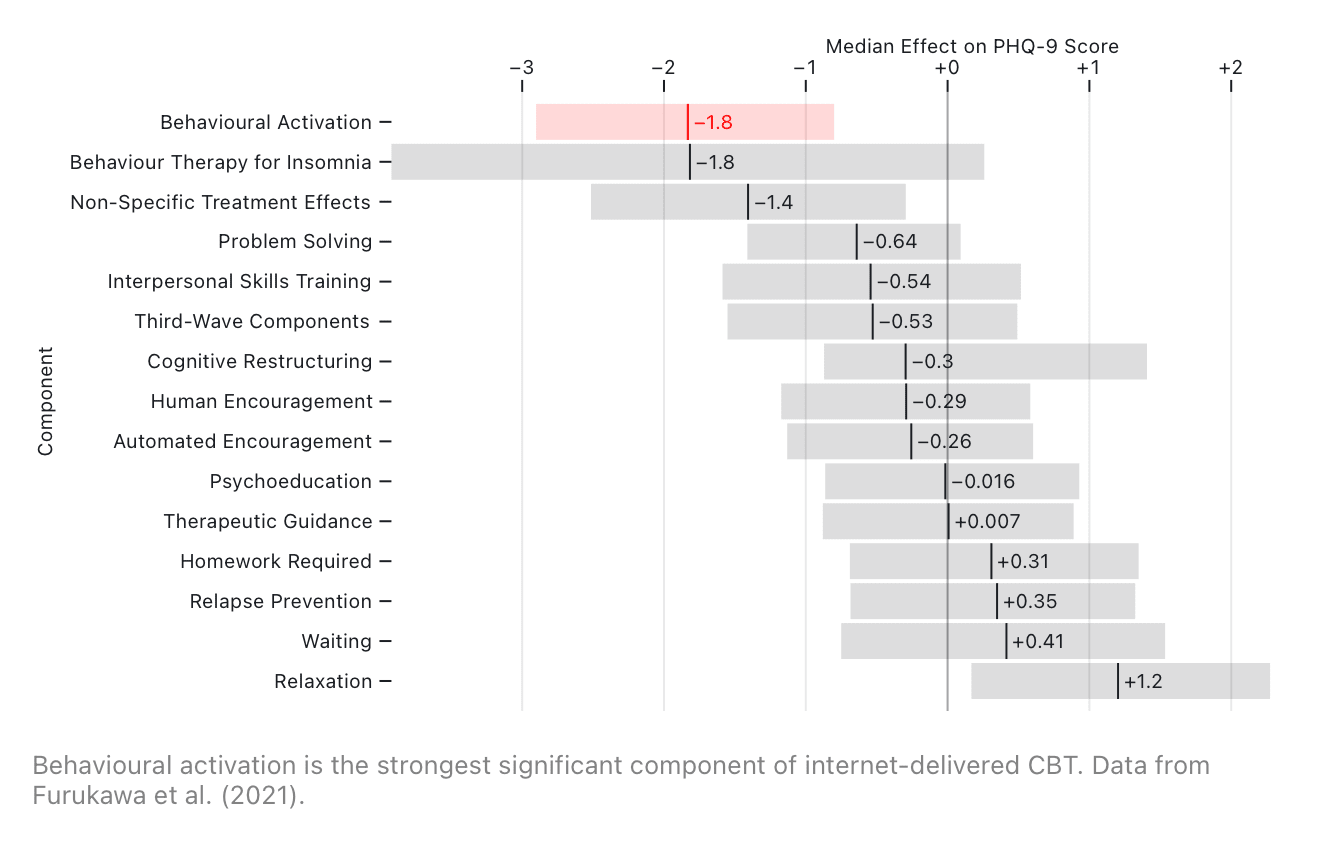

Cognitive behavioural therapy is made up of many components, and although the overall programme is highly effective[38][22:1], component analyses have struggled to determine what drives it[39][40][41][27:2]. We can use this to our advantage by identifying components that are likely to be non-inferior, but better suited to unguided self-help.

One such component is behavioural activation therapy (BAT; itself a subset of CBT). It is extremely simple, as it involves tracking one's mood, identifying pleasant activities and creating structured plans to do them[42][43][44][41:1][45]. Although developed by Lewinsohn in 1975[44:1], BAT fell out of favour during the cognitive revolution before being revived in 1996 by a study demonstrating its non-inferiority to pure cognitive therapy and cognitive behavioural therapy[41:2].
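For readers thinking about this as an app, the three steps map naturally onto a small data model. The sketch below is purely illustrative and hypothetical (it is not how Moodivate or any cited intervention is built, and not a clinical protocol): it logs activities with mood ratings, ranks activities by the average mood recorded alongside them, and schedules the top-ranked ones.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import date, timedelta
from statistics import mean
from typing import Dict, List, Tuple


@dataclass
class ActivityLog:
    """Hypothetical behavioural-activation log: mood ratings (e.g. 1-10) recorded per activity."""

    entries: List[Tuple[date, str, int]] = field(default_factory=list)

    def record(self, day: date, activity: str, mood: int) -> None:
        """Step 1: track mood alongside what the user was doing."""
        self.entries.append((day, activity, mood))

    def pleasant_activities(self, top_n: int = 3) -> List[str]:
        """Step 2: identify activities associated with the highest average mood."""
        moods: Dict[str, List[int]] = defaultdict(list)
        for _, activity, mood in self.entries:
            moods[activity].append(mood)
        return sorted(moods, key=lambda a: mean(moods[a]), reverse=True)[:top_n]

    def plan(self, start: date, days: int = 7) -> List[Tuple[date, str]]:
        """Step 3: build a structured plan, cycling through the pleasant activities day by day."""
        activities = self.pleasant_activities()
        if not activities:
            return []
        return [(start + timedelta(days=i), activities[i % len(activities)]) for i in range(days)]


log = ActivityLog()
log.record(date(2024, 4, 1), "walk", 7)
log.record(date(2024, 4, 1), "chores", 3)
log.record(date(2024, 4, 2), "call a friend", 8)
log.record(date(2024, 4, 2), "walk", 6)
print(log.pleasant_activities())
print(log.plan(date(2024, 4, 8), days=3))
```

A real intervention would need far more care (validated mood scales, gradual task difficulty, encouragement features), but the core loop is this simple.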

In this section, I'll argue that behavioural activation:

- Is as effective as CBT and other evidence-based therapies

- Is the strongest significant component of internet-based CBT

- Might be easier to self-learn since it's simpler

- Might be less stigmatising since it's less medicalised

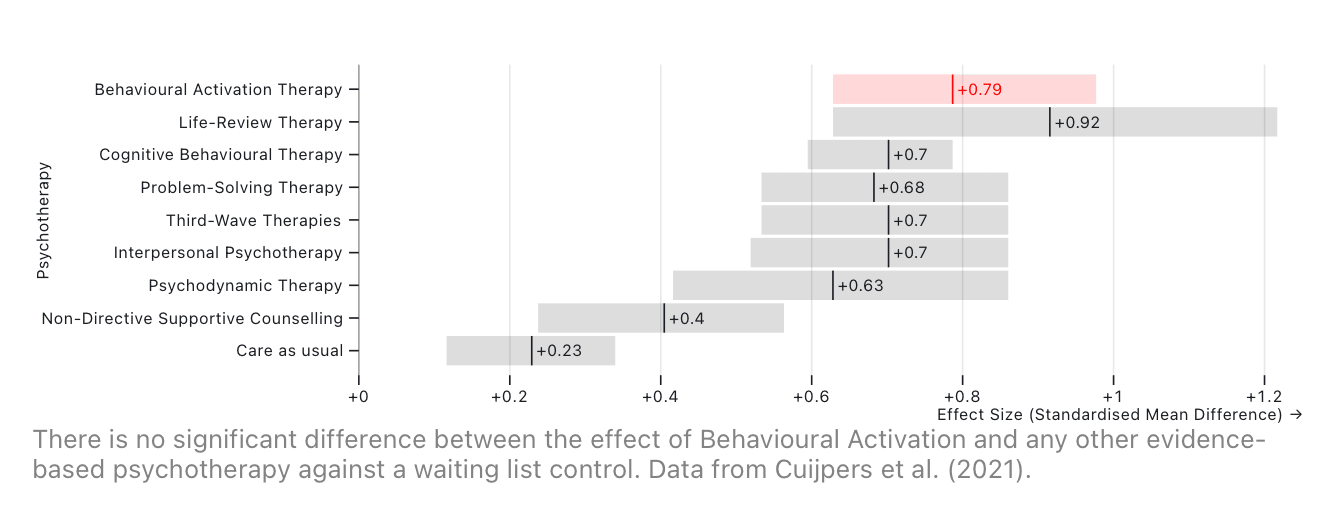

It's as effective as CBT and other evidence-based therapies

In the most comprehensive meta-analyses, BAT is highly effective against active and inactive controls (331 studies, 34,285 participants[38:1]; 26 studies, 1,524 participants[46]; 16 studies, 780 participants[45:1]) and non-directive counselling[38:2], non-inferior against all other evidence-based psychotherapies in effectiveness, acceptability, and remission rates[45:2][38:3], and specifically non-inferior against pure cognitive therapy and cognitive behavioural therapy[45:3] (45 studies, 3,382 participants[47]).

It's the strongest significant component of internet-based CBT

A large meta-analysis of component studies found that removing behavioural activation from CBT significantly reduced effect sizes, although it only had 3 direct comparisons[39:1]. Another large network meta-analysis (76 studies, 6,973 participants) found a moderate but non-significant effect of behavioural activation on depression, but it only had a few arms without it, and only measured face-to-face CBT[40:1].

However, a very large IPD meta-analysis (76 studies, 17,521 participants; 11,704 aggregated) found a significant positive effect of behavioural activation using 84 out of 179 arms (effect size range 0.13–0.48), making it the strongest analysed component[27:3]. As well as having more contrasting arms, because it analysed internet-based CBT it didn't risk face-to-face therapists incorporating elements from other therapies ad hoc, so it was more statistically powerful. The authors note that this restriction hampers extrapolation, but for unguided self-help it's clearly superior to other therapies.

It might be easier to self-learn since it's simpler

As discussed, there isn't strong evidence that qualifications improve effect sizes[24:2][23:5][25:2]. Further, a study directly compared CBT administered by a licensed psychotherapist with BAT administered by lay mental health workers, and found no difference between treatment arms (440 participants)[25:3]. The very large IPD meta-analysis above concluded that behavioural activation is more effective in self-help contexts specifically because it's easier to self-learn[27:4], and this concurs with intuitions about its simplicity.

It might be less stigmatising since it's less medicalised

Mental health stigma is higher in low-income countries[48][49], which drives self-stigma[50][51][52] and reduces mental health care utilisation[51:1][53][54]. High assessments of public stigma are associated with higher dropout in guided and unguided self-help, although not with adherence[55].

Unguided self-help has been shown to be an acceptable intervention for people otherwise unwilling to engage with face-to-face treatment[56]. When Step-By-Step was trialled in Lebanon, nearly half of the participants didn't tell anyone they knew for fear of stigmatisation[5:2], or even partner violence[6:2] (if anything, however, this indicates that it was an acceptable & private intervention for many). One Syrian refugee noted:

When you hear the word psychiatrist, it is associated with craziness or something.[6:3]

We can reason that behavioural activation may be less stigmatising still, over and above unguided self-help in general. Unlike many other therapies, it can be delivered without psychoeducation, or even without requiring the recipient to admit they have a mental health problem. The intervention can be designed to minimise medicalised terms, as Step-By-Step did in Lebanon[6:4]; Step-By-Step participants in Germany and Sweden also flagged this as an area needing further improvement[57]. In lower-income countries, users mentioned sharing phones and wanting privacy[6:5][36:1][58]; the plausible deniability of a less medicalised app may help with this. However, placebo effects may be a large part of psychotherapy's effectiveness[27:5][38:4], so demedicalisation may not be worth the trade-off.

It’s less risky, mostly because it scales better

For the sake of convenience, this section will mirror the Charity Entrepreneurship report's risk factors: idea strength, limiting factors, execution difficulty, and externalities. I’ll argue:

- There’s less evidence overall, but not much

- It scales superbly, so it’s highly funding-absorbent

- It can fail faster and cheaper

- Externalities are small, but displacement is concerning

Overall, the value of higher scale—both directly, and as a de-risker—should outweigh the small differences in the other factors, which would make unguided self-help a better intervention.

There's less evidence overall, but not much

Although the literature generally produces statistically significant results for unguided self-help, face-to-face therapy will always have stronger support. Putting aside all of the evidence, it's hard to shake the feeling that this shouldn't work as well as it does, that perhaps unguided self-help completely collapses outside a trial context. This is not an insignificant risk, and I don't wish to downplay it.

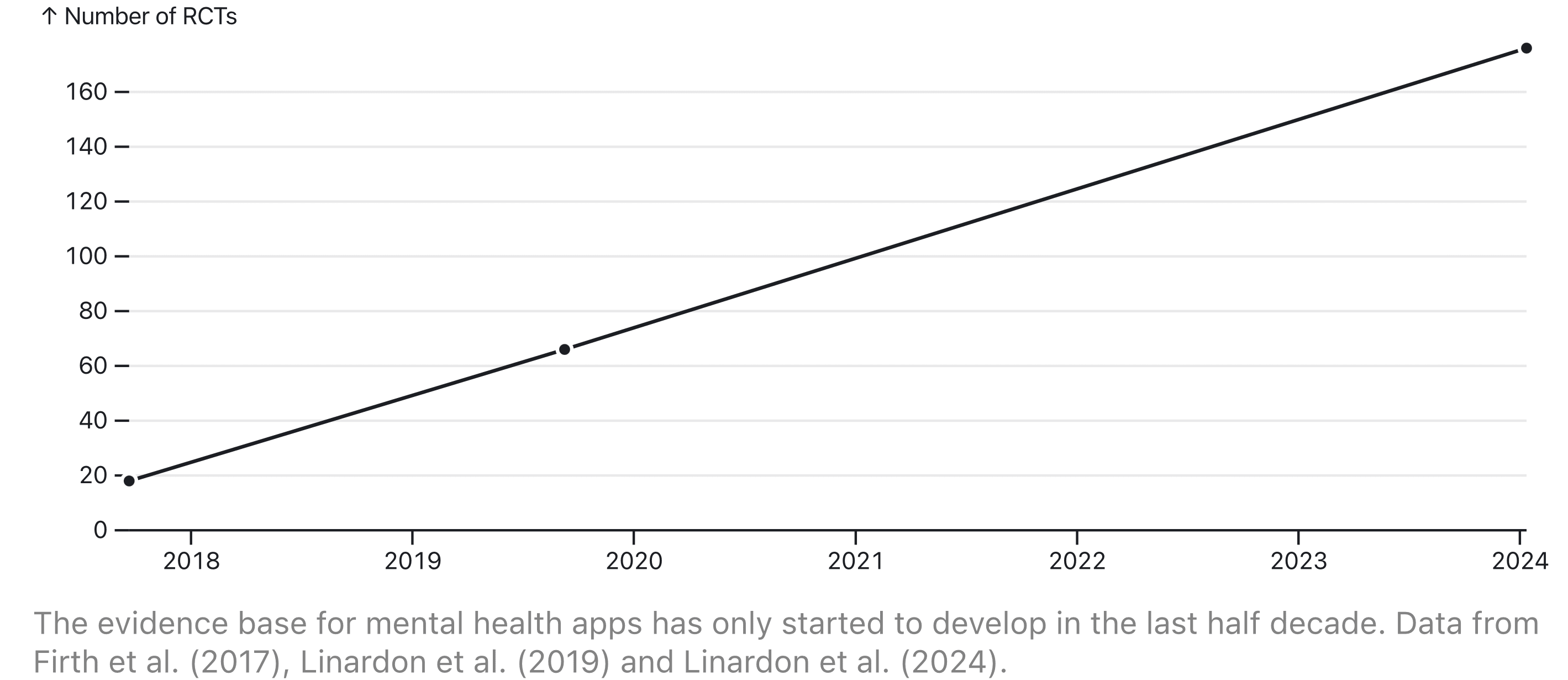

Most of the evidence has emerged since CE recommended guided self-help

Most of the evidence base for unguided self-help and behavioural activation is very recent, and has emerged since CE’s report recommended guided self-help[59]. The largest meta-analysis of mental health apps was repeated with the same criteria in 2017, 2019, and 2024, finding 18, 66, and 176 RCTs respectively[60][29:1][18:2]. As such, the most downloaded evidence-based mental health apps are almost exclusively based around mindfulness meditation, with a handful of mood trackers, CBT psychoeducation, and relaxation apps[32:1], because the evidence for better-suited techniques & components didn’t exist. It should be possible to surpass these in effectiveness by incorporating new evidence.

There’s now an effective BAT app intervention that just needs to be scaled

Many of the most promising interventions focus on scaling something that has been directly successful in clinical trials. Kaya Guides, for example, is just adapting & operationalising Step-By-Step[8:4], which has already proven to be successful[3:2][4:2]. A big de-risker is that such a treatment already exists; the Moodivate app is an unguided behavioural activation intervention and is effective[61][62][63], adhered to[61:1][63:1], and culturally adaptable without losing these properties[63:2]. All of the discussed individually effective components appear to come together in practice, although the evidence base is still much more limited than for equivalent guided- and CBT-based interventions.

It scales superbly, so it’s highly funding-absorbent

Software has scaled to envelop the world faster than any innovation before it. New apps can reach hundreds of millions of users within days of launching[64]. This is because software, by and large, has near-zero marginal cost to reach new users (although businesses may still spend on customer acquisition). Software companies are targets for huge venture capital investments precisely because their upside is nearly uncapped.

Guided self-help, however, has a strong limiting factor in guidance. Guides not only have to be recruited and trained, but need ever-deepening layers of management. All of this is likely to heavily lower funding absorbency, since hiring & scaling in anticipation of future growth isn't cost-effective, but doing it reactively is slow. To illustrate, Kaya Guides estimate a decreasing growth rate over time, but even in a best-case scenario where they continue to double annually after year 5[8:5], it will take 15 years to reach 100 million people. An unguided intervention, on the other hand, could theoretically get there 1,000 times faster, because its only practical limiting factor is ad spend for recruitment.
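The 15-year figure follows from compounding: starting from Kaya Guides' predicted 100,000 beneficiaries at year 5 and doubling every year thereafter (the best-case assumption above), it takes ten more doublings to pass 100 million. A minimal sketch, with a hypothetical helper name:

```python
def years_to_reach(start_year: int, start_beneficiaries: float, target: float, annual_growth: float = 2.0) -> int:
    """First year in which beneficiaries reach `target`, multiplying by `annual_growth` each year."""
    if annual_growth <= 1 or start_beneficiaries <= 0:
        raise ValueError("need annual_growth > 1 and start_beneficiaries > 0 to reach the target")
    year, beneficiaries = start_year, start_beneficiaries
    while beneficiaries < target:
        beneficiaries *= annual_growth
        year += 1
    return year


# Kaya Guides' predicted 5-year scale, doubling annually thereafter (best case).
print(years_to_reach(start_year=5, start_beneficiaries=100_000, target=100_000_000))
```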

Not only should cost improve at scale, but effect size & engagement should too. Advanced software apps are able to use analytics across huge audiences to automate experimentation against both of these metrics. One expert app developer believed the best apps may be 1–2 orders of magnitude more effective[34:2], concurring with wide between-app variance in trials[18:3], and outlier engagement numbers for the largest mental health apps[65].

It can fail faster & cheaper

The corollary of faster scale and lower costs is that it can fail faster, and more cheaply. The structure of an unguided behavioural activation app is particularly advantageous, as beneficiaries are recruited via ads and log their mood daily. The impact of changes on effect size & engagement can be measured within days, and a preliminary trial with those outcomes could be produced faster, and at larger scale, than the years typical new charities take. As such, the major path to failure is that a Moodivate-style app fails to reproduce a strong effect size with a real-world cohort (rather than running into logistical or policy issues).

Since it’s essentially free to expand an app to high-income countries, an intervention that fails to achieve cost-effectiveness quickly could fund itself indefinitely from users in those countries. Even if it shuts down, it could contribute private aggregates of the data to the scientific community[66], in what, at even modest scales, would be the largest-ever study of global mental health & wellbeing.

Externalities are low, but displacement is concerning

Unguided self-help is less likely to be harmful than control conditions. Both guided[67] and unguided[68] self-help have been shown to reduce deterioration rates against inactive controls, although the evidence for the effect of unguided against TAU was not statistically significant[68:1]. Neither study found a subgroup or moderator for whom self-help would be worse than inactive controls; it does seem to be better than nothing.

The bigger risk is that unguided self-help displaces more effective treatments, both for individual recipients, and for funding. The corollary of unguided self-help having lower stigma is that some recipients may prefer it over face-to-face treatment, even when the latter would be better & more cost-effective for them.

If an unguided intervention demonstrated a higher cost-effectiveness, it might draw funding away from more effective charities such as Kaya Guides, and from the hard governmental work of building out mental health infrastructure. This is probably bad. Hopefully in an Effective Altruist context, pushing the state of the art on cost-effectiveness would draw enough new funding into the space to offset the displacement impact.

Conclusion

New technology may be able to close the effectiveness and engagement gaps further. One meta-analysis found weak evidence that rudimentary chatbots improve the effectiveness of mental health apps[18:4]. Even where there are obvious legal concerns, modern AI techniques still have the potential to dramatically improve personalisation without being embedded in a chat UI, or to culturally & linguistically adapt interventions to new contexts. Being ready for cloud and on-device costs to come down over the next half-decade should be a key consideration of any unguided intervention.

I'm less sure about what form such an intervention would take. It might make equal sense as a non-profit, or as a for-profit that donates a large proportion of its profits to subsidising itself in LMICs. The latter is particularly tempting, because it could be a target for venture capital, which brings money and decades of expertise in scaling software products—but this would equally bring the risk of trying to juice profits from countries that need such an intervention to be free.

To finish up, I'll spare the pretence: I think it's really unintuitive that you can do therapy without a therapist. What keeps me confident is that everything I've discussed is relative to guided self-help (an already established intervention) and the credible intervals for unguided self-help are well above guided's. If you believe guided self-help is likely to be very effective, then I hope it's not a big step to believe the same about unguided, and moreover, that there's barely any cost to finding out.

[1] Cohen, Alex (2023) Assessment of Happier Lives Institute’s Cost-Effectiveness Analysis of StrongMinds, GiveWell.

[2] Pinsent, Stan (2024) Mental illness is growing as a proportion of the global disease burden, Effective Altruism Forum, January 18.

[3] Cuijpers, Pim et al. (2022) Effects of a WHO-guided digital health intervention for depression in Syrian refugees in Lebanon: A randomized controlled trial, PLOS Medicine, vol. 19, p. e1004025.

[4] Cuijpers, Pim et al. (2022) Guided digital health intervention for depression in Lebanon: randomised trial, Evidence Based Mental Health, vol. 25, pp. e34–e40.

[5] Abi Ramia, Jinane et al. (2024) Feasibility and uptake of a digital mental health intervention for depression among Lebanese and Syrian displaced people in Lebanon: a qualitative study, Frontiers in Public Health, vol. 11, p. 1293187.

[6] Abi Ramia, J. et al. (2018) Community cognitive interviewing to inform local adaptations of an e-mental health intervention in Lebanon, Global Mental Health, vol. 5, p. e39.

[7] Bridgwater, George (2020) CE Research Report: Mental Health — Self-Help, Charity Entrepreneurship. Accessed via 2020 Top Charity Ideas.

[8] Abbott, Rachel (2023) Kaya Guides- Marginal Funding for Tech-Enabled Mental Health in LMICs, Effective Altruism Forum, November 26.

[9] Evans-Lacko, S. et al. (2018) Socio-economic variations in the mental health treatment gap for people with anxiety, mood, and substance use disorders: results from the WHO World Mental Health (WMH) surveys, Psychological Medicine, vol. 48, pp. 1560–1571.

[10] World Health Organization (2021) Mental Health Atlas 2020, Geneva, Switzerland.

[11] Cristea, Ioana A. (2019) The waiting list is an inadequate benchmark for estimating the effectiveness of psychotherapy for depression, Epidemiology and Psychiatric Sciences, vol. 28, pp. 278–279.

[12] Cuijpers, Pim et al. (2019) Is psychotherapy effective? Pretending everything is fine will not help the field forward, Epidemiology and Psychiatric Sciences, vol. 28, pp. 356–357.

[13] Munder, T. et al. (2019) Is psychotherapy effective? A re-analysis of treatments for depression, Epidemiology and Psychiatric Sciences, vol. 28, pp. 268–274.

[14] Cuijpers, P. et al. (2019) Was Eysenck right after all? A reassessment of the effects of psychotherapy for adult depression, Epidemiology and Psychiatric Sciences, vol. 28, pp. 21–30.

[15] Cuijpers, Pim et al. (2023) Psychological treatment of depression: A systematic overview of a ‘Meta-Analytic Research Domain’, Journal of Affective Disorders, vol. 335, pp. 141–151.

[16] Karyotaki, Eirini et al. (2021) Internet-Based Cognitive Behavioral Therapy for Depression: A Systematic Review and Individual Patient Data Network Meta-analysis, JAMA Psychiatry, vol. 78, p. 361.

[17] Reins, Jo Annika et al. (2021) Efficacy and Moderators of Internet-Based Interventions in Adults with Subthreshold Depression: An Individual Participant Data Meta-Analysis of Randomized Controlled Trials, Psychotherapy and Psychosomatics, vol. 90, pp. 94–106.

Linardon, Jake et al. (2024) Current evidence on the efficacy of mental health smartphone apps for symptoms of depression and anxiety. A meta‐analysis of 176 randomized controlled trials, World Psychiatry, vol. 23, pp. 139–149. ↩︎ ↩︎ ↩︎ ↩︎ ↩︎

Serrano-Ripoll, Maria J. et al. (2022) Impact of Smartphone App–Based Psychological Interventions for Reducing Depressive Symptoms in People With Depression: Systematic Literature Review and Meta-analysis of Randomized Controlled Trials, JMIR mHealth and uHealth, vol. 10, p. e29621. ↩︎

Cuijpers, Pim et al. (2019) Effectiveness and Acceptability of Cognitive Behavior Therapy Delivery Formats in Adults With Depression: A Network Meta-analysis, JAMA Psychiatry, vol. 76, p. 700. ↩︎ ↩︎ ↩︎ ↩︎

M, Ishaan et al. (2023) StrongMinds (4 of 9) - Psychotherapy’s impact may be shorter lived than previously estimated, Effective Altruism Forum, December 13. ↩︎

Cuijpers, Pim et al. (2023) Cognitive behavior therapy vs. control conditions, other psychotherapies, pharmacotherapies and combined treatment for depression: a comprehensive meta‐analysis including 409 trials with 52,702 patients, World Psychiatry, vol. 22, pp. 105–115. ↩︎ ↩︎

Koelen, J. A. et al. (2022) Man vs. machine: A meta-analysis on the added value of human support in text-based internet treatments (“e-therapy”) for mental disorders, Clinical Psychology Review, vol. 96, p. 102179. ↩︎ ↩︎ ↩︎ ↩︎ ↩︎ ↩︎

Leung, Calista et al. (2022) The Effects of Nonclinician Guidance on Effectiveness and Process Outcomes in Digital Mental Health Interventions: Systematic Review and Meta-analysis, Journal of Medical Internet Research, vol. 24, p. e36004. ↩︎ ↩︎ ↩︎

Richards, David A. et al. (2016) Cost and Outcome of Behavioural Activation versus Cognitive Behavioural Therapy for Depression (COBRA): a randomised, controlled, non-inferiority trial, The Lancet, vol. 388, pp. 871–880. ↩︎ ↩︎ ↩︎ ↩︎

Heim, Eva et al. (2021) Step-by-step: Feasibility randomised controlled trial of a mobile-based intervention for depression among populations affected by adversity in Lebanon, Internet Interventions, vol. 24, p. 100380. ↩︎

Furukawa, Toshi A. et al. (2021) Dismantling, optimising, and personalising internet cognitive behavioural therapy for depression: a systematic review and component network meta-analysis using individual participant data, The Lancet Psychiatry, vol. 8, pp. 500–511. ↩︎ ↩︎ ↩︎ ↩︎ ↩︎ ↩︎

Linardon, Jake & Matthew Fuller-Tyszkiewicz (2020) Attrition and adherence in smartphone-delivered interventions for mental health problems: A systematic and meta-analytic review, Journal of Consulting and Clinical Psychology, vol. 88, pp. 1–13. ↩︎

Linardon, Jake et al. (2019) The efficacy of app‐supported smartphone interventions for mental health problems: a meta‐analysis of randomized controlled trials, World Psychiatry, vol. 18, pp. 325–336. ↩︎ ↩︎

Baumel, Amit & John M. Kane (2018) Examining Predictors of Real-World User Engagement with Self-Guided eHealth Interventions: Analysis of Mobile Apps and Websites Using a Novel Dataset, Journal of Medical Internet Research, vol. 20, p. e11491. ↩︎ ↩︎

Baumel, Amit & Elad Yom-Tov (2018) Predicting user adherence to behavioral eHealth interventions in the real world: examining which aspects of intervention design matter most, Translational Behavioral Medicine, vol. 8, pp. 793–798. ↩︎ ↩︎

Baumel, Amit et al. (2019) Objective User Engagement With Mental Health Apps: Systematic Search and Panel-Based Usage Analysis, Journal of Medical Internet Research, vol. 21, p. e14567. ↩︎ ↩︎

Baumel, Amit, Stav Edan & John M. Kane (2019) Is there a trial bias impacting user engagement with unguided e-mental health interventions? A systematic comparison of published reports and real-world usage of the same programs, Translational Behavioral Medicine, p. ibz147. ↩︎

Liu, Eddie (2024) Comment by Eddie Liu on Self-guided mental health apps aren’t cost-effective... yet, Effective Altruism Forum, February 8. ↩︎ ↩︎ ↩︎

Carswell, Kenneth et al. (2018) Step-by-Step: a new WHO digital mental health intervention for depression, mHealth, vol. 4, p. 34. ↩︎

Burchert, Sebastian et al. (2019) User-Centered App Adaptation of a Low-Intensity E-Mental Health Intervention for Syrian Refugees, Frontiers in Psychiatry, vol. 9, p. 663. ↩︎ ↩︎

Liu, Eddie (2024) Comment by Eddie Liu on Self-guided mental health apps aren’t cost-effective... yet, Effective Altruism Forum, February 8. ↩︎

Cuijpers, Pim et al. (2021) Psychotherapies for depression: a network meta‐analysis covering efficacy, acceptability and long‐term outcomes of all main treatment types, World Psychiatry, vol. 20, pp. 283–293. ↩︎ ↩︎ ↩︎ ↩︎ ↩︎

Cuijpers, Pim et al. (2017) Component studies of psychological treatments of adult depression: A systematic review and meta-analysis, Psychotherapy Research. ↩︎ ↩︎

López-López, José A. et al. (2019) The process and delivery of CBT for depression in adults: a systematic review and network meta-analysis, Psychological Medicine, vol. 49, pp. 1937–1947. ↩︎ ↩︎

Jacobson, Neil S. et al. (1996) A component analysis of cognitive-behavioral treatment for depression, Journal of Consulting and Clinical Psychology, vol. 64, pp. 295–304. ↩︎ ↩︎ ↩︎

Lewinsohn, Peter M. & Julian Libet (1972) Pleasant events, activity schedules, and depressions, Journal of Abnormal Psychology, vol. 79, pp. 291–295. ↩︎

Lewinsohn, Peter M. & Michael Graf (1973) Pleasant activities and depression, Journal of Consulting and Clinical Psychology, vol. 41, pp. 261–268. ↩︎

Lewinsohn, Peter M. (1975) The Behavioral Study and Treatment of Depression, in Progress in Behavior Modification, vol. 1, Elsevier, pp. 19–64. ↩︎ ↩︎

Cuijpers, Pim, Annemieke Van Straten & Lisanne Warmerdam (2007) Behavioral activation treatments of depression: A meta-analysis, Clinical Psychology Review, vol. 27, pp. 318–326. ↩︎ ↩︎ ↩︎ ↩︎

Ekers, David et al. (2014) Behavioural Activation for Depression; An Update of Meta-Analysis of Effectiveness and Sub Group Analysis, PLoS ONE, vol. 9, p. e100100. ↩︎

Ciharova, Marketa et al. (2021) Cognitive restructuring, behavioral activation and cognitive-behavioral therapy in the treatment of adult depression: A network meta-analysis., Journal of Consulting and Clinical Psychology, vol. 89, pp. 563–574. ↩︎

Seeman, Neil et al. (2016) World survey of mental illness stigma, Journal of Affective Disorders, vol. 190, pp. 115–121. ↩︎

Dubreucq, Julien, Julien Plasse & Nicolas Franck (2021) Self-stigma in Serious Mental Illness: A Systematic Review of Frequency, Correlates, and Consequences, Schizophrenia Bulletin, vol. 47, pp. 1261–1287. ↩︎

Corrigan, Patrick W. & Amy C. Watson (2002) The paradox of self-stigma and mental illness, Clinical Psychology: Science and Practice, vol. 9, pp. 35–53. ↩︎

Evans-Lacko, S. et al. (2012) Association between public views of mental illness and self-stigma among individuals with mental illness in 14 European countries, Psychological Medicine, vol. 42, pp. 1741–1752. ↩︎ ↩︎

Vogel, David L. et al. (2013) Is stigma internalized? The longitudinal impact of public stigma on self-stigma, Journal of Counseling Psychology, vol. 60, pp. 311–316. ↩︎

Lannin, Daniel G. et al. (2016) Does self-stigma reduce the probability of seeking mental health information?, Journal of Counseling Psychology, vol. 63, pp. 351–358. ↩︎

Pattyn, Elise et al. (2014) Public Stigma and Self-Stigma: Differential Association With Attitudes Toward Formal and Informal Help Seeking, Psychiatric Services, vol. 65, pp. 232–238. ↩︎

Gulliver, Amelia et al. (2021) Predictors of acceptability and engagement in a self-guided online program for depression and anxiety, Internet Interventions, vol. 25, p. 100400. ↩︎

Levin, Michael E., Jennifer Krafft & Crissa Levin (2018) Does self-help increase rates of help seeking for student mental health problems by minimizing stigma as a barrier?, Journal of American College Health, vol. 66, pp. 302–309. ↩︎

Woodward, Aniek et al. (2023) Scalability of digital psychological innovations for refugees: A comparative analysis in Egypt, Germany, and Sweden, SSM - Mental Health, vol. 4, p. 100231. ↩︎

Sijbrandij, Marit et al. (2017) Strengthening mental health care systems for Syrian refugees in Europe and the Middle East: integrating scalable psychological interventions in eight countries, European Journal of Psychotraumatology, vol. 8, p. 1388102. ↩︎

Just take a look through the bibliography! ↩︎

Firth, Joseph et al. (2017) The efficacy of smartphone‐based mental health interventions for depressive symptoms: a meta‐analysis of randomized controlled trials, World Psychiatry, vol. 16, pp. 287–298. ↩︎

Dahne, Jennifer, C. W. Lejuez et al. (2019) Pilot Randomized Trial of a Self-Help Behavioral Activation Mobile App for Utilization in Primary Care, Behavior Therapy, vol. 50, pp. 817–827. ↩︎ ↩︎

Vanderkruik, Rachel C. et al. (2024) Testing a Behavioral Activation Gaming App for Depression During Pregnancy: Multimethod Pilot Study, JMIR Formative Research, vol. 8, p. e44029. ↩︎

Dahne, Jennifer, Anahi Collado et al. (2019) Pilot randomized controlled trial of a Spanish-language Behavioral Activation mobile app (¡Aptívate!) for the treatment of depressive symptoms among United States Latinx adults with limited English proficiency, Journal of Affective Disorders, vol. 250, pp. 210–217. ↩︎ ↩︎ ↩︎

Ray, Siladitya (2023) Threads Now Fastest-Growing App In History—With 100 Million Users In Just Five Days, Forbes, July 10. Retrieved from https://web.archive.org/web/20230710110220/https://www.forbes.com/sites/siladityaray/2023/07/10/with-100-million-users-in-five-days-threads-is-the-fastest-growing-app-in-history/. ↩︎

Koetsier, John (2020) The Odd Story Of How A Massive Meditation App Is Growing In Spite Of Disavowing Normal Growth Methods, Forbes, November 22. Retrieved from https://web.archive.org/web/20201122051035/https://www.forbes.com/sites/johnkoetsier/2020/11/22/the-odd-story-of-how-a-massive-meditation-app-is-growing-in-spite-of-disavowing-normal-growth-methods/. ↩︎

Tholoniat, Pierre (2023) Have your data and hide it too: an introduction to differential privacy, Cloudflare Blog, December 23. ↩︎

Ebert, D. D. et al. (2016) Does Internet-based guided-self-help for depression cause harm? An individual participant data meta-analysis on deterioration rates and its moderators in randomized controlled trials, Psychological Medicine, vol. 46, pp. 2679–2693. ↩︎

Karyotaki, Eirini et al. (2018) Is self-guided internet-based cognitive behavioural therapy (iCBT) harmful? An individual participant data meta-analysis, Psychological Medicine, vol. 48, pp. 2456–2466. ↩︎ ↩︎

Julia_Wise @ 2024-04-08T15:47 (+9)

The ability to schedule when you want (as opposed to a therapist who only has a slot at 2:30 on Thursdays) is another benefit, especially compared to in-person therapy you need to travel to. I have a pet peeve about studies that don't count the cost to beneficiaries of taking time off work, or whatever else they'd be doing with their time, to travel to and from an appointment during the workday.

huw @ 2024-04-09T00:33 (+1)

Yes! This came up in a different way in some of the Step-By-Step studies. Beneficiaries only had to take a phone call, but since it was during the work day this might’ve had a selection effect on recruitment (many of the participants in those studies were housewives).

Corentin Biteau @ 2024-04-08T09:11 (+6)

Thanks for the post. This sounds worth exploring and quite promising, especially if it works.

It's quite impressive that it may be about 60–70% as effective as guided therapy.

I was just wondering about one element that I think could be clearer. What kind of unguided self-help apps are you talking about? Are they already widespread? Are we talking about Thought Saver or Waking Up or something like that? I think a short example of such an app, and how it works, at the beginning could provide a bit more clarity.

huw @ 2024-04-08T12:31 (+1)

Thank you! It’s easy to get lost in the myopia of a good investigation. I’ve added a section to the intro, but in essence, the definition in the literature is very broad and essentially includes any self-learned psychotherapy, regardless of the evidence base for it! Anything that comes up when you type ‘mental health’ in an app store counts, and as such, effectiveness varies wildly.

The ones that tend to get studied are usually more evidence-based and adhere more strictly to the canonical forms of their associated therapies, which might explain why that difference isn’t wider. That’s a long-winded way of saying that yes, Waking Up and Thought Saver would both count (what I have in mind is something pretty close to Thought Saver).

Corentin Biteau @ 2024-04-09T04:59 (+2)

Thanks for the answer! So if I have friends and contacts who have mental health issues, I guess it would be relevant to suggest these kinds of apps to them (at least as a complement)? Do you have any apps in mind that would be better than others? (Thought Saver, I guess.)

huw @ 2024-04-10T00:36 (+4)

I haven’t done an extensive search, but the ones that seem the best to me would be Thought Saver, UpLift, and Clarity CBT Journal. All three of these are from EA-aligned people and seem to be very evidence-based; Clarity is also very popular.

Meditation might be more approachable for people & is less medicalised. Headspace is the most-used and has the most evidence, but if your hypothetical friend wants something a bit less mushy, both 10% Happier and Waking Up are really good (and are both doing something a bit different).

Corentin Biteau @ 2024-04-10T07:08 (+1)

Thanks for the answer!

GeorgeBridgwater @ 2024-05-01T08:14 (+5)

Awesome, great post! It's fantastic to see variations of this intervention being considered. The main concerns we focused on with unguided vs guided delivery methods for self-help were recruitment costs and retention.

As you show with the relative engagement in recent trials, retention seems to play out in favour of unguided given the lower costs. I think this is a fair read; from what I've seen comparing the net effect sizes of these interventions, the "no less than half" figure is about right too.

For recruitment, given the average rate of download for apps, we discounted organic growth as you have. The figure cited for cost per install is correct (see another source here), but it is for general installs. For the unguided self-help intervention, we want the subset of people with the/a condition we are targeting (~5–15%). We then want to turn those installs into active, engaged users of the program. I don't know how much these would increase the costs compared to the $0.02–$0.10 figure: plausibly 6–20 times for installs from the subgroup with the condition (although even the general population may benefit a bit from the intervention). Then there is the cost of turning those installs into active users. Some stats suggest a 23.01% retention rate on day one, falling as low as 2.59% by day 30 (source, similar to your 3.3% real-world app data). So again, we're looking at maybe a 4 to 40 times increase in recruitment costs, depending on the retention window. Overall, that could increase the cost of recruiting an active user by 24 to 800 times (or $0.48 to $80).
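The recruitment arithmetic in this comment compounds two multipliers on top of a general cost per install. The Python sketch below reproduces that compounding; the helper name is hypothetical, and the multipliers are the comment's own rounded ranges rather than independent estimates. Reading them as reciprocals of the quoted prevalence and retention rates is an interpretation, not something the comment states.

```python
def cost_per_active_user(cost_per_install, targeting_multiplier, retention_multiplier):
    """Scale a general cost per install by the two multipliers above (hypothetical helper)."""
    return cost_per_install * targeting_multiplier * retention_multiplier


# Multiplier ranges as stated in the comment
TARGETING = (6, 20)  # only ~5-15% of installers have the targeted condition
RETENTION = (4, 40)  # installs needed per active user (day-1 vs day-30 retention)

low_cost = cost_per_active_user(0.02, TARGETING[0], RETENTION[0])
high_cost = cost_per_active_user(0.10, TARGETING[1], RETENTION[1])
print(f"Combined multiplier: {TARGETING[0] * RETENTION[0]}x to {TARGETING[1] * RETENTION[1]}x")
print(f"Cost per active user: ${low_cost:.2f} to ${high_cost:.2f}")

# Interpretation (assumption): the multipliers are roughly reciprocals of the quoted rates
print(round(1 / 0.15, 1), round(1 / 0.05, 1))      # targeting, from 15% and 5%
print(round(1 / 0.2301, 1), round(1 / 0.0259, 1))  # retention, from 23.01% and 2.59%
```

Multiplying the ranges end to end like this pairs the cheapest install cost with the smallest multipliers and the dearest with the largest, so it gives the widest possible band rather than a likely value.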

Although these would be identical across unguided and guided, the main consideration then becomes whether it costs more to recruit more users or to guide existing users and increase the follow-through/effect size. Quickly adding the above recruitment-cost factors to your CEA gives a cost per active user of 9.6 (1.6 to 32) and cost-effectiveness with a mean of 24 (12 to 47) for guided and 21 (4.8 to 66) for unguided. Taking all of this at face value, which version looks more cost-effective will depend a lot on exactly where and how the intervention is being employed. Even then, there is uncertainty with some of these figures in a real-world setting.

For the unguided app model you outline, I agree that, if successful, it would be incredibly cost-effective, although at present I'd still be uncertain which version would look best. Ultimately, that's up to groups implementing this family of interventions, like Kaya Guides, to explore through implementation and experimentation.

huw @ 2024-05-09T13:00 (+1)

Thank you so much! Your criticism has helped me identify a few mistakes, and I think it can get us closer to clarity. The main difference between our models is around who counts as a 'beneficiary', or what it means to 'recruit' someone.

The main thing I want to focus on is that you're predicting a cost per beneficiary that would be nearly 50% recruitment cost. I don't think that passes the smell test. The main difference is that you're only counting the staff time for active participants, but even with modest dropout, we'd expect the vast majority of staff time to go to users who only complete one or two calls. But you're right to point out that we should factor in dropout between installation and the first guidance call, and when I factor this in, unguided has 7% of the cost of guided at scale.

The rest of this comment is just my working out.

Mistakes I made

One of the mistakes I made was having different definitions for recruitment for each condition in my cost model. If the number in that model says there are 100,000 beneficiaries, in the unguided model this means we got 100,000 installs, but in the guided model it means we got 100,000 participants who had 50–180 minutes of staff time allocated to them. Obviously there are different costs to recruit these kinds of participants.

(Two other mistakes I found: I shouldn't have multiplied the unguided recruitment cost by 2 to account for engagement differences, and I forgot to discount the office space costs for the nonexistent guides in the unguided model.)

I should've been clearer about a 'beneficiary'

To rectify this, let's count a 'beneficiary' as someone who is in the targeted subgroup and completes pre-treatment. This is in line with most of the literature, which counts 'dropout' regardless of whether users complete any of the material, so long as they've done their induction. We don't want to filter this down to 'active' users, since users who drop out will still incur costs.

We have some facts from Kaya Guides:

- They spent US$105 and got 875 initial user interactions, for a cost per interaction of $0.12 (this isn't quite cost per install, but it's close enough)

  - Let's treat this as a conservative estimate: Meta's targeting should get cheaper at scale and after optimisation on Kaya Guides' end. I think it could get down to the $0.02–0.10 figure pretty easily (and it may have already been there if a number of interactions didn't lead to installs)

- 82.65% of users who completed their depression questionnaire scored above threshold

  - This should also be a lower bound. Kaya Guides used basic ads, but Meta is able to optimise for 'app events' (such as scoring highly on this questionnaire), so it should be better than this at targeting for depression (scary!)

- 12.34% (108) of these initially interacting users scheduled and picked up an initial call

- Their programmes involve an initial call, a second call at 3 days (I confirmed this directly), and then 5–8 weeks of subsequent calls. All calls are 15 minutes.
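The Kaya Guides figures above chain into a simple recruitment funnel. This Python sketch only recomputes the quoted ratios from the raw counts ($105, 875 interactions, 108 first calls); treating an interaction as an install follows the comment's own approximation, and the variable names are illustrative.

```python
# Raw counts quoted from Kaya Guides above
ad_spend_usd = 105
initial_interactions = 875  # treated as a stand-in for installs
initial_calls = 108         # users who scheduled and picked up a first call

cost_per_interaction = ad_spend_usd / initial_interactions
start_rate = initial_calls / initial_interactions
cost_per_starter = cost_per_interaction / start_rate  # same as ad_spend_usd / initial_calls

print(f"Cost per interaction: ${cost_per_interaction:.2f}")  # $0.12
print(f"Start rate: {start_rate:.2%}")                         # 12.34%
print(f"Cost per treatment starter: ${cost_per_starter:.2f}")  # $0.97
print(f"Starter cost multiplier: {1 / start_rate:.1f}x")       # 8.1x
```

The last line is where a roughly 8x higher cost per treatment starter comes from: each starter absorbs the ad spend of about 1 / 0.1234 interactions.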

Updating my model

- Split office space costs between conditions

- Removed the recruitment cost doubling in unguided

- Broke down staff time per participant

  - 1–10 calls per participant (lognormal). This is roughly what Kaya Guides and Step-By-Step do. I distributed it as a lognormal to quickly account for dropout rates, but the model is somewhat sensitive to this parameter. More knowledge on its distribution could change the overall calculus.

  - 15–40 minutes of time spent per call (again, roughly consistent with Kaya Guides and Step-By-Step; this accounts for other time spent on that participant, like notes & chat reviews)

- Broke down cost per treatment starter

  - Kept cost per install at $0.02–0.10, since it's consistent with real-world data

  - Set the rate of installers starting the programme at 12.34% (~5–20%), consistent with Kaya Guides' observations

    - I decided to extrapolate this to unguided, in the absence of better data
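The staff-time and recruitment parameters in this update can be sketched as a small Monte Carlo simulation. This is not the actual cost model: it assumes every "a–b" range is a lognormal 90% interval (the convention in tools like Guesstimate and Squiggle), also applies that to the 15–40 minute and ~5–20% ranges (so the start rate's median is 10% rather than the 12.34% point estimate), and leaves out staff wages and office costs, which aren't given here. The function names are hypothetical.

```python
import math
import random
import statistics

# z-score bounding the central 90% of a standard normal
Z_90 = 1.6448536269514722


def lognormal_from_90ci(low, high, rng):
    """Draw from a lognormal whose 5th and 95th percentiles are low and high.

    Assumption: each "a-b" range in the comment is read as a 90% interval.
    """
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z_90)
    return rng.lognormvariate(mu, sigma)


def simulate(n=100_000, seed=0):
    """Return per-draw staff minutes per participant and cost per treatment starter (USD)."""
    rng = random.Random(seed)
    staff_minutes, cost_per_starter = [], []
    for _ in range(n):
        calls = lognormal_from_90ci(1, 10, rng)     # calls per participant (continuous, not rounded)
        minutes = lognormal_from_90ci(15, 40, rng)  # minutes per call, incl. notes and chat reviews
        cpi = lognormal_from_90ci(0.02, 0.10, rng)  # cost per install (USD)
        # share of installers who start; capped at 100% for the vanishingly rare extreme tail
        start_rate = min(lognormal_from_90ci(0.05, 0.20, rng), 1.0)
        staff_minutes.append(calls * minutes)
        cost_per_starter.append(cpi / start_rate)
    return staff_minutes, cost_per_starter


def summarise(xs):
    """Return the (5th percentile, median, 95th percentile) of a sample."""
    xs = sorted(xs)
    n = len(xs)
    return xs[int(0.05 * n)], statistics.median(xs), xs[int(0.95 * n)]


minutes, starter_cost = simulate()
print("Staff minutes per participant (5th, 50th, 95th):", summarise(minutes))
print("Cost per treatment starter in USD (5th, 50th, 95th):", summarise(starter_cost))
```

Using a lognormal for the number of calls gives the long right tail the comment wants for dropout (most participants cluster at a few calls, a few complete many), but it also means the output is sensitive to exactly how that range is interpreted.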

I think this fairly accounts for everything you raised. I think you're right to point out that my model should've accounted for the cost of a treatment starter (~8x higher). But I don't think it's right to only account for active users, since Kaya Guides spend 15 minutes of staff time on 12% of all installers, even if they drop out later. And as their ad targeting gets better, we'd only expect this number to increase, paradoxically widening the cost gap!

Plugging it all in, unguided has 7% (15–22%) of the cost of guided at scale.

Earlier, I also sense-checked with Kaya Guides' direct cost-per-beneficiary, which they estimate to be $3.93. If the unguided cost per beneficiary is $0.41 (as in the updated model), then the limiting proportion increases a bit to 11%.

My doubts

The fact that it took over a month to find some pretty obvious flaws in my model is a concern, and my model is clearly somewhat sensitive to the parameters. However, even if I'm really pessimistic about the parameters, I can't get it above 20% of the cost of guided, which would still make it more cost-effective.

The bigger doubt I've had since writing this report is learning from Kaya Guides that they actually do have an unguided condition—anyone who scores 0–9 on the PHQ-9 (no/mild depression), or anyone who scores above but explicitly doesn't want a guide gets the ordinary programme, just without the calls. This has an astonishing 0% completion rate. I think the different subgroup, programme design, and lack of focus are mostly contributing to this, but it indicates that it's gonna be hard to keep users engaged. I'll chat with them some more and see if I can learn anything else.
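The routing rule described here for Kaya Guides' unguided condition is simple enough to state as code. This is a hypothetical sketch of the rule as the comment describes it, not Kaya Guides' implementation; the function name and the "guided"/"unguided" labels are illustrative.

```python
PHQ9_MAX = 27  # the PHQ-9 sums nine items, each scored 0-3


def assign_condition(phq9_score: int, wants_guide: bool) -> str:
    """Route a user to 'guided' or 'unguided' per the rule described above (hypothetical sketch)."""
    if not 0 <= phq9_score <= PHQ9_MAX:
        raise ValueError(f"PHQ-9 totals range from 0 to {PHQ9_MAX}, got {phq9_score}")
    # 0-9 means no/mild depression: the ordinary programme without calls
    if phq9_score <= 9:
        return "unguided"
    # higher scorers get a guide unless they explicitly opt out
    return "guided" if wants_guide else "unguided"


print(assign_condition(7, wants_guide=True))    # unguided
print(assign_condition(15, wants_guide=True))   # guided
print(assign_condition(15, wants_guide=False))  # unguided
```

Written out this way, the unguided group visibly mixes two different populations (low scorers and higher scorers who opt out), which fits the comment's point that the different subgroup likely contributes to its completion rate.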

Skye Nygaard @ 2024-04-10T21:54 (+2)

Great to see this post! I similarly felt that self-help could be almost as effective and more scalable after engaging with Feeling Good/Feeling Great by Dr. David Burns. He has an app coming out soon that might be a good candidate for this type of work.

SummaryBot @ 2024-04-08T13:42 (+2)

Executive summary: Unguided self-help therapy, particularly using behavioral activation techniques delivered via apps, may be significantly more cost-effective than guided self-help for treating depression in low-resource settings.

Key points:

- Meta-analyses suggest unguided self-help has about 70% the effectiveness of guided self-help for depression, with similar long-term effects.

- Engagement with unguided self-help in trials is no less than half that of guided; real-world engagement is lower but not drastically so. Differences may be more due to encouragement than expertise.

- Cost-effectiveness models estimate unguided self-help could be 10-20x cheaper than guided at scale, with costs potentially decreasing further due to organic growth.

- Behavioral activation therapy is well-suited for unguided delivery due to its simplicity, is a key effective component of CBT, and may be less stigmatizing.

- Unguided self-help is less risky to implement as it can scale faster, fail faster and cheaper, and be highly funding-absorbent. Displacement of other treatments is a concern.

- Advances in AI and technology could help further close effectiveness and engagement gaps between unguided and guided self-help.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

indrekk @ 2024-04-10T13:03 (+1)

So, which unguided self-help app should I try?

huw @ 2024-04-11T00:46 (+1)

Hey there! I wrote up my thoughts in another comment here.

Agustín Covarrubias @ 2024-04-08T16:05 (+1)

This is just a top-notch post. I love to see detailed analyses like this. Props.