RCTs in Development Economics, Their Critics and Their Evolution (Ogden, 2020) [linkpost]

By KarolinaSarek🔸 @ 2021-04-06T12:29 (+78)

This is a linkpost for https://www.financialaccess.org/publications-index/2020/11/4/rcts-in-development-econ

Below you will find copy-pasted quotes from the paper that I tried to organize to provide a quick-ish summary of the main points.

Abstract

The use of RCTs in development economics has attracted a consistent drumbeat of criticism, but relatively little response from so-called randomistas (other than a steadily increasing number of practitioners and papers). Here I systematize the critiques and discuss the difficulty in responding directly to them. Then I apply prominent RCT critic Lant Pritchett's PDIA framework to illustrate how the RCT movement has been responsive to the critiques if not to the critics through a steady evolution of practice. Finally, I assess the current state of the RCT movement in terms of impact and productivity.

7 categories of the critiques

The “Nothing Magic” critiques

- The main version of the Nothing Magic critique is that randomization does not necessarily yield a less biased estimate of impact than other methods.

- Another version of the Nothing Magic critique is that field experiments in economics do not conform to the double-blind standard of RCTs in medical practice, and could therefore be summarized as “there is nothing magic about development economics RCTs.” The inability to run double-blind trials, or even blind trials, means that RCTs in social sciences generally don’t meet the requirements to reduce one of the main sources of expected bias.

- A third version of the critique says that even if RCTs do limit degrees of freedom, nothing is eliminated. Therefore RCTs have to be as carefully scrutinized as other methods.

The Black Box critiques

- An RCT does not necessarily illuminate the actual causal mechanism even when a causal relationship is convincingly established.

- In many cases it is impossible to determine whether the null result is because of an ineffective treatment or an ineffective implementation of the treatment.

- An RCT that is not grounded in theory can be very difficult to interpret regardless of whether the outcome is distinguishable from zero or not. But if there is a well-grounded theory informing the RCT, the benefits of randomization may be quite limited.

The External Validity critiques

- The External Validity critique points out that each RCT is anchored in a highly specific context.

- Thus, while the results from a particular RCT may tell you a lot about the impact of a particular program in a particular place during a particular point in time, they don’t tell you much about the result of running even an exactly identical program in a different context and time.

The Trivial Significance critiques

- The critiques can take several different guises, but all share the basic point that the programs and projects measured and measurable by RCTs yield changes, even when “successful,” that are not big enough to make a difference between poverty and prosperity, at anything approaching the scale of the problem of global poverty.

- These critiques can come from a macro perspective (the things that “really matter” are macroeconomic-level choices like trade policy which cannot be randomized) (Ravallion 2020), a systems perspective (an RCT on increasing vaccination rates doesn’t improve the health system, and may in fact hinder system development) (Garchitorrena et al. 2020), or a political economy perspective (RCTs cannot answer whether investing in transport infrastructure, health systems, or education systems is most likely to lead to growth).

The Policy Sausage critiques

- The Policy Sausage critiques are primarily associated with Pritchett. The simplified version is that policies (whether policies of government or of NGOs) are created through complex and opaque actions influenced by politics, capability, capacity, resource constraints, history and many other factors.

- Impact evaluation, and independent academic research in general, plays only a small role in the policy sausage, especially if it is impact evaluation that comes from outside the organization. Thus, the effort put into an RCT is likely wasted, as it will fail to have an effect on this complex process.

The Ethical critiques

- One is that experimenting on human beings, particularly and especially on people in poor communities as is necessarily the case in development economics RCTs, is inherently unethical.

The “Too Much” critiques

- The critique is that even if there were advantages to RCTs over alternatives, those advantages do not justify the time, monetary, opportunity or “talent” costs they impose.

The Challenge of Responding to The Critiques

What is a randomista?

- A specific example of this difficulty is that of the basic question of defining who (or what) is a randomista. Many of the critiques are founded on what the randomistas believe—but there is certainly no manifesto or statement of beliefs or core principles that defines who is in “the club.”

- The point is not that such statements are meaningless or that no one believes the statements in particular, but that it is very difficult to identify who exactly would sign on to a specific statement and whether those who would or wouldn’t could reasonably be defined as inside or outside the circle of randomistas.

- The intent of this discussion is not to end with the conclusion that the term randomista is so ill-defined and undefinable as to be practically useless, though I think that's true, but to illustrate why it is so hard to respond meaningfully to the critiques (and perhaps to explain why the randomistas have generally stopped responding directly to critics, as evidenced by the fact that none agreed to participate in this volume).

- Any response must necessarily be on behalf of an individual who likely will agree with at least some parts of any particular critique, and who ultimately speaks only for themselves. At the same time, any person who, like I do in this paper, tries to defend RCTs must bear some burden to answer for any statement on behalf of RCTs no matter how off-the-cuff, uncareful, misguided or wrong. It’s not surprising then that few relish, at this late stage of the ongoing discussions, the opportunity to respond to a critique of ill-defined randomistas in general with a specific statement of personal beliefs. What would that accomplish?

The Argument Behind the Arguments

- The disputes between randomistas and their discontents can put too much emphasis on the particularities of methodology, and distract from the more important disagreement behind them. That more important disagreement is about theories of change. Arguments over theories of change (ideas about how the world changes) are hardly unique to the present moment in development economics. Indeed, they are the foundation of development economics (and much of other social sciences): how is it that poor countries become richer (or, why is it that poor countries stay poor)?

- While wary of reducing theories of change to short summaries or points on a chart, nevertheless I find it helpful in the context of this discussion, to think about the competing theories of change along three main axes:

- the value of small versus big changes;

- the value of local knowledge versus technocratic expertise;

- the role of individual versus collective actions via institutions.

- In practice, there is significant variation within the RCT movement and between the critics, such that in some cases there is more in common between a particular RCT advocate and a particular critic than there is between two different critics; again the problem of definition of a randomista arises.

- Underneath each of the critiques of RCTs noted above is a theory of change that differs from that of RCT advocates along at least one of the three axes.

- [...] my impression is that those in the RCT movement tend to believe that small changes can matter a great deal, that technocratic expertise is highly valuable, and that individuals within institutions matter as much as the institutions themselves. Those critics who invoke the Trivial Significance Critique, in contrast, usually agree on the value of technocratic expertise, but disagree about the value of small changes and the role of institutions.

- This difference matters because it influences how one evaluates the quality and especially the utility of evidence.

The Evolution of the “Movement”

In this section, I’ll argue that by examining the evolution of the use and practice of RCTs it is clear that many RCT critiques have in fact been acknowledged to be correct by virtue of changing practices among RCT practitioners and research centers.

Problem-Driven Iterative Adaptation

- In their original paper (which spawned a number of additional papers and ultimately a book, Building State Capacity) Andrews, Pritchett and Woolcock introduce the principles of problem driven iterative adaptation: “We propose an approach, Problem-Driven Iterative Adaptation (PDIA), based on four core principles, each of which stands in sharp contrast with the standard approaches.” These four principles are clearly seen in the evolution of the RCT movement.

- Principle One: Solving locally nominated and defined problems in performance (as opposed to transplanting pre-conceived and packaged "best practice" solutions).

- Kremer was clearly also trying to solve a locally nominated and defined problem in a double sense. First, he was trying to help with ICS’s specific, locally defined problem of picking which seven schools would receive textbooks. Second, he was addressing the locally defined and nominated problem among economists of improving causal identification.

- Sometimes, as with Dupas’s and Kremer’s story, the locally defined and nominated problem was a question that was shared by the economist and an NGO or government agency.

- Principle Two: create an 'authorizing environment' for decision-making that encourages 'positive deviance' and experimentation (as opposed to designing projects and programs and then requiring agents to implement them exactly as designed).

- It’s clear that the initiators of the use of RCTs created an authorizing environment that encourages positive deviance and experimentation, the last quite literally. The level of innovation within the conduct of RCTs is quite impressive.

- A “second wave” of randomistas has gone on to implement much more sophisticated experiments over much longer timeframes (e.g. Blattman and Dercon’s experiments comparing industrial jobs to microcredit) and at much, much larger scales (e.g. Muralidharan and Niehaus’s experiments with NREGA and Aadhaar) on much more complex topics (e.g. Pomeranz’s experiments on taxation schemes and Karlan’s experiments on religious content in an intervention).

- Principle Three: it embeds this experimentation in tight feedback loops that facilitate rapid experiential learning (as opposed to enduring long lag times in learning from ex post "evaluation").

- The only argument about the randomistas’ implementation of this principle is whether it is something they directly created or an extant feature of economics education that they exploited. I would argue it is both.

- Principle Four: it actively engages broad sets of agents to ensure that reforms are viable, legitimate, relevant and supportable (as opposed to a narrow set of external experts promoting the "top down" diffusion of innovation).

- The institutions to support the implementation of RCTs are the best examples of this principle in practice. Since the first RCTs, prominent users of RCTs have created organizations like J-PAL, IPA, and CEGA which easily match the description as broad sets of agents that ensure that reforms are viable, legitimate, relevant and supportable. All of the organizations are involved in ongoing projects to reduce the barriers to the conduct of and reporting of RCTs.

PDIA-driven Evolution and Critiques of RCTs

- True to Andrews, Pritchett, and Woolcock’s promise, the implementation of PDIA has served to vastly improve the practice of RCTs, their relevance to development practice and to policymakers, and to institutionalize the process of conducting and reporting the results of randomized experiments. But two additional points are necessary.

- First, the practice of RCTs has developed as practitioners confronted the problems that they perceived.

- The only way for the RCT movement to evolve into a sustainable and effective force for development was to develop capabilities and solutions internally.

- In this section, I’ll briefly discuss how the PDIA process has led to the evolution of the practice of RCTs to at least in part address many of the critiques of the movement.

Nothing Magic

- As noted in an earlier section, it is not clear how many RCT practitioners ever believed that RCTs were magic or were not subject to any biases.

- It is worth noting that Brodeur et al. (2018) find significantly less evidence of p-hacking and significance searching in RCT and RDD papers than in IV and Difference-in-Difference papers, and Vivalt (2019) finds less significance inflation in RCTs than in papers using other methods. Perhaps even more important in relation to the present discussion, she finds that RCTs have “exhibited less significance inflation over time.”

- It is however likely that many RCT practitioners did not appreciate the many sources of bias that remain in randomized experiments when they first began. If there were believers that RCTs solved all of the problems that critics point out, we would expect that RCT practitioners would resist innovations in implementation and analysis that better account for such sources of potential bias.

- In fact, what we see is that many economists who might be called randomistas are actively innovating to address concerns about bias, reliability and replicability.

Black Box

- Again there has been considerable evolution in the application of RCTs along PDIA principles to address the limitations of simple RCTs in exposing causal mechanisms.

- [...] an emphasis on addressing the “black box” critique is not limited to a few researchers, schools, contexts, or sectors. As more such studies are done, the implicit PDIA process operating within the RCT movement means that establishing mechanisms will increasingly be expected of new RCTs.

External Validity

- The external validity critique would in general be more credible if some of its proponents were as vocal about the problems of external validity of all studies and not just of RCTs. That being said, there are many instances of RCT proponents offering policy advice which assumes external validity.

- Faced with the problem of proving external validity, RCT practitioners have evolved in several ways.

- First they have empirically studied whether the results of RCTs in one context predict results in another.

- At the same time, RCT practitioners have put much more emphasis on applications and studies with multiple arms in multiple contexts.

- Such ex-post replications are becoming easier because of the efforts of other RCT implementers to ensure data and code for all experiments are available for replication.

- Of course, there will always be questions of external validity in the application of any impact evaluation (RCT or otherwise) to predict outcomes in other contexts. But more systematic approaches are also evolving. As more RCTs address causal mechanisms, assumptions about external validity will become more explicit, and more studies will include structural models. This, in turn, will allow more formal frameworks for assessing external validity and integrating results from multiple studies such as Dehejia et al. (2019) and Wilke and Humphreys (2019).

Trivial Significance

- Earlier I noted that a key foundation of the trivial significance critique is differing theories of change between randomistas and critics. Little can be done to respond to a critique that the only changes that matter are macro-level policies. That, however, is more a critique of applied microeconomics in general than of RCTs.

- That being said, the RCT movement has a response to at least one of the critiques emanating from a different theory of change. For those that argue that institutions matter, the institution-building prowess of the randomistas should be impressive. Aside from the obvious examples of IPA and J-PAL, the institutions built by the randomista movement directly and indirectly include the Global Innovation Fund, the Busara Center for Behavioral Research, 3ie, Evidence Action, Development Impact Ventures, AEJ: Applied, and many local survey firms.

- Another related variation laments that RCTs are not well suited for measuring long-term impact (see Ravallion 2020). Confronting these issues has yielded an impressive amount of creativity in application of RCTs. The most direct response to the “too small” critique has been expanding the scale of RCTs.

Policy Sausage

- The translation of RCT results into policy changes has always been an explicit goal of RCT practitioners.

- Put another way, the randomistas have engaged a broad set of agents to ensure the validity and continuity of the use of RCTs to influence policy. A new generation of RCT practitioners are going to be an integral part of policy-making institutions (if for no other reason than the shortage of jobs in academia).

The Ethical Critiques

- There is much less to say on this topic. In part, that is because fundamentally randomistas clearly believe that experimentation with human beings is ethical, regardless of the moral intuitions of the majority of the American public, an attitude of course shared by most scientists.

- On the questions of equipoise, as noted above, this remains an area where the RCT movement has yet to significantly engage as best I can tell.

Too Much: The Final Critique

- I find this the least compelling of all critiques within the economics frame.

- To begin with, as many of the Too Much critiques acknowledge, the emergence of RCTs in development economics is in no small part due to the conditions and structure of the market for academic economics. The use of RCTs gained popularity in the context of widespread questions about the credibility of other methods, in an environment that demanded of aspiring economists that they do work that was credible, novel and publishable. RCTs promised—and delivered—work that was all three. Thus the criticism of Too Much should really be directed at the structures and incentives of the profession not at those who respond to the incentives the profession creates.

- Second, the Too Much critique fails to articulate an objective measure of what the thresholds between “not enough”, “just right”, and “too much” might be. It is objectively true that the use of RCTs and the publication of papers using the method have increased greatly, but this growth must be put in perspective. It’s worth quoting McKenzie’s (2019) look at the data on this question at length: “despite the rapid growth, the majority of development economics papers published in even the top-five journals are not RCTs...[O]ut of the 454 development papers published in these 14 [economic development field] journals in 2015, only 44 are RCTs (9.7%). The consequence is that RCT-studies are only a small share of all development research taking place”.

- Third, the oft-repeated assertion that “enthusiasm for RCTs will fade” seems to me to be a hollow critique. Of course we should expect that methods will continue to improve, and that new innovations in all sorts of research designs will uncover heretofore unappreciated problems and improved approaches.

- Finally, there is the lament that the “brightest and best” economists are wasting their talents focused on RCTs. This critique makes the least logical sense of all. If the critics are right and the problems of RCTs are insurmountable, and there are clearly better alternatives, then that must indicate that those who continue to primarily use RCTs are not the brightest and best. This critique must explain why anyone should believe that the brightest and best are systematically wrong and yet are still worthy of the moniker.

Conclusion

- To conclude, I want to provide a different framework for thinking about the evolution of the practice of RCTs and the various critiques and responses.

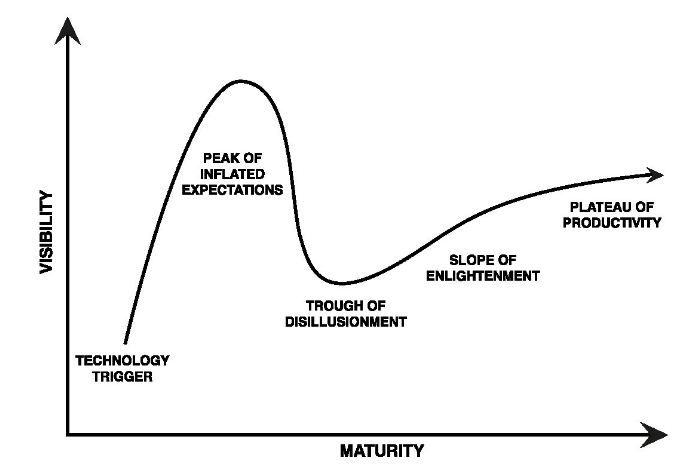

- One of [Gartner’s] most widely known products is the Hype Cycle, a way of conceiving of the emergence, evolution and adoption of emerging technologies. Pritchett stumbled across the Hype Cycle and applied it to RCTs in a 2013 essay. I agree that it is a useful model for thinking about RCTs; in fact, I would argue that RCTs are best thought of as an “emerging technology” in development economics rather than a movement.

- Through the lens of the Hype Cycle, in this paper I have argued that,

- the peak of inflated expectations for RCTs was real, but was never as high as critics made it out to be, and in any event has passed,

- initial enthusiasm for RCTs was quickly met by a range of valid critiques, leading if not to a trough of disillusionment at least to meaningful changes in the use and practice of RCTs, and

- the current state is clearly in the slope of enlightenment phase as evidenced by the statements and practices of advanced users of the technology.

- It is worth noting specifically that the evolution of the practice of RCTs validates many of the critiques detailed here. The evolution that I have attempted to document is responsive to these critiques.

- Beyond that, RCTs are a more useful tool for improving the world than most tools available to the median development economist, given the nature and requirements of the profession, and the difficulties of policy influence.

- It is true that RCTs are unlikely to be a useful tool for evaluating exchange rate policies, the optimal level of public debt, or the consequences of wealth inequality (just as a few examples), but the policies related to the answers to questions on such topics are far less susceptible to academic influence regardless of the methodology used to answer them. There is no reason to believe that the marginal impact of the average development economist studying one of these topics is greater than a precisely-estimated zero.

- In closing, [...] the critiques of the RCT movement are generally valid if not objectively correct. However, many of those critiques have been addressed by the evolution in the practice of RCTs.

Aaron Gertler @ 2021-04-07T05:41 (+13)

Thank you for finding, reading, and excerpting this paper! I'd likely never have seen it without this Forum post, and your post is now the best resource I know of on a topic that comes up a lot when I talk about EA with people. I expect to refer to it often.

KarolinaSarek @ 2021-04-13T15:41 (+15)

Thanks, Aaron! I read quite a lot of papers of this type, so I will consider posting more summaries or excerpts.

DavidBernard @ 2021-04-06T13:47 (+7)

This paper was a chapter in the book Randomized Control Trials in the Field of Development: A Critical Perspective, a collection of articles on RCTs. Assuming the author of this chapter, Timothy Ogden, doesn't identify as a randomista, the only other author who maybe does is Jonathan Morduch, so it's a pretty one-sided book (which isn't necessarily a problem, just something to be aware of).

There was a launch event for the book with talks from Sir Angus Deaton, Agnès Labrousse, Jonathan Morduch, Lant Pritchett and moderated by William Easterly, which you might find interesting if you enjoyed this post.

Stephen Clare @ 2021-04-06T14:07 (+8)

Ogden works with Innovations for Poverty Action (and, incidentally, is on GiveWell's board). I'm not sure he'd identify as a randomista but seems very likely he's favourable to RCTs.

jo @ 2021-04-29T12:54 (+1)

Thanks Karolina, this is great! It's nice to see a balanced perspective considering the pros and cons of RCTs. It can be very frustrating seeing RCTs treated as either a silver bullet or a useless piece of trash. They're a tool in a toolbox like every other kind of research, and in my opinion, best used in conjunction with broader foundational research approaches like qualitative data collection, case studies, descriptive or correlational studies, and so on. I'll definitely be bookmarking this to refer back to.