Modelling civilisation beyond a catastrophe

By Arepo @ 2022-10-30T16:26 (+58)

tl;dr

What would be the expected loss of value of a major catastrophe that stopped short of causing human extinction? To answer this question, we need to focus not simply on the likelihood that we would recover, but rather on the likelihood that we would ultimately reach our desired end state. And we need to formally account for the possibility that the pathway to this end state could involve many such catastrophes and ‘recoveries’.

I present three models that can help us do this. My guess is that, on these models, some plausible assumptions will turn out to imply that a non-extinction catastrophe would involve an expected loss of value comparable to that of outright extinction, for example by starting us down a vicious cycle of declining energy availability and increasing energy costs for materials essential for modern agriculture. If so, this would imply that longtermists who agree with these assumptions should give greater priority to avoiding non-extinction catastrophes. Some assumptions might also have some surprising implications for how we should prioritise among different methods of making civilisation more resilient to such catastrophes.

Introduction

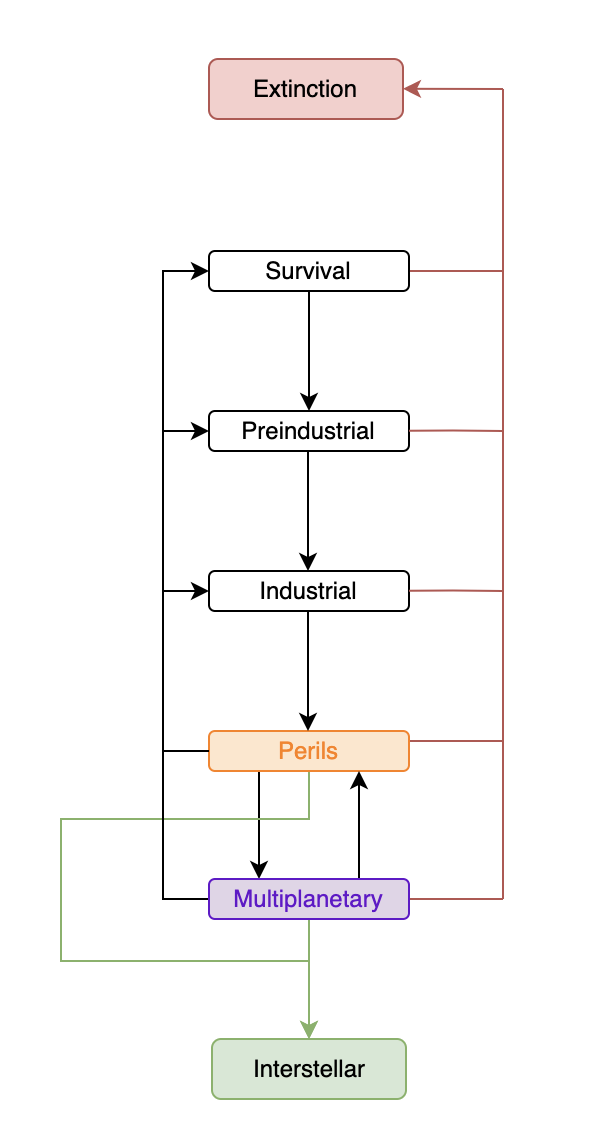

In this post I present a family of models of the future of civilisation that divide the possible states of civilisation by the milestones of technological development they’ve reached following what I’ll call a milestone catastrophe (see previous post for complete definition). We can then label these as separate states, and reason about the transitions between them. The states follow those identified in historical and existential risk literature (extinction, short term struggle for survival, preindustrial, industrial, time of perils, multiplanetary, interstellar), but the models also attempt to intuitively capture the key points I claimed were missing from most discussions of ‘recovery’ after a catastrophe, specifically:

- ‘becoming interstellar, and eventually colonising the Virgo supercluster’ as the relevant positive outcome rather than focusing on a single ‘recovery’

- the effect of the possibility of multiple civilisational collapses on the probability of colonising the Virgo supercluster

- treating ‘permanent stagnation’ and ‘unrecoverable dystopia’ as the pathways on which we don’t become interstellar, rather than as distinct end states

In each model, the relationships between these states form a discrete time Markov chain - a comprehensive if highly idealised set of probabilities of moving from any state to the others. The two more complex models just add nodes to the chain, to provide a somewhat more nuanced picture.

Three models of increasing complexity

The cyclical model

In this version we keep things simple, treating each civilisation as having approximately a constant transition probability between states. That is, no matter how many times we contract to an earlier state, we assume that, from any given level of technology, the probability of going extinct or of becoming interstellar is the same as at equivalent points of development in previous civilisations.

Here we have the following states:

Extinction: Extinction of whatever type of life you value any time between now and our sun’s death (i.e. any case where we've failed to develop interplanetary technology that lets us escape the event).

Survival: Scrabbling for survival as Rodriguez described in the years or decades following an extreme civilisational catastrophe, before a new equilibrium emerges.

Preindustrial: Civilisation has regressed to pre-first-industrial-revolution technology (either directly or via a period of Survival).

Industrial: Civilisation has technology comparable to the first industrial revolution but does not yet have the technological capacity to do enough civilisational damage to regress to a previous state (eg nuclear weapons, biopandemics etc). A formal definition of industrial revolution technology is tricky but seems unlikely to dramatically affect probability estimates. In principle it could be something like 'kcals captured per capita go up more than 5x as much in a 100 year period as they had in any of the previous five 100-year periods.’[1]

Perils: Civilisation has reached the ‘time of perils’ - the capacity to produce technology that could either cause contraction to an ‘earlier’ state OR cause something equally bad to extinction (e.g. misaligned AI) - but does not yet have multiple self-sustaining colonies.

Multiplanetary: Civilisation has progressed to having at least two self-sustaining settlements capable of continuing in an advanced enough technological state to settle other planets even if the others disappeared, which are physically isolated enough to be unaffected by at least one type of technological milestone catastrophe impacting the others. Each settlement could still be in a state of relatively high local risk, but the risk to the whole civilisation declines as the number of settlements increases.

Interstellar: Civilisation has progressed to having at least two self-sustaining colonies in different star systems, or is somehow guaranteed to - we treat this as equivalent to ‘existential security’.[2]
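To make the chain concrete, here is a minimal sketch of the cyclical model as an absorbing Markov chain, with Extinction and Interstellar as the two absorbing states. Every number in `TRANSITIONS` is a hypothetical placeholder rather than an estimate from this post, the set of allowed transitions is my assumption about the diagram, and each row describes where a civilisation eventually goes when it leaves a state (so there are no self-loops).

```python
# Hypothetical placeholder probabilities; NOT estimates from the post.
# Each row: probability of the next distinct state reached from that state.
TRANSITIONS = {
    "survival":       {"extinction": 0.10, "preindustrial": 0.90},
    "preindustrial":  {"extinction": 0.05, "industrial": 0.95},
    "industrial":     {"extinction": 0.02, "perils": 0.98},
    "perils":         {"extinction": 0.10, "survival": 0.10,
                       "preindustrial": 0.10, "industrial": 0.10,
                       "multiplanetary": 0.60},
    "multiplanetary": {"extinction": 0.02, "survival": 0.02,
                       "preindustrial": 0.02, "industrial": 0.02,
                       "perils": 0.12, "interstellar": 0.80},
}


def p_interstellar(transitions, max_sweeps=10_000, tol=1e-12):
    """Probability of eventually becoming interstellar from each state.

    Repeatedly applies value[s] = sum_t P(s -> t) * value[t], holding the
    absorbing states fixed, until the values stop changing.
    """
    value = {state: 0.0 for state in transitions}
    value["extinction"] = 0.0    # absorbing failure state
    value["interstellar"] = 1.0  # absorbing success state
    for _ in range(max_sweeps):
        biggest_change = 0.0
        for state, row in transitions.items():
            new = sum(p * value[target] for target, p in row.items())
            biggest_change = max(biggest_change, abs(new - value[state]))
            value[state] = new
        if biggest_change < tol:
            break
    return value


if __name__ == "__main__":
    for state, p in p_interstellar(TRANSITIONS).items():
        print(f"{state:15s} {p:.3f}")
```

Because the transition probabilities do not depend on how many collapses have already happened, a single table like this describes every civilisation, which is exactly the simplification the cyclical model makes.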

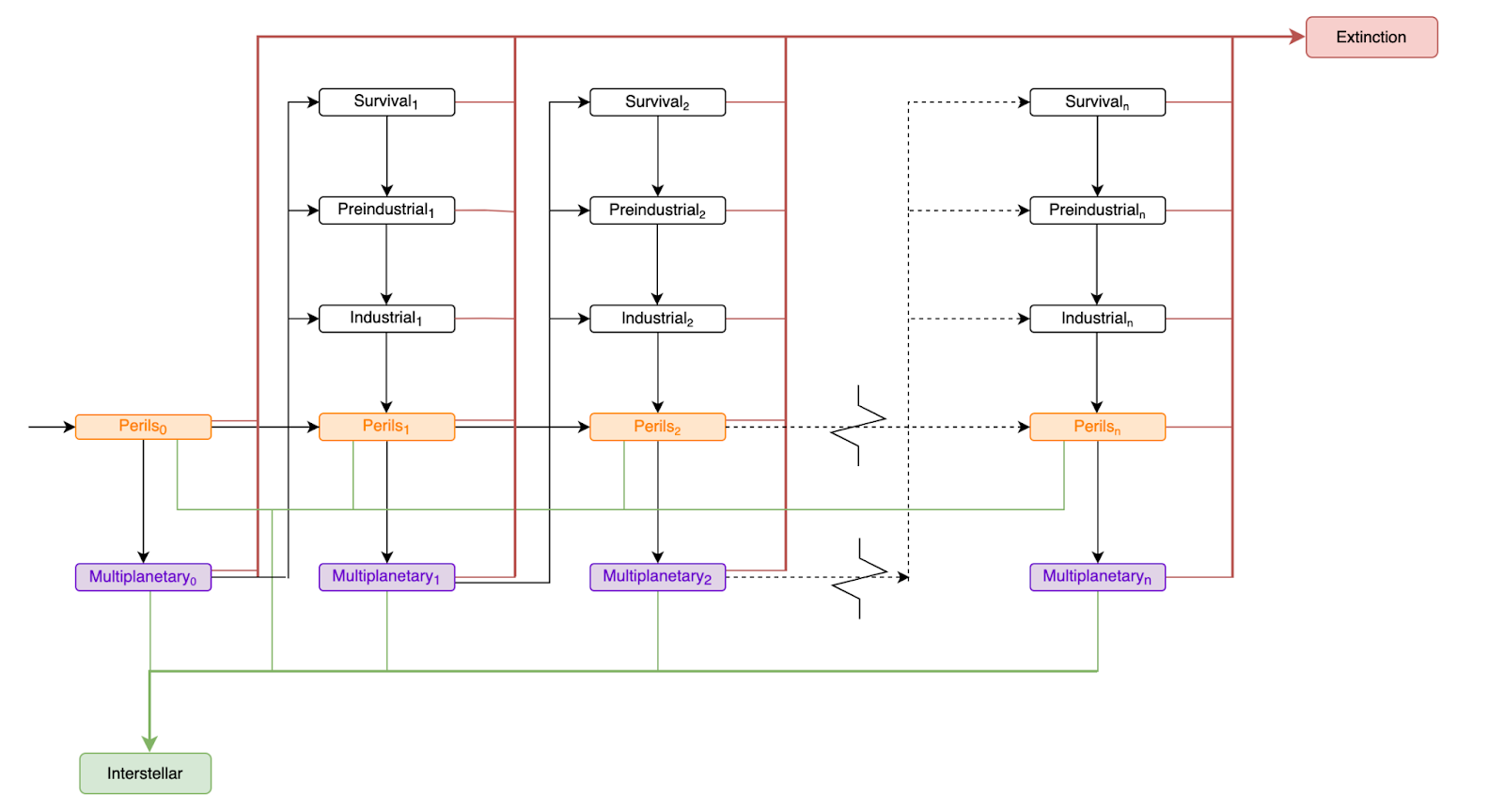

The decay model

The cyclical model captures the core intuition behind ‘recovery is not enough’, and I hope to make a simple calculator for it. But it’s missing an important notion - that prospects for success might predictably differ after multiple catastrophes. My strong intuition is that each civilisation will tend to use the lowest-hanging fruit in terms of resources, and while some of those might replenish in preindustrial periods (eg topsoil, woodlands), and they might leave much of it to the next civilisation in easily accessible forms (the materials that make up old vehicles, buildings etc), much of the latter will be in unrecoverable states, and much of what can be recovered will be lost in the recycling process. We could represent this either with key discontinuities, for example…

- the first reboot likely still having coal, with fossil fuels probably no longer economically useful to subsequent civilisations[3]

- rebooted societies plausibly having a much more cautious approach to developing harmful technology (which would presumably still lead to a decline across multiple reboots, but conceivably make early ones actually have greater probability of becoming interstellar than our current state if you believed this was a strong enough effect)

- somewhere around reboot 2 or 3 we would likely run out of phosphorus reserves

… or with a smooth decay in the probability of each civilisation becoming interstellar, or with a combination of the two.

To try to capture this concern, the decay model tracks the notion of ‘technological milestone catastrophes’, hereafter just ‘milestone catastrophes’, described in the previous post,[4] such that some of the transitional probabilities could be a function of how many times we’ve had such a catastrophe (such that, for example, the probability of achieving some technological milestone like industrialisation goes down with the number of milestone catastrophes):

Here the subscript number represents the number of milestone catastrophes each civilisation has passed through to reach that state, where each milestone catastrophe is equivalent to moving a column rightwards. Eg Preindustrial_k is a civilisation with preindustrial technology that has had k milestone contractions, and so on (visually, each column represents a milestone contraction leading to a civilisational reboot, such that moving right or up on this model is ‘bad’, moving down is ‘good’ - at least on a total utility view, and assuming that reboots make us less likely to have a better future). Also, the definition of Perils_k is now ‘having the capacity to produce weapons that do enough civilisational damage to cause a milestone catastrophe, transitioning them to the (k+1)th civilisation’.
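As a rough sketch of what ‘transition probabilities as a function of k’ could look like, the snippet below collapses each column of the decay model into three per-civilisation outcomes: becoming interstellar, going extinct, or suffering a milestone catastrophe and rebooting as civilisation k+1. The function names, the decay shapes and all numbers are hypothetical illustrations, not estimates.

```python
def p_ever_interstellar(success, extinct, max_reboots=1000):
    """Chance of ever becoming interstellar, starting from civilisation k = 0.

    success(k): probability that civilisation k becomes interstellar.
    extinct(k): probability that civilisation k goes extinct.
    The remainder, 1 - success(k) - extinct(k), is the probability of a
    milestone catastrophe that leads to civilisation k + 1.
    """
    p_reach_k = 1.0  # probability of getting as far as civilisation k
    total = 0.0
    for k in range(max_reboots + 1):
        reboot_k = 1.0 - success(k) - extinct(k)
        if reboot_k < 0.0:
            raise ValueError(f"probabilities exceed 1 at k={k}")
        total += p_reach_k * success(k)
        p_reach_k *= reboot_k
    return total


# Hypothetical shapes for the decay, for illustration only.
def smooth_decay(k):
    return 0.4 * 0.7 ** k  # each reboot is 30% less likely to succeed


def with_discontinuity(k):
    # Smooth decay plus a one-off halving from the second reboot onwards,
    # standing in for something like a key resource running out.
    return smooth_decay(k) * (0.5 if k >= 2 else 1.0)


def constant_extinction(k):
    return 0.1


if __name__ == "__main__":
    print(p_ever_interstellar(lambda k: 0.4, constant_extinction))  # no decay
    print(p_ever_interstellar(smooth_decay, constant_extinction))
    print(p_ever_interstellar(with_discontinuity, constant_extinction))
```

With no decay this reduces to the cyclical model, where the answer is simply success / (success + extinction); any decay in `success(k)` pulls the total below that.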

An interesting property that falls out of this model is that different views on probability decay-rates given k - that is, how much the probability of achieving key technological milestones decreases for each milestone catastrophe - could lead us to quite different resilience priorities.

For example, if we expect a high decay rate and we assume that it’s mostly due to resource scarcity that mainly affects the postindustrial eras, then civilisations are effectively count-limited more than, as e.g. Ord characterises them in The Precipice, time-limited.

This could make attempts to speed up recovery less effective than attempts to give each future reboot the best chance of success or to increase the maximum number of reboots, such as husbanding resources that could be passed to many of them.[5]

Similarly, it might imply that catastrophes which destroy resources (such as nuclear war) are substantially worse in expectation than those that leave them behind (eg biopandemics) - because some of them could have been used not just in the first reboot, but for potentially many subsequent ones.

On the other hand, a low decay rate would indicate that the expected harms of very long-lasting problems might be high even if they’re comparatively mild in any given iteration, especially combined with a low probability of success per iteration. If we had hundreds of realistic chances to become interstellar but expected to need to use a high proportion of them before success, then even environmental harms that reduced the probability of success per iteration by a few tenths of a percent could add up to a large decline in our long-term prospects. Possible examples include accumulating diseases, residual climate change, genetic loss of intelligence, debris in medium Earth orbit, long-lived radioisotopes, or ecosystem-level accumulation of toxic metals (though I’m very unsure about the likelihood of any given one of these).
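A quick illustration of the low-decay case, under heavily simplified assumptions: a fixed number of chances, an identical success probability on each, and no extinction risk in between. The figures of 300 chances, 0.5% per chance and a 0.2 percentage point harm are hypothetical, chosen only to show the shape of the effect.

```python
def p_success_within(chances, p_per_chance):
    """Probability of at least one success in a fixed number of independent
    attempts, ignoring extinction between attempts (a simplification)."""
    return 1.0 - (1.0 - p_per_chance) ** chances


# Hypothetical illustration: 300 chances at 0.5% each, versus the same
# 300 chances after a lasting harm cuts each one to 0.3%.
baseline = p_success_within(300, 0.005)
harmed = p_success_within(300, 0.003)

if __name__ == "__main__":
    print(f"baseline: {baseline:.3f}")
    print(f"harmed:   {harmed:.3f}")
    print(f"loss:     {baseline - harmed:.3f}")
```

Under these assumptions a harm of two tenths of a percentage point per iteration removes a large fraction of the overall chance of success, because it is paid on every attempt.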

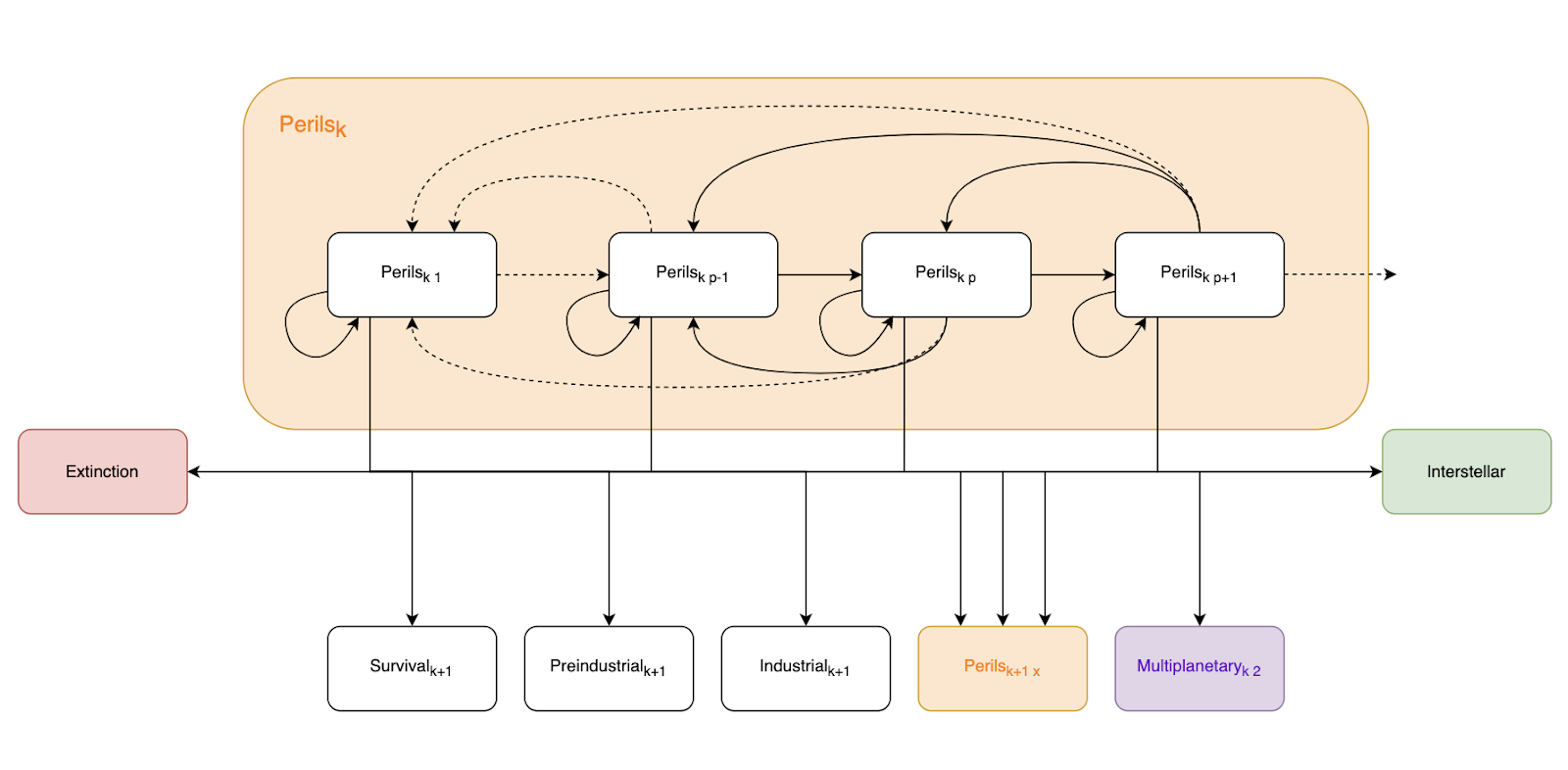

The perils-focused model

The decay model captures most of my strong intuitions, and might be the best balance between fidelity and computation time, but it’s still missing some details about the modern age (time of perils onwards) which seem important to me:

- the risk per year in times of perils is less of a constant than a function of technological and social progress

- civilisations can have ‘minor’ contractions during the time of perils, elongating the period and so increasing premature extinction risk by a small but potentially noteworthy amount.

To give a toy example of the latter, if we think the average annual risk of a milestone catastrophe during the time of perils is 0.2%, then an economic depression that set us back 10 years would naively reduce our probability of transitioning to a multiplanetary state before any milestone catastrophe by about 2%. If we then assumed that our chances of success given a milestone catastrophe decreased by 20%, then the economic depression would have decreased longterm expected value by about 0.4%. Not much relative to immediate extinction, but enough to put it in the same playing field as direct extinction risks if it turned out to be very much more likely and/or very much more tractable. These numbers should only be taken at best as a very rough indication of what’s in scope - I aim to fill them in with my best guesses in a future post.
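The arithmetic behind the toy example can be written out as follows. This follows the naive reading in the text, treating the 10 lost years as 10 extra years of exposure to a constant annual risk; the function and parameter names are mine.

```python
def toy_depression_loss(annual_risk, years_lost, relative_drop_in_success):
    """Naive expected-value loss from a setback during the time of perils.

    Returns (extra_catastrophe_prob, expected_value_loss), both as fractions.
    """
    # Chance of at least one milestone catastrophe during the extra years.
    extra_catastrophe_prob = 1.0 - (1.0 - annual_risk) ** years_lost
    # Each such catastrophe is assumed to cut the chance of eventual
    # success by the given relative amount.
    expected_value_loss = extra_catastrophe_prob * relative_drop_in_success
    return extra_catastrophe_prob, expected_value_loss


if __name__ == "__main__":
    extra, loss = toy_depression_loss(0.002, 10, 0.20)
    print(f"extra catastrophe probability: {extra:.2%}")
    print(f"expected value loss:           {loss:.2%}")
```

With the numbers from the text, the extra catastrophe probability comes out at just under 2% and the expected value loss at just under 0.4%.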

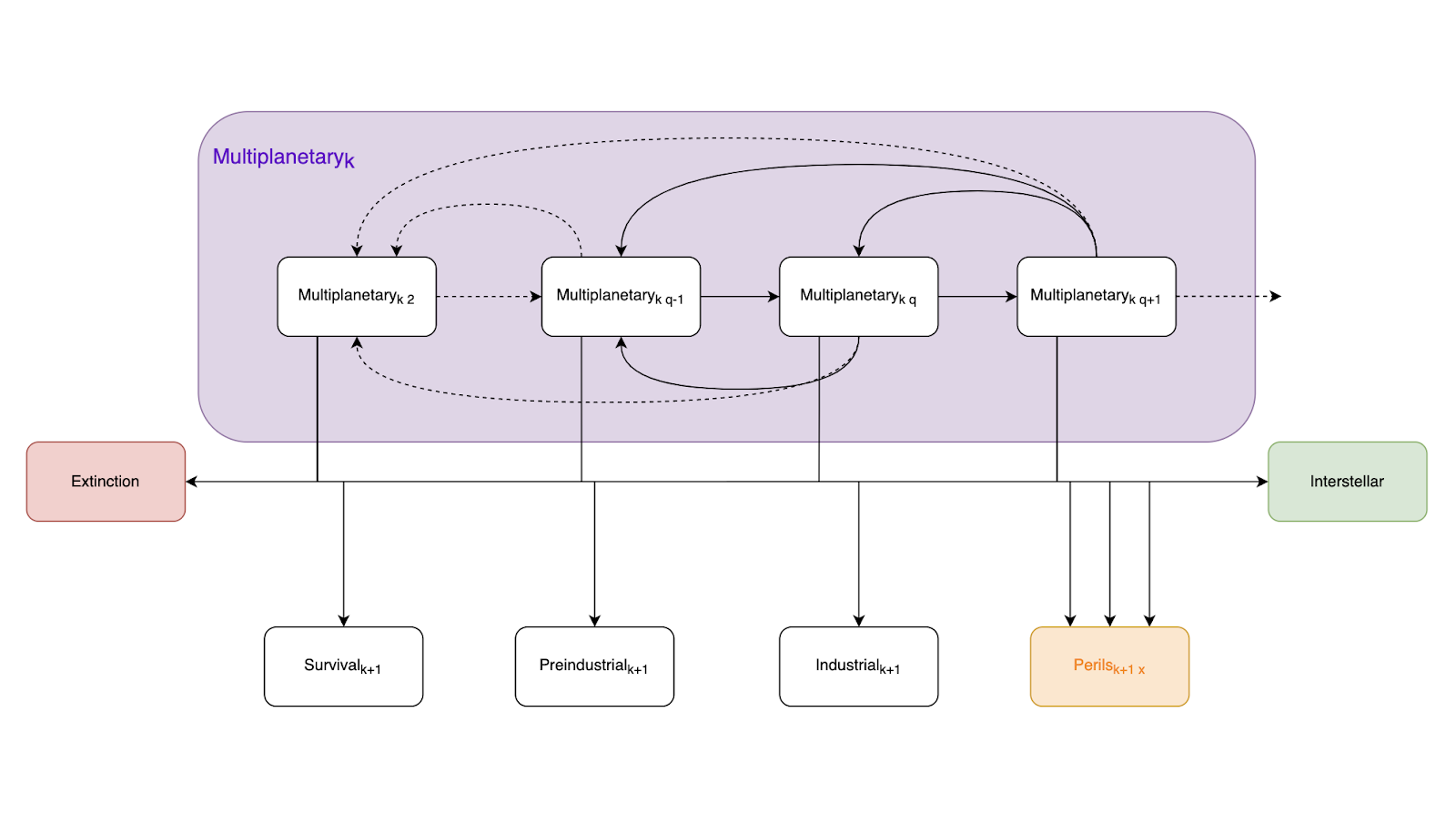

Similarly, though perhaps much less importantly, a civilisation with 2 planets seems much less secure than one with 10, both causally and evidentially - a 10-planet civilisation provides much better protection against many existential threats and much more strongly implies that humanity has reached a point where its settlements are likely to have a reproduction rate > 1.

To capture this, we can zoom in on progress through the time of perils, treating each ‘unit of progress’ as a distinct state:

The most convenient unit of progress seems to be a year, since that allows us to work with a lot of annualised data, such as GDP and the highest fidelity risk estimates. Thus we have an extra parameter:

Perils_k,p = A year of a civilisation in ‘time of perils’ state, where that civilisation has had k milestone catastrophes, and has reached the equivalent of p years of uninterrupted technological progress through the time of perils - though we allow the idea of ‘travelling backward’ to represent relatively minor global setbacks (eg the 2008 financial crisis might have knocked us back a couple of years). You could think of these as Quality Adjusted Progress Years.

And we could do something very similar with ‘number of colonies’ in the interplanetary state:

Multiplanetary_k,q = Civilisation has progressed to having q>=2 self-sustaining settlements on different planets[6] after k milestone catastrophes.

Though this feels less important, since my intuition is that by the time we had 3-5 colonies, we’d be about as secure as we were ever going to get - but this model gives scope for one to disagree.
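Here is one way the year-by-year perils states could be sketched for a single civilisation (fixed k). Each year the civilisation either suffers a milestone catastrophe, suffers a minor setback that costs it some progress years, or advances by one progress year; reaching `target_years` counts as becoming multiplanetary. The risk function, setback size and all numbers are hypothetical placeholders.

```python
def p_reach_multiplanetary(target_years, annual_risk, setback_prob,
                           setback_years, max_sweeps=10_000, tol=1e-12):
    """Chance of reaching `target_years` of progress before any milestone
    catastrophe, starting from progress year 0.

    annual_risk(p): milestone catastrophe probability in progress year p.
    setback_prob:   yearly chance of a minor setback (absent a catastrophe).
    setback_years:  progress years lost in a minor setback.
    """
    # value[p] = chance of eventual success from progress year p.
    value = [0.0] * target_years + [1.0]
    for _ in range(max_sweeps):
        biggest_change = 0.0
        # Sweep from late years to early ones so success propagates quickly.
        for p in reversed(range(target_years)):
            back = max(0, p - setback_years)
            survive = 1.0 - annual_risk(p)
            new = survive * (setback_prob * value[back]
                             + (1.0 - setback_prob) * value[p + 1])
            biggest_change = max(biggest_change, abs(new - value[p]))
            value[p] = new
        if biggest_change < tol:
            break
    return value[0]


if __name__ == "__main__":
    # Hypothetical risk profile: annual risk falls slowly as progress accrues.
    def falling_risk(p):
        return 0.004 * 0.98 ** p

    print(p_reach_multiplanetary(100, falling_risk, 0.0, 5))
    print(p_reach_multiplanetary(100, falling_risk, 0.05, 5))
```

Comparing the two printed values gives a feel for how much minor setbacks alone cost under a given risk profile; the multiplanetary states indexed by number of settlements could be handled the same way.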

Limitations of this approach

Necessarily all three of the models are heavily idealised. They’re my attempt at a reasonable compromise between capturing some important concerns we’ve been leaving out while not getting too far into the weeds (YMMV). Nonetheless I think it’s important to acknowledge several limitations of this approach, in case others think they’re significant enough to want to include them in a very different model, or argue that I should work them into one like these. In roughly descending order of importance:

1. The models focus mainly on technological progress through the time of perils, or on forms of progress that behave similarly. The picture could look very different if some other outcome that isn’t analogous to or more or less a function of technology dramatically changes x-risk - long-lasting global totalitarian governments or particularly successful/unsuccessful work on AI safety being evident candidates.

2. The models don’t look at whether different values might emerge after catastrophe, or any other reasons why one might cause a positive or negative change to wellbeing per person. I think this is a really important question, but a largely unrelated one - or at least, any interdependent assumptions would be very difficult to model.

3. The models have little historical or social context - we don’t account for how we arrived at any state, except via the count of former civilisations, via our choice of entry point, and, in the perils-focused model, via the notions of progress years and planets. Theoretically we can average over all possibilities, but this obscures many nuances:

- It makes it hard to represent possibly importantly different social and moral attitudes in a world that’s just regressed to industrial living vs one that’s finally achieved it after several preindustrial generations. For example global long-term totalitarian governments of the sort Bostrom and Ord discuss end up as an averaging factor in the transition probabilities.

- It makes it hard to model a series of catastrophes, where we regress in many respects to pre-times of perils, but for example still have the capacity to launch former nuclear arsenals. Implicitly we would treat the actual use of such arsenals subsequent to the original event as part of the same overall catastrophe.

- The length of previous times of perils (and to a lesser extent industrial eras) might be highly relevant to the state the next civilisation finds itself in. Longer technological eras would basically act as a multiplier on the consequences of the previous civilisation: they would use up more resources, probably inflict other environmental damage, may leave behind more knowledge, and the technology they leave behind may be more durable and/or less useable to a more primitive successor civilisation.

- The length of time between civilisations might be important. If it takes 10,000+ years, they could actually be in a better position than after only 500 since excess CO2 will have left the atmosphere, forests will have regrown, most orbital debris will have burned up etc - so as long as the preindustrial civilisation hadn’t been too voracious, they’d start the industrial era with a cleaner slate. This could be especially important in a count-limited scenario. This time-insensitivity will also make it hard to account for non-anthropogenic changes over time, such as glacial periods.

- Post-catastrophe environmental stressors will differ depending on the catastrophe.

4. Some of the states are hard to define crisply:

- The model assumes civilisation-harming weaponry is on the critical path to reaching multiplanetary status. This is not logically necessary but, for reasons given in footnote 3 of the previous post, I think almost certain (>98%) in practice.

- How exactly does the time of perils start? ‘Civilisation-harming weaponry’ is not well defined. We could specify technologies, but there might be others we haven’t imagined, and some we have imagined could be more or less destructive than we expect.

- What counts as a self-sustaining colony? Could a sufficiently robust and extensive bunker system be one? Or even ‘New Zealand’ - though that might be vulnerable to loss of energy or an influx of refugees. In the other direction, an offworld colony needn't be permanently able to exist without Earth to afford some security; for example, if they could last at least a few years without running out of some key material, they might be able to repopulate this planet after e.g. a nuclear winter or biopandemic that killed everyone on the surface. So in practice, self-sustainingness might look more like a spectrum than a threshold.

- What is an industrial revolution? I gestured at a formal definition above, but that might turn out to be inadequate if we could progress slowly enough towards time of perils technology that the condition didn’t trigger until after we’d got there.

- [ETA: Is 'extinction' specifically of human descendants? Or does it mean something more like 'the event that, when the sun consumes the Earth, no sentient life has escaped from it'? The latter could be approximated to some degree, e.g. by a higher probability of both returning to and escaping from a preindustrial state, but it would be hard to properly account for the difference between 'humans take a few thousand years to regain technology' and 'lizards take a few 10s of millions of years to evolve advanced intelligence'. It would probably be better to separately assess the prospects of a future species' civilisation and add them to our own, rather than to attempt to blend them together.]

5. ‘Interstellar’ is treated as equivalent to ‘existentially secure’. In principle a gamma ray burst, a simultaneous total collapse of multiple civilisations, a false vacuum decay or a hostile AI could get us - but I’m reasonably content with this assumption, for the reasons given in footnote 2.[2]

6. It broadly assumes we're interested in expected utility maximisation, so will be less useful to people with value-systems or decision theories that reject this.

7. It has low fidelity regarding AI trajectories. This is somewhat intentional, since a) much more work has been done on estimation of these subjects than on other milestone catastrophes, at least in the EA-adjacent community, so many people will have better informed estimates than mine that they can plug in, and b) the AI-resolves-everything narrative is still under discussion, so our models should allow worlds in which AI could be much harder to develop or much less transformative than our typical estimates.

8. It doesn't look at lost astronomical value from delayed success. In Astronomical Waste, Bostrom calculated that a 10-million year delay wouldn't make much relative difference, but perhaps there’s a delay within the lifespan of the Earth where expected losses of values would start to look comparable.

9. I'm really unsure how to think about evidential updates if/when we reach certain future states. If we were to reach the 100th civilisation, having neither gone extinct nor interstellar, it seems like we should strongly update that reaching either state is very unlikely from any given civilisation (at least until the expanding sun gets involved). But should the logic we use now to estimate transitional probabilities from that state take that update into account, or should we only do the update if we actually reach it? [ETA: I've come to think we should not try to anticipate such evidential updates, since most of what we will want to use the model for is imagining the difference between the present and the possible counterfactual effects of actions we might take now, for which we need to make our best guess as to the state of the world with the information we currently have.]

10. It treats some non-0 transition probabilities as 0. For example, it’s ‘impossible’ to go from an industrial state directly to a preindustrial state. I don’t view this as a serious concern for three reasons: firstly I do think that transition is unlikely, secondly because we can encapsulate the slight chance that it happens and we consequently go extinct as humanity going extinct from an industrial state (and the chance that it happens and we reach a time of perils as having just made that transition directly), and thirdly because it’s trivial to insert the transition probabilities into the model for anyone who feels strongly they should be there.

Next steps

I’ve presented the models here in the abstract for critique, with the following potential goals in mind:

- Plug in or extrapolate existing estimates of transition probabilities where possible and elsewhere source from interviews or give my own reasoning to develop a crude estimate of the difference between probabilities of becoming interstellar a) given no non-existential global catastrophe (or, using the previous post’s terms, no milestone contraction) and b) given at least one. The exact difference doesn’t matter so much as ‘when you squint, does it basically look like 0, or like not-0?’ If the latter, it will suggest longtermists should treat non-extinction catastrophes as substantially more serious than we typically have been[7]

- Create a calculator so that anyone can tweak the probabilities. Ideally this would have enough flexibility to let them input more complex functions to describe the probabilities (in practice this might just mean ‘open source the calculator’)

- Use the calculator for some sensitivity analysis

- Consider real world implications of the significance of different variables

- Plug the calculator into some Monte Carlo simulation software, for more robust versions of each of the above
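As a very rough sketch of what the Monte Carlo step might look like, the snippet below samples uncertain inputs for a stripped-down decay model and records the difference between the chance of becoming interstellar starting from civilisation 0 and starting from civilisation 1 (my simplified reading of ‘given no milestone catastrophe’ versus ‘given at least one’). The uniform ranges and the simplified model are hypothetical stand-ins for whatever the real calculator ends up using.

```python
import random
import statistics


def p_interstellar_from(k_start, success_0, extinct, decay, max_reboots=300):
    """Chance of ever becoming interstellar starting from civilisation
    k_start, where civilisation k succeeds with probability
    success_0 * decay**k and goes extinct with probability `extinct`."""
    p_reach = 1.0
    total = 0.0
    for k in range(k_start, k_start + max_reboots):
        success_k = success_0 * decay ** k
        total += p_reach * success_k
        p_reach *= 1.0 - success_k - extinct
    return total


def sample_differences(n_samples=1000, seed=0):
    """Sample hypothetical parameter ranges and return, for each sample,
    P(interstellar from k=0) minus P(interstellar from k=1)."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    diffs = []
    for _ in range(n_samples):
        success_0 = rng.uniform(0.2, 0.8)  # hypothetical range
        extinct = rng.uniform(0.01, 0.2)   # hypothetical range
        decay = rng.uniform(0.5, 1.0)      # hypothetical range
        before = p_interstellar_from(0, success_0, extinct, decay)
        after = p_interstellar_from(1, success_0, extinct, decay)
        diffs.append(before - after)
    return diffs


if __name__ == "__main__":
    diffs = sorted(sample_differences())
    print(f"mean difference:   {statistics.mean(diffs):.3f}")
    print(f"5th percentile:    {diffs[len(diffs) // 20]:.3f}")
    print(f"95th percentile:   {diffs[-len(diffs) // 20]:.3f}")
```

The point of a summary like this is the ‘squint test’: whether the spread of differences sits near 0 or clearly away from it across the range of inputs one finds plausible.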

Where I go next depends somewhat on the feedback I get about this model - but if the basic approach seems useful, I expect to proceed through these steps in more or less this order.

Acknowledgements

I’m extremely grateful to John Halstead for agreeing to commit to ongoing advice on the project, with no personal benefit.

I’m also indebted to David Thorstad, Siao Si Looi, Luisa Rodriguez, Christopher Lankhof, David Kristoffersson, Nick Krempel, Emrik Garden, Carson Ezell, Alex Dietz, Michael Dickens, David Denkenberger, Emily Dardaman, Joseph Carlsmith and Florent Berthet for their input on all three of these posts. Additionally, the comprehensibility of the diagrams in this post is entirely due to Siao Si Looi’s design skills.

This is a subject I’ve wanted to write on for several years, but it has only been possible to take the time to even begin to do it justice thanks to a grant from the Long Term Future Fund.

Mistakes, heresies, and astrological disruptions are all mine.

[1] Gregory Clark has criticised kcals captured as a measure of progress in his review, for having somewhat inconsistent definitions of energy capture over large amounts of time, but I think that either by refining Morris’ definitions or just by restricting our scrutiny to 100-year intervals, we could avoid most of those criticisms.

[2] The identified states may be worth explaining further:

While ‘time of perils’ seems like an intuitive label for the period that starts when we develop such dangerous technology, I don’t mean to imply that we’re basically safe once we transition to the multiplanetary state, but I do think it reduces risks from some extinction threats and most sub-extinction contractions, which, per this post, may ultimately reduce our extinction risk significantly. The multiplanetary state is distinguished from interstellar/existential security precisely to allow differing opinions on this question.

I treat interstellar state as equivalent to existential security for four reasons:

1. Simplicity.

2. Causally: by the time we have self-sustaining settlements in multiple star systems, most conceivable threats will be incapable of wiping out our entire species, and we’ll have plenty of capacity to repopulate specific systems if they somehow disappear.

3. Evidentially: technological threats that could wipe out an interstellar civilisation - AI, triggered false vacuum decay - will likely have had hundreds or thousands of years to materialise, so likely would have already got us at this stage if they were going to.

4. Pragmatically: we either live in a universe with a non-negligible background threat of events capable of wiping out an interstellar civilisation - eg naturally occurring false vacuum decay - or we don’t. In the latter we have vastly more expected value, so for the purposes of modelling counterfactual expected value considerations we can focus on it more or less exclusively.

For now I’m ignoring the possibility of alien civilisations for similar reasons:

1. I don’t have any a priori reason to think they would have higher or lower value than our own descendants.

2. Given 1, the counterfactual expected value of longtermist decisions is far higher in a universe where aliens won’t substitute for us.

[3] See the discussion in Chapter 6 of What We Owe the Future. Also, see footnote 2.22, in which MacAskill estimates ‘My credence on recovery from [a civilisational] catastrophe, with current natural resources, is 95 percent or more; if we’ve used up the easily accessible fossil fuels, that credence drops to below 90 percent.’

[4] In using a technology lens, I don’t mean to imply that moral, legal or other contractions aren’t possible or aren’t a concern. The discussion around those subjects seems much more sparse than around technological contractions, and the interaction with them complex enough as to be outside the scope of this sequence. One could imagine a similar model replacing nodes like ‘preindustrial’ with, say, moral or legal milestones like ‘international ban on slavery’ - and a model that took into account the question of average wellbeing per person would divide the ‘interstellar’ end node into at least ‘astronomical value’ and ‘astronomical disvalue’. But that’s beyond the scope of this sequence.

[5] It might also suggest that husbanding resources that could be reused across multiple reboots if not squandered could be more important than passing on single-use resources such as coal. For example, the discussion here implies very roughly enough phosphorus for 260-1390 years at current usage and technology - plenty for our current civilisation, but if subsequent civilisations use it at about the same rate that we have and if they stay in the time of perils for about 100-200 years on average, that would give enough for perhaps 2-14 civilisations before it ran out. Future civilisations might be better at conserving it per year, but if their times of perils would be slower-advancing than our current one, their total time of perils era could deplete it as much as or more than our current one. Furthermore, it is not clear that even the second civilisation would be able to bootstrap the technology to exploit the current relatively low concentrations of phosphorus ore.

That said if single-use resources are important enough they might still outweigh this. For example in Out of the Ashes, Dartnell says ‘an industrial revolution without coal would be, at a minimum, very difficult’ - though it’s difficult to know what probability he would assign to it never happening.

[6] More specifically, we’re counting self-sustaining settlements that are isolated enough to have a chance to survive catastrophes that would wipe the others out and persist in an advanced enough technological state to quickly repopulate the others. ‘Planets’ seems like a good approximation of this for the highest-risk era, though later on the line might become blurred by populations living in hollowed out asteroids, O’Neill cylinders, emulating their minds on isolated computers, or isolating themselves in any number of other creative and boundary-blurring ways.

[7] Looking at the distribution of support for different causes across the broader EA community would be a research project in itself, but for example, the most recent Long Term Future Fund grant recommendations I can find show that 26 of the 45 total were given to explicitly AI-oriented projects and 6 of the remaining 19 were for projects that included a heavy AI component.

I’m not saying this is a misallocation - it looks rational either if a) AI is much more tractable than all other longtermist risks, b) friendly AI will be a panacea for all our other problems soon enough to be worth working on for their own sake, c) milestone catastrophe risks from the others are very low, or d) a milestone catastrophe would barely reduce the chance of becoming interstellar. I highlight it not to claim that it is necessarily wrong to make any of those assumptions, but as evidence that the EA movement is de facto doing so.

Charlie_Guthmann @ 2022-10-31T05:01 (+6)

Nice. This post and the previous post did significantly clarify what a post like Luisa's is/should be measuring.

Some very speculative thoughts -

Not sure we need to classify extinction as human descendants dying, or even make extinction a binary. If animals are still alive there is a higher base rate of intelligent life popping up than if not, if mammals are alive then probably higher still, etc. Perhaps the chance of getting to interstellar civilization is just so much lower if humans die that this distinction is just unnecessarily complicated, but I'm not sure and think it's at least worth looking into. I'm sure people who are into the hard steps model have thought about this a bit.

This is sort of an insane thought, but if we figure out the necessary environmental pressures to select for intelligence, we can try to genetically modify the earth's habitats so that if humans go extinct the chance of another intelligent species evolving will be higher.

The way I see the decay model, outside of culture change stuff, is that we need some amount of available resources (energy, fertilizer, etc.) to get to interstellar. As time passes on earth and humans are alive, we will burn through non-renewable resources, thus reducing the potential resources on earth. However, humans can invent renewable resources. Moreover, we can use renewable resources now to create non-renewable resources and put them in the ground. So is decay the fundamental state of things? If we put a bunch of fusion reactors everywhere, couldn't the available energy go up, even if there is less coal/oil? And what if we figure out how to create phosphorus, or a new fertilizer that we can make, or a different farming paradigm? Edit: I just listened to this podcast and Lewis Dartnell seems to think this "green reboot" isn't super possible, but I didn't really follow his logic. He seemed to focus heavily on not being able to re-make photovoltaics and not on the fact that future people could access the existing renewable tech lying around.

This seems to present an opportunity. By leaving anti-decay objects everywhere, but not WMDs, we can speed up recovery without equivalently speeding up military technology. The important thing here is that it disputes your assumption that civilization-harming weaponry is critical on the path to ICs. If we leave sufficient energy production for the next civilization and a blueprint for spaceships, perhaps they can lift off before an inevitable military buildup gets especially perilous.

Arepo @ 2022-10-31T10:49 (+6)

Thanks Charlie... there's a lot to unpack here!

Not sure we need to classify extinction as human descendants dying, or even make extinction a binary.

I think this is worth looking into. My first thoughts, not really meant to establish any proposition:

- You can kind of plug this into the models above, and just treat reference to human-descendants as Earth-originating life. Obviously you'll lose some fidelity, and the time-insensitivity problem would start to look like more of a concern

- In particular, on the timescales on which new intelligent non-mammalian species would emerge (I'm imagining 10-100 million years if most mammals go extinct), we might actually start losing significant expected value from the delay

- The timescale doesn't actually seem enough for fossil fuels to replenish, so if that turns out to be the major problem facing our descendants, a new species probably wouldn't fare any better

- So for it to be a major factor, you'd probably have to believe both that whatever stopped humans from becoming interstellar was a relatively sudden extinction event rather than a drawn out failure to get the requisite technology, and that whatever killed us left a liveable planet behind

we can try to genetically modify the earth's habitats

This feels like it would be a very expensive project and very sensitive to natural selection un-modifying itself unless those traits would have been selected for anyway!

However, humans can invent renewable resources

If I understand you right, you're imagining human resources generally leading to efficiency gains in a virtuous circle? If so, I would say I agree that's plausible from our current state in which we're lucky enough to have plenty of energy spare to devote to such work (though maybe even now it [could be tough](https://forum.effectivealtruism.org/posts/wXzc75txE5hbHqYug/the-great-energy-descent-short-version-an-important-thing-ea)) - but the worse your starting technology, the higher the proportion of your population that needs to work in agriculture and other survival-necessary roles, and so the harder it becomes to do the relevant research.

what if we figure out how to create phosphorus

Phosphorus is an element, so not something we can create or destroy (at least without very advanced technology). The problem is its availability - how much energy it costs to extract in a useable form. At the moment, we're extracting the majority of what we use from high-density rocks, which will run out, and we're flushing most of that into lakes and oceans where, from what I understand (though I've found it hard to find work on this), it's basically impossible to reclaim even with modern technology. So future civilisations will have less energy with which to extract it, and lower energy efficiency of the extraction process.

or a new fertilizer that we can make

What makes phosphorus useful both in growing plants and in us eating those plants is that it's a major component of human chemistry. In this sense, you can't replace it (at least, not without dramatically altering human chemistry). Less phosphorus basically = fewer humans.

If we leave sufficient energy production for the next civilization and a blueprint for spaceships, perhaps they can liftoff before an inevitable military buildup gets especially perilous.

If you mean actually building spare energy technology for them, in a sense that's what all our energy technology is - we put it in the places where it's most useful to current humans, who are living there because they're the most habitable places. But windmills, solar panels, nuclear reactors etc need constant maintenance. Within a couple of generations they'd probably all have stopped functioning at a useful level. So what you need to leave future generations is knowledge of how to rebuild them, which is both trivial in the sense that it's what we're doing by scattering technology around the world for them to reverse engineer and extremely hard in the sense that leaving anything more robust requires a lot of extra work both to engineer it well enough and to protect it from people who might destroy it before understanding (or caring about) its value.

I can think of a few organisations working on this - I think it's a big part of ALLFED's mission, there's the Svalbard Global Seed Vault on a Norwegian island, and in some sense knowledge preservation is the main value of refuges and fallout shelters - but I haven't looked into it closely, and I'd be surprised if there weren't many others (I think it's something Western governments have at least some contingency plans for).

It doesn't seem realistic to let them develop the economy to settle other planets without getting any harmful tech, though. We don't have good enough rocket technology to send much mass to other planets yet, so the future civilisations would have to leapfrog our current capabilities without getting any time to develop eg biotech on the way. And Robert Zubrin, the main voice for Mars colonisation strategies before Elon Musk, thinks it would be almost impossible to set up a permanent Mars base without nuclear technology, so you'd need to develop nuclear energy without getting nuclear weaponry.

Charlie_Guthmann @ 2022-11-17T00:11 (+1)

responding to this super late but two quick things.

But windmills, solar panels, nuclear reactors etc need constant maintenance. Within a couple of generations they'd probably all have stopped functioning at a useful level

Does anyone have incentives to make long-lasting renewable resources? Curious about what would happen if a significant portion of energy tech switched from trying to optimize efficiency to lifespan (my intuition here is that there isn't much incentive to make long-lasting stuff). Seems like this could be low-hanging fruit and change the decay paradigm - of course, possible this has been extensively looked at and I'm out of my depth.

It doesn't seem realistic to let them develop the economy to settle other planets without getting any harmful tech, though. We don't have good enough rocket technology to send much mass to other planets yet, so the future civilisations would have to leapfrog our current capabilities without getting any time to develop eg biotech on the way. And Robert Zubrin, the main voice for Mars colonisation strategies before Elon Musk, thinks it would be almost impossible to set up a permanent Mars base without nuclear technology, so you'd need to develop nuclear energy without getting nuclear weaponry.

I guess I wouldn't see this as a binary. Can we set up a civilization that will get to space before creating nukes, via well-placed knowledge about spaceships that conveniently doesn't include stuff about weapons? Probably not, but it's not a binary. We could in theory push in this direction to reduce the time of perils length or magnitude.

and regarding the phosphorus stuff, I've unfortunately exposed my knowledge of the hard sciences.

Vasco Grilo @ 2022-11-06T09:39 (+3)

Thanks, I think this is a pretty valuable series!

FYI, I have estimated here the probability of extinction for various types of catastrophes.

The inputs are predictions from Metaculus’ Ragnarok Series, guesses from me, and guesses provided by Luisa Rodriguez here.

Jobst Heitzig (vodle.it) @ 2022-11-03T14:09 (+3)

I like this type of model very much! As it happens, a few years ago I had a paper in Sustainability that used an even simpler model in a similar spirit, which I used to discuss and compare the implications of various normative systems.

Abstract: We introduce and analyze a simple formal thought experiment designed to reflect a qualitative decision dilemma humanity might currently face in view of anthropogenic climate change. In this exercise, each generation can choose between two options, either setting humanity on a pathway to certain high wellbeing after one generation of suffering, or leaving the next generation in the same state as the current one with the same options, but facing a continuous risk of permanent collapse. We analyze this abstract setup regarding the question of what the right choice would be both in a rationality-based framework including optimal control, welfare economics, and game theory, and by means of other approaches based on the notions of responsibility, safe operating spaces, and sustainability paradigms. Across these different approaches, we confirm the intuition that a focus on the long-term future makes the first option more attractive while a focus on equality across generations favors the second. Despite this, we generally find a large diversity and disagreement of assessments both between and within these different approaches, suggesting a strong dependence on the choice of the normative framework used. This implies that policy measures selected to achieve targets such as the United Nations Sustainable Development Goals can depend strongly on the normative framework applied and specific care needs to be taken with regard to the choice of such frameworks.

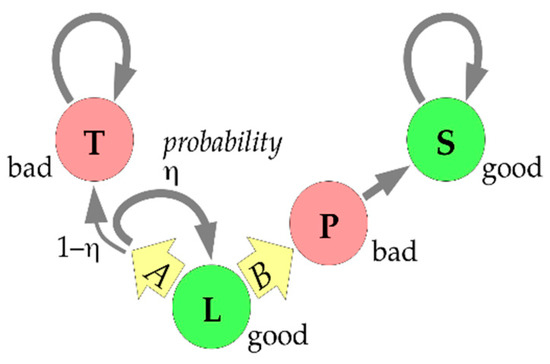

The model is this:

Here L is the current "lake" state, from which action A ("taking action") certainly leads to the absorbing "shelter" state S via the passing "valley of tears" state P, while action B ("business as usual") likely keeps us in L but might also lead to the absorbing "trench" state.
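To make the transition structure concrete, here is a minimal sketch of the four states as a small Markov chain. The per-generation collapse probability `P_COLLAPSE` and the helper names are illustrative assumptions, not values or notation taken from the paper; the sketch only tracks where humanity ends up, not the welfare attached to each state.

```python
# Hypothetical per-generation probability that action B ("business as usual")
# tips the lake state L into the trench T. Illustrative only, not from the paper.
P_COLLAPSE = 0.05


def transition(state, action, p_collapse=P_COLLAPSE):
    """Return {next_state: probability} for one generation."""
    if state == "L":
        if action == "A":
            # "Taking action" certainly leads into the valley of tears P.
            return {"P": 1.0}
        # "Business as usual": probably stay in L, possibly fall into T.
        return {"L": 1.0 - p_collapse, "T": p_collapse}
    if state == "P":
        # The passing state P leads to the shelter S after one generation.
        return {"S": 1.0}
    # S (shelter) and T (trench) are absorbing.
    return {state: 1.0}


def distribution_after(n_generations, action, p_collapse=P_COLLAPSE):
    """State distribution after n generations, starting in L and choosing
    the same action whenever a choice exists (i.e. whenever in L)."""
    dist = {"L": 1.0}
    for _ in range(n_generations):
        nxt = {}
        for state, prob in dist.items():
            for new_state, p in transition(state, action, p_collapse).items():
                nxt[new_state] = nxt.get(new_state, 0.0) + prob * p
        dist = nxt
    return dist


if __name__ == "__main__":
    print(distribution_after(2, "A"))   # all probability mass ends in S
    print(distribution_after(10, "B"))  # mass split between L and T
```

Under the assumed constant collapse probability, always choosing B leaves a chance of 1 - (1 - p)^n of being in the trench after n generations, which tends to 1 as n grows, whereas choosing A once reaches the shelter with certainty after two generations.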

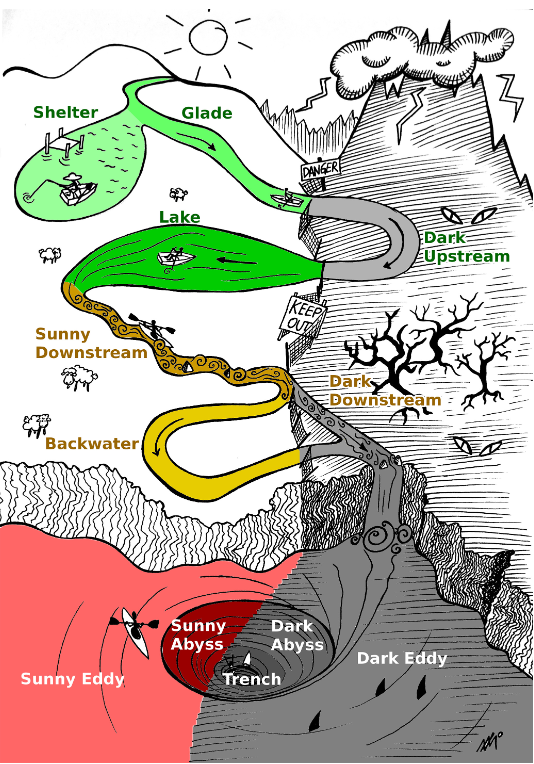

The names "lake", "shelter", "trench" I had introduced earlier in another paper on sustainable management that contained this figure to explain that terminology:

(Humanity sits in a boat and can row against slow currents but not up waterfalls. The dark half is ethically undesirable, the light half desirable. We are likely in a "lake" or "glade" state now, roughly comparable to your "time of perils". Your "interstellar" state would be a "shelter".)