Evaluations from Manifund's EA Community Choice initiative

By Arepo @ 2024-09-16T03:29 (+53)

My partner (who we’ll refer to as ‘they’ for plausible anonymity), and I (‘he’) recently took part in Manifund’s EA Community Choice initiative. Since the money was claimed before they could claim anything, we decided to work together on distributing the $600 I received.

I think this was a great initiative, not only because it gave us a couple of fun date nights, but because it demonstrated a lot of latent wisdom of the crowd sitting largely untapped in the EA community. Many thanks to Anonymous Donor, for both of these outcomes! This post is our effort to pay the kindness (further) forward.

As we went through the projects, we decided to keep notes on most of them and on the landscape overall, to hopefully contribute in our small way to the community’s self-understanding. These notes were necessarily scrappy given the time available, and in some cases blunt, but we hope that even the recipients of criticism will find something useful in what we had to say. In this post we’ve given just notes on the projects we funded, but you can see our comments on the full set of projects (including those we didn’t fund) on this spreadsheet.

Our process:

We had three ‘date nights’, where both of us went through the list of grants independently. For each, we indicated Yes, No, or Maybe, and then spent the second half of our time discussing our notes. Once we’d placed everything into a yes/no category, we each got a vote on whether it was a standout; if one of us marked it that way it would receive a greater amount; if both did we’d give it $100. In this way we had a three-tiered level of support: 'double standout', 'single standout', and 'supported' (or four, if you count the ones we didn’t give money to).

In general we wanted to support a wide set of projects, partly because of the quadratic funding match, but mostly because with $600 between us, the epistemic value of sending an extra signal of support seemed much more important than giving a project an extra $10. Even so, there were a number of projects we would have liked to support and couldn’t without losing the quasi-meaningful amounts we wanted to give to our standout picks.
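To make the quadratic funding point concrete, here is a minimal sketch. It assumes the textbook quadratic funding formula (a project's match weight is the square of the sum of the square roots of its contributions, minus the contributions themselves); the post does not describe Manifund's exact matching rule, and the donor amounts and function names below are hypothetical.

```python
from math import sqrt, isclose


def qf_match_weight(contributions):
    """Textbook quadratic funding weight: (sum of sqrt(c_i))^2 - sum(c_i).

    Assumption: this is the standard formula, not necessarily the exact
    rule Manifund applied. Real rounds usually scale these weights to fit
    a fixed matching pool, so only relative sizes matter here.
    """
    return sum(sqrt(c) for c in contributions) ** 2 - sum(contributions)


def marginal_weight(others, mine_before, mine_after):
    """Change in a project's match weight when one donor raises their gift."""
    before = others + ([mine_before] if mine_before > 0 else [])
    after = others + [mine_after]
    return qf_match_weight(after) - qf_match_weight(before)


# Hypothetical project that already has four other donors at $25 each.
others = [25.0, 25.0, 25.0, 25.0]

# Our first $10 to a project we had not yet supported.
first_ten = marginal_weight(others, 0.0, 10.0)

# An extra $10 on top of a $100 gift we had already made.
extra_ten = marginal_weight(others, 100.0, 110.0)

print(f"first $10 adds {first_ten:.2f} to the match weight")
print(f"extra $10 on top of $100 adds {extra_ten:.2f}")
```

Under these assumptions the first $10 to a new project moves the match weight several times more than a further $10 to a project already given $100, which fits the choice to spread small amounts widely.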

He and they had some general thoughts provoked by this process:

His general observations

- Despite being philosophically aligned with totalising consequentialism (and hence, in theory, longtermism), I found the animal welfare submissions substantially more convincing than the longtermist ones - perhaps this is because I’m comparatively sceptical of AI as a unique x-risk (and almost all longtermist submissions were AI-related); but the longtermist submissions also seemed noticeably less well constructed, with less convincing track records of the teams behind them. I have a couple of hypotheses for this:

- The nature of the work and the culture of longtermist EA attracting people with idealistic conviction but not much practical ability

- The EA funding landscape being much kinder to longtermist work, such that the better longtermist projects tend to have a lot of funding already

- Similarly I’m strongly bought in to the narrative of community-building work (which to me has been unfairly scapegoated for much of what went wrong with FTX), but there wasn’t actually that much of it here. And like AI, it didn’t seem like the proposals had been thought through that well, or backed by a convincing track record (in this case that might be because it’s very hard to get a track record in community building since there’s so little funding for it - though see next two points). Even so, I would have liked to fund more of the community projects - many of them were among the last cuts.

- 'Track record' is really important to me, but doesn’t have to mean ‘impressive CV/elite university’, but rather ‘I’ve put some effort into this project or ones like it for at least a few months’. Bonus points for already having a project or product - though this was in tension with preferring to fund projects with high leverage, that didn’t already have large amounts of funding.

- ‘Track record’ can extend into the future, esp for smaller/less established projects - does the proposer convincingly claim they’ll try and get the project to some kind of minimally useful state even if it takes longer than expected? (not necessary, but a plus)

- Concrete plans were much more important to me than grandiose goals (which is maybe relevant to animal welfare vs AI projects), though they’re not particularly exclusive of each other. Ideally the plans would be laid out in excruciating detail, but given time constraints on both sides, just sounding convincingly like you have such concrete plans was worth a lot (e.g. the 'EA Community Building Initiative in China' proposal gave a convincing reason for reserve; also the ‘Yes on IP28’ submission, despite being incredibly unlikely, was just about concrete + planned enough).

- I care very little about 'theories of change'. You can put together a narrative showing how anything can achieve anything so they just don’t update me much. To me, a concise story with a concrete datum or two is worth a thousand nodes on a flow chart. (I haven’t spoken to anyone else who seems to feel this way, and the community seems to be moving in a theory-of-changeward direction, so I’m curious if anyone reading this feels the same?)

- Having said all that, I realise I care much less about concrete plans the stronger I find the track record - e.g. the EA for Christians and GWWC proposals were very open-ended, but I trust both groups to prioritise their money in a sensible way that might be inconsistent with pledging it to a specific project.

- I also really want people to show that they’re constantly seeking better feedback loops, and a realistic notion of what 'this isn't working, we should stop doing it’ would look like.

- Where are all the global health projects? I would have been really excited to fund some good examples! Ditto non-AI longtermist/technologist stuff other than prediction markets.

- Many regranters (inc me) had projects of their own in the list. Although it turned out that the rules permitted self-donation, I had a very dim view of people who donated a large proportion of their windfall to themselves (in practice as far as I’ve seen so far, most people donated nothing to themselves, and the handful of exceptions donated everything to themselves). IMO the value of an initiative like this lies primarily in the information the donations provide, and self-donating throws all that away in favour of effectively making it a first-come-first-served goldrush.

- Based on this whole exercise, EA is still incredibly funding constrained. While many of these projects are likely to end up having low value, there is evidently a lot of untapped creativity in the community which is getting little to no financial support, not to mention community groups that are struggling post-FTX.

Their general observations

- I was surprised how often we disagreed!

- Somewhat in the opposite direction, I was surprised that a few times Arepo wrote a comment that basically summarized a view that I had before him.

- I also found it off-putting when people had donated to their own projects; however, unlike Arepo, I thought that this was less bad when a) the projects were groups of people, and b) the individuals who donated could say that they’ve personally benefited from the operations of the group, and much worse when c) the project was an individual person, basically giving themselves money, and d) the project didn’t have the EA community as a focus, meaning they couldn’t make the case of being supported by the project.

- This was fun and I want to do it again! I’m the ‘type’ of EA who has a ‘favorite’ global health charity which I intend to give ~all my donations to, but I’m thinking about setting aside some amount for these ‘dates’, to make an event out of donating.

Our final choices (along with our hasty comments):

Double standout

Covid Work By Elizabeth VN/Aceso Under Glass – $100

They: Generally a fan of her work. Her epistemics are refreshingly good - has made my life better with other research, so I'm happy to assume her Covid work was good or compensate her in principle for the other work

He: I think retroactive funding is a really important idea, and what I've seen of Elizabeth's work is extremely good

Kickstarting For Good - High-Impact Nonprofit Incubation Program – $100

He: Seed funding novel ideas seems v counterfactually valuable, and giving it to the projects best-evaled by an experienced team = win

New Roots Institute: Empowering the Next Generation to End Factory Farming – $100

They: Working in education with experience as teacher is a good combination. More positive about this than most advocacy because of history of repeatable, small projects

He: good advocacy? Would have liked more info on methods. But love the track record of high risk but bitesize, measurable-value projects - these made it the standout animal welfare initiative in this list for me

Single standout

EA Community Building Initiative in China – $40

Both(!): Community building in China is incredibly important, and seems to have been stymied by a single speculative, outdated and to our mind mildly insulting post by Ben Todd (implying that China's EAness is a key that can be unlocked with the right language, rather than a conversation to have which might - positively - change the message)

Commissions for a Cause - a Profit for Good project – $50

They: I wish EA would do more in “finance” - I suspect there’s a lot we can do with a community that wants to use significant portions of their funds for good, that the rest of the world can’t. Also, insurance people make a lot of money, and this is a rare project with very clearly well-aligned incentives

He: I liked this more the more I thought about the success of similar targeted-fundraising projects (High Impact Athletes, Founders Pledge). Would have liked a more concrete proposal ('develop marketing materials' feels like the organisational CV equivalent of 'proficient in MS Word') and more on what success/failure would look like, but the idea seems well worth testing, and the founders sound really solid

Calibration City – $40

They: I think this meaningfully provides additional value to the forecasting landscape, and tries to answer an in-retrospect, obvious question - how accurate have all these things been?

He: Product ready = big plus, and doing something distinct (much more a fan of this than making new forecasting alternative tools)

BAIS (ex-AIS Hub Serbia) Office Space for (Frugal) AI Safety Researchers – $40

They: In favour of AI safety in lower income countries both for low cost and for increased diversity of ideas; in favour of funding physical space

He: 'Frugal' in middle income country = high value! Have interacted professionally with Dusan a couple of times, and he seemed very sharp. Would prefer ‘general EA’ to AI safety unless they’re at max utilisation

CEEALAR – $40

He: I was closely involved with CEEALAR for many of the past few years for no pay, because I thought (and still think) its combination of low cost/longer termness/bringing EAs meaningfully together/potential to scale up is basically unrivalled in the EA world.

Supported

Giving What We Can – $10

They: I remember reading that OpenPhil asked GWWC to fundraise about half their budget from elsewhere - I don't know how this arrangement works, but it does make me want to give to them more - it seems like GWWC has a much harder time fundraising from small donors than most orgs because it's hard for them to go around making strong "donate to us" pitches (although I could be very wrong here, and being supported in mind by a lot of people who are willing to donate means it's easy).

He: I suspect they were penalised slightly for requesting general funding (about which it's hard not to be scope sensitive) rather than project-specific funding, and I think if so this is basically unreasonable. Rethink's requesting funding for specific projects says to me 'we basically think these are the least important things we'd spend money on, otherwise we'd spend it on them out of our primary budget' (not relevant to the Rethink subsidiary project, which I assume doesn't get financial support from above). GWWC's request says to me 'whatever we think the optimal marginal value of your dollar is, we'll spend it on that'.

Giving a token amount because they're a comparatively large org, but of all the big orgs in the EA movement they're the one I'm most pleased to be associated with, and who feel like they most represent the spirit of what the movement is supposed to be.

An online platform to solve collective action and coordination problems – $10

They: Idea seems unique, worth trying, skin in the game/track record of builders (title of Manifund post kinda offputting - makes it sound much less concrete than it is)

He: Seems like a long shot but haven't seen it tried seriously, so good value of information. For non-market-based consulting to work well, I think you need a really strong emphasis on feedback loops, which is missing here

Hive Slack - an active community space for engaged farmed animal advocates – $10

He: Heavily discounted = high market value at least, and I generally find it convincing that active Slack communities are valuable

WhiteBox Research’s AI Interpretability Fellowship – $10

They: Many elements of this - from decision to focus on interpretability to demonstrating they've thought about differing activities at different bars of funding - make it look well thought out as a proposal

He: Field of technical AI safety I'm most positive about. SEA = good value for money. Would like more real-world experience from the team, but the project track record looks good. Proposal v well presented, too (I appreciate the credences!)

Yes On IP28 – $10

They: Concrete policy proposal, well-thought out, volunteer supported. Wish there was more detail about funding use but meh

He: Very high variance, unclear track record - no examples of success

VoiceDeck – $10

They: I'm confused by the demo but they *built* the demo, which is cool. Definitely an experiment worth trying and it strikes me as a well-thought out experiment. I can definitely think of people (non-EAs) I would point to this website if they asked where to donate

He: Prototype in place, looking reasonable; concept is interesting, though unsure what they've learned from failure of previous impact certificate systems

Coworking Space for EA/AI Safety Initiatives in Chile – $10

They: Wish they would tell me more about the other initiatives they list other than coworking

He: Would have liked to have a little more estimation of counterfactual (e.g. justification for claim that 'Potential to double or triple our annual output'), but space is cheap, and most of the participants have some kind of track record

EA Christian / CFI Community – $10

They: Don't feel equipped to evaluate them, being non-Christian. I've found many Christian EAs to be a very valuable addition to our community on many counts, but I suspect this is mostly to do with the way the selection effects of the two communities intersect

He: I would like a more concrete proposal, but (as a nonChristian) my impression of EA for Christians is extremely positive. They seem to donate more on average than the rest of us, have a better community, and IMO are generally much closer to the spirit of EA than most core EA orgs.

Using M&E to increase impact in the animal cause area, by The Mission Motor – $10

Both: we really think the value of gathering data in general is high, though would have liked to understand more concrete examples of what and how much data they would gather, how they would do it, and some kind of credences of concrete value.

NickLaing @ 2024-09-16T05:13 (+12)

"I care very little about 'theories of change'. You can put together a narrative showing how anything can achieve anything so they just don’t update me much. To me, a concise story with a concrete datum or two is worth a thousand nodes on a flow chart. (I haven’t spoken to anyone else who seems to feel this way, and the community seems to be moving in a theory-of-changeward direction, so I’m curious if anyone reading this feels the same?"

I completely agree with this - in GHD and social enterprise work a lot of weight seems to be put on theory of change and I don't buy it. It just favors the best storytellers. Of course you HAVE to have a solid understanding of how what you are doing will work, but for me it's a little more of a binary thing, like it has to make sense rather than "oh wow this incredible theory of change convinces me this org is amazing"

Henri Thunberg 🔸 @ 2024-09-18T18:21 (+9)

Thank you for an interesting and useful post, the style of narration made it an enjoyable read.

I wanted to briefly address the below statement:

Rethink's requesting funding for specific projects says to me 'we basically think these are the least important things we'd spend money on, otherwise we'd spend it on them out of our primary budget

As I was part of the group at Rethink Priorities that chose what project to front on Manifund, I can honestly share that this is not how our thought process went. Rather, we focused mostly on ...

I) relatively small asks, that would be a better fit for the crowdfunding format of people giving hundreds of dollars

II) projects we thought were best suited for the "EA Community" framing of the funding round, i.e. meta efforts rather than broader .

Relative to our scale, we also don't have a large "primary budget" that we can use to fund projects we think are impactful. Some of our departments do not have grants for general team work, and have to fundraise for any specific next research project. Even the ones that do have department-level earmarking, are often somewhat restricted by funder(s) in what types of projects they can choose to work on by default. My experience is that it's more likely that a particular project that doesn't get funded gets put on hold in hope of another funder, than that we're able to use general unrestricted funds for it.

With more unrestricted funds available to RP, I think our reality would look at least a bit more like what you described in your comment. I think we have some very astute thinkers internally when it comes to cause prio, so I think that would be a good thing.

Arepo @ 2024-09-19T03:36 (+5)

Thanks Henri, that's useful context. Obviously we had to make pretty quick decisions on low info, and I guess the same was true for many other participants. If anything like this happens in future it might be worth including something like this about the broad counterfactuality of the decision in (or at least linked from) the proposal.

Henri Thunberg 🔸 @ 2024-09-19T06:28 (+3)

That makes sense, and thank you for the suggestion :)

For what it's worth, I was also on a personal level quite excited about the smaller type of projects that could get funding through something like this and otherwise might not make the effort of going through a long application process.

Tom-makes-fibres @ 2024-09-18T14:47 (+5)

Interesting insight on how you reached your decisions.

I think there could be a debate on pre-filtering from Manifund for these kinds of initiatives. I think sometimes less is more - it might even make sense to roll some projects together as they were very similar at first look.

JDBauman @ 2024-09-23T18:21 (+3)

Thank you for the feedback on the EA Christian / Christians for Impact page!

I wasn't expecting folks to comb through the projects like you did. The fact that you did so encourages me and updates me towards being more public and forthcoming about project and funding proposals.

Lucas Kohorst @ 2024-09-23T12:21 (+3)

Hi! Really appreciate you putting this together, was cool to read about your thought process.

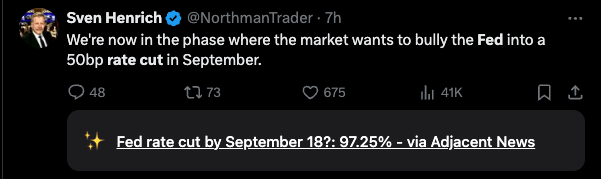

I am building Adjacent News and appreciated your feedback!

"I don't know how valuable this is - it seems like an improvement but I can't stop myself from thinking that people should just install a twitter feed blocker extension, or an extension that redirects them from X to manifold automatically, or something. (I suspect people should read less news, and I was bullish on "getting the news via prediction markets" until I "got the news" about Roe v Wade via a prediction market and wasted a couple hours on something that made me unhappy and I could do nothing about)"

I found this initial comment pretty interesting. We are building the chrome extension to provide additional context to the news you read. It looks like this

It features a pretty subtle market inline with context to what you read. Here is another example

This also works for newspaper sites like NYT, Semafor, etc. (really any site that you might find it useful to add context to).

"Another of our last-minute cuts. Interesting ideas, but I'm unclear from the post how far advanced they are/what the primary product is; though I feel slightly guilty not supporting this, since I can imagine using their API in future"

Thanks! I would say we are decently far along. We currently have:

- Historical Data for active prediction markets on our data platform at data.adj.news

- API at docs.adj.news

- Beta chrome extension that will be on the chrome web store within a few weeks

- RSS feed reader with embedded markets

In early October we will be publishing our own news content when odds significantly move within a 15min period.

Thanks again for the feedback! If you ever would like to chat you can email at lucas@adjacentresearch.xyz or message me on telegram at t.me/lucaskohorst.

Jonas Hallgren @ 2024-09-16T18:19 (+3)

I enjoyed the post and I thought the platform for collective action looked quite cool.

I also want to mention that I think tractability is just generally a really hard thing for longtermism. It's also a newer field and so on expectation I think you should just believe that the projects will look worse than in animal welfare. I don't think there's any need for psychoanalysis of the people in the space even though it has its fair share of wackos.

Arepo @ 2024-09-18T02:57 (+4)

Fwiw it felt like a more concrete difference than that. My overall sense is that the animal welfare projects tended to be backed by multiple people with years of experience doing something relevant, have a concrete near term goal or set of milestones, and a set of well-described steps for moving forwards, while the longtermist/AI stuff tended to lack some or all of that.

Jonas Hallgren @ 2024-09-18T07:28 (+3)

I think that still makes sense under my model of a younger and less tractable field?

Experience comes partly from the field being viable for a longer period of time since there can be a lot more people who have worked in that area in the past.

The well-described steps and concrete near-term goals can be described as a lack of easy tractability?

I'm not saying that it isn't the case that the proposals in longtermism are worse today but rather that it will probably look different in 10 years? A question that pops up for me is about how great the proposals and applications were in the beginning of animal welfare as a field. I'm sure it was worse in terms of legibility of the people involved and the clarity of the plans. (If anyone has any light to shed on this, that would be great!)

Maybe there's some sort of effect where the more money and talent a field gets the better the applications get. To get there you first have to have people spend on more exploratory causes though? I feel like there should be anecdata from grantmakers on this.

Arepo @ 2024-09-18T12:58 (+2)

That might be part of the effect, but I would think it would apply more strongly to EA community building than AI (which has been around for several decades with vastly more money flowing into it) - and the community projects were maybe slightly better overall? At least not substantially worse.

I don't really buy that concrete steps are hard to come up with for good AI or even general longtermism projects - one could e.g. aim to show or disprove some proposition in a research program, aim to reach some number of views, aim to produce x media every y days (which IIRC one project did), or write x-thousand words or interview x industry experts, or use some tool for some effect, or any one of countless ways of just breaking down what your physical interactions will be with the world between now and your envisioned success.

SummaryBot @ 2024-09-16T16:52 (+1)

Executive summary: The authors participated in Manifund's EA Community Choice initiative, distributing $600 across various projects, and share their evaluation process, funding decisions, and observations about the EA funding landscape.

Key points:

- Authors evaluated projects across three tiers of support, prioritizing wide distribution of funds for signaling value.

- Animal welfare and concrete, well-planned projects were viewed more favorably than longtermist or AI-related proposals.

- Track record and demonstrated effort were highly valued, even for smaller or less established projects.

- Authors noted a lack of global health projects and non-AI longtermist work in the submissions.

- Self-donation was viewed negatively, as it reduces the initiative's informational value.

- Observations suggest EA remains funding constrained, with untapped creativity in the community.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.