Should there be just one western AGI project?

By rosehadshar, Tom_Davidson @ 2024-12-04T14:41 (+49)

This is a linkpost to https://www.forethought.org/research/should-there-be-just-one-western-agi-project

Tom Davidson did the original thinking; Rose Hadshar helped with later thinking, structure and writing.

Some plans for AI governance involve centralising western AGI development.[1] Would this actually be a good idea? We don’t think this question has been analysed in enough detail, given how important it is. In this post, we’re going to:

- Explore the strategic implications of having one project instead of several

- Discuss what we think the best path forwards is, given that strategic landscape

(If at this point you’re thinking ‘this is all irrelevant, because centralisation is inevitable’, we disagree! We suggest you read the appendix, and then consider if you want to read the rest of the post.)

On the second point, we’re going to present:

- Our overall take: It’s very unclear whether centralising would be good or bad.

- Our current best guess: Centralisation is probably net bad, because of risks from power concentration (but this is very uncertain).

Overall, we think the best path forward is to increase the chances we get to good versions of either a single or multiple projects, rather than to increase the chances we get a centralised project (which could be good or bad). We’re excited about work on:

- Interventions which are robustly good whether there are one or multiple AGI projects.

- Governance structures which avoid the biggest downsides of single and/or multiple project scenarios.

What are the strategic implications of having one instead of several projects?

What should we expect to vary with the number of western AGI development projects?

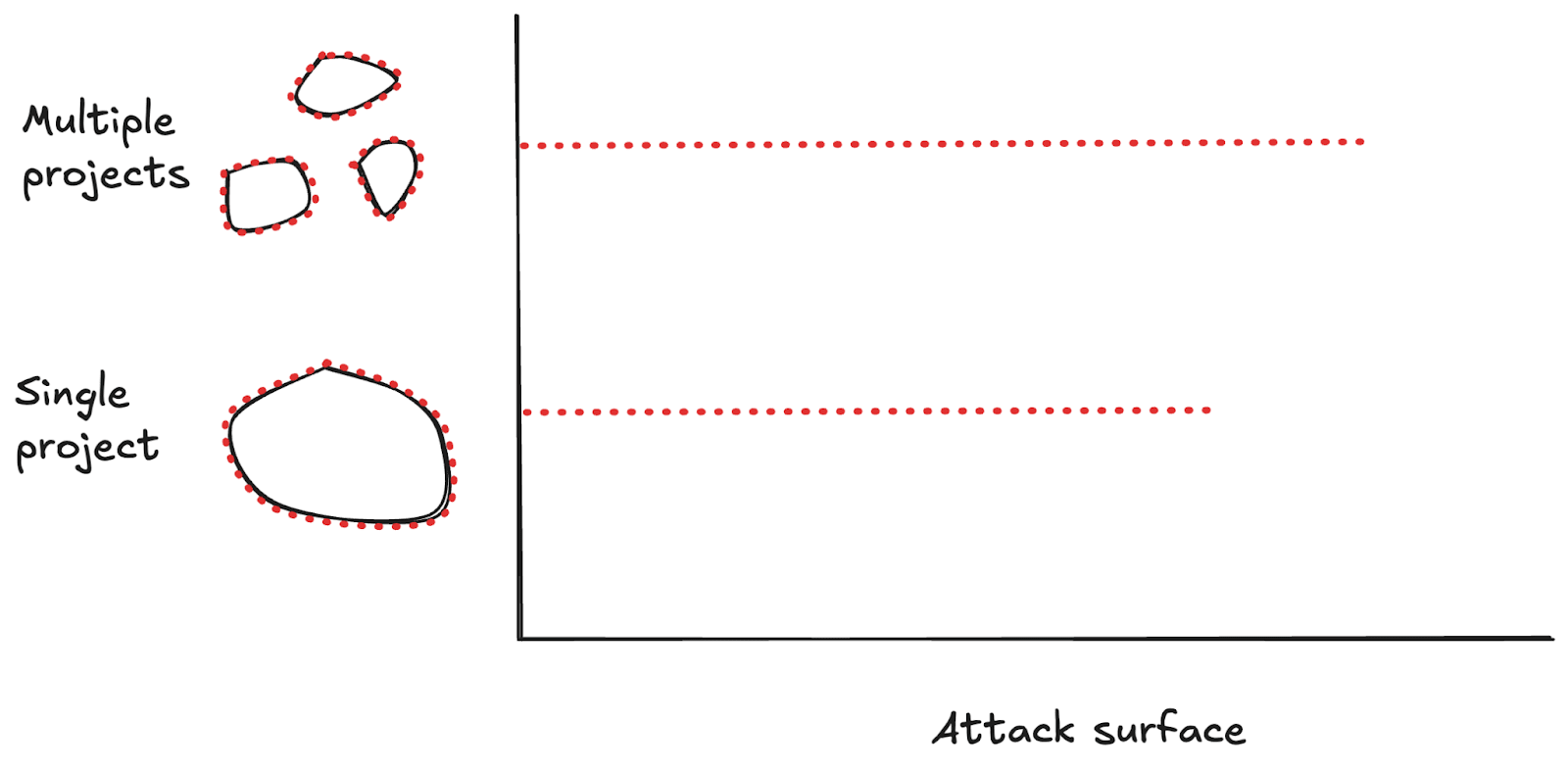

At a very abstract level, if we start out with some blobs, and then mush them into one blob, there are a few obvious things that change:

- There are fewer blobs in total. So interaction dynamics will change. In the AGI case, the important interaction dynamics to consider are race dynamics and power concentration.

- There’s less surface area. So it might be easier to protect our big blob. In the AGI case, we can think about this in terms of infosecurity.

Summary table

| Variable | Implications of one project | Uncertainties[2] |
|---|---|---|
| Race dynamics | Less racing between western projects: no competing projects. Unclear implications for racing with China: the US might speed up or slow down; China might speed up too. | Do ‘races to the top’ on safety outweigh races to the bottom? How effectively can government regulation reduce racing between multiple western projects? Will the speedup from compute amalgamation outweigh other slowdowns for the US? How much will China speed up in response to US centralisation? How much stronger will infosecurity be for a centralised project? |
| Power concentration | Greater concentration of power: no other western AGI projects; less access to advanced AI for the rest of the world; greater integration with USG. | How effectively can a single project make use of market mechanisms? Of checks and balances? How much will power concentrate anyway with multiple projects? |
| Infosecurity | Unclear implications for infosecurity: more resources, but USG provision or R&D breakthroughs could mitigate this for multiple projects; might provoke larger, earlier attacks. | How much bigger will a single project be? How strong can infosecurity be for multiple projects? Will a single project provoke more serious attacks? |

Race dynamics

One thing that changes if western AGI development gets centralised is that there are fewer competing AGI projects.

When there are multiple AGI projects, there are incentives to move fast to develop capabilities before your competitors do. These incentives could be strong enough to cause projects to neglect other features we care about, like safety.

What would happen to these race dynamics if the number of western AGI projects were reduced to one?

Racing between western projects

At first blush, it seems like there would be much less incentive to race between western projects if there were only one project, as there would be no competition to race against.

This effect might not be as big as it initially seems though:

- Racing between teams. There could still be some racing between teams within a single project.

- Regulation to reduce racing. Government regulation could temper racing between multiple western projects. So there are ways to reduce racing between western projects, besides centralising.

Also, competition can incentivise races to the top as well as races to the bottom. Competition could create incentives to:

- Scrutinise competitors’ systems.

- Publish technical AI safety work, to look more responsible than competitors.

- Develop safer systems, to the extent that consumers desire this and can tell the difference.

It’s not clear how races to the top and races to the bottom will net out for AGI, but the possibility of races to the top is a reason to think that racing between multiple western AGI projects wouldn’t be as negative as you’d otherwise think.

Having one project would mean less racing between western projects, but maybe not a lot less (as the counterfactual might be well-regulated projects with races to the top on safety).

Racing between the US and China

How would racing between the US and China change if the US only had one AGI project?

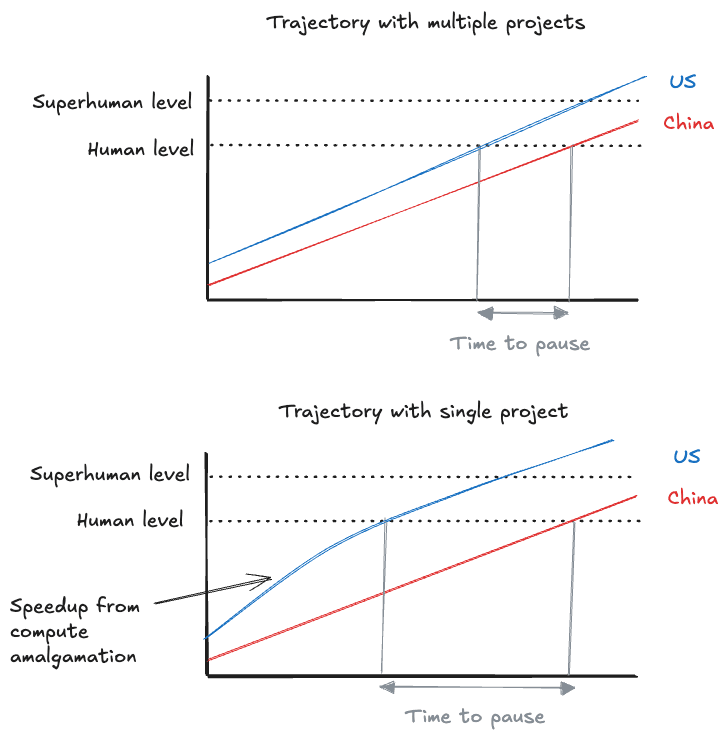

The main lever that could change the amount of racing is the size of the lead between the US and China: the bigger the US’s lead, the less incentive there is for the US to race (and the smaller the lead, the more there’s an incentive).[3]

Somewhat paradoxically, this means that speeding up US AGI development could reduce racing, as the US has a larger lead and so can afford to go more slowly later.

Speeding up US AGI development gives the US a bigger lead, which means they have more time to pause later and can afford to race less.

At first blush, it seems like centralising US AGI development would reduce racing with China, because amalgamating all western compute would speed up AGI development.

However, there are other effects which could counteract this, and it’s not obvious how they net out:

- China might speed up too. Centralising western AGI development might prompt China to do the same. So the lead might remain the same (or even get smaller, if you expect China to be faster and more efficient at centralising AGI development).

- The US might slow down for other reasons. It’s not clear how the speedup from compute amalgamation nets out with other factors which might slow the US down:

- Bureaucracy. A centralised project would probably be more bureaucratic.

- Reduced innovation. Reducing the number of projects could reduce innovation.

- Chinese attempts to slow down US AGI development. Centralising US AGI development might provoke Chinese attempts to slow the US down (for example, by blockading Taiwan).

- Centralising might make the US less likely to pause at the crucial time. If part of the reason for centralising is to develop AGI before China, it might become politically harder for the US to slow down at the crucial time, even if its lead is bigger than it would otherwise be (because there’s a stronger narrative about racing to beat China).

- Infosecurity. Centralising western AGI development would probably make it harder for China to steal model weights, but it might also prompt China to try harder to do so. (We discuss infosecurity in more detail below.)

So it’s not clear whether having one project would increase or decrease racing between the US and China.

Why do race dynamics matter?

Racing could make it harder for AGI projects to:

- Invest in AI safety in general

- Slow down or pause at the crucial time between human-level and superintelligent AI, when AI first poses an x-risk and when AI safety and governance work is particularly valuable

This would increase AI takeover risk, risks from proliferation, and the risk of coups (as mitigating all of these risks takes time and investment).

It might also matter who wins the race, for instance if you think that some projects are more likely than others to:

- Invest in safety (reducing AI takeover risk)

- Invest in infosecurity (reducing risks from proliferation)

- Avoid robust totalitarianism

- Lead to really good futures

Many people think that this means it’s important for the US to develop AGI before China. (This is about who wins the race, not strictly about how much racing there is. But these things are related: the more likely the US is to win a race, the less intensely the US needs to race.[4])

Power concentration

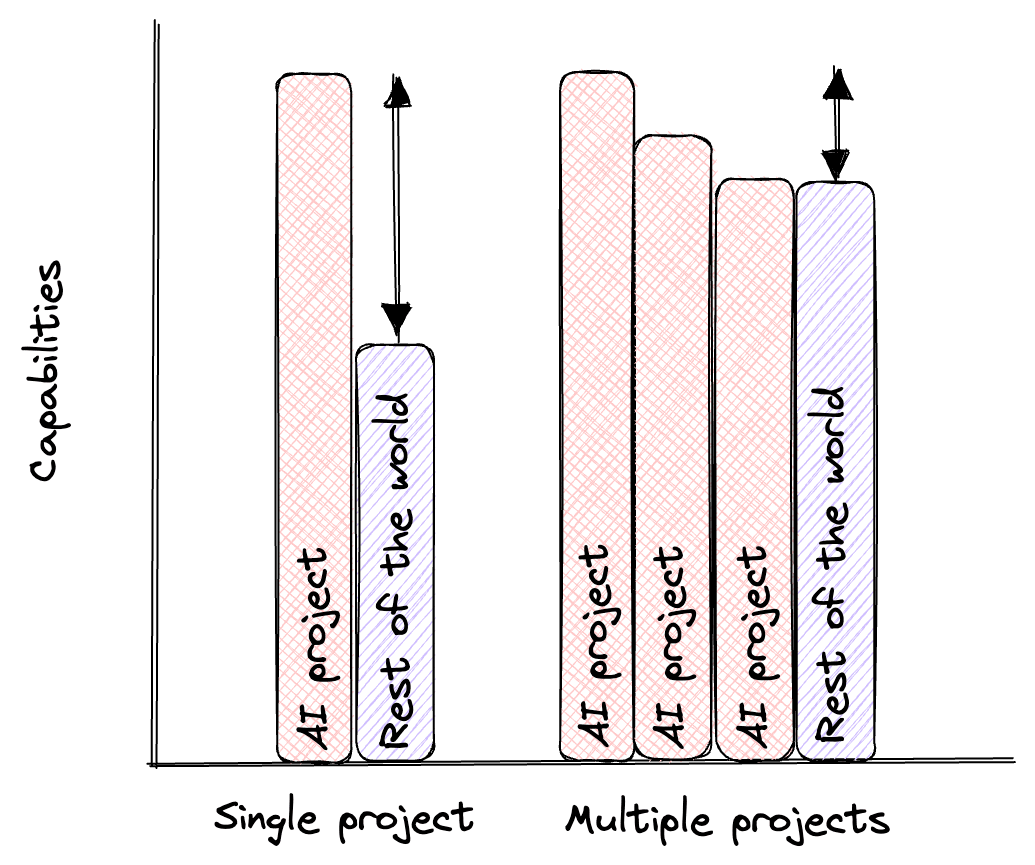

If western AGI development gets centralised, power would concentrate: the single project would have a lot more power than any individual project in a multiple project scenario.

There are a few different mechanisms by which centralising would concentrate power:

- Removing competing AGI projects. This has two different effects:

- The single project amasses more resources.

- Some of the constraints on the single project are removed:

- There are fewer actors with the technical expertise and incentives to expose malpractice.

- It removes incentives to compete on safety and on guarantees that AI systems are aligned with the interests of broader society (rather than biased towards promoting the interests of their developers).

- Reducing access to advanced AI services. Competition significantly helps people get access to advanced tech: it incentivises selling better products sooner to more people for less money. A single project wouldn’t naturally have this (very strong) incentive. So we should expect less access to advanced AI services than if there are multiple projects.

- Reducing access to these services will significantly disempower the rest of the world: we’re not talking about whether people will have access to the best chatbots or not, but whether they’ll have access to extremely powerful future capabilities which enable them to shape and improve their lives on a scale that humans haven’t previously been able to.

If multiple projects compete to sell AI services to the rest of the world, the rest of the world will be more empowered.

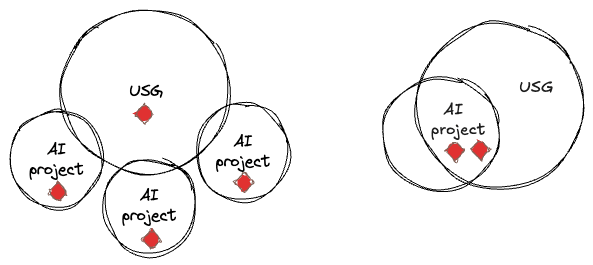

- Increasing integration with USG. We expect that AGI projects will work closely with the USG, however many projects there are. But there’s a finite amount of USG bandwidth: there’s only one President, for example. So the fewer projects there are, the more integration we should expect. This further concentrates power:

- The AGI project gets access to more non-AI resources.

- The USG and the AGI project become less independent.

With multiple projects there would be more independent centres of power (red diamonds).

How much more concentrated would power be if western AGI development were centralised?

Partly, this depends on how concentrated power would become in a multiple project scenario: if power would concentrate significantly anyway, then the additional concentration from centralisation would be less significant. (This is related to how inevitable a single project is; see the appendix.)

And partly this depends on how easy it is to reduce power concentration by designing a single project well.[5] A single project could be designed with:

- Market mechanisms, which would increase access to advanced AI services.

- For example, many companies could be allowed to fine-tune the project’s models, and compete to sell API access.

- Checks and balances to

- Limit integration with the USG.

- Increase broad transparency into its AI capabilities and risk analyses.

- Limit the formal rights and authorities of the project.

- For example, restricting the project’s rights to make money, or to take actions like paying for ads, investing in media companies, or political lobbying.

But these mechanisms would be less robust than having multiple projects at reducing power concentration: any market mechanisms and checks and balances would be a matter of policy, not competitive survival, so they would be easier to go back on.

Having one project might massively increase power concentration, but also might just increase it a bit (if it’s possible to have a well-designed centralised project with market mechanisms and checks and balances).

Why does power concentration matter?

Power concentration could:

- Reduce pluralism. Power concentration means that fewer actors are empowered (with AI capabilities and resources). The many would have less influence and less chance to flourish. This is unfair, and it probably makes it less likely that humanity reflects collectively and explores many different kinds of future.

- Increase coup risk and the chance of a permanent dictatorship. At an extreme, power concentration could allow a small group to seize permanent control.

- Power concentration increases the risk of a coup in the US, because:

- It’s easier for a single project to retain privileged access to the most advanced systems.

- There are no competing western projects with similar capabilities.

- There’s no incentive for the project to sell its most advanced systems to keep up with the competition.

- It’s probably easier for a single project to install secret loyalties undetected,[6] as there are fewer independent actors with the technical expertise to expose it.[7]

- There are fewer centres of power and a single project would be more closely integrated with the USG.

- If growth is sufficiently explosive, then a coup of the USG could lead to:

- Permanent dictatorship.

- Taking over the world.

Infosecurity

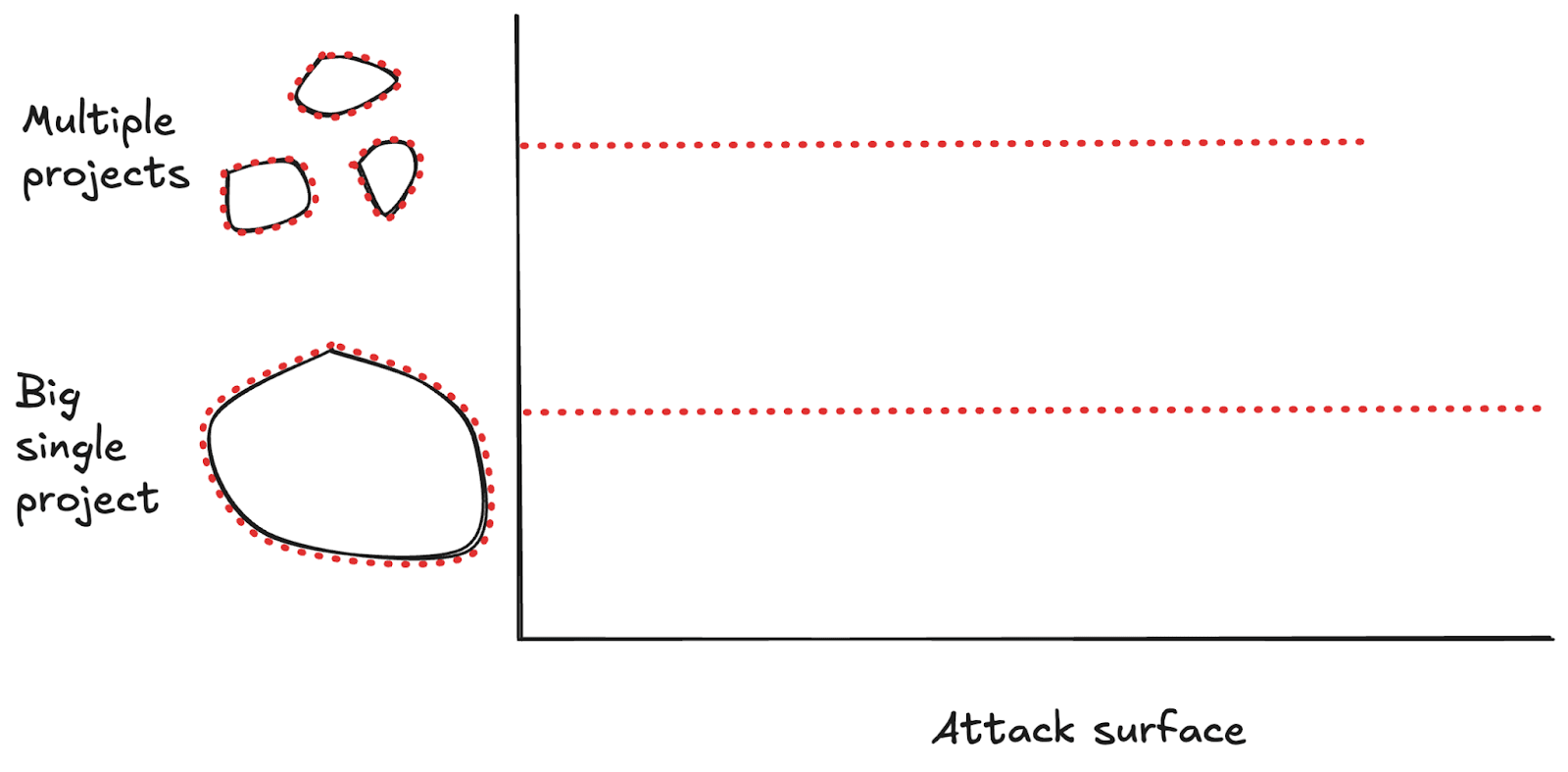

Another thing that changes if western AGI development gets centralised is that there’s less attack surface:

- There are fewer security systems which could be compromised or fail.

- There might be fewer individual AI models to secure.

Some attack surface scales with the number of projects.

At the same time, a single project would probably have more resources to devote to infosecurity:

- Government resources. A single project is likely to be closely integrated with USG, and so to have access to the highest levels of government infosecurity.

- Total resources. A single project would have more total resources to spend on infosecurity than any individual project in a multiple project scenario, as it would be bigger.

So all else equal, it seems that centralising western AGI development would lead to stronger infosecurity.

But all else might not be equal:

- A single project might motivate more serious attacks, which are harder to defend against.

- It might also motivate earlier attacks, such that the single project would have less total time to get security measures into place.

- There are ways to increase the infosecurity of multiple projects:

- The USG might provide or mandate strong infosecurity for multiple projects.

- The USG might be motivated to provide this, to the extent that it wants to prevent China stealing model weights.

- If the USG set very high infosecurity standards, and put the burden on private companies to meet them, companies might be motivated to do so given the massive economic incentives.[8]

- R&D breakthroughs might lower the costs of strong infosecurity, making it easier for multiple projects to access.

- A single project could have more attack surface, if it’s sufficiently big. Some attack surface scales with the number of projects (like the number of security systems), but other kinds of attack surface scale with total size (like the number of people or buildings). If a single project were sufficiently bigger than the sum of the counterfactual multiple projects, it could have more attack surface and so be less infosecure.

If a single project is big enough, it would have more attack surface than multiple projects (as some attack surface scales with total size).
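A toy linear model makes the condition in the last bullet explicit. This is our own simplifying assumption for illustration, not something the argument above depends on: suppose each project contributes a fixed attack surface $a$ (its own security systems and model copies), and each unit of size (staff, buildings) contributes $b$. Compare $n$ projects with combined size $N_{\text{multi}}$ against one project of size $N_{\text{single}}$ (all symbols here are hypothetical):

```latex
\begin{aligned}
S_{\text{multi}}  &= a\,n + b\,N_{\text{multi}}, \\
S_{\text{single}} &= a + b\,N_{\text{single}}, \\
S_{\text{single}} > S_{\text{multi}}
  &\iff b\,(N_{\text{single}} - N_{\text{multi}}) > a\,(n - 1).
\end{aligned}
```

Under this assumption, a single project only has more attack surface if it is bigger than the combined multiple projects by enough to outweigh the $n - 1$ sets of security systems it no longer needs; the values of $a$ and $b$ are illustrative, not estimates.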

It’s not clear whether having one project would reduce the chance that the weights are stolen. We think that it would be harder to steal the weights of a single project, but the motivation to do so would also be stronger, and it’s not clear how these balance out.

Why does infosecurity matter?

The stronger infosecurity is, the harder it is for:

- Any actor to steal model weights.

- This reduces risks from proliferation: it’s harder for bad actors to get access to technologies that enable global catastrophic risks (GCRs). The more AI enables strongly offence-dominant technologies, the more important this point is.

- China in particular to steal model weights.

- This effectively increases the size of the US lead over China (as you don’t need to discount the actual lead by as much to account for the possibility of China stealing the weights), which:

- Probably reduces racing (which reduces AI takeover risk),[9] and

- Increases the chance that the US develops AGI before China.

If we’re right that centralising western AGI development would make it harder to steal the weights, but also increase the motivation to do so, then the effect of centralising might be more important for reducing proliferation risk than for preventing China stealing the weights:

- If it’s harder to steal the weights, fewer actors will be able to do so.

- China is one of the most resourced and competent actors, and would have even stronger motivation to steal the weights than other actors (because of race dynamics).

- So it’s more likely that centralising reduces proliferation risk, and less likely that it reduces the chance of China stealing the weights.
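One way to make “discounting the lead” concrete, under the simplifying assumption (ours, not a claim from the analysis above) that a successful theft erases the US lead entirely: if the actual lead is $L$ and the probability that China steals the weights is $p$, then the expected effective lead is

```latex
\mathbb{E}[\text{effective lead}] = (1 - p)\,L + p \cdot 0 = (1 - p)\,L
```

Centralising could change both terms: it might raise or lower $L$ (depending on how China responds), and it makes theft harder but also more strongly motivated, so it may not lower $p$ much for China specifically, even if it lowers $p$ for less capable actors.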

What is the best path forwards, given that strategic landscape?

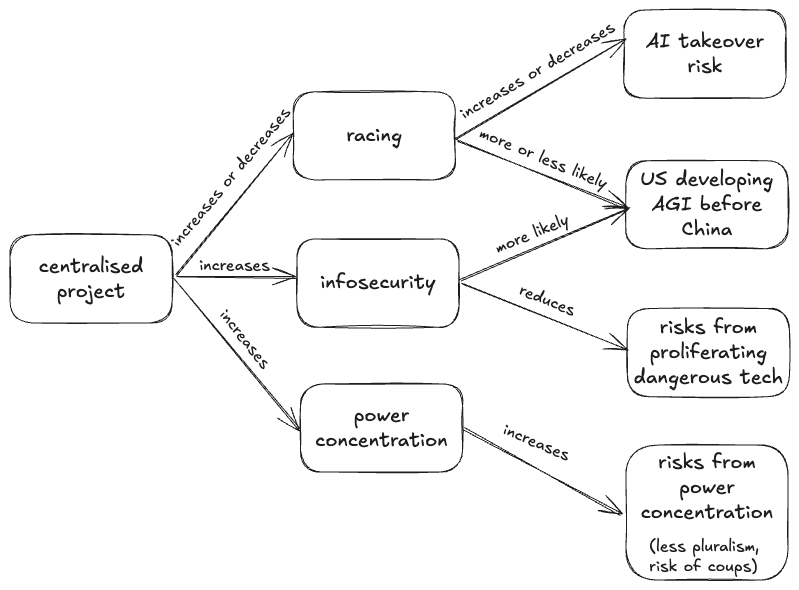

We’ve just considered a lot of different implications of having a single project instead of several. Summing up, we think that:

- It’s not clear what the sign of the effect of having a single project would be, either on racing between the US and China or on infosecurity.

- Having a single project would probably lead to less racing between western companies and greater power concentration (but it’s not clear what the effect size here would be).

So, given this strategic landscape, what’s the best path forwards?

- Our overall take: It’s very unclear whether centralising would be good or bad.

- Our current best guess: Centralisation is probably net bad, because of risks from power concentration (but this is very uncertain).

Our overall take

It’s very unclear whether centralising would be good or bad.

It seems to us that whether or not western AGI development is centralised could have large strategic implications. But it’s very hard to be confident in what the implications will be. Centralising western AGI development could:

- Increase or decrease AI takeover risk, depending on whether it exacerbates racing with China or not and on how bad (or good) racing between multiple western projects would be.

- Make it more or less likely that the US develops AGI before China, depending on whether it slows the US down, how much it causes China to speed up, and how much stronger the infosecurity of a centralised project would be.

- Reduce risks from proliferating dangerous technologies by a little or a lot, depending on the attack surface of the single project, whether attacks get more serious following centralisation, and how strong the infosecurity of multiple projects would be.

- Increase risks from power concentration by a little or a lot, depending on how well-designed the centralised project would be.

It’s also unclear what the relative magnitudes of the risks are in the first place. Should we prefer a world where the US is more likely to beat China but also more likely to slide into dictatorship, or a world where it’s less likely to beat China but also less likely to become a dictatorship? If centralising increases AI takeover risk but only by a small amount, and greatly increases risks from power concentration, what should we do? The trade-offs here are really hard.

We have our own tentative opinions on this stuff (below), but our strongest take here is that it’s very unclear whether centralising would be good or bad. If you are very confident that centralising would be good — you shouldn’t be.

Our current best guess

We think that the overall effect of centralising AGI development is very uncertain, but it still seems useful to put forward concrete best guesses on the object-level, so that others can disagree and we can make progress on figuring out the answer.

Our current best guess is that centralisation is probably net bad because of risks from power concentration.

Why we think this:

- Centralising western AGI development would be an extreme concentration of power.

- There would be no competing western AGI projects, the rest of the world would have worse access to advanced AI services, and the AGI project would be more integrated with the USG.

- A single project could be designed with market mechanisms and checks and balances, but we haven’t seen plans which seem nearly robust enough to mitigate these challenges. What concretely would stop a centralised project from ignoring the checks and balances once it has huge amounts of power?

- Power concentration is a major way we could lose out on a lot of the value of the future.

- It makes it less likely that we end up with good reflective processes leading to a pluralist future.

- It increases the risk of a coup leading to permanent dictatorship.

- The benefits of centralising probably don’t outweigh these costs.

- Centralising wouldn’t significantly reduce AI takeover risk.

- Racing between the US and China would probably intensify, as centralising would prompt China to speed up AGI development and to try harder to steal model weights.

- The counterfactual wouldn’t be that bad:

- It seems pretty feasible to regulate the bad effects of racing between western AGI projects.

- Races to the top will counteract races to the bottom to some extent.

- Centralising wouldn’t significantly increase the chances that the US develops AGI before China (as it would prompt China to speed up AGI development and to try harder to steal model weights).

- Multiple western AGI projects could have good enough infosecurity to prevent proliferation or China stealing the weights.

- The USG will be able and willing to either provide or mandate strong infosecurity for multiple projects.

But because there’s so much uncertainty, we could easily be wrong. These are the main ways we are tracking that our best guess could be wrong:

- Infosecurity. It might be possible to have good enough infosecurity with a centralised project, but not with multiple projects.

- We don’t see why the USG can’t secure multiple projects in principle, but we’re not experts here.

- Regulating western racing. Absent a centralised project, regulation may be very unlikely to reduce problematic western racing.

- Good regulation is hard, especially for an embryonic field like catastrophic risks from AI.

- US-China racing. Maybe China wouldn’t speed up as much as the US would, such that centralising would increase the US lead, reduce racing, and reduce AI takeover risk.

- This could be the case if China just doesn’t have good enough compute access to compete, or if the US centralisation process is surprisingly efficient.

- Designing a single project. There might be robust mechanisms for a single project to sell advanced AI services and manage coup risk.

- We haven’t seen anything very convincing here, but we’d be excited to see more work on this.

- Trade-offs between risks. The probability-weighted impacts of AI takeover or the proliferation of world-ending technologies might be high enough to dominate the probability-weighted impacts of power concentration.

- We currently doubt this, but we haven’t modelled it out, and we have lower p(doom) from misalignment than many (<10%).

- Accessible versions. A really good version of a centralised project might become accessible, or the accessible versions of multiple projects might be pretty bad.

- This seems plausible. Unfortunately, good versions of both scenarios are probably correlated, as they both significantly depend on the USG doing a good job (of managing a single project, or of regulating multiple projects).

- Inevitability. Centralisation might be more inevitable than we thought, such that this argument is moot (see appendix).

- Inevitability is a really strong claim, and we are currently not convinced. But this might become clearer over time, and we’re not very close to the USG.

Overall conclusion

Overall, we think the best path forward is to increase the chances we get to good versions of either a single or multiple projects, rather than to increase the chances we get a centralised project (which could be good or bad).

The variation between good and bad versions of these projects seems much more significant than the variation from whether or not projects are centralised.

A centralised project could be:

- A monopoly which gives unchecked power to a small group of people who are also very influential in the USG.

- A well-designed and accountable project with market mechanisms and thorough checks and balances on power.

- Anything in between.

A multiple project scenario could be:

- Subject to poorly-targeted regulation which wastes time and safety resources, without preventing dangerous outcomes.

- Subject to well-targeted regulation which reliably prevents dangerous outcomes and supports infosecurity.

It’s hard to tell whether a centralised project is better or worse than multiple projects as an overall category; it’s easy to tell within categories which scenarios we’d prefer.

We’re excited about work on:

- Interventions which are robustly good whether there are one or multiple AGI projects. For example:

- Robust processes to prevent AIs from having secret loyalties.

- R&D into improved infosecurity.

- Governance structures which avoid the biggest downsides of single and/or multiple project scenarios. For example:

- A centralised project could be carefully designed to minimise its power, e.g. by only allowing it to do pre-training and safety testing, and requiring it to share access to near-SOTA models with multiple private companies who can fine-tune and sell access more broadly.

- Multiple projects could be carefully governed to prevent racing to the bottom on safety, e.g. by requiring approval of a centralised body to train significantly more capable AI.

For extremely helpful comments on earlier drafts, thanks to Adam Bales, Catherine Brewer, Owen Cotton-Barratt, Max Dalton, Lukas Finnveden, Ryan Greenblatt, Will MacAskill, Matthew van der Merwe, Toby Ord, Carl Shulman, Lizka Vaintrob, and others.

Appendix: Why we don’t think centralisation is inevitable

A common argument for pushing to centralise western AGI development is that centralisation is basically inevitable, and that conditional on centralisation happening at some point, it’s better to push towards good versions of a single project sooner rather than later.

We agree with the conditional, but don’t think that centralisation is inevitable.

The main arguments we’ve heard for centralisation being inevitable are:

- Economies of scale. Gains from scale might cause AGI development to centralise eventually (e.g. if training runs become too expensive/compute-intensive/energy-intensive to do otherwise).

- Inevitability of a decisive strategic advantage (DSA).[10] The leading project might get a DSA at some point due to recursive self-improvement after automating AI R&D.

- Government involvement. The national security implications of AGI might cause the USG to centralise AGI development eventually.

These arguments don’t convince us:

- Economies of scale point to fewer but not necessarily one project. There will be pressure towards fewer projects as training runs become more expensive/compute-intensive/energy-intensive. But it’s not obvious that this will push all the way to a single project:

- Ratio of revenues to costs. If revenues from AGI are more than double the costs of training AGI, then there are incentives for more projects to enter (because even if the revenues are split in half, each half still covers one project’s training costs).

- Market inefficiencies. It could still be most efficient to have a single project even if revenues are double the costs (because less money is spent for the same returns) — but the free market isn’t always maximally efficient.

- Benefits of innovation. Alternatively, it could be more efficient to have multiple projects, because competition increases innovation by enough to outweigh the additional costs.

- Antitrust. By default, there’s strong legal pressure against monopolies.

- There are ways of preventing a decisive strategic advantage (DSA). There is a risk that recursive self-improvement after automating AI R&D could enable the leading AGI project to get a decisive strategic advantage, which would effectively centralise all power in that project. We’re very concerned by this risk, but think that there are many ways to prevent the leading project getting a DSA.

- For example:

- External oversight. Have significant external oversight into how the project trains and/or deploys AI to prevent the project from seeking influence in illegitimate ways.

- Cheap API access. Require the leader to provide cheap API access to near-SOTA models (to prevent them hoarding their capabilities or charging high monopoly prices on their unrivalled AI services).

- Weight-sharing. Require them to sell the weights of models trained with 10X less effective FLOP than their best model to other AGI projects, limiting how large a lead they can build up over competitors.

- We think countermeasures like these could bring the risk of a DSA down to very low levels, if implemented well. Even so, the leading AGI project would still be hugely powerful.

- Government involvement =/= centralisation. The government will very likely want to be heavily involved in AGI development for natsec reasons, but there are other ways of doing this besides a single project, like defence contracting and public-private partnerships.
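The ratio-of-revenues-to-costs argument above can be written as a simple entry condition. This is a sketch under our own simplifying assumptions: total AGI revenue $R$ is split evenly across $n$ projects, and each project bears the same training cost $C$ (both symbols are hypothetical):

```latex
\begin{aligned}
\text{each of } n \text{ projects breaks even}
  &\iff \frac{R}{n} \ge C \iff n \le \frac{R}{C}, \\
n^{*} &= \left\lfloor \frac{R}{C} \right\rfloor,
  \qquad R > 2C \implies n^{*} \ge 2.
\end{aligned}
```

On this toy model, economies of scale only force a single project when revenue is less than twice the cost of training; in practice revenue need not split evenly, and competition may itself change both $R$ and $C$.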

So, while we still think that centralisation is plausible, we don’t think that it’s inevitable.

[1]

Centralising: either merging all existing AGI development projects, or shutting down all but the leading project. Either of these would require substantial US government (USG) involvement, and could involve the USG effectively nationalising the project (though there’s a spectrum here, and the lower end seems particularly likely).

Western: we’re mostly equating western with US. This is because we’re assuming that:

- Google DeepMind is effectively a US company because most of its data centres are in the US.

- Timelines are short enough that there are no plausible AGI developers outside the US and China.

We don’t think that these assumptions change our conclusions much. If western AGI projects were spread out beyond the US, then this would raise the benefits of centralising (as it’s harder to regulate racing across international borders), but also increase the harms (as centralising would be a larger concentration of power relative to the counterfactual) and make centralisation less likely to happen.

[2]

An uncertainty which cuts across all of these variables is what version of a centralised project/multiple project scenario we would get.

- ^

This is more likely to be true to the extent that:

1. There are winner-takes-all dynamics.

2. The actors are fully rational.

3. The perceived lead matches the actual lead.

It seems plausible that 2 and 3 just add noise, rather than systematically pushing towards more or less racing.

- ^

Even if you don’t care who wins, you might prefer to increase the US lead to reduce racing. Though as we saw above, it’s not clear that centralising western AGI development actually would increase the US lead.

- ^

There are also scenarios where having a single project reduces power concentration even without being well-designed: if failing to centralise would mean that US AGI development was so far ahead of China that the US was able to dominate, but centralising would slow the US down enough that China would also have a lot of power, then having a single project would reduce power concentration by default.

There are a lot of conditionals here, so we’re not currently putting much weight on this possibility. But we’re noting it for completeness, and in case others think there are reasons to put more weight on it.

- ^

By ‘secret loyalties’, we mean undetected biases in AI systems towards the interests of their developers or some small cabal of people. For example, AI systems which give advice which subtly tends towards the interests of this cabal, or AI systems which have backdoors.

- ^

A factor which might make it easier to install secret loyalties with multiple projects is racing: CEOs might have an easier time justifying moving fast and not installing proper checks and balances, if competition is very fierce.

- ^

Though these standards might be hard to audit, which would make compliance harder to verify and enforce.

- ^

There are a few ways that making it harder for China to steal the model weights might not reduce racing:

- Centralising might simultaneously cause China to speed up its AGI development, and make it harder to steal the weights. It’s not clear how these effects would net out.

- What matters is the perceived size of the lead. The US could be poorly calibrated about how hard it is for China to steal the weights or about how that nets out with China speeding up AGI development, such that the US doesn’t race less even though it would be rational to do so.

- If it were very easy for China to steal the weights, this would reduce US incentives to race. (Note that this would probably be very bad for proliferation risk, and so isn’t very desirable.)

We still think that making the weights harder to steal would probably lead to less racing, as the US would feel more secure, but this is a complicated empirical question.

- ^

Bostrom defines DSA as “a level of technological and other advantages sufficient to enable it to achieve complete world domination” in Superintelligence. Tom tends to define having a DSA as controlling >99% of economic output, and being able to do so indefinitely.

Xavier_ORourke @ 2024-12-05T05:18 (+5)

I think an important consideration being overlooked is how competently a centralised project would actually be managed.

In one of your charts, you suggest worlds where there is a single project will make progress faster due to "speedup from compute amalgamation". This is not necessarily true. It's very possible that different teams would make progress at very different rates even if given identical compute resources.

At a boots-on-the-ground level, the speed of progress an AI project makes will be influenced by thousands of tiny decisions about how to:

- Manage people

- Collect training data

- Prioritize research directions

- Debug training runs

- Decide who to hire

- Assess people's performance and decide who should be promoted to more influential positions

- Manage code quality/technical debt

- Design+run evals

- Transfer knowledge between teams

- Retain key personnel

- Document findings

- Decide what internal tools to use/build

- Handle data pipeline bottlenecks

- Coordinate between engineers/researchers/infrastructure teams

- Make sure operations run smoothly

The list goes on!

Even seemingly minor decisions like coding standards, meeting structures and reporting processes might compound over time to create massive differences in research velocity. A poorly run organization with 10x the budget might make substantially less progress than a well-run one.

If there was only one major AI project underway it would probably be managed less well than the overall best-run project selected from a diverse set of competing companies.

Unlike the Manhattan Project, there are already sufficiently strong commercial incentives for private companies to focus on the problem, it's not yet clear exactly how the first AGI system will work, and capital markets today are more mature and capable of funding projects at much larger scales. My gut feeling is that if AI were fully consolidated tomorrow, this would be more likely to slow things down than speed them up.

rosehadshar @ 2024-12-05T13:33 (+5)

I agree that it's not necessarily true that centralising would speed up US development!

(I don't think we overlook this: we say "The US might slow down for other reasons. It’s not clear how the speedup from compute amalgamation nets out with other factors which might slow the US down:

- Bureaucracy. A centralised project would probably be more bureaucratic.

- Reduced innovation. Reducing the number of projects could reduce innovation.")

Interesting take that it's more likely to slow things down than speed things up. I tentatively agree, but I haven't thought deeply about just how much more compute a central project would have access to, and could imagine changing my mind if it were lots more.

SummaryBot @ 2024-12-04T22:23 (+1)

Executive summary: While centralizing Western AGI development into a single project could have major strategic implications, the authors argue it's unclear whether this would be beneficial overall and tentatively conclude it would be net negative due to risks from power concentration.

Key points:

- Race dynamics: Centralizing would reduce competition between Western projects but has unclear effects on US-China competition, potentially intensifying rather than reducing that race.

- Power concentration is a major concern: A single project would concentrate unprecedented power, reducing pluralism and increasing risks of coups/dictatorship, though good governance design could partially mitigate this.

- Information security implications are ambiguous: While fewer projects means less attack surface, a single project might attract more serious attacks and earlier attempts at theft.

- Rather than pushing for centralization specifically, efforts should focus on improving outcomes under either scenario through robust safeguards and governance structures.

- The authors reject arguments that centralization is inevitable, noting multiple projects could remain economically viable and government involvement doesn't necessitate full centralization.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.