The V&V method - A step towards safer AGI

By Yoav Hollander @ 2025-06-24T15:57

This is a linkpost to https://blog.foretellix.com/2025/06/24/the-vv-method-a-step-towards-safer-agi/

Originally posted on my blog, 24 Jun 2025 - see also full PDF (26 pp)

Abstract

The V&V (verification and validation) Method is a concrete, practical framework which can complement several alignment approaches. Instead of asking a nascent AGI to “do X,” we instruct it to design and rigorously verify a bounded “machine-for-X”. The machine (e.g. an Autonomous Vehicle or a “machine” for curing cancer) is prohibited from uncontrolled self-improvement; every new version must re-enter the same verification loop. Borrowing from safety-critical industries, the loop couples large-scale, scenario-based simulation with coverage metrics and a safety case that humans can audit. Human operators, supported by transparent evidence, retain veto power over deployment.
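To make the shape of this loop concrete, here is a minimal Python sketch, not the method's actual tooling. Everything named in it is a hypothetical stand-in introduced for illustration: the two-parameter scenario space (`BINS`), the `verify`, `human_gate` and `vv_loop` functions, and the toy "machines". In particular, `human_gate` is only a placeholder for the human audit and veto; in the method as described, people review the safety case rather than a threshold check.

```python
from dataclasses import dataclass, field
from itertools import product
from typing import Callable, Iterable, List, Optional, Set, Tuple

# Hypothetical scenario space: each bin is a (weather, maneuver) pair.
Scenario = Tuple[str, str]
BINS: Set[Scenario] = set(product(("dry", "rain"), ("merge", "cut_in")))


@dataclass
class SafetyCase:
    """Evidence handed to the human reviewers for one machine version."""
    version: int
    coverage: float  # fraction of scenario bins exercised in simulation
    failures: List[Scenario] = field(default_factory=list)


def verify(version: int,
           machine: Callable[[Scenario], bool],
           scenarios: Iterable[Scenario],
           bins: Set[Scenario]) -> SafetyCase:
    """Run the machine on each scenario; record coverage and failures."""
    covered: Set[Scenario] = set()
    failures: List[Scenario] = []
    for scenario in scenarios:
        covered.add(scenario)
        if not machine(scenario):  # the machine returns True when it behaves safely
            failures.append(scenario)
    return SafetyCase(version, len(covered & bins) / len(bins), failures)


def human_gate(case: SafetyCase, min_coverage: float = 1.0) -> bool:
    """Placeholder for the human veto (assumption: a simple threshold rule)."""
    return case.coverage >= min_coverage and not case.failures


def vv_loop(candidates: Iterable[Callable[[Scenario], bool]],
            scenarios: List[Scenario],
            bins: Set[Scenario]) -> Optional[SafetyCase]:
    """Every new version re-enters the same verification loop."""
    for version, machine in enumerate(candidates, start=1):
        case = verify(version, machine, scenarios, bins)
        if human_gate(case):
            return case  # approved for deployment
    return None  # no version passed; nothing is deployed


def machine_v1(scenario: Scenario) -> bool:
    # Toy machine that mishandles one scenario bin.
    return scenario != ("rain", "cut_in")


def machine_v2(scenario: Scenario) -> bool:
    # Toy machine that handles every bin in this tiny space.
    return True


approved = vv_loop([machine_v1, machine_v2], sorted(BINS), BINS)
print(approved)
```

In this toy run the first version is rejected because it fails one scenario, and the second is approved only after passing the same scenarios with full coverage. A real safety case would carry far richer evidence than a coverage fraction and a failure list.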

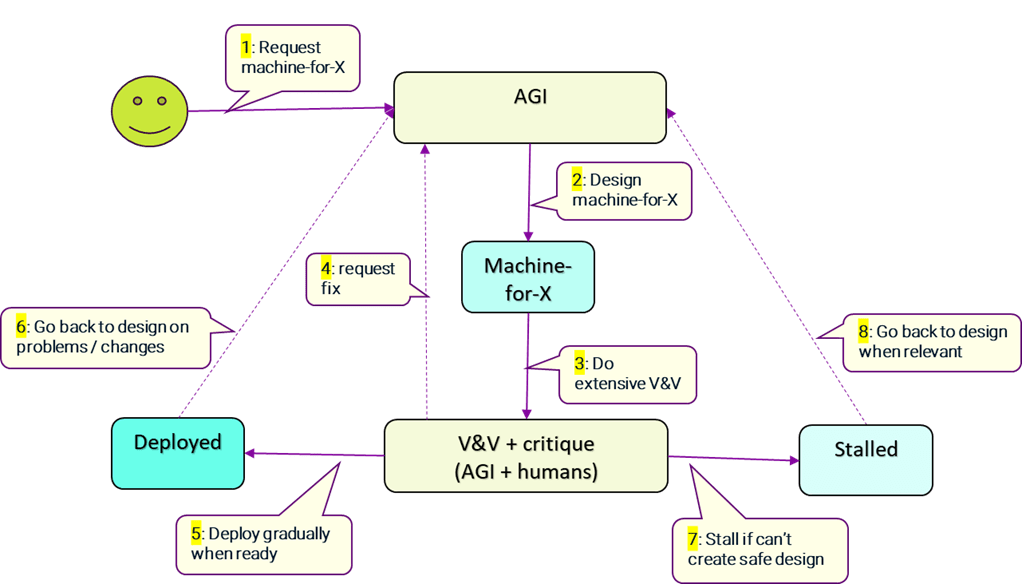

The method proceeds according to the following diagram:

This method is not a silver bullet: it still relies on aligned obedience and on incentive structures that deter multi-agent collusion, and it provides only partial assurance under tight competitive timelines. Yet it complements existing scalable-oversight proposals, bolstering Constitutional AI, Iterated Distillation and Amplification (IDA) and Comprehensive AI Services (CAIS) with holistic system checks, and it offers a practical migration path because industries already practice V&V for complex AI today. In the critical years when AGI first appears, the V&V Method could buy humanity time, reduce specification gaming, and focus research attention on the remaining hard gaps such as strategic deception and corrigibility.

TL;DR

- Ask AGI to build & verify a task-specific, non-self-improving machine

- Scenario-based, coverage-driven simulations + safety case give humans transparent evidence

- Main contributions: Complements Constitutional AI, improves human efficiency in Scalable Oversight, handles “simpler” reward hacking systematically, bolsters IDA and CAIS

- Works today in AVs; scales with smarter “AI workers”

- Limits: Needs aligned obedience & anti-collusion incentives

About the author

Yoav Hollander led the creation of three V&V standards (IEEE 1647 “e”, Accellera PSS, ASAM OpenSCENARIO DSL) and is now trying to connect some of these ideas with AGI safety.