AISN #27: Defensive Accelerationism, A Retrospective On The OpenAI Board Saga, And A New AI Bill From Senators Thune And Klobuchar

By Center for AI Safety, Dan H, Corin Katzke, allisoncyhuang @ 2023-12-07T15:57

This is a linkpost to https://newsletter.safe.ai/p/aisn-27-defensive-accelerationism

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Subscribe here to receive future versions.

Listen to the AI Safety Newsletter for free on Spotify.

Defensive Accelerationism

Vitalik Buterin, the creator of Ethereum, recently wrote an essay on the risks and opportunities of AI and other technologies. He responds to Marc Andreessen’s manifesto on techno-optimism and the growth of the effective accelerationism (e/acc) movement, and offers a more nuanced perspective.

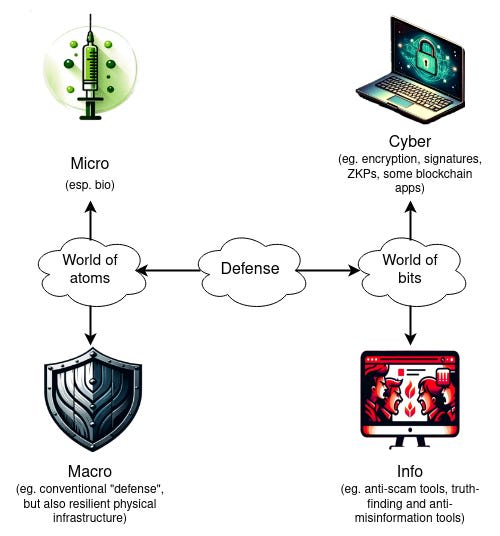

Technology is often great for humanity, the essay argues, but AI could be an exception to that rule. Rather than giving governments control of AI so they can protect us, Buterin argues that we should build defensive technologies that provide security against catastrophic risks in a decentralized society. Cybersecurity, biosecurity, resilient physical infrastructure, and a robust information ecosystem are among the areas where Buterin believes we should build such technologies to protect ourselves from AI risks.

Technology has risks, but regulation is no panacea. Longer lifespans, lower poverty rates, and expanded access to education and information are among the many successes Buterin credits to technology. But most would recognize that technology can also cause harms, such as global warming. Buterin specifically says that, unlike most technologies, AI presents an existential threat to humanity.

To address this risk, some advocate strong government control of AI development. Buterin is uncomfortable with this solution, and he expects that many others will be too. Many of history’s worst catastrophes have been deliberately carried out by powerful political figures such as Stalin and Mao. AI could help brutal regimes surveil and control large populations, and Buterin is wary of accelerating that trend by pushing AI development from private labs to public ones.

Between the extremes of unrestrained technological development and absolute government control, Buterin advocates a new path forward. He calls his philosophy d/acc, where the “d” stands for defense, democracy, decentralization, or differential technological development.

Defensive technologies for a decentralized world. Buterin advocates the acceleration of technologies that would protect society from catastrophic risks. Specifically, he highlights:

- Biosecurity. Dense cities, frequent air travel, and modern biotechnology all raise the risk of pandemics, but we can improve our biosecurity by improving our air quality, hastening the development of vaccines and therapeutics, and monitoring for emerging pathogens.

- Cybersecurity. AIs that can write code could be used in cyberattacks, but defenders can also use them to find and fix security flaws before they’re exploited. Buterin’s work on blockchains aims toward a future where some digital systems can be provably secure.

- Resilient Physical Infrastructure. Most of the expected deaths in a nuclear catastrophe would come not from the blast itself, but from disruptions to the supply chain of food, energy, and other essentials. Elon Musk has aspired to improve humanity’s physical infrastructure by making us less dependent on fossil fuels, providing internet connections via satellite, and ideally making humanity a multi-planetary species that could outlast a disaster on Earth.

- Robust Information Environment. To help people find the truth in an age of AI persuasion, Buterin points to prediction markets and consensus-generating algorithms such as Community Notes.

Scientists and CEOs might find themselves inspired by Buterin’s goal of building technology rather than slowing it down. Meanwhile, for those who are concerned about AI and other catastrophic risks, Buterin offers a thoughtful view of the technologies most likely to keep our civilization safe. Interested readers will find many more ideas in the full essay.

Retrospective on the OpenAI Board Saga

On November 17th, OpenAI announced that the board of directors had removed Sam Altman as CEO. After four days of corporate politics and negotiations, he returned as CEO. Here, we review the known facts about this series of events.

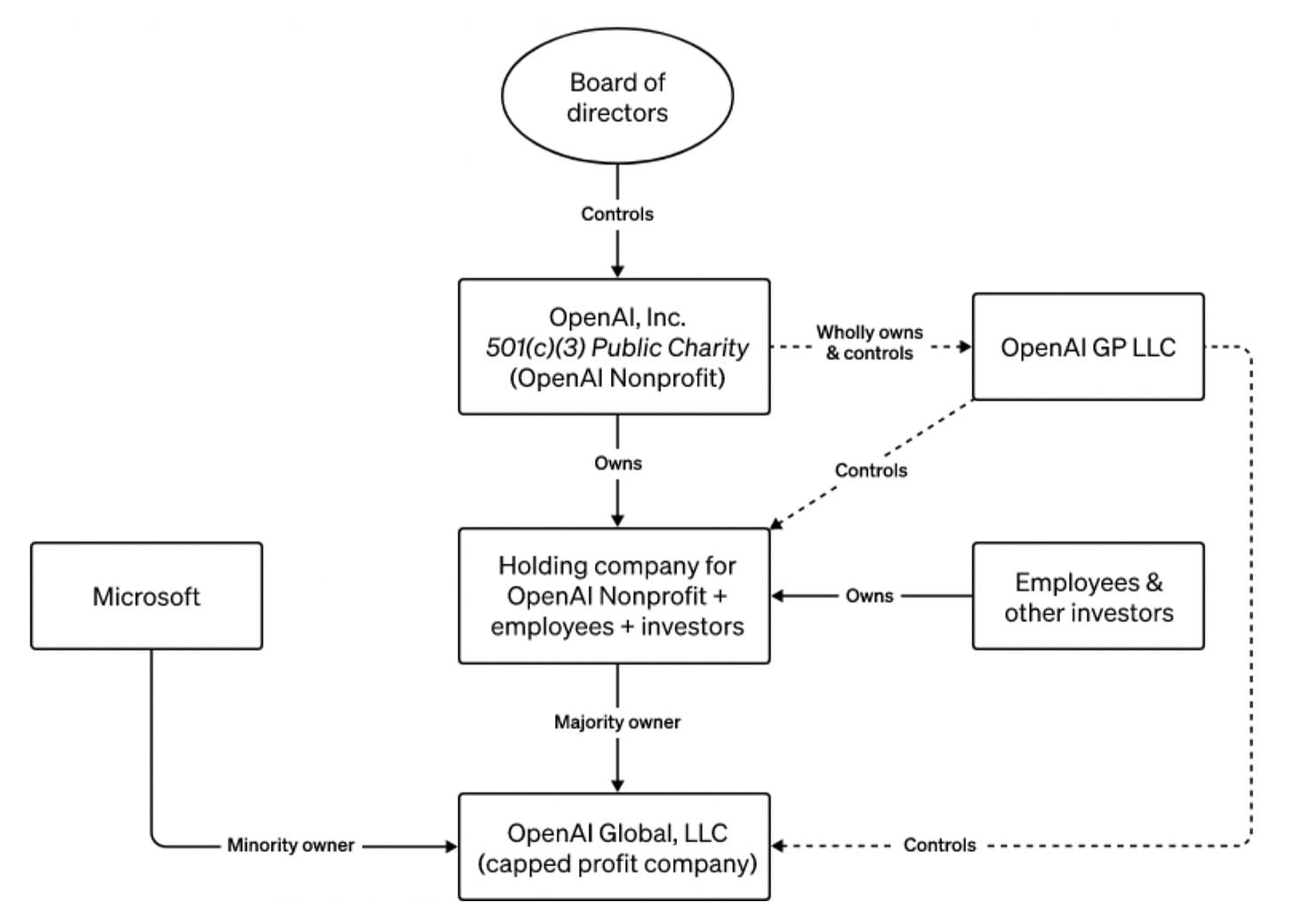

OpenAI is intended to be controlled by a nonprofit board. OpenAI was founded in 2015 as a nonprofit. In 2019, it announced the creation of a for-profit company that would help finance its expensive plans for scaling large language models. Returns on investments in OpenAI were originally “capped” at 100x; any profit beyond that would be redirected to the nonprofit. But after a recent rule change, that cap will rise 20% per year beginning in 2025.

OpenAI’s corporate structure was designed so that the nonprofit could retain legal control over the for-profit. The nonprofit is led by a board of directors, which holds power over the for-profit through its ability to select and remove the CEO of the for-profit. The board of directors is responsible for upholding the mission of the nonprofit, which is to ensure that artificial general intelligence benefits all of humanity.

The board removes Sam Altman as CEO. The four board members who removed Sam Altman as CEO were OpenAI Chief Scientist Ilya Sutskever, Quora CEO Adam D’Angelo, technology entrepreneur Tasha McCauley, and CSET’s Helen Toner. Greg Brockman, OpenAI’s co-founder and president, was removed from his position as chair of the board at the same time.

According to the announcement, the board of directors fired Altman because he had not been “consistently candid in his communications with the board, hindering its ability to exercise its responsibilities.” The announcement gave no specific examples, but it was later reported that Altman had tried to play board members against each other in an effort to remove Helen Toner.

Altman had earlier confronted Toner about a paper she had co-authored. The paper in part criticizes OpenAI’s release of ChatGPT for accelerating the pace of AI development. It also praises one of OpenAI’s rivals, Anthropic, for delaying the release of its then-flagship model, Claude.

OpenAI employees turn against the board. The fallout of the announcement was swift and dramatic. Within a few days, Greg Brockman had resigned, Mira Murati (the initial interim CEO) had been replaced, and almost all OpenAI employees had signed a petition threatening to resign and join Microsoft unless Sam Altman was reinstated and the board members resigned. During negotiations, board member Helen Toner reportedly said that allowing OpenAI to be destroyed by the departure of its investors and employees would be “consistent with the mission.” Ilya Sutskever later switched sides and joined the petition, tweeting “I deeply regret my participation in the board's actions.”

Microsoft tries to poach OpenAI employees. Microsoft, OpenAI’s largest minority stakeholder, had not been informed of the board's plans, and it offered OpenAI employees positions on its own AI research team. It briefly seemed as if Microsoft had successfully hired Sam Altman, Greg Brockman, and other senior OpenAI employees.

Sam Altman returns as CEO. On November 21st, OpenAI announced that it had reached an agreement for Sam Altman to return as CEO and for the board to be reorganized. The initial board consists of former Salesforce co-CEO Bret Taylor, former Secretary of the Treasury Larry Summers, and Adam D’Angelo. One of the initial board’s first goals is to expand the board, which will include a non-voting member from Microsoft. Upon his return, Sam Altman also faces an internal investigation of his conduct.

This series of events marks a time of significant change in OpenAI’s internal governance structure.

Klobuchar and Thune’s “light-touch” Senate bill

Senators Amy Klobuchar and John Thune introduced a new AI bill. It would require companies building high-risk AI systems to self-certify that they follow recommended safety guidelines. Notably, the bill focuses only on AI systems built for high-risk domains such as hiring and healthcare, so its main provisions would not apply to many general-purpose foundation models, including GPT-4.

The bill regulates specific AI applications, not general-purpose AI systems. This application-based approach is similar to the one taken by initial drafts of the EU AI Act, which specified domains where AI systems may be used for sensitive purposes, making them high-risk. General-purpose models like ChatGPT were not within the scope of those drafts, but public use of these models and the demonstration of their capabilities have sparked debate on how to regulate them.

This suggests that the Senate bill’s current approach may not be enough: by governing AI systems based on their applications, it leaves highly capable general-purpose systems unregulated.

Risk assessments are mandatory, but enforcement may be difficult. The bill requires developers and deployers of high-risk AI systems to perform an assessment every two years evaluating how potential risks from their systems are understood and managed. Additionally, the Department of Commerce will develop certification standards with the help of a new AI Certification Advisory Committee, which will include industry stakeholders.

Because companies are asked to self-certify their own compliance with these standards, it will be important for the Commerce Department to verify that companies are actually following the rules. But the bill offers few enforcement options. It gives the agency no additional resources for enforcing the new law. Moreover, the agency can only prevent a model from being deployed if it determines that the bill’s requirements were violated intentionally. If an AI system unintentionally violates the law, the agency will be able to fine the company that built it, but will not be able to prohibit its deployment.

Mandatory identification of AI-generated content. The bill would require digital platforms to notify users when they are presented with AI-generated content. To make it harder for malicious actors to pass off AI-generated content as authentic, NIST would develop new technical standards for determining the provenance of digital content.

Links

- Google DeepMind released Gemini, a new AI system that’s similar to GPT-4 Vision and narrowly beats it on a variety of benchmarks.

- Donald Trump says that as president he would immediately cancel Biden’s executive order on AI.

- Secretary of Commerce Gina Raimondo spoke on AI, China, GPU export controls, and more.

- The New York Times released a profile on the origins of today’s major AGI labs.

- The Congressional Research Service released a new report about AI for biology.

- Inflection released another LLM, with performance between that of GPT-3.5 and GPT-4.

- A new open source LLM from Chinese developers claims to outperform Llama 2.

- Here’s a new syllabus about legal and policy perspectives on AI regulation.

- Two Swiss universities have started a new research initiative on AI and AI safety.

- BARDA is accepting applications to fund AI applied to health security and CBRN threats.

- The Future of Life Institute’s new affiliate will incubate new organizations addressing AI risks.

- For those attending NeurIPS 2023, the UK’s AI Safety Institute will host an event, and there will also be an AI Safety Social.

See also: CAIS website, CAIS twitter, A technical safety research newsletter, An Overview of Catastrophic AI Risks, and our feedback form