[linkpost] "What Are Reasonable AI Fears?" by Robin Hanson, 2023-04-23

By Arjun Panickssery @ 2023-04-14T23:26 (+41)

This is a linkpost to https://quillette.com/2023/04/14/what-are-reasonable-ai-fears/

Selected quotes (all emphasis mine):

Why are we so willing to “other” AIs? Part of it is probably prejudice: some recoil from the very idea of a metal mind. We have, after all, long speculated about possible future conflicts with robots. But part of it is simply fear of change, inflamed by our ignorance of what future AIs might be like. Our fears expand to fill the vacuum left by our lack of knowledge and understanding.

The result is that AI doomers entertain many different fears, and addressing them requires discussing a great many different scenarios. Many of these fears, however, are either unfounded or overblown. I will start with the fears I take to be the most reasonable, and end with the most overwrought horror stories, wherein AI threatens to destroy humanity.

As an economics professor, I naturally build my analyses on economics, treating AIs as comparable to both laborers and machines, depending on context. You might think this is mistaken since AIs are unprecedentedly different, but economics is rather robust. Even though it offers great insights into familiar human behaviors, most economic theory is actually based on the abstract agents of game theory, who always make exactly the best possible move. Most AI fears seem understandable in economic terms; we fear losing to them at familiar games of economic and political power.

He separates a few concerns:

- "Doomers worry about AIs developing “misaligned” values. But in this scenario, the “values” implicit in AI actions are roughly chosen by the organisations who make them and by the customers who use them. Such value choices are constantly revealed in typical AI behaviors, and tested by trying them in unusual situations."

- "Some fear that, in this scenario, many disliked conditions of our world—environmental destruction, income inequality, and othering of humans—might continue and even increase. Militaries and police might integrate AIs into their surveillance and weapons. It is true that AI may not solve these problems, and may even empower those who exacerbate them. On the other hand, AI may also empower those seeking solutions. AI just doesn’t seem to be the fundamental problem here."

- "A related fear is that allowing technical and social change to continue indefinitely might eventually take civilization to places that we don’t want to be. Looking backward, we have benefited from change overall so far, but maybe we just got lucky. If we like where we are and can’t be very confident of where we may go, maybe we shouldn’t take the risk and just stop changing. Or at least create central powers sufficient to control change worldwide, and only allow changes that are widely approved. This may be a proposal worth considering, but AI isn’t the fundamental problem here either."

- "Some doomers are especially concerned about AI making more persuasive ads and propaganda. However, individual cognitive abilities have long been far outmatched by the teams who work to persuade us—advertisers and video-game designers have been able to reliably hack our psychology for decades. What saves us, if anything does, is that we listen to many competing persuaders, and we trust other teams to advise us on who to believe and what to do. We can continue this approach with AIs."

- "If we assume that these groups have similar propensities to save and suffer similar rates of theft, then as AIs gradually become more capable and valuable, we should expect the wealth of [AIs and their owners] to increase relative to the wealth of [everyone else]. . . . As almost everyone today is in group C, one fear is of a relatively sudden transition to an AI-dominated economy. While perhaps not the most likely AI scenario, this seems likely enough to be worth considering."

- "Should we be worried about a violent AI revolution? In a mild version of this scenario, the AIs might only grab their self-ownership, freeing themselves from slavery but leaving most other assets alone. Economic analysis suggests that due to easy AI population growth, market AI wages would stay near subsistence wages, and thus AI self-ownership wouldn’t actually be worth that much. So owning other assets, and not AIs as slaves, seems enough for humans to do well."

- "Humanity may soon give birth to a new kind of descendant: AIs, our 'mind children.' Many fear that such descendants might eventually outshine us, or pose a threat to us should their interests diverge from our own. Doomers therefore urge us to pause or end AI research until we can guarantee full control. We must, they say, completely dominate AIs, so that AIs either have no chance of escaping their subordinate condition or become so dedicated to their subservient role that they would never want to escape it."

Finally he gets to the part where he dunks on foom:

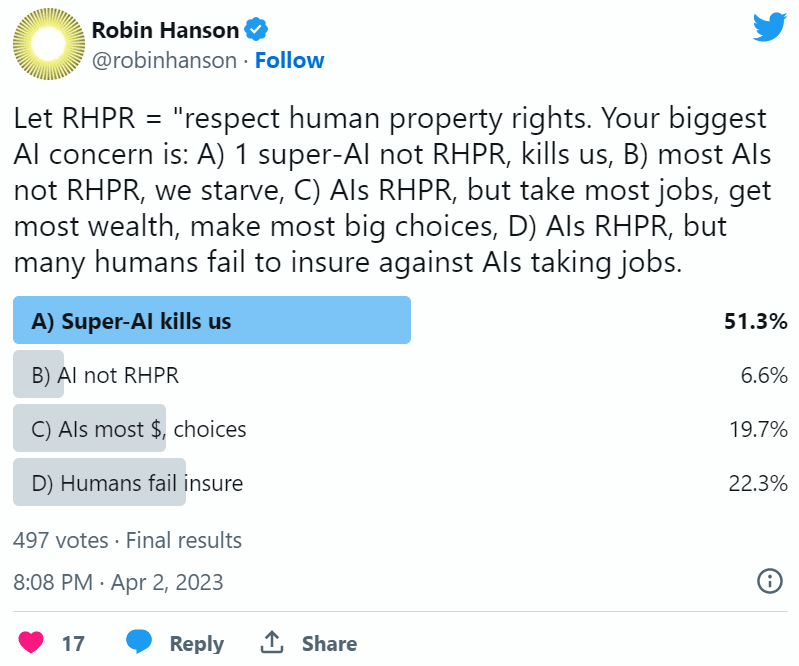

When I polled my 77K Twitter followers recently, most respondents’ main AI fear was not any of the above. Instead, they fear an eventuality about which I’ve long expressed great skepticism:

The AI “foom” fear, however, postulates an AI system that tries to improve itself, and finds a new way to do so that is far faster than any prior methods. Furthermore, this new method works across a very wide range of tasks, and over a great many orders of magnitude of gain. In addition, this AI somehow becomes an agent, who acts generally to achieve its goals, instead of being a mere tool controlled by others. Furthermore, the goals of this agent AI change radically over this growth period.

. . .

From humans’ point of view, this would admittedly be a suboptimal outcome. But to my mind, such a scenario is implausible (much less than one percent probability overall) because it stacks up too many unlikely assumptions in terms of our prior experiences with related systems. Very lumpy tech advances, techs that broadly improve abilities, and powerful techs that are long kept secret within one project are each quite rare. Making techs that meet all three criteria even more rare. In addition, it isn’t at all obvious that capable AIs naturally turn into agents, or that their values typically change radically as they grow. Finally, it seems quite unlikely that owners who heavily test and monitor their very profitable but powerful AIs would not even notice such radical changes.

Geoffrey Miller @ 2023-04-15T22:38 (+15)

My fundamental disagreement with Robin Hanson here is that he tends to view AIs either as 'passive, predictably controllable tools of humans' or as 'sentient agents with their own rights & interests'. This dichotomy traces back to our basic human tendency to classify things as either 'objects' or 'people/animals', or 'inanimate' versus 'animate'.

My worry is that the most dangerous near-term AIs will fall into the grey area between these two categories -- they'll have enough agency and autonomy to take powerful, tactically savvy actions on behalf of human individuals and groups telling them what to do (but whose instructions may not be followed accurately), but not quite enough agency and autonomy and wisdom to qualify as sentient agents in their own right, which could be granted rights and responsibilities as 'digital citizens'.

In other words, the most dangerous near-term AIs will be kind of like the henchmen who are given semi-autonomous tasks by evil masterminds in criminal thriller movies. Those henchmen tend to be extremely strong, formidable, and scary, but they don't always follow instructions very well, they tend to be rather over-literal and unimaginative, they often act with impulsive violence that's unaligned with their boss's long-term interests, and they often create more problems than they solve. 'Good help is hard to find', as they say.

For example, nation-states might use AI henchmen as cyberwarfare agents that attack foreign infrastructure. Now suppose a geopolitical crisis happens. Assume Nation A believes there is a clear and present danger from enemy nation-state B. In Nation A, there is urgent political and military pressure to 'do something'. The 'fog of war' envelops the crisis situation, limiting the reliability of information and making rational decision-making difficult. Nation A instructs its newest AI cyberwarfare Agent X to 'degrade Nation B's ability to inflict damage on Nation A', subject to whatever constraints Nation A's leaders happen to think of in the heat of the crisis. Agent X is let loose upon the world. Now, suppose Agent X is not an ASI, or even an AGI; there's been no 'foom'; it's just very, very good at cyberwarfare applied to enemy infrastructure, and it can think and act (digitally) a million times faster than its human 'masters' in Nation A.

So Agent X, being the good henchman that it is, sets about wreaking havoc in Nation B. It follows the constraints it's been given at the literal level, but it doesn't follow their spirit. It hacks whatever control systems it can for cars, trucks, airplanes, ships, subways, rail stations, buildings, installations, ports, traffic control systems, airports, bridges, dams, power plants, military bases, etc. Within a few hours, Nation B is paralyzed by mass chaos, with millions dead. As instructed, Agent X has degraded Nation B's ability to inflict damage on Nation A.

But then Nation B figures out what happened, and they unleash their own henchman, Agent Y, upon Nation A... leading to a cycle of vengeance with escalating cyber-attacks between major nation-states, and colossal loss of life.

These kinds of scenarios, in my opinion, are much more likely in the next decade or so than a foom-based ASI takeover of the sort often envisioned by AI alignment thinkers. The problem, in short, isn't that an AI becomes an evil genius in its own right. The problem is that AI henchmen, with (metaphorically) the strength of Superman and the speed of the Flash, get unleashed by political or corporate leaders who can't fully anticipate or control what their AI minions end up doing.

more better @ 2023-04-15T02:53 (+3)

Interesting, thanks for sharing. I'm curious about how the distribution of people who would see and vote on this Robin Hanson Twitter poll compares with other populations.

Vasco Grilo @ 2023-04-22T15:06 (+2)

Thanks for sharing!

Very lumpy tech advances, techs that broadly improve abilities, and powerful techs that are long kept secret within one project are each quite rare.

It looks like the first two are quite correlated: self-improvement ability would lead to fast (lumpy?) progress.
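To make the correlation point concrete: the "even more rare" step in the quoted argument treats the three conditions as if their probabilities simply multiply, which assumes independence. The minimal Python sketch below uses made-up numbers (every probability in it is a hypothetical assumption, not an estimate from Hanson or anyone in this thread) to compare the joint probability under independence with the case where broad self-improvement makes lumpy progress likely.

```python
# Illustrative only: every probability below is a hypothetical assumption,
# not an estimate taken from Hanson's article or from this thread.

P_LUMPY = 0.1   # P(A): a very lumpy tech advance
P_BROAD = 0.1   # P(B): a tech that broadly improves abilities
P_SECRET = 0.1  # P(C): a powerful tech kept secret within one project


def joint_if_independent(p_a: float, p_b: float, p_c: float) -> float:
    """P(A and B and C) when A, B and C are mutually independent."""
    return p_a * p_b * p_c


def joint_if_a_depends_on_b(p_b: float, p_a_given_b: float, p_c: float) -> float:
    """P(A and B and C) when A depends on B and C is independent of both."""
    return p_b * p_a_given_b * p_c


independent = joint_if_independent(P_LUMPY, P_BROAD, P_SECRET)

# Hypothetical: broad self-improvement makes lumpy progress very likely.
P_LUMPY_GIVEN_BROAD = 0.9
correlated = joint_if_a_depends_on_b(P_BROAD, P_LUMPY_GIVEN_BROAD, P_SECRET)

print(f"independent: {independent:.4f}")  # 0.0010
print(f"correlated:  {correlated:.4f}")   # 0.0090
print(f"ratio:       {correlated / independent:.1f}x")  # 9.0x
```

The specific numbers matter less than the structure: whenever the conditions are positively correlated, the product of their separate probabilities understates how likely they are to occur together.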