An Overview of the AI Safety Funding Situation

By Stephen McAleese @ 2023-07-12T14:54 (+140)

Note: this post was updated in January 2025 to reflect all available data from 2024.

Introduction

AI safety is a field concerned with preventing negative outcomes from AI systems and ensuring that AI is beneficial to humanity. The field studies problems such as the AI alignment problem: how to design AI systems that follow user intentions and behave in a desirable and beneficial way.

Understanding and solving AI safety problems may involve reading past research, producing new research in the form of papers or posts, running experiments with ML models, and so on. Producing research typically involves many different inputs such as research staff, compute, equipment, and office space.

These inputs all require funding and therefore funding is a crucial input for enabling or accelerating AI safety research. Securing funding is usually a prerequisite for starting or continuing AI safety research in industry, in an academic setting, or independently.

There are many barriers that could prevent people from working on AI safety. Funding is one of them. Even if someone is working on AI safety, a lack of funding may prevent them from continuing to work on it.

It’s not clear how hard AI safety problems like AI alignment are. But in any case, humanity is more likely to solve them if there are hundreds or thousands of brilliant minds working on them rather than one guy. I would like there to be a large and thriving community of people working on AI safety and I think funding is an important prerequisite for enabling that.

The goal of this post is to give the reader a better understanding of funding opportunities in AI safety so that hopefully funding will be less of a barrier if they want to work on AI safety. The post starts with a high-level overview of the AI safety funding situation followed by a more in-depth description of various funding opportunities.

Past work

To get an overview of AI safety spending, we first need to find out how much is spent on it per year. We can use past work as a prior and then use grant data to find a more accurate estimate.

- Changes in funding in the AI safety field (2017) by the Centre for Effective Altruism estimated the change in AI safety funding between 2014 and 2017. The post estimated that total AI safety spending in 2017 was about $9 million.

- How are resources in effective altruism allocated across issues? (2020) by 80,000 Hours estimated the amount of money spent by EA on AI safety in 2019. Using data from the Open Philanthropy grants database, the post says that EA spent about $40 million on AI safety globally in 2019.

- In The Precipice (2020), Toby Ord estimated that between $10 million and $50 million was spent on reducing AI risk in 2020.

- 2021 AI Alignment Literature Review and Charity Comparison is an in-depth review of AI safety organizations and grantmakers and has a lot of relevant information.

Overview of global AI safety funding

One way to estimate total global spending on AI safety is to aggregate the total donations of major AI safety funds such as Open Philanthropy.

It’s important to note that by ‘AI safety’ I mean research focused on reducing risks from advanced AI (AGI), such as existential risks. I think this type of AI safety research is more neglected and more important in the long term than other research. My analysis therefore focuses on EA funds and top AI labs, and I don’t intend to measure investment in near-term AI safety concerns such as effects on the labor market, fairness, privacy, ethics, disinformation, etc.

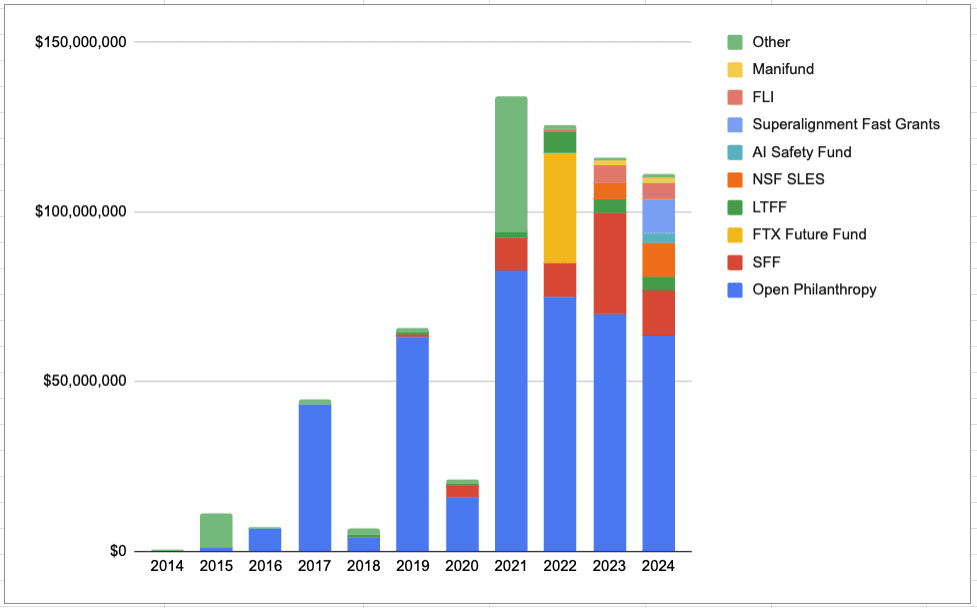

A high-level overview of global AI safety funding is shown in the bar chart above, which was created in Google Sheets (link) and is based on an analysis of the grant databases of Open Philanthropy and other funds (Colab notebook link).

Some comments on the bar chart above:

- The Open Phil grants seem to vary a lot from year to year because Open Phil often makes large multi-year grants (e.g. an $11m grant to CHAI over 5 years in 2021).

- The average annual increase in funding in the chart is about $13 million.

- There is some overlap between Open Philanthropy and Long-Term Future Fund (LTFF) because Open Phil often donates money to LTFF and about half of LTFF funding comes from Open Phil. In the chart above, I excluded the grants from Open Phil to LTFF from the Open Phil data to avoid double counting.

- The chart mainly includes AI safety donations by major AI safety funds. Other sources of funding such as smaller funds, academic grants, individual donations, and spending by industry labs are not included in the chart so the estimates are probably conservative. More on this below.

- The FTX Future Fund amount only includes grants up to August 2022 and could be an overestimate if many of these grants were not fulfilled. More on this below.

- The large 'Other' amounts are due to large cryptocurrency donations such as those to MIRI ($15M) and FLI ($25M).

- The graph doesn't include the enormous $665M cryptocurrency donation received by FLI in 2021.

Descriptions of major AI safety funds

Open Philanthropy (Open Phil)

Open Philanthropy (Open Phil) is a grant-making and research foundation that was founded in 2017 by Holden Karnofsky, Dustin Moskovitz, and Cari Tuna. Its primary funder is Dustin Moskovitz, who made his fortune by co-founding Facebook and Asana and has a net worth of over $12 billion. Open Phil funds many different cause areas such as global health and development, farm animal welfare, Effective Altruism community growth, biosecurity, and AI safety research.

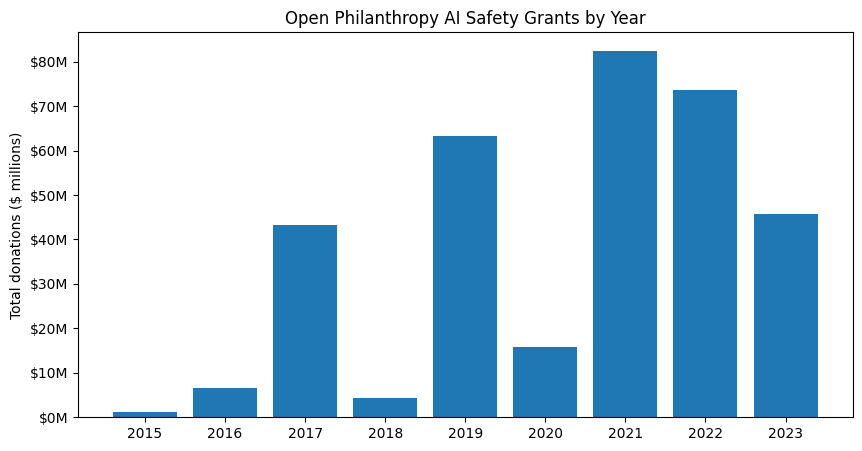

Since it was founded in 2017, Open Phil has donated about $2.8 billion of which about $336 million was spent on AI safety (~12%). The median Open Phil AI safety grant is about $257k and the average AI safety grant is $1.67 million. In 2023, Open Phil spent about $46 million on AI safety making it probably the largest funder of AI safety in the world.

Open Phil has historically made medium-sized to large grants to organizations such as Epoch AI, the Alignment Research Center, the Center for AI Safety, Redwood Research, and the Machine Intelligence Research Institute (MIRI). Open Phil also offers grants for individuals who want to make a career transition towards working on AI safety.

Open Phil’s past grants are publicly available in their grant database, which is what I used to create the bar chart above.

Examples of Open Phil AI safety grants in 2023:

- Epoch — General Support (2023), April 2023, $6.9m

- Conjecture — SERI MATS (2023), April 2023, $245k

- Center for AI Safety — General Support (2023), April 2023, $4.0m

- FAR AI — General Support (2023), July 2023, $460k

- Eleuther AI — Interpretability Research, November 2023, $2.6m

Survival and Flourishing Fund (SFF)

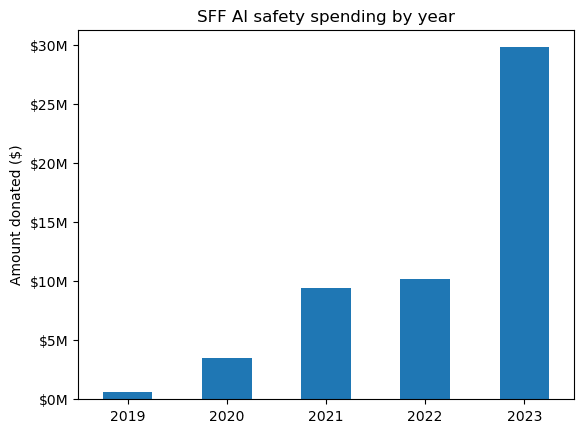

SFF is the second largest funder of AI safety after Open Phil. SFF is mainly funded by Jaan Tallinn who has a net worth of about $900 million. SFF has donated about $53 million to AI safety projects since it started grant-making in 2019. SFF usually has two funding rounds per year. Past SFF grants are available on the SFF website.

SFF spent about $30 million on AI safety in 2023 and has funded well-known organizations and projects such as ARC Evals, the Center for the Governance of AI, FAR AI, Ought, and Redwood Research.

Like Open Phil, SFF also tends to make medium to large grants to AI safety organizations. The median SFF AI safety grant is $100k and the average AI safety grant is $274k.

Examples of SFF grants in 2023:

- Campaign for Responsible AI, $250k

- AI Impacts, $341k

- Apollo Research, $882k

- Alignment Research Center (Evals Team), $325k

FTX Future Fund

The FTX Future Fund was a fund created in February 2022 but it was shut down just a few months later in November 2022 because FTX went bankrupt. From February to June of 2022, the Future Fund donated about $20 million to AI safety projects.

Since FTX filed for bankruptcy on 11 November 2022, many donations made after 11 August 2022 had to be returned as part of the bankruptcy process, so I didn't include donations made after 1 August 2022 in the total.

Based on the Future Fund grants database, I estimate that the Future Fund donated about $32 million to AI safety projects from February to August 2022.

Examples of Future Fund grants from February to August 2022:

- Ought - Building Elicit, $5m

- Lionel Levine, Cornell University - Alignment theory research, $1.5m

- ML Safety Scholars Program - General Support, $490k

- AI Impacts - support for the 'When Will AI Exceed Human Performance?' survey, $250k

- Adversarial Robustness Prizes at ECCV, $30k

The Future Fund website and grant database were taken down but are still available here.

Long-Term Future Fund (LTFF)

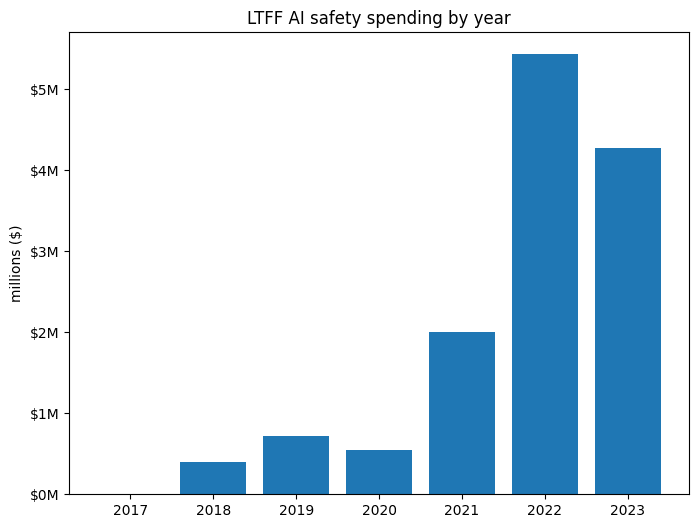

LTFF is one of the four EA funds along with the Global Health and Development Fund, Animal Welfare Fund, and EA Infrastructure Fund. LTFF makes grants for longtermist projects related to cause areas like pandemics, existential risk analysis, and AI safety.

Unlike the other funds mentioned before, LTFF tends to make smaller grants. Whereas the median Open Phil AI safety grant is $257k, the median LTFF AI safety grant is just $25k which makes it suitable for smaller projects such as funding individuals or small groups for upskilling, career transitions, or independent research.

LTFF is also not a ‘primary’ fund like the other funds given that it is often funded by other funds. For example, LTFF has received significant donations from Open Phil and SFF.

According to the EA funds sources page, about 40% of LTFF’s funding came from Open Phil in 2022, about 50% came from direct donations and the rest came from other institutional funds. In 2023, LTFF received $3.1 million from Open Philanthropy.

LTFF has spent about $20 million in total since it started grant-making in 2017. Of that amount, about $10 million was related to AI safety. In 2023, LTFF granted $6.67 million in total. I estimate that about 2/3 of the grants are related to AI safety, which means LTFF spent about $4.3m on AI safety in 2023. LTFF plans to spend $8.4 million in total in 2024, which will probably result in over $5 million being spent on AI safety.

Other sources of funding

Foundation Model Taskforce

On 24 April 2023, the UK government announced £100 million in funding for a new foundation model taskforce which will work on communication related to AI risk, international coordination, and AI regulation.

Frontier Model Forum AI safety fund

On 25 October 2023, the Frontier Model Forum, an organization of industry labs including Anthropic, Google, Microsoft, and OpenAI, announced $10 million in AI safety funding. The primary focus of the new fund will be supporting the development of new red-teaming and evaluation techniques.

Superalignment Fast Grants

On 14 December 2023, OpenAI announced $10 million in grants to support technical research on AI safety. The new fund aims to fund AI safety research directions such as weak-to-strong generalization, interpretability, and scalable oversight. The funding is available to a wide variety of different people including academic labs, grad students, nonprofits, and individual researchers.

Vitalik Buterin AI Existential Safety Fellowship

The Vitalik Buterin AI Existential Safety Fellowship is associated with the Future of Life Institute (FLI) and provides funding to support PhD students and postdoctoral researchers interested in doing existential AI safety research. The fund also provides funding for travel support.

Giving What We Can (GWWC)

GWWC is a community of over 8,000 people who have committed to donating at least 10% of their lifetime income to charity. In 2021, GWWC members donated $22.7 million to charity. About 10% of those donations go to longtermist charities (~$2.27m) so I estimate that GWWC contributes about $1 million to AI safety per year.

GWWC lists three longtermist funds to donate to: the Long-Term Future Fund (LTFF), the Patient Philanthropy Fund, and the Longtermism Fund. In addition to GWWC donations, these funds also receive donations from larger primary funds such as Open Phil.

Nonlinear Fund

The Nonlinear Fund is for projects related to reducing existential risk from AI. They have created a project named Nonlinear Network which allows people looking for AI safety funding to apply for multiple funds at the same time. Nonlinear Fund is also the organization behind Superlinear which offers financial prizes for AI safety challenges.

Lightspeed Grants

Lightspeed Grants is a longtermist grant-making organization created in 2022 that aims to distribute $5 million in its initial funding round. The primary funder of the first round is Jaan Tallinn (Jaan is also the primary funder of SFF). The grants for Lightspeed Grants are included in the Survival and Flourishing Fund total above.

For-profit companies

Many organizations working on AI safety are for-profit companies that use profits or investments to fund their research rather than philanthropic funding. For example, OpenAI, Anthropic, DeepMind, and Conjecture are for-profit companies that have AI safety teams.

Conjecture was founded in 2022 and has received $25 million in venture capital funding so far. Anthropic was founded in 2021 and has so far received over $7 billion in venture capital funding. Google DeepMind (originally DeepMind) was founded in 2012 and is now a profitable company with a revenue of about $1 billion per year and similar expenses. OpenAI was founded in 2016 and is probably the best-funded AI startup in the world after its partner organization Microsoft agreed to invest $10 billion in it in 2023. OpenAI has received over $11 billion in venture capital funding so far.

We can calculate how much funding these companies are contributing to AI safety by estimating how much it would counterfactually cost to fund their AI safety teams if they were non-profits like Redwood Research or MIRI.

Because of various expenses such as payroll taxes, health insurance, and retirement benefits, the true cost of an employee is typically about 30% higher than the cost of an employee’s gross salary. Then there are other expenses such as office space, travel, events, compute, and so on. Based on the tax returns of MIRI and Redwood Research, the ratio of total expenses to wages is 1.6 and 3.3 respectively so I think a good rule of thumb is that an employee’s cost to an organization is about twice their gross salary.

To calculate the total financial contribution of an organization to AI safety research, we can multiply the size of the AI safety team by the average wage of AI safety researchers in the company and the 2X multiplier.
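The calculation described above can be sketched in a few lines of Python. This is only an illustration: the team sizes and salaries are the estimates from the table in this section, the `Company` class and `contribution_range` function are hypothetical names, and the sketch multiplies the ends of each range directly. The Guesstimate model samples from probability distributions and the table rounds the cost per employee, so the numbers produced here will not match the table exactly.

```python
from dataclasses import dataclass

# Rule of thumb from the post: an employee's total cost to an organization
# is about twice their gross salary.
COST_MULTIPLIER = 2.0


@dataclass
class Company:
    name: str
    team_min: int         # lower estimate of AI safety team size
    team_max: int         # upper estimate of AI safety team size
    median_salary: float  # estimated median gross salary, USD per year


def contribution_range(company, multiplier=COST_MULTIPLIER):
    """Return (low, high) annual contribution: team size x salary x multiplier."""
    cost_per_employee = company.median_salary * multiplier
    return (company.team_min * cost_per_employee,
            company.team_max * cost_per_employee)


# Estimates copied from the table in this section.
companies = [
    Company("DeepMind", 5, 20, 200_000),
    Company("OpenAI", 5, 20, 290_000),
    Company("Anthropic", 10, 40, 360_000),
    Company("Conjecture", 5, 15, 150_000),
]

total_low = sum(contribution_range(c)[0] for c in companies)
total_high = sum(contribution_range(c)[1] for c in companies)

for c in companies:
    low, high = contribution_range(c)
    print(f"{c.name}: ${low / 1e6:.1f}m to ${high / 1e6:.1f}m per year")
print(f"Total: ${total_low / 1e6:.1f}m to ${total_high / 1e6:.1f}m per year")
```

Multiplying range endpoints like this gives a wider total range than a model based on distributions would, because it assumes every company is at its minimum or its maximum at the same time.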

I created a Guesstimate model to calculate the combined contribution of the four companies. The model is also summarized in the following table.

| Company name | Number of employees [1] | AI safety team size (estimated) | Median gross salary (estimated) | Total cost per employee (estimated) | Total funding contribution (estimated) |
| --- | --- | --- | --- | --- | --- |
| DeepMind | 1722 | 5-20 | $200k | $400k | $1.6-15m |
| OpenAI | 1268 | 5-20 | $290k | $600k | $2.9-20m |
| Anthropic | 164 | 10-40 | $360k | $600k | $6.2-32m |
| Conjecture | 21 | 5-15 | $150k | $300k | $1.2-5.5m |
| Total | | | | | $32m |

The Guesstimate model’s estimate is $32 million per year (range: $19 - 54 million).

This figure seems intuitively plausible because both for-profit and non-profit organizations have made significant contributions to AI safety. Non-profits such as Redwood Research, MIRI, ARC, and the Center for AI Safety have made many contributions to AI safety research. But so have for-profit AI companies. For example, OpenAI invented RLHF, Anthropic has made significant contributions to interpretability, DeepMind has done work on goal misgeneralization, and the popular concept of viewing language models as simulators was invented at Conjecture. This estimate is probably conservative because I’d imagine that for-profit companies such as OpenAI spend much more on compute than non-profits like Redwood Research.

Academic research

The academic contribution to AI safety could be large. There are over 200 researchers on Google Scholar that have the ‘AI Safety’ tag on their profile (though many of these people work at companies or non-profits rather than academia).

There are several academic professors and research groups working on AI safety such as:

- David Krueger (University of Cambridge)

- Sam Bowman (NYU)

- Jacob Steinhardt (UC Berkeley)

- The Algorithmic Alignment Group (MIT)

- Foundations of Cooperative AI Lab (CMU)

- The Alignment of Complex Systems Research Group (Charles University, Prague)

Many different countries fund academic research. For the sake of brevity, I’ll focus on academic funding in the US and the UK below.

Academic research in the US

About 40% of basic scientific research is funded by the US federal government (which often takes the form of grants to universities) and the National Science Foundation (NSF) funds about 25% of all federally funded basic research. It has an annual budget of about $8 billion for all research and $1 billion for computer science research. NSF also offers the Graduate Research Fellowship Program (NSF-GRFP) which provides funding for master’s or doctoral study to about 2,000 US students every year (worth a total of ~$98 million). The acceptance rate of the GRFP is about 16%.

NSF has announced that it will spend $10 million on AI safety research in each of 2023 and 2024 ($20 million in total), though $5 million of this has come from Open Philanthropy.

A description of the program:

“While traditional machine learning systems are evaluated pointwise with respect to a fixed test set, such static coverage provides only limited assurance when exposed to unprecedented conditions in high-stakes operating environments … Safety also requires resilience to “unknown unknowns”, which necessitates improved methods for monitoring … In some instances, safety may further require new methods for reverse-engineering, inspecting, and interpreting the internal logic of learned models to identify unexpected behavior that could not be found by black-box testing alone.”

From reading the synopsis, my understanding is that it’s focused on research in AI safety areas such as adversarial robustness, anomaly detection, interpretability, and maybe deceptive alignment.

According to the grant description, grant proposals may be submitted by Institutions of Higher Education (e.g. university research groups) or non-profit, non-academic organizations located in the US (e.g. Redwood Research).

Academic research in the UK

In the UK, around half of UK government expenditure on R&D is funded by UK Research and Innovation (UKRI). Every year UKRI allocates approximately £1.5 billion (~$1.9b) for research grants from its total budget of around £8 billion (~$10b). UKRI is composed of nine research councils. One of them is the Engineering and Physical Sciences Research Council which funds research in areas such as mathematics, physics, chemistry, computer science, and artificial intelligence and has a budget of around £1 billion (~$1.3b) per year.

In 2022, UKRI announced a £117 million (~$150m) grant for UK Centres for Doctoral Training (CDTs) in Artificial Intelligence. The grant allows principal investigators of UK research organizations such as universities to apply for funding for fully-funded PhD studentships, which typically last four years in the UK. The grant is intended to support 10-15 CDTs and each CDT is expected to support at least 50 students, so the grant should support at least 500 students.

The scope of the grant covers AI research in priority areas such as using AI to improve scientific productivity, health, the environment, agriculture, defense, creative industries, and responsible and trustworthy AI.

For the responsible and trustworthy AI section, it says, “The expanding capabilities and range of applications of AI necessitate new research into responsible approaches to AI that are secure, safe, reliable and that operate in a way we understand, trust, and can investigate if they fail.”

The financial contribution of academia to AI safety

This post uses Fermi estimates to estimate that the contribution of academia to AI safety is roughly equivalent to EA’s contribution to AI safety. The argument is that academia is huge and does a lot of AI safety-adjacent research on topics such as transparency, robustness, and safe RL. Therefore, even if this work is strongly discounted because it’s only tangentially related to AGI safety, the discounted contribution is still large.

It’s challenging to estimate the financial contribution of academia to AI safety because the total depends a lot on what you measure. For example, the academic contribution to upskilling seems large given that universities offer degrees in subjects like math and computer science and often have large, expensive campuses and other resources that are useful for research. These factors are relevant because some of EA spending on AI safety involves spending on upskilling and infrastructure.

But I’m going to be more conservative and focus only on actual AI safety research that is done by academics and not funded by EA funds such as Open Phil. For example, someone might do a PhD with a focus on AI safety that is funded by the government.

Although government organizations like NSF in the US spend a lot on research, I’m pretty sure that most of the academic AI safety groups mentioned above are funded by EA funds like Open Phil (e.g. CHAI) so the financial contribution of (non-EA) academic funding to AI safety is not as large as you might think.

I made a Guesstimate model to estimate the financial contribution of academic funding to AI safety. My lower bound estimate is $220k, which includes only EA academics, and my upper bound is $64m, which includes the non-EA contributions to AI safety.

Based on this information, my best-guess conservative estimate of the financial contribution of academia to AI safety research is about $1 million per year. Combined with the NSF grant, the total is $11 million for 2023.

Manifund

Manifund is a crowd-funding platform that often funds projects related to AI safety.

Other individual donors

- In 2015, Elon Musk donated $10 million to the Future of Life Institute.

- In 2021, Vitalik Buterin donated $4 million to MIRI.

- In 2021, an anonymous crypto donor donated $15.6 million to MIRI.

- MIRI has received donations from many other individuals in the past.

Summary of other sources of funding

- Frontier Model Forum AI safety fund: $10m.

- Superalignment fast grants: $10m.

- GWWC: ~$1m per year.

- For-profit companies: ~$32m per year.

- Academic research: ~$1m normally, $11m per year in 2023 because of the NSF grant.

- Individual donors: probably at least $1m per year.

Q&A

This section is for me to attempt to answer some informal questions on AI safety funding.

How do I work on AI safety?

I think there are three main ways to work on AI safety:

- Get a job in a for-profit or non-profit organization. I think the most straightforward way to work on AI safety is to get a job working on AI safety in an organization. OpenAI, DeepMind, Redwood Research, FHI, and MIRI are some examples of organizations working on technical AI safety, and organizations such as Epoch AI, the Center for the Governance of AI, and the Centre for Security and Emerging Technology (CSET) work on non-technical AI safety areas like AI governance. Apart from for-profit and non-profit organizations, there may also be opportunities to work on AI safety in government institutions.

- Independent research. Another path to working on AI safety is to participate in a training program like AI Safety Camp or SERI MATS. Participants do research under a mentor and may apply for grants from funds such as LTFF to continue doing independent research.

- Academic research. Another option is to get a postgraduate degree such as a master’s degree or PhD. Usually, master’s degrees don’t offer stipends but most PhD programs do. Postdoctoral researchers and professors are also paid by universities to do research.

If you are not interested in working directly on AI safety or if changing your career path seems too risky, another option is to earn to give. Giving What We Can makes it easy to create regular donations to funds such as the Long-Term Future Fund (LTFF).

What are my chances of getting funded?

LTFF funded about 19% of proposals in this 2021 funding round and 54% in this 2021 funding round so it seems like about a quarter of proposals are funded by LTFF. Though the (now non-existent) FTX Future Fund seems to have had an acceptance rate of just 4%.

Is AI safety talent or funding constrained?

This question does not have a definite answer because it depends on how you define 'talent'. The higher the talent bar, the more the field is constrained by talent rather than funding, and vice versa.

This 80,000 Hours post argues that AI safety is probably more constrained by talent than funding. The reason is that whereas money can be raised quickly, it’s not as easy to fill positions with talented researchers, and training takes time. Talent in mentorship or leadership positions is probably even more scarce.

The counterargument is that there are a lot of talented people in EA and that increasing funding would provide more opportunities for training resulting in more talented people. Using that argument, a lack of talent could be caused by a lack of training opportunities which could be caused by a lack of funding.

What may seem like a funding problem could really be a talent problem: often donors will only give money if they see promising research projects and see that progress is tractable. And talent problems could really be funding problems: there may not be enough money to fund training programs (e.g. SERI MATS).

Another possibility is that AI safety is leadership-constrained. For example, the SERI MATS 2023 summer program received 460 applicants and only about 60 were accepted (13%) so it doesn't seem like there was a lack of talent and SERI MATS costs less than $1 million so surely there was enough money for it. But there probably aren't that many people who could be SERI MATS mentors or create a program like it so my guess is that good leadership and experienced researchers are scarce. This point has been emphasized by Nonlinear.

Another way to answer the question is to ask whether the field would be better off with one more talented person or the salary of that person (e.g. $100,000). If there were lots of talent and not enough funding, choosing the money would be better because it would make it possible to quickly hire another talented researcher. If there were few talented researchers and lots of funding, the talented researcher would be a better choice because otherwise the money would just not be spent.

My overall view is that AI safety is to some extent constrained by all three: more funding would increase talent via more or larger training programs or higher salaries, more talent would attract more funding via credible and tractable research directions and better leadership would benefit both by creating new organizations that could soak up more talent and funding.

[1] Source: LinkedIn.

jackva @ 2024-03-27T17:32 (+7)

It seems like this number will increase by 50% once FLI (Foundation) fully comes online as a grantmaker (assuming they spend 10%/year of their USD 500M+ gift)

https://www.politico.com/news/2024/03/25/a-665m-crypto-war-chest-roils-ai-safety-fight-00148621

MichaelA @ 2023-07-13T18:03 (+7)

FYI, if any readers want just a list of funding opportunities and to see some that aren't in here, they could check out List of EA funding opportunities.

(But note that that includes some things not relevant to AI safety, and excludes some funding sources from outside the EA community.)

Roman Leventov @ 2023-07-13T17:45 (+7)

AI safety is a field concerned with preventing negative outcomes from AI systems and ensuring that AI is beneficial to humanity.

This is a bad definition of "AI safety" as a field, which muddles the water somewhat. I would say that AI safety is a particular R&D branch (plus we can add here meta and proxy activities for this R&D field, such as AI safety fieldbuilding, education, outreach and marketing among students, grantmaking, and platform development such as what apartresearch.com are doing), of the gamut of activity that strives to "prevent the negative result of civilisational AI transition".

There are also other sorts of activity that strive for that more or less directly, some of which are also R&D (such as governance R&D (cip.org), R&D in cryptography, infosec, and internet decentralisation (trustoverip.org)), and others are not R&D: good old activism and outreach to the general public (StopAI, PauseAI), good old governance (policy development, UK foundational model task force), and various "mitigation" or "differential development" projects and startups, such as Optic, Digital Gaia, Ought, social innovations (I don't know about any good examples as of yet, though), innovations in education and psychological training of people (I don't know about any good examples as of yet). See more details and ideas in this comment.

It's misleading to call this whole gamut of activities "AI safety". It's maybe "AI risk mitigation". By the way, 80000 hours, despite properly calling "Preventing an AI-related catastrophe", also suggest that the only two ways to apply one's efforts to this cause is "technical AI safety research" and "governance research and implementation", which is wrong, as I demonstrated above.

Somebody may ask, isn't technical AI safety research more direct and more effective way to tackle this cause area? I suspect that it might not be the case for people who don't work at AGI labs. That is, I suspect that independent or academic AI safety research might be inefficient enough (at least for most people attempting it) that it would be more effective to apply themselves to various other activities, and "mitigation" or "differential development" projects of the likes that are described above. (I will publish a post that details reasoning behind this suspicion later, but for now this comment has the beginning of it.)

Stephen McAleese @ 2024-05-22T19:47 (+6)

Update: the UK government has announced £8.5 million in AI safety funding for systematic AI safety and these grants will probably be distributed in 2025.

chanakin @ 2023-08-26T10:12 (+4)

Shouldn't the EMA calculation for 3 years be:

EMA = Current_year*2/(3+1) + Last_yearEMA*(1 - 2/(3+1))

EMA = Current_year*0.5 + Last_yearEMA*0.5

And EMA calculation for 2 years be (your google sheet formula):

EMA = Current_year*2/(2+1) + Last_yearEMA*(1 - 2/(2+1))

EMA = Current_year*0.66 + Last_yearEMA*0.33

However, in the google formula you weighted the previous year by 0.66 and the current year by 0.33, meaning that you gave the most recent data less weightings and the EMA is actually 2 years instead of 3?

https://pandas.pydata.org/pandas-docs/stable/reference/api/pandas.DataFrame.ewm.html

https://www.investopedia.com/terms/e/ema.asp

A slight change to use a 3 year-smoothing of a 3 year EMA

EMA(3 years) = Fund(t)*0.571+EMA(t-1)*0.286 + EMA(t-2)*0.143

*0.571+0.286+0.143 = ~ 1

I.e. 3 years EMA should actually be:

| Year | Funding | 3-year EMA |
| --- | --- | --- |
| 2014 | 0 | 0 |
| 2015 | 1186000 | 782760 |
| 2016 | 6563985 | 3971904.795 |
| 2017 | 43222473 | 25927931.53 |
| 2018 | 4280392 | 10427474.64 |
| 2019 | 63826400 | 43134826.36 |
| 2020 | 19626363 | 25034342.48 |
| 2021 | 90760985 | 65152624.55 |
| 2022 | 118717429 | 90001213.56 |
| 2023 | 30873035 | 52685675.37 |

Stephen McAleese @ 2023-08-28T14:36 (+1)

Thanks for pointing this out. I didn't know there was a way to calculate the exponential moving average (EMA) using NumPy.

Previously I was using alpha = 0.33 for weighting the current value. When that value is plugged into the formula alpha = 2 / (N + 1), it gives N = 5, which means I was averaging over roughly the past 5 years.

I've now decided to average over the past 4 years so the new alpha value is 0.4.
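For readers who want to check the relationship between the window length N and alpha, here is a minimal Python sketch. It is an illustration under stated assumptions, not the spreadsheet formula used for the post: the function names `span_to_alpha` and `ema` are made up for this example, the first EMA value is seeded with the first observation (other conventions exist), and the yearly figures are the ones quoted in the table earlier in this thread.

```python
def span_to_alpha(span: float) -> float:
    """Smoothing factor for an N-period EMA: alpha = 2 / (N + 1)."""
    return 2.0 / (span + 1.0)


def ema(values, span):
    """Recursive EMA: ema[t] = alpha * x[t] + (1 - alpha) * ema[t-1].

    Assumption: the series is seeded with the first observation.
    """
    alpha = span_to_alpha(span)
    result = []
    for x in values:
        if not result:
            # Seed with the first data point (illustrative choice).
            result.append(float(x))
        else:
            result.append(alpha * x + (1 - alpha) * result[-1])
    return result


# Yearly funding figures for 2014-2023, copied from the table in this thread.
funding = [0, 1186000, 6563985, 43222473, 4280392,
           63826400, 19626363, 90760985, 118717429, 30873035]

# Show how alpha changes with the window length N.
for n in (2, 3, 4, 5):
    print(f"N={n}: alpha={span_to_alpha(n):.3f}")

# A 4-year window corresponds to alpha = 0.4.
smoothed = ema(funding, span=4)
for year, value in zip(range(2014, 2024), smoothed):
    print(year, round(value))
```

A larger N gives a smaller alpha, so the most recent year counts for less and the series is smoother. The exact values depend on the seeding convention, so they will not necessarily match the spreadsheet.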

Stefan_Schubert @ 2023-07-12T20:11 (+4)

I think "Changes in funding in the AI safety field" was published by the Centre for Effective Altruism.

Stephen McAleese @ 2023-07-12T20:52 (+3)

Thanks for spotting that. I updated the post.

Imma @ 2024-10-15T18:01 (+3)

Thank you for updating the article with 2023 numbers!

(Commenting this as a signal boost for potential readers.)

Question: Don't Founders Pledge and Longview also direct funding to AI safety? There might be more. ECF is small but Longview might advise donors who don't use the fund.

Stephen McAleese @ 2025-01-11T14:38 (+5)

Now the post is updated with 2024 numbers :)

I didn't include Longview Philanthropy because they're a smaller funder and a lot of their funding seems to come from Open Philanthropy. There is a column called "Other" that serves as a catch-all for any funders I left out.

I took a look at Founders Pledge but their donations don't seem that relevant to AI safety to me.

Stephen McAleese @ 2023-10-02T22:02 (+3)

Some information not included in the original post:

- In April 2023, the UK government announced £100m in initial funding for a new AI Safety Taskforce.

- In June 2023, UKRI awarded £31m to the University of Southampton to create a new responsible and trustworthy AI consortium named Responsible AI UK.

Geoffrey Miller @ 2023-07-13T21:06 (+3)

Stephen - thanks for a very helpful and well-researched overview of the funding situation. It seems pretty comprehensive, and will be a useful resource for people considering AI safety research.

I know there's been a schism between the 'AI Safety' field (focused on reducing X risk) and the 'AI ethics' field (focused on reducing prejudice, discrimination, 'misinformation', etc.) But I can imagine some AI ethics research (e.g. on mass unemployment, or lethal autonomous weapon systems, or political deepfakes, or AI bot manipulation of social media) that could feed into AI safety issues, e.g. by addressing developments that could increase the risks of social instability, political assassination, partisan secession, great-power conflict, which could lead to increased X risk.

I imagine it would be much harder to analyze the talent and money devoted to those kinds of issues, and to disentangle them from other kinds of AI ethics research. But I'd be curious whether anyone else has any sense of what proportion of AI ethics work could actually inform our understanding of X risk and X risk amplifiers....

Stephen McAleese @ 2023-10-02T21:39 (+3)

I think work on near-term issues like unemployment, bias, fairness and misinformation is highly valuable and the book The Alignment Problem does a good job of describing a variety of these kinds of risks. However, since these issues are generally more visible and near-term, I expect them to be relatively less neglected than long-term risks such as existential risk. The other factor is importance or impact. I believe the possibility of existential risk greatly outweighs the importance of other possible effects of AI though this view is partially conditional on believing in longtermism and weighting the value of the long-term trajectory of humanity highly.

I do think AI ethics is really important and one kind of research I find interesting is research on what Nick Bostrom calls the value loading problem which is the question of what kind of philosophical framework future AIs should follow. This seems like a crucial problem that will need to be solved eventually. Though my guess is that most AI ethics research is more focused on nearer-term problems.

Gavin Leech wrote an EA Forum post that I recommend, "The academic contribution to AI safety seems large", in which he argues that the contribution of academia to AI safety is large even with a strong discount factor because academia does a lot of research on AI safety-adjacent topics such as transparency, bias and robustness.

I have included some sections on academia in this post though I've mostly focused on EA funds because I'm more confident that they are doing work that is highly important and neglected.

Dawn Drescher @ 2023-07-21T23:13 (+2)

FYI: Our (GoodX’s) project AI Safety Impact Markets is a central place where everyone can publish their funding applications, and AI safety funders can subscribe to them and fund them. We have ~$350k in total donation budget (current updated number) from interested donors.

(If you’re a donor interested in supporting early-stage AI safety projects and you’re interested in this crowdsourced charity evaluator, please sign here.)

Andy E Williams @ 2024-01-10T02:49 (+1)

As explored in this article (https://forum.effectivealtruism.org/posts/tuMzkt4Fx5DPgtvAK/why-solving-existential-risks-related-to-ai-might-require), I share the author's opinion, as I'm sure others do, that current AI safety approaches do not work reliably. This is due to such efforts being misaligned in ways that are often invisible. For this reason, more research is necessary to come up with new approaches. The author expressed the opinion that the free market tends to prioritize progress in AI over safety because existential risk is an "externality", and that consequently, philanthropic funding is useful for filling the funding gap.