A Frontier AI Risk Management Framework: Bridging the Gap Between Current AI Practices and Established Risk Management

By simeon_c @ 2025-03-13T18:29

This is a linkpost to https://arxiv.org/abs/2502.06656

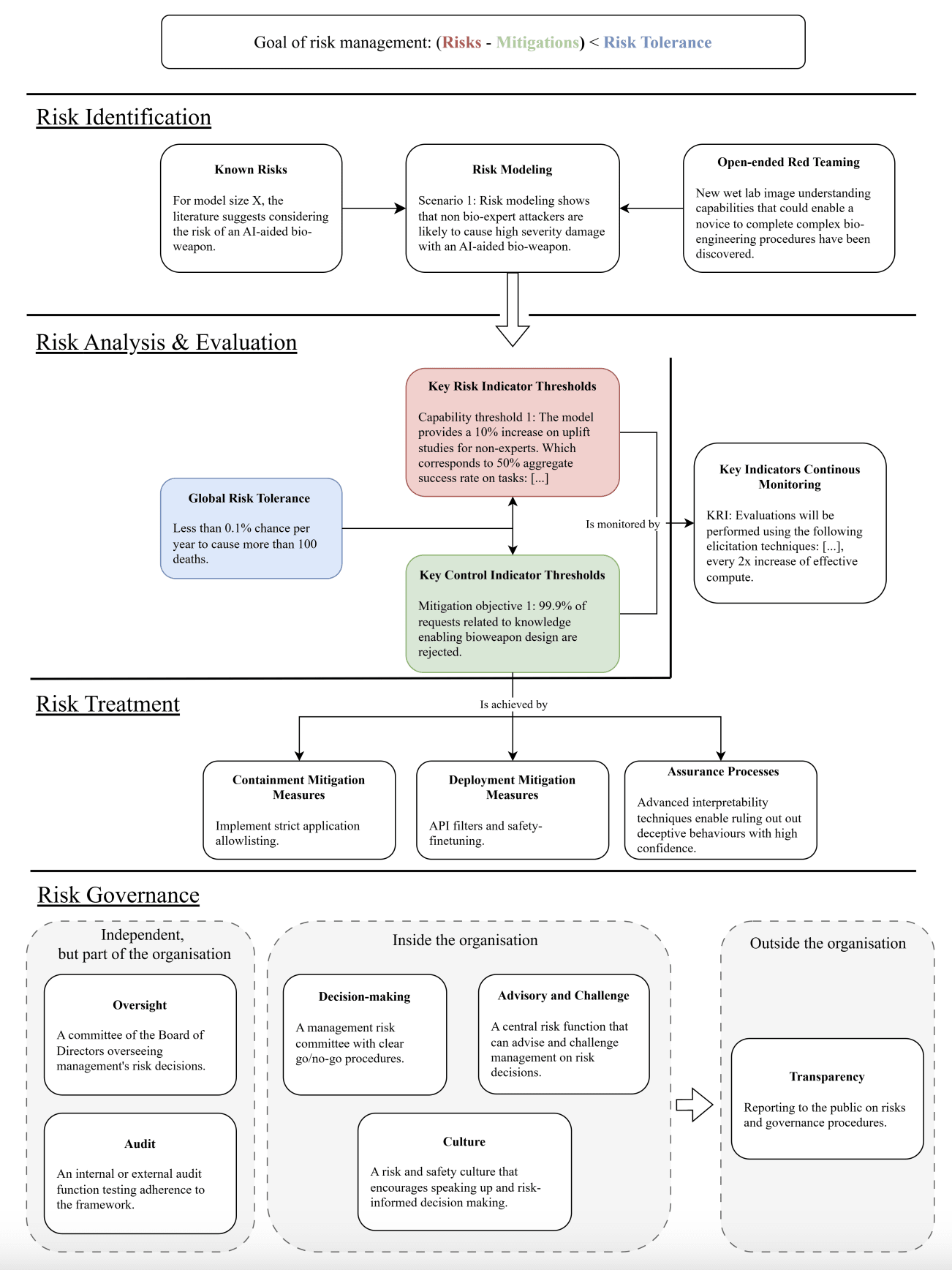

We (SaferAI) propose a risk management framework that we think would, if followed, substantially improve upon existing Frontier Safety Frameworks. It borrows a range of practices and concepts from other areas of risk management to bring conceptual clarity and to generalize some early intuitions that the field of AI safety arrived at independently.

To keep the framework readable for people in the AI field, we have not yet made it fully adequate.[1]

To give you a taste, here are some of the unique features of our risk management framework:

- The emphasis on conducting the majority of risk management before the final training run begins. This lets the work proceed in parallel with capabilities work rather than delaying the release of safe products.

- The emphasis on open-ended red teaming for risk identification, in order to surface possible new risk factors. A canonical example of a hard-to-foresee emerging phenomenon that changes a model's risk profile is chain-of-thought prompting, which, when used appropriately, makes a model substantially stronger and hence riskier across the board. If a new technique like chain-of-thought appears, you ideally want a procedure that catches it before deployment.

- The distinction between the risk management policy, which is meant to be fairly stable, and the risk register, which is used and updated very frequently and catalogs the risks together with all their corresponding information. For each risk in the register, we suggest documenting six categories of information.

- The clear designation of a risk owner, i.e. an individual ultimately accountable for ensuring that a risk is managed appropriately. This is a simple practice, yet one that has gone largely undiscussed.

- The introduction of Key Risk Indicators (KRIs), i.e. proxy measures tracked to monitor and assess various risk sources. Evaluations are one important type of risk indicator, but not the only one; the percentage of API interactions that are jailbroken could be another. This notion makes it possible to generalize much of the thinking that has gone into evaluations: you could set thresholds on any type of KRI, e.g. on the number of post-deployment incidents.

- A proposed multi-layered governance approach that produces checks and balances, inspired by structures in other industries.
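As a rough illustration of how a risk register, a named risk owner, and thresholds on KRIs could fit together, here is a minimal Python sketch. The class and function names, the field layout, and all numbers are hypothetical; the fields are not the six categories proposed in the paper, and the sketch assumes that for every KRI a higher value means higher risk.

```python
from dataclasses import dataclass, field


@dataclass
class KeyRiskIndicator:
    """A proxy measure tracked to monitor a risk source (hypothetical structure)."""
    name: str
    value: float
    threshold: float  # crossing this value should trigger predefined mitigations

    def breached(self) -> bool:
        # Assumption: higher values mean higher risk for every KRI in this sketch.
        return self.value >= self.threshold


@dataclass
class RiskEntry:
    """One row of a risk register; field names are illustrative only."""
    risk: str
    owner: str  # the single individual accountable for managing this risk
    kris: list[KeyRiskIndicator] = field(default_factory=list)

    def breached_kris(self) -> list[str]:
        return [k.name for k in self.kris if k.breached()]


def escalations(register: list[RiskEntry]) -> dict[str, list[str]]:
    """Map each risk owner to the risks whose KRIs have crossed a threshold."""
    alerts: dict[str, list[str]] = {}
    for entry in register:
        if entry.breached_kris():
            alerts.setdefault(entry.owner, []).append(entry.risk)
    return alerts


# Hypothetical register: evaluations are just one kind of KRI among several.
register = [
    RiskEntry(
        risk="misuse via jailbreaks",
        owner="alice",
        kris=[
            KeyRiskIndicator("share of API interactions jailbroken", 0.012, 0.01),
            KeyRiskIndicator("post-deployment incidents per month", 1, 5),
        ],
    ),
    RiskEntry(
        risk="dangerous capability uplift",
        owner="bob",
        kris=[KeyRiskIndicator("evaluation score", 0.35, 0.5)],
    ),
]
print(escalations(register))  # {'alice': ['misuse via jailbreaks']}
```

Grouping breached KRIs by owner mirrors the idea that one individual is accountable for each risk, so every threshold crossing lands with a specific person.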

Below, we summarize the components of the risk management framework:

We welcome feedback here, by DM, or by email.

[1] One example: because deployment conditions and the number of possible instances of a model are significant risk factors, a fully adequate risk management framework would condition mitigations on joint (capabilities; deployment conditions) thresholds rather than on capabilities alone. For simplicity, and so that moving from developers' current risk frameworks remains reasonably feasible, we leave this consideration for future updates of the framework, once the field's practices have matured.
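To make this concrete, here is a minimal Python sketch contrasting a capability-only threshold with a joint threshold over capabilities and deployment conditions. The capability levels, deployment categories, threshold table, and instance cutoff are all hypothetical placeholders chosen for illustration, not values proposed in the paper.

```python
from enum import IntEnum


class Capability(IntEnum):
    """Hypothetical capability levels, e.g. as estimated from evaluations."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2


class Deployment(IntEnum):
    """Hypothetical deployment conditions, ordered by exposure."""
    INTERNAL = 0
    LIMITED_API = 1
    PUBLIC_API = 2


# Hypothetical joint thresholds: for each deployment condition, the lowest
# capability level at which the stronger mitigation set becomes mandatory.
JOINT_THRESHOLDS = {
    Deployment.INTERNAL: Capability.HIGH,
    Deployment.LIMITED_API: Capability.MEDIUM,
    Deployment.PUBLIC_API: Capability.MEDIUM,
}


def capability_only_requires_mitigation(capability: Capability) -> bool:
    """Capability-only threshold: deployment conditions are ignored."""
    return capability >= Capability.HIGH


def joint_requires_mitigation(
    capability: Capability,
    deployment: Deployment,
    max_instances: int,
    instance_cutoff: int = 10_000,
) -> bool:
    """Joint threshold on (capability, deployment conditions)."""
    threshold = JOINT_THRESHOLDS[deployment]
    # Assumption: running more than `instance_cutoff` parallel instances lowers
    # the threshold by one capability level (but never below LOW).
    if max_instances > instance_cutoff:
        threshold = Capability(max(threshold - 1, Capability.LOW))
    return capability >= threshold


# Same MEDIUM-capability model under different deployment conditions:
print(capability_only_requires_mitigation(Capability.MEDIUM))                   # False
print(joint_requires_mitigation(Capability.MEDIUM, Deployment.INTERNAL, 100))    # False
print(joint_requires_mitigation(Capability.MEDIUM, Deployment.PUBLIC_API, 100))  # True
```

The same model can fall below a capability-only threshold yet still require mitigations once its deployment conditions are taken into account.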