Disentangling Some Important Forecasting Concepts and Terms

By Marcel2 @ 2023-06-25T17:31 (+16)

TL;DR / Intro

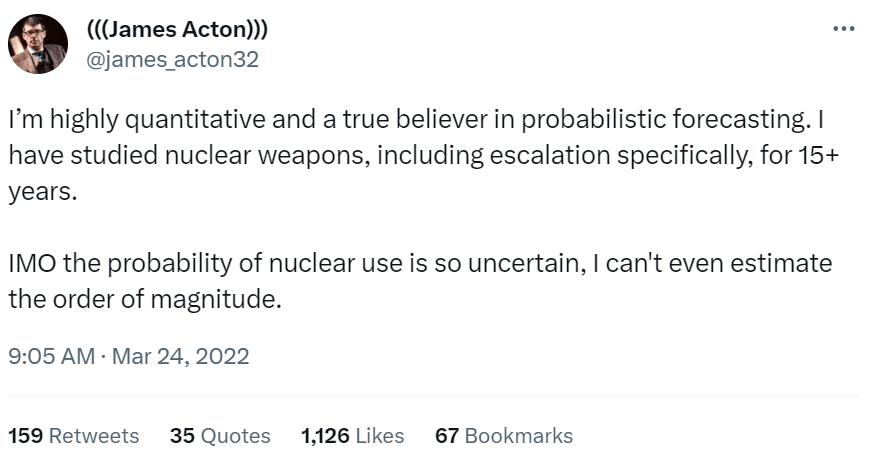

Some people say things like "You can't put numbers on the probability of X; nobody knows the probability / it's unknowable!" This sentiment is facially understandable, but it is ultimately misguided and based on conflating forecasting concepts that I have started calling “best estimates,” “forecast resilience,” and “forecast legibility.” These distinctions are especially important when discussing some existential risks (e.g., AI x-risk). I think the conflation of terms leads to bad analysis and communication, such as using unjustifiably low probability estimates for risks—or implicitly assuming no risk at all! Thus, I recommend disentangling these concepts.

The terms I suggest using are as follows:

- Best estimate (or “Error-minimizing estimate”): “Given my set of information and other analytical constraints, what is the estimate that minimizes my expected error (e.g., Brier score)?”

- Forecast resilience: “How much do I currently expect my 'best estimate' of this event could change prior to a given reference point in time or decision juncture?”

- Forecast legibility: “How much time/effort do I expect a given audience would require to understand the justification behind my estimate, and/or to understand my estimate is made in good faith as opposed to resulting from laziness, incompetence, or deliberate dishonesty?”

I especially welcome feedback on whether this topic is worth further elaboration.

Problems/Motivation

Recently, I was at a party with some EAs. One person (“Bob”) was complaining about the fact that most of the “cost-benefit analysis” supporting AI policy/safety has little empirical evidence and is otherwise too “speculative,” whereas there is clear evidence supporting the efficacy of causes like global health, poverty alleviation, and animal welfare.

I responded: “Sure, it’s true that those kinds of causes have way more empirical evidence supporting their efficacy, but you can still have ‘accurate’ or ‘justified’ forecasts that AI existential risk is >1%, you just can’t be as confident in your estimates and they may not be very ‘credible’ or ‘legible.’”

Bob: “What? Those are the same things; you can’t have an accurate forecast if you aren’t confident in it, and you can’t rely on uncredible forecasts. We just don’t have enough empirical evidence to make reliable, scientific forecasts.”[1]

This is just one of many conversations I have been a part of or witnessed that spiral into confusion or other epistemic failure modes. To be more specific, some of these problems include:

- Believing that it’s not possible to make any estimate or to “put a number on it.” I especially remember thinking that the way I was taught the difference between "risk" (estimates of possible outcomes where "you know the probabilities") and "Knightian uncertainty" (possible outcomes where "you cannot/don't know the probabilities") in an undergrad policy analysis class was just confused with itself (or borderline nonsense in some cases).[2]

- Assuming that uncertainty or the absence of clear evidence about something means we should assume it almost certainly will not happen (such as by not including it in a decision-making model)—what Scott Alexander recently named the “Safe Uncertainty Fallacy.”

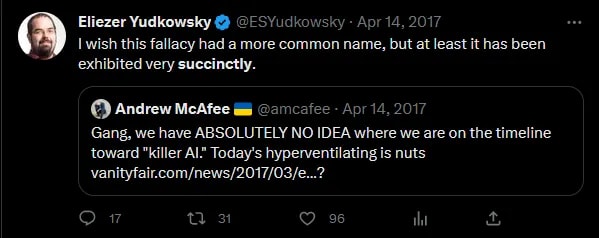

- Failing to understand how “falsifiability” applies to forecasting (especially while applying hypocritical standards[3]). Consider for example Marc Andreesen: “My response is that [the claim that AI could kill us] is non-scientific – What is the testable hypothesis? What would falsify the hypothesis? How do we know when we are getting into a danger zone? These questions go mainly unanswered apart from ‘You can’t prove it won’t happen!’”

- Not knowing how to properly judge the accuracy of people’s forecasts in retrospect, such as claiming someone is “wrong” if their forecast was on the wrong side of 50%. There are some exceptions where many people recognize that this is overly simplistic, such as when the best possible forecast was “obvious” (e.g., someone claims that rolling a 20 on a twenty-sided die is 5%), but it can quickly break down when the numbers become less cut-and-dry.[4]

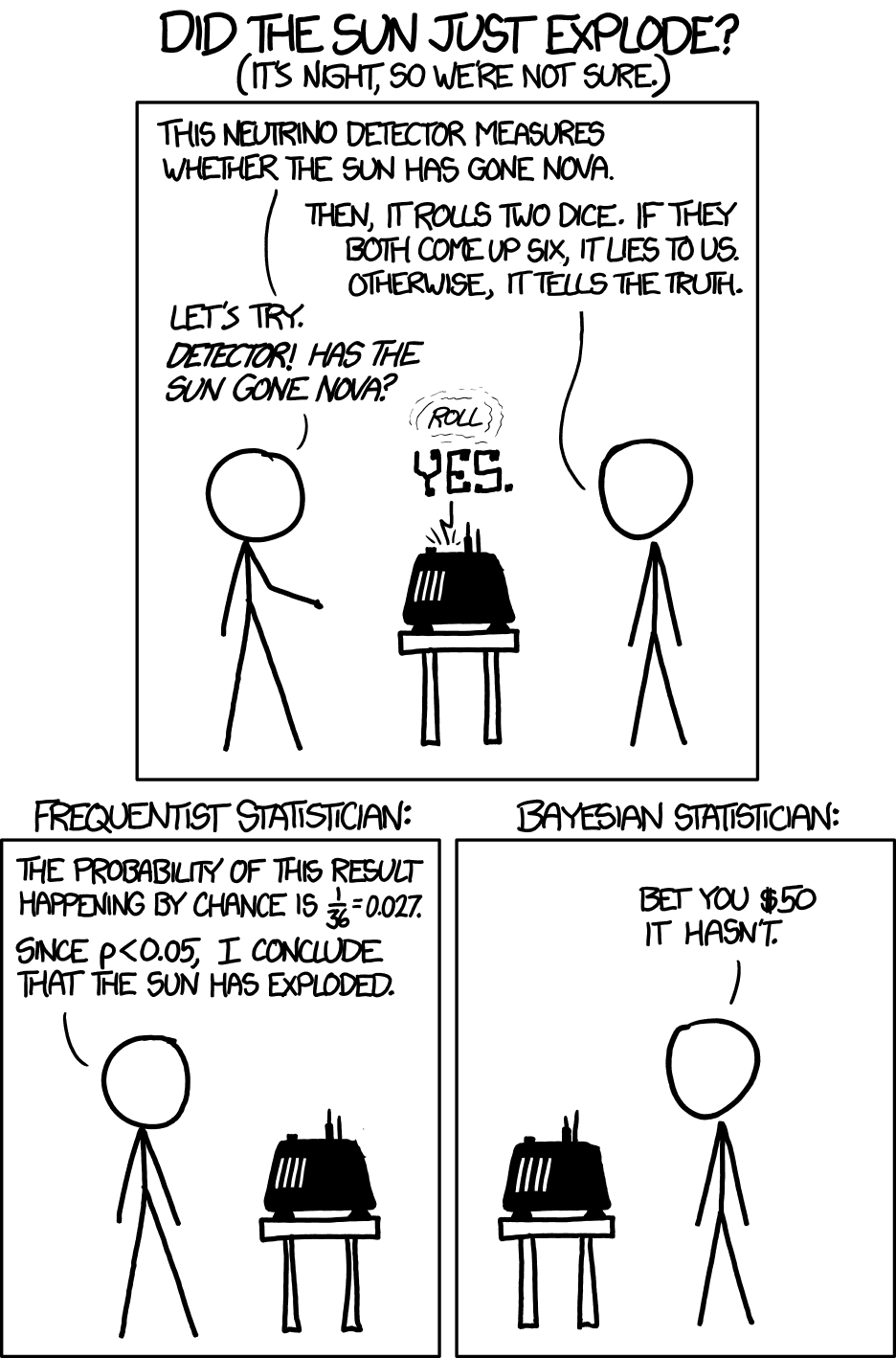

- Only reporting or considering the probability derived from methods with some explicit set of assumptions as “The Estimate,” rather than considering factors that do not neatly fit into “The Model.” I’ve been told that this can be a problem in bureaucratic settings such as the OIRA regulatory review processes.[5] As parodied by xkcd:

- Interpreting a “precise” estimate (e.g., 12.15% vs. 12%) as conveying more “confidence” (resilience) about the estimate than is actually intended.

- Interpreting someone’s probability estimates as implying “this is ‘the estimate’ and I do not expect to substantially change my belief.” (Ben Garfinkel gives some related commentary in a Hear This Idea podcast)

- Interpreting someone’s probability estimate as implying “other people should (obviously) believe this is ‘the correct estimate,’” when in fact the original forecaster might say “I think it’s totally reasonable to have different estimates.”

- Believing that true “randomness” is an inherent part of systems/processes such as coin flips (which are arguably deterministic[6]), rather than treating such randomness as a measure of our own analytical limitations.

It seems there are many reasons why people do these things, and this article will not try to address all of the reasons. However, I think one important and mitigatable factor is that people sometimes conflate forecasting concepts such as “best estimates,” resilience, and legibility. Thus, in this article I try to highlight and disentangle some of these concepts.

Distinguishing probability estimates, resilience, and legibility

I am still very uncertain about how best to define or label the various concepts; I mainly just present the following as suggestions to spark further discussion:

Best estimate (or more precisely, “Error-minimizing estimate”)

Basically, given an actual person’s set of information and analysis, what is the estimate that minimizes that person’s expected error (e.g., Brier score)?

- In a situation of theoretical pure uncertainty (i.e., you have practically-zero information about an event) the best guess will always be 50% in the case of two possible outcomes.[7] If someone says they are deeply uncertain about an event—e.g., “we have no empirical data on this!”—then they cannot then claim that a very low probability is justified, in line with the “Safe Uncertainty Fallacy.”[8]

- In the case of a flip of a fair coin, the best estimate for ordinary humans (as opposed to hypothetical supercomputers) will be roughly 50% heads vs. 50% tails.

- However, suppose that you have a coin which you know is heavily biased (80% vs. 20%[9]), but you have no idea if it's biased towards heads or tails. It seems that some people would instinctively say things like “you can't give a ‘good estimate’ in this situation,” but in fact your error-minimizing estimate is still 50%! It is no worse of a “best estimate” than with a fair coin.[10] I suspect the problem is the conflation of "best estimate" with terms like “resilience” (especially when considering how an audience might misinterpret someone's forecasts as having greater confidence/resilience than is actually intended).

“Resilience” (or “credal resilience”)

“How much do I currently expect my 'best estimate' of this event to change in response to receiving new information or thinking more about the problem prior to a given reference point in time or decision juncture (e.g., the event happening, a grant has to be written, ‘one year from now’)?”[11][12]

- Going back to the biased coin example: if someone is asked to estimate the probability that the coin is biased towards heads, someone’s best estimate prior to flipping the coin would still be 50%. However, if they are asked “what is the likelihood that the tenth flip will be heads,” someone might initially estimate 50% but report very low resilience (in relation to their resilience and estimate after the 9th flip). Another example is in the following footnote.[13]

- A resilience report can be highly dependent on the reference point (e.g., 10 minutes from now vs. 10 months from now), so it may not always make sense to think in terms of “generic” or reference-agnostic resilience reports.

- As illustrated in the following table, it is possible to have a highly “discriminatory”[14] estimate with high resilience and a low-discrimination forecast with high or low resilience. However, it is illogical to have a probability estimate that is close to 0% or 100% yet also believe “there is a decent chance my estimate will substantially change.” For example, you logically can’t say or imply “x-risk from AI is <0.1%, but like, IDK, when GPT-5 comes out there’s like a 10% chance I’ll think the risk is >1%.”

Low resilience | High resilience | |

Estimate w/ low discrimination | 100th flip of a biased coin | Fair coin flip |

Estimate w/ high discrimination | [Not logically possible?[15]] | 100th flip of a biased coin |

- I do not have a formula in mind for what the resilience measure should look like;[16] suggestions are welcome!

- One important failure mode that this distinction seeks to mitigate is that people sometimes interpret probability estimates as “I will indefinitely continue to believe that the probability is X; you cannot persuade me otherwise.” With the resilience report, someone can more clearly emphasize “I currently think the risk is ~40%, but I’m definitely willing to change my mind in response to new information/arguments.”

- A related benefit of this distinction is to highlight that one of the strengths of “scientific”/rigorous methods is that they sometimes help you to have higher resilience in your answers (e.g., statistical significance), and sometimes the absence of rigorous methods should be taken as suspicious. However, rigid scientific methods are often neither necessary nor sufficient for resilience, since formulaic empirical/quantitative methods (e.g,. randomized control trials) are not the only way of gaining knowledge about the world, they are not always applicable, and in some cases they can even give you false confidence (resilience) or mislead you when there are variables that models cannot neatly or “objectively” measure.

Forecast “legibility”[17]

The current definition I prefer is vaguely something like “How much time/effort do I expect a given audience would require to understand the justification behind my estimate (even if they do not necessarily update their own estimates to match), and/or to understand my estimate is made in good-faith as opposed to resulting from laziness, incompetence, or deliberate dishonesty?” (I do not have a more precise operationalization at the moment[18])

- I think this concept is incredibly important for discussions about catastrophic or existential risk from “unusual” sources, such as AI x-risk: I personally would estimate that AI x-risk is >5%, but I also recognize that convincing other people (even hypothetical good-faith critics) is often more time-consuming than inputting numbers on a calculator or writing up a mathematical proof that gets a stamp of peer-review approval.

- There are a few examples that may help illustrate this:

- Suppose you and someone else are forecasting the 2024 presidential election and the other person believes that the Democratic candidate is 70% likely to win, but you show them a Metaculus crowd prediction that claims the Democratic candidate is only 40% likely to win: if they are not familiar with Metaculus or no-money prediction polling (or crowdsourced forecasting more generally), it might take 20 minutes of explanation for them to consider this information relevant/credible. In contrast, appealing to a real-money prediction market might be more legible—it might only require a minute or less of explanation—because it is easier to explain to an average person why “having money on the line is a better signal of accuracy.” Crucially, it could theoretically be the case that prediction markets are actually far worse than polls, but the arguments for using markets are more persuasive/legible.

- For some people, the idea that there could be a bioengineered pandemic or nuclear war that leads to extinction seems much more legible than extinction from AI, especially given the widespread understanding of the power of nuclear weapons and recent experience with a pandemic. Yet, many people who have researched existential risk more deeply have argued that nuclear winter is very unlikely to lead to extinction, while others have found AI x-risk much higher. The existence of movies such as Terminator may help some people connect the dots, but without something that seems like a proof of concept, visceral experiences,

- (A coin flipping "app" that someone might think is rigged unless they can review the source code, compared to the legibility of a regular coin they can test[19])

- Consider the example of Dr. Michael Burry as dramatized in the Big Short: shorting housing bonds supposedly seemed crazy at the time, and Dr. Burry had a hard time justifying his actions to investors, even though he internally felt very justified in his own beliefs.

- Legibility can tend to be much higher for some research methods than others, e.g., airtight experimental methods vs. complex theoretical methods:

- Some experimental methods that can be documented/verified/replicated can be highly legible, especially when they rely on objective, empirical data and explicit calculations. One crucial reason for this is that the average person does not need to replicate an experiment if they can trust that the researchers followed a rigorous and well-defined procedure (e.g., a randomized control trial) that was inspected by peer review, especially if they believe that there are sufficient social or institutional deterrents against fraud or negligence (or checks against total incompetence). Additionally, well-designed experimental methods can unilaterally and semi-automatically test many counterarguments (e.g., "X, Y, Z, and Q were not confounding variables").

- In contrast, for someone to determine that highly subjective/intuition-based classifications (e.g., some qualitative data) were justified can be far more difficult: any given variable coding might invite tens of minutes worth of scrutiny, whereas objective measurements are often easier to evaluate or defend.[20] Importantly, in these cases of subjective coding or many other forms of analysis where there is not an "obviously correct procedure" or set of rules for researchers to follow, it can be harder to detect and thus deter (punish) intentional manipulation of data, given the potential for "honest mistakes." Additionally, with some of these methods the researcher has to do manual whack-a-rebuttal (and people unfamiliar with the arguments may think that their lack of rebuttals is just a result of not knowing the topic rather than a sign that good rebuttals do not exist), which can involve slow and error-prone feedback loops (e.g., people feel confident they are right when they are actually quite wrong, people just disengage from debates when they realize that they are “losing”).

- Still, it seems that forecasts are sometimes far less legible than they could be if the forecaster spent more time to clearly explain why they came to some conclusion (as opposed to the result being purely determined by the nature of the problem or the analytical methodology).[21]

- One important failure mode that this distinction helps to resolve is that people sometimes interpret probability estimates as “you clearly ought to believe that the probability is X; you are just stubborn/ignorant if you don’t agree with me.” In reality, I think it is totally understandable that people who are not familiar with the arguments for AIXR are skeptical at first.

Other Concepts

I do not want to get too deep into some other concepts, but I’ll briefly note two others that I have not fully fleshed out:

- “Ground Truth”: sometimes people talk about things such as coin flips as if they are inherently random events that “have a probability (e.g., 50% heads).” However, setting aside questions about true quantum “randomness,” it seems that many events are (more) deterministic (than people assume), the outcomes are just governed by inaccessible variables/information and incalculably complex relationships/dynamics that are just too complex and/or inaccessible. Once a coin has been flipped, the actual probability that it lands heads is basically either 100% or 0% rather than 50%, it’s just that our limited information and analytical capabilities prevent humans from making better estimates than ~50%.

- “Confidence (?)”: It seems that there should be some term that expresses the concept of “How much weight would I recommend someone attach to my forecast if it is being combined with other people’s forecasts on the same question?” For example, someone who has only thought a short bit about a question might recommend putting far less weight on their own forecasts compared to the forecast of someone who has thought much more about the question or who is a better forecaster. (However, I have considered a few other concepts that “confidence” should perhaps refer to.[22])

Closing Remarks

I think there are many points of confusion that undermine people’s ability to effectively make or correctly interpret forecasts. However, in my experience it seems that one important contributor to these problems is that people sometimes entangle concepts such as best estimates, resilience, and legibility. This post is an exploratory attempt to disentangle these concepts.

Ultimately, I am curious to hear people’s thoughts on:

- Whether they frequently see people (especially inside of EA) conflating these terms;

- Whether these instances of conflation are significant/impactful for discussions and decisions; and

- Whether the distinctions and explanations I provide make sense and help to reduce the frequency/significance of confusion?

- ^

I can’t remember exactly how everything was said, but this is basically how I interpreted it at the time.

- ^

I recognize this may seem like a bold claim, but it may simply be that my professors incorrectly interpreted/taught Knightian Uncertainty, as some of the claims they made about the inability to assign probabilities to things seemed clearly wrong when taken seriously/literally. FWIW, there were multiple situations where I felt my public policy professors did not fully understand what they were teaching. (In one case, I challenged a formula/algorithm for cost-benefit analysis that the professor had been teaching from a textbook "for years," and the professor actually reversed and admitted that the textbook's algorithm was wrong. )

- ^

For example, saying “your claims that the technology could be dangerous are unscientific and unfalsifiable” while at the same time (implicitly) forecasting that the technology will almost certainly be safe.

- ^

Relatedly, see https://www.lesswrong.com/posts/qgm3u5XZLGkKp8W8b/retrospective-forecasting: “More serious is that we don't know what we're talking about when we ask about the probability of an event taking place when said event happened.”

- ^

Basically, someone familiar with the process remarked that in some cases a loosely-predictable cost or benefit might simply not be incorporated into the review because the methodology or assumptions for calculating the effect are not sufficiently scientific or precedented (even if the method is justified).

- ^

For the sake of simplicity, I am setting aside some arguments about quantum mechanics, which is often not what people have in mind when they say that things like coin flips are “random.” Moreover, I have not looked too deeply into this but my guess is that once a coin has begun flipping, randomness at the level of quantum mechanics is not strong enough to change the outcome.

- ^

Or 1/n for n possible outcomes more generally.

- ^

Of course, often when people are doing this they implicitly mean “we have no empirical data [and this seems like a radical idea / the fact that we ‘have no empirical data’ actually is the data itself: this thing has never occurred],” but they fail to make their own reasoning explicit by default.

- ^

Note, what I would call the “ground truth” (a concept I originally discussed in more detail but ended up mostly removing and just briefly discussing in the "other concepts" section to avoid causing confusion and getting entangled in debates over quantum mechanics) of every flip is still either 100% heads or 100% tails, but with sufficient knowledge about the direction of the bias your best estimate would become either 80% tails or heads.

- ^

I think it’s plausible that people would be more likely to avoid such a mistake when the probabilities are cut-and-dry and the context (a coin flip) is familiar compared to other situations where I see people make this mistake.

- ^

Note that there are some potential issues with beliefs about changes in one’s own forecasting quality which this question does not intend to capture. For example, a forecaster should not factor in considerations like “there is a 1% chance that I will suffer a brain injury within the next year that impairs my ability to forecast accurately and will cause me to believe there is a 90% chance that X will happen, whereas I will otherwise think the probability is just 10%”

- ^

Some people might prefer to use terms that focus on expected level of “surprise,” maybe including terms like “forecast entropy”? However, my gut instinct (“low resilience”) tells me that people should be averse to using pedagogical terms where more-natural terms are available.

- ^

To further illustrate, suppose someone lays out 5 red dice and 5 green dice. You and nine other people each take one die at random, and you are told that one of the sets of dice rolls a 6 with probability 50% and the other set is normal, but you are not told which one is which. Suppose you are the last to roll and everyone rolls their dice on a publicly-viewable table, but you have to make one initial forecast and then one final forecast right before you roll. Your initial "best guess" for the probability that you roll a 6 will be [(50% * 1/6) + (50% * 3/6)] = 2/6. However, you can say that this initial estimate is low resilience, because you expect the estimate to change in response to seeing 9 other people roll their dice, which provides you with information about whether your die color is fair or weighted. In contrast, your final estimate will be high resilience. If you were the last of 100 people to go, you could probably say at the outset “I think there is a 50% chance that by the time I must roll my 'best estimate' will be ~1/2, but I also think there is a 50% chance that by that time my 'best estimate' will be ~1/6. So my current best guess of 2/6 is very likely to change.”

- ^

I.e., a probability close to 0 or 1.

- ^

I suppose it might depend on whether your formula for determining "high" vs. "low" resilience focuses on relative changes (1% → 2% is a doubling) as opposed to nominal changes (1% → 2% is only a 1% increase) in probability estimates? If someone assumes "low resilience" includes "I currently think the probability is 0.1%, but there's a 50% chance I will think the probability is actually 0.0000001%", then this cell in the table is not illogical. However I do not recommend such a conception of resilience; in this cell of the table, I had in mind a situation where someone thinks "I think the probability is ~0.1%, but I think there's a ~10% chance that by the end of this year I will think the probability is 10% rather than 0.1%" (which is illogical since it fails to incorporate expected updating based on the low resilience).

As I note in the next bullet, I am open to suggestions on formulas for measuring resilience! It might even help me with a task in my current job, at the Forecasting Research Institute.

- ^

In part because I don’t think it’s necessary to have a proof of concept, in part because I do not have a comparative advantage in the community for creating mathematical formulas, and in part because I’m not particularly optimistic about people being interested in this post.

- ^

An alternative I’ve considered is “credibility,” but I don’t know if this term is too loaded or confusing?

- ^

I do think that the evaluation of legibility probably has to be made in reference to a specific audience, and perhaps should also adopt the (unrealistic) assumption that the audience’s sole motivation is truth-seeking (or forecast error-minimization), rather than other motivations such as “not admitting you were wrong.”

- ^

I relegated this to a footnote upon review because it seemed a bit superfluous compared to the heavily condensed version included in text. The longer explanation is as follows:

Suppose that you have a strange-looking virtual coin-flip app on your phone which you know through personal experience is fair, but you present it to a random person who has never seen it before and you claim that flipping heads is 50% likely in the app. This claim is at least somewhat less legible than the same forecast for one of the real coins in the other person’s pocket: their best estimate for the app may be 50% heads with low-medium resilience, but if the first two flips are tails they might change their forecast (because they put little weight on your promise and have less rigid priors about the fairness of virtual coin flipping apps). However, a particularly paranoid person might think that the first few flips are designed to be normal to mislead people, then on the third flip it always is heads. Thus, someone might be skeptical unless they can access and review the app’s source code.

- ^

Note, however, that objectivity in a measurement (“I can objectively measure X”) does not mean that the metric is necessarily the right thing to focus on (“X is the most important thing to measure”).

- ^

(For example, in the Big Short, Dr. Burry is portrayed as a very ineffective communicator with some of his investors.)

- ^

There’s also a second definition I’ve considered which is basically “how much weight should my accuracy relative to others on this question be given when calculating my weighted average performance across many questions” (perhaps similar to wagering more money on a specific bet?)—but I have not worked this out that well. It also seems like this may just mathematically not make sense unless it is indirectly/inefficiently referring to my current preferred definition of resilience or other terms: if you think you are more likely to be “wrong” about one 80% estimate relative to another, should this not cause you to adjust your forecast towards 50%, unless you’re (inefficiently) trying to say something like “I will likely change my mind”?

niplav @ 2023-06-27T09:39 (+3)

This is a post that rings in my heart, thank you so much. I think people very often conflate these concepts, and I also think we're in a very complex space here (especially if you look at Bayesianism from below and see that it grinds on reality/boundedness pretty hard and produces some awful screeching sounds while applied in the world).

I agree that these concepts you've presented are at least antidotes to common confusions about forecasts, and I have some more things to say.

I feel confused about credal resilience:

The example you state appears correct, but I don't know how that would look like as a mathematical object. Some people have talked about probability distributions on probability distributions, in the case of a binary forecast that would be a function , which is…weird. Do I need to tack on the resilience to the distribution? Do I compute it out of the probability distribution on probability distributions? Perhaps the people talking about imprecise probabilities/infrabayesianism are onto something when they talk about convex sets of probability distributions as the correct objects instead of probability distributions per se.

One can note that AIXR is definitely falsifiable, the hard part is falsifying it and staying alive.

There will be a state of the world confirming or denying the outcome, there's just a correlation between our ability to observe those outcomes and the outcomes themselves.

Knightian uncertainty makes more sense in some restricted scenarios especially related to self-confirming/self-denying predictions. If one can read the brain state of a human and construct their predictions of the environment out of that, then one can construct an environment where the human has Knightian uncertainty by constructing outcomes that the human assigned the smallest probability to. (Even a uniform belief gets fooled: We'll pick one option and make that happen many times in a row, but as soon as our poor subject starts predicting that outcome we shift to the ones less likely in their belief).

It need not be such a fanciful scenario: It could be that my buddy James made a very strong prediction that he will finish cleaning his car by noon, so he is too confident and procrastinates until the bell tolls for him. (Or the other way around, where his high confidence makes him more likely to finish the cleaning early, in that case we'd call it Knightian certainty).

This is a very different case than the one people normally state when talking about Knightian uncertainty, but an (imho) much more defensible one. I agree that the common reasons named for Knightian uncertainty are bad.

Another common complaint I've heard is about forecasts with very wide distributions, a case which evoked especially strong reactions was the Cotra bio-anchors report with (iirc) non-negligible probabilities on 12 orders of magnitude. Some people apparently consider such models worse than useless, harkening back to forecast legibility. Apparently both very wide and very narrow distributions are socially punished, even though having a bad model allows for updating & refinement.

Another point touched on very shortly in the post is on forecast precision. We usually don't report forecasts with six or seven digits of precision, because at that level our forecasts are basically noise. But I believe that some of the common objections are about (perceived) undue precision; someone who reports 7 digits of precision is scammy, so reporting about 2 digits of precision is… fishy. Perhaps. I know there's a Tetlock paper on the value of precision in geopolitical forecasting, but it uses a method of rounding to probabilities instead of odds or log-odds. (Approaches based on noising probabilities and then tracking score development do not work—I'm not sure why, though.). It would be cool to know more about precision in forecasting and how it relates to other dimensions.

I think that also probabilities reported by humans are weird because we do not have the entire space of hypothesis in our mind at once, and instead can shift our probabilities during reflection (without receiving evidence). This can apply as well to different people: If I believe that X has a very good reasoning process based on observations on Xs past reasoning, I might not want to/have to follow Xs entire train of thought before raising my probability of their conclusion.

Sorry about the long comment without any links, I'm currently writing this offline and don't have my text notes file with me. I can supply more links if that sounds interesting/relevant.

Marcel D @ 2023-06-30T17:42 (+3)

Sorry about the delayed reply, I saw this and accidentally removed the notification (and I guess didn't receive an email notification, contrary to my expectations) but forgot to reply. Responding to some of your points/questions:

One can note that AIXR is definitely falsifiable, the hard part is falsifying it and staying alive.

I mostly agree with the sentiment that "if someone predicts AIXR and is right then they may not be alive", although I do now think it's entirely plausible that we could survive long enough during a hypothetical AI takeover to say "ah yeah, we're almost certainly headed for extinction"—it's just too late to do anything about it. The problem is how to define "falsify": if you can't 100% prove anything, you can't 100% falsify anything; can the last person alive say with 100% confidence "yep, we're about to go extinct?" No, but I think most people would say that this outcome basically "falsifies" the claim "there is no AIXR," even prior to the final person being killed.

Knightian uncertainty makes more sense in some restricted scenarios especially related to self-confirming/self-denying predictions.

This is interesting; I had not previously considered the interaction between self-affecting predictions and (Knightian) "uncertainty." I'll have to think more about this, but as you say I do still think Knightian uncertainty (as I was taught it) does not make much sense.

This can apply as well to different people: If I believe that X has a very good reasoning process based on observations on Xs past reasoning, I might not want to/have to follow Xs entire train of thought before raising my probability of their conclusion.

Yes, this is the point I'm trying to get at with forecast legibility, although I'm a bit confused about how it builds on the previous sentence.

Some people have talked about probability distributions on probability distributions, in the case of a binary forecast that would be a function , which is…weird. Do I need to tack on the resilience to the distribution? Do I compute it out of the probability distribution on probability distributions? Perhaps the people talking about imprecise probabilities/infrabayesianism are onto something when they talk about convex sets of probability distributions as the correct objects instead of probability distributions per se.

Unfortunately I'm not sure I understand this paragraph (including the mathematical portion). Thus, I'm not sure how to explain my view of resilience better than what I've already written and the summary illustration: someone who says "my best estimate is currently 50%, but within 30 minutes I think there is a 50% chance that my best estimate will become 75% and a 50% chance that my best estimate becomes 25%" has a less-resilient belief compared to someone who says "my best estimate is currently 50%, and I do not think that will change within 30 minutes." I don't know how to calculate/quantify the level of resilience between the two, but we can obviously see there is a difference.