Nuclear Expert Comment on Samotsvety Nuclear Risk Forecast

By Jhrosenberg @ 2022-03-26T09:22 (+135)

The below comment was written by J. Peter Scoblic and edited by Josh Rosenberg. Peter is a senior fellow with the International Security Program at New America, where he researches strategic foresight, prediction, and the future of nuclear weapons. He has also served as deputy staff director of the Senate Committee on Foreign Relations, where he worked on approval of the New START agreement, and he is the author of U.S. vs. Them, an intellectual history of conservatism and nuclear strategy.

I (Josh Rosenberg) am working with Phil Tetlock's research team on improving forecasting methods and practice, including by trying to facilitate increased dialogue between subject-matter experts and generalist forecasters. This post is an example of what Daniel Kahneman has termed “adversarial collaboration.” Accordingly, despite some epistemic reluctance, Peter estimated the odds of nuclear war in an attempt to pinpoint areas of disagreement. We shared this forecast with the Samotsvety team in advance of posting to check for major errors, and we intend these comments in a supportive and collaborative spirit. Any remaining errors are our own.

In the next couple of weeks, our research team plans to post an invitation to a new Hybrid Forecasting-Persuasion Tournament that we hope will lead to further collaboration like the below on other existential risk-related topics. If you'd like to express interest in participating in that tournament, please add your email here.

Summary

On March 10th, the Samotsvety forecasting team published a Forum post assessing the risk that a London resident would be killed in a nuclear strike “in light of the war in Ukraine and fears of nuclear escalation.” It recommended against evacuating major cities because the risk of nuclear death was extremely low. However, the reasoning behind the forecast was questionable.

The following is intended as a constructive critique to improve the forecast quality of nuclear risk, heighten policymaker responsiveness to probabilistic predictions, and ultimately reduce the danger of nuclear war. By implication, it makes the case that greater subject matter expertise can benefit generalist forecasters—even well-calibrated ones.

The key takeaways are:

- Because a nuclear war has never been fought,[1] it is difficult to construct a baseline forecast from which to adjust in light of Russia’s invasion of Ukraine. Nuclear forecasting warrants greater epistemic humility than other subjects.

- The baseline forecast of U.S.-Russian nuclear conflict (absent Russia’s invasion of Ukraine) is unstated, but it appears to rest, in part, on the debatable assertion that strategic stability between the United States and Russia has improved since the end of the Cold War, even though many developments suggest the opposite.

- The relatively low probability of London being struck with a nuclear weapon during a NATO/Russia nuclear war rests on the assumption that nuclear escalation can likely be controlled—one of the most persistent and highly contested subjects in nuclear strategy. In other words, the aggregate forecast takes a highly confident position on an open question.

- The forecast does not seem to account for U.S. and Russian doctrine concerning the targeting and employment of nuclear weapons.

- The forecast is overly optimistic about the ability to evacuate a major city.

- Taking all of the above into account, my forecast of nuclear risk in the current environment is an order of magnitude higher. But experts view nuclear forecasting with suspicion because the lack of historical analogy generates a high degree of uncertainty.[2]

- Although providing a common measure of risk may be useful to the EA community, couching nuclear risk in terms of micromorts or lost hours of productivity sanitizes the threat and therefore may (perversely) disincentivize policymakers from taking potentially valuable preventive action. More broadly, such framing may reduce the perceived value of probabilistic forecasting to policymakers, handicapping a practice that many already view with suspicion.

Problems With Component Forecasts

“Will there be a NATO/Russia nuclear exchange killing at least one person in the next month?” (Samotsvety aggregate forecast: 0.067%/month [0.8%/year])[3]

Asking this question as the first step in assessing the risk of dying in a nuclear attack on London is problematic for two reasons.

- Like the ultimate question, it has no historical analogue—the degree of Knightian uncertainty is similar. Forecasting a nuclear exchange between NATO and Russia is as challenging as forecasting a Russian nuclear attack on London, meaning the question is not the most constructive first step in decomposition. Conventional fighting between NATO and Russia would almost certainly (95%) precede a nuclear exchange between NATO and Russia, and there are historical examples of conventional conflict spreading. So, a more sensible starting point might be to estimate the probability of a conventional military confrontation. (See below.)[4]

- Explicitly identifying conventional war as a likely precursor to nuclear war is important because it demonstrates that there are intermediate steps between the current state of affairs and the outbreak of nuclear war—developments that would provide concerned London residents the opportunity to evacuate. (Note: Some members of the forecasting team did begin their forecasts with this step, but those forecasts are not provided.)

Nevertheless, the nuclear question is certainly germane. The problem is that the rationale for the forecast is not clear, except that the forecasters seem to be adjusting downward from Luisa Rodriguez’s analysis, which puts the probability of a U.S./Russian nuclear exchange at 0.38%/year, because in the post-Cold War era “new de-escalation methods have been implemented and lessons have been learnt from close calls.”[5] There are three problems with these statements:

- It is not clear what “new de-escalation methods” the authors are referring to. The phrase is hyperlinked to a Wikipedia page on the so-called Hotline, which was established in 1963 (i.e., not “post-Cold War”) in the wake of the Cuban Missile Crisis, when it became clear that the fate of the world could depend upon the decisions of just two individuals: the leaders of the United States and the Soviet Union. Direct, personal communication proved crucial to the resolution of that crisis, and the Hotline was established to facilitate such contact in future crises. The Hotline is important but not new. There are, however, three other possibilities:

  - The authors could be referring to the 1994 Clinton-Yeltsin pledge to “de-target” U.S. and Russian ICBMs and SLBMs such that if one were accidentally launched, it would land in the ocean. But these weapons can be retargeted in seconds (the measure is largely symbolic), and de-targeting them is not “de-escalatory.”

  - It is also true that the United States no longer keeps its bomber fleet on airborne alert, but that status can be reversed in minutes and is also not de-escalatory. (In fact, one could argue that the need to put the bomber fleet on alert during a crisis would be escalatory.)

  - Finally, and most significantly, President Obama’s 2010 Nuclear Posture Review called for “de-MIRVing” the U.S. ICBM force, meaning each land-based missile carries only a single warhead. The ICBM force is considered the most destabilizing leg of the nuclear triad because it is a highly attractive target. We deploy ICBMs in no small part to serve as a “nuclear sponge,” forcing the adversary to expend warheads to neutralize them. De-MIRVing makes ICBMs somewhat less attractive targets, and, in a crisis, it reduces the pressure on the U.S. president to use them quickly or risk losing a higher fraction of the 1,550 deployed strategic warheads allowed by New START. Unfortunately, Russia has not taken the same step, which reduces the stabilizing impact of the move.

- It is not clear what lessons we have learned from various “close calls.” Nuclear scholars continue to uncover new details about the Cuban missile crisis many decades later (see, for example, this recent book), and it is not clear even now that we fully understand what happened and why, let alone that we have incorporated those lessons into policymaking. Important documents on one close call, the so-called Able Archer incident, were declassified just last year. It is also important to recognize that it is not enough that “lessons have been learnt.” They must have been learned by the two men with sole launch authority over the U.S. and Russian nuclear arsenals: Joe Biden and Vladimir Putin, respectively. In other words, even if historians and political scientists have learned from nuclear close calls, we cannot be certain that those lessons have been internalized by the two people who matter most in this situation.[6]

- The nuclear balance between the United States and Russia may not be more stable than during the Cold War. Stability has eroded in recent years because of the collapse of most U.S.-Russian arms control agreements (e.g., the ABM Treaty, the Open Skies Treaty, and the INF Treaty) that cultivated the transparency and predictability that are prerequisites of stability. In addition, the United States continues to develop and deploy missile defenses that Russia believes support a first-strike capability, and both the United States and Russia are modernizing their nuclear forces, including by developing new, low-yield weapons. Some experts consider such weapons destabilizing because they are seen as more “usable,” i.e., less likely to provoke full-scale, apocalyptic retaliation. (More on this below.) Ernest Moniz and Sam Nunn have gone so far as to write, “Not since the 1962 Cuban missile crisis has the risk of a U.S.-Russian confrontation involving the use of nuclear weapons been as high as it is today.” And that was before Russia invaded Ukraine.

Calculating the baseline probability of a nuclear war between the United States and Russia—i.e., the probability pre-Ukraine invasion and absent conventional confrontation—is difficult because no one has ever fought a nuclear war. Nuclear war is not analogous to conventional war, so it is difficult to form even rough comparison classes that would provide a base rate. It is a unique situation that approaches true Knightian uncertainty, muddying attempts to characterize it in terms of probabilistic risk. That said, for the sake of argument, where the forecasters adjusted downward from Luisa Rodriguez’s estimate of 0.38%/year, I would adjust upward to 0.65%/year given deterioration in U.S.-Russian strategic stability.

If a NATO/Russian conventional war breaks out over Ukraine, the probability of nuclear war skyrockets. An informal canvassing of nuclear experts yielded an unsurprisingly wide range of probabilities. Blending those forecasts with my own judgment, I would give a (heavily caveated) estimate of 25%.[7] Please note this is not the current probability of NATO/Russia nuclear war. It is the estimated probability of a conventional NATO/Russian conflict turning nuclear. Given that there has been no such conflict, and given that the United States is taking steps to avoid direct engagement with Russian forces, the current probability of nuclear war is much lower. To calculate how much lower, we need to add a step to the forecast (“Will Russia/NATO conventional warfare kill at least 100 people in the next year?”) and condition the first nuclear forecast on it.

The probability of a Russia/NATO conventional war given Russia’s invasion of Ukraine is (marginally) easier to estimate than the probability of nuclear escalation because there is a long history of the two sides approaching but largely avoiding direct conflict, both during the Cold War and after. (A 2018 battle in Syria, for instance, shows how a fight between U.S. troops and Russian mercenaries did not escalate.) That enables forecasters to construct a rough comparison class from analogies, imperfect though they are. A thorough historical analysis is beyond the scope of this post, but it would offer some reason for optimism (such a war has never occurred) tempered by pessimism (the potential for miscalculation or accident remains). So, the probability is non-zero, but an argument can be made that it is low (<10%), especially given that President Biden has explicitly said that U.S. forces will not intervene in Ukraine. (“Our forces are not and will not be engaged in the conflict with Russia in Ukraine.”) For the sake of calculating the nuclear risk to London at this moment (i.e., producing a forecast that does not assume a conventional war between NATO and Russia is already underway), let’s take the midpoint of that range and say the probability is 5%. But, again, caveat forecaster.
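The conditioning step described above can be sketched in a few lines of Python. This is an illustrative sketch, not part of either forecast: the function name `p_nuclear_per_year` is hypothetical, and the only inputs taken from the post are the 25% conditional escalation estimate and the "<10%" range (with 5% as the working value) for a conventional war. It shows how sensitive the implied annual nuclear risk is to the conventional-war estimate.

```python
# Hypothetical helper illustrating the two-step decomposition:
#   P(nuclear exchange) = P(conventional war) * P(nuclear | conventional war)
# It assumes, as the post does, that conventional fighting would almost
# always precede a NATO/Russia nuclear exchange.

P_NUCLEAR_GIVEN_CONVENTIONAL = 0.25  # heavily caveated estimate from the post


def p_nuclear_per_year(p_conventional: float,
                       p_nuclear_given_conventional: float = P_NUCLEAR_GIVEN_CONVENTIONAL) -> float:
    """Annual probability of a NATO/Russia nuclear exchange via conventional war."""
    if not 0.0 <= p_conventional <= 1.0:
        raise ValueError("p_conventional must be a probability")
    return p_conventional * p_nuclear_given_conventional


if __name__ == "__main__":
    # 5% is the working value; 1% and 10% bracket the "<10%" range in the text.
    for p_conv in (0.01, 0.05, 0.10):
        print(f"P(conventional war) = {p_conv:.0%} -> "
              f"P(nuclear exchange) = {p_nuclear_per_year(p_conv):.2%}/year")
```

With the 5% working value the product is 1.25% per year. Note that this simple product leaves out the small share of paths (about 5%, per the post's own estimate) in which nuclear use is not preceded by conventional war, so under the post's assumptions it slightly understates the risk.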

“Conditional on Russia/NATO nuclear warfare killing at least one person, London is hit with a nuclear weapon.” (Samotsvety aggregate forecast: 18%.)

This is the most problematic of the component forecasts because it implies a highly confident answer to one of the most significant and persistent questions in nuclear strategy: whether escalation can be controlled once nuclear weapons have been used. Is it possible to wage a “merely” tactical nuclear war, or will tactical war inevitably lead to a strategic nuclear exchange in which the homelands of nuclear-armed states are targeted? Would we “rationally” climb an escalatory ladder, pausing at each rung to evaluate pros and cons of proceeding, or would we race to the top in an attempt to gain advantage? Is the metaphorical ladder of escalation really just a slippery slope to Armageddon?

This debate stretches to the beginning of the Cold War, and there is little data upon which to base an opinion, let alone a fine-grained forecast. Scholars and practitioners have argued both sides at great length—often triggered by proposals to develop more low-yield battlefield nuclear weapons. Those arguing against such weapons doubt the ability to control escalation, while those arguing for such weapons believe not only that escalation is controllable but that the ability to escalate incrementally reduces the chance of war starting in the first place—i.e., that more “usable” nuclear weapons actually enhance deterrence. (For sample arguments against limited nuclear war, see here, here, and here. For examples in favor, see here, here, and here. For a good examination of multiple scenarios, see here.)

Nevertheless, the aggregate forecast takes a strong position. If there is an 18% chance that a Russia/NATO nuclear war would escalate to the point where London is hit with a nuclear weapon, then there is an 82% chance that London would not be hit with a nuclear weapon. Even though Russia and NATO have already exchanged nuclear fire, the forecasters believe that nuclear escalation is probably controllable. This is a belief that requires elucidation.

Unfortunately, the rationale the forecasters offer is not convincing:

[W]e consider it less likely that the crisis would escalate to targeting massive numbers of civilians, and in each escalation step, there may be avenues for de-escalation. In addition, targeting London would invite stronger retaliation than meddling in Europe, particularly since the UK, unlike countries in Northern Europe, is a nuclear state.

This suggests confusion about nuclear targeting policies and employment doctrine. Although details are highly classified, we can glean important information from open sources and declassified materials. A few points:

- U.S. nuclear doctrine does not explicitly target civilians. However, OPLAN 8010 indicates that command and control facilities, military leadership, and political leadership are all fair targets. Similarly, Russian nuclear doctrine calls for strikes on “administrative and political centers.” Obviously, many such targets are located in the capitals of the nuclear states, including London, making the city a strategic target.

- That said, it is not certain that Russia’s use of tactical nuclear weapons on the Continent would lead to a strike on London. But referring to the use of such weapons on the battlefield as “meddling in Europe” is poor phrasing. Here, “meddling” means the use of nuclear weapons against NATO countries, and per Article V an attack on one is an attack on all—an obligation that risks escalation.

- If the Russians decided to strike the United Kingdom but wanted to attempt to control escalation, their initial targets might be strictly military, which makes central London a somewhat arbitrary choice of target. A better question might thus be: “How many people will die [in London?] if there is a nuclear strike against AWE/Aldermaston [nuclear R&D facility] or Coulport/Faslane [naval base housing the U.K.’s nuclear submarines]?”

- If Russia did target London, it is unlikely to be with a “tactical” nuclear weapon as the forecast implies because London is 2,000 kilometers from Russia. It may be more useful to ask which delivery system Russia would use—likely sea-launched cruise missiles (e.g., Kalibr) or possibly air-launched ballistic missiles (e.g., Kinzhal), whose warheads probably have a yield of several hundred kilotons. Regardless, a nuclear strike on London would probably (>70%) result in a retaliatory U.K. strike on Moscow. At which point—assuming command and control in Russia is still functioning—London would then be hit with more and/or higher-yield weapons. (In the early 1970s, the U.K. Ministry of Defence estimated that, in a nuclear exchange with the Soviet Union, London would be struck by two to four 5-megaton weapons.)

- Point being: Sure, a counterforce doctrine does not explicitly target civilians. But can a counterforce nuclear strike really not target civilians?

What does this all mean for the forecast? Given the degree of disagreement and the paucity of data, it would not be unreasonable to assign this question 50/50 odds. On the one hand, states have every reason to de-escalate as rapidly as possible. On the other, the game-theoretic logic of nuclear war often favors defection over cooperation even though both sides will incur unacceptable losses. Ostensibly rational choices can lead to apocalypse. Plus, people are human. At best they are boundedly rational, the time pressure of a nuclear exchange favors System 1 thinking (intuition over reasoning), and research on anger and decision-making suggests that attacks prompt a desire to punish the aggressor. It is also worth noting that practitioners are not sanguine about this question. In 2018, General John Hyten, then head of U.S. Strategic Command, said this about escalation control after the annual Global Thunder exercise: “It ends the same way every time. It does. It ends bad. And bad meaning it ends with global nuclear war.”

So, I’d bump the 18% to the other side of “maybe” and then some: 65%.

Conditional on the above, informed and unbiased actors are not able to escape beforehand. (Samotsvety aggregate forecast: 25%.)

This question is confusing, but it seems to ask: if there were a nuclear attack on London, what is the probability that residents would have been able to escape beforehand? In other words, would there have been signals clearly portending a strike such that residents could have avoided death had they been paying attention to developments? However, the question of whether Russia attacks London is conditioned on there already being a nuclear war underway between NATO and Russia on the Continent. That would signal that we are already high on the escalatory ladder, and therefore the question is, once again, a function of the degree to which you believe that escalation is controllable: could a nuclear war be geographically contained? By forecasting that it is very likely (75%) that informed and unbiased actors would be able to evacuate successfully, the forecasters are again assuming that the ability to control escalation is high. As I noted above, this is an open question. I am pessimistic about controllability and so would put the odds of not being able to escape on the other side of “maybe”: 65%. But we also need to account for sheer logistical difficulty, because I expect many people would try to evacuate London if there were a nuclear exchange in Europe. That makes me less optimistic still: call it 70%.

Conditional on a nuclear bomb reaching central London, what proportion of people die? (Samotsvety aggregate forecast: 78%)

If Russia employed a single “tactical” nuclear weapon against central London—which is how I initially interpreted the forecast—I doubt that 78% of people would die. But there are a lot of variables at play: time of day; environmental conditions; surface detonation vs. airburst; and (obviously) the yield of the warhead. If we take the population of Central London as 1.6 million people, a bomb would have to kill ~1.25 million people to reach 78%. Using Alex Wellerstein’s NukeMap, I had to airburst a 1-megaton weapon to get that many prompt fatalities, and I am not aware of a “tactical” weapon with that yield.

A strategic Russian strike on London could certainly kill in excess of a million people, not least because death would stem not only from direct nuclear effects but also from radioactive fallout and from the breakdown of civil order in the wake of a strike. The electromagnetic pulse (EMP) from airbursts would fry electronics, roads would be clogged with evacuees and stalled vehicles, and hospitals (assuming they are left standing) would be unable to handle the number of victims and the nature of their injuries. (There are only ~60 certified burn centers in the United States, for example, and few have experience with radiation burns.) People would also die in the months and years following the attacks because of injuries sustained or radiation absorbed. (This is one of the reasons that counting fatalities from Hiroshima and Nagasaki is so difficult.)

I’d say the proportion of people who would die immediately from a tactical nuclear weapon striking Central London would be about 8%. If we allow that a nuclear attack on London could involve the use of one or more strategic weapons, then the 78% forecast is reasonable. (As noted above, I initially interpreted Samotsvety to be saying that they were modeling the effect of a single tactical nuclear strike on London, but in subsequent communication, they clarified that they intended to model a broader range of attack outcomes that includes the use of one or more strategic weapons, with the average proportion of residents killed being 78%. Regardless, Samotsvety and I arrived at the same number.)
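The population arithmetic in this subsection can be checked with a short sketch. It takes the post's figure of 1.6 million people for Central London and converts the two proportions discussed (78% and 8%) into headcounts. The function name `fatalities` is hypothetical, and the sketch models no weapons effects; it is no substitute for a tool like NukeMap.

```python
# Convert a proportion of Central London's population into a headcount,
# using the 1.6 million population figure assumed in the post.

CENTRAL_LONDON_POPULATION = 1_600_000


def fatalities(proportion_killed: float,
               population: int = CENTRAL_LONDON_POPULATION) -> int:
    """Number of deaths implied by a given proportion killed, rounded to a whole person."""
    return round(proportion_killed * population)


if __name__ == "__main__":
    # 78%: the shared forecast; 8%: the estimate for a single tactical weapon.
    for label, share in (("78% (aggregate)", 0.78), ("8% (single tactical weapon)", 0.08)):
        print(f"{label}: about {fatalities(share):,} deaths")
```

The 78% figure corresponds to roughly 1.25 million deaths, which is why a single weapon would need a yield far above typical "tactical" yields to reach it.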

Comparison Between Forecasts in This Post

| Question | Samotsvety aggregate (without min/max) | Peter Scoblic's forecast (approximate) |
| --- | --- | --- |
| Probability of Russia/NATO conventional war with 100+ troops killed in action in the next year | - | 5% |
| Conditional on Russia/NATO conventional war, probability of nuclear warfare | - | 25% |
| Will there be a Russia/NATO nuclear exchange killing at least one person in the next year? | 0.80% | 1.25% (implied) |
| Will there be a Russia/NATO nuclear exchange killing at least one person in the next month? | 0.067% | 0.10% (implied, assuming constant risk per month)[8] |
| Conditional on Russia/NATO nuclear exchange killing at least one person, London is hit with a nuclear weapon | 18% | 65% |
| Conditional on the above, informed and unbiased actors are not able to escape beforehand | 25% | 70% |
| Conditional on a nuclear bomb reaching central London, what proportion of people die? | 78% | 78% |
| What is the (unconditional) chance of death from nukes in the next month due to staying in London? | 0.00241% | 0.037% (see caveat in footnote)[8] |
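The bottom row of the table is the product of the chain of conditional probabilities above it. The sketch below multiplies the chain for both columns. It is illustrative only: the function names are hypothetical, and the annual-to-monthly conversion assumes a constant monthly hazard, the simplification flagged in footnote 8.

```python
# Sketch: multiply the chain of conditional probabilities in the comparison
# table. The constant-monthly-hazard conversion is the simplifying
# assumption flagged in footnote 8; function names are hypothetical.


def annual_to_monthly(p_year: float) -> float:
    """Per-month probability implied by an annual one, assuming a constant hazard."""
    return 1.0 - (1.0 - p_year) ** (1.0 / 12.0)


def monthly_death_risk(p_nuclear_month: float, p_london_hit: float,
                       p_no_escape: float, p_die: float) -> float:
    """Unconditional one-month risk of dying in a nuclear strike by staying in London."""
    return p_nuclear_month * p_london_hit * p_no_escape * p_die


if __name__ == "__main__":
    forecasts = {
        # name: (annual nuclear risk, London hit, no escape, proportion killed)
        "Samotsvety aggregate": (0.008, 0.18, 0.25, 0.78),
        "Scoblic": (0.05 * 0.25, 0.65, 0.70, 0.78),
    }
    for name, (p_year, hit, no_escape, die) in forecasts.items():
        p_month = annual_to_monthly(p_year)
        risk = monthly_death_risk(p_month, hit, no_escape, die)
        print(f"{name}: nuclear {p_month:.3%}/month, death risk {risk:.4%}/month")
```

Under these assumptions the Scoblic column comes to about 0.037% per month, matching the table. Multiplying Samotsvety's aggregate components gives roughly 0.0023%, slightly below the published 0.00241%; the gap presumably reflects rounding or how the aggregate was formed, which this sketch does not model. Because every step is multiplicative, the ratio between the two bottom-line numbers is simply the product of the per-step ratios.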

Communicating Risk

I recognize that estimates of nuclear risk can usefully guide personal decisions (in this case whether to evacuate London) and that such estimates also enable comparison of different risks and can therefore guide funding decisions. That said, attempts to quantify nuclear risk and optimize courses of nuclear action have often obscured more than they have clarified. (See, for example, the RAND Corporation’s early efforts and the U.S. government’s efforts more broadly.) Yes, our understanding of what makes for well-calibrated forecasters is much more sophisticated than it once was, and I believe that the quantification of nuclear risk can help us identify and close off the most likely avenues to catastrophe. But quantifying such danger in “micromorts” or, worse, characterizing it in terms of hours of productivity lost trivializes the apocalypse, equating it with the cost of taking a brief nap every day.

Again, I recognize the benefits of thinking in such terms—especially for comparative purposes—so I sympathize with the desire to quantify risk such that foundations and individuals can allocate their philanthropic dollars most efficiently and effectively. But efforts to do so make three assumptions:

- We can make well-calibrated forecasts about low-probability events that lack comparison classes, particularly over the long term. We lack gold-standard metrics for assessing the quality of long-run forecasts, and I question whether we can make well-calibrated forecasts about low-probability, unique events even in the short run. (Again: no one has ever fought a nuclear war, so what’s the base rate? This lack of data demands a high degree of epistemic humility and respect for uncertainty.) More optimistically, this problem might be solvable, or at least significantly ameliorated, by combining well-calibrated forecasters with subject-matter experts.

- We can quantify the consequences of the event in question (in this case a nuclear strike). Absent that, we cannot calculate the expected value of a potential future. But measuring the cost of a nuclear war in terms of lost productivity suggests a narrow view of human flourishing. Consider the 9/11 attacks, which claimed a “mere” 3,000 lives. To measure the lost productivity of those individuals and amortize it over their remaining years of life is to miss the point. The attacks fundamentally changed American society and politics, to say nothing of the geopolitical landscape. We can count dollars of GDP lost or casualties sustained, but these are tip-of-the-iceberg measures of the full extent of the damage that 9/11 wrought. Imagine, then, the loss of even a single city and the ramifications that would have. The point is that not all that counts can be counted. Which is not to say that we should not measure that which we can. We should. But it is crucial to understand not only the potential but also the limits of quantification.

- Decision-makers are rational, in the sense that they will pick the optimal course of action in any given situation. That assumption presupposes perfect information, unlimited processing power, and a clear set of preferences (as well as a particular cognitive style). These conditions do not accurately characterize government policymaking.

Irrespective of the limits of this particular nuclear forecast, I can virtually guarantee (95%) that policymakers will not take such forecasts seriously if couched in bloodless terms, especially if the reasoning is questionable. One theme of a recent working group on forecasting hosted by Perry World House at the University of Pennsylvania was that the chief forecasting challenge at this point lies not in generating well-calibrated forecasts, but in convincing people (especially policymakers) that they should factor such forecasts into their decisions. As one participant put it, “Well-calibrated forecasts are just table stakes”—i.e., they get you in the game, but they won’t help you win it. (For reasons why, see, for example, this piece.) To be fair, the Samotsvety post was not written to sway policymakers, but given the potential value of forecasting to decision-making, it is important to ask how we can most persuasively convey well-calibrated estimates of the future.

Yet, if policymakers did take such forecasts seriously, a new problem could arise. Because policymakers do not necessarily think in terms of expected value, they might conclude from a 0.067% risk that nuclear dangers do not merit the attention they in fact deserve. The expected cost of low-probability, high-consequence events could be astronomical, but as Herman Kahn wrote, “Despite the fact that nuclear weapons have already been used twice, and the nuclear sword rattled many times, one can argue that for all practical purposes nuclear war is still (and hopefully will remain) so far from our experience that it is difficult to reason from, or illustrate arguments by, analogies from history.” I would suggest, then, that efforts to calculate nuclear risk must be accompanied by efforts to imagine nuclear risk. Only by considering the danger of nuclear war in qualitative terms can we truly appreciate its danger in quantitative terms.

[1] Obviously, the United States used nuclear weapons against Japan in 1945 to horrific effect, but nuclear weapons have never been used against a nuclear-armed state. This lack of historical analogy increases the degree of future uncertainty, though one could argue that we can draw lessons about the willingness to use nuclear weapons from the bombings of Hiroshima and Nagasaki, and from the 75 years of non-use that followed.

[2] See, for example, this comment from James Acton: “I’m highly quantitative and a true believer in probabilistic forecasting. I have studied nuclear weapons, including escalation specifically, for 15+ years. IMO the probability of nuclear use is so uncertain, I can't even estimate the order of magnitude.”

[3] I use the “Aggregate without min/max” numbers throughout this document because the authors write, “We use the aggregate with min/max removed as our all-things-considered forecast for now given the extremity of outliers.”

[4] One could go back even further and ask what the probability is conditioned on certain policy proposals now being debated, such as NATO imposition of a no-fly zone to allow for humanitarian relief, or on certain eventualities, such as the fallout from Russia’s use of a tactical nuclear weapon in Ukraine affecting NATO countries (a risk that Jens Stoltenberg has implicitly raised).

[5] One thing confused me: the forecasters say this estimate is too pessimistic, but their baseline forecast in annual terms is 0.8%, which is higher. I assume this reflects a baseline that has already been adjusted upward due to Russia’s invasion of Ukraine, i.e., their baseline would be lower than 0.38% had they made this forecast at the same time Luisa Rodriguez made hers.

[6] A March 23 New York Times article noted that many NATO leaders “have never had to think about nuclear deterrence or the effects of the detonation of battlefield nuclear weapons, designed to be less powerful than those that destroyed Hiroshima.”

[7] Note: The probability of nuclear war would be affected by the severity of conflict between NATO and Russia. This 25% forecast assumes a war with at least 100 troops killed in action within a year.

[8] Given that conventional conflict would likely precede nuclear conflict, the risk per month is not constant. It is lower now than it will be if NATO and Russian forces begin fighting. This has implications for a decision to evacuate major cities: one might reasonably wait for the (possible) outbreak of conventional hostilities. For the sake of simplicity, however, I have assumed constant risk over the next year.

NunoSempere @ 2022-03-26T15:54 (+33)

Hey, thanks for the review. Perhaps unsurprisingly, we thought you were stronger when talking about the players in the field and the tools they have at their disposal, but weaker when talking about how this cashes out in terms of probabilities, particularly around parameters you consider to have "Knightian uncertainty".

We liked your overview of post-Soviet developments and thought it made a good case for pessimism. We would also have liked an expert overview of reasons for optimism (which an ensemble of experts could provide). For instance, the New START treaty did get extended.

(We will discuss your analysis at the next Samotsvety meeting, and update our estimates accordingly.)

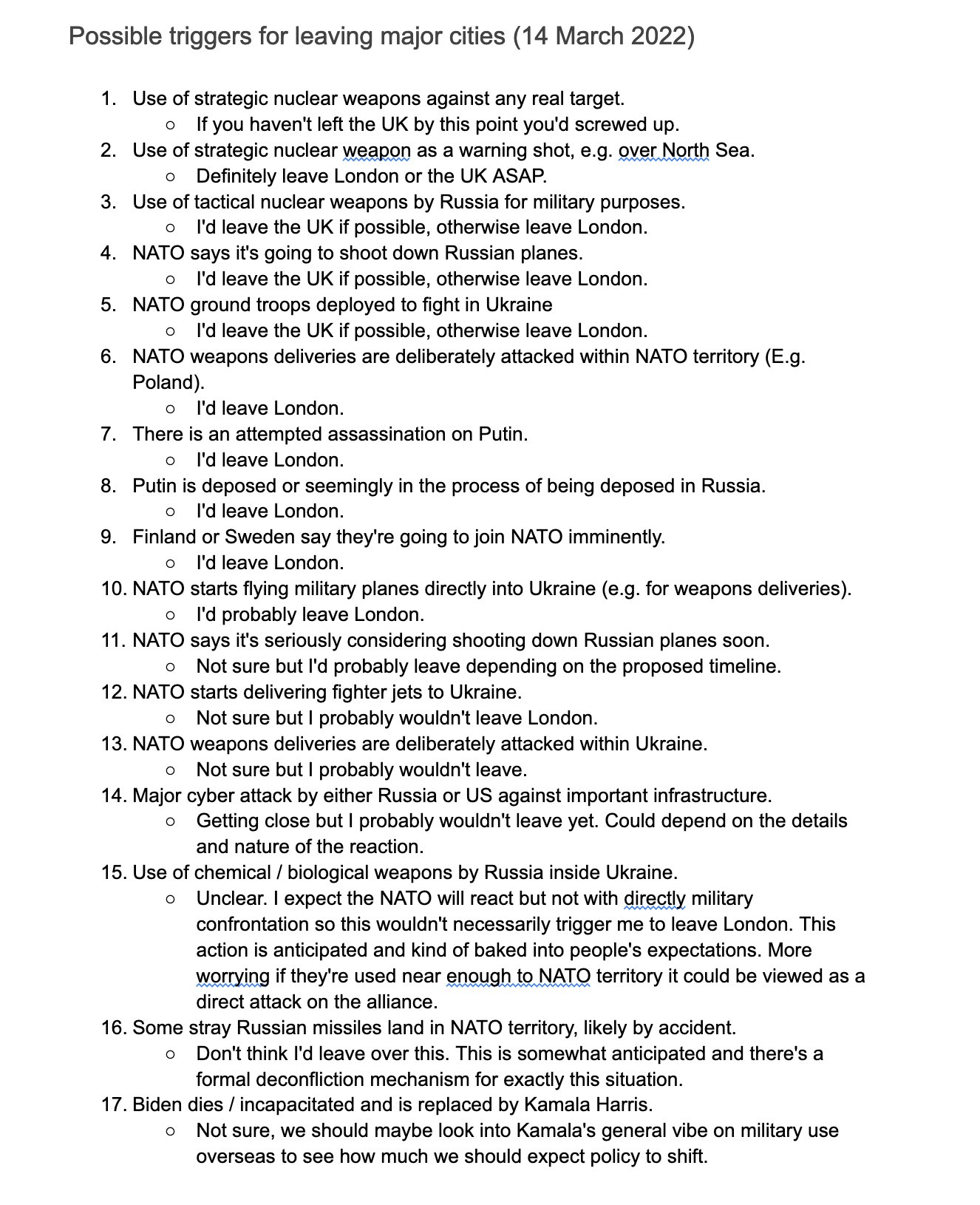

First, as mentioned in private communication, we think that you are underestimating the chance that the "informed and unbiased actors" in our audience would be able to avoid a nuclear strike. For instance, here are Robert Wiblin's triggers for leaving London. Arguably, readers are best positioned to estimate/adjust this number for themselves (based on e.g. how readily they responded to the covid crisis).

This single factor explains the first ~3x difference in our forecasts. After adjusting for that, Peter’s estimate falls in the range of forecasts in our sample. This is reassuring!

Second, we think that taking Luisa Rodríguez's 0.38%/year at face value is inadvisable. She aggregates forecaster and expert probabilities using the simple average (arithmetic mean), but best practice favors the geometric mean of odds instead. If one does so, we arrive at a 0.13% baseline risk. From this starting point, we update upwards on the current situation; if you update from a lower baseline, your estimate should be lower.
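The sketch below illustrates why the aggregation method matters when forecasts span orders of magnitude. The four forecasts are hypothetical, not Rodríguez's inputs. The geometric mean of odds averages in log-odds space, so a single high forecast cannot dominate the way it does under the arithmetic mean.

```python
import math


def arithmetic_mean(probs):
    return sum(probs) / len(probs)


def geometric_mean_of_odds(probs):
    """Average the log-odds, then convert back to a probability."""
    log_odds = [math.log(p / (1 - p)) for p in probs]
    mean_log_odds = sum(log_odds) / len(log_odds)
    odds = math.exp(mean_log_odds)
    return odds / (1 + odds)


# Hypothetical annual forecasts spanning an order of magnitude (not Rodríguez's data).
forecasts = [0.001, 0.002, 0.01, 0.02]
print(f"arithmetic mean:        {arithmetic_mean(forecasts):.3%}")
print(f"geometric mean of odds: {geometric_mean_of_odds(forecasts):.3%}")
```

With these inputs, the arithmetic mean is pulled toward the two largest forecasts, while the geometric mean of odds lands closer to the middle of the log-scale spread.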

This could account for the 1.5x to 3x difference in our forecast of any nuclear casualties. Making this adjustment would bring your forecast even closer to our aggregate.

These two factors together bridge the order of magnitude between our forecasts. But overall, we shouldn’t be that surprised that subject matter experts were more pessimistic/uncertain than forecasters. For instance, in Luisa Rodríguez’s sample, superforecasters were likewise around 10x lower than experts.

—Misha and Nuño

NunoSempere @ 2022-03-26T16:11 (+12)

On Laplace's law and Knightian uncertainty

Calculating the baseline probability of a nuclear war between the United States and Russia—i.e., the probability pre-Ukraine invasion and absent conventional confrontation—is difficult because no one has ever fought a nuclear war. Nuclear war is not analogous to conventional war, so it is difficult to form even rough comparison classes that would provide a base rate. It is a unique situation that approaches true Knightian uncertainty, muddying attempts to characterize it in terms of probabilistic risk. That said, for the sake of argument, where the forecasters adjusted downward from Luisa Rodriguez’s estimate of 0.38%/year, I would adjust upward to 0.65%/year given deterioration in U.S.-Russian strategic stability.

The probability of nuclear war doesn't seem like it would fall under the heading of Knightian uncertainty. For instance, we can start out by applying Laplace's law of succession, or a bit more complicated multi-step methods.
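A minimal sketch of the first step, Laplace's law of succession, follows. We assume each year of the nuclear age counts as one "trial" with no US-Russia/USSR nuclear war so far. The choice of starting year (1945, or 1949 for the first Soviet test) is our own illustrative assumption, and the output shows that the result depends on it.

```python
def laplace_rule_of_succession(successes: int, trials: int) -> float:
    """Posterior mean under a uniform Beta(1, 1) prior after `successes` in `trials`."""
    return (successes + 1) / (trials + 2)


# Hypothetical framing: each year is one trial, and no year so far has seen the event.
for first_year in (1945, 1949):
    years = 2022 - first_year
    p = laplace_rule_of_succession(0, years)
    print(f"from {first_year}: {years} event-free years -> {p:.2%}/year")
```

This is only a naive starting point. Multi-step methods would refine it by conditioning on intermediate events.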

On the right ignorance prior

...“Conditional on Russia/NATO nuclear warfare killing at least one person, London is hit with a nuclear weapon.” (Samotsvety aggregate forecast: 18%.)

This is the most problematic of the component forecasts because it implies a highly confident answer to one of the most significant and persistent questions in nuclear strategy: whether escalation can be controlled once nuclear weapons have been used. Is it possible to wage a “merely” tactical nuclear war, or will tactical war inevitably lead to a strategic nuclear exchange in which the homelands of nuclear-armed states are targeted? Would we “rationally” climb an escalatory ladder, pausing at each rung to evaluate pros and cons of proceeding, or would we race to the top in an attempt to gain advantage? Is the metaphorical ladder of escalation really just a slippery slope to Armageddon?...

...Given the degree of disagreement and the paucity of data, it would not be unreasonable to assign this question 50/50 odds...

...Regardless, a nuclear strike on London would probably (>70%) result in a retaliatory U.K. strike on Moscow...

We don't think the answer is necessarily "highly confident". Just because an issue is complex doesn't mean our best guess should be near 50%. In particular, if there are many options, e.g., a one-off accident, containment in Ukraine, containment in Europe, and further escalation to e.g., London, and we are maximally uncertain about which one would happen, we should assign equal probability to all. So in this case with four possible outcomes, total uncertainty would cash out to 25% for each of them.

But we are not maximally uncertain. In particular, as the author points out, a nuclear strike on London would likely be followed by a nuclear strike on Moscow. Actors on both sides would want to avoid such an outcome, which brings our probability lower. Still, the fact that the reviewer's estimate is higher pushes ours up a bit, since the logic of nuclear war is something we don't have deep experience with.

Jhrosenberg @ 2022-05-06T07:17 (+1)

(Below written by Peter in collaboration with Josh.)

It sounds like I have a somewhat different view of Knightian uncertainty, which is fine—I’m not sure that it substantially affects what we’re trying to accomplish. I’ll simply say that, to the extent that Knight saw uncertainty as signifying the absence of “statistics of past experience,” nuclear war strikes me as pretty close to a definitional example. I think we make the forecasting challenge easier by breaking the problem into pieces, moving us closer to risk in Knight’s sense. That’s one reason I wanted to add conventional conflict between NATO and Russia as an explicit condition: NATO has a long history of confronting Russia and has, by and large, managed to avoid direct combat.

By contrast, the extremely limited history of nuclear war does not enable us to validate any particular model of the risk. I fear that the assumptions behind the models you cite may not work out well in practice and would like to see how they perform in a variety of as-similar-as-possible real world forecasts. That said, I am open to these being useful ways to model the risk. Are you aware of attempts to validate these types of methods as applied to forecasting rare events?

On the ignorance prior:

I agree that not all complex, debatable issues imply probabilities close to 50-50. However, your forecast will be sensitive to how you define the universe of "possible outcomes" that you see as roughly equally likely from an ignorance prior. Why not define the possible outcomes as: one-off accident, containment on one battlefield in Ukraine, containment in one region in Ukraine, containment in Ukraine, containment in Ukraine and immediately surrounding countries, etc.? Defining the ignorance prior universe in this way could stack the deck in favor of containment and lead to a very low probability of large-scale nuclear war. How can we adjudicate what a naive, unbiased description of the universe of outcomes would be?
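The partition sensitivity raised here can be made concrete. The sketch below assigns a uniform ignorance prior over two outcome lists. The coarse list is the four-outcome example from the earlier comment. The fine list splits containment as suggested above, and its last two entries are our hypothetical completion of the "etc."

```python
from fractions import Fraction


def ignorance_prior(outcomes):
    """Uniform prior: each listed outcome gets 1 / len(outcomes)."""
    return {outcome: Fraction(1, len(outcomes)) for outcome in outcomes}


coarse = [
    "one-off accident",
    "containment in Ukraine",
    "containment in Europe",
    "escalation to London",
]
# Finer split of the containment cases; the last two entries are a
# hypothetical completion of the "etc." in the text.
fine = [
    "one-off accident",
    "containment on one battlefield in Ukraine",
    "containment in one region in Ukraine",
    "containment in Ukraine",
    "containment in Ukraine and immediately surrounding countries",
    "containment in Europe",
    "escalation to London",
]

for name, partition in (("coarse", coarse), ("fine", fine)):
    p = ignorance_prior(partition)["escalation to London"]
    print(f"{name}: P(escalation to London) = {p} ~ {float(p):.1%}")
```

The same "maximal uncertainty" yields 1/4 or 1/7 for the London outcome, depending only on how finely the containment cases are enumerated.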

As I noted, my view of the landscape is different: it seems to me that there is a strong chance of uncontrollable escalation if there is direct nuclear war between Russia and NATO. I agree that neither side wants to fight a nuclear war—if they did, we’d have had one already!—but neither side wants its weapons destroyed on the ground either. That creates a strong incentive to launch first, especially if one believes the other side is preparing to attack. In fact, even absent that condition, launching first is rational if you believe it is possible to “win” a nuclear war, in which case you want to pursue a damage-limitation strategy. If you believe there is a meaningful difference between 50 million dead and 100 million dead, then it makes sense to reduce casualties by (a) taking out as many of the enemy’s weapons as possible; (b) employing missile defenses to reduce the impact of whatever retaliatory strike the enemy manages; and (c) building up civil defenses (fallout shelters, etc.) so that more people survive whatever warheads get through (a) and (b).

In a sense, “the logic of nuclear war” is oxymoronic because a prisoner’s dilemma-type dynamic governs the situation such that, even though cooperation (no war) is the best outcome, both sides are driven to defect (war). By taking actions that seem to be in our self-interest, we ensure what we might euphemistically call a suboptimal outcome. When I talk about “strategic stability,” I am referring to a dynamic in which the incentives to launch first or to launch on warning have been reduced, such that choosing cooperation makes more sense. New START (and START before it) attempts to boost strategic stability by establishing nuclear parity (at least with respect to strategic weapons). But its influence has been undercut by other developments that are destabilizing.
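A stylized two-player game can illustrate the prisoner's dilemma dynamic. The ordinal payoffs and the `temptation` parameter are our own assumptions, a textbook simplification rather than a model of actual nuclear strategy. When striking first against a restrained opponent pays best, striking dominates even though mutual restraint beats mutual striking. Lowering that payoff, loosely analogous to the reduced first-strike incentives described above, removes the dominance.

```python
MOVES = ("hold", "strike")


def payoff_table(temptation: int) -> dict:
    """Ordinal (row, column) payoffs for a symmetric game.

    Mutual restraint = 3, mutual strike = 1, being struck first = 0,
    striking first against a restrained opponent = `temptation`.
    """
    return {
        ("hold", "hold"): (3, 3),
        ("hold", "strike"): (0, temptation),
        ("strike", "hold"): (temptation, 0),
        ("strike", "strike"): (1, 1),
    }


def best_response(table: dict, opponent_move: str) -> str:
    """Row player's payoff-maximizing move against a fixed opponent move."""
    return max(MOVES, key=lambda move: table[(move, opponent_move)][0])


def is_dominant(table: dict, move: str) -> bool:
    """True if `move` is the row player's best response to every opponent move."""
    return all(best_response(table, other) == move for other in MOVES)


for temptation in (4, 2):
    table = payoff_table(temptation)
    print(
        f"temptation={temptation}: strike dominant? {is_dominant(table, 'strike')}; "
        f"best reply to 'hold' is {best_response(table, 'hold')!r}"
    )
```

Because the game is symmetric, the row player's analysis applies to both sides. With `temptation=4` it is a prisoner's dilemma. With `temptation=2` mutual restraint becomes a stable outcome, although the fear of being struck first still makes striking a best reply to striking.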

Thank you again for the thoughtful comments, and I’m happy to engage further if that would be clarifying or helpful to future forecasting efforts.

NunoSempere @ 2022-05-06T17:06 (+2)

Thanks for the detailed answers!

Jhrosenberg @ 2022-05-06T07:14 (+1)

Thanks for the reply and the thoughtful analysis, Misha and Nuño, and please accept our apologies for the delayed response. The below was written by Peter in collaboration with Josh.

First, regarding the Rodriguez estimate, I take your point about the geometric mean rather than arithmetic mean and that would move my probability of risk of nuclear war down a bit — thanks for pointing that out. To be honest, I had not dug into the details of the Rodriguez estimate and was attempting to remove your downward adjustment from it due to "new de-escalation methods" since I was not convinced by that point. To give a better independent estimate on this I'd need to dig into the original analysis and do some further thinking of my own. I'm curious: How much of an adjustment were you making based on the "new de-escalation methods" point?

Regarding some of the other points:

- On "informed and unbiased actors": I agree that if someone were following Rob Wiblin's triggers, they'd have a much higher probability of escape. However, I find the construction of the precise forecasting question somewhat confusing and, from context, had interpreted it to mean that you were considering the probability that informed and unbiased actors would be able to escape after Russia/NATO nuclear warfare had begun but before London had been hit, which made me pessimistic because that seems like a fairly late trigger for escape. But it seems that this was not your intention. If you're assuming something closer to Wiblin's triggers before Russia/NATO nuclear warfare begins, I'd expect a greater chance of escape, as you do. I would still have questions about how able and willing such people would be to stay out of London for months at a time (as some of Wiblin's triggers may imply) and what fraction of readers would truly follow that protocol, though. As you say, perhaps it makes most sense for people to judge this for themselves, but describing the expected behavior in more detail may help craft a better forecasting question.

- On reasons for optimism from "post-Soviet developments": I am curious which developments, besides the New START extension, you would like to get others' views on. From my perspective, the New START extension was the bare minimum needed to maintain strategic predictability/transparency. It is important, but (and I say this as someone who worked closely on Senate approval of the treaty) it did not fundamentally change the nuclear balance or dramatically improve stability beyond the original START. Yes, it cut the number of deployed strategic warheads, which is significant, but 1,550 on each side is still plenty to end civilization as we know it (even if employed against only counterforce targets). The key benefit of New START was that it updated the verification provisions of the original START treaty, which was signed before the dissolution of the Soviet Union, so I question whether it should be considered a "post-Soviet development" for the purposes of adjusting forecasts relative to that era. START (and its verification provisions) had been allowed to lapse in December 2009, so the ratification of New START was crucial, but the value of its extension needs to be weighed against the host of negative developments that I briefly alluded to in my response.

abukeki @ 2022-03-26T15:49 (+14)

Just one thought: there are so many ways for a nuclear war to start accidentally or through miscalculation (without necessarily a conventional war) that it just seems absurd to see estimates like 0.1%. A big part of it is even just the inscrutable failure rate of complex early warning systems composed of software, ground/space based sensors and communications infrastructure. False alarms are much likelier to be acted on during times of high tension, as I pointed out. E.g., during the 1995 Norwegian rocket incident, Yeltsin, despite the detection of a research rocket with a flight profile similar to a Trident SLBM's and having his nuclear briefcase opened, decided to wait a bit until it became clear it was arcing away from Russia. But that was during a time of hugs and kisses with the West. Had it been Putin, in a paranoid state of mind, after months of hearing Western leaders call him an evil new Hitler who must be stopped, are you absolutely sure he wouldn't have decided to launch a little earlier?

Another thing I'm uncomfortable with is the tunnel-vision focus on US-Russia (despite current events). As I also pointed out, China joining the other two in adopting launch on early warning raises the accident risk by at least 50%, and probably by more at first, given their inexperience and the initial kinks in the system that haven't yet been worked out.[1]

As a last note, cities are likelier to be hit than many think. For one, the claim that civilians are not explicitly targeted isn't even true. Plus, the idea doesn't pass the smell test. If some country destroyed all of the US's cities in a massive attack, do you think the US would hit only counterforce targets in retaliation? No, at a minimum the US would hit countervalue targets in response to a countervalue attack. Even if there were no explicit "policy" planning for that (impossible), a leader would simply order that type of targeting in that eventuality anyway.

[1] Plus other factors, such as a big portion of the risk from launch on warning coming from misinterpreting incoming conventional missiles and launching in response to them, and the arguably higher risk of China being involved in a conventional war with the US/allies that includes military strikes on targets in the Chinese mainland.

NunoSempere @ 2022-03-26T16:24 (+15)

A big part of it is even just the inscrutable failure rate of complex early warning systems composed of software, ground/space based sensors and communications infrastructure

This list of nuclear close calls has 16 elements. Laplace's law of succession would give a close call a (0+1)/(16+2) ≈ 5.6% chance of resulting in a nuclear detonation. Again per Laplace's law, with 16 close calls in (2022-1953) years, this would imply a (16+1)/(2022-1953+2) = 24% chance of seeing a close call each year. Combining the two forecasts gives us 24% of 5.6%, which is about 1.3%/year. But earlier warning systems were less robust to accidents and weather phenomena, and by now there is already a history of false alarms caused by non-threatening events. That is why a baseline an order of magnitude lower for a typical year, as in the superforecaster estimates that Luisa Rodríguez references, doesn't seem crazy.
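The two-step Laplace calculation can be reproduced with exact fractions, which makes each intermediate step easy to check. The sketch takes the count of 16 close calls and the 1953–2022 window from the comment. Multiplying the two steps assumes they are independent, which is our simplification.

```python
from fractions import Fraction


def laplace(successes: int, trials: int) -> Fraction:
    """Laplace's rule of succession, kept as an exact fraction."""
    return Fraction(successes + 1, trials + 2)


close_calls = 16     # entries in the cited list of close calls
years = 2022 - 1953  # observation window used in the comment

# Step 1: zero detonations observed across the close calls.
p_detonation_given_close_call = laplace(0, close_calls)
# Step 2: close calls treated as "successes" across `years` yearly trials.
p_close_call_per_year = laplace(close_calls, years)
# Step 3: chain the two steps (assumes independence).
p_detonation_per_year = p_close_call_per_year * p_detonation_given_close_call

for label, p in [
    ("P(detonation | close call)", p_detonation_given_close_call),
    ("P(close call in a year)", p_close_call_per_year),
    ("P(detonation in a year)", p_detonation_per_year),
]:
    print(f"{label}: {p} ~ {float(p):.2%}")
```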

keller_scholl @ 2022-03-29T22:35 (+10)

I think that most of this is good analysis: I am not convinced by all of it, but it is universally well-grounded and useful. However, the section on Communicating Risk, in my view, misunderstands the purpose of the original post and the spirit in which the discussion was happening at the time. It was not framed around the question "what should we, a large group that includes only a handful of policymakers, aim to convince others of?" Rather, I saw it as a personally relevant tool that I used to validate advice to friends and loved ones about when they should personally get out of town.

Evaluating the cost in effective hours of life made a comparison they and I could work with: how many hours of my life would I pay to avoid relocating for a month and paying for an AirBnB? I recognize that it's unusual to discuss GCRs this way, and I would never do it if I were writing in a RAND publication (I would use the preferred technostrategic language), but it was appropriate and useful in this context.
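Converting a small probability of death into expected hours of life lost takes one multiplication. The sketch below is a simplified version of that conversion. The inputs (a 1-in-100,000 risk from staying one more month, 50 remaining years) are hypothetical, not Samotsvety's figures. It ignores discounting and quality of life.

```python
HOURS_PER_YEAR = 365.25 * 24


def expected_hours_lost(p_death: float, remaining_life_years: float) -> float:
    """Expected life-hours lost = probability of death x remaining lifetime in hours."""
    return p_death * remaining_life_years * HOURS_PER_YEAR


# Hypothetical inputs: a 1-in-100,000 chance of dying from staying one more month,
# for someone with 50 years of life expectancy left.
hours = expected_hours_lost(1e-5, 50)
print(f"expected loss from staying one month: {hours:.1f} hours")
```

That figure can then be weighed directly against the hassle and expense of relocating for the same month.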

NunoSempere @ 2022-03-28T13:14 (+8)

I would also be extremely curious about how this estimate affects the author's personal decision-making. For instance, are you avoiding major cities? Do you think that the risk is much lower on one side of the Atlantic? Would you advise a thousand people not to travel to London to attend a conference due to the increased risk?

Jhrosenberg @ 2022-05-06T07:11 (+2)

Peter says: No, I live in Washington, DC a few blocks from the White House, and I’m not suggesting evacuation at the moment because I think conventional conflict would precede nuclear conflict. But if we start trading bullets with Russian forces, the odds of nuclear weapons use go up sharply. And, yes, I do believe risk is higher in Europe than in the United States. But for the moment, I’d happily attend a conference in London.

Gurkenglas @ 2022-04-04T17:39 (+3)

If you multiply the odds of each step together, you get the level of danger from being in London.

As in Dissolving the Fermi Paradox, beware a product of point estimates.
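A minimal Monte Carlo sketch of that warning, in the spirit of Dissolving the Fermi Paradox, follows. The chain of three steps and their (low, high) ranges are hypothetical, not anyone's actual forecast. Each step is drawn log-uniformly, an assumption of ours. Multiplying one central value per step recovers roughly the median of the product, but the mean risk, which is what matters for expected-value decisions, is pulled well above it by the upper tail.

```python
import math
import random

# Hypothetical (low, high) ranges for each step of the chain.
FACTORS = {
    "nuclear war this year": (0.001, 0.05),
    "London hit, given war": (0.05, 0.5),
    "fail to escape, given London hit": (0.1, 0.9),
}


def point_estimate(factors):
    """Multiply one central (geometric-midpoint) value per step."""
    return math.prod(math.sqrt(low * high) for low, high in factors.values())


def sample_product(factors, rng):
    """Draw each step log-uniformly within its range and multiply."""
    return math.prod(
        math.exp(rng.uniform(math.log(low), math.log(high)))
        for low, high in factors.values()
    )


def monte_carlo(factors, n=100_000, seed=0):
    """Return (mean, median) of the sampled product."""
    rng = random.Random(seed)
    samples = sorted(sample_product(factors, rng) for _ in range(n))
    return sum(samples) / n, samples[n // 2]


point = point_estimate(FACTORS)
mean, median = monte_carlo(FACTORS)
print(f"product of point estimates: {point:.4%}")
print(f"Monte Carlo median:         {median:.4%}")
print(f"Monte Carlo mean:           {mean:.4%}")
```

The wider the uncertainty on each step, the larger the gap between the product of point estimates and the expected risk.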

Paul Ingram @ 2022-03-28T09:27 (+1)

As someone who has worked on nuclear weapons policy for thirty years and recently joined the wider GCR community, I found this piece extremely helpful and convincing. I would draw people's attention in particular to the final sentences... "I would suggest, then, that efforts to calculate nuclear risk must be accompanied by efforts to imagine nuclear risk. Only by considering the danger of nuclear war in qualitative terms can we truly appreciate its danger in quantitative terms." This, I believe, applies to any GCRs we choose to study.

Paul Ingram, CSER