EA Leaders Forum: Survey on EA priorities (data and analysis)

By Aaron Gertler 🔸 @ 2019-11-12T01:14 (+127)

Thanks to Alexander Gordon-Brown, Amy Labenz, Ben Todd, Jenna Peters, Joan Gass, Julia Wise, Rob Wiblin, Sky Mayhew, and Will MacAskill for assisting in various parts of this project, from finalizing survey questions to providing feedback on the final post.

***

Clarification on pronouns: “We” refers to the group of people who worked on the survey and helped with the writeup. “I” refers to me; I use it to note some specific decisions I made about presenting the data and my observations from attending the event.

***

This post is the second in a series of posts where we aim to share summaries of the feedback we have received about our own work and about the effective altruism community more generally. The first can be found here.

Overview

Each year, the EA Leaders Forum, organized by CEA, brings together executives, researchers, and other experienced staffers from a variety of EA-aligned organizations. At the event, they share ideas and discuss the present state (and possible futures) of effective altruism.

This year, over a period centered on roughly 1 July, invitees were asked to complete a “Priorities for Effective Altruism” survey, compiled by CEA and 80,000 Hours, which covered the following broad topics:

- The resources and talents most needed by the community

- How EA’s resources should be allocated between different cause areas

- Bottlenecks on the community’s progress and impact

- Problems the community is facing, and mistakes we could be making now

This post is a summary of the survey’s findings (N = 33; 56 people received the survey).

Here’s a list of organizations respondents worked for, with the number of respondents from each organization in parentheses. Respondents included both leadership and other staff (an organization appearing on this list doesn’t mean that the org’s leader responded).

- 80,000 Hours (3)

- Animal Charity Evaluators (1)

- Center for Applied Rationality (1)

- Centre for Effective Altruism (3)

- Centre for the Study of Existential Risk (1)

- DeepMind (1)

- Effective Altruism Foundation (2)

- Effective Giving (1)

- Future of Humanity Institute (4)

- Global Priorities Institute (2)

- Good Food Institute (1)

- Machine Intelligence Research Institute (1)

- Open Philanthropy Project (6)

Three respondents work at organizations small enough that naming the organizations would be likely to de-anonymize the respondents. Three respondents don’t work at an EA-aligned organization, but are large donors and/or advisors to one or more such organizations.

What this data does and does not represent

This is a snapshot of some views held by a small group of people (albeit people with broad networks and a lot of experience with EA) as of July 2019. We’re sharing it as a conversation-starter, and because we felt that some people might be interested in seeing the data.

These results shouldn’t be taken as an authoritative or consensus view of effective altruism as a whole. They don’t represent everyone in EA, or even every leader of an EA organization. If you’re interested in seeing data that comes closer to this kind of representativeness, consider the 2018 EA Survey Series, which compiles responses from thousands of people.

Talent Needs

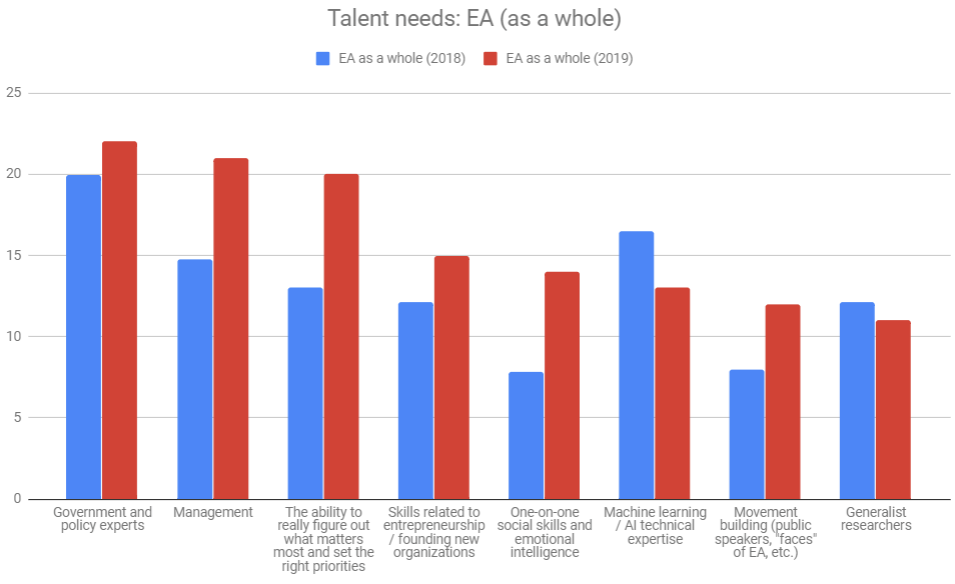

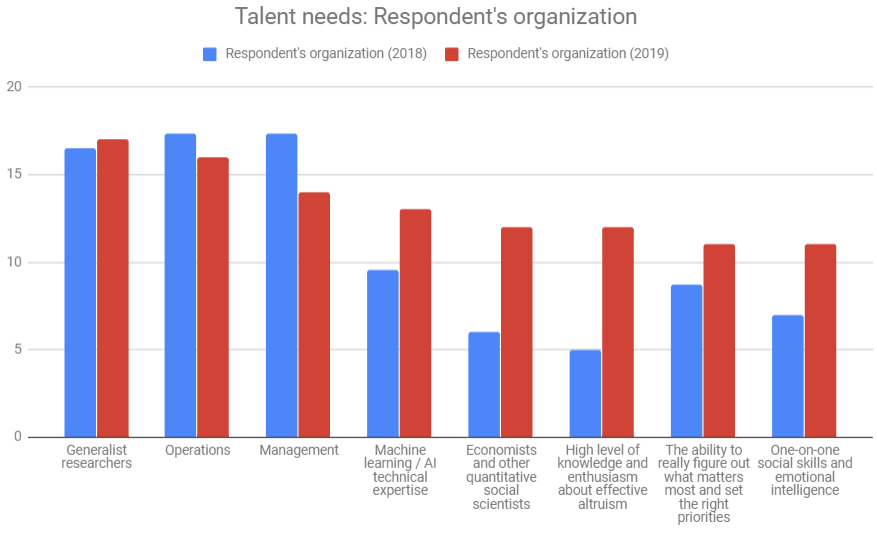

What types of talent do you currently think [your organization // EA as a whole] will need more of over the next 5 years? (Pick up to 6)

This question was the same as a question asked to Leaders Forum participants in 2018 (see 80,000 Hours’ summary of the 2018 Talent Gaps survey for more).

Here’s a graph showing how the most common responses from 2019 compare to the same categories in the 2018 talent needs survey from 80,000 Hours, for EA as a whole:

And for the respondent’s organization:

The following table contains data on every category (you can see sortable raw data here):

Notes:

- Two categories in the 2019 survey were not present in the 2018 survey; these cells were left blank in the 2018 column. (These are "Personal background..." and "High level of knowledge and enthusiasm...")

- Because of differences between the groups sampled, I made two corrections to the 2018 data:

    - The 2018 survey had 38 respondents, compared to 33 respondents in 2019. I multiplied all 2018 figures by 33/38 and rounded them to provide better comparisons.

    - After this, the sum of 2018 responses was 308; for all 2019 responses, 351. It’s possible that this indicates a difference in how many things participants thought were important in each year, but it also led to some confusing numbers (e.g. a 2019 category having more responses than its 2018 counterpart, but a smaller fraction of the total responses). To compensate, I multiplied all 2018 figures by 351/308 and rounded them.

    - These corrections roughly cancelled out, with the 2018 sums reduced by roughly 1%, but I opted to include and mention them anyway. Such is the life of a data cleaner.

- While the groups of respondents in 2018 and 2019 overlapped substantially, there were some new survey-takers this year; shifts in perceived talent needs could partly reflect differences in the views of new respondents, rather than only a shift in the views of people who responded in both years.

- Some skills were named as important more often in 2019 than 2018. Those that saw the greatest increase (EA as a whole + respondent’s organization):

    - Economists and other quantitative social scientists (+8)

    - One-on-one social skills and emotional intelligence (+8)

    - The ability to figure out what matters most / set the right priorities (+6)

    - Movement building (e.g. public speakers, “faces” of EA) (+6)

- The skills that saw the greatest total decrease:

    - Operations (-16)

    - Other math, quant, or stats experts (-6)

    - Administrators / assistants / office managers (-5)

    - Web development (-5)
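The two rescaling steps described in the notes above can be sketched in a few lines of Python. This is a minimal illustration, not the script used for the post: the function name is hypothetical, the input of 20 is an illustrative count rather than survey data, and Python's built-in `round` is an assumption, since the notes don't say which rounding rule was applied.

```python
# Figures taken from the notes above.
N_2018 = 38                   # respondents in 2018
N_2019 = 33                   # respondents in 2019
SUM_2018_AFTER_STEP1 = 308    # total 2018 selections after the first correction
SUM_2019 = 351                # total 2019 selections


def rescale_2018(count: float) -> int:
    """Apply both corrections to one 2018 category count (hypothetical helper)."""
    # Step 1: adjust for the smaller number of respondents in 2019, then round.
    step1 = round(count * N_2019 / N_2018)
    # Step 2: adjust for the larger total number of selections in 2019, then round.
    return round(step1 * SUM_2019 / SUM_2018_AFTER_STEP1)


# Net effect of both corrections before rounding.
combined_factor = (N_2019 / N_2018) * (SUM_2019 / SUM_2018_AFTER_STEP1)

print(f"combined factor: {combined_factor:.4f}")
print(f"illustrative count of 20 becomes: {rescale_2018(20)}")
```

The combined factor is just under 1, which matches the observation that the two corrections roughly cancel out and reduce the 2018 figures by about 1%.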

Other comments on talent needs

- “Some combination of humility (willing to do trivial-seeming things) plus taking oneself seriously.”

- “More executors; more people with different skills/abilities to what we already have a lot of; more people willing to take weird, high-variance paths, and more people who can communicate effectively with non-EAs.”

- “I think management capacity is particularly neglected, and relates strongly to our ability to bring in talent in all areas.”

Commentary

The 2019 results were very similar to those of 2018, with few exceptions. Demand remains high for people with skills in management, prioritization, and research, as well as experts on government and policy.

Differences between responses for 2018 and 2019:

- Operations, the area of most need in the 2018 survey, is seen as a less pressing need this year (though it still ranked 6th). This could indicate that we’ve begun to succeed at closing the operations skill bottleneck.

- However, more respondents perceived a need for operations talent for their own organizations than for EA as a whole. It might be the case that respondents perceive that the gap has closed more for other organizations than it actually has.

- This year saw an increase in perceived need for movement-building skills and for “one-on-one skills and emotional intelligence”. Taken together, these categories seem to indicate a greater focus on interpersonal skills.

Cause Priorities

Known causes

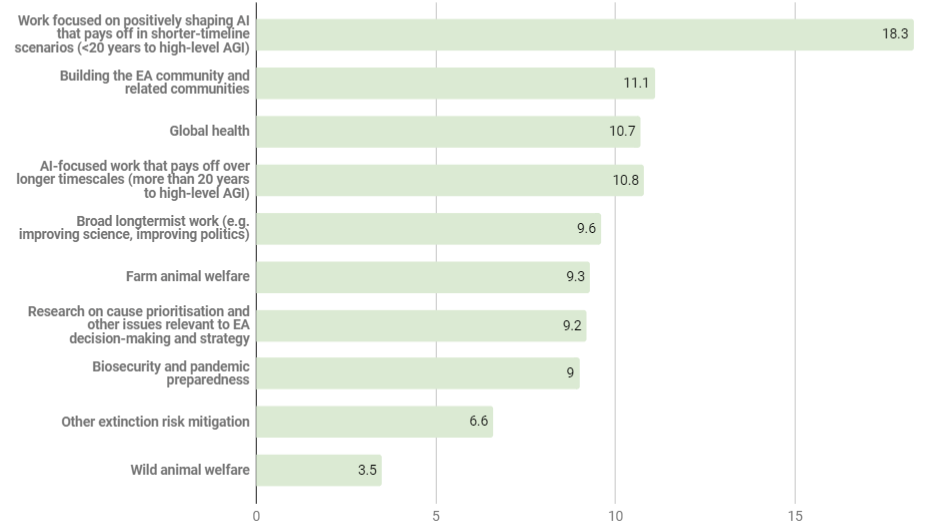

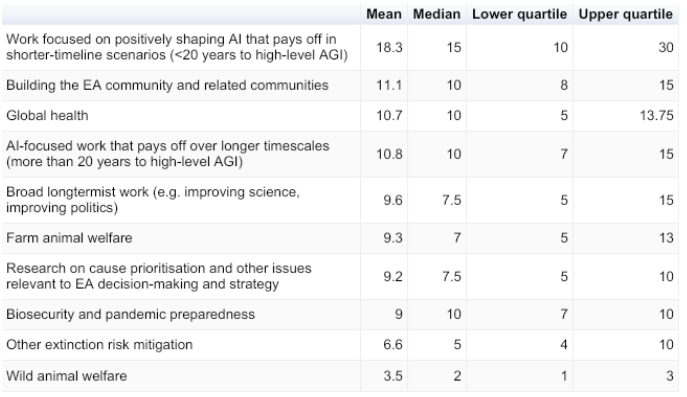

This year, we asked a question about how to ideally allocate resources across cause areas. (We asked a similar question last year, but with categories that were different enough that comparing the two years doesn’t seem productive.)

The question was as follows:

What (rough) percentage of resources should the EA community devote to the following areas over the next five years? Think of the resources of the community as something like some fraction of Open Phil's funding, possible donations from other large donors, and the human capital and influence of the ~1000 most engaged people.

This table shows the same data as above, with median and quartile data in addition to means. (If you ordered responses from least to greatest, the “lower quartile” number would be one-fourth of the way through the list [the 25th percentile], and the “upper quartile” number would be three-fourths of the way through the list [the 75th percentile].)
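For readers who want to see how these summary statistics are computed, here is a small Python sketch. The nine response values are hypothetical and are not survey data. Quartile conventions also differ between tools, and the post doesn't say which one was used, so the `inclusive` method below is an assumption.

```python
import statistics

# Hypothetical percentage allocations from nine respondents (not survey data).
responses = [0, 5, 5, 10, 10, 15, 20, 25, 40]

# quantiles(..., n=4) returns the three cut points that split the sorted
# data into four equal parts: lower quartile, median, upper quartile.
lower_q, median, upper_q = statistics.quantiles(responses, n=4, method="inclusive")
mean = statistics.mean(responses)

print(f"mean: {mean:.1f}")
print(f"lower quartile: {lower_q}, median: {median}, upper quartile: {upper_q}")
```

In this made-up example the mean is pulled above the median by the single 40% response, which is one reason to look at the median and quartiles alongside the mean.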

Other comments (known causes)

- “7% split between narrow long-termist work on non-GCR issues (e.g. S-risks), 7% to other short-termist work like scientific research”

- “3% to reducing suffering risk in carrying out our other work”

- “15% to explore various other cause areas; 7% on global development and economic growth (as opposed to global *health*); 3% on mental health.”

Our commentary (known causes)

Though many cause areas are not strictly focused on either the short-term or long-term future, one could group each of the specified priorities into one of three categories:

Short-term future: Global health, farm animal welfare, wild animal welfare

Long-term future: Positively shaping AI (shorter or longer timelines), biosecurity and pandemic preparedness, broad longtermist work, other extinction risk mitigation

Meta work: Building the EA community, research on cause prioritization

With these categories, we can sum the mean allocations for the causes in each category to get a sense of the average fraction of EA resources respondents think should go to different areas:

- Short-term: 23.5% of resources

- Long-term: 54.3%

- Meta: 20.3%

(Because respondents had the option to suggest additional priorities, these answers don’t add up to 100%.)
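As a quick check on the figures above, the three category totals can be added up to see how much is left over. This sketch uses only the percentages reported in this post; reading the remainder as write-in priorities (plus any rounding) is an interpretation, not something the data states directly.

```python
# Category totals as reported above (percent of resources).
category_means = {"Short-term": 23.5, "Long-term": 54.3, "Meta": 20.3}

# Round to one decimal place to avoid floating-point noise.
total = round(sum(category_means.values()), 1)
remainder = round(100 - total, 1)

print(f"sum of the three categories: {total}%")
print(f"left over for other suggested priorities: {remainder}%")
```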

While long-term work was generally ranked as a higher priority than short-term or meta work, almost every attendee supported allocating resources to all three areas.

Cause X

What do you estimate is the probability (in %) that there exists a cause which ought to receive over 20% of EA resources (time, money, etc.), but currently receives little attention?

Of 25 total responses:

- Mean: 42.6% probability

- Median: 36.5%

- Lower quartile: 20%

- Upper quartile: 70%

Other comments (Cause X):

- "I'll interpret the question as follows: "What is the probability that, in 20 years, we will think that we should have focused 20% of resources on cause X over the years 2020-2024?"" (Respondent’s answer was 33%)

- “The probability that we find the cause within the next five years: 2%” (Respondent’s answer to the original question was 5% that the cause existed at all)

- "~100% if we allow narrow bets like 'technology X will turn out to pay off soon.' With more restriction for foreseeability from our current epistemic standpoint 70% (examples could be political activity, creating long-term EA investment funds at scale, certain techs, etc). Some issues with what counts as 'little' attention." (We logged this as 70% in the aggregated data)

- "10%, but that's mostly because I think it's unlikely we could be sure enough about something being best to devote over 20% of resources to it, not because I don't think we'll find new effective causes."

- “Depends how granularly you define cause area. I think within any big overarching cause such as "making AI go well" we are likely (>70%) to discover new angles that could be their own fields. I think it's fairly unlikely (<25%) that we discover another cause as large / expansive as our top few.” (Because this answer could have been interpreted as any of several numbers, we didn’t include it in the average)

- “I object to calling this ‘cause X’, so I’m not answering.”

Finally, since no single cause area reached a mean of 20%, it seems likely that 20% was too high a bar for “Cause X”: clearing it would make Cause X a higher overall priority for respondents than any other option. If we ask this question again next year, we’ll consider lowering that bar.

Organizational constraints

Funding constraints

Overall, how funding-constrained is your organization?

(1 = how much things cost is never a practical limiting factor for you; 5 = you are considering shrinking to avoid running out of money)

Talent constraints

Overall, how talent-constrained is your organization?

(1 = you could hire many outstanding candidates who want to work at your org if you chose that approach, or had the capacity to absorb them, or had the money; 5 = you can't get any of the people you need to grow, or you are losing the good people you have)

Note: Responses from 2018 were taken on a 0-4 scale, so I normalized the data by adding 1 to all scores from 2018.
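The normalization in the note above is a simple shift of the scale. A minimal sketch, with a hypothetical function name and made-up example scores rather than survey data:

```python
def shift_2018_score(score_0_to_4: float) -> float:
    """Map a 2018 score on the 0-4 scale onto the 2019 1-5 scale."""
    if not 0 <= score_0_to_4 <= 4:
        raise ValueError("2018 scores are expected to lie between 0 and 4")
    return score_0_to_4 + 1


# Made-up 2018 scores, for illustration only.
example_2018_scores = [0, 2, 2.5, 4]
shifted = [shift_2018_score(s) for s in example_2018_scores]
print(shifted)
```

Adding a constant moves every score (and therefore the mean) up by one point without changing the spread of the responses.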

Other constraints noted by respondents

Including the 1-5 score if the respondent shared one:

- “Constraints are mainly internal governance and university bureaucracy.” (4)

- “Bureaucracy from our university, and wider academia; management and leadership constraints.” (3)

- “Research management constrained. We would be able to hire more researchers if we were able to offer better supervision and guidance on research priorities.” (4)

- “Constrained on some kinds of organizational capacity.” (4)

- “Constraints on time, management, and onboarding capacity make it hard to find and effectively use new people.” (4)

- “Need more mentoring capacity.” (3)

- “Management capacity.” (5)

- “Limited ability to absorb new people (3), difficulty getting public attention to our work (3), and limited ability for our cause area in general to absorb new resources (2); the last of these is related to constraints on managerial talent.”

- “We’re doing open-ended work for which it is hard to find the right path forward, regardless of the talent or money available.”

- “We’re currently extremely limited by the number of people who can figure out what to do on a high level and contribute to our overall strategic direction.”

- “Not wanting to overwhelm new managers. Wanting to preserve our culture.”

- “Limited management capacity and scoped work.”

- “Management-constrained, and it’s difficult to onboard people to do our less well-scoped work.”

- “Lack of a permanent CEO, meaning a hiring and strategy freeze.”

- “We are bottlenecked by learning how to do new types of work and training up people to do that work much more than the availability of good candidates.”

- “Onboarding capacity is low (especially for research mentorship)”

- “Institutional, bureaucratic and growth/maturation constraints (2.5)”

Commentary

Respondents’ views of funding and talent constraints have changed very little within the last year. This may indicate that established organizations have been able to roughly keep up with their own growth (finding new funding/people at the pace that expansion would require). We would expect these constraints to be different for newer and smaller organizations, so the scores here could fail to reflect how EA organizations as a whole are constrained on funding and talent.

Management and onboarding capacity are by far the most frequently-noted constraints in the “other” category. They seemed to overlap somewhat, given the number of respondents who mentioned them together.

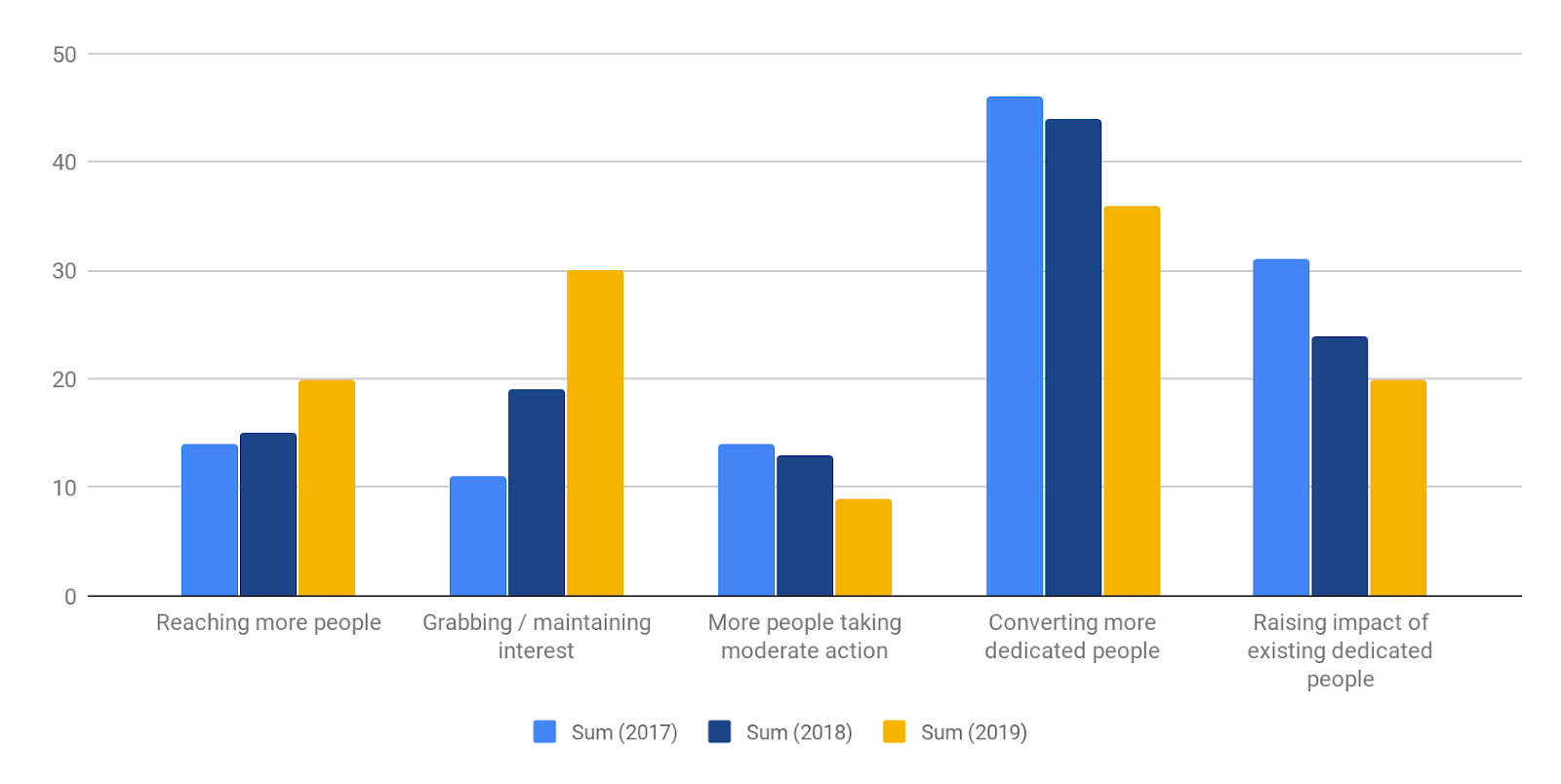

Bottlenecks to EA impact

What are the most pressing bottlenecks that are reducing the impact of the EA community right now?

These options are meant to refer to different stages in a “funnel” model of engagement. Each represents movement from one stage to the next. For example, “grabbing the interest of people who we reach” implies a bottleneck in getting people who have heard of effective altruism to continue following the movement in some way. (It’s not clear that the options were always interpreted in this way.)

These are the options respondents could choose from:

- Reaching more people of the right kind (note: this term was left undefined on the survey; in the future, we’d want to phrase this as something like “reaching more people aligned with EA’s values”)

- Grabbing the interest of people who we reach, so that they come back (i.e. not bouncing the right people)

- More people taking moderate action (e.g. making a moderate career change, taking the GWWC pledge, convincing a friend, learning a lot about a cause) converted from interested people due to better intro engagement (e.g. better-written content, ease in making initial connections)

- More dedicated people (e.g. people working at EA orgs, researching AI safety/biosecurity/economics, giving over $1m/year) converted from moderate engagement due to better advanced engagement (e.g. more in-depth discussions about the pros and cons of AI) (note: in the future, we’ll probably avoid giving specific cause areas in our examples)

- Increase the impact of existing dedicated people (e.g. better research, coordination, decision-making)

Other notes on bottlenecks:

- “It feels like a lot of the thinking around EA is very centralized.”

- “I think ‘reaching more people’ and ‘not bouncing people of the right kind’ would look somewhat qualitatively different from the status quo.”

- “I’m very tempted to say ‘reaching the right people’, but I generally think we should try to make sure the bottom of the funnel is fixed up before we do more of that.”

- “Hypothesis: As EA subfields are becoming increasingly deep and specialized, it's becoming difficult to find people who aren't intimidated by all the understanding required to develop the ambition to become experts themselves.”

- “I think poor communications and lack of management capacity turn off a lot of people who probably are value-aligned and could contribute a lot. I think those two factors contribute to EAs looking weirder than we really are, and pose a high barrier to entry for a lot of outsiders.”

- “A more natural breakdown of these bottlenecks for me would be about the engagement/endorsement of certain types of people: e.g. experts/prestigious, rank and file contributors, fans/laypeople. In this breakdown, I think the most pressing bottleneck is the first category (experts/prestigious) and I think it's less important whether those people are slightly involved or heavily involved.”

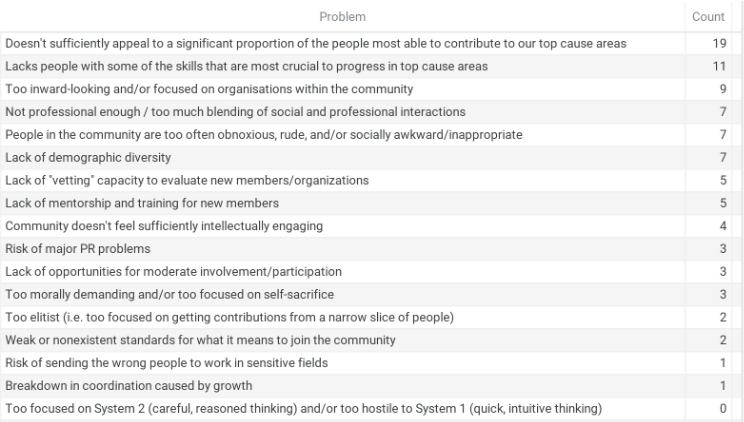

Problems with the EA community/movement

Before getting into these results, I’ll note that we collected almost all survey responses before the event began; many sessions and conversations during the event, inspired by this survey, covered ways to strengthen effective altruism. It also seemed to me, subjectively, as though many attendees were cheered by the community’s recent progress, and generally optimistic about the future of EA. (I was onsite for the event and participated in many conversations, but I didn’t attend most sessions and I didn’t take the survey.)

CEA’s Ben West interviewed some of this survey’s respondents — as well as other employees of EA organizations — in more detail. His writeup includes thoughts from his interviewees on the most exciting and promising aspects of EA, and we’d recommend reading that alongside this data (since questions about problems will naturally lead to answers that skew negative).

Here are some specific problems people often mention. Which of them do you think are most significant? (Choose up to 3)

What do you think is the most pressing problem facing the EA community right now?

- “I think the cluster around vetting and training is significant. Ditto demographic diversity.”

- “I think a lot of social factors (many of which are listed in your next question: we are a very young, white, male, elitist, socially awkward, and in my opinion often overconfident community) turn people off who would be value aligned and able to contribute in significant ways to our important cause areas.”

- “People interested in EA being risk averse in what they work on, and therefore wanting to work on things that are pretty mapped out and already thought well of in the community (e.g. working at an EA org, EtG), rather than trying to map out new effective roles (e.g. learning about some specific area of government which seems like it might be high leverage but about which the EA community doesn't yet know much).”

- “Things for longtermists to do other than AI and bio.”

- “Giving productive and win-generating work to the EAs who want jobs and opportunities for impact.”

- “Failure to reach people who, if we find them, would be very highly aligned and engaged. Especially overseas (China, India, Arab world, Spanish-speaking world, etc).”

- “Hard to say. I think it's plausibly something related to the (lack of) accessibility of existing networks, vetting constraints, and mentorship constraints. Or perhaps something related to inflexibility of organizations to change course and throw all their weight into certain problem areas or specific strategies that could have an outsized impact.”

- “Relationship between EA and longtermism, and how it influences movement strategy.”

- “Perception of insularity within EA by relevant and useful experts outside of EA.”

- “Groupthink.”

- “Not reaching the best people well.”

- Answers from one respondent:

    - (1) EA community is too centralized (leading to groupthink)

    - (2) the community has some unhealthy and non-inclusive norms around ruthless utility maximization (leading to burnout and exclusion of people, especially women, who want to have kids)

    - (3) disproportionate focus on AI (leading to overfunding in that space and a lot of people getting frustrated because they have trouble contributing in that space)

    - (4) too tightly coupled with the Bay Area rationalist community, which has a bad reputation in some circles

What personally most bothers you about engaging with the EA community?

- “I dislike a lot of online amateurism.”

- “Abrasive people, especially online.”

- “Using rationalist vocabulary.”

- “The social skills of some folks could be improved.”

- “Insularity, lack of diversity.”

- “Too buzzword-y (not literally that, but the thing behind it).”

- “Perceived hostility towards suffering-focused views.”

- “People aren't maximizing enough; they're too quick to settle for 'pretty good'.”

- “Being associated with "ends justify the means" type thinking.”

- “Hubris; arrogance without sufficient understanding of others' wisdom.”

- “Time-consuming and offputting for online interaction, e.g. the EA Forum.”

- “Awkward blurring of personal and professional. In-person events mainly feel like work.”

- “People saying crazy stuff online in the name of EA makes it harder to appeal to the people we want.”

- “Obnoxious, intellectually arrogant and/or unwelcoming people - I can't take interested but normie friends to participate, [because EA meetups and social events] cause alienation with them.”

- “That the part of the community I'm a part of feels so focused on talking about EA topics, and less on getting to know people, having fun, etc.”

- “Tension between the gatekeeping functions involved in community building work and not wanting to disappoint people; people criticizing my org for not providing all the things they want.”

- “To me, the community feels a bit young and overconfident: it seems like sometimes being "weird" is overvalued and common sense is undervalued. I think this is related to us being a younger community who haven't learned some of life's lessons yet.”

- “People being judgmental on lots of different axes: some expectation that everyone do all the good things all the time, so I feel judged about what I eat, how close I am with my coworkers (e.g. people thinking I shouldn't live with colleagues), etc.”

- “Some aspects of LessWrong culture (especially the norm that saying potentially true things tactlessly tends to reliably get more upvotes than complaints about tact). By this, I *don't* mean complaints about any group of people's actual opinions. I just don't like cultures where it's socially commendable to signal harshness when it's possible to make the same points more empathetically.”

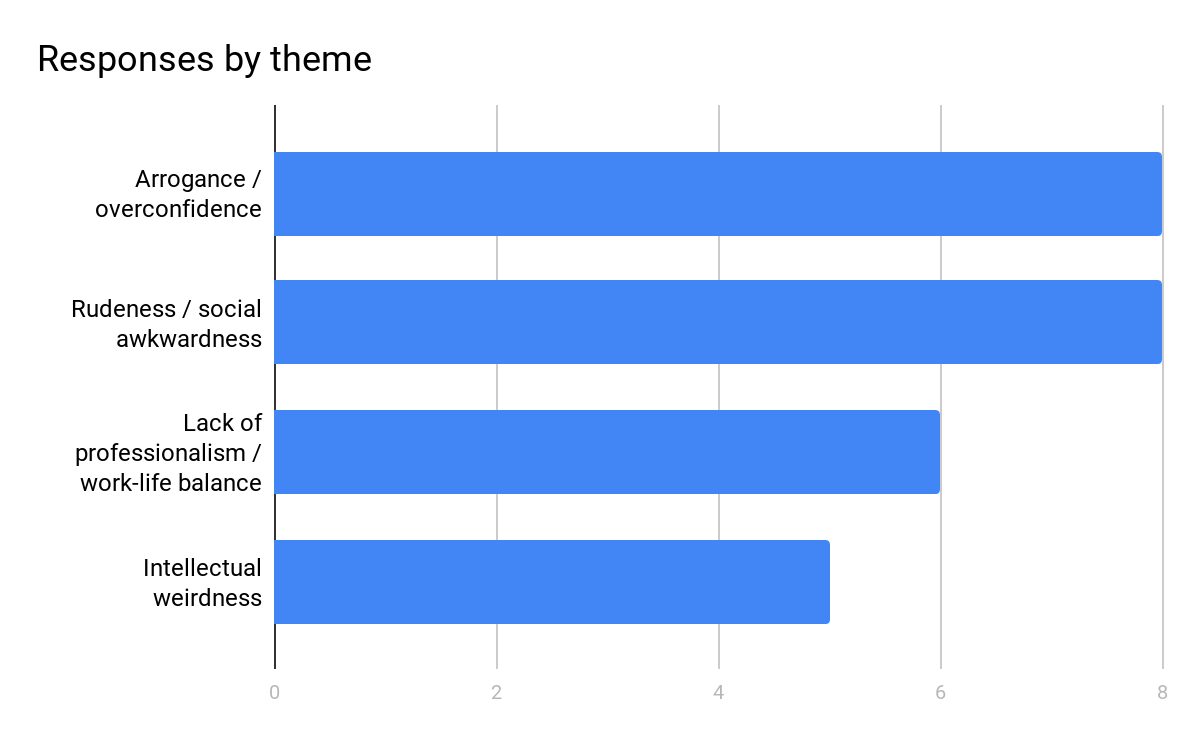

Most responses (both those above, and those which respondents asked we not share) included one or more of the following four “themes”:

- People in EA, or the movement as a whole, seeming arrogant/overconfident

- People in EA engaging in rude/socially awkward behavior

- The EA community and its organizations not being sufficiently professional, or failing to set good standards for work/life balance

- Weird ideas taking up too much of EA’s energy, being too visible, etc.

Below, we’ve charted the number of times we identified each theme:

Community Mistakes

What are some mistakes you're worried the EA community might be making? If in five years we really regret something we're doing today, what is it most likely to be?

- “The risk of catastrophic negative PR/scandal based on non-work aspects of individual/community behavior.”

- “Restricting movement growth to focus too closely on the inner circle.”

- “Overfunding or focusing too closely on AI work.”

- “Making too big a bet on AI and having it turn out to be a damp squib (which I think is likely). Being shorttermists in movement growth — pushing people into direct work rather than building skills or doing movement growth. Not paying enough attention to PR or other X-risks to the EA movement.”

- “Not figuring out how to translate our worldview into dominant cultural regimes.”

- “Focusing too much on a narrow set of career paths.”

- “Still being a community (for which EA is ill-suited) versus a professional association or similar.”

- “Not being ambitious enough, and not being critical enough about some of the assumptions we're making about what maximizes long-term value.”

- “Not focusing way more on student groups; not making it easier for leaders to communicate (e.g. via a group Slack that's actually used); focusing so much on the UK community.”

- “Not having an answer for what people without elitist credentials can do.”

- “Not working hard enough on diversity, or engagement with outside perspectives and expertise.”

- “Not finding more / better public faces for the movement. It would be great to find one or two people who would make great public intellectual types, and who would like to do it, and get them consistently speaking / writing.”

- “Careless outreach, especially in politically risky countries or areas such as policy; ill-thought-out publications, including online.”

- “Not thinking arguments through carefully enough, and therefore being wrong.”

- “I'm very uncertain about the current meme that EA should only be spread through high-fidelity 1-on-1 conversations. I think this is likely to lead to a demographic problem and ultimately to groupthink. I think we might be too quick to dismiss other forms of outreach.”

- “I think a lot of the problems I see could be natural growing pains, but some possibilities:

    - (a) we are overconfident in a particular Bayesian-utilitarian intellectual framework

    - (b) we are too insular and not making enough of an effort to hear and weigh the views of others

    - (c) we are not working hard enough to find ways of holding each other and ourselves accountable for doing great work.”

Commentary

The most common theme in these answers seems to be the desire for EA to be more inclusive and welcoming. Respondents saw a lot of room for improvement on intellectual diversity, humility, and outreach, whether to distinct groups with different views or to the general population.

The second-most common theme concerned standards for EA research and strategy. Respondents wanted to see more work on important problems and a focus on thinking carefully without drawing conclusions too quickly. If I had to sum up these responses, I’d say something like: “Let’s hold ourselves to high standards for the work we produce.”

Overall, respondents generally agreed that EA should:

- Improve the quality of its intellectual work, largely by engaging in more self-criticism and challenging some of its prior assumptions (and by promoting norms around these practices).

- Be more diverse in many ways — in the people who make up the community, the intellectual views they hold, and the causes and careers they care about.

Having read these answers, my impression is that participants hoped that the community continues to foster the kindness, humility, and openness to new ideas that people associate with the best parts of EA, and that we make changes when that isn’t happening. (This spirit of inquiry and humility was quite prevalent at the event; I heard many variations on “I wish I’d been thinking about this more, and I plan to do so once the Forum is over.”)

Overall Commentary

Once again, we’d like to emphasize that these results are not meant to be representative of the entire EA movement, or even the views of, say, the thousand people who are most involved. They reflect a small group of participants at a single event.

Some weaknesses of the survey:

- Many respondents likely answered these questions quickly, without doing serious analysis. Some responses will thus represent gut reactions, though others likely represent deeply considered views (for example, if a respondent had been thinking for years about issues related to a particular question).

- The survey included 33 people from a range of organizations, but not all respondents answered each question. The average number of answers across multiple-choice or quantitative questions was 30. (All qualitative responses have been listed, save for responses from two participants who asked that their answers not be shared.)

- Some questions were open to multiple interpretations or misunderstandings. We think this is especially likely for “bottleneck” questions, as we did not explicitly state that each question was meant to refer to a stage in the “funnel” model.

Milan_Griffes @ 2019-11-16T17:08 (+41)

Were representatives from these groups invited to the Leaders Forum?

- GiveWell

- BERI

- Survival & Flourishing Fund

- LessWrong

- CSET

- Rethink Priorities

- Charity Entrepreneurship

- Founders Pledge

- Happier Lives Institute

If not, why not?

Habryka @ 2019-11-22T20:19 (+27)

No representative of LessWrong was invited to the forum. I don't know any reason for that, and I was invited to the previous year's forum.

My sense was that CEA decided to generally invite fewer organizations and people this year than last year.

Tee @ 2019-12-04T23:43 (+28)

FYI Rethink Charity and associated projects were also not invited, including Rethink Priorities and LEAN. We were also invited to forums in previous years.

aarongertler @ 2019-11-26T14:49 (+16)

In order to preserve the possibility for staff of some organizations to attend without that fact being public, I'm going to refrain from answering further questions about which specific organizations did or didn't have representatives at the event. See my response to Michael for more on the general criteria that were used to select invitees.

Milan_Griffes @ 2019-11-26T17:03 (+7)

Why is it important for staff of some organizations to be able to attend anonymously?

aarongertler @ 2019-11-27T12:38 (+20)

Some organizations may want to avoid being connected explicitly to the EA movement -- for example, if almost all their work happens in non-EA circles, where EA might have a mixed reputation.

This obviously isn't the case for all organizations on your list, but answering for some organizations and not others makes it clear which orgs do fall into that category.

Milan_Griffes @ 2019-12-01T21:32 (+3)

Okay, I think this is a pretty bad thing to trade a lot of transparency for.

- The work of all the orgs I asked about falls solidly within EA circles (except for CSET, and maybe Founders Pledge)

- Folks outside of EA don't really read the EA Forum

- I have trouble imagining folks outside of EA being shocked to learn that an org they interface with was invited to a random event (EA has a mixed reputation outside of EA, but it's not toxic / there's minimal risk of guilt-by-association)

I wonder if the reason you gave is your true reason?

---

If CEA wants to hold a secret meeting to coordinate with some EA orgs, probably best to keep it secret.

If CEA wants to hold a publicly disclosed, invite-only meeting to coordinate with some EA orgs, probably best to make a full public disclosure.

The status quo feels like an unsatisfying middle ground with some trappings of transparency but a lot of substantive content withheld.

aarongertler @ 2019-12-08T14:54 (+32)

I wonder if the reason you gave is your true reason?

Yes, that is the true reason.

I was planning to answer “yes” or “no” for all of the orgs you listed, until I consulted one of the orgs on the list and they asked me not to do this for them (making it difficult to do for the rest). I can see why you’d be skeptical of the reasons that orgs requested privacy, but we think that there are some reasonable concerns here, and we’d like to respect the orgs in question and be cooperative with them.

After talking more internally about this post, we remain uncertain about how to think about sharing information on invited orgs. There’s a transparency benefit, but we also think that it could cause misinterpretation or overinterpretation of a process that was (as Max noted) relatively ad hoc, on top of the privacy issues discussed above.

I can share that several of the orgs you listed did have "representatives", in the sense that people were invited who work for them, sit on their boards, or otherwise advise them. These invitees either didn't fill out the survey or were listed under other orgs they also work for. (We classified each person under one org -- perhaps we should have included all "related" orgs instead? A consideration for next year.)

The status quo feels like an unsatisfying middle ground with some trappings of transparency but a lot of substantive content withheld.

I can see how we ended up at an awkward middle ground here. In the past, we generally didn’t publish information from Leaders Forum (though 80,000 Hours did post results from a survey about talent gaps given to attendees of the 2018 event). We decided to publish more information this year, but I understand it’s frustrating that we’re not able to share everything you want to know.

To be clear, the event did skew longtermist, and I don’t want to indicate that the attendees were representative of the overall EA community; as we noted in the original post, we think that data from sources like the EA Survey is much more likely to be representative. (And as Max notes, given that the attendees weren’t representative, we might need to rethink the name of the event.)

RobBensinger @ 2019-12-08T22:30 (+45)

Speaking as a random EA — I work at an org that attended the forum (MIRI), but I didn't personally attend and am out of the loop on just about everything that was discussed there — I'd consider it a shame if CEA stopped sharing interesting take-aways from meetings based on an "everything should either be 100% publicly disclosed or 100% secret" policy.

I also don't think there's anything particularly odd about different orgs wanting different levels of public association with EA's brand, or having different levels of risk tolerance in general. EAs want to change the world, and the most leveraged positions in the world don't perfectly overlap with 'the most EA-friendly parts of the world'. Even in places where EA's reputation is fine today, it makes sense to have a diversified strategy where not every wonkish, well-informed, welfare-maximizing person in the world has equal exposure if EA's reputation takes a downturn in the future.

MIRI is happy to be EA-branded itself, but I'd consider it a pretty large mistake if MIRI started cutting itself off from everyone in the world who doesn't want to go all-in on EA (refuse to hear their arguments or recommendations, categorically disinvite them from any important meetings, etc.). So I feel like I'm logically forced to say this broad kind of thing is fine (without knowing enough about the implementation details in this particular case to weigh in on whether people are making all the right tradeoffs).

John_Maxwell @ 2019-11-12T07:57 (+34)

Thanks for this post!

One thing I noticed is that EA leaders seem to be concerned with both excessive intellectual weirdness and also excessive intellectual centralization. Was this a matter of leaders disagreeing with one another, or did some leaders express both positions?

There isn't necessarily a contradiction in expressing both positions. For example, perhaps there's an intellectual center and it's too weird. (Though, if the weirdness comes in the form of "People saying crazy stuff online", this explanation seems less likely.) You could also argue that we are open to weird ideas, just not the right weird ideas.

But I think it could be interesting to try & make this tradeoff more explicit in future surveys. It seems plausible that the de facto result of announcing survey results such as these is to move us in some direction along a single coarse intellectual centralization/decentralization dimension. (As I said, there might be a way to square this circle, but if so I think you want a longer post explaining how, not a survey like this.)

Another thought is that EA leaders are going to suggest nudges based on the position they perceive us to be occupying along a particular dimension--but perceptions may differ. Maybe one leader says "we need more talk and less action", and another leader says "we need less talk and more action", but they both agree on the ideal talk/action balance, they just disagree about the current balance (because they've made different observations about the current balance).

One way to address this problem in general for some dimension X is to have a rubric with 5 written descriptions of levels of X the community could aim for, and ask each leader to select the level of X that seems optimal to them. Another advantage of this scheme is if there's a fair amount of community variation in levels of X, the community could be below the optimal level of X on average, but if leaders publicly announce that levels of X should move up (without having specified a target level), people who are already above the ideal level of X might move even further above the ideal level.

aarongertler @ 2019-11-21T14:47 (+9)

One thing I noticed is that EA leaders seem to be concerned with both excessive intellectual weirdness and also excessive intellectual centralization. Was this a matter of leaders disagreeing with one another, or did some leaders express both positions?

In general, I think most people chose one concern or the other, but certainly not all of them. If I had to summarize a sort of aggregate position (a hazardous exercise, of course), I'd say:

"I wish we had more people in the community building on well-regarded work to advance our understanding of established cause areas. It seems like a lot of the new work that gets done (at least outside of the largest orgs) involves very speculative causes and doesn't apply the same standards of rigor used by, say, GiveWell. Meanwhile, when it comes to the major established cause areas, people seem content to listen to orgs' recommendations without trying to push things forward, which creates a sense of stagnation."

Again, this is an aggregate: I don't think any single attendee would approve of exactly this statement as a match for their beliefs.

But I think it could be interesting to try & make this tradeoff more explicit in future surveys.

That's an interesting idea! We'll revisit this post the next time we decide to send out this survey (it's not necessarily something we'll do every year), and hopefully whoever's in charge will consider how to let respondents prioritize avoiding weirdness vs. avoiding centralization.

Another thought is that EA leaders are going to suggest nudges based on the position they perceive us to be occupying along a particular dimension--but perceptions may differ.

This also seems likely to be a factor behind the distribution of responses.

John_Maxwell @ 2019-11-22T05:17 (+12)

Thanks for the aggregate position summary! I'd be interested to hear more about the motivation behind that wish, as it seems likely to me that doing shallow investigations of very speculative causes would actually be the comparative advantage of people who aren't employed at existing EA organizations. I'm especially curious given the high probability that people assigned to the existence of a Cause X that should be getting so many resources. It seems like having people who don't work at existing EA organizations (and are thus relatively unaffected by existing blind spots) do shallow investigations of very speculative causes would be just the thing for discovering Cause X.

For a while now I've been thinking that the crowdsourcing of alternate perspectives ("breadth-first" rather than "depth-first" exploration of idea space) is one of the internet's greatest strengths. (I also suspect "breadth-first" idea exploration is underrated in general.) On the flip side, I'd say one of the internet's greatest weaknesses is the ease with which disagreements become unnecessarily dramatic. So I think if someone were to do a meta-analysis of recent literature on, say, whether remittances are actually good for developing economies in the long run (critiquing GiveDirectly -- btw, I couldn't find any reference to academic research on the impact of remittances on GiveWell's current GiveDirectly profile -- maybe they just didn't think to look it up -- case study in the value of an alternate perspective?), or whether usage of malaria bed nets for fishing is increasing or not (critiquing AMF), there's a sense in which we'd be playing against the strengths of the medium. Anyway, if organizations wanted critical feedback on their work, they could easily request that critical feedback publicly (solicited critical feedback is less likely to cause drama / bad feelings than unsolicited critical feedback), or even offer cash prizes for best critiques, and I see few cases of organizations doing that.

Maybe part of what's going on is that shallow investigations of very speculative causes only rarely amount to something? See this previous comment of mine for more discussion.

Jonas Vollmer @ 2019-11-13T23:17 (+27)

I find it hard to understand which responses were categorized as "intellectual weirdness" and I'm not sure which lessons to draw. E.g., we might be concerned about being off-putting to people who are most able to contribute to top cause areas by presenting weird ideas too prominently ("don't talk about electron suffering to newbies"). Or we might think EAs are getting obsessed with weird ideas of little practical relevance and should engage more with what common sense has to say ("don't spend your time thinking about electron suffering"). Or we might be concerned about PR risk, etc.

aarongertler @ 2019-11-21T14:51 (+8)

I think that all three of the concerns you mentioned were shared by some subset of the respondents.

Given the nature of the survey, it's hard to say that any one of those lessons is most important, but the one that felt most present at the event (to me) was more about publicizing weird ideas than pursuing them in the first place. My impression was that most attendees didn't see it as a problem for people to work on things like, say, psychedelics (to pick a cause that's closer to the mainstream than electron suffering). But they don't want a world where someone who first hears about EA is likely to hear about it as "a group of people doing highly speculative research into ideas that aren't obviously connected to the public good".

There was also some concern that "weirder" areas don't naturally lend themselves to rigorous examination, such that our research standards might slip the further we get from the best-established cause areas.

Jonas Vollmer @ 2019-11-23T17:18 (+14)

I share this perception; I think I'd prefer if we didn't lump these different concerns together as "Intellectual weirdness."

aarongertler @ 2019-11-25T13:29 (+4)

I think the lumping is present only in this post, so hopefully the concerns will remain separate elsewhere. Thanks for taking the time to tease apart those issues.

MichaelStJules @ 2019-11-13T18:53 (+16)

What criteria were used to decide which orgs/individuals should be invited? Should we consider leaders at EA-recommended orgs or orgs doing cost-effective work in EA cause areas, but not specifically EA-aligned (e.g. Gates Foundation?), too? (This was a concern raised about representativeness of the EA handbook. https://forum.effectivealtruism.org/posts/MQWAsdm8MSzYNkD9X/announcing-the-effective-altruism-handbook-2nd-edition#KR2uKZqSmno7ANTQJ)

Because of this, I don't think it really makes sense to aggregate data over all cause areas. The inclusion criteria are likely to draw pretty arbitrary lines, and respondents will obviously tend to want to see more resources go to the causes they're working on, and will differ significantly in other ways by cause area. If the proportions of people working in a given cause don't match the proportion of EA funding people would like to see go to that cause, that is interesting, but we still can't take much away from it.

It seems weird to me that DeepMind and the Good Food Institute are on this list, but not, say, the Against Malaria Foundation, GiveDirectly, Giving What We Can, J-PAL, IPA, or the Humane League.

As stated, some orgs are small and so were not named, but still responded. Maybe a breakdown by the cause area for all the respondents would be more useful with the data you have already?

aarongertler @ 2019-11-21T15:10 (+6)

What criteria were used to decide which orgs/individuals should be invited?

A small team of CEA staffers (I was not one of them) selected an initial invite list (58 people). At present, we see Leaders Forum as an event focused on movement building and coordination. We focus on inviting people who play a role in trying to shape the overall direction of the EA movement (whatever cause area they focus on), rather than people who mostly focus on direct research within a particular cause area. As you’d imagine, this distinction can be somewhat fuzzy, but that’s the mindset with which CEA approaches invites (though other factors can play a role).

To give a specific example, while the Against Malaria Foundation is an important charity for people in EA who want to support global health, I’m not aware of any AMF staffers who have both a strong interest in the EA movement as a whole and some relevant movement-building experience. I don’t think that, say, Rob Mather (AMF’s CEO), or a representative from the Gates Foundation, would get much value from the vast majority of conversations/sessions at the event.

I should also note that the event has gotten a bit smaller over time. The first Leaders Forum (2016) had ~100 invitees and 62 attendees and wasn’t as focused on any particular topic. The next year, we shifted to a stronger focus on movement-building in particular (including community health, movement strategy, and risks to EA), which naturally led to a smaller, more focused invite list.

As with any other CEA program, Leaders Forum may continue to change over time; we aren’t yet sure how many people we’ll invite next year.

Because of this, I don't think it really makes sense to aggregate data over all cause areas.

I mostly agree! For several reasons, I wouldn’t put much stock in the cause-area data. Most participants likely arrived at their answers very quickly, and the numbers are of course dependent on the backgrounds of the people who both (a) were invited and (b) took the time to respond. However, because we did conduct the survey, it felt appropriate to share what information came out of it, even if the value of that information is limited.

I do, however, think it’s good to have this information to check whether certain “extreme” conditions are present — for example, it would have been surprising and notable if wild animal welfare had wound up with a median score of “0”, as that would seem to imply that most attendees think the cause doesn’t matter at all.

As stated, some orgs are small and so were not named, but still responded. Maybe a breakdown by the cause area for all the respondents would be more useful with the data you have already?

Given the limited utility of the prioritization data, I don’t know how much more helpful a cause-area breakdown would be. (Also, many if not most respondents currently work on more than one of the areas mentioned, but not necessarily with an even split between areas — any number I came up with would be fairly subjective.)

It seems weird to me that DeepMind and the Good Food Institute are on this list, but not, say, the Against Malaria Foundation, GiveDirectly, Giving What We Can, J-PAL, IPA, or the Humane League.

In addition to what I said above about the types of attendees we aimed for, I’ll note that the list of respondent organizations doesn’t perfectly match who we invited; quite a few other organizations had invitees who didn’t fill out the survey. Giving What We Can (which is a project of CEA and was represented by CEA staff) did, however, have representatives there.

As for organizations like DeepMind or GFI: While some of the orgs on the list are focused on a single narrow area, the employees we invited often had backgrounds in EA movement building and (in some cases) direct experience in other cause areas. (One invitee has run at least three major EA-aligned projects in three different areas.)

This wasn’t necessarily the case for every attendee (as I mentioned, we considered factors other than community-building experience), but it’s an important reason that the org list looks the way it does.

AnonymousEAForumAccount @ 2019-12-02T22:15 (+23)

At present, we see Leaders Forum as an event focused on movement building and coordination. We focus on inviting people who play a role in trying to shape the overall direction of the EA movement (whatever cause area they focus on), rather than people who mostly focus on direct research within a particular cause area. As you’d imagine, this distinction can be somewhat fuzzy, but that’s the mindset with which CEA approaches invites (though other factors can play a role).

I really wish this had been included in the OP, in the section that discusses the weaknesses of the data. That section seems to frame the data as a more or less random subset of leaders of EA organizations (“These results shouldn’t be taken as an authoritative or consensus view of effective altruism as a whole. They don’t represent everyone in EA, or even every leader of an EA organization.”)

When I look at the list of organizations that were surveyed, it doesn’t look like the list of organizations most involved in movement building and coordination. It looks much more like a specific subset of that type of org: those focused on longtermism or x-risk (especially AI) and based in one of the main hubs (London accounts for ~50% of respondents, and the Bay accounts for ~30%).* Those that prioritize global poverty, and to a lesser extent animal welfare, seem notably missing. It’s possible the list of organizations that didn’t respond or weren’t named looks a lot different, but if that’s the case it seems worth calling attention to and possibly trying to rectify (e.g. did you email the survey to anyone or was it all done in person at the Leaders Forum?)

Some of the organizations I’d have expected to see included, even if the focus was movement building/coordination: GiveWell (strategy/growth staff, not pure research staff), LEAN, Charity Entrepreneurship, Vegan Outreach, Rethink Priorities, One for the World, Founders Pledge, etc. Most EAs would see these as EA organizations involved to some degree with movement building. But we’re not learning what they think, while we are apparently hearing from at least one org/person who “want to avoid being connected explicitly to the EA movement -- for example, if almost all their work happens in non-EA circles, where EA might have a mixed reputation.”

I’m worried that people who read this report are likely to misinterpret the data being presented as more broadly representative than it actually is (e.g. the implications of respondents believing ~30% of EA resources should go to AI work over the next 5 years are radically different if those respondents disproportionately omit people who favor other causes). I have the same concerns about how this survey was presented as Jacy Reese expressed about how the leaders survey from 2 years ago (which also captured a narrow set of opinions) was presented:

My main general thought here is just that we shouldn't depend on so much from the reader. Most people, even most thoughtful EAs, won't read in full and come up with all the qualifications on their own, so it's important for article writers to include those themselves, and to include those upfront and center in their articles.

Lastly, I’ll note that there’s a certain irony in surveying only a narrow set of people, given that even among those respondents: “The most common theme in these answers [about problems in the EA community] seems to be the desire for EA to be more inclusive and welcoming. Respondents saw a lot of room for improvement on intellectual diversity, humility, and outreach, whether to distinct groups with different views or to the general population.” I suspect if a more diverse set of leaders had been surveyed, this theme would have been expressed even more strongly.

* GFI and Effective Giving both have London offices, but I’ve assumed their respondents were from other locations.

aarongertler @ 2019-12-08T21:40 (+10)

I agree with you that the orgs you mentioned (e.g. One for the World) are more focused on movement building than some of the other orgs that were invited.

I talked with Amy Labenz (who organized the event) in the course of writing my original reply. We want to clarify that when we said “At present, we see Leaders Forum as an event focused on movement building and coordination. We focus on inviting people who play a role in trying to shape the overall direction of the EA movement (whatever cause area they focus on)”, we didn’t mean to over-emphasize “movement building” (in the sense of “bringing more people to EA”) relative to “people shaping the overall direction of the EA movement” (in the sense of “figuring out what the movement should prioritize, growth or otherwise”).

My use of the term “movement building” was my slight misinterpretation of an internal document written by Amy. The event’s purpose was closer to discussing the goals, health, and trajectory of the movement (e.g. “how should we prioritize growth vs. other things?”) than discussing how to grow/build the movement (e.g. “how should we introduce EA to new people?”).

AnonymousEAForumAccount @ 2019-12-09T23:40 (+3)

Thanks Aaron, that’s a helpful clarification. Focusing on “people shaping the overall direction of the EA movement” rather than just movement building seems like a sensible decision. But one drawback is that coming up with a list of those people is a much more subjective (and network-reliant) exercise than, for example, making a list of movement building organizations and inviting representatives from each of them.

aarongertler @ 2019-12-04T11:51 (+6)

Thanks for your feedback on including the event's focus as a limitation of the survey. That's something we'll consider if we run a similar survey and decide to publish the data next year.

Some of the organizations you listed had representatives invited who either did not attend or did not fill out the survey. (The survey was emailed to all invitees, and some of those who filled it out didn't attend the event.) If everyone invited had filled it out, I think the list of represented organizations would look more diverse by your criteria.

AnonymousEAForumAccount @ 2019-12-06T17:13 (+1)

Thanks Aaron. Glad to hear the invitee list included a broader list of organizations, and that you'll consider a more explicit discussion of potential selection bias effects going forward.

Maxdalton @ 2019-12-08T13:24 (+18)

(I was the interim director of CEA during Leaders Forum, and I’m now the executive director.)

I think that CEA has a history of pushing longtermism in somewhat underhand ways (e.g. I think that I made a mistake when I published an “EA handbook” without sufficiently consulting non-longtermist researchers, and in a way that probably over-represented AI safety and under-represented material outside of traditional EA cause areas, resulting in a product that appeared to represent EA, without accurately doing so). Given this background, I think it’s reasonable to be suspicious of CEA’s cause prioritisation.

(I’ll be writing more about this in the future, and it feels a bit odd to get into this in a comment when it’s a major-ish update to CEA’s strategy, but I think it’s better to share more rather than less.) In the future, I’d like CEA to take a more agnostic approach to cause prioritisation, trying to construct non-gameable mechanisms for making decisions about how much we talk about different causes. An example of how this might work is that we might pay for an independent contractor to try to figure out who has spent more than two years full time thinking about cause prioritization, and then survey those people. Obviously that project would be complicated - it’s hard to figure out exactly what “cause prio” means, it would be important to reach out through diverse networks to make sure there aren’t network biases, etc.

Anyway, given this background of pushing longtermism, I think it’s reasonable to be skeptical of CEA’s approach on this sort of thing.

When I look at the list of organizations that were surveyed, it doesn’t look like the list of organizations most involved in movement building and coordination. It looks much more like a specific subset of that type of org: those focused on longtermism or x-risk (especially AI) and based in one of the main hubs (London accounts for ~50% of respondents, and the Bay accounts for ~30%).* Those that prioritize global poverty, and to a lesser extent animal welfare, seem notably missing. It’s possible the list of organizations that didn’t respond or weren’t named looks a lot different, but if that’s the case it seems worth calling attention to and possibly trying to rectify (e.g. did you email the survey to anyone or was it all done in person at the Leaders Forum?)

I think you’re probably right that there are some biases here. How the invite process worked this year was that Amy Labenz, who runs the event, drew up a longlist of potential attendees (asking some external advisors for suggestions about who should be invited). Then Amy, Julia Wise, and I voted yes/no/maybe on all of the individuals on the longlist (often adding comments). Amy made a final call about who to invite, based on those votes. I expect that all of this means that the final invite list is somewhat biased by our networks, and some background assumptions we have about individuals and orgs.

Given this, I think that it would be fair to view the attendees of the event as “some people who CEA staff think it would be useful to get together for a few days” rather than “the definitive list of EA leaders”. I think that we were also somewhat loose about what the criteria for inviting people should be, and I’d like us to be a bit clearer on that in the future (see a couple of paragraphs below). Given this, I think that calling the event “EA Leaders Forum” is probably a mistake, but others on the team think that changing the name could be confusing and have transition costs - we’re still talking about this, and haven’t reached resolution about whether we’ll keep the name for next year.

I also think CEA made some mistakes in the way we framed this post (not just the author, since it went through other readers before publication). I think the post kind of frames this as “EA leaders think X”, which I expect would be the sort of thing that lots of EAs should update on. (Even though I think it does try to explicitly disavow this interpretation (see the section on “What this data does and does not represent”), I think the title suggests something that’s more like “EA leaders think these are the priorities - probably you should update towards these being the priorities”.) I think that the reality is more like “some people that CEA staff think it’s useful to get together for an event think X”, which is something that people should update on less.

We’re currently at a team retreat where we’re talking more about what the goals of the event should be in the future. I think that it’s possible that the event looks pretty different in future years, and we’re not yet sure how. But I think that whatever we decide, we should think more carefully about the criteria for attendees, and that will include thinking carefully about the approach to cause prioritization.

AnonymousEAForumAccount @ 2019-12-09T23:38 (+2)

Thank you for taking the time to respond, Max. I appreciate your engagement, your explanation of how the invitation process worked this year, and your willingness to acknowledge that CEA may have historically been too aggressive in how it has pushed longtermism and how it has framed the results of past surveys.

In the future, I’d like CEA to take a more agnostic approach to cause prioritisation, trying to construct non-gameable mechanisms for making decisions about how much we talk about different causes.

Very glad to hear this. As you note, implementing this sort of thing in practice can be tricky. As CEA starts designing new mechanisms, I’d love to see you gather input (as early as possible) from people who have expressed concern about CEA’s representativeness in the past (I’d be happy to offer opinions if you’d like). These also might be good people to serve as "external advisors" who generate suggestions for the invite list.

Good luck with the retreat! I look forward to seeing your strategy update once that’s written up.

Jorgen_Ljones @ 2019-11-13T16:26 (+16)

Regarding the question about the preferred resource allocation over the next five years, I would like to see someone take a stab at estimating the current allocation of resources over the same categories. My guess is that many of the cause areas are far from these numbers and that it would take a huge shift from the status quo to increase or decrease the number of people and/or money going to each cause area.

The 3.5% allocation to wild animal welfare, for example, is 35 of the most engaged EAs contributing to the cause area and the money that goes with it. Or more people and less money if we trade off the resources against each other. Currently Wild Animal Initiative, the most significant EA actor in the field, employs eight people according to a document on their website, most of them part-time. Going from here to 35 people would mean large investments and a need for management, coordination and operations capacity. Especially if we should interpret it to mean 35 people on average over the next five years, given the position we're starting at.

I believe similar examples could be made from biosecurity (90 people and 9% of funding) or AI in shorter-timeline scenarios (180 people and 18% of funding). I guess that meta EA, global health and development and maybe farmed animal welfare are the categories that would need to scale back funding and people involved to reach these allocation targets.
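
To make the arithmetic behind these figures explicit, here is a minimal Python sketch. It assumes a pool of 1,000 people, which is the figure implied by 3.5% corresponding to 35 people; the pool size and the names `ASSUMED_POOL` and `implied_headcount` are illustrative assumptions, not numbers or terms from the survey.

```python
# Hypothetical pool size: implied by "3.5% ... is 35 people",
# not a figure reported in the survey itself.
ASSUMED_POOL = 1000

# Percentage allocations quoted in the comment above.
allocations_pct = {
    "wild animal welfare": 3.5,
    "biosecurity": 9,
    "AI (shorter-timeline scenarios)": 18,
}


def implied_headcount(pct, pool=ASSUMED_POOL):
    """Return the number of people implied by a percentage share of the pool."""
    return round(pool * pct / 100)


headcounts = {cause: implied_headcount(pct) for cause, pct in allocations_pct.items()}

for cause, people in headcounts.items():
    print(f"{cause}: {people} people")
```

Changing `ASSUMED_POOL` rescales every headcount proportionally, so the size of the implied shift depends heavily on how many people one counts as doing direct EA work.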

Aaron commented that the respondents answered quickly to the survey questions, and this particular question even asks for a "rough" percentage of resource allocation. This might suggest that we shouldn't look too much at the exact average numbers, but only note the order of cause areas from most to least resources allocated.

Another possibility is that the respondents answered the questions based on the assumption that one could disregard all practical issues of how to get from status quo to this preferred allocation of resources. If so, I think it would be helpful to state this clearly with the question so that all respondents and readers have this in mind.

Liam_Donovan @ 2019-11-13T18:06 (+12)

You're estimating there are ~1000 people doing direct EA work? I would have guessed around an order of magnitude less (~100-200 people).

aarongertler @ 2019-11-13T20:09 (+8)

It depends on what you count as "direct". But if you consider all employees of GiveWell-supported charities, for example, I think you'd get to 1000. You can get to 100 just by adding up employees and full-time contractors at CEA, 80K, Open Phil, and GiveWell. CHAI, a single research organization, currently has 42 people listed on its "people" page, and while many are professors or graduate students who aren't working "full-time" on CHAI, I'd guess that this still represents 25-30 person-years per year of work on AI matters.

Milan_Griffes @ 2019-11-13T02:10 (+7)

Interesting, thanks for posting.

Can you share anything from the discussion about what to do about the problems?

(Most of this post is "here's what we think the problems are" – would be super interesting to see "...and here's what we think we should do about it.")

Grue_Slinky @ 2019-11-13T13:26 (+14)

Seconded: great post with good questions, but also soliciting anonymous recommendations (even if half-baked) seems valuable. To piggyback on John_Maxwell's comment above, the EA leaders sound like they might have contradictory opinions, but it's possible they collectively agree on some more nuanced position. This could be clarified if we heard what they would actually have the movement do differently.

When I read Movement Collapse Scenarios, it struck me how EA is already pretty much on a Pareto frontier, in that I don't think we can improve anything about the movement without negatively affecting something else (or risking this). From that post, it seemed to me that most steps we could take in reducing risk from Dilution increase the risk from Sequestration, and vice versa.

And of course just being on a Pareto frontier by itself doesn't mean the movement's anywhere near the right point(s), because the tradeoffs we make certainly matter. It's just that when we say "EA should be more X" or "EA should have less Y", this is often meaningless (if taken literally), unless we're willing to make the tradeoff(s) that entails.

Michelle_Hutchinson @ 2019-11-22T16:44 (+10)

[I went to the leaders forum. I work for 80,000 Hours but these are my personal thoughts.]

One thing to say is in terms of next steps, it’s actually pretty useful just to sync up on what people thought were the problems we should be putting attention to: like that we should be thinking more about how to be overtly appreciative. For example, in my case that means that when I do 80,000 Hours advising calls I pay a bit more attention to making sure people realise I’m grateful for the amazing work they’re doing, or how nice it is to talk to someone working hard at finding the most impactful career path.

We did also brainstorm specific ways in which lots of people can do more to increase that vibe. One example is to point out on the forum when there are particular things you found useful or appreciated. (Eg: https://forum.effectivealtruism.org/posts/wL6nzXsHQEAZ2WJcR/summary-of-core-feedback-collected-by-cea-in-spring-summer#4KHwoAjsiED7tQEg3) I think that’s the kind of thing it’s easy to overlook doing, because it doesn’t seem like it’s moving dialogue forward in a way that offering constructive feedback does. But actually it seems really valuable to do, both because it gives writers on the forum information about what’s useful to people in a way that’s more specific than just upvotes, and because it gives everyone the sense that the forum is a friendly space to contribute.

We also discussed specific ways those at the Leaders Forum might use to try to improve the culture. In particular, we considered ways to have more open discussions about effective altruism as appreciative and inclusive. For example, many of us thought we should be doing more to highlight how amazing it is for someone to be earning a typical US salary and donating 10% of that, and thereby saving someone’s life from malaria every year. On the side of inclusivity, it seemed as if some people worried about effective altruism needing to be something that took over your life to the exclusion of other important life goals. Doing AMAs (like the one Will did recently) and podcast episodes in which people can candidly talk about their views seemed like good ways for some of us to talk about how we feel about these things. (For example, I just recorded a podcast episode about 80,000 Hours advising, in which I also chat briefly about how I’m about to go on maternity leave.) These are all small steps to take, but the hope would be that together they can influence the general culture of the effective altruism community towards feeling friendlier and more inclusive.

aarongertler @ 2019-11-21T14:59 (+9)

In a workshop titled "Solutions in EA", participants considered ways in which they/their organizations/the community could respond to some of these problems. They also voted on others' suggestions. Some of the most popular ideas:

- "In general, try to notice when we can appreciate people for things they've done and express that appreciation."

- "Profile individuals outside bio / AI that seem to be doing high impact things (e.g. Center for Election Science)."

- "Whenever possible, talk about how awesome it is that (say) people with ordinary jobs donate 10% to effective charities, and how honored you are to be a part of a community of people like that."

- "Much heavier caveating about the certainty of our intellectual frameworks and advice in general; instead, more emphasis on high-level principles we want to see followed and illustration of relevant principles."

Milan_Griffes @ 2019-11-21T20:44 (+11)

Huh, these are pretty vague & aspirational. (Overall I agree with the sentiments they're expressing, but they're not very specific about what changes to make to the status quo.)

Did these ideas get more cashed out / more operationalized at the Leaders Forum? Did organizations come away with specific next actions they will be taking towards realizing these ideas?

aarongertler @ 2019-11-22T01:59 (+5)

Those were excerpts of notes taken during a longer and more detailed discussion, for which I wasn't in the room (since I was there for operations, not to take part in sessions).

My impression is that the discussion involved some amount of "cashing out and operationalization". The end-of-event survey given to participants included a question: "Are there any actions you plan to take as a result of the Forum?" and several answers clearly hearkened back to these discussion topics. That survey was separate from the Priorities survey and participants took it without the expectation that answers would be shared, so I won't go into specifics.

(I've shared this comment thread with a few attendees to whom I spoke about these issues, in case they want to say more.)

Benjamin_Todd @ 2019-12-06T01:15 (+3)

Ultimately the operationalising needs to be done by the organisations & community leaders themselves, when they do their own planning, given the details of how they interact with the community, and while balancing the considerations raised at the leaders forum against their other priorities.

RichardAnnilo @ 2022-05-02T16:31 (+6)

Is there a more up to date version of this somewhere?

NunoSempere @ 2019-12-09T14:00 (+4)

Hey, regarding your question "What types of talent do you currently think [your organization // EA as a whole] will need more of over the next 5 years? (Pick up to 6)", I think you might want to word it somewhat differently, and perhaps disambiguate between:

- "On a scale of 0-10, how much [X] talent will [EA/your organization] need over the next 5 years?"

- "On a scale of 0-10, how much will the need for [X] talent in [EA/your organization] increase over the next 5 years?"

This mainly has the advantage of allowing for more granular comparison. For example, maybe management is always a solid 10, whereas government expertise is a solid 7, but both always fall in the top 6, and the difference might sometimes be important. I also think that the second wording is somewhat easier to read / requires less cognitive labor.

Two book recommendations are The Power of Survey Design: A User's Guide for Managing Surveys, Interpreting Results, and Influencing Respondents and Improving Survey Questions: Design and Evaluation. I should have a short review/summary and some checklists somewhere, if you're interested.

Yarrow @ 2025-05-01T17:29 (+1)

Wow. This is my first time reading this post.

The last section — "Problems with the EA community/movement" — was surprising. I was surprised that people in leadership positions at EA or EA-related organizations seemed to feel dismayed and irritated by the EA Forum and the online EA community for many of the same reasons I do.

I guess I feel relieved that they also see these problems, but, on the other hand, this survey is from 2019. I wonder how many respondents since left EA because these things put them off too much — or other things, like the sexual harassment, the racism, etc. If they stayed in EA, I wonder why I don’t get a sense of any leaders in the EA community/movement trying to address these problems.

RafaDib @ 2024-08-06T18:44 (+1)

Is there an updated version of this (or a similar) survey?

OllieBase @ 2024-08-07T13:01 (+2)

A similar one was run last year, here :)

edoarad @ 2019-11-12T05:31 (+1)

From Bottlenecks to EA impact

More dedicated people (e.g. people working at EA orgs, researching AI safety/biosecurity/economics, giving over $1m/year) converted from moderate engagement due to better advanced engagement (e.g. more in-depth discussions about the pros and cons of AI) (note: in the future, we’ll probably avoid giving specific cause areas in our examples)

Is there a consensus that the conversion from moderate engagement should be through better advanced engagement?

aarongertler @ 2019-11-21T15:00 (+2)

I'm not sure how else a person might be "converted from moderate engagement", unless they choose to dive deeper on their own. Is there some alternative conversion method you were thinking of?

edoarad @ 2019-11-21T16:55 (+1)

Well, I imagine that many people are thinking "EA is great, I wish I was a more dedicated person, but I currently need to do X or learn Y or get Z". For example, I'd assume that if there were ten times as many EA orgs, they would mostly be filled by people who were only moderately engaged. Or perhaps we should just wait for them to gain enough career capital.

aarongertler @ 2019-11-22T02:04 (+2)

I read your reply as being about the possibility of conversion through additional opportunity (e.g. more job openings). The survey didn't go into that option, but personally, I'd expect that most attendees would classify new positions as being in this category (e.g. the Research Scholars Programme takes people with some topic-level expertise and helps them boost their research skills and dedication by funding them to work on an EA issue for several months).

edoarad @ 2019-11-12T05:17 (+1)

I'm a bit confused about the "Known Causes" part.

What is approximately the current distribution of resources? Was it displayed/known to respondents?

Even though someone is working within one cause, there are many practical reasons to use a "portfolio" approach (say, risk aversion, moral trade, diminishing marginal returns, making the EA brand more inclusive to people and ideas). It seems that each different approach will lend itself to a different portfolio. I'm not sure how to think about the data here, or what the average means.

aarongertler @ 2019-11-21T15:12 (+4)

We didn't attempt to estimate the current distribution of resources before sending out the survey.

I talk about the limitations of the prioritization data in my reply to another comment here. In short: I don't think the averages are too meaningful, but we wanted to share the data we had collected; the data is also a useful "sanity check" on some commonplace beliefs about EA (e.g. testing whether leaders really care about longtermism to the exclusion of all else).