EA Survey 2020: Demographics

By David_Moss @ 2021-05-20T14:10 (+107)

EA Survey 2020: Community Demographics

Summary

- We collected 2,166 valid responses from EAs in the survey

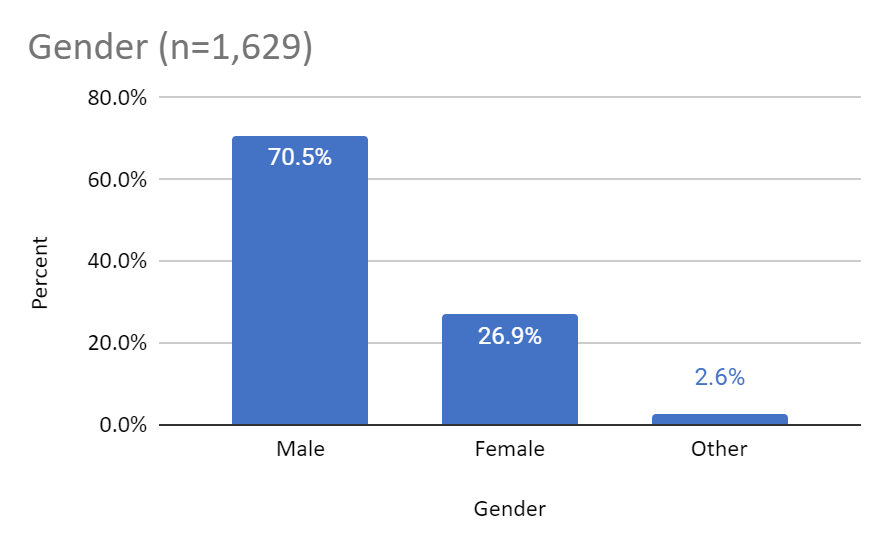

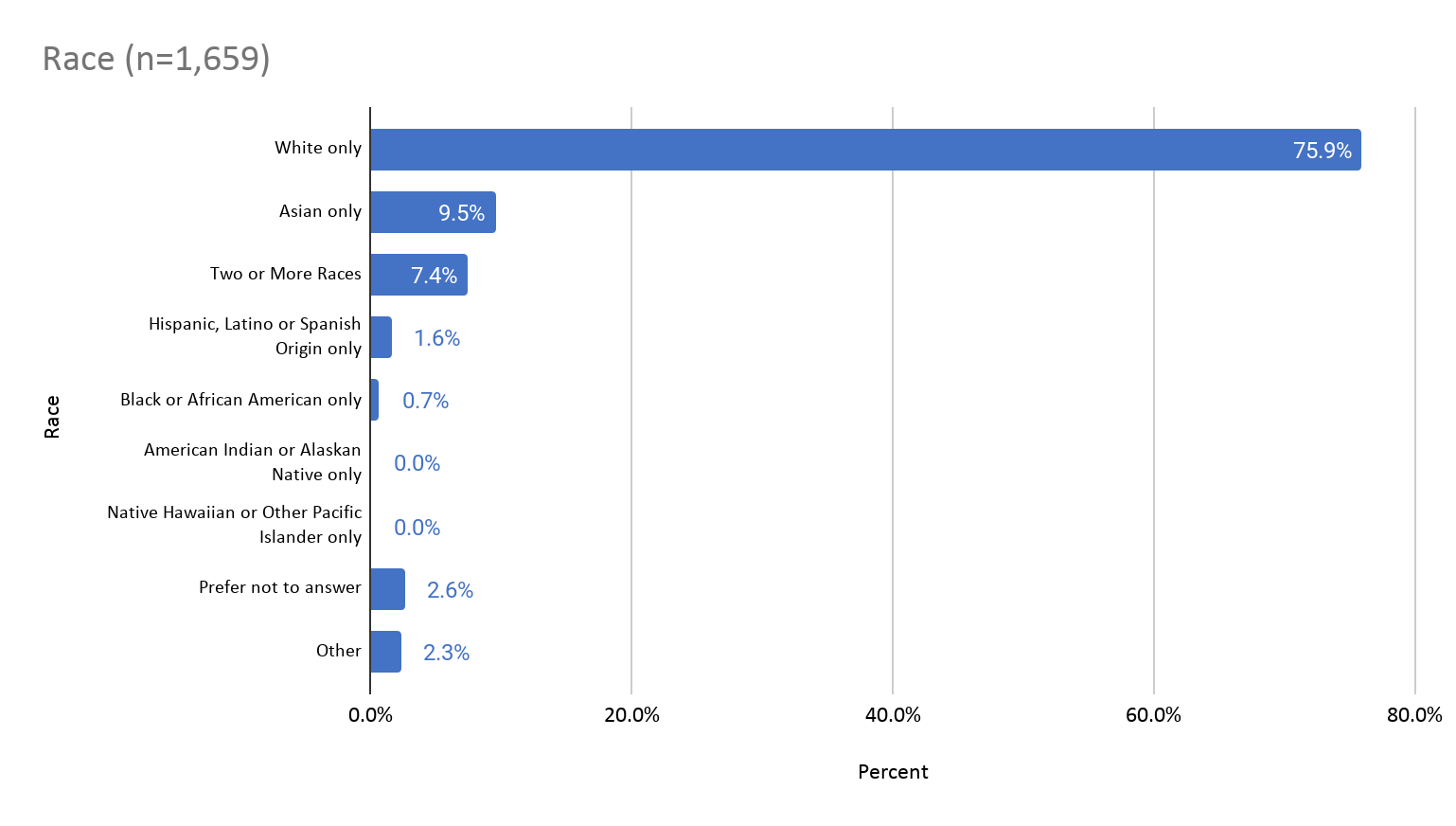

- The composition of the EA community remains similar to last year, in terms of age (82% aged 34 or younger), race (76% white) and gender (71% male)

- The median age when EAs reported getting involved in the community was 24

- More than two thirds (69%) of our sample were non-students and <15% were undergraduates.

- Roughly equal proportions of non-student EAs report being in for-profit (earning to give), for-profit (not earning to give), non-profit (EA), non-profit (not EA), government, and think tank/lobbying/advocacy careers.

- More respondents seem to be prioritizing career capital than immediate impact

Introduction

The demographic composition of the EA community has been much discussed. In this post we report on the composition of the EA Survey sample. In future posts we will investigate whether these factors influence different outcomes such as cause selection or experience of the EA community.

This year, we removed questions about religion, politics, and diet to make room for an additional set of requested questions. However, we intend to re-include these next year and at least every other year going forward.

The normal caveats about not knowing the degree to which the EA Survey sample is representative of the broader EA population apply. Ultimately, since no one knows what the true composition of the EA community is, it is impossible for us to know to what degree the EA Survey is representative. We discuss these issues further here in a hosted dynamic document (with data, code, and commentary). In that document we investigate how key characteristics of our sample are sensitive to which source (e.g. EA Newsletter, Facebook link) referred participants to the EA Survey and to measures that seem like they may be proxies for participation rates (such as willingness to be contacted about future surveys).

Note: full size versions of graphs can be viewed by opening them in a new tab.

Basic Demographics

Gender

The proportions of respondents who were male, female or other were very similar (to within a percentage point) to last year.

Race/ethnicity

Our race/ethnicity question allowed respondents to pick multiple categories. We re-coded respondents based on whether they selected only one category or multiple. Around 83% of respondents selected ‘White’ (compared to 86.9% last year), but some of these respondents also selected other categories. Counting only those who selected ‘White’ alone, around 75.9% of the sample selected only white.

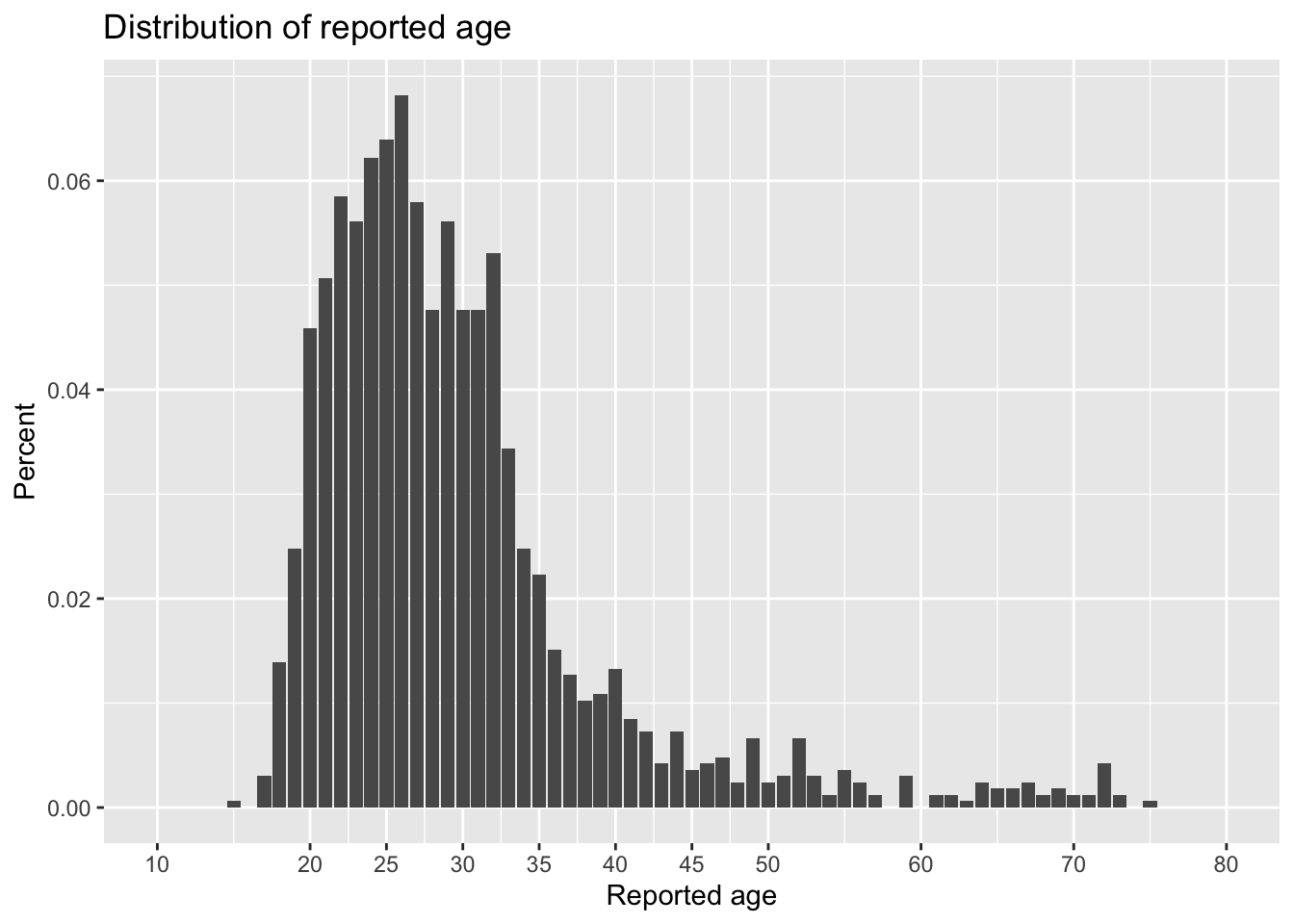
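The difference between "selected White" and "selected only White" can be illustrated with a small Python sketch. It assumes each respondent's answer is stored as a set of selected category labels; the function names, category labels and toy responses are hypothetical and are not the survey's actual data or analysis code.

```python
from collections import Counter


def recode_race(selected: set[str]) -> str:
    """Collapse a multi-select answer into a single analysis category (hypothetical scheme)."""
    if not selected:
        return "No answer"
    if len(selected) > 1:
        return "Multiple"
    # Exactly one category selected: keep it as-is.
    return next(iter(selected))


def share_selected_any(responses, category):
    """Share of respondents who ticked `category`, alone or alongside others."""
    return sum(category in r for r in responses) / len(responses)


def share_selected_only(responses, category):
    """Share of respondents whose only selection was `category`."""
    return sum(r == {category} for r in responses) / len(responses)


# Hypothetical toy responses, not EA Survey data.
toy = [{"White"}, {"White", "Hispanic"}, {"Asian"}, {"White"}]
print(share_selected_any(toy, "White"))   # 0.75
print(share_selected_only(toy, "White"))  # 0.5
print(Counter(recode_race(r) for r in toy))
```

The "any" share is always at least as large as the "only" share, which is why the 83% and 75.9% figures above can both be correct at once.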

Age

The EA community remains disproportionately young, with a median age of 27 (mean 29). Moreover, there is an extremely sharp dropoff in the frequency of respondents from around age 35. Around 80% of our respondents are younger than this age, which means that there are fewer respondents in our sample older than 34 than there are women or non-white respondents.

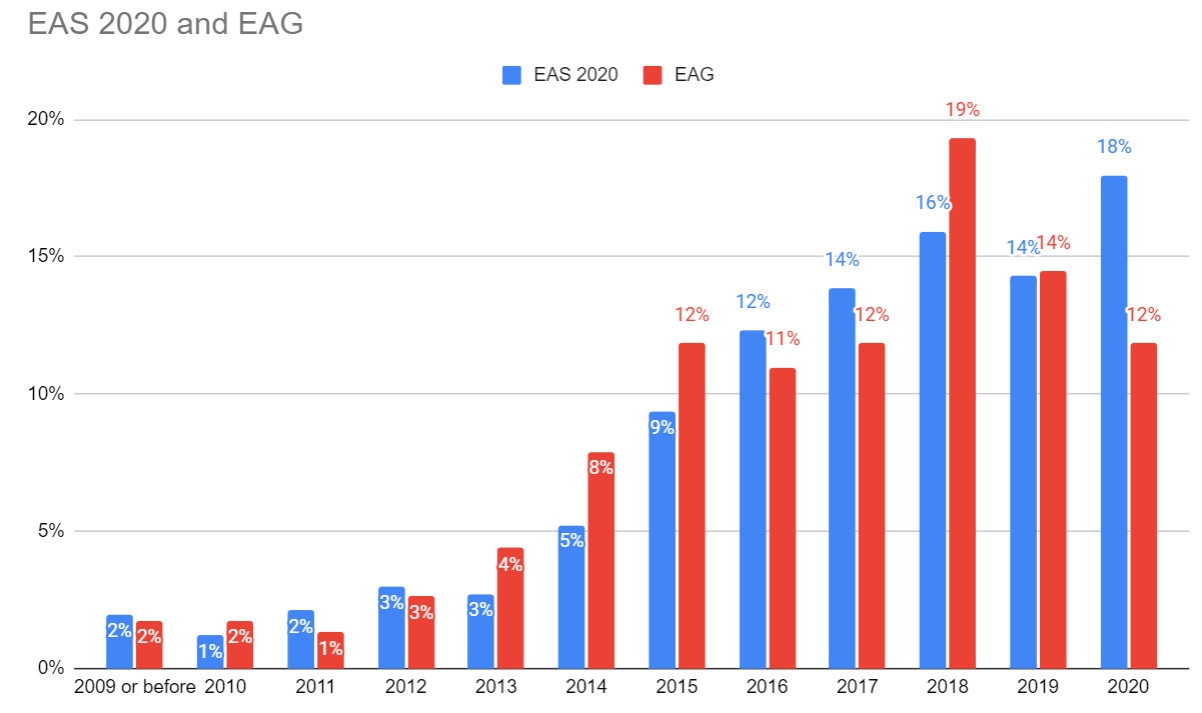

Changes in Age over Time

That said, as we noted last year, the average age of EA Survey respondents appears to have increased fairly steadily over time. This makes sense because EAs (like non-EAs) get one year older annually. However, this effect is counterbalanced by new EAs joining the movement each year, and these new members being younger than average. In this year’s survey, the average age of respondents was slightly lower than last year (though still higher than in 2014-2015).

Age of First Getting Involved in EA

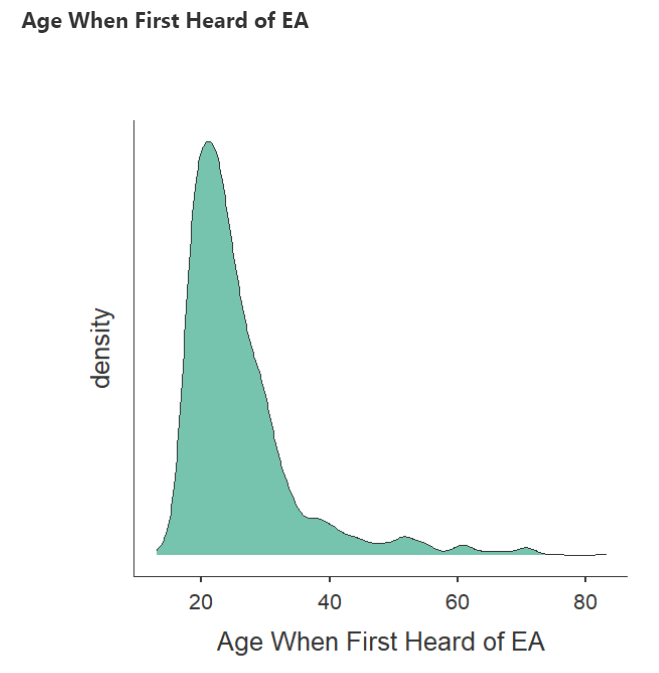

The age at which people typically first get involved in EA has previously been discussed (e.g. here).

The median age when respondents reported having first gotten involved in EA was 24 (with a slightly higher mean of 26). Notably, while young, this is slightly older than the typical age of an undergraduate student (undergraduate study is often thought of as a common time for getting into EA).

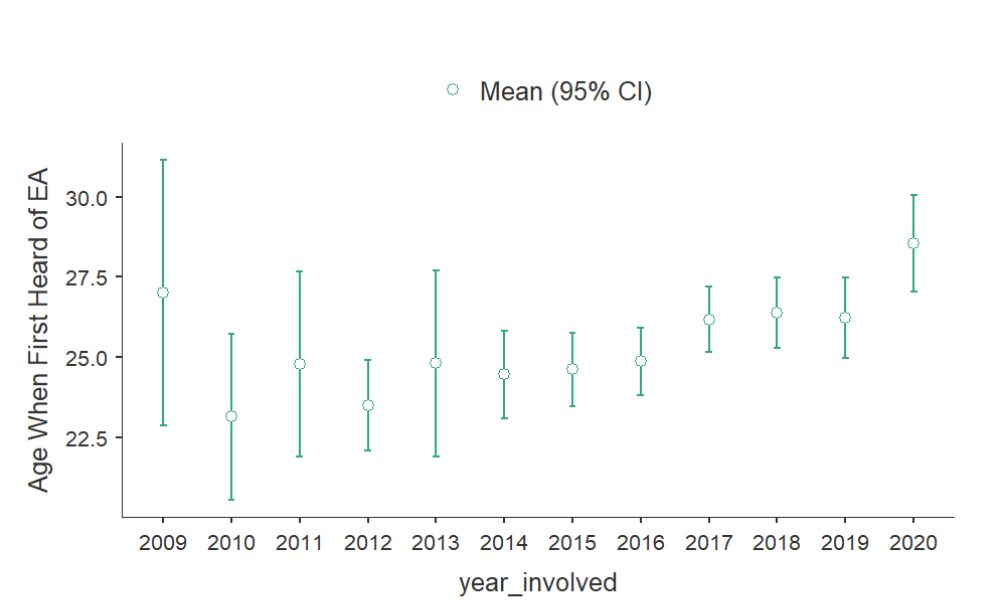

We also observe that the average age when people report getting involved in EA appears to increase across cohorts in our data, with people who got involved in more recent years reporting having heard about EA at an older age.

That said, we speculate that this might be explained by differential attrition. We have previously found that the respondents with the lowest self-reported engagement are, on average, considerably older (a finding which we replicated in the EAS 2020 sample). This could reflect, and help explain, a tendency for older people in the EA community to be more likely to drop out over time, which would also produce the observed pattern of respondents from more recent cohorts having gotten involved at an older age. This tendency could in turn be explained by the EA community being overwhelmingly composed of young people, which may make older individuals more likely to disengage. Of course, it could also be that some other factor both makes older people less likely to join EA (leading to the community being overwhelmingly young) and makes older individuals more likely to drop out.
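To see how differential attrition alone could produce this cohort pattern, here is a toy expected-value calculation in Python under loudly hypothetical assumptions: every cohort recruits exactly the same mix of ages, and people who join older are less likely to remain in the community each year. The ages, retention rates and function names are invented for illustration and are not estimates from the EA Survey.

```python
def surviving_mean_join_age(join_ages, years_since_joining, annual_retention):
    """Expected mean age-at-joining among members still present at survey time.

    join_ages: ages at joining for equal-sized groups within one cohort.
    annual_retention: function mapping age-at-joining to the (hypothetical)
        probability of staying in the community for one more year.
    """
    weights = [annual_retention(age) ** years_since_joining for age in join_ages]
    return sum(w * a for w, a in zip(weights, join_ages)) / sum(weights)


# Hypothetical assumptions: identical recruitment in every cohort,
# and older joiners are somewhat less likely to stay each year.
JOIN_AGES = [20, 24, 28, 35, 45]


def retention(age):
    return 0.95 if age < 30 else 0.80


SURVEY_YEAR = 2020
for cohort in range(2012, 2021, 2):
    years = SURVEY_YEAR - cohort
    print(cohort, round(surviving_mean_join_age(JOIN_AGES, years, retention), 1))
```

Even though recruitment is identical across cohorts by construction, the earlier cohorts end up with a younger average age at joining among those still around to be surveyed, so a survey would see recent cohorts reporting older ages of first involvement.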

It is also possible that, in more recent years, EA has actually begun to recruit people who are older on average. This could be explained, for example, by the fact that the average age of people involved in EA is gradually increasing, making older people more likely to get involved. Note that this need not be explained by ‘affinity effects’ (i.e. older people feeling more welcomed by and interested in the community because people in the community are older); it could instead reflect existing EAs recruiting progressively older people through their personal networks (given that older individuals’ personal networks may likewise be older).

Sexual orientation

This year, for the first time, we included an open-comment question about sexual orientation, which had been requested in order to examine the EA community’s level of diversity in this area.

Respondents were coded as straight/heterosexual, non-straight/non-heterosexual or unclear (this included responses which were uninterpretable, responses which stated they did not know etc.). We based our coding categories around the most commonly recurring response types. Overall, 73.4% of responses were coded as straight/heterosexual, while 19.9% were coded as non-straight (6.6% were unclear).

We observed extremely strong divergence across gender categories. 76.9% of responses from male participants identified as straight/heterosexual, while only 48.6% of female responses identified as such.

“First Generation” Students

This year, we were asked to include a question about whether respondents were in the first generation of their family to attend university. 17.2% of respondents reported being first generation students.

Unfortunately, as the percentage of first generation students in the broader population varies across countries and has changed dramatically over time (reducing in more recent years), these numbers are not straightforward to interpret. 11.5% of our respondents currently living in the US (who, of course, may not have attended university in the US) reported being first generation students, compared to 20.5% of UK resident respondents. In the US, in 2011-2012, 33% of students enrolled in postsecondary education had parents who had not attended college. As the number of first generation students in the US has been decreasing with time, however, these numbers have plausibly declined further since 2011-2012.

We would also expect our sample to contain a disproportionately low number of first generation students, because our sample contains a disproportionately high number of students from elite higher education institutions, which tend to have lower numbers of first generation students (for example, Harvard appears to have only ~15% first generation students).

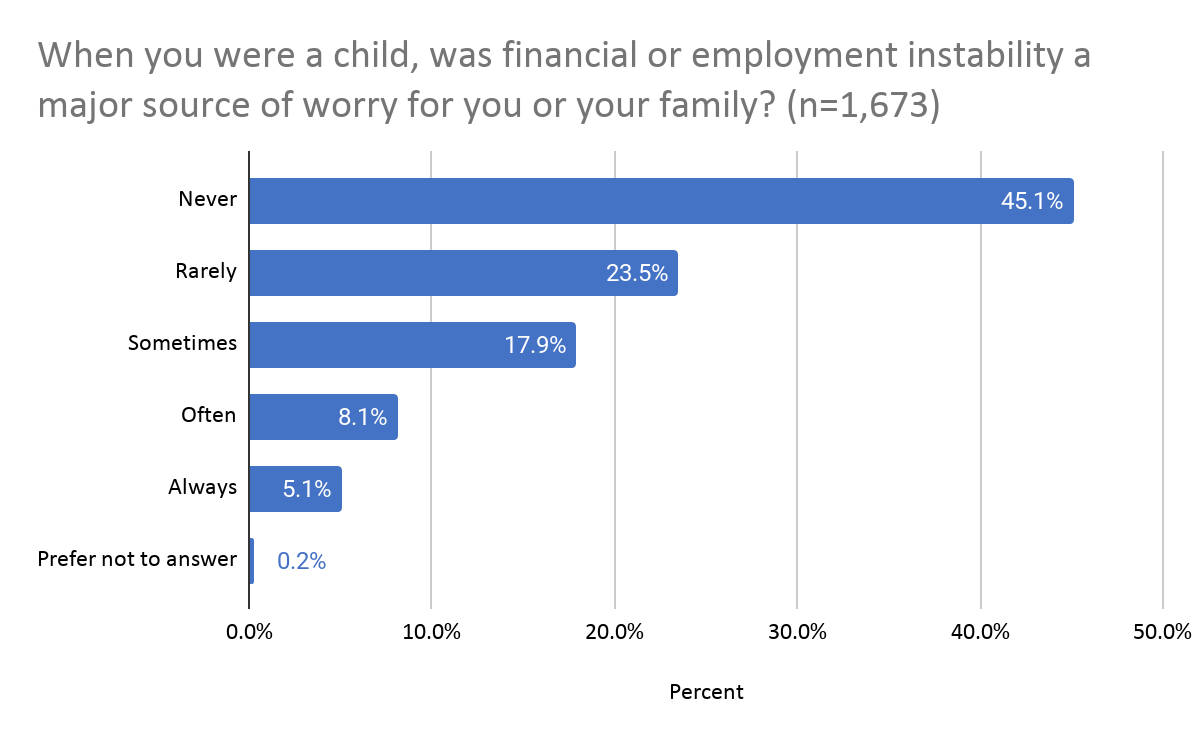

Financial or employment instability

We were also asked to include a question about whether financial or employment instability was a major source of worry for respondents or their family when they were a child. As this is not an established question that is employed more broadly (in contrast to standard childhood SES or early life stress measures), we can’t make a rigorous comparison between the EA Survey sample and the wider population. We can observe, however, that only a small minority of respondents reported these factors often or always being a worry for them. Still, these results are hard to interpret, since they likely track varying elements of people’s objective SES, subjective SES and their propensity for anxiety or worry.

Careers and Education

Employment/student status

We cut a number of questions about careers from this year’s EA Survey, in order to accommodate requests for other new questions. As such, we have added results about careers back into this general demographic post, rather than releasing them in a separate careers and skills post.

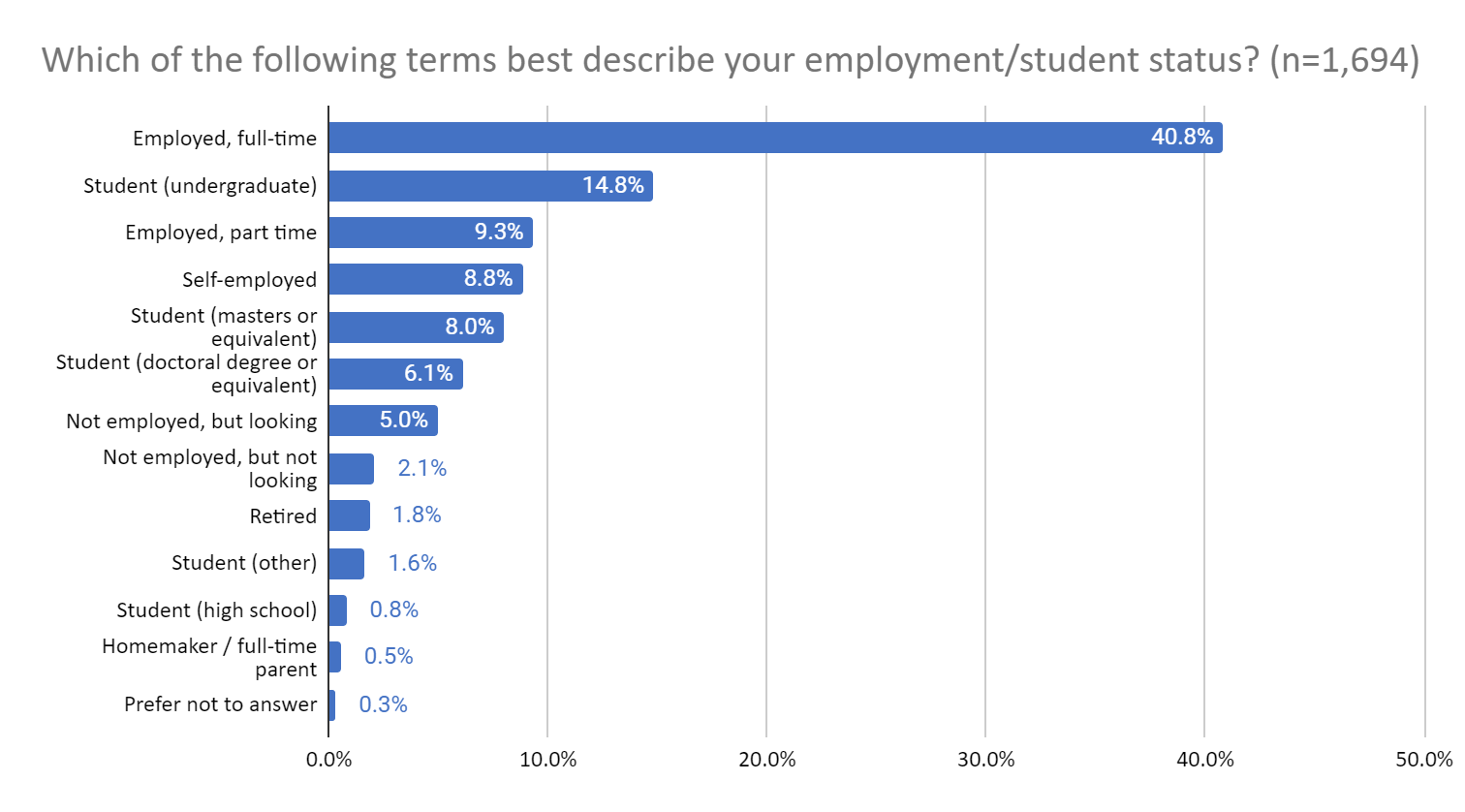

The majority of our respondents (68.7%) were non-students, while a little under one third (31.3%) were students.

A plurality were employed full-time (40.8%), many more than the next largest category, undergraduate students, who comprised less than 15% of our sample.

Last year we observed that our sample was extremely highly educated, with >45% having a postgraduate degree (and plausibly a higher percentage of people who would go on to have a postgraduate degree). Unfortunately, as we had to remove the question about respondents’ level of education, we cannot comment on that directly here. However, we can still observe that, among current students, a large proportion (45%) are currently studying for postgraduate degrees.

Current career

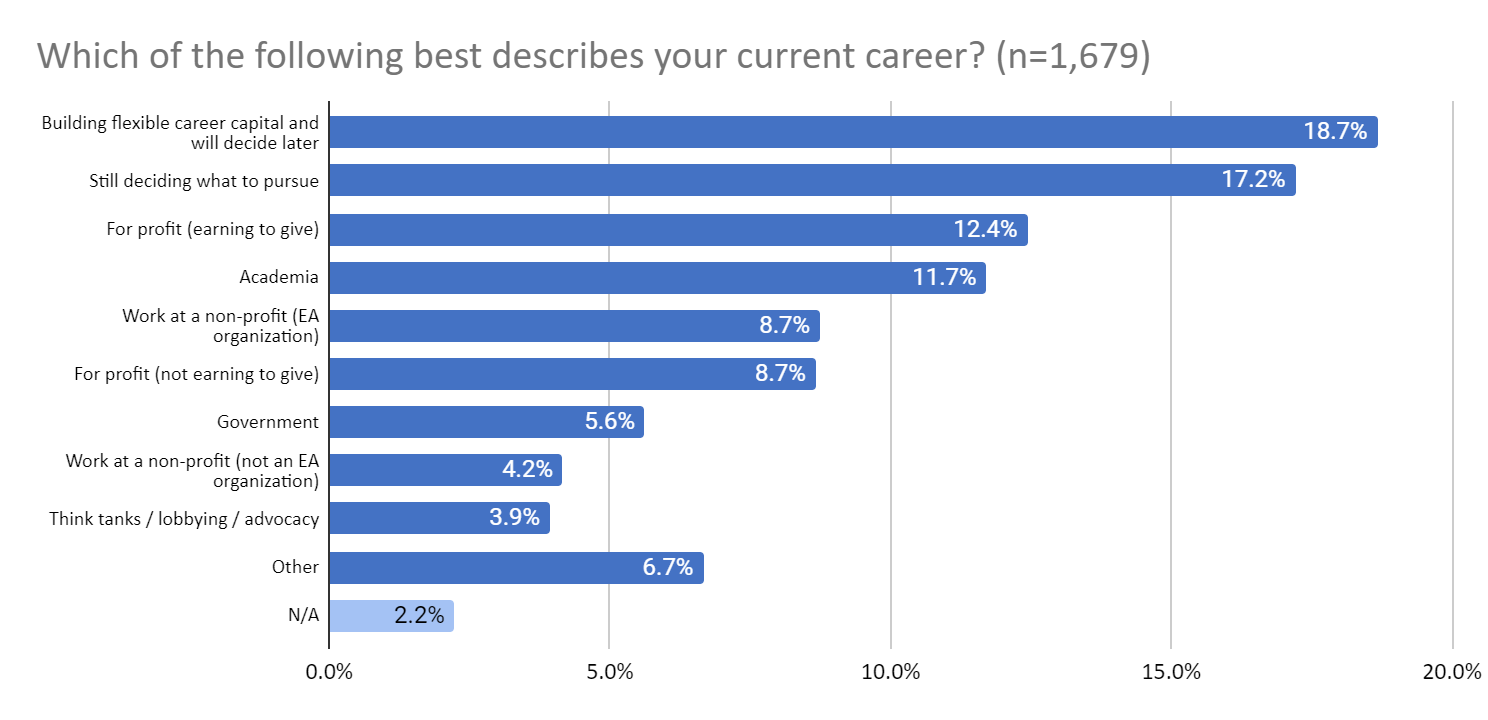

Last year we asked about respondents’ career path and skills and experience, and in previous years we asked more fine-grained questions about the kind of job or industry people work in. This year, both sets of questions were cut to make room for new questions; instead, we asked about respondents’ current career. Respondents could select multiple options.

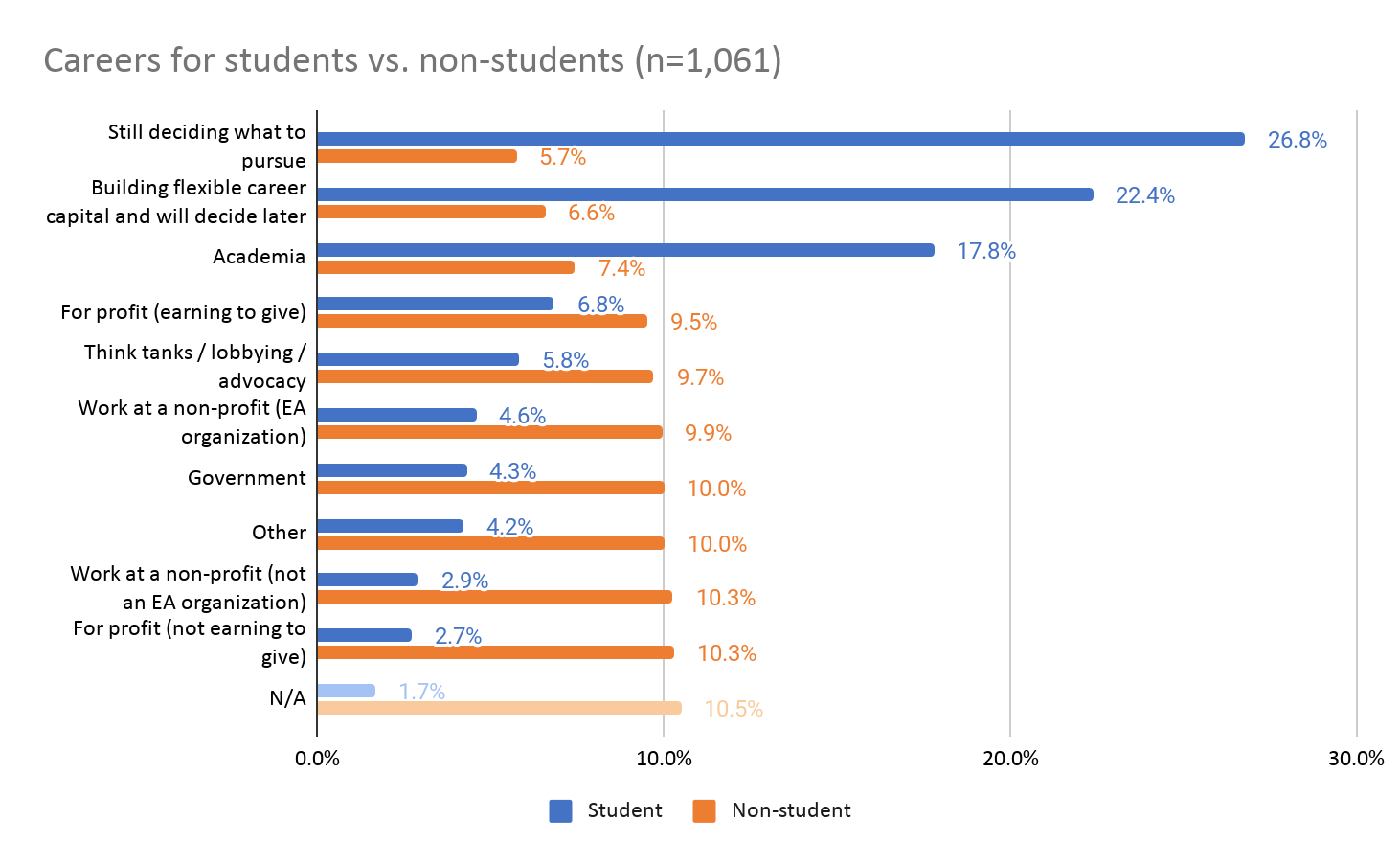

In the sample as a whole, the most common responses by a long way were ‘building flexible career capital’ and ‘still deciding what to pursue’. However, when we look at the responses of non-students and students separately, we see that among non-students responses are split very close to equally among different careers, with fewer selecting ‘building flexible career capital’ or ‘still deciding what to pursue’.

On its face, this would seem to suggest that no broad type of career dominates within the EA community. Of course, this in itself doubtless means that certain broad career types (e.g. academia, non-profits) are much more common in EA than in the general population. And more specific career types (e.g. software engineering) are likely still over-represented.

Career type across groups

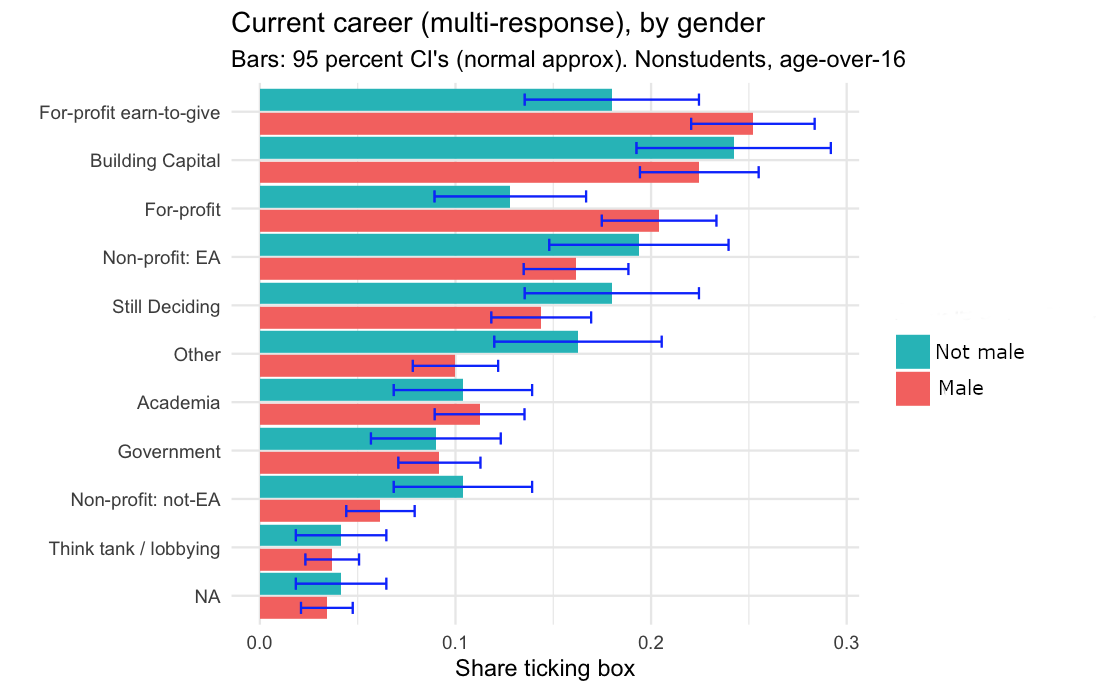

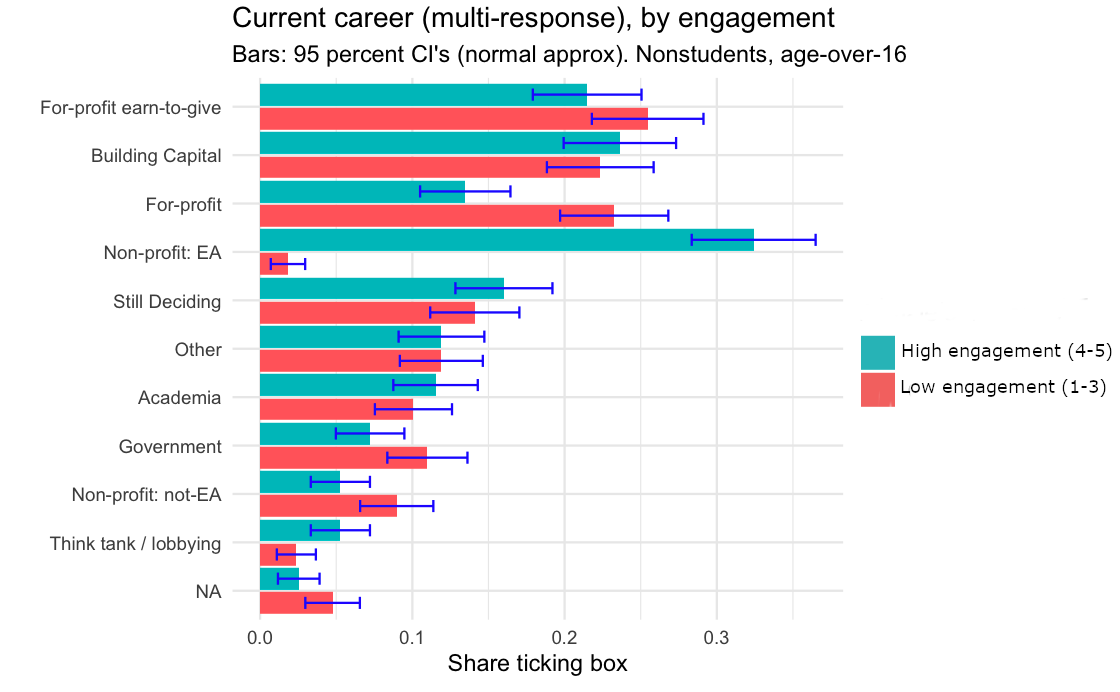

We examined differences in the proportions of (non-student) respondents aged over 16 in each type of career, based on gender, race and level of self-reported engagement in EA.

Male vs Female

A significantly higher percentage of male respondents than female respondents appear to be in for-profit (not earning to give) careers.

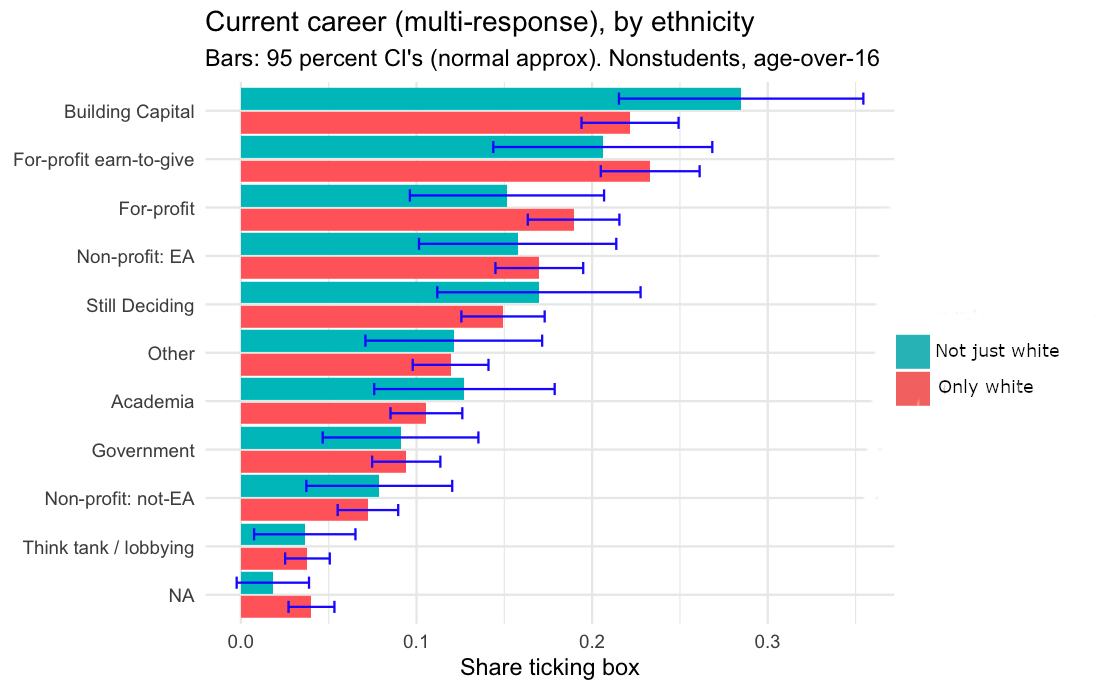
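The post does not specify which test lies behind "significantly" in the gender comparison above. As one common option, a two-proportion z-test can be sketched with Python's standard library. This is an assumption about method, not a description of the analysis actually run (which may have used, for example, a chi-squared test or a regression model), and the counts in the example are hypothetical placeholders rather than EA Survey data.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z-test for a difference between two independent proportions.

    x1 / n1: e.g. male non-students in a given career type / all male non-students.
    x2 / n2: the same for female non-students.
    """
    p1, p2 = x1 / n1, x2 / n2
    # Under the null hypothesis both groups share one pooled proportion.
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts for illustration only (not EA Survey data).
z, p = two_proportion_z_test(x1=120, n1=600, x2=30, n2=250)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these invented counts (20% vs 12%), the test gives z of roughly 2.8 and a p-value below 0.01. A real comparison would use the observed counts for each career type, and testing many career categories at once raises the usual multiple-comparison concerns.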

Race

We also compared the proportions in different career types for respondents who selected only the ‘white’ category, in contrast to those who selected any categories other than white. (Again, we include only non-students over 16).

We found no significant differences.

Level of Engagement

We also compared EAs lower in self-reported engagement to those who were highest in it.

Predictably, almost all those reporting specifically EA non-profit work were in the higher engagement groups (working in an EA org is specifically cited in the engagement scale as an example of being very highly engaged).

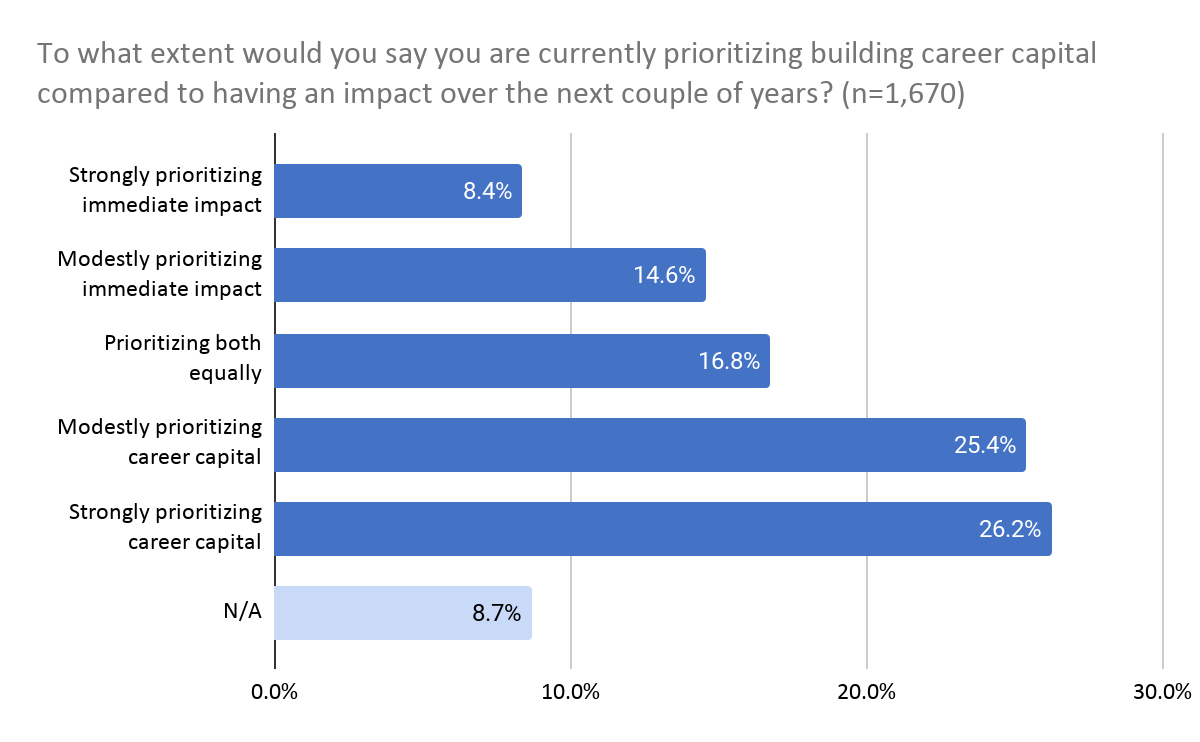

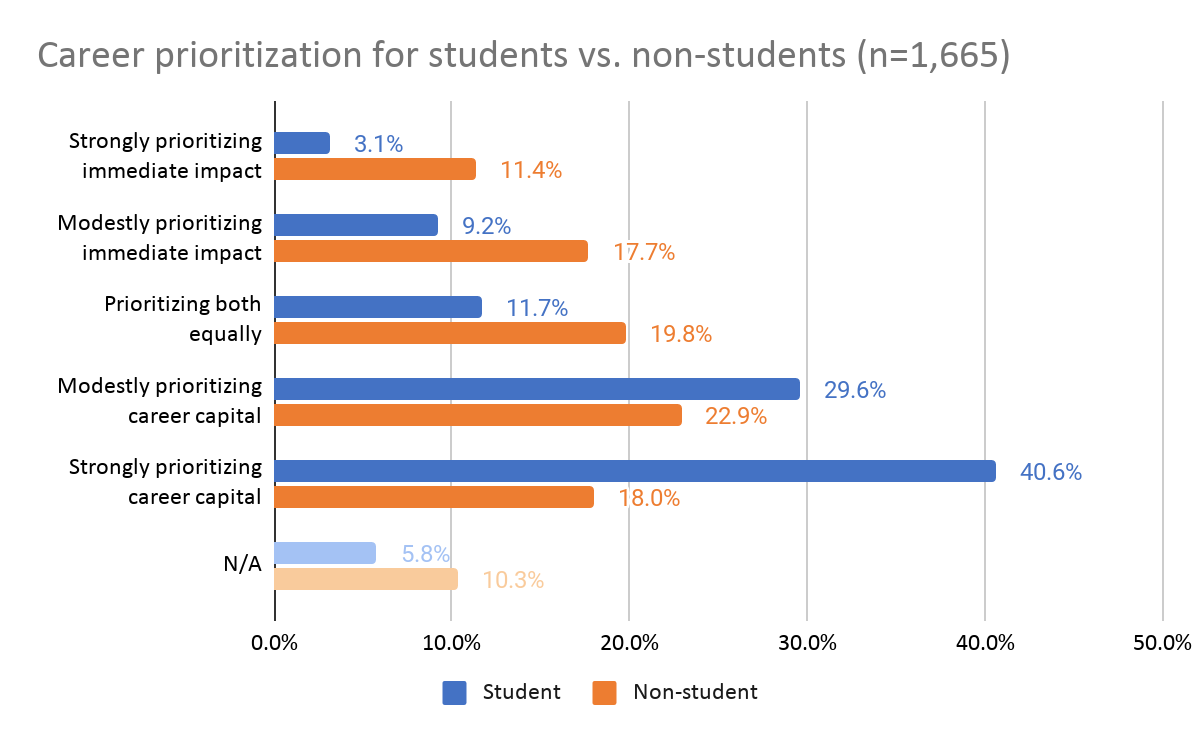

Career capital vs immediate impact

We also asked directly to what extent respondents were currently prioritizing career capital or prioritizing having an impact in the next couple of years.

As before, while we saw a strong tendency towards prioritizing career capital in the sample as a whole, when we examined non-students specifically we saw a much more even split (albeit leaning towards prioritizing career capital).

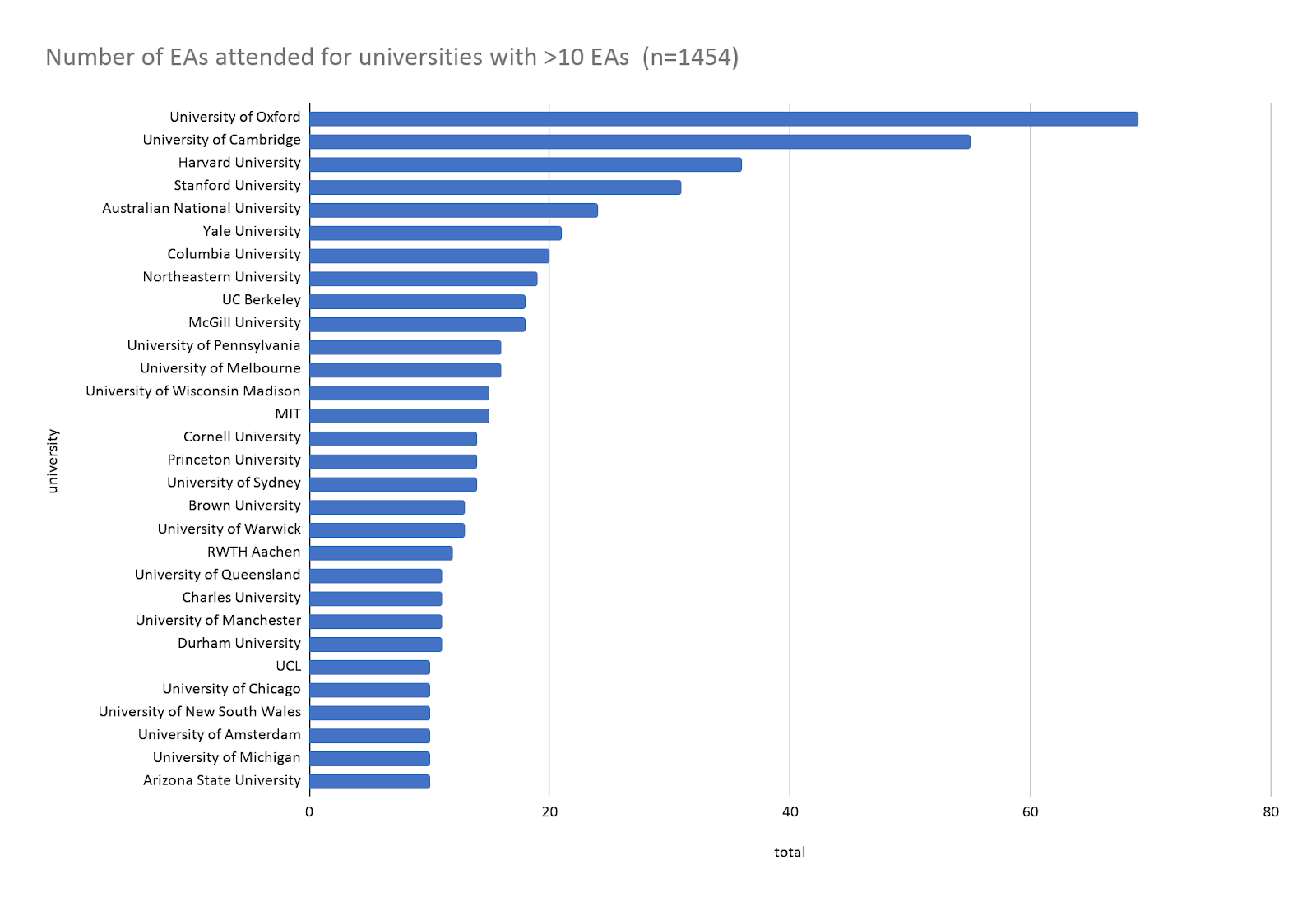

Universities attended

Last year we were asked to include a question about which universities respondents attended for their Associate’s and/or Bachelor’s degree, while this year we asked respondents to list any universities they had attended.

As was the case last year, we found that a disproportionately large number of respondents attended elite universities. 8.7% of respondents had attended the University of Oxford or the University of Cambridge (slightly higher than the 7.7% who reported attending one of these institutions for their Associate’s or Bachelor’s degree last year).

Conclusion

We will explore the relationship between some of these variables and other measures on the EA Survey in future posts.

Credits

The annual EA Survey is a project of Rethink Priorities. This post was written by David Moss, with contributions from Jacob Schmiess and David Reinstein. Thanks to Peter Hurford, Neil Dullaghan, Jason Schukraft, David Bernard, Joan Gass and Ben West for comments.

We would also like to express our appreciation to the Centre for Effective Altruism for supporting our work. Thanks also to everyone who took and shared the survey.

If you like our work, please consider subscribing to our newsletter. You can see all our work to date here.

jackmalde @ 2021-05-13T07:26 (+16)

We observed extremely strong divergence across gender categories. 76.9% of responses from male participants identified as straight/heterosexual, while only 48.6% of female responses identified as such.

The majority of females don't identify as heterosexual? Am I the only one who finds this super interesting? I mean in the UK around 2% of females in the wider population identify as LGB.

Even the male heterosexual figure is surprisingly low. Any sociologists or others want to chime in here?

David_Moss @ 2021-05-13T08:19 (+9)

The majority of females don't identify as heterosexual?

That's not quite right because some responses were coded as "unclear": around 33% of female responses were coded as not heterosexual, which is almost 3x the figure for male respondents.

Both those percentages are still relatively high, of course. Unfortunately, I don't think it's clear what to make of them, due to a number of factors: i) results for general population surveys are inconsistent across different surveys, ii) results seem to vary a lot by age (for example, see here) and likely by other demographic factors (e.g. how liberal or educated the population is), which I would expect to raise EA numbers, iii) most surveys don't use the question format that we were asked to use (people writing in an unguided self-description), making comparison difficult, and iv) as a result of that format, the results for our survey are very hard to interpret, with large percentages of responses being explicitly coded as unclassifiable.

As such, while interesting, I think these results are hard to make anything of with any degree of confidence, which is why we haven't looked into them in any detail.

jackmalde @ 2021-05-13T16:33 (+4)

Thanks. Any particular reason why you decided to do unguided self-description?

You could include the regular options and an "other (please specify)" option too. That might give people choice, reduce time required for analysis, and make comparisons to general population surveys easier.

David_Moss @ 2021-05-13T18:50 (+2)

This was a specific question and question format that it was requested we include. I wouldn't like to speculate about the rationale, but I discuss some of the pros and cons associated with each style of question in general here.

reallyeli @ 2021-05-13T08:32 (+3)

I was surprised to see that this Gallup poll found no difference between college graduates and college nongraduates (in the US).

reallyeli @ 2021-05-13T08:28 (+4)

Younger people and more liberal people are much more likely to identify as not-straight, and EAs are generally young and liberal. I wonder how far this gets you to explaining this difference, which does need a lot of explaining since it's so big. Some stats on this (in the US).

david_reinstein @ 2021-05-15T17:44 (+2)

I was also surprised, but obviously we are far from a random sample of the population: there is a very unusual 'selection' process to

- know about EA

- identify with EA

- take the survey

E.g. (and it's not a completely fair analogy), about 30% of 2019 respondents said they were vegan vs. about 1-3% of comparable populations.

A perhaps better analogy: looking quickly at the 2018-2019 data, roughly half of respondents studied computer science. This compares to about 5% of US degrees granted (10% if we include all engineering degrees).

But is this worth pursuing further? Should we dig into surprising ways the EA/EA-survey population differs from the general population?

jackmalde @ 2021-05-15T21:08 (+9)

I would absolutely expect EAs to differ in various ways from the general population. The fact that a greater proportion of EAs are vegan is totally expected, and I can understand the computer science stat as well given how important AI is in EA at the moment.

However when it comes to sexuality it isn't clear to me why the EA population should differ. It may not be very important to understand why, but then again the reason why could be quite interesting and help us understand what draws people to EA in the first place. For example perhaps LGBTQ+ people are more prone to activism/trying to improve the world because they find themselves to be discriminated against, and this means they are more open to EA. If so, this might indicate that outreach to existing activists might be high value. Of course this is complete conjecture and I'm not actually sure if it's worth digging further (I asked the question mostly out of curiosity).

David_Moss @ 2021-05-16T07:56 (+9)

I agree that there's not a direct explanation of why we would expect this difference in the EA community, unlike in the case of veganism and computer science.

I also agree that properties of our sampling itself don't seem to offer good explanations of these results (although of course we can't rule this out). This would just push the explanation back a level, and it seems even harder to explain why we'd heavily oversample nonheterosexual EAs (and especially female nonheterosexual EAs relative to female heterosexual EAs) than to explain why we might observe these differences in the EA community.

That said, we do have good reason to think that the EA community (given its other characteristics) would have higher percentages of nonheterosexual responses. Elite colleges in general also have substantially higher rates than the general population (it looks like around 15% at Harvard and Yale), and of course the EA community contains a very disproportionate percentage of elite college graduates. Also, although we used slightly different questions, it seems like the EA community may be more liberal than elite colleges, and we might expect higher self-reported nonheterosexuality in more liberal populations (comparing to Harvard and Yale again, they have around 12% somewhat/very conservative, while we have around 3% centre right or right; we have more 'libertarians', but if these are mostly socially liberal then the same might apply).

As I noted before though, I think that this is probably just a result of the particular question format used. I would expect more nonheterosexual responses where people can write in a free response compared to where they have to select either heterosexual or some other fixed category.

david_reinstein @ 2021-05-19T22:42 (+1)

Let's presume that the 'share non-straight' is a robust empirical finding and not an artifact of sample selection, of how the question was asked, of nonresponse, etc. (We could dig into this further if it merited the effort)...

It is indeed somewhat surprising, but I am not too surprised, as I expect a group that is very different from the general population in some ways may well be very different in other ways, and we may not always have a clear story for why. If we did want to look into it further, we might look into what share of the vegan population, or of the 'computer science population', in this mainly very young age group, is not straight-identified (of course, those numbers may also be very difficult to gather, particularly because of the difficulty of getting a representative sample of small populations, as I discuss here).

This may be very interesting from a sociological point of view, but I am not sure it is of first-order importance for us right now. That said, if we have time we may be able to get back to it.

BrianTan @ 2021-05-13T07:55 (+2)

Yeah I find this interesting and surprising too.

Aaron Gertler @ 2021-05-12T09:23 (+16)

It looks like the number of survey respondents dropped by about 14% from last year. Before someone else asks whether this represents a shrinking movement, I'd like to share my view:

- This was a weird year for getting people to participate in things. People were spending more time online, but I'd guess they also had less energy/drive to do non-fun things for altruistic reasons, especially if those things weren't related to the pandemic. I suspect that if we were to ask people who ran similar surveys, we'd often see response counts dropping to a similar degree.

- Since this time last year, participation metrics are up across the board for many EA things — more local groups, much more Forum activity, more GiveWell donors, a much faster rate of growth for Giving What We Can, etc.

Hence, I don't see the lower survey response count as a strong sign of movement shrinkage, so much as a sign of general fatigue showing up in the form of survey fatigue. (I think it was shared about as widely as it was last year, but differential sharing might also have mattered if that was a thing.)

Kerry_Vaughan @ 2021-05-12T17:34 (+11)

You probably already agree with this, but I think lower survey participation should make you think it's more likely that the effective altruism community is shrinking than you did before seeing that evidence.

If you as an individual or CEA as an institution have any metrics you track to determine whether effective altruism is growing or shrinking, I'd find it interesting to know more about what they are.

Pablo @ 2021-05-12T18:14 (+6)

He mentioned a number of relevant metrics:

Since this time last year, participation metrics are up across the board for many EA things — more local groups, much more Forum activity, more GiveWell donors, a much faster rate of growth for Giving What We Can, etc.

Kerry_Vaughan @ 2021-05-12T20:59 (+10)

From context, that appears to be an incomplete list of metrics selected as positive counterexamples. I assumed there are others as well.

Aaron Gertler @ 2021-05-24T08:00 (+8)

I do agree that lower survey participation is evidence in favor of a smaller community — I just think it's overwhelmed by other evidence.

The metrics I mentioned were the first that came to mind. Trying to think of more:

From what I've seen at other orgs (GiveDirectly, AMF, EA Funds), donations to big EA charities seem to generally be growing over time (GD is flat, the other two are way up). This isn't the same as "number of people in the EA movement", but in the case of EA Funds, I think "monthly active donors" are quite likely to be people who'd think of themselves in that way.

EA.org activity is also up quite a bit (pageviews up 35% from Jan 1 - May 23, 2021 vs. 2020, avg. time on page also up slightly).

Are there any numbers that especially interest you, which I either haven't mentioned or have mentioned but not given specific data on?

Habryka @ 2021-05-24T08:52 (+29)

Just for the record, I find the evidence that EA is shrinking or stagnating on a substantial number of important dimensions pretty convincing. Relevant metrics include traffic to many EA-adjacent websites, Google trends for many EA-related terms, attendance at many non-student group meetups, total attendance at major EA conferences, number of people filling out the EA survey, and a good amount of community attrition among a lot of core people I care a lot about.

In terms of pure membership, I think EA has probably been pretty stable, with some minor growth. I think it's somewhat more likely than not that average competence in members has been going down, because new members don't seem as good as the members who I've seen leave.

It seems very clear to me that growth is much slower than it was in 2015-2017, based on basically all available metrics. The obvious explanation of "sometime around late 2016 lots of people decided that we should stop pursuing super aggressive growth" seems like a relatively straightforward explanation and explains the data.

Aaron Gertler @ 2021-05-26T02:38 (+16)

Re: web traffic and Google trends — I think Peter Wildeford (née Hurford) is working on an update to his previous post on this. I'll be interested to see what the trends look like over the past two years given all the growth on other fronts. I would see continued decline/stagnation of Google/Wikipedia interest as solid evidence for movement shrinkage/stagnation.

attendance at many non-student group meetups

Do you have data on this across many meetups (or even just a couple of meetups in the Bay)?

I could easily believe this is happening, but I'm not aware of whatever source the claim comes from. (Also reasonable if it comes from e.g. conversations you've had with a bunch of organizers — just curious how you came to think this.)

total attendance at major EA conferences

This seems like much more a function of "how conferences are planned and marketed" than "how many people in the world would want to attend".

In my experience (though I haven't checked this with CEA's events team, so take it with a grain of salt), EA Global conferences have typically targeted certain numbers of attendees rather than aiming for as many people as possible. This breaks down a bit with virtual conferences, since it's easier to "fit" a very large number of people, but I still think the marketing for EAG Virtual 2020 was much less aggressive than the marketing for some of the earliest EAG conferences (and I'd guess that the standards for admission were higher).

If CEA wanted to break the attendance record for EA Global with the SF 2022 conference, I suspect they could do so, but there would be substantial tradeoffs involved (e.g. between size and average conversation quality, or size and the need for more aggressive marketing).

It seems very clear to me that growth is much slower than it was in 2015-2017, based on basically all available metrics. The obvious explanation of "sometime around late 2016 lots of people decided that we should stop pursuing super aggressive growth" seems like a relatively straightforward explanation and explains the data.

I think we basically agree on this — I don't know that I'd say "much", but certainly "slower", and the explanation checks out. But I do think that growth is positive, based on the metrics I've mentioned, and that EA Survey response counts don't mirror that overall trend.

(None of this means that EA is doing anywhere near as well as it could/should be — I don't mean to convey that I think current trends are especially good, or that I agree with any particular decision of the "reduce focus on growth" variety. I think I'm quite a bit more pro-growth than the average person working full-time in "meta-EA", though I haven't surveyed everyone about their opinions and can't say for sure.)

Habryka @ 2021-05-26T05:49 (+7)

I am also definitely interested in Peter Wildeford's new update on that post, and have been awaiting it with great anticipation.

RyanCarey @ 2021-05-26T16:39 (+11)

My personal non-data-driven impression is that things are steady overall. Contracting in SF, steady in NYC and Oxford, growing in London and DC. "Longtermism" growing. Look forward to seeing the data!

vaidehi_agarwalla @ 2021-09-04T06:56 (+6)

Looking farther back at the data, numbers of valid responses from self-identified EAs:

~1200 in 2014, ~2300 in 2015, ~1800 in 2017, and then the numbers discussed here suggest that the number of people sampled has been about the same.

Comments:

- Not sure about the jump from 2014 to 2015, I'd expect some combination of broader outreach of GWWC, maybe some technical issues with the survey data (?) and more awareness of there being an EA Survey in the first place?

- I was surprised that the overall number of responses has not changed significantly from 2015-2017. Perhaps it could be explained by the fact that there was no Survey taken in 2016?

- I would also expect there to be some increase from 2015-2020, even taking into account David's comment on the survey being longer. But there are probably lots of alternative explanations here.

- I was going to try and compare the survey response to the estimated community size since 2014-2015, but realised that there don't seem to be any population estimates aside from the 2019 EA Survey. Are there estimates of population size for earlier years?

David_Moss @ 2021-09-04T08:27 (+4)

Not sure about the jump from 2014 to 2015, I'd expect some combination of broader outreach of GWWC, maybe some technical issues with the survey data (?) and more awareness of there being an EA Survey in the first place?

I think the total number of participants for the first EA Survey (EAS 2014) is basically not comparable to the later EA Surveys. It could be that higher awareness in 2015 than 2014 drives part of this, but there was definitely less distribution for EAS 2014 (it wasn't shared at all by some major orgs). Whenever I am comparing numbers across surveys, I basically don't look at EAS 2014 (which was also substantially different in terms of content).

The highest comparability between surveys is for EAS 2018, 2019 and 2020.

I was surprised that the overall number of responses has not changed significantly from 2015-2017. Perhaps it could be explained by the fact that there was no Survey taken in 2016?

Appearances here are somewhat misleading, because although there was no EA Survey run in 2016, there was actually a similar amount of time in between EAS 2015 and EAS 2017 as any of the other EA Surveys (~15 months). But I do think it's possible that the appearance of skipping a year reduced turnout in EAS 2017.

I was going to try and compare the survey response to the estimated community size since 2014-2015, but realised that there don't seem to be any population estimates aside from the 2019 EA Survey. Are there estimates of population size for earlier years?

We've only attempted this kind of model for EAS 2019 and EAS 2020. To use similar methods for earlier years, we'd need similar historical data to use as a benchmark. EA Forum data from back then may be available, but it may not be comparable in terms of the fraction of the population it's serving as a benchmark for. Back in 2015, the EA Forum was much more 'niche' than it is now (~16% of respondents were members), so we'd be basing our estimates on a niche subgroup, rather than a proxy for highly engaged EAs more broadly.

David_Moss @ 2021-05-12T19:20 (+6)

I think that the reduction in numbers in 2019 and then again in 2020 is quite likely to be explained by fewer people being willing to take the survey due to it having become longer/more demanding since 2018. (I think this change, in 2019, reduced respondents a bit in the 2019 survey and then also made people less willing to take the 2020 survey.)

I think it was shared about as widely as it was last year, but differential sharing might also have mattered if that was a thing.

We can compare data across different referrers (e.g. the EA Newsletter, EA Facebook etc.) and see that there were fairly consistent drops across most referrers, including those that we know shared it no less than they did last year (e.g. the same email being sent out the same number of times), so I don't think this explains it.

We are considering looking into growth and attrition (using cross-year data) more in a future analysis.

Also note that because the drop began in 2019, I don't think this can be attributed to the pandemic.

Kerry_Vaughan @ 2021-05-12T21:10 (+5)

In the world where changes to the survey explain the drop, I'd expect to see a similar number of people click through to the survey (especially in 2019) but a lower completion rate. Do you happen to have data on the completion rate by year?

If the number of people visiting the survey has dropped, then that seems consistent with the hypothesis that the drop is explained by the movement shrinking unless the increased time cost of completing the survey was made very clear upfront in 2019 and 2020.

David_Moss @ 2021-05-13T08:46 (+4)

> If the number of people visiting the survey has dropped, then that seems consistent with the hypothesis that the drop is explained by the movement shrinking unless the increased time cost of completing the survey was made very clear upfront in 2019 and 2020

Unfortunately (for testing your hypothesis in this manner) the length of the survey is made very explicit upfront. The estimated length of the EAS2019 was 2-3x longer than EAS2018 (as it happened, this was an over-estimate, though it was still much longer than in 2018), while the estimated length of EAS2020 was a mere 2x longer than EAS2018.

Also, I would expect a longer, more demanding survey to lead to fewer total respondents in the year of the survey itself (and not merely lagged a year), since I think current-year uptake can be influenced by word of mouth and sharing (I imagine people would be less likely to share and recommend others take the survey if they found the survey long or annoying).

That said, as I noted in my original comment, I would expect to see lag effects (the survey being too long reduces response to the next year's survey) and I might expect these effects to be larger (and to stack if the next year's survey is itself too long), and this is exactly what we see: a moderate dip from 2018 to 2019 and then a much larger dip from 2019 to 2020.

> I'd expect to see a similar number of people click through to the survey (especially in 2019) but a lower completion rate

"Completion rate" is not entirely straightforward, because we explicitly instruct respondents that the final questions of the survey are especially optional "extra credit" questions and they should feel free to quit the survey before these. We can, however, look at the final questions of the main section of the survey (before the extra credit section) and here we see roughly the predicted pattern: a big drop in those 'completing' the main section from 2018 to 2019 followed by a smaller absolute drop 2019 to 2020, even though the percentage of those who started the survey completing the main section actually increased between 2019 and 2020 (which we might expect if some people, who are less inclined to take the survey, were put off taking it).

David_Moss @ 2021-05-13T11:08 (+12)

Another (and I think better) way of examining whether we are simply sampling fewer people or the population has shrunk is comparing numbers for subpopulations of the EA Survey(s) to known population sizes, as we did here.

In 2019, we estimated that we sampled around 40% of the 'highly engaged' EA population. In 2020, using updated numbers, we estimated that we sampled around 35% of the highly engaged EA population.

If the true EA population had remained the same size 2019-2020 and we just sampled 35% rather than 40% overall, we would expect the number of EAs sampled in 2020 to decrease from 2513 to 2199 (which is pretty close to the 2166 we actually sampled).
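The projection in the previous paragraph is simple proportional arithmetic. A minimal sketch, assuming (as the paragraph does for illustration) that the 40% and 35% sampling-rate estimates apply to the whole sample rather than only to highly engaged EAs; the function name `projected_sample` is hypothetical:

```python
# Assumption: the highly engaged sampling rates (40% in 2019, 35% in 2020)
# are applied to the entire sample, which the thread itself notes is a
# simplification, since engagement levels are sampled at different rates.
sampled_2019 = 2513   # EAs sampled in EAS 2019
rate_2019 = 0.40      # estimated sampling rate, 2019
rate_2020 = 0.35      # estimated sampling rate, 2020


def projected_sample(sampled_prev, rate_prev, rate_next):
    """Sample size expected if the population stayed fixed and only the sampling rate changed."""
    population = sampled_prev / rate_prev  # implied population size
    return population * rate_next


expected_2020 = projected_sample(sampled_2019, rate_2019, rate_2020)
print(round(expected_2020))  # 2199
```

The result (about 2,199) sits close to the 2,166 actually sampled in 2020, which is the point the comment makes.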

But, as noted, we believe that we usually sample high and low engagement EAs at different rates (sampling relatively fewer less engaged EAs). And, if our sampling rate reduces overall, (a priori) I would expect this to hold up better among highly engaged EAs than among less engaged EAs (who may be less motivated to take the survey and more responsive to the survey becoming too onerous to complete).

The total number of highly engaged EAs in our sample this year was similar/slightly higher than 2019, implying that population slightly increased in size (as I would expect). We don't have any good proxies for the size of the less engaged EA population (this becomes harder and harder as we consider the progressively larger populations of progressively less engaged EAs), but I would guess that we probably experienced a yet larger reduction in sampling rate for this population, and that the true size of the population of less engaged EAs has probably increased (I would probably look to things like the EA newsletter, subscribers to 80,000 Hours mailing lists and so on for proxies for that group, but Aaron/people at 80K may disagree).

Benjamin_Todd @ 2021-05-21T11:36 (+2)

If the sampling rate of highly engaged EAs has gone down from 40% to 35%, but the number of them was the same, that would imply 14% growth.

You then say:

> The total number of highly engaged EAs in our sample this year was similar/slightly higher than 2019

So the total growth should be 14% + growth in highly engaged EAs.

Could you give me the exact figure?

David_Moss @ 2021-05-21T11:53 (+4)

926 highly engaged in EAS2019, 933 in EAS2020.

Of course, much of this growth in the number of highly engaged EAs is likely due to EAs becoming more engaged, rather than there being more EAs. As it happens, EAS2020 had more 4s but fewer 5s, which I think can plausibly be explained by the general reduction in the rate of people sampled, mentioned above, combined with a number of 1-3s moving into the 4 category and fewer 4s moving into the 5 category (which is more stringent, e.g. EA org employee, group leader etc.).
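Combining the exact counts above with the estimated sampling rates gives a rough answer to Benjamin's question. This is a back-of-envelope sketch only: it assumes the 40% and 35% rates are exact and, per the caveat above, it cannot distinguish new EAs from existing EAs becoming more engaged.

```python
# Highly engaged respondents per survey year (from the thread) and the
# estimated share of the highly engaged population sampled each year.
counts = {2019: 926, 2020: 933}
rates = {2019: 0.40, 2020: 0.35}

# Implied population = respondents / sampling rate (treats the rate
# estimates as exact, which they are not).
implied = {year: counts[year] / rates[year] for year in counts}

growth = implied[2020] / implied[2019] - 1      # total implied growth
rate_only = rates[2019] / rates[2020] - 1       # growth implied by the rate change alone
count_only = counts[2020] / counts[2019] - 1    # growth in raw respondent count

print({y: round(v) for y, v in implied.items()})  # {2019: 2315, 2020: 2666}
print(f"{rate_only:.1%} {count_only:.1%} {growth:.1%}")  # 14.3% 0.8% 15.1%
```

Because the two effects multiply, (1 + a)(1 + b) - 1 = a + b + ab, the "14% + growth in highly engaged EAs" framing is a close approximation here: roughly 15% implied growth in the highly engaged population.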

Benjamin_Todd @ 2021-05-20T19:13 (+3)

Last year, we estimated the completion rate by surveying various groups (e.g. everyone who works at 80k) about whether they had taken that year's survey.

This showed that among highly engaged EAs, the response rate was ~40%, which let David make these estimates.

If we repeated that process this year, we could make a new estimate of the total number of EAs, which would give us an estimate of the growth / shrinkage since 2019. This would be a noisy estimate, but one of the better methods I'm aware of, so I'd be excited to see this happen.

David_Moss @ 2021-05-20T19:16 (+2)

I kinda do this in this comment above: not estimating the total size again directly, but showing that I don't think the current numbers suggest a reduction in size.

evelynciara @ 2021-05-21T02:12 (+13)

I suggest putting the 2020 survey posts in a sequence, like I've done for the 2019 and 2018 series and Vaidehi has done for the 2017 series.

BrianTan @ 2021-05-13T07:50 (+9)

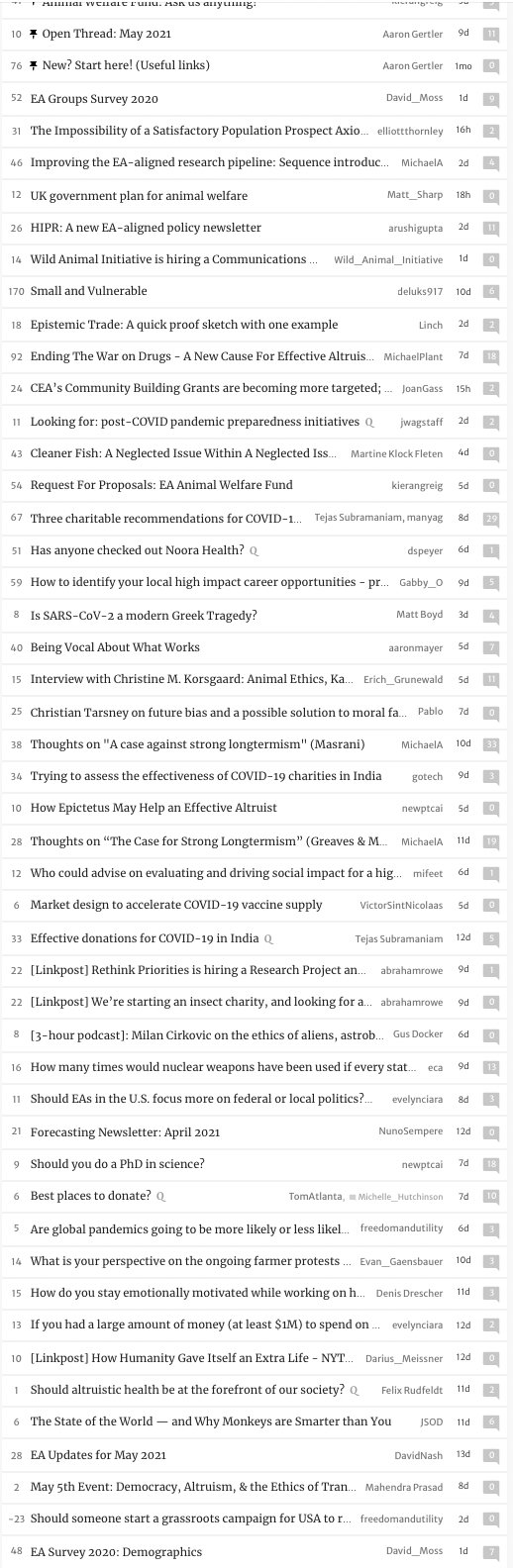

This comment isn't related to the content of this post, but for some unknown reason, I had to press "Load more" twice on the Frontpage of the forum before seeing this post, even if it already has 48 karma, 7 comments, and was only posted a day ago.

See the screenshot (taken from an incognito tab, so I could make sure it's not just my account), where this post appears below many older, lower-karma posts. I would expect it to be near the top of the frontpage. Is this a bug?

I've flagged this via a Forum message to JP Addison in case it's a bug.

JP Addison @ 2021-05-20T14:26 (+4)

Sorry about the delay. I've fixed the issue and have reset the date of posting to now.

BrianTan @ 2021-05-20T14:48 (+2)

Thanks!

Linch @ 2021-05-20T05:43 (+2)

Hi Brian. Just a quick check, do you have "Community" tag at neutral or higher karma sorting (at the top, next to the "Front Page" header)?

"Community" is by default -25.

David_Moss @ 2021-05-20T07:26 (+11)

I have it on neutral and this was still virtually hidden from me: the same with the new How People Get Involved post, which was so far down the front page that I couldn't even fit it on a maximally zoomed out screenshot. I believe this is being looked into.

BrianTan @ 2021-05-20T10:45 (+4)

I only have two tags at the top of my frontpage, which are "Community" and "Personal". Both are at "+0". I don't remember changing those defaults, though I'm fine with them both being at +0.

This post isn't tagged under "Community" though, it's tagged as "Data (EA Community)". JP Addison told me I identified a bug, though I'm unsure what bug I identified, and it seems like JP hasn't fixed it.

I had the same problem for David Moss's next post on the EA Survey, where it appeared 3-4 pages down from the frontpage. Are you having the same problem or no? I can replicate the problem on an Incognito tab (while I'm logged out), so maybe others have the same problem?

BarryGrimes @ 2021-05-19T09:56 (+6)

Readers may be interested to compare the EA Survey data with the data that CEA has collected from event registrations over the past 1.5 years. This slide deck contains charts showing the following categories:

- Gender

- Race/ethnicity

- Age

- Career stage

- Which year attendees got engaged with EA

- Do attendees feel part of the EA community

A few items to highlight:

- The percentage of female and non-binary attendees ranged from 32% to 40%

- The percentage of non-white attendees ranged from 27% to 49%

- The percentage of attendees aged 18-35 ranged from 83% to 96%

- 46% of survey respondents at EA Global: Reconnect had not engaged seriously with EA before 2018

David_Moss @ 2021-05-19T10:48 (+2)

Thanks Barry. I agree it's interesting to make the comparison!

Do you know if these numbers (for attendees) differ from the numbers for applicants? I don't know if any of these events were selective to any degree (as EA Global is), but if so, I'd expect the figures for applicants to be a closer match to those of the EA Survey (and the community as a whole), even if you weren't explicitly filtering with promoting diversity in mind. I suppose there could also be other causes of this, in addition to self-selection, such as efforts you might have made to reach a diverse audience when promoting the events?

One thing I noted is that the attendees for these events appear to be even younger and more student-heavy than the EA Survey sample (of course, at least one of the events seems to have been specifically for students). This might explain the differences between your figures and those of the EA Survey. In EA Survey data, based on a quick look, student respondents appear to be slightly more female and slightly less white than non-students.

KathrynMecrow @ 2021-05-26T20:05 (+6)

To add to Barry's point, WANBAM has interfaced with over 500 people in the first two years of our operations, so a smaller pool than this survey (more here). Our demographics (setting aside gender of course) are quite different from the EA Survey findings. 40% of our recent round of mentees (120 people in total this round) are people of color. There is also significant geographic diversity, with this round of mentees coming from the US, UK, Australia, Germany, Philippines, France, Singapore, Canada, Switzerland, Belgium, Norway, Netherlands, Spain, Brazil, Denmark, Mexico, India, Hong Kong, New Zealand, Ghana, Czech Republic, Finland, and Sweden.

Mentees most frequently selected interest in emerging technologies (67%), Research (59%), Public Policy and Politics (56%), International development (49%), Cause prioritization (49%), Community Building (43%), High impact philanthropy (41%), mental health and wellbeing (40%), Philanthropic education and outreach (33%), Operations (33%), Animal advocacy (30%), Women in technology (29%), and Earning to Give (20%).

I suspect there are subtle dynamics at play here around who is completing the survey. What's your phrasing on the external communications around who you want to take it? Do you specify a threshold of involvement in EA? Happy to put our heads together here. Thanks! Warmly, Kathryn

David_Moss @ 2021-05-27T09:55 (+16)

Thanks for your comment Kathryn!

Your comment makes it sound like you think there's some mystery to resolve here or that the composition of people who engaged with WANBAM conflicts with the EA Survey data. But it's hard for me to see how that would be the case. Is there any reason to think that the composition of people who choose to interact with WANBAM (or who get mentored by WANBAM) would be representative of the broader EA community as a whole?

WANBAM is prominently marketed as "a global network of women, trans people of any gender, and non-binary people" and explicitly has DEI as part of its mission. It seems like we would strongly expect the composition of people who choose to engage with WANBAM to be more "diverse" than the community as a whole. I don't think we should be surprised that the composition of people who interact with WANBAM differs from the composition of the wider community as a whole any more than we should be surprised that a 'LessWrongers in EA' group, or some such, differed from the composition of the broader community. Maybe an even closer analogy would be whether we should be surprised that a Diversity and Inclusion focused meetup at EAG has a more diverse set of attendees than the broader EA Global audience.

Also, it seems a little odd to even ask, but does WANBAM take any efforts to try to reach a more diverse audience in terms of race/ethnicity or geography or ensure that the people you mentor are a diverse group? If so, then it also seems clear that we'd expect WANBAM to have higher numbers from the groups you are deliberately trying to reach more of.

It's possible I'm missing something, but given all this, I don't see why we'd expect the people who WANBAM elect to mentor to be representative of the wider EA community (indeed, WANBAM explicitly focuses only on a minority of the EA community), so I don't see these results as having too much relevance to estimating the composition of the community as a whole.

---

Regarding external communications for the EA Survey. The EA Survey is promoted by a bunch of different outlets, including people just sharing it with their friends, and it goes without saying we don't directly control all of these messages. Still, the EA Survey itself isn't presented with any engagement requirement and the major 'promoters' make an effort to make clear that we encourage anyone with any level of involvement or affiliation with EA to take the survey. Here's a representative example from the EA Newsletter, which has been the major referrer in recent years:

> If you think of yourself, however loosely, as an “effective altruist,” please consider taking the survey — even if you’re very new to EA! Every response helps us get a clearer picture.

Another thing we can do is compare the composition of people who took the EA Survey from the different referrers. It would be surprising if the referrers to the EA Survey, with their different messages, all happened to employ external communications that artificially reduce the apparent ethnic diversity of the EA community. In fact, all the figures for % not-only-white across the referrers are much lower than the 40% figure for WANBAM mentees, ranging between 17-28% (roughly in line with the sample as a whole). There was one exception, which was an email sent to local group organizers, which was 36% not-only-white. That outlier is not surprising to me since, as we observed in the EA Groups Survey, group organizers are much less white than the community as a whole (47% white). This makes sense, simply because there are a lot of groups run in majority non-white countries, meaning there are a lot of non-white organizers from these groups, even though the global community (heavily dominated by majority white countries) is majority white.

BarryGrimes @ 2021-05-28T13:01 (+1)

The EA Global events had a higher bar for entry in terms of understanding and involvement in EA, but we don’t make admissions decisions based on demographic quotas. The demographics of attendees and applicants are broadly similar. I did some analysis of this for EAG Virtual and EAGxVirtual last year. You can see the charts for gender and ethnicity here.

EA conferences tend to be more attractive to people who are newer to the movement, thinking through their career plans, and wanting to meet like-minded people. Our data supports your findings that newer members are more diverse. I expect the EA Survey receives more responses from people who have been involved in EA for a longer time. That’s my guess as to the main reason for the differences.

David_Moss @ 2021-05-31T16:42 (+2)

Thanks for the reply!

Interestingly, EAG attendees don't seem straightforwardly newer to EA than EAS respondents. I would agree that it's likely explained by things like age/student status and more generally which groups are more likely to be interested in this kind of event.