Buck's Quick takes

By Buck @ 2020-09-13T17:29 (+6)

Buck @ 2025-07-11T17:29 (+102)

An excerpt about the creation of PEPFAR, from "Days of Fire" by Peter Baker. I found this moving.

Another major initiative was shaping up around the same time. Since taking office, Bush had developed an interest in fighting AIDS in Africa. He had agreed to contribute to an international fund battling the disease and later started a program aimed at providing drugs to HIV-infected pregnant women to reduce the chances of transmitting the virus to their babies. But it had only whetted his appetite to do more. “When we did it, it revealed how unbelievably pathetic the U.S. effort was,” Michael Gerson said.

So Bush asked Bolten to come up with something more sweeping. Gerson was already thought of as “the custodian of compassionate conservatism within the White House,” as Bolten called him, and he took special interest in AIDS, which had killed his college roommate. Bolten assembled key White House policy aides Gary Edson, Jay Lefkowitz, and Kristen Silverberg in his office. In seeking something transformative, the only outsider they called in was Anthony Fauci, the renowned AIDS researcher and director of the National Institute of Allergy and Infectious Diseases.

“What if money were no object?” Bolten asked. “What would you do?”

Bolten and the others expected him to talk about research for a vaccine because that was what he worked on.

“I’d love to have a few billion more dollars for vaccine research,” Fauci said, “but we’re putting a lot of money into it, and I could not give you any assurance that another single dollar spent on vaccine research is going to get us to a vaccine any faster than we are now.”

Instead, he added, “The thing you can do now is treatment.”

The development of low-cost drugs meant for the first time the world could get a grip on the disease and stop it from being a death sentence for millions of people. “They need the money now,” Fauci said. “They don’t need a vaccine ten years from now.”

The aides crafted a plan in secret, keeping it even from Colin Powell and Tommy Thompson, the secretary of health and human services. They were ready for a final presentation to Bush on December 4. Just before heading into the meeting, Bush stopped by the Roosevelt Room to visit with Jewish leaders in town for the annual White House Hanukkah party later that day. The visitors were supportive of Bush’s confrontation with Iraq and showered him with praise. One of them, George Klein, founder of the Republican Jewish Coalition, recalled that his father had been among the Jewish leaders who tried to get Franklin Roosevelt to do more to stop the Holocaust. “I speak for everyone in this room when I say that if you had been president in the forties, there could have been millions of Jews saved,” the younger Klein said.

Bush choked up at the thought—“You could see his eyes well up,” Klein remembered—and went straight from that meeting to the AIDS meeting, the words ringing in his ears. Lefkowitz, who walked with the president from the Roosevelt Room to the Oval Office, was convinced that sense of moral imperative emboldened Bush as he listened to the arguments about what had shaped up as a $15 billion, five-year program. Daniels and other budget-minded aides “were kind of gasping” about spending so much money, especially with all the costs of the struggle against terrorism and the looming invasion of Iraq. But Bush steered the conversation to aides he knew favored the program, and they argued forcefully for it.

“Gerson, what do you think?” Bush asked.

“If we can do this and we don’t, it will be a source of shame,” Gerson said.

Bush thought so too. So while he mostly wrestled with the coming war, he quietly set in motion one of the most expansive lifesaving programs ever attempted. Somewhere deep inside, the notion of helping the hopeless appealed to a former drinker’s sense of redemption, the belief that nobody was beyond saving.

“Look, this is one of those moments when we can actually change the lives of millions of people, a whole continent,” he told Lefkowitz after the meeting broke up. “How can we not take this step?”

Mo Putera @ 2025-07-12T09:05 (+18)

The part about "what if money were no object?" reminds me of Justin Sandefur's point in his essay PEPFAR and the Costs of Cost-Benefit Analysis that (emphasis mine)

Budgets aren’t fixed

Economists’ standard optimization framework is to start with a fixed budget and allocate money across competing alternatives. At a high-level, this is also how the global development community (specifically OECD donors) tends to operate: foreign aid commitments are made as a proportion of national income, entirely divorced from specific policy goals. PEPFAR started with the goal instead: Set it, persuade key players it can be done, and ask for the money to do it.

Bush didn’t think like an economist. He was apparently allergic to measuring foreign aid in terms of dollars spent. Instead, the White House would start with health targets and solve for a budget, not vice versa. “In the government, it’s usually — here is how much money we think we can find, figure out what you can do with it,” recalled Mark Dybul, a physician who helped design PEPFAR, and later went on to lead it. “We tried that the first time and they came back and said, ‘That’s not what we want...Tell us how much it will cost and we’ll figure out if we can pay for it or not, but don’t start with a cost.’”

Economists are trained to look for trade-offs. This is good intellectual discipline. Pursuing “Investment A” means forgoing “Investment B.” But in many real-world cases, it’s not at all obvious that the realistic alternative to big new spending proposals is similar levels of big new spending on some better program. The realistic counterfactual might be nothing at all.

In retrospect, it seems clear that economists were far too quick to accept the total foreign aid budget envelope as a fixed constraint. The size of that budget, as PEPFAR would demonstrate, was very much up for debate.

When Bush pitched $15 billion over five years in his State of the Union, he noted that $10 billion would be funded by money that had not yet been promised. And indeed, 2003 marked a clear breaking point in the history of American foreign aid. In real-dollar terms, aid spending had been essentially flat for half a century at around $20 billion a year. By the end of Bush’s presidency, between PEPFAR and massive contracts for Iraq reconstruction, that number hovered around $35 billion. And it has stayed there since. (See Figure 2)

Compared to normal development spending, $15 billion may have sounded like a lot, but exactly one sentence after announcing that number in his State of the Union address, Bush pivoted to the case for invading Iraq, a war that would eventually cost America something in the region of $3 trillion — not to mention thousands of American and hundreds of thousands of Iraqi lives. Money was not a real constraint.

A broader lesson here, perhaps, is about getting counterfactuals right. In comparative cost-effectiveness analysis, the counterfactual to AIDS treatment is the best possible alternative use of that money to save lives. In practice, the actual alternative might simply be the status quo, no PEPFAR, and a 0.1% reduction in the fiscal year 2004 federal budget. Economists are often pessimistic about the prospects of big additional spending, not out of any deep knowledge of the budgeting process, but because holding that variable fixed makes analyzing the problem more tractable. In reality, there are lots of free variables.

Buck @ 2021-06-06T18:12 (+101)

Here's a crazy idea. I haven't run it by any EAIF people yet.

I want to have a program to fund people to write book reviews and post them to the EA Forum or LessWrong. (This idea came out of a conversation with a bunch of people at a retreat; I can’t remember exactly whose idea it was.)

Basic structure:

- Someone picks a book they want to review.

- Optionally, they email me asking how on-topic I think the book is (to reduce the probability of not getting the prize later).

- They write a review, and send it to me.

- If it’s the kind of review I want, I give them $500 in return for them posting the review to EA Forum or LW with a “This post sponsored by the EAIF” banner at the top. (I’d also love to set up an impact purchase thing but that’s probably too complicated).

- If I don’t want to give them the money, they can do whatever with the review.

What books are on topic: Anything of interest to people who want to have a massive altruistic impact on the world. More specifically:

- Things directly related to traditional EA topics

- Things about the world more generally. Eg macrohistory, how do governments work, The Doomsday Machine, history of science (eg Asimov’s “A Short History of Chemistry”)

- I think that books about self-help, productivity, or skill-building (eg management) are dubiously on topic.

Goals:

- I think that these book reviews might be directly useful. There are many topics where I’d love to know the basic EA-relevant takeaways, especially when combined with basic fact-checking.

- It might encourage people to practice useful skills, like writing, quickly learning about new topics, and thinking through what topics would be useful to know more about.

- I think it would be healthy for EA’s culture. I worry sometimes that EAs aren’t sufficiently interested in learning facts about the world that aren’t directly related to EA stuff. I think that this might be improved both by people writing these reviews and people reading them.

- Conversely, sometimes I worry that rationalists are too interested in thinking about the world by introspection or weird analogies relative to learning many facts about different aspects of the world; I think book reviews would maybe be a healthier way to direct energy towards intellectual development.

- It might surface some talented writers and thinkers who weren’t otherwise known to EA.

- It might produce good content on the EA Forum and LW that engages intellectually curious people.

Suggested elements of a book review:

- One paragraph summary of the book

- How compelling you found the book’s thesis, and why

- The main takeaways that relate to vastly improving the world, with emphasis on the surprising ones

- Optionally, epistemic spot checks

- Optionally, “book adversarial collaborations”, where you actually review two different books on the same topic.

Aaron Gertler @ 2021-06-08T19:14 (+18)

I worry sometimes that EAs aren’t sufficiently interested in learning facts about the world that aren’t directly related to EA stuff.

I share this concern, and I think a culture with more book reviews is a great way to achieve that (I've been happy to see all of Michael Aird's book summaries for that reason).

CEA briefly considered paying for book reviews (I was asked to write this review as a test of that idea). IIRC, the goal at the time was more about getting more engagement from people on the periphery of EA by creating EA-related content they'd find interesting for other reasons. But book reviews as a push toward levelling up more involved people // changing EA culture is a different angle, and one I like a lot.

One suggestion: I'd want the epistemic spot checks, or something similar, to be mandatory. Many interesting books fail the basic test of "is the author routinely saying true things?", and I think a good truth-oriented book review should check for that.

MichaelA @ 2021-06-11T09:58 (+3)

One suggestion: I'd want the epistemic spot checks, or something similar, to be mandatory. Many interesting books fail the basic test of "is the author routinely saying true things?", and I think a good truth-oriented book review should check for that.

I think that this may make sense / probably makes sense for receiving payment for book reviews. But I think I'd be opposed to discouraging people from just posting book summaries/reviews/notes in general unless they do this.

This is because I think it's possible to create useful book notes posts in only ~30 mins of extra time on top of the time one spends reading the book and making Anki cards anyway (assuming someone is making Anki cards as they read, which I'd suggest they do). (That time includes writing key takeaways from memory or adapting them from rough notes, copying the cards into the editor and formatting them, etc.) Given that, I think it's worthwhile for me to make such posts. But even doubling that time might make it no longer worthwhile, given how stretched my time is.

Me doing an epistemic spot check would also be useful for me anyway, but I don't think useful enough to justify the time, relative to focusing on my main projects whenever I'm at a computer, listening to books while I do chores etc., and churning out very quick notes posts when I finish.

All that said, I think highlighting the idea of doing epistemic spot checks, and highlighting why it's useful, would be good. And Michael2019 and MichaelEarly2020 probably should've done such epistemic spot checks and included them in book notes posts (as at that point I knew less and my time was less stretched), as probably should various other people. And maybe I should still do it now for the books that are especially relevant to my main projects.

Aaron Gertler @ 2021-06-11T18:48 (+7)

I think that this may make sense / probably makes sense for receiving payment for book reviews. But I think I'd be opposed to discouraging people from just posting book summaries/reviews/notes in general unless they do this.

Yep, agreed. If someone is creating e.g. an EAIF-funded book review, I want it to feel very "solid", like I can really trust what they're saying and what the author is saying.

But I also want Forum users to feel comfortable writing less time-intensive content (like your book notes). That's why we encourage epistemic statuses, have Shortform as an option, etc.

(Though it helps if, even for a shorter set of notes, someone can add a note about their process. As an example: "Copying over the most interesting bits and my immediate impressions. I haven't fact-checked anything, looked for other perspectives, etc.")

MichaelA @ 2021-06-11T19:15 (+2)

Yeah, I entirely agree, and your comment makes me realise that, although I make my process fairly obvious in my posts, I should probably in future add almost the exact sentences "I haven't fact-checked anything, looked for other perspectives, etc.", just to make that extra explicit. (I didn't interpret your comment as directed at my posts specifically - I'm just reporting a useful takeaway for me personally.)

vaidehi_agarwalla @ 2021-06-14T16:13 (+3)

I wonder if there's something in between these two points:

- they could check the most important 1-3 claims the author makes.

- they could include the kind of evidence and links for all claims that are made so readers can quickly check themselves

Habryka @ 2021-06-06T19:58 (+10)

Yeah, I really like this. SSC already has a book-review contest running, and maybe LW and the EAF could do something similar? (Probably not a contest, but something that creates a bit of momentum behind the idea of doing this.)

Peter_Hurford @ 2021-06-10T17:55 (+4)

This does seem like a good model to try.

casebash @ 2021-06-06T22:23 (+7)

I'd be interested in this. I've been posting book reviews of the books I read to Facebook - mostly for my own benefit. These have mostly been written quickly, but if there was a decent chance of getting $500 I could pick out the most relevant books and relisten to them and then rewrite them.

MichaelA @ 2021-06-11T10:02 (+2)

I haven't read any of those reviews you've posted on FB, but I'd guess you should in any case post them to the Forum! Even if you don't have time for any further editing or polishing.

I say this because:

- This sort of thing often seems useful in general

- People can just ignore them if they're not useful, or not relevant to them

Maybe there being a decent chance of you getting $500 for them and/or you relistening and rewriting would be even better - I'm just saying that this simple step of putting them on the Forum already seems net positive anyway.

Could be as top-level posts or as shortforms, depending on the length, substantiveness, polish, etc.

Jordan Pieters @ 2021-06-14T09:38 (+6)

Perhaps it would be worthwhile to focus on books like those in this list of "most commonly planned to read books that have not been read by anyone yet"

saulius @ 2021-07-12T13:13 (+5)

I would also ask these people to optionally write or improve a summary of the book in Wikipedia if it has a Wikipedia article (or should have one). In many cases, it's not only EAs who would do more good if they knew ideas in a given book, especially when it's on a subject like pandemics or global warming rather than topics relevant to non-altruistic work too like management or productivity. When you google a book, Wikipedia is often the first result and so these articles receive quite a lot of traffic (you can see here how much traffic a given article receives).

Peter_Hurford @ 2021-06-10T17:55 (+5)

I've thought about this before and I would also like to see this happen.

Khorton @ 2021-06-06T21:53 (+4)

You can already pay for book reviews - what would make these different?

Buck @ 2021-06-06T23:42 (+5)

That might achieve the "these might be directly useful" and "produce interesting content" goals, if the reviewers knew about how to summarize the books from an EA perspective, how to do epistemic spot checks, and so on, which they probably don't. It wouldn't achieve any of the other goals, though.

Khorton @ 2021-06-07T12:27 (+4)

I wonder if there are better ways to encourage and reward talented writers to look for outside ideas - although I agree book reviews are attractive in their simplicity!

MichaelA @ 2021-06-11T10:07 (+3)

Yeah, this seems good to me.

I also just think in any case more people should post their notes, key takeaways, and (if they make them) Anki cards to the Forum, as either top-level posts or shortforms. I think this need only take ~30 mins of extra time on top of the time they spend reading or note-taking or whatever for their own benefit. (But doing what you propose would still add value by incentivising more effortful and even more useful versions of this.)

There are many topics where I’d love to know the basic EA-relevant takeaways [...]

The main takeaways that relate to vastly improving the world, with emphasis on the surprising ones

Yeah, I think this is worth emphasising, since:

- Those are things existing, non-EA summaries of the books are less likely to provide

- Those are things that even another EA reading the same book might not think of

- Coming up with key takeaways is an analytical exercise and will often draw on specific other knowledge, intuitions, experiences, etc. the reader has

Also, readers of this shortform may find posts tagged effective altruism books interesting.

reallyeli @ 2021-06-06T19:26 (+3)

Quick take is this sounds like a pretty good bet, mostly for the indirect effects. You could do it with a 'contest' framing instead of a 'I pay you to produce book reviews' framing; idk whether that's meaningfully better.

Max_Daniel @ 2021-06-06T18:28 (+2)

I don't think it's crazy at all. I think this sounds pretty good.

Buck @ 2020-09-19T05:00 (+94)

I’ve recently been thinking about medieval alchemy as a metaphor for longtermist EA.

I think there’s a sense in which it was an extremely reasonable choice to study alchemy. The basic hope of alchemy was that by fiddling around in various ways with substances you had, you’d be able to turn them into other things which had various helpful properties. It would be a really big deal if humans were able to do this.

And it seems a priori pretty reasonable to expect that humanity could get way better at manipulating substances, because there was an established history of people figuring out ways that you could do useful things by fiddling around with substances in weird ways, for example metallurgy or glassmaking, and we have lots of examples of materials having different and useful properties. If you had been particularly forward thinking, you might even have noted that it seems plausible that we’ll eventually be able to do the full range of manipulations of materials that life is able to do.

So I think that alchemists deserve a lot of points for spotting a really big and important consideration about the future. (I actually have no idea if any alchemists were thinking about it this way; that’s why I billed this as a metaphor rather than an analogy.) But they weren’t really very correct about how anything worked, and so most of their work before 1650 was pretty useless.

It’s interesting to think about whether EA is in a similar spot. I think EA has done a great job of identifying crucial and underrated considerations about how to do good and what the future will be like, eg x-risk and AI alignment. But I think our ideas for acting on these considerations seem much more tenuous. And it wouldn’t be super shocking to find out that later generations of longtermists think that our plans and ideas about the world are similarly inaccurate.

So what should you have done if you were an alchemist in the 1500s who agreed with this argument that you had some really underrated considerations but didn’t have great ideas for what to do about them?

I think that you should probably have done some of the following things:

- Try to establish the limits of your knowledge and be clear about the fact that you’re in possession of good questions rather than good answers.

- Do lots of measurements, write down your experiments clearly, and disseminate the results widely, so that other alchemists could make faster progress.

- Push for better scientific norms. (Scientific norms were in fact invented in large part by Robert Boyle for the sake of making chemistry a better field.)

- Work on building devices which would enable people to do experiments better.

Overall I feel like the alchemists did pretty well at making the world better, and if they’d been more altruistically motivated they would have been even better.

There are some reasons to think that pushing early chemistry forward is easier than working on improving the long term future. In particular, you might think that it’s only possible to work on x-risk stuff around the time of the hinge of history.

MaxRa @ 2020-10-16T07:14 (+3)

Huh, interesting thoughts, have you looked into the actual motivations behind it more? I'd've guessed that there was little "big if true" thinking in alchemy and mostly hopes for wealth and power.

Another thought, I suppose alchemy was more technical than something like magical potion brewing and in that way attracted other kinds of people, making it more proto-scientific? Another similar comparison might be sincere altruistic missionaries that work on finding the "true" interpretation of the bible/koran/..., sharing their progress in understanding it and working on convincing others to save them.

Regarding pushing chemistry being easier than longtermism, I'd have guessed the big reasons why pushing scientific fields is easier are the possibility of repeating experiments and profitability of the knowledge. Are there really longtermists who find it plausible we can only work on x-risk stuff around the hinge? Even patient longtermists seem to want to save resources and I suppose invest in other capacity building. Ah, or do you mean "it's only possible to *directly* work on x-risk stuff", vs. indirectly? It just seemed odd to suggest that everything longtermists have done so far has not affected the probability of eventual x-risk; at the very least it has set the longtermism movement in motion earlier and shaped the culture and thinking style and so forth via institutions like FHI.

Buck @ 2021-11-01T18:53 (+74)

I think it's bad when people who've been around EA for less than a year sign the GWWC pledge. I care a lot about this.

I would prefer groups to strongly discourage new people from signing it.

I can imagine boycotting groups that encouraged signing the GWWC pledge (though I'd probably first want to post about why I feel so strongly about this, and warn them that I was going to do so).

I regret taking the pledge, and the fact that the EA community didn't discourage me from taking it is by far my biggest complaint about how the EA movement has treated me. (EDIT: TBC, I don't think anyone senior in the movement actively encouraged me to do it, but I am annoyed at them for not actively discouraging it.)

(writing this short post now because I don't have time to write the full post right now)

Ramiro @ 2021-11-02T21:46 (+23)

...I don't have time to write the full post right now

I'm eager to read the full post, or any expansion on what makes you think that groups should actively discourage newbies from taking the Pledge.

Jordan Pieters @ 2021-11-07T21:45 (+9)

I'm also eager to read it. This would affect the activities of quite a few groups (mine included). I can't currently think of any good reasons why the pledge should be controversial.

HowieL @ 2021-11-09T15:26 (+21)

I'd be pretty interested in you writing this up. I think it could cause some mild changes in the way I treat my salary.

Luke Freeman @ 2021-11-21T22:18 (+19)

Hi Buck,

I’m very sorry to hear that you regret taking The Pledge and feel that the EA community in 2014 should have actively discouraged you from taking it in the first place.

If you believe it’s better for you and the world that you unpledge then you should feel free to do so. I also strongly endorse this statement from the 2017 post that KevinO quoted:

“The spirit of the Pledge is not to stop you from doing more good, and is not to lead you to ruin. If you find that it’s doing either of these things, you should probably break the Pledge.”

I would very much appreciate hearing further details about why you feel as strongly as you do about actively discouraging other people from taking The Pledge and the way this is done circa 2021.

Last year we collaborated with group leaders and the CEA groups team to write a new guide to promoting GWWC within local and university groups (comment using this link). In that guide we tried to be pretty clear about the things to be careful of, such as proposing that younger adults be encouraged to consider taking a trial pledge first if that is more appropriate for them (while also respecting their agency as adults) – there are many more people taking this option as of 2021 compared with 2014 (~50% vs ~25% respectively). We also find that most new members these days started by giving first before making a pledge, and this is something that we actively encourage and will be further developing during 2022.

Personally, I’ve found giving to be something that has been very meaningful to me during my entire involvement in EA and that The Pledge was a way of formalising that in a meaningful, motivating and sustainable way. My experience isn’t dissimilar to what we hear regularly from many members, and it has also been demonstrated by the various EA surveys (including the recent one by Open Philanthropy) which show GWWC to be a strong way in which people engage with EA (it was one of the top ranked for positive to negative engagement ratio).

However, I certainly don’t want to diminish any negative experiences that you or anyone else has had in the past and it is very important to me that we learn carefully how to get the most positive outcomes.

I’d be more than happy to talk further about this on a call, or by email, or to continue the discussion on this thread.

KevinO @ 2021-11-03T10:54 (+14)

From "Clarifying the Giving What We Can pledge" in 2017 (https://forum.effectivealtruism.org/posts/drJP6FPQaMt66LFGj/clarifying-the-giving-what-we-can-pledge#How_permanent_is_the_Pledge__)

"""

How permanent is the Pledge?

The Pledge is a promise, or oath, to be made seriously and with every expectation of keeping it. But if someone finds that they can no longer keep the Pledge (for instance due to serious unforeseen circumstances), then they can simply contact us, discuss the matter if need be, and cease to be a member. They can of course rejoin later if they renew their commitment.

Some of us find the analogy of marriage a helpful one: you make a promise with firm intent, you make life plans based on it, you structure things so that it’s difficult to back out of, and you commit your future self to doing something even if you don’t feel like it at the time. But at the same time, there’s a chance that things will change so drastically that you will break this tie.

Breaking the Pledge is not something to be done for reasons of convenience, or simply because you think your life would be better if you had more money. But we believe there are two kinds of situations where it’s acceptable to withdraw from the Pledge.

One situation is when it would impose extreme costs for you. If you find yourself in hardship and don’t have any way to donate what you committed to while maintaining a reasonable quality of life for yourself and your dependants, this is a good reason to withdraw your Pledge. (Note that during unemployment you donate only 1% of spending money, as described under “Circumstances that change the Pledge” below.)

The other is when you find that you have an option to do more good. For example, imagine you pledged and are now deciding whether to found a nonprofit (which will take all your financial resources) or keep your “day job” in order to be able to donate 10%. If you have good reason to believe that the nonprofit will do significantly more good than the donations, that founding the nonprofit is not compatible with donating 10% of your income, and that you would not be able to make up the gap in donations within a couple of years, withdrawing your Pledge would be a reasonable thing to do.

The spirit of the Pledge is not to stop you from doing more good, and is not to lead you to ruin. If you find that it’s doing either of these things, you should probably break the Pledge.

We understand that some people have a very strong definition of “pledge” as meaning something that must not be broken under any circumstances. If this is your sense of the word, and you wouldn’t want to take a pledge if there were any chance of you being unable to keep it, you might find that Try Giving on an ongoing basis is a better fit for you.

"""

KevinO @ 2021-11-03T10:57 (+4)

For what it's worth, I think it makes sense to stop pledging if that would allow you to do more good.

Daniel_Eth @ 2021-11-01T20:41 (+13)

I regret taking the pledge

I feel like you should be able to "unpledge" in that case, and further I don't think you should feel shame or face stigma for this. There's a few reasons I think this:

- You're working for an EA org. If you think your org is ~as effective as where you'd donate, it doesn't make sense for them to pay you money that you then donate (unless you felt there was some psychological benefit to this, but clearly you feel the reverse)

- The community has a LOT of money now. I'm not sure what your salary is, but I'd guess it's lower than optimal given community resources, so you donating money to the community pot is probably the reverse of what I'd want.

- I don't want the community to be making people feel psychologically worse, and insofar as it is, I want an easy out for them. Therefore, I want people in your situation in general to unpledge and not feel shame or face stigma. My guess is that if you did so, you'd be sending a signal to others that doing so is acceptable.

- You signed the pledge under a set of assumptions which appear to no longer hold (e.g., about how you'd feel about the pledge years out, how much money the community would have, etc.)

- I'm generally pro-[people being able to "break contract" and similar without facing large penalties] (other than paying damages, but damages here would be zero since presumably no org made specific plans on the assumption that you'd continue to follow the pledge) – this reduces friction in making contracts to begin with and allows for more dynamism. Yes, a "pledge" in some ways has more meaning than a contract, but seeing as you (apparently) made the pledge relatively hastily (and perhaps under pressure from others? I find this unclear from your post), it doesn't seem like it was appropriate for you to have been making a lifelong commitment to the pledge, and I think we as a community should recognize that and adjust our response accordingly.

Larks @ 2021-11-03T00:47 (+22)

Here is the relevant version of the pledge, from December 2014:

I recognise that I can use part of my income to do a significant amount of good in the developing world. Since I can live well enough on a smaller income, I pledge that for the rest of my life or until the day I retire, I shall give at least ten percent of what I earn to whichever organisations can most effectively use it to help people in developing countries, now and in the years to come. I make this pledge freely, openly, and sincerely.

A large part of the point of the pledge is to bind your future self in case your future self is less altruistic. If you allow people to break it based on how they feel, that would dramatically undermine the purpose of the pledge. It might well be the case that the pledge is bad because it contains implicit empirical premises that might cease to hold - indeed I argued this at the time! - but that doesn't change the fact that someone did in fact make this commitment. If people want to make a weak statement of intent they are always able to do this - they can just say "yeah I will probably donate for as long as I feel like it". But the pledge is significantly different from this, and attempting to weaken it to be no commitment at all entails robbing people of the ability to make such commitments.

Your argument about damages seems quite strange. If I buy something from a small business, promise to pay in 30 days, and then do not do so, my failure to pay damages them. This is the case even if they hadn't made any specific plans for what to do with that cashflow, and even if I bought through an online platform and hence don't know exactly who would have received the money. The damages are precisely equal to the amount of money I owe the firm - and hence, in the case of the pledge, the damages would be equal to (the NPV of) the pledged income.

I agree with Buck that people should take lifelong commitments more seriously than they have. Part of taking them seriously is respecting their lifelong nature.

Khorton @ 2021-11-09T16:36 (+10)

I strongly agree that local groups should encourage people to give for a couple years before taking the GWWC Pledge, and that the Pledge isn't right for everyone (I've been donating 10% since childhood and have never taken the pledge).

When it comes to the 'Further Giving' Pledge, I think it wouldn't be unreasonable to encourage people to get some kind of pre-Pledge counselling or take a pre-Pledge class, to be absolutely certain people have thought through the implications of the commitment they are making.

edwardhaigh @ 2021-11-03T02:48 (+8)

I remember there being some sort of text saying you should try a 1% donation for a few years first to check you're happy making the pledge. Perhaps this issue has been resolved since you joined?

Buck @ 2020-09-13T17:29 (+71)

Edited to add: I think that I phrased this post misleadingly; I meant to complain mostly about low quality criticism of EA rather than eg criticism of comments. Sorry to be so unclear. I suspect most commenters misunderstood me.

I think that EAs, especially on the EA Forum, are too welcoming to low quality criticism [EDIT: of EA]. I feel like an easy way to get lots of upvotes is to make lots of vague critical comments about how EA isn’t intellectually rigorous enough, or inclusive enough, or whatever. This makes me feel less enthusiastic about engaging with the EA Forum, because it makes me feel like everything I’m saying is being read by a jeering crowd who just want excuses to call me a moron.

I’m not sure how to have a forum where people will listen to criticism open mindedly which doesn’t lead to this bias towards low quality criticism.

Linch @ 2020-09-13T18:03 (+23)

1. At an object level, I don't think I've noticed the dynamic particularly strongly on the EA Forum (as opposed to e.g. social media). I feel like people are generally pretty positive about each other/the EA project (and if anything are less negative than is perhaps warranted sometimes?). There are occasionally low-quality critical posts (that to some degree read to me as status plays) that pop up, but they usually get downvoted fairly quickly.

2. At a meta level, I'm not sure how to get around the problem of having a low bar for criticism in general. I think as an individual it's fairly hard to get good feedback without also being accepting of bad feedback, and likely something similar is true of groups as well?

howdoyousay? @ 2020-09-14T18:14 (+16)

I feel like an easy way to get lots of upvotes is to make lots of vague critical comments about how EA isn’t intellectually rigorous enough, or inclusive enough, or whatever. This makes me feel less enthusiastic about engaging with the EA Forum, because it makes me feel like everything I’m saying is being read by a jeering crowd who just want excuses to call me a moron.

Could you unpack this a bit? Is it the originating poster who makes you feel that there's a jeering crowd, or the people up-voting the OP which makes you feel the jeers?

As counterbalance...

Writing, and sharing your writing, is how you often come to know your own thoughts. I often recognise the kernel of truth someone is getting at before they've articulated it well, both in written posts and verbally. I'd rather encourage someone for getting at something even if it was lacking, and then guide them to do better. I'd especially prefer to do this given I personally know that it's difficult to make time to perfect a post whilst doing a job and other commitments.

This is even more the case when it's on a topic that hasn't been explored much, such as biases in thinking common to EAs or diversity issues. I accept that in liberal circles being critical on the basis of diversity and inclusion or cognitive biases is a good signalling-win, and you might think it would follow suit in EA. But I'm reminded of what Will MacAskill said about 8 months ago on an 80k podcast that he was awake thinking his reputation would be in tatters after posting in the EA forum, that his post would be torn to shreds (didn't happen). For quite some time I was surprised at the diversity elephant in the room on EA, and welcomed when these critiques came forward. But I was in the room and not pointing out the elephant for a long time because I - like Will - had fears about being torn to shreds for putting myself out there, and I don't think this is unusual.

I also think that criticisms of underlying trends in groups are really difficult to get at in a substantive way, and though they often come across as put-downs from someone who wants to feel bigger, it is not always clear whether that's due to authorial intent or reader's perception. I still think there's something that can be taken from them though. I remember a scathing article about yuppies who listen to NPR to feel educated and part of the world for signalling purposes. It was very mean-spirited but definitely gave me food for thought on my media consumption and what I am (not) achieving from it. I think a healthy attitude for a community is willingness to find usefulness in seemingly threatening criticism. As all groups are vulnerable to effects of polarisation and fractiousness, this attitude could be a good protective element.

So in summary, even if someone could have done better on articulating their 'vague critical comments', I think it's good to encourage the start of a conversation on a topic which is not easy to bring up or articulate, but is important. So I would say go on ahead and upvote that criticism whilst giving feedback on ways to improve it. If that person hasn't nailed it, it's started the conversation at least, and maybe someone else will deliver the argument better. And I think there is a role for us as a community to be curious and open to 'vague critical comments' and find the important message, and that will prove more useful than the alternative of shunning it.

Denise_Melchin @ 2020-09-13T19:33 (+11)

I have felt this way as well. I have been a bit unhappy with how many upvotes some (in my view) low-quality critiques of mine have gotten (and think I may have fallen prey to a poor incentive structure there). Over the last couple of months I have tried harder to avoid that by having a mental checklist before I post anything, but I'm not sure whether I am succeeding. At least I have gotten fewer wildly upvoted comments!

Thomas Kwa @ 2020-09-23T22:46 (+8)

I've upvoted some low quality criticism of EA. Some of this is due to emotional biases or whatever, but a reason I still endorse is that I haven't read strong responses to some obvious criticism.

Example: I currently believe that an important reason EA is slightly uninclusive and moderately undiverse is because EA community-building was targeted at people with a lot of power as a necessary strategic move. Rich people, top university students, etc. It feels like it's worked, but I haven't seen a good writeup of the effects of this.

I think the same low-quality criticisms keep popping up because there's no quick rebuttal. I wish there were a post of "fallacies about problems with EA" that one could quickly link to.

agent18 @ 2020-09-13T19:28 (+7)

I think that EAs, especially on the EA Forum, are too welcoming to low quality criticism.

can you show one actual example of what exactly you mean?

Buck @ 2020-09-13T22:45 (+27)

I thought this post was really bad, basically for the reasons described by Rohin in his comment. I think it's pretty sad that that post has positive karma.

MichaelStJules @ 2020-09-14T00:01 (+11)

I actually strong upvoted that post, because I wanted to see more engagement with the topic, decision-making under deep uncertainty, since that's a major point in my skepticism of strong longtermism. I just reduced my vote to a regular upvote. It's worth noting that Rohin's comment had more karma than the post itself (even before I reduced my vote).

MichaelDickens @ 2020-09-24T00:12 (+10)

I pretty much agree with your OP. Regarding that post in particular, I am uncertain about whether it's a good or bad post. It's bad in the sense that its author doesn't seem to have a great grasp of longtermism, and the post basically doesn't move the conversation forward at all. It's good in the sense that it's engaging with an important question, and the author clearly put some effort into it. I don't know how to balance these considerations.

Max_Daniel @ 2020-09-23T23:23 (+10)

I agree that post is low-quality in some sense (which is why I didn't upvote it), but my impression is that its central flaw is being misinformed, in a way that's fairly easy to identify. I'm more worried about criticism where it's not even clear how much I agree with the criticism or where it's socially costly to argue against the criticism because of the way it has been framed.

It also looks like the post got a fair number of downvotes, and that its karma is way lower than for other posts by the same author or on similar topics. So it actually seems to me the karma system is working well in that case.

(Possibly there is an issue where "has a fair number of downvotes" on the EA Forum corresponds to "has zero karma" in fora with different voting norms/rules, and so the former here appears too positive if one is more used to fora with the latter norm. Conversely I used to be confused why posts on the Alignment Forum that seemed great to me had more votes than karma score.)

Denise_Melchin @ 2020-09-24T09:12 (+8)

It also looks like the post got a fair number of downvotes, and that its karma is way lower than for other posts by the same author or on similar topics. So it actually seems to me the karma system is working well in that case.

That's what I thought as well. The top critical comment also has more karma than the top level post, which I have always considered to be functionally equivalent to a top level post being below par.

Max_Daniel @ 2020-09-23T23:11 (+3)

I agree with this as stated, though I'm not sure how much overlap there is between the things we consider low-quality criticism. (I can think of at least one example where I was mildly annoyed that something got a lot of upvotes, but it seems awkward to point to publicly.)

I'm not so worried about becoming the target of low-quality criticism myself. I'm actually more worried about low-quality criticism crowding out higher-quality criticism. I can definitely think of instances where I wanted to say X but then was like "oh no, if I say X then people will lump this together with some other person saying nearby thing Y in a bad way, so I either need to be extra careful and explain that I'm not saying Y or shouldn't say X after all".

I'm overall not super worried because I think the opposite failure mode, i.e. appearing too unwelcoming of criticism, is worse.

MichaelStJules @ 2020-09-14T00:38 (+2)

I've proposed before that voting shouldn't be anonymous, and that (strong) downvotes should require explanation (either your own comment or a link to someone else's). Maybe strong upvotes should, too?

Of course, this is perhaps a bad sign about the EA community as a whole, and fixing forum incentives might hide the issue.

This makes me feel less enthusiastic about engaging with the EA Forum, because it makes me feel like everything I’m saying is being read by a jeering crowd who just want excuses to call me a moron.

How much of this do you think is due to the tone or framing of the criticism rather than just its content (accurate or not)?

Larks @ 2020-09-14T01:25 (+11)

I've proposed before that voting shouldn't be anonymous, and that (strong) downvotes should require explanation (either your own comment or a link to someone else's). Maybe strong upvotes should, too?

It seems this could lead to a lot of comments and very rapid ascending through the meta hierarchy! What if I want to strong downvote your strong downvote explanation?

MichaelStJules @ 2020-09-14T01:38 (+4)

It seems this could lead to a lot of comments and very rapid ascending through the meta hierarchy! What if I want to strong downvote your strong downvote explanation?

I don't really expect this to happen much, and I'd expect strong downvotes to decay quickly down a thread (which is my impression of what happens now when people do explain voluntarily), unless people are actually just being uncivil.

I also don't see why this would be a particularly bad thing. I'd rather people hash out their differences properly and come to a mutual understanding than essentially just call each other's comments very stupid without explanation.

Lukas_Gloor @ 2020-09-13T19:55 (+2)

I thought the same thing recently.

Buck @ 2024-08-25T03:33 (+37)

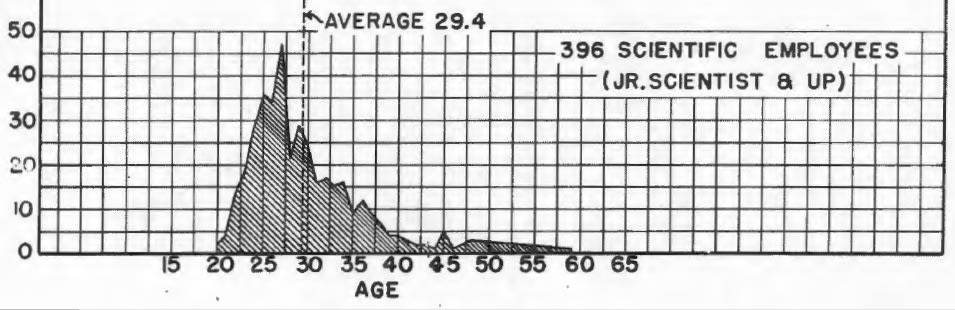

Alex Wellerstein notes the age distribution of Manhattan Project employees:

Sometimes people criticize EA for having too many young people; I think that this age distribution is interesting context for that.

[Thanks to Nate Thomas for sending me this graph.]

Habryka @ 2024-08-25T20:09 (+38)

This is an interesting datapoint, though... just to be clear, I would not consider the Manhattan project a success on the dimension of wisdom or even positive impact.

They did sure build some powerful technology, and they also sure didn't seem to think much about whether it was good to build that powerful technology (with many of them regretting it later).

I feel like the argument of "the only other community that was working on technology of world-ending proportions, which to be clear, did end up mostly just running full steam ahead at building the world-destroyer, was also very young" is not an amazing argument against criticism of EA/AI-Safety.

Karthik Tadepalli @ 2024-08-28T07:23 (+6)

It is a data point against a different kind of criticism, that sounds more like "EA is a bunch of 20-something dilettantes running around having urgent conversations instead of doing anything in the world". I hear that flavor of criticism more than "EA might build the world destroyer", and I suspect it is more common in the world.

NickLaing @ 2024-08-28T11:39 (+2)

I haven't seen many people accuse EAs of not doing anything (which is kind of a soft vote of confidence). I thought the criticisms were more along the lines of arrogance/directing money to the wrong places/influencing behind closed doors (AI)/not focusing on systemic change. More that the things we do are bad/useless but less that we are only talking?

Pretty uncertain though

I suppose I'm on the GHD bent though. Do you have any articles to share along these lines?

Karthik Tadepalli @ 2024-08-28T15:43 (+2)

I was thinking about the "no systemic change" thing mainly, and no articles I can think of, just a general vibe

Buck @ 2024-08-26T17:52 (+5)

yeah I totally agree

huw @ 2024-08-25T05:14 (+14)

My super rough impression here is many of the younger people on the project were the grad students of the senior researchers on the project; such an age distribution seems like it would've been really common throughout most academia if so.

In my perception, the criticism levelled against EA is different. The version I've seen people argue revolves around EA lacking the hierarchy of experience required to restrain the worst impulses of having a lot of young people in concentration. The Manhattan Project had an unusual amount of intellectual freedom for a military project, sure, but it also did have a pretty defined hierarchy that would've restrained those impulses. Nor do I think EA necessarily lacks leadership, but I think it does lack a permission structure.

And I think that gets to the heart of the actual criticism. An age-based one is, well, blatantly ageist and not worth paying attention to. But that lack of a permission structure might be important!

Jason @ 2024-08-25T22:44 (+7)

It's notable in that respect that the chart is for the "scientific employees."

NickLaing @ 2024-08-26T16:47 (+2)

That's true; I'm assuming that doesn't include managers, accountants, support staff who might be older on average?

Linch @ 2024-08-28T03:34 (+12)

Another aspect here is that scientists in the 1940s are at a different life stage/might just be more generally "mature" than people of a similar age/nationality/social class today. (eg most Americans back then in their late twenties probably were married and had multiple children, life expectancy at birth in the 1910s is about 50 so 30 is middle-aged, society overall was not organized as a gerontocracy, etc).

huw @ 2024-08-28T13:34 (+4)

Probably wartime has this effect on people, too

Rían O.M @ 2024-08-29T10:56 (+4)

Similar for the Apollo 11 Moon landing: average age in the control room was 28.

yanni kyriacos @ 2024-08-26T00:40 (+4)

I think age / inexperience is contributing to whatever the hell I'm experiencing here.

Not enough mentors telling them darn kids to tuck their shirts in.

Buck @ 2021-09-01T01:11 (+32)

When I was 19, I moved to San Francisco to do a coding bootcamp. I got a bunch better at Ruby programming and also learned a bunch of web technologies (SQL, Rails, JavaScript, etc).

It was a great experience for me, for a bunch of reasons.

- I got a bunch better at programming and web development.

- It was a great learning environment for me. We spent basically all day pair programming, which makes it really easy to stay motivated and engaged. And we had homework and readings in the evenings and weekends. I was living in the office at the time, with a bunch of the other students, and it was super easy for me to spend most of my waking hours programming and learning about web development. I think that it was very healthy for me to practice working really long hours in a supportive environment.

- The basic way the course worked is that every day you’d be given a project with step-by-step instructions, and you’d try to implement the instructions with your partner. I think it was really healthy for me to repeatedly practice the skill of reading the description of a project, then reading the step-by-step breakdown, and then figuring out how to code everything.

- Because we pair programmed every day, tips and tricks quickly percolated through the cohort. We were programming in Ruby, which has lots of neat little language features that it’s hard to pick up all of on your own; these were transmitted very naturally. I also was pushed to learn my text editor better.

- The specific content that I learned was sometimes kind of fiddly; it was helpful to have more experienced people around to give advice when things went wrong.

- I think that this was probably a better learning experience than most tech or research internships I could have gotten. If I’d had access to the best tech/research internships, maybe that would have been better. I think that this was probably a much better learning experience than eg most Google internships seem to be.

- I met rationalists and EAs in the Bay.

- I spent a bunch of time with real adults who had had real jobs before. The median age of students was like 25. Most of the people had had jobs before and felt dissatisfied with them and wanted to make a career transition. I think that spending this time with them helped me grow up faster.

- I somehow convinced my university that this coding bootcamp was a semester abroad (thanks to my friend Andrew Donnellan for suggesting this to me; that suggestion plausibly accelerated my career by six months), which meant that I graduated on schedule even though I then spent six months working for App Academy as a TA (which incidentally was also a good experience.)

Some ways in which my experience was unusual:

- I was a much stronger programmer on the way in to the program than most of my peers.

- I am deeply extroverted and am fine with pair programming every day.

It seems plausible to me that more undergrad EAs should do something like this, especially if they can get college credit for it (which I imagine might be hard for most students—I think I only got away with it because my university didn’t really know what was going on). The basic argument here is that it might be good for them the same way it was good for me.

More specifically, I think that there are a bunch of EAs who want to do technical AI alignment work and who are reasonably strong but not stellar coders. I think that if they did a coding bootcamp between, say, freshman and sophomore year, they might come back to school and be a bunch stronger. The bootcamp I did was focused on web app programming with Ruby and Rails and JavaScript. I think that these skills are pretty generically useful to software engineers. I often am glad to be better than my coworkers at quickly building web apps, and I learned those skills at App Academy (though being a professional web developer for a while also helped). Eg in our current research, even aside from the web app we use for getting our contractors to label data, we have to deal with a bunch of different computers that are sending data back and forth and storing it in databases or Redis queues or whatever. A reasonable fraction of undergrad EAs would seem like much more attractive candidates to me if they’d done a bootcamp. (They’d probably seem very marginally less attractive to normal employers than if they’d done something more prestigious-seeming with that summer, but most people don’t do very prestigious-seeming things in their first summer anyway. And the skills they had learned would probably be fairly attractive to some employers.)

This is just a speculative idea, rather than a promise, but I’d be interested in considering funding people to do bootcamps over the summer; they often cost maybe $15k. I am most interested in funding people to do bootcamps if they are already successful students at prestigious schools, or have other indicators of talent and conscientiousness, and have evidence that they’re EA aligned.

Another thing I like about this is that a coding bootcamp seems like a fairly healthy excuse to hang out in the Bay Area for a summer. I like that they involve working hard and being really focused on a concrete skill that relates to the outside world.

I am not sure whether I’d recommend someone do a web programming bootcamp or a data science bootcamp—though data science might seem more relevant, I think the practical programming stuff in the web programming bootcamp might actually be more helpful on the margin. (Especially for people who are already doing ML courses in school.)

I don’t think there are really any bootcamps focused on ML research and engineering. I think it’s plausible that we could make one happen. Eg I know someone competent and experienced who might run a bootcamp like this over a summer if we paid them a reasonable salary.

Aaron Gertler @ 2021-09-17T10:11 (+5)

See my comment here, which applies to this Shortform as well; I think it would be a strong top-level post, and I'd be interested to see how other users felt about tech bootcamps they attended.

Jack R @ 2021-09-01T05:53 (+2)

This seems like really good advice, thanks for writing this!

Also, I'm compiling a list of CS/ML bootcamps here (anyone should feel free to add items).

billzito @ 2021-10-27T01:44 (+6)

Some chance it's outdated, but my advice as of 2017 was for people to do one of the top bootcamps as ranked by coursereport: https://www.coursereport.com/

I think most bootcamps that aren't a top bootcamp are a much worse experience based on a good amount of anecdotal evidence and some job placement data. I did Hack Reactor in 2016 (as of 2016, App Academy, Hack Reactor, and Full Stack Academy were the best ranked bootcamps, but I think a decent amount has changed since then).

Jack R @ 2021-10-27T05:52 (+2)

Good to know--thanks Bill!

billzito @ 2021-10-29T03:26 (+1)

Of course :)

Buck @ 2024-11-15T18:44 (+31)

Well-known EA sympathizer Richard Hanania writes about his donation to the Shrimp Welfare Project.

Mitchell Laughlin🔸 @ 2024-11-16T11:25 (+27)

I have some hesitations about supporting Richard Hanania given what I understand of his views and history. But in the same way I would say I support *example economic policy* of *example politician I don't like* if I believed it was genuinely good policy, I think I should also say that I found this article of Richard's quite warming.

Buck @ 2021-08-26T17:06 (+31)

Doing lots of good vs getting really rich

Here in the EA community, we’re trying to do lots of good. Recently I’ve been thinking about the similarities and differences between a community focused on doing lots of good and a community focused on getting really rich.

I think this is interesting for a few reasons:

- I found it clarifying to articulate the main differences between how we should behave and how the wealth-seeking community should behave.

- I think that EAs make mistakes that you can notice by thinking about how the wealth-seeking community would behave, and then thinking about whether there’s a good reason for us behaving differently.

Here are some things that I think the wealth-seeking community would do.

- There are some types of people who should try to get rich by following some obvious career path that’s a good fit for them. For example, if you’re a not-particularly-entrepreneurial person who won math competitions in high school, it seems pretty plausible that you should work as a quant trader. If you think you’d succeed at being a really high-powered lawyer, maybe you should do that.

- But a lot of people should probably try to become entrepreneurs. In college, they should start small businesses, develop appropriate skills (eg building web apps), start trying to make various plans about how they might develop some expertise that they could turn into a startup, and otherwise practice skills that would help them with this. These people should be thinking about what risks to take, what jobs to maybe take to develop skills that they’ll need later, and so on.

I often think about EA careers somewhat similarly:

- Some people are natural good fits for particular cookie-cutter roles that give them an opportunity to have a lot of impact. For example, if you are an excellent programmer and ML researcher, I (and many other people) would love to hire you to work on applied alignment research; basically all you have to do to get these roles is to obtain those skills and then apply for a job.

- But for most people, the way they will have impact is much more bespoke and relies much more on them trying to be strategic and spot good opportunities to do good things that other people wouldn’t have otherwise done.

I feel like many EAs don’t take this distinction as seriously as they should. I fear that EAs see that there exist roles of the first type—you basically just have to learn some stuff, show up, and do what you’re told, and you have a bunch of impact—and then they don’t realize that the strategy they should be following is going to involve being much more strategic and making many more hard decisions about what risks to take. Like, I want to say something like “Imagine you suddenly decided that your goal was to make ten million dollars in the next ten years. You’d be like, damn, that seems hard, I’m going to have to do something really smart in order to do that, I’d better start scheming. I want you to have more of that attitude to EA.”

Important differences:

- Members of the EA community are much more aligned with each other than wealth-seeking people are. (Maybe we’re supposed to be imagining a community of people who wanted to maximize total wealth of the community for some reason.)

- Opportunities for high impact are biased to be earlier in your career than opportunities for high income. (For example, running great student groups at top universities is pretty high up there in impact-per-year according to me; there isn’t really a similarly good moneymaking opportunity for which students are unusually well suited.)

- The space of opportunities to do very large amounts of good seems much narrower than the space of opportunities to make money. So you end up with EAs wanting to work with each other much more than the wealth-maximizing people want to work with each other.

- It seems harder to make lots of money in a weird, bespoke, non-entrepreneurial role than it is to have lots of impact. There are many EAs who have particular roles which are great fits for them and which allow them to produce a whole bunch of value. I know of relatively fewer cases where someone gets a job which seems weirdly tailored to them and is really high paid.

- I think this is mostly because my sense is that in the for-profit world, it’s hard to get people to be productive in weird jobs, and you’re mostly only able to hire people for roles that everyone involved understands very well already. And so even if someone would be able to produce a huge amount of value in some particular role, it’s hard for them to get paid commensurately, because the employer will be skeptical that they’ll actually produce all that value, and other potential employers will also be skeptical and so won’t bid their price up.

Stefan_Schubert @ 2021-08-26T18:18 (+13)

Thanks, this is an interesting analogy.

If too few EAs go into more bespoke roles, then one reason could be risk-aversion. Rightly or wrongly, they may view those paths as more insecure and risky (for them personally; though I expect personal and altruistic risk correlate to a fair degree). If so, then one possibility is that EA funders and institutions/orgs should try to make them less risky or otherwise more appealing (there may already be some such projects).

In recent years, EA has put less emphasis on self-sacrifice, arguing that we can't expect people to live on very little. There may be a parallel to risk - that we can't expect people to take on more risk than they're comfortable with, but instead must make the risky paths more appealing.

JP Addison @ 2021-08-26T21:30 (+4)

I like this chain of reasoning. I’m trying to think of concrete examples, and it seems a bit hard to come up with clear ones, but I think this might just be a function of the bespoke-ness.

Aaron Gertler @ 2021-09-17T10:07 (+6)

I'm commenting on a few Shortforms I think should be top-level posts so that more people see them, they can be tagged, etc. This is one of the clearest cases I've seen; I think the comparison is really interesting, and a lot of people who are promising EA candidates will have "become really rich" as a viable option, such that they'd benefit especially from thinking about this comparison themselves.

Anyway, would you consider making this a top-level post? I don't think the text would need to be edited at all; it could be as-is, plus a link to the Shortform comments.

Ben Pace @ 2021-09-02T06:44 (+6)

Something I imagined while reading this was being part of a strangely massive (~1000 person) extended family whose goal was to increase the net wealth of the family. I think it would be natural to join one of the family businesses, it would be natural to make your own startup, and also it would be somewhat natural to provide services for the family that aren't directly about making the money yourself. Helping make connections, find housing, etc.

capybaralet @ 2021-09-02T13:47 (+3)

Reminds me of The House of Saud (although I'm not saying they have this goal, or any shared goal):

"The family in total is estimated to comprise some 15,000 members; however, the majority of power, influence and wealth is possessed by a group of about 2,000 of them. Some estimates of the royal family's wealth measure their net worth at $1.4 trillion"

https://en.wikipedia.org/wiki/House_of_Saud

Ben_West @ 2021-09-08T13:20 (+2)

Thanks for writing this up. At the risk of asking an obvious question, I'm interested in why you think entrepreneurship is valuable in EA.

One explanation for why entrepreneurship has high financial returns is information asymmetry/adverse selection: it's hard to tell if someone is a good CEO apart from "does their business do well", so they are forced to have their compensation tied closely to business outcomes (instead of something like "does their manager think they are doing a good job"), which have high variance; as a result of this variance and people being risk-averse, expected returns need to be high in order to compensate these entrepreneurs.

It's not obvious to me that this information asymmetry exists in EA. E.g. I expect "Buck thinks X is a good group leader" correlates better with "X is a good group leader" than "Buck thinks X will be a successful startup" correlates with "X is a successful startup".

It seems like there might be a "market failure" in EA where people can reasonably be known to be doing good work, but are not compensated appropriately for their work, unless they do some weird bespoke thing.

Charles He @ 2021-09-08T19:03 (+14)

You seem to be wise and thoughtful, but I don't understand the premise of this question or this belief:

One explanation for why entrepreneurship has high financial returns is information asymmetry/adverse selection: it's hard to tell if someone is a good CEO apart from "does their business do well", so they are forced to have their compensation tied closely to business outcomes (instead of something like "does their manager think they are doing a good job"), which have high variance; as a result of this variance and people being risk-averse, expected returns need to be high in order to compensate these entrepreneurs.

It's not obvious to me that this information asymmetry exists in EA. E.g. I expect "Buck thinks X is a good group leader" correlates better with "X is a good group leader" than "Buck thinks X will be a successful startup" correlates with "X is a successful startup".

But the reasoning [that existing orgs are often poor at rewarding/supporting/fostering new (extraordinary) leadership] seems to apply:

For example, GiveWell was a scrappy, somewhat polemical startup, and the work done there ultimately succeeded and created Open Phil and, to a large degree, the present EA movement.

I don't think any of this would have happened if Holden Karnofsky and Elie Hassenfeld had to, say, go into Charity Navigator (or a dozen other low-wattage meta-charities that we will never hear of) and try to turn it around from the inside. While being somewhat vague, my models of orgs and information from EA orgs do not suggest that they are any better at this (for mostly benign, natural reasons, e.g. "focus").

It seems that the main value of entrepreneurship is the creation of new orgs to have impact, both from the founder and from the many other staff/participants in the org.

Typically (and maybe ideally) new orgs are in wholly new territory (underserved cause areas, untried interventions) and inherently there are fewer people who can evaluate them.

It seems like there might be a "market failure" in EA where people can reasonably be known to be doing good work, but are not compensated appropriately for their work, unless they do some weird bespoke thing.

It seems that the now-canonized posts Really Hard and Denise Melchin's experiences suggest that exactly this has happened, extensively even. I think it is very likely that both of these people are not just useful, but are/could be highly impactful in EA and do not "deserve" the experiences they described.

[I think the main counterpoint would be that only the top X% of people are eligible for EA work or something like that and X% is quite small. I would be willing to understand this idea, but it doesn't seem plausible/acceptable to me. Note that currently, there is a concerted effort to foster/sweep in very high potential longtermists and high potential EAs in early career stages, which seems invaluable and correct. In this effort, my guess is that the concurrent theme of focusing on very high quality candidates is related to experiences of the "production function" of work in AI/longtermism. However, I think this focus does not apply in the same way to other cause areas.]

Again, as mentioned at the top, I feel like I've missed the point and I'm just beating a caricature of what you said.

Ben_West @ 2021-09-08T21:51 (+3)

Thanks! "EA organizations are bad" is a reasonable answer.

(In contrast, "for-profit organizations are bad" doesn't seem like a reasonable answer for why for-profit entrepreneurship exists, as adverse selection isn't something better organizations can reasonably get around. It seems important to distinguish these, because it tells us how much effort EA organizations should put into supporting entrepreneur-type positions.)

Jamie_Harris @ 2021-09-02T22:52 (+2)

Maybe there's some lesson to be learned. And I do think that EAs should often aspire to be more entrepreneurial.

But maybe the main lesson is for the people trying to get really rich, not the other way round. I imagine both communities have their biases. I imagine that lots of people try entrepreneurial schemes for similar reasons to why lots of people buy lottery tickets. And I'd guess that this often has to do with scope neglect, excessive self-confidence / sense of exceptionalism, and/or desperation.

Buck @ 2026-02-01T03:05 (+29)

@Ryan Greenblatt and I are going to record another podcast together (see the previous one here). We'd love to hear topics that you'd like us to discuss. (The questions people proposed last time are here, for reference.) We're most likely to discuss issues related to AI, but a broad set of topics other than "preventing AI takeover" are on topic. E.g. last time we talked about the cost to the far future of humans making bad decisions about what to do with AI, and the risk of galactic scale wild animal suffering.

Noah Birnbaum @ 2026-02-01T16:05 (+7)

I would love to hear any updated takes on this post from Ryan.

Pablo @ 2026-02-02T15:00 (+2)

I’d be interested in seeing you guys elaborate on the comments you make here in response to Rob’s question that some control methods, such as AI boxing, may be “a bit of a dick move”.

ParetoPrinciple @ 2026-02-03T03:33 (+1)

Much of the stuff that catches your interest on the 80,000 Hours website's problem profiles would be something I'd like to watch you do a podcast on, or costly if I end up getting it from people whose work I'm less familiar with. Also, neurology, cogpsych/evopsych/epistasis (e.g. this 80k podcast with Randy Nesse, this 80k podcast with Athena Aktipis), and especially more quantitative modelling approaches to culture change/trends (e.g. 80k podcast with Cass Sunstein, 80k podcast with Tom Moynihan, 80k podcasts with David Duvenaud and Karnofsky). A lot of the intermediate-yet-upstream-type stuff with the AI situation, even deepfakes etc., is hard to hear takes on from people who haven't really established that they do serious thinking.

Oscar Sykes @ 2026-02-01T12:48 (+1)

What have you learnt about running organisations and managing from running Redwood?

Oscar Sykes @ 2026-02-01T12:47 (+1)

In the last episode you talk about how you were considering shutting down Redwood and joining labs. Why were you initially considering it and why did you eventually decide against it?

Buck @ 2021-01-11T02:01 (+28)

I know a lot of people through a shared interest in truth-seeking and epistemics. I also know a lot of people through a shared interest in trying to do good in the world.

I think I would have naively expected that the people who care less about the world would be better at having good epistemics. For example, people who care a lot about particular causes might end up getting really mindkilled by politics, or might end up strongly affiliated with groups that have false beliefs as part of their tribal identity.

But I don’t think that this prediction is true: I think that I see a weak positive correlation between how altruistic people are and how good their epistemics seem.

----

I think the main reason for this is that striving for accurate beliefs is unpleasant and unrewarding. In particular, having accurate beliefs involves doing things like trying actively to step outside the current frame you’re using, and looking for ways you might be wrong, and maintaining constant vigilance against disagreeing with people because they’re annoying and stupid.

Altruists often seem to me to do better than people who instrumentally value epistemics; I think this is because valuing epistemics terminally has some attractive properties compared to valuing it instrumentally. One reason this is better is that it means that you’re less likely to stop being rational when it stops being fun. For example, I find many animal rights activists very annoying, and if I didn’t feel tied to them by virtue of our shared interest in the welfare of animals, I’d be tempted to sneer at them.

Another reason is that if you’re an altruist, you find yourself interested in various subjects that aren’t the subjects you would have learned about for fun--you have less of an opportunity to only ever think in the way you think in by default. I think that it might be healthy that altruists are forced by the world to learn subjects that are further from their predispositions.

----

I think it’s indeed true that altruistic people sometimes end up mindkilled. But I think that truth-seeking-enthusiasts seem to get mindkilled at around the same rate. One major mechanism here is that truth-seekers often start to really hate opinions that they regularly hear bad arguments for, and they end up rationalizing their way into dumb contrarian takes.

I think it’s common for altruists to avoid saying unpopular true things because they don’t want to get in trouble; I think that this isn’t actually that bad for epistemics.

----

I think that EAs would have much worse epistemics if EA wasn’t pretty strongly tied to the rationalist community; I’d be pretty worried about weakening those ties. I think my claim here is that being altruistic seems to make you overall a bit better at using rationality techniques, instead of it making you substantially worse.

EdoArad @ 2021-01-11T09:33 (+3)

I tried searching the literature a bit, as I'm sure that there are studies on the relation between rationality and altruistic behavior. The most relevant paper I found (from about 20 minutes of search and reading) is The cognitive basis of social behavior (2015). It seems to agree with your hypothesis. From the abstract: