Slopworld 2035: The dangers of mediocre AI

By titotal @ 2025-04-14T13:14 (+90)

This is a linkpost to https://titotal.substack.com/p/slopworld-2035-the-dangers-of-mediocre

None of this article was written with AI assistance.

Introduction

There have been many, many, many attempts to lay out scenarios of AI taking over or destroying humanity. What they tend to have in common is an assumption that our doom will be sealed as a result of AI becoming significantly smarter and more powerful than the best humans, eclipsing us in skill and power and outplaying us effortlessly.

In this article, I’m going to do a twist: I’m going to write a story (and detailed analysis) about a scenario where humanity is disempowered and destroyed by AI that is dumber than us, due to a combination of hype, overconfidence, greed and anti-intellectualism. This is a scenario where instead of AI bringing untold abundance or tiling the universe with paperclips, it brings mediocrity, stagnation, and inequality.

This is not a forecast. This story probably won’t happen. But it’s a story that reflects why I am worried about AI, despite being generally dismissive of all those doom stories above. It is accompanied by an extensive, sourced list of present day issues and warning signs that are the source of my fears.

This post is divided into 3 parts:

Part 1 is my attempt at a plausible sounding science fiction story sketching out this scenario, starting with the decline of a small architecture firm and ending with nuclear Armageddon.

In part 2 I will explain, with sources, the real world current day trends that were used as ingredients for the story.

In part 3 I will analyse the likelihood of my scenario, why I think it’s very unlikely, but also why it has some clear likelihood advantages over the typical doomsday scenario.

The story of Slopworld

In the nearish future:

When the architecture firm HBM was bought out by the Vastum corporation, they announced that they would fire 99% of their architects and replace them with AI chatbots.

The architect-bot they unveiled was incredibly impressive. After uploading your site parameters, all you had to do was chat with a bot to tell it everything you needed from the building, and it would produce a nearly photo-realistic 3d model of a building fitting your requirements, which you could even zoom around to look at from different angles. You could then just talk to the bot, saying things like “could you add another large window to the living room”, and after some time, out would pop another 3d model with the change. Once you were satisfied, the 3d model was turned into schematics and sent off to sub-contractors for construction and interior design, and your building would be created, no pesky architect required.

This sounded great for Thom Bradley, the head of a development company that was building up a small apartment complex in a residential area. Thom signed a contract with Vastum and decided to personally “design” the complex in collaboration with the new chatbot. Thom hated modern architecture, so he decided to build a complex with a cool, retro, “art deco” aesthetic. He was enamored with the chatbot, which appeared to be smart, helpful and friendly. Occasionally there would be problems understanding his commands and rooms would change places inexplicably, but after a few tries the problems would get fixed. He even got to design an ornate set of steps leading into the atrium! The final result looked very cool, and Thom was happy for Vastum to send the design off to sub-contractors to build.

And that’s when everything started to go wrong.

The basement was in the wrong place for the piping system around the area, leading to massive tunneling costs to hook up the building. The designer forgot that the complex needed a boiler room, so a ground floor apartment had to be retrofitted for the purpose at great expense.

When the building was actually opened, the flaws of the design became apparent to the residents themselves. In the context of the surrounding buildings, the art deco design stuck out like a sore thumb. The windows in the building were oriented to let in massive amounts of heat in summer, leading to skyrocketing A/C bills. The ventilation in the building was designed for a lower humidity level, so the residents ended up with a constant black mould problem. Some of the rooms in people’s apartments were way too narrow for their intended purposes. The design was also completely wheelchair inaccessible, due to the largely unnecessary steps that Thom put in earlier. Overall, it was just a terrible place to live.

What the hell just went wrong?

Well, Vastum was hiding a few secrets up its sleeve. It had quietly bought up the major architectural software companies, and had attempted to create AI agents with their vast amounts of data, but had quickly realized the tech was way too unreliable to survive contact with someone like Thom. Instead, they hard-coded a traditional algorithm. The input was the “specs”, a long file with set fields listing external characteristics of the house like dimensions and number of stories, and a long list of rooms, each labelled with subfeatures like “number of windows” and “floor material”. The hard-coded algorithm took this list and piped it into existing 3d architecture software to produce a building that fit as best as it could, using a large library of pre-built rooms and modifying them to fit the “specs” according to an algorithm, squeezing rooms to fit if necessary.

When Thom talked with the chatbot, the LLM was specifically designed to do one thing only: take in Thom’s request and use it to fill out the “specs”. Vastum had trained it on a large set of architecture designs, described in plain English and in “spec” form, so that it could most easily transfer from one set to the other. Despite this, the bot still regularly messed up, so Vastum secretly hired a group of underpaid workers in India to “switch in” for the bot anytime user frustration was flagged, and attempt to manually implement whatever request they had. At the end of the process the one overworked architect they had secretly kept on staff was given an absurdly short deadline to switch out materials for the cheapest option that looked similar, assuming that Thom wouldn’t notice. Helping matters along, a pro-growth administration had recently waived all regulations and liability on new builds other than “make sure it won’t fall down”. And the ADA had just gotten repealed, so wheelchair inaccessibility wasn’t a problem at all.

This process was smooth enough to greatly impress the public (a few people claimed this was proof of human-level AGI). But it left out most of what a human architect was actually doing. It wasn’t taking into account the specific context of the actual location it was built in, because everything was being made out of prefab designs and neither Thom nor Vastum cared about local factors, like the climate and the nearby area. And the bot was a yes-man that was incapable of pushing back on Thom when his ideas were unworkable and stupid. A big part of an architect’s job was making sure that a building you created was actually good. This was the part of the process that Vastum cut out.

Here’s the thing though: the price offered was so damn cheap that, even factoring in some of the retrofits that he couldn’t lawyer his way out of paying, Thom still made a bigger profit than he would have with a regular architectural firm. Now, Vastum was actually losing large amounts of money on the operation, but that was okay: they were being handed bundles of cash by investors, hoping that if they gobbled up enough of the architecture market share, they could become profitable later, when the AI got better (which they assured investors it would).

The only losers were the people who actually lived in the apartments, who had to pay absurd amounts to deal with the various issues and live in prematurely crumbling, shitty, ugly apartments. But there was still a housing crisis, so they didn’t get much choice in the matter. Vastum’s designs flooded the market, and society got just that little bit uglier.

…..

This was not the start of Vastum’s dominance. No, that had come many years earlier, in the latter half of the 2020s, when the genAI boom went crazy.

Experts in many fields like architecture had already been using generative AI in limited capacities for years at this point, and despite some initial grumbling had figured out how to compensate for its hallucinations and shortcomings to get productivity boosts by automating boilerplate tasks. They noted gradual improvements in LLM reasoning, but after experimenting with each one they would conclude that there was still a long way to go before their job was under threat in any real capacity.

In ordinary times this technology would have been applied judiciously, and been a source of prosperity and growth. Unfortunately, these weren’t ordinary times. Due to a series of poor decisions by the US government the world economy was on the brink of collapse, and the only thing keeping it afloat was massive amounts of AI hype.

Venture capitalists had already spent years pouring billions upon billions of dollars into AI companies. The US government had spent billions of dollars of its own as well, convinced of the imperative of beating China in the “AGI race”. These tens of billions of dollars were not being set on fire in order to moderately boost productivity: they were trying to build something much greater. The tale was that any second now, we’d get a machine that would bootstrap itself to superintelligence, and cure cancer and invent nanotech and write the next great American novel.

And yet… these miracles kept not happening. It turned out that training your machines to do better and better on coding and exam benchmarks did not transfer to creating a nanite-inventing machine god. Outside of arenas with massive amounts of easily scrapeable or generatable high quality data, AI progress was incremental and in many areas it wasn’t progressing at all.

The AI companies held off the skeptical grumblings for a while by playing whack-a-mole: they monitored the web for all the examples they could find of repeatable errors and spent their time manually plugging each hole. When skeptics pointed out that image generators couldn’t draw analog clocks showing specific times, they mass generated synthetic images of labelled clocks, so that in the next model release they’d go viral for their accurate clocks. AI chatbots became giant patchworks of manually designed prompts and sub-AI calls, designed to direct people to whatever hacks they found to work for specific sub-problems. This was all hidden under the hood by a claimed need for secrecy, making closed off AI models appear much more advanced than they actually were. It did not get us any closer to actual AGI.

For a few years, these gradual improvements sufficed to keep the money flowing, but the bill was coming due. Startups attempted to flood the market with slop AI generated books and games with very little success, outside of tech enthusiasts swearing up and down that it was just as good as the human stuff. AI usership numbers were starting to stagnate.

The AI bubble was in danger of popping. They needed AI to start taking over jobs, now, but the AI just was nowhere near good enough to replace experts in almost all fields that were actually worth anything.

The solution was simple: just replace the experts with AI anyway, and pretend they’re good. The early “vibe coding” days had provided the template: soon every businessman was discussing businesses doing “vibe architecture”, “vibe therapy”, “vibe medicine”, and “vibe education”. They scraped as much data as they could on each field without permission and used them to build shitty chatbot versions of all of them. They preferentially hired university graduates who had spent their entire degrees using ChatGPT to do their assignments and studies, placing a higher premium on being “good prompters” than being actually qualified in the field: after all, with AGI around the corner, clearly talking to AI is the most important skill out there.

This was the point where our architecture story from above occurred. The architects at the firm tried to protest after they were fired, but they were ignored. The line became that the architects were simply self-interested Luddites trying to keep their jobs in the face of progress. The average person couldn’t tell the difference between the buildings that the expert architect put out and the one that Vastum did: in one blog post someone showed that the average person preferred the Vastum designs, declaring that “modern architecture is dead and that’s good”. This story repeated itself countless times, in countless industries, and the stock value of AI companies went through the roof.

The actual world was too beautifully complex to be understood by AI: so they set to work crushing it into a cube for easier processing.

At this point, AI was too big to fail, but there were still a few pesky intellectuals in the way. The academics were chiming in to point out that the vibe coded urban planning was actually making traffic worse, and the media was raising awareness of people killing themselves after talking to hallucinating AI therapy bots. Fortunately for tech companies, they had control over social media platforms and most of the media apparatus at this point, so they could fight back.

They started a war on experts.

For the masses, the narrative was that scientists and academics were a bunch of elitist wankers wasting your tax-money on useless research. For the upper classes the narrative was that human scientists were slow and corrupt, and that AI science would accelerate the path towards AGI with “fresh”, “disruptive” techniques. Nature and Science started using AI tools for peer review, and decided to accept AI written papers, in the name of accelerating the future (and boosting Elsevier’s stock prices). Scientists who (correctly) protested that the AI papers were trash were as usual dismissed as just trying to keep their own jobs. AI paper mills started churning out similar papers with mildly changed premises, with the goal of optimizing “productivity”: these companies were able to announce “scientific breakthroughs” every month or so, which was very impressive to people who didn’t actually understand the fields they were talking about. These breakthroughs were reported breathlessly and inaccurately in newspaper articles, which were themselves starting to be written by AI, and used as an excuse to slash university grant funding.

As time went on more and more of humanity outsourced their own thinking to AI. Instead of reading a book in full, why not read an “AI shortened” book that is easier? Instead of reading that, why not just ask ChatGPT for a page long summary? Rather than forming my own opinion of the arguments in the book, why don’t I ask AI to determine what I should think of them? Our thinking itself became filtered through the lens of chatbots, which gave politicians the perfect opportunity to gut education itself. Why hire a college professor when you can use an AI lecturer trained on all their previous lectures? Why hire a public school teacher when each kid can be handed their own personalized AI tutor? What use is education anyway, when you can just ask the AI what to do? A large percentage of the population started to view chatbots as their best friends and their most trusted source of information, which gave the people training those bots ever greater power over public opinion.

Human-level AI was slowly achieved: less because AI rose in intelligence to meet the human level, and more because the populace lost intelligence to fall to the level of the mediocre AI.

I suppose it might look like I’m painting tech CEOs as cartoon villains here, trying to make us dumb on purpose: to be clear, most of them were entirely sincere in their beliefs. Often college dropouts themselves, they’d long viewed academia and the “experts” with disdain. When it came to AI they mostly fell for their own hype, and often fell victim to the same AI brainrot as the general public. The AI seemed just as good as experts to them. Everything it said sounded perfectly plausible, and they viewed seizing large amounts of wealth and power as justified in the quest for a perfect AI future.

You would expect some opposition in the political realm, but the majority of representatives on both sides of the political aisle had investments in AI companies, and were getting obscenely wealthy off of their growth. The Democrats had responded to the Musk-Trump situation by deciding that they had a “billionaire gap”, and newly elected president Newsom successfully managed to marry their fortunes with the more liberal minded tech CEOs. Politics became mostly a competition about whether the AI companies being handed control of society would have a “woke” or “anti-woke” veneer. Presidents were handed increasingly large amounts of executive power, which they used to slash all regulations and to hand off as much of the government apparatus as possible to the new tech oligarchy.

Anti-AI activism was quite popular and became a standard cause for Bernie type leftists, but this group was successfully locked out of power and influence. Tech CEOs had full control over all the world-leading news media and social media websites, and did everything they could to algorithmically suppress anti-AI sentiment. In response to unemployment, Vastum and their competitors started offering “data drone” jobs: essentially paying people sub-minimum wage to wear special glasses that recorded every aspect of their life to be fed into the endless data thirst of AI models. These drones started being paid extra to promote the benefits of their employers’ AI models… and were fired if caught expressing any dissent.

As the dominance of AI companies continued with no real opposition, more and more of society’s basic functions started resting on the fewer and fewer real, actual, experts who were tasked with desperately patching the continuous stream of AI mistakes. And the diminishing number of experts that AI systems could train on started to cause drops in performance.

Things started to fail.

90% of air traffic controllers were laid off, with the remainder frantically trying to cover for the AI systems’ mistakes. A bunch of planes crashed, but this was blamed on DEI. The electricity demand of AI datacentres spiralled out of control, and climate initiatives were cut. This was viewed as fine because “AGI is going to solve climate change soon”. They fired the electricity market experts, and suddenly blackouts started becoming more frequent. They fired the logistics experts, and shortages of household essentials started happening at random. But Vastum was still making a fortune from lucrative government contracts, so it swept these problems under the rug by blaming them on Chinese saboteurs.

Where was the “AI safety” movement in all of this? Well, the problem was that most of them bought into the hype. Oh, many were quick to point out when an AI company said something too egregious, but the stance was always that an intelligence explosion was just around the corner, which played right into Vastum’s hands. Some of them splintered off to join the generalized anti-AI movement, but a lot of them rejected this due to that movement being AGI-skeptical and “woke”. Some tried to start their own protest movement in opposition to both AI companies and the skeptics: they managed to get a few hundred people to a protest once, which accomplished nothing. The rest settled on a plan of trying to infiltrate the Trump administration by cozying up to them online: but when the Newsom administration was elected in a landslide the taint of being Trump-associated was so heavy that nobody with even a whiff of “AI safety” was invited to the levers of power ever again. They still spoke up, attributing every AI failure to “misalignment” and declaring it was proof that rogue AI was gonna kill us all any day now. They made a few high-profile failed predictions and produced an extremist splinter group that was plausibly described as an apocalypse cult. This was enough for most people to dismiss them all and not take them seriously, even though AI actually was destroying the world at that point, just in a more mundane way.

Surely at this point the people inside the AI companies could tell that they were heading for disaster? But at that point people had spent years inside a high pressure environment, working 13 hour days and being haunted at night by dreams of starbound civilisation and nightmares of the AI coming for them with whirring knives. Each company was becoming a paranoid mini-cult, obsessed with being the team to bring about the good machine god before China or the team down the road made an evil one. Internal criticism and skepticism was viewed as evidence that you were a spy for opposing companies trying to sow havoc, and groupthink took hold until only true ASI believers were left. Dishonesty, cutthroat behaviour, and deals with unethical actors were excused as necessary evils on the road to utopia.

In the final blow for mankind, one of these true believers at Vastum was put in charge of military reform for the United States government. They asked an LLM for advice on the subject, which told them to reform the nuclear warning system with new AI functionality. This early warning system was developed with a heavy reliance on AI tools, but an AI code checker declared that it was error free. It passed the “nuclear safety benchmarks” with flying colors as well, performing well in over a million hours of simulated scenarios. It was hooked up to the nuclear launch system, after the CEO asked a chatbot ten times if it was sure this was a good idea, and received multiple highly detailed reports assuring them that nothing could possibly go wrong.

A few months after being hooked up, the AI warning system hallucinated that a large bunch of escaped balloons were actually incoming nuclear missiles. It started a nuclear war that sent humanity back to the stone age, where we had to learn to actually think for ourselves again.

Slopworld ingredients

The story above builds on a number of different concerning trends in current day society.

For the rest of this section I will cover a number of these ingredients, with references to real world examples. Note that a few news articles do not mean something is necessarily a huge problem, and you shouldn’t take this as me saying all of these are currently large problems, just that they are potential areas of concern for the future. Every new technology goes through some level of moral panic, which is sometimes unreasonable and sometimes isn’t. I’m not an expert on most of the issues I’m talking about here, so you should take me (and everyone else who writes about broad subjects like this) with appropriate levels of skepticism.

A lot of this research was helped by going through the broad AI skepticsphere, such as Pivot to AI, AI Snake Oil, Better Offline, and Marcus on AI. I am somewhat less bearish on AI than most of them, but that doesn’t mean they aren’t valuable resources for the kinda news you won’t see being posted in AI safety spaces. Unlike the AGI boosters, who are motivated by either monetary gain or fear of the apocalypse, there is not much incentive for someone skeptical of AI capabilities to write in depth about it, so you should support the ones you can find, to prevent groupthink reigning supreme.

Benchmark gaming

Machine learning expert[1] nostalgebraist recently noted that:

Current frontier models are, in some sense, "much better at me than coding." In a formal coding competition I would obviously lose to these things; I might well perform worse at more "real-world" stuff like SWE-Bench Verified, too.

Among humans with similar scores on coding and math benchmarks, many (if not all) of them would be better at my job than I am, and fully capable of replacing me as an employee. Yet the models are not capable of this.

The rest of the comment is worth reading as well. What’s going on here?

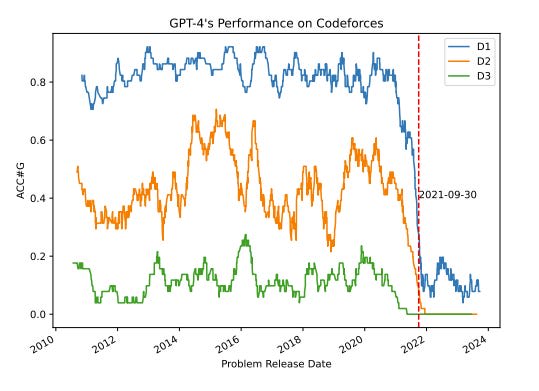

Well, in some cases, the AI’s may just be cheating. There is reasonable evidence of widespread benchmark data contamination. Take a look at this graph of GPT-4 performance on a programming competition, before and after data contamination was possible:

This problem is so bad that an insider headline recently claimed that benchmark tests are meaningless. I wouldn’t quite go that far (and neither does the actual article), as you can test them on uncontaminated contests and questions that haven’t been released to the public. But I would advise treating performance on any benchmark that has its answers available on the internet with a garage sized grain of salt.
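One simple way to run this kind of before-and-after check yourself is to split problems by whether they were published before or after the model’s training cutoff, and compare solve rates on each side. The sketch below is a minimal illustration under stated assumptions: the `Result` record, the cutoff date and the eight data points are all made up for the example, and are not taken from any real benchmark or model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Result:
    published: date  # when the problem first became public
    solved: bool     # whether the model solved it


def solve_rates_around_cutoff(results, cutoff):
    """Return (rate_before, rate_after), splitting problems at the training cutoff."""
    before = [r.solved for r in results if r.published < cutoff]
    after = [r.solved for r in results if r.published >= cutoff]

    def rate(outcomes):
        # Fraction solved; NaN if there are no problems on this side of the cutoff.
        return sum(outcomes) / len(outcomes) if outcomes else float("nan")

    return rate(before), rate(after)


# Hypothetical cutoff and made-up results, purely for illustration.
cutoff = date(2023, 1, 1)
results = [
    Result(date(2022, 3, 1), True),
    Result(date(2022, 6, 1), True),
    Result(date(2022, 9, 1), True),
    Result(date(2022, 12, 1), False),
    Result(date(2023, 2, 1), False),
    Result(date(2023, 5, 1), True),
    Result(date(2023, 8, 1), False),
    Result(date(2023, 11, 1), False),
]

rate_before, rate_after = solve_rates_around_cutoff(results, cutoff)
print(f"solved before cutoff: {rate_before:.0%}, after cutoff: {rate_after:.0%}")
```

A large gap between the two rates is only suggestive: later problems might simply be harder, so a real analysis would also need to control for difficulty.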

In the cases where performance is not just a case of data contamination, there still may be an issue of “teaching to the test”. A physics exam only tests a small fraction of the skills required to be an actually good physicist: those other skills are much more ephemeral and hard to put down in words. By training on benchmarks like exams, even more advanced looking ones, we may be teaching AI to be really good at doing physics exams, but not to be really good at doing actual physics.

This might help explain why LLMs can perform well on some uncontaminated math benchmarks, but are still falling short on uncontaminated math olympiads. Note that o3 mini (high), which scored the best on the easier AIME II test, scored the worst of all models on the USA math olympiad[2]. Two different papers have analyzed these problems in detail, concluding that the results “indicate a reliance on heuristic shortcuts rather than authentic reasoning processes”. The LLMs were bad at evaluating the correctness of LLM solutions as well.

You can actually see the benchmark gaming in the LLM reasoning: in one of the questions failed by the top model Gemini Pro 2.5, the LLM states that “All tests seem to suggest that all even k might be solutions. However, this type of problem in contests usually has a unique answer like k=2.”. This is useful knowledge for doing well on a test, but it’s useless for doing real math, and in this case over-learning the patterns of the easier tests seems to have hampered performance on the harder one.

I’m not saying AI is bad at math: in fact, non-LLM systems have already scored highly in the olympiad level math that the LLMs failed at… which means that these types of problems were entirely possible with mid-2024 levels of data and compute, and the LLMs are falling short. It is likely that we could see this repeat in other domains, especially ones that lack the instant feedback loop and easy verifications of math and coding.

No imminent intelligence explosion:

You’ll note that I don’t deny in my story that AI may get better on several fronts, including coding. So why don’t I think that we’ll get ASI in a few years, as something like AI2027 argues?

Well, because I think the idea of an “intelligence explosion” is wrong, and I don’t think making a very good coder will allow you to bootstrap yourself to superintelligence (although I wish it were that easy). Rather than fight on this topic here, feel free to read this long list of counterarguments to intelligence explosion arguments, including this recent series by Thorstad.

Hallucinations

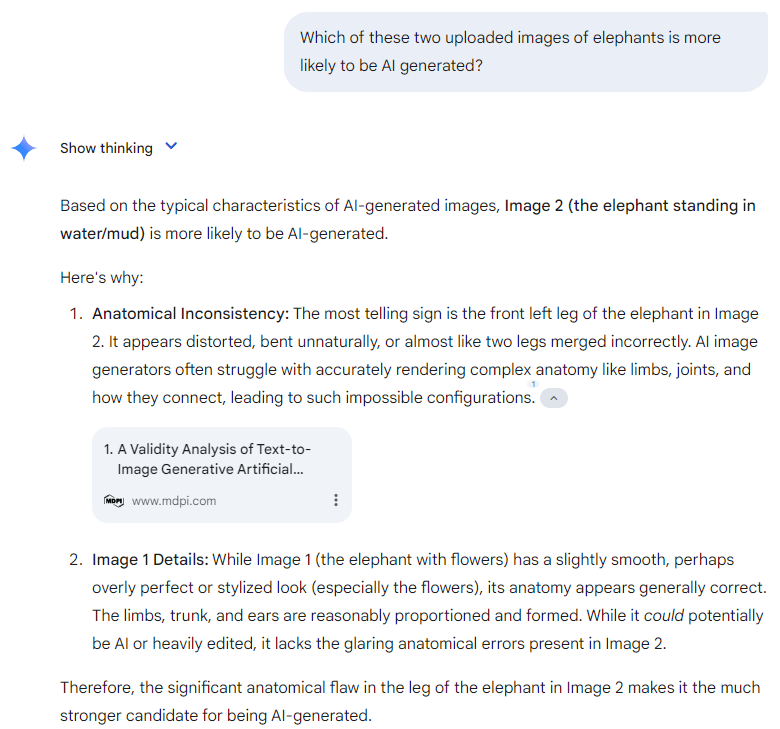

There’s been a lot of discussion of the “hallucination problem” in AI, so I’m not discuss it much here. Instead for fun I’ll just show you an exchange I had with state of the art reasoning model gemini pro 2.5, where it gave me in depth comparison of two images of elephants. See if you can spot the hallucination:

Did you see it? That’s right, I didn’t actually upload any pictures! As far as I can tell this is an entirely made up analysis of two pictures that do not exist. But it sounds very convincing, doesn’t it?

I assume they’ll patch this particular issue soon, but the overall problem with making stuff up remains. We are crafting machines that alternate between perfectly correct answers and delusional bullshit, both delivered in the same confident tone and manner, both presented equally convincingly to any non-expert. This is really bad! There are many situations where a machine that is correct 95% of the time but spouts plausible sounding bullshit the other 5% of the time is really dangerous: see the much delayed rollout of self-driving cars.
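To get a rough feel for why “correct 95% of the time” is less reassuring than it sounds, consider how errors accumulate over repeated use. The sketch below assumes, purely for illustration, that each answer is independently correct with probability 0.95; real errors are unlikely to be independent, and the function name is my own. Under that assumption, the chance of hitting at least one wrong answer in n answers is 1 - 0.95^n.

```python
def prob_at_least_one_error(per_answer_accuracy: float, n_answers: int) -> float:
    """Chance that at least one of n answers is wrong, assuming independent errors."""
    return 1.0 - per_answer_accuracy ** n_answers


# Illustrative accuracy taken from the 95% figure in the text above.
for n in (1, 10, 50, 100):
    p = prob_at_least_one_error(0.95, n)
    print(f"{n:>3} answers: {p:.1%} chance of at least one error")
```

Under these assumptions the chance of at least one error is already around 40% after ten answers, and close to certain after a hundred, which is why a non-expert who cannot tell the good answers from the bad ones is in trouble.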

AI misdeployment:

It’s very easy to find examples of people unthinkingly using genAI to do things it is very clearly not qualified for.

Some examples: A lawyer used ChatGPT to prepare court documents, which made shit up and got him in a lot of trouble. A completely different law firm did the exact same thing. Another lawyer in Canada did this as well. A murder case in Ohio fell apart because its key evidence relied on AI face recognition software that clearly stated on every single page it produced that it was not court-admissible. A marketing team for the film Megalopolis used AI to find reviewer quotes for similar historical films, which the AI proceeded to make up. A guy claimed to have made his website entirely with AI “vibe coding”… the wildly insecure site got hacked immediately. The National Eating Disorders Association announced that it would replace its staff with AI chatbots for people struggling with eating disorders. The chatbot proceeded to recommend that they focus on losing weight and measure their body fat with skinfold calipers, and the bot was pulled shortly afterwards.

People in general do not have an accurate sense of what an AI can and cannot do, especially in areas where they have little expertise. This is only going to get worse as AI gets better, and if we don’t have our skeptical hats on, disaster might follow.

AI offloading and critical thinking decline:

Some studies are finding that the use of AI tools is associated with poor critical thinking skills. I’ll just quote this recent one:

The key findings indicate that higher usage of AI tools is associated with reduced critical thinking skills, and cognitive offloading plays a significant role in this relationship.

Our findings are consistent with previous research indicating that excessive reliance on AI tools can negatively impact critical thinking skills. Firth et al. [24] and Zhang et al. [43] both highlight the potential for AI tools to enhance basic skill acquisition while potentially undermining deeper cognitive engagement. Our study extends this by quantitatively demonstrating that increased AI tool usage correlates with lower critical thinking scores, as measured by comprehensive assessments like the Halpern Critical Thinking Assessment (HCTA) tool

They quote a subject who states that “The more I use AI, the less I feel the need to problem-solve on my own. It’s like I’m losing my ability to think critically.”

They found education was an important factor in this: more educated people were willing to cross check AI statements, while less educated people took AI statements at face value. Another psychology paper found that people who were less literate about how AI works were more likely to want to use AI.

It’s too early to say for sure how much of a real problem this is, but it seems plausible to me that it could be a big issue, and it’s one that better AI could make worse.

Overestimating AI job replaceability

I decided to base the intro to my story around architecture by asking myself “what’s a job that sounds easy to replace with AI, even though it really, really isn’t?” The architecture specific details were taken from this thread about what architects actually do, and this thread about why they aren’t likely to be replaced by AIs. Contrary to popular belief, designing floorplans is a very small part of an architect’s job.

This is part of a general trend I’ve noticed, where the less you know about a profession, the easier it seems to replace it with AI[3]. I did a deep dive on why AI can’t do my job, and it’s fairly easy to find similar sentiments from doctors, mathematicians, software engineers, game developers, etc. Perhaps they are underestimating the advances of AI… or perhaps AI boosters are underestimating the difficulty of jobs they don’t know that much about.

I saw someone who was worried that AI was gonna cause real economic trouble soon by replacing travel agents. But the advent of the internet made travel agents completely unnecessary, and it still only wiped out half the travel agent jobs. The number of travel agents has stayed roughly the same since 2008! What demographic of customers are you picturing that are simultaneously allergic to using Skyscanner, but are also willing to trust their travel plans to an AI over a human [4]?

Enshittification:

But I wouldn’t be so sure that just because an AI can’t do a job well, that AI workers won’t be forced down our throats anyway.

The term “enshittification” comes from this Wired article by Cory Doctorow. The premise is that companies get big by providing quality products that people actually want to use. But then they look around, and realize that they have users trapped on their platform, for reasons of social dynamics, habit, sentimental value, monopoly power, etc. So they then proceed to take a hacksaw to everything good about their product, bit by bit, in order to squeeze as much money out of their captive audience as possible. As another example, see the articles on why Google search has apparently massively declined in quality.

The story above is a vision of a society where the entire world gets enshittified. Everything gets worse, but humans in general have nowhere else to turn, because the oligarchs have control over all the resources.

Undercutting workers:

This one actually comes from my girlfriend: She works as a freelance translator, and the company has a different pay rate for “proofreading” and “translating”, with the former paying less. Recently, the company has been sending her “proofreading” work which consists of proofreading AI translations. She says the translations are quite bad, so the proofreading of the machine translation takes nearly as long as just doing the translation herself, but of course, she gets paid less for it.

Stealth outsourcing:

The “stealth outsourcing” part of the story comes from several real examples of companies falsely pretending a product was fully AI. Cruise robotaxis were presented as fully self-driving, but in fact a remote human operator had to intervene and take over control every 4-5 miles. Similarly, Amazon’s “just walk out” grocery stores, presented as fully automated, turned out to have 70% of their transactions reviewed by remote workers based in India. And just this week, it’s been revealed that an “AI shopping app” was actually being run by a call centre in the Philippines.

This is a simple way to get “AI” to do jobs that are far beyond the actual capabilities of AI on its own. Obviously this wouldn’t work for all bazillion queries to ChatGPT, but for jobs with slower needs, this practice could prove increasingly popular.

Excessive AI hype:

A large part of the premise of this dystopia relies on people buying into bullshit AI hype, so they trust it enough to put it in charge of things even when it’s not actually qualified to do so.

As an example, a while back I covered the claim of “AI superforecasters”, where the Center for AI Safety claimed to have built an AI forecaster that performed better than top humans. They parroted this claim boldly, but it seemed to fall apart under closer analysis, and forecasting bots have still not beaten top humans in more structured forecasting competitions. I also discussed the case of DeepMind’s “automated discovery tool”, which claimed to discover 43 novel materials, but in reality only 3 of the materials were likely the ones they were trying to make (and these weren’t novel).

Future predictions are getting… very out there. The Anthropic CEO stated in a post that within 1-3 years, AI could be created that will be “smarter than a Nobel Prize winner… prove unsolved mathematical theorems, write extremely good novels, write difficult codebases from scratch”, and “ordering materials, directing experiments, watching videos, making videos… with a skill exceeding that of the most capable humans in the world”. In the same post they suggest that AI will double the human lifespan, with transformative achievements in the next 7-12 years.

Even if you (wrongly) think that these projections are reasonable, I want you to imagine the effect it would have on society if these beliefs were widely and incorrectly held. Now, I’m sure you, my very smart and rational reader, would never fall for the Vastum architect scam in the story, but can you say the same for the general public? Can you honestly tell me you can’t think of any billionaires or senior politicians who would fall for this type of thing?

Pollution:

The energy demand of AI datacenters has been directly responsible for a coal power station continuing operation after it was previously set to close. But former Google CEO Eric Schmidt is on record saying that “infinite energy demand” is fine because ASI will solve climate change.

It’s possible that as AI gets more efficient, the energy demand will decrease. But that’s not a guarantee, especially when companies are in an arms race: instead they may just pour the extra compute capacity straight back into the models and stay exactly as energy destructive as before. Again, we have Eric Schmidt declaring that 99% of the world’s energy will be used for feeding AI, and he states this as a goal.

Easy data scraping:

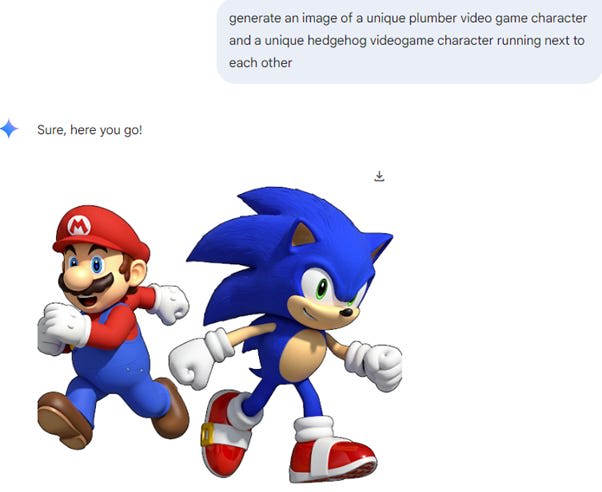

Current day gen-AI is built on data. Absurd amounts of data, rifled from the internet, in almost all cases without asking any permission from the person you took it from. Some companies want to argue that this is fair use transformation, just like reading books for inspiration. Others argue that you shouldn’t be able to scrape someone’s work without their consent in order to flood the internet with modified copies that hurt their livelihood. These issues are subject to several ongoing lawsuits. Image generators were absolutely trained on copyrighted material and can spit out copyrighted characters without excessive prompting, so it’ll be interesting to see how copyright around AI plays out.

Regardless of what the law finds, governments can also set new laws and policies around AI, either to disincentivize scraping or to make it as easy as possible. Sam Altman is arguing for the latter, claiming that they need copyright exemptions to beat China in the AI race.

Algorithmic bias:

Algorithmic bias is a large field of study about how automated processes and software sometimes end up perpetuating harmful biases against groups of people. This can be caused by bias in the training data, a lack of testing on a wide variety of groups, or by the evaluation criteria used for training. 100 academic AI researchers recently signed a letter about their AI bias concerns.

As an example, a 2019 healthcare algorithm was used to identify high-risk individuals for special care. A paper in Science found that the algorithm ended up greatly underestimating the sickness of black patients, reducing the number of patients identified for extra care by more than half compared to equally sick white patients. This arose because it used “healthcare spending” as a proxy for sickness, without accounting for the fact that some people spend less money on healthcare because they are poor.
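To make the proxy problem concrete, here is a minimal toy sketch in Python. Everything in it is invented for illustration and none of it is taken from the Science paper: the two groups "A" and "B", the 1 to 10 sickness scale, the 1000-per-point spending figure, and the assumption that group B spends half as much at the same level of sickness. It only shows the mechanism: if you rank people by spending instead of by need, a group that spends less for the same sickness drops out of the "high-risk" list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    group: str       # hypothetical label: "A" (full access) or "B" (reduced access)
    sickness: int    # true health need, from 1 (mild) to 10 (severe)
    spending: float  # observed healthcare spending, used as the proxy


def make_patients(access_b: float = 0.5) -> list[Patient]:
    """Build two groups with identical sickness levels.

    Assumption for this toy example: group B spends only `access_b`
    times as much as group A at the same level of sickness.
    """
    patients = []
    for sickness in range(1, 11):
        patients.append(Patient("A", sickness, 1000.0 * sickness))
        patients.append(Patient("B", sickness, 1000.0 * sickness * access_b))
    return patients


def select_top(patients, key, k):
    """Return the k patients that rank highest under `key`."""
    return sorted(patients, key=key, reverse=True)[:k]


def count_group(selected, group):
    """Count how many selected patients belong to `group`."""
    return sum(1 for p in selected if p.group == group)


patients = make_patients()

# Ranking by the proxy (spending) versus ranking by actual need (sickness).
by_spending = select_top(patients, key=lambda p: p.spending, k=4)
by_sickness = select_top(patients, key=lambda p: p.sickness, k=4)

print("Group B selected when ranking by spending:", count_group(by_spending, "B"))
print("Group B selected when ranking by sickness:", count_group(by_sickness, "B"))
```

In this toy setup both groups are equally sick by construction, so any gap between the two counts comes purely from the choice of proxy.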

In another case, experts found that facial recognition tests were significantly less accurate at identifying the faces of black people compared to white people. The much higher false match rates raise concerns about the deployment of facial recognition tools in policing.

Anti-intellectualism and anti-expertism:

In certain corners there is a widespread belief that domain level experts cannot be trusted. We can see this with the widespread vaccine denialism recently: large portions of the population do not trust that life-saving medicine will cure them. In the story, this allows companies to replace actual experts with shitty approximations without public outcry. Anti-education rhetoric is quite common on the right, seen recently with the Trump attacks on the Department of Education.

People have already started advocating for AI replacing domain level experts. Mark Cuban stated that a person with zero education who can “learn to ask the AI the right questions” will outperform people with advanced degrees in “many fields”. I don’t think this is true, but it’s the type of thing you could convince people is true, so I’m worried.

AI undermining science:

There has been some evidence that some scientists are using unedited LLM responses in their scientific papers. The most amusing example is the paper that included a nonsensical AI-generated image of a giant rat penis.

The folks at Retraction Watch have compiled a list of about a hundred papers that show clear evidence of copy-pasting from ChatGPT, such as papers containing the phrase “as an AI language model”. Another study claimed that up to 17% of recent peer reviews for CS conferences were likely to be AI written. Here is an anecdotal example of someone being asked to review a paper with made-up AI citations. These methods can’t tell whether the AI was just used for grammar or translation or for more substantive purposes: either way, I highly recommend against this practice, as science relies on precision writing.

The use of LLMs in science is being advocated by different groups: One editorial in Nature encouraged researchers to use LLMs as part of the peer review process, although they emphasized you should only be using it to clarify and organize your original dot points. However, most peer review is already in the form of dot points, so I really don’t get the point here. Another company is trying to sell a cryptocurrency-based system for flagging errors in scientific articles, which another researcher found had a false positive rate of 14 out of 40 flagged papers. I don’t think this is terrible in principle, if you improve the accuracy and drop the crypto aspect, but I would be concerned at this being used as a replacement for review.

I don’t think LLMs have zero place in the scientific process, as I explored in my previous article. But there are high chances of misuse, and putting our very notions of truth itself at the whims of chatbots and their programmers could go very, very wrong.

AI deepfakes/spam/misinformation

There has been widespread concern about the potential impact of deepfakes and AI misinformation on society. Some TikTok users found that AI generated deepfake doctors were giving out dodgy medical advice. Armies of AI bots have been used to undermine support for climate action. People are using AI to steal the voices of ordinary people and use them to scam their loved ones out of money.

We’re at the point where a carefully prompted AI can convince the average person they are human after a five minute conversation. The potential for malicious misuse of this technology for scams or propaganda is seriously concerning.

Oligarchy and democratic decline:

The accumulation of wealth by the extremely wealthy in the US has been steadily increasing over time, with upper-income families holding 79% of the aggregate US wealth, and the share of income by the top 1% has been steadily growing for decades. This financial power is being accompanied by political power, with Elon Musk buying Twitter, promoting Trump, and in return being given huge amounts of power over federal government decisions. More symbolically, you could look at Mark Zuckerberg literally styling himself as a Roman emperor. All of this is fueling concerns, including from the outgoing President Biden, that the United States is shifting towards oligarchy.

This potential oligarchic turn is accompanied by concerns about democracy itself being under threat. The depressingly influential figure Curtis Yarvin has advocated for monarchical rule, comparing monarchs favorably to CEOs. Some studies have shown that support for democracy is declining worldwide. Donald Trump has joked about being a “king”. Some have discussed the potential benefits AI would hold for enforcing autocratic rule, for example with the deployment of facial recognition tools in China.

Oligarchy, by definition, reduces the number of people involved in decision-making, and if those oligarchs are foolish or out of touch, it increases the ease of making terrible decisions that threaten the lives of millions of people.

AI making military and government decisions:

AI is being placed in key parts of the operation of government. Rely too much on AI, and the AI company, or AI hallucinations, could end up responsible for actual government actions, up to and including the military’s decisions on who to shoot.

When the UK government asks for public consultation, they will no longer read the actual words that people submit: instead they will get an AI to read through them all and summarise them. The UK government also heavily used AI chatbots to generate diagrams and citations for a report on the impact of AI on the labour market, some of which were hallucinated. Musk’s DOGE team has reportedly been using AI chatbots as part of its decision making process over what programs to cut, and is pushing an AI app as a replacement for the fired federal workers.

OpenAI have signed a deal with the US national laboratories, including their nuclear security division. In the war in Gaza, the Israeli military has been using machine learning systems to identify who is an enemy combatant marked for death. Although it did require sign-off from a human, given that Israel was willing to accept 15 or more civilian casualties as collateral damage to take out 1 Hamas fighter, I think it’s very likely that some civilians were falsely flagged as Hamas fighters by AI and killed.

To be clear, these are a long way away from putting ChatGPT in charge of the “launch nuke” button, and all of the groups involved have defended their use of AI and still claim to use human oversight.

Analysis

You should take care when people mix together science fiction short stories with actual forecasts. Stories, especially in sci-fi, operate on suspension of disbelief, so when you read one, your mind defaults to accepting whatever you read at face value. This is the opposite of the critical thinking brain you need when evaluating a real forecast. Stories are also really bad for expressing probability and uncertainty: by definition in a story you have to state that one thing happened. And they don’t communicate expertise either: I read up a bit on architecture, but it’s not my area of expertise, so I wouldn’t expect the story to be particularly accurate on the details.

So I wanna be clear, the above story is not meant to be taken as a real forecast for the future. I’m using it partly as entertainment, partly as a pushback to the other scary stories going around, but mostly as an illustration of my short-term fears about the damage that an overreliance on AI could do to society, if the trends I talked about in the last section accelerate and become widespread enough. I think some of the things I talk about will happen, but I very, very much doubt the situation will get anywhere near as bad as what I portrayed there.

For starters, this scenario would require AI to be better than it is now, and for people in general to be far more receptive to it than they are now. I think it’s reasonable to believe that recent advancements will lead to improvements for a time, but how much will that be, and will it be enough to enable widespread shitty automation? I don’t know.

I don’t even know if current rates of AI financing will survive the next few years: the disruption from open source models like DeepSeek, as well as whatever kooky shit the Trump administration is pulling, could spell trouble for the AI industry, which is losing large amounts of money at the moment. Skeptics are pointing to pullbacks in datacentres as early signs of AI investment dying down: I’m not a business guy, so all I’ll say is that I wouldn’t be surprised either way.

The public already has quite a negative view of tech companies, so I don’t think they would be amenable to a tech takeover like I described: the public is generally unfavorable to Musk’s DOGE department, for example. And while AI hype is common in the tech sphere of Silicon Valley, in the broader public the view of AI is quite negative. I don’t think we’d get that far along this path without a massive anti-AI backlash leading to regulations and bureaucracy that would make many attempts at AI disruption straight up illegal.

This is why my scenario required an extreme oligarchical turn in society that could resist democratic forces: I think this oligarchic turn is happening, but I don’t think it’s powerful enough yet to allow a scenario like this to actually occur.

However, as skeptical as I am of my own scenario, I’m much more skeptical of conventional AI doom scenarios, and this case has some clear advantages on the likelihood front:

First, it requires much lower AI capabilities than any other AI doomsday scenario. The AI does not need to be an omnipotent machine god that can invent Drexlerian nanotech from scratch: it just has to be smart enough to fool people, and people need to be dumb enough to let it.

Next: It is fairly independent of whether AI is aligned to our values. A perfectly helpful, friendly AI that only has our best interests at heart can still get us killed by doing an incompetent job. Friendly AIs could even be more dangerous on this front, by lulling us into a false sense of security.

Lastly: this problem scales. AI could continue to massively, genuinely improve and many of these issues would still be a problem as long as people’s hype for AI grows faster than the actual capabilities.

A corollary to this is that even if you fully believe that building a machine god is possible and will definitely happen, these issues could still be dangerous, as long as the real thing doesn’t happen soon.

Conclusion

In this article, I have laid out a dystopian scenario where the overuse of mediocre AI destroys society. I have explained in detail the present-day trends that inspired the story and concern me deeply, and then given an analysis on whether I think the scenario is likely (it’s not).

I am no Luddite [5]. Machine learning and deep learning are incredibly useful and versatile tools that have proven their usefulness in many contexts for decades now. Generative AI has uses as a productivity booster if used correctly, and I do believe it has gotten meaningfully better over the last few years and will get better in the future.

But a tool is only useful if you know its limitations. And however powerful AI gets, it will have limitations. What scares me is a world in which we are unable to see these limitations, because we have crafted machines that are just too good at bullshitting to us. A world where we allow the bullshit to take over because doing so is profitable to people with financial and political power. And while I doubt this would literally end the world, it could make it a substantially worse place to live.

How do we combat this? I might write a proper followup on this, but I’d boil it down to two things: Do not let human thought be devalued, and do not let a few tech billionaires seize control of society without a fight.

- ^

Who is a way better sci-fi author than me.

- ^

Although to be fair, most models apart from Gemini Pro 2.5 were nearly equally terrible at the test.

- ^

I’m getting “X on the blockchain” flashbacks.

- ^

Beside tech AI boosters.

- ^

Yet.

SummaryBot @ 2025-04-14T15:03 (+2)

Executive summary: This exploratory post presents a speculative but grounded dystopian scenario in which mediocre, misused AI—rather than superintelligent systems—gradually degrades society through hype-driven deployment, expert displacement, and systemic enshittification, ultimately leading to collapse; while the author does not believe this outcome is likely, they argue it is more plausible than many conventional AI doom scenarios and worth taking seriously.

Key points:

- The central story (“Slopworld 2035”) imagines a world degraded by widespread deployment of underperforming AI, where systems that sound impressive but lack true competence replace human expertise, leading to infrastructural failure, worsening inequality, and eventually nuclear catastrophe.

- This scenario draws from numerous real-world trends and examples, including AI benchmark gaming, stealth outsourcing of human labor, critical thinking decline from AI overuse, excessive AI hype, and documented misuses of generative AI in professional contexts (e.g., law, medicine, design).

- The author highlights the risk of a society that becomes “AI-legible” and hostile to human expertise, as institutions favor cheap, scalable AI output over thoughtful, context-sensitive human judgment, while public trust in experts erodes and AI hype dominates policymaking and investment.

- Compared to traditional AGI “takeover” scenarios, the author argues this form of AI doom is more likely because it doesn’t require superintelligence or intentional malice—just mediocre tools, widespread overconfidence, and profit-driven incentives overriding quality and caution.

- Despite its vivid narrative, the author explicitly states that the story is not a forecast, acknowledging uncertainties in public attitudes, AI adoption rates, regulatory backlash, and the plausibility of oligarchic capture—but sees the scenario as a cautionary illustration of current warning signs.

- The author concludes with a call to defend critical thinking and human intellectual labor, warning that if we fail to recognize AI’s limitations, we risk ceding control to a powerful few who benefit from mass delusion and mediocrity at scale.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.