Are there important things that aren't quantifiable?

By Joseph @ 2022-09-12T11:49 (+9)

I'm reading Thinking in Systems: A Primer, and I came across the phrase "pay attention to what is important, not just what is quantifiable." It made me think of the famous Robert Kennedy speech about GDP (then called GNP): "It measures neither our wit nor our courage, neither our wisdom nor our learning, neither our compassion nor our devotion to our country, it measures everything in short, except that which makes life worthwhile."

But I found myself having trouble thinking of things that are important and non-quantifiable. I thought about health, but we can use a system like DALY/QALY/WALY. I thought about happiness, but we can easily ask people about experienced well-being. Courage could certainly be measured by setting up some type of elaborate (and unethical) scenario and observing people's reactions. Many of these are rough proxies for the thing we want to measure rather than the thing itself, but if the construct validity is high enough, I count these proxies as "good enough."

My rough impression as of now is that some things (such as courage) are difficult to measure and can't currently be quantified, but could be quantified with better techniques/technology. Are there things that we can't quantify?

Seth Gibson @ 2024-07-13T23:14 (+5)

This is basically what Hilary Greaves discussed in her talk on five approaches to moral cluelessness. Essentially, I agree that it is hard to come up with things that we care about that are not quantifiable, but that holds only over the short term.

None of the proxies you mention are good for measuring the total effect on the things we care about in the long term:

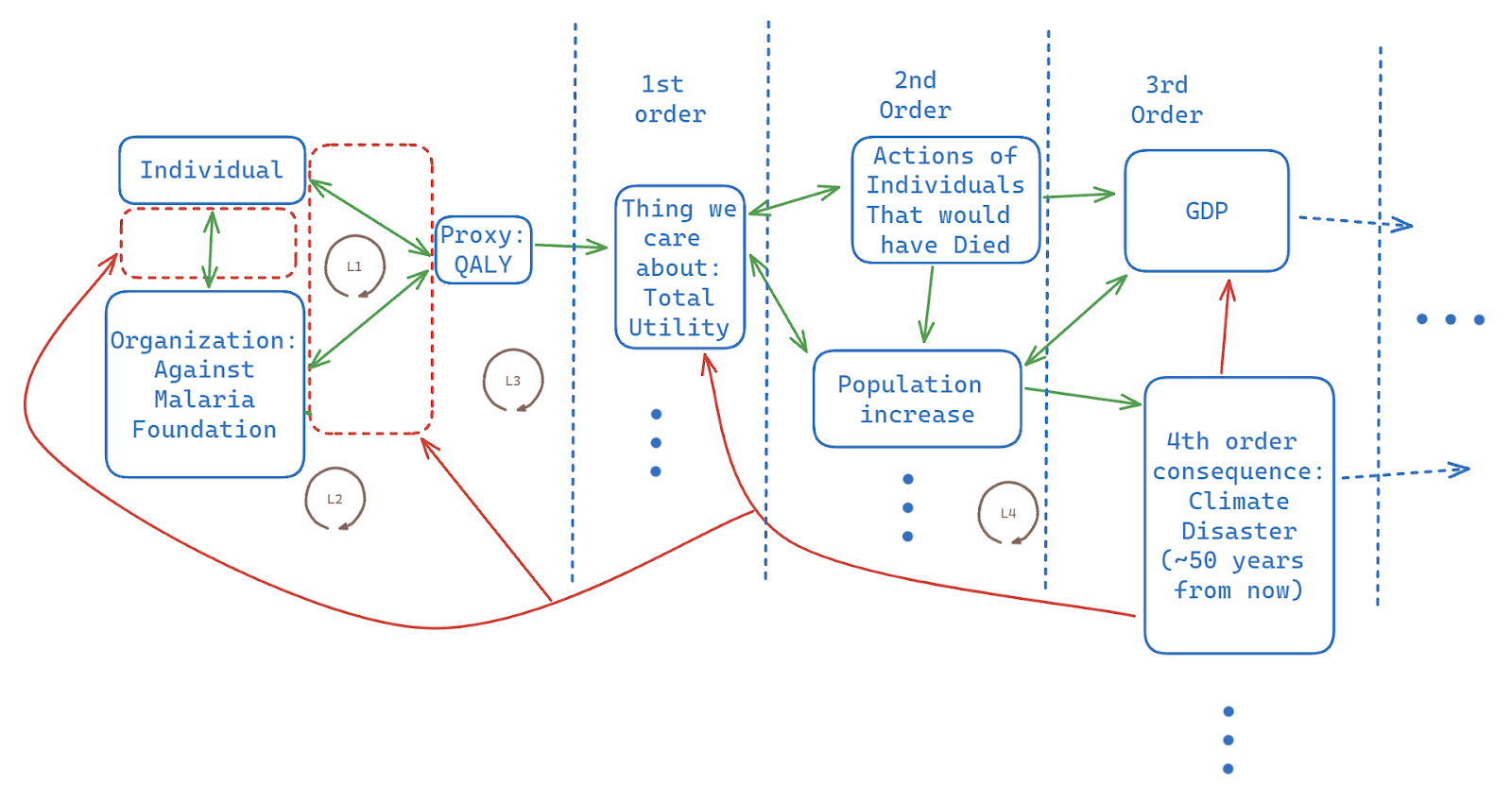

Loop 1 is a feedback loop in which a person gets motivated to provide bed-nets to those in other countries. The second- and third-order consequences lead to Loops 2 and 3, which break off the relationship between the individual and that cause area/organization. Loop 4 represents the decrease in the thing we care about due to effects from climate change.

This is just one example that I came up with of second- and third-order consequences (and one I hold with low confidence, since I did not fact-check it). There would of course be many more second- and third-order consequences, both positive and negative, and I would be interested to hear your thoughts. (There is a certain amount of dampening that goes on from second to third order, although I am not sure how much.)

It is extremely difficult for us to measure the overall net benefit or loss from things like population increase over the long term. If we develop an aligned superintelligent AI, however, it seems likely that things will go well in the long-run future (assuming we get there, which is uncertain). When it comes to AI safety, my personal qualitative "proxy" is "good alignment work."

My intuition is that while quantitative proxies are helpful, they do not tell us all that we need to know. How do you measure the impact of an AI safety paper? I do not really think you can. You can try to measure citations, but that only gets you so far, because you also need to worry about how much the research contributes to alignment, whether it comes at a good time, and so on. The same issues crop up for many other areas. Therefore, one has to rely on qualitative proxies in addition to quantitative ones.