Revisiting the karma system

By Arepo @ 2022-05-29T14:19 (+61)

Epistemic status: grumpy, not committed.

There was quite a lot of discussion of the karma system in the comments to the forum 2.0 announcement, but it didn’t seem very conclusive and as far as I know, hasn’t been publicly discussed since.

That seems like enough of a concern to be worth revisiting. My worries are:

- Karma concentration exacerbates groupthink by allowing a relatively small number of people to influence which threads and comments have greatest visibility

- It leads to substantial karma inflation over time, strongly biasing karma scores in favour of recent posts

Point 1) was discussed a lot in the original comments. The response was that because it’s a pseudo-logarithmic scale, this shouldn’t be much of a concern. I think we now have reasons to be sceptical of this response:

- There are plenty of people with quite powerful upvotes now: my strong upvotes are currently worth 5 karma (very close to 6), and I've posted fewer than a dozen top-level posts. That will give me 3-6 times the strong voting power of a forum beginner, which seems like way too much.

- Top-level posts are the main concern, but comments get much less attention, so the effect of one or two strong votes can stand out much more when you're skimming through them.

- The people with the highest karma naturally tend to be the most active users, who're likely already the most committed EAs. This means we already have a natural source of groupthink (assuming that the more committed you are to a social group, the more likely you are to have bought into any given belief it tends to hold). So groupthinky posts would already tend to get more attention, and giving these active users greater voting power multiplies this effect.

Point 2) is confounded by the growth of the movement and the user base: a higher proportion of posts have been made in later years, when there were more potential upvoters. Nonetheless, unless you believe that the number of posts has proliferated faster than the number of users (so that karma is stretched evenly), it seems self-evident that there is at least some degree of karmic inflation.

So my current stance is that, while the magnitude of both effects is difficult to gauge because of confounding factors, both effects are probably net negative in themselves, and therefore not something our tools should be amplifying; we might even want to actively counteract them. I don't have a specific fix in mind, though plenty were discussed in the comments section linked above. This is just a quick post to encourage discussion of alternatives… so over to you, commenters!

Holly Morgan @ 2022-05-29T15:51 (+52)

From the Forum user manual:

Posts that focus on the EA community itself are given a "community" tag. By default, these posts will have a weighting of "-25" on the Forum's front page (see below), appearing only if they have a lot of upvotes.

I wonder if this negative weighting for the Frontpage should be greater and/or used more, as I worry that the community looks too gossip-y/navel-gazing to newer users. E.g. I'd classify the 13 posts currently on the Frontpage (when not logged in) as only talking about more object-level stuff around half of the time:

Tagged 'Community'

- On funding, trust relationships, and scaling our community [PalmCone memo]

- Some unfun lessons I learned as a junior grantmaker

- On being ambitious: failing successfully & less unnecessarily

About the community but not tagged as such

- Revisiting the karma system

- High Impact Medicine, 6 months later - Update & Key Lessons [tagged as 'Building effective altruism']

Unclear

- Introducing Asterisk [tagged as 'Community projects']

- Open Philanthropy's Cause Exploration Prizes: $120k for written work on global health and wellbeing

- Monthly Overload of EA - June 2022

Not about the community

- Types of information hazards

- Will there be an EA answer to the predictable famines later this year?

- Energy Access in Sub-Saharan Africa: Open Philanthropy Cause Exploration Prize Submission

- Quantifying Uncertainty in GiveWell's GiveDirectly Cost-Effectiveness Analysis

- Are you really in a race? The Cautionary Tales of Szilárd and Ellsberg

Linch @ 2022-05-30T04:32 (+19)

I agree that -25 may not be enough at the current stage; maybe -75 or -100 would be better.

Stefan_Schubert @ 2022-05-29T14:47 (+45)

That will give me 3-6 times the strong voting power of a forum beginner, which seems like way too much.

Personally I'd rather want the difference to be bigger, since I find it much more informative what the best-informed users think.

Ideally the karma system would also be more sensitive to the average quality of users' comments/posts. Right now sheer quantity is awarded more than ideal, in my view. But I realise it's non-trivial to improve on the current system.

MichaelStJules @ 2022-05-29T15:40 (+11)

We could give weight to the average vote per comment/post, e.g. a factor calculated by adding all weighted votes on someone's comments and posts and then dividing by the number of votes on their comments and posts (not the number of comments/posts, to avoid penalizing comments in threads that aren't really read).

We could also use a prior on this factor, so that users with a small number of highly upvoted things don't get too much power.
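A minimal sketch of this factor, assuming the prior is implemented as simple shrinkage toward a forum-wide mean: the prior behaves like `prior_weight` pseudo-votes of size `prior_mean`. The function name and both parameters are hypothetical illustrations, not anything the Forum actually uses.

```python
def average_vote_factor(vote_values, prior_mean=1.0, prior_weight=20):
    """Average weighted vote received per vote, shrunk toward a prior.

    vote_values: weighted values of every vote on a user's posts and comments
    (e.g. +2, +6, -2). Dividing by the number of votes, not the number of
    comments, means comments nobody voted on do not drag the average down.
    prior_mean, prior_weight: hypothetical tuning knobs; the prior acts like
    `prior_weight` extra votes of size `prior_mean`, so a user with only a few
    big votes stays close to the prior.
    """
    total = sum(vote_values)
    n = len(vote_values)
    return (total + prior_mean * prior_weight) / (n + prior_weight)
```

With these defaults, three +6 strong upvotes give (18 + 20) / 23 ≈ 1.65, while 200 regular +2 votes give 420 / 220 ≈ 1.91, so a handful of highly upvoted items does not outweigh a long, consistent record.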

Charles He @ 2022-05-29T18:30 (+5)

I don't fully understand what you're saying, but my guess is that you're suggesting we should take a user's total karma and divide that by votes. I don't understand what it means to "give weight to this"—does the resulting calculation become their strong vote power? I am not being arch, I literally fully don't understand, like I'm dumb.

I know someone who has some data and studied the forum voting realizations and weak/strong upvotes. They are totally not a nerd, I swear!

Thoughts:

A proximate issue with the idea I think you are proposing is that, currently, voting patterns and the concentration of votes or strong upvotes differ systematically by the "class of post/comment":

- There is a class of "workaday" comments/posts that no one finds problematic and gets just regular upvotes on.

- Then there is a class of "I'm fighting the War in Heaven for the Lisan al Gaib" comments/posts that gets a large amount of strong upvotes. In my opinion, the karma gains here aren't earned by merit or content.

I had an idea to filter for this (that is sort of exactly the opposite of yours): downweight the karma of these comments by their environment, to get a "true measure" of the content. Also, the War in Heaven posts have a sort of frenzy to them. It's not impossible that giving everyone a 2x to 10x multiplier on their karma might contribute to this frenzy, so moderating this algorithmically seems good.

A deeper issue is that people will be much more risk averse in a system like this that awards them for their average, not total karma.

In my opinion, people are already too risk averse, in a way that prevents confrontation, but at least that leads to a lot of generally positive comments/posts, which is a good thing.

Now, this sort of gives a new incentive, to aim for zingers or home runs. This seems pretty bad. I actually don't think it is that dangerous/powerful in terms of actual karma gain, because as you mentioned, this can be moderated algorithmically. I think it's more a problem that this can lead to changes in perception or culture shifts.

MichaelStJules @ 2022-05-29T20:47 (+8)

Now, this sort of gives a new incentive, to aim for zingers or home runs. This seems pretty bad.

Hmm ya, that seems fair. It might generally encourage preaching to a minority of strong supporters who strong upvote, with the rest indifferent and abstaining from voting.

Charles He @ 2022-05-29T21:10 (+4)

This is a good point itself.

This raises a new, pretty sanguine idea:

We could have a meta voting system that awards karma or adjusts upvoting power dependent on getting upvotes from different groups of people.

Examples motivating this vision:

- If the two of us had a 15 comment long exchange, and we upvoted each other each time, we would gain a lot of karma. I don't think our karma gains should be worth hugely more than say, 4 "outside" people upvoting both of us once for that exchange.

- If you receive a strong upvote for the first time, from someone from another "faction", or from a person who normally doesn't upvote you, that should be noted, rewarded and encouraged (but still anonymized[1]).

- On the other hand, upvotes from the same group of people who upvoted you 50x in the past, and does it 5x a week, should be attenuated somewhat.
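A minimal sketch of the "attenuate repeat upvotes" idea, assuming a per-pair exponential decay: the k-th earlier vote from the same voter to the same author is scaled by 0.5 ** (k / half_life). The decay rule and `half_life` are hypothetical choices for illustration, not a proposal anyone in the thread specified.

```python
from collections import defaultdict


def attenuated_karma(votes, half_life=3.0):
    """Karma per author with repeat votes from the same voter decayed.

    votes: iterable of (voter, author, power) tuples in chronological order.
    The first vote a voter gives an author counts in full; each later one
    counts for 0.5 ** (k / half_life), where k is how many votes that voter
    has already given that author (hypothetical decay rule).
    """
    seen = defaultdict(int)      # (voter, author) -> votes already cast
    karma = defaultdict(float)   # author -> attenuated karma
    for voter, author, power in votes:
        k = seen[(voter, author)]
        karma[author] += power * 0.5 ** (k / half_life)
        seen[(voter, author)] += 1
    return dict(karma)


# Two users upvoting each other across a 15-comment exchange...
exchange = [("A", "B", 1), ("B", "A", 1)] * 15
# ...versus four outside readers each upvoting A once.
outsiders = [(f"O{i}", "A", 1) for i in range(4)]
```

Under these assumptions each participant in the exchange ends up with about 4.7 karma rather than 15, roughly comparable to the 4.0 that four outside upvotes give, which is the kind of outcome the first example above asks for.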

This is a little tricky:

- There's some care here, so we aren't getting the opposite problem of encouraging arbitrary upvotes from random people. We probably want to filter to get substantial opinions. (At the risk of being ideological, I think "diversity", which is the idea here, is valuable but needs to be thoughtfully designed and not done blindly.)

- Some of the ideas, like solving for "factions", involve "the vote graph" (a literal mathematical object, analogous to the friend graph on FB but for votes). This requires literal graph theory, e.g. I would probably consult a computer scientist or applied math lady.

- I could also see the view graph being useful.

This isn't exactly the same as your idea, but a lot of your idea can be folded in (while less direct, there are several ways we can bake in something like "people who get strong upvotes consistently are more rewarded").

[1] Maybe there is a tension between notifying people of upvoting diversity and giving away identity, which might be one reason why some actual graph theory probably needs to be used.

MichaelStJules @ 2022-05-29T21:56 (+8)

I think this is an interesting idea. I would probably recommend against trying to "group" users, because it would be messy and hard to understand, and I am just generally worried about grouping users and treating them differently based on their groups. Just weakening your upvotes on users you often upvote seems practical and easy to understand.

Would minority views/interests become less visible this way, though?

Charles He @ 2022-05-29T22:11 (+2)

I agree. There are issues. It seems complex and not transparent at first[1].

I would probably recommend against trying to "group" users, because it would be messy and hard to understand, and I am just generally worried about grouping users and treating them differently based on their groups. Just weakening your upvotes on users you often upvote seems practical and easy to understand.

Would minority views/interests become less visible this way, though?

I think the goal of using the graphs and building "factions" ("faction" is not the best word I would use here, like if you were to put this on a grant proposal) is that it makes it visible and legible.

This might be more general and useful than it seems and can be used prosocially.

For example, once legible, you could identify minority views and give them credence (or just make this a "slider" for people to examine).

Like you said, this is hard to execute. I think this is hard in the sense that the designer needs to find the right patterns for both socialization and practical use. Once found, the patterns can ultimately be relatively simple and transparent.

Misc comments:

- To be clear, I think the ideas I'm discussing in this post and reforms to the forum are at least a major project, up to a major set of useful interventions, maybe comparable to all of "prediction markets" (in the sense of the diversity of different projects it could support, the investment, and the potential impact that would justify it).

- This isn't something I am actively pursuing (but these forum discussions are interesting and hard to resist).

Guy Raveh @ 2022-05-29T22:47 (+3)

This sounds somewhat like a search-engine eigenvector thing, e.g. PageRank.

Charles He @ 2022-05-30T23:32 (+2)

Yes, I think that's right, I am guessing because both involve graph theory (and I guess in PageRank using the edges turns it into a linear algebra problem too?).

Note that I hardly know any more about graph theory.

Guy Raveh @ 2022-05-31T06:41 (+3)

PageRank involves graph theory mostly in the mere observation that there's a directed graph of pages linking to each other. It then immediately turns to linear algebra: the idea is that you want a page's weight to correspond to the sum of the weights of the pages linking to it (each divided by that page's number of outgoing links), and this is exactly the problem of finding an eigenvector of the graph's link matrix.

On second thought I guess your idea for karma is more complicated, maybe I'll look at some simple examples and see what comes up if I happen to have the time.
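The eigenvector idea can be sketched with textbook power iteration, assuming the standard damping factor of 0.85 and that pages with no outgoing links spread their weight evenly. Reading the edges as "voter upvoted author" would be one hypothetical way to apply it to a vote graph; nothing here reflects how the Forum computes karma.

```python
def pagerank(edges, nodes, damping=0.85, iters=100):
    """Power iteration for PageRank on a directed graph.

    edges: list of (src, dst) pairs meaning src links to (or, hypothetically,
    upvotes) dst. Returns a dict of weights summing to 1, approximating the
    principal eigenvector of the damped, normalized link matrix.
    """
    n = len(nodes)
    out = {v: [] for v in nodes}
    for src, dst in edges:
        out[src].append(dst)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iters):
        new = {v: (1.0 - damping) / n for v in nodes}
        for src in nodes:
            if out[src]:
                # Split this node's weight equally among the nodes it links to.
                share = damping * rank[src] / len(out[src])
                for dst in out[src]:
                    new[dst] += share
            else:
                # Dangling node: spread its weight evenly over all nodes.
                for dst in nodes:
                    new[dst] += damping * rank[src] / n
        rank = new
    return rank


ranks = pagerank([("A", "C"), ("B", "C"), ("C", "A")], ["A", "B", "C"])
```

In this toy graph C is linked to by two nodes, A inherits weight from C, and B receives no links, so the weights come out ordered C > A > B.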

Charles He @ 2022-05-31T12:28 (+2)

That’s interesting to know about PageRank. It’s smart that it goes straight to linear algebra.

I think building the graph requires data that isn’t publicly available, like the identities of voters and viewers. It might be hard to get a similar dataset to see whether a method works. Some of the “clustering techniques” might not apply to other data.

Maybe there is a literature for this already.

MichaelStJules @ 2022-05-29T20:30 (+8)

I don't fully understand what you're saying, but my guess is that you're saying is like, that a user's total karma and divide that by votes.

Ya, that's right, total karma divided by the number of votes.

I don't understand what it means to "give weight to this"—does the resulting calculation become their strong vote power?

What I proposed could be what determines strong vote power alone, but strong vote power could be based on a combination of multiple things. What I meant by "give weight to this" is that it could just be one of multiple determinants of strong vote power.

A deeper issue is that people will be much more risk averse in a system like this that awards them for their average, not total karma.

This is why I was thinking it would only be one factor. We could use both their total and average to determine the strong vote power. But also, maybe a bit of risk-aversion is good? The EA Forum is getting used more, so it would be good if the comments and posts people made were high quality rather than noise.

Also, again, it wouldn't penalize users for making comments that don't get voted on; it encourages them to chase strong upvotes and avoid downvotes (relative to regular upvotes or no votes).

Charles He @ 2022-05-29T18:33 (+2)

I have thought about a meta karma system, but one where the voting effect or output differs by context (so a strong upvote or strong downvote has a different effect on comments or posts depending on the situation).

This would avoid the current situation of "I need to make sure the comment I like ranks highly or has the karma I want" and also prevent certain kinds of hysteresis.

While the voting effect or output can change radically in this system based on context, IMO it's important to make sure that the latent underlying voting power of the user accumulates in a fairly mundane/simple/gradual way, otherwise you get these weird incentives.

MichaelStJules @ 2022-05-29T20:44 (+4)

Maybe this underweights many people appreciating a given comment or post and regular upvoting it, but few of them appreciating it enough to strong upvote it, relative to a post or comment getting both a few strong upvotes and at most a few regular votes?

Vaidehi Agarwalla @ 2022-05-30T00:05 (+8)

I think +6/7 votes are too high, because it's not clear who is voting when you see the breakdown of karma to votes. I end up never using my strong upvote, but would if it were in the 3-5 range.

I think it would also help if we had separate votes for "this is useful", "I agree with this", "this applies to me" and other things, and if we had in-post polls, especially for anecdotal posts.

Stefan_Schubert @ 2022-05-30T09:56 (+4)

I agree that a multi-dimensional voting system would have some advantages.

Linch @ 2022-05-30T04:30 (+6)

I'd be interested in an empirical experiment of looking at posts via unweighted karma vs weighted karma, though of course "ground truth" might be hard.
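One way to run that comparison, sketched under loose assumptions: score each post once by summed vote weights and once by counting each vote as +1 or -1, then measure how much the two rankings agree with Spearman's rank correlation. The input format and function names are hypothetical, the formula used skips the tie correction, and it says nothing about "ground truth".

```python
def rank_of(scores):
    """Map each item to its rank (0 = highest score); ties broken by key."""
    ordered = sorted(scores, key=lambda k: (-scores[k], k))
    return {k: i for i, k in enumerate(ordered)}


def spearman(a, b):
    """Spearman rank correlation of two score dicts over the same keys.

    Uses the classic 1 - 6*sum(d^2) / (n(n^2 - 1)) formula, which assumes
    no ties and at least two items.
    """
    ra, rb = rank_of(a), rank_of(b)
    n = len(a)
    d2 = sum((ra[k] - rb[k]) ** 2 for k in a)
    return 1 - 6 * d2 / (n * (n * n - 1))


def compare(posts):
    """posts: {post_id: [signed, nonzero vote weights]} (hypothetical format).

    Returns the rank agreement between weighted karma and one-person-one-vote.
    """
    weighted = {p: sum(v) for p, v in posts.items()}
    unweighted = {p: sum(1 if w > 0 else -1 for w in v) for p, v in posts.items()}
    return spearman(weighted, unweighted)


example = {"a": [9, 9], "b": [1, 1, 1, 1], "c": [1]}
```

In the toy example, two strong upvotes put post "a" first by weighted karma, while four regular upvotes put "b" first by vote count, giving a correlation of 0.5 rather than 1.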

Arepo @ 2022-05-30T08:39 (+4)

'Personally I'd rather want the difference to be bigger, since I find it much more informative what the best-informed users think.'

This seems very strange to me. I accept that there's some correlation between upvoted posters and epistemic rigour, but there's a huge amount of noise, both in reasons for upvotes and in subject areas. EA includes a huge diversity of subject areas, each requiring specialist knowledge. If I want to learn improv, I don't go to a Fields Medalist or a Pulitzer Prize-winning environmental journalist, so why should the equivalent be true on here?

Stefan_Schubert @ 2022-05-30T09:55 (+18)

I think that a fairly large fraction of posts is of a generalist nature. Also, my guess is that people with a large voting power usually don't vote on topics they don't know (though no doubt there are exceptions).

I'd welcome topic-specific karma in principle, but I'm unsure how hard it is to implement/how much of a priority it is. And whether karma is topic-specific or not, I think that large differences in voting power increase accuracy and quality.

Akhil @ 2022-05-29T15:48 (+3)

I don't think I am too convinced by the logical flow of your argument, which if I understand correctly is:

more karma = more informed = higher value on opinion

I think that at each of these steps (more karma --> more informed, more informed --> higher value of opinion), you lose a bunch of definition, such that I am a lot less convinced of this.

Stefan_Schubert @ 2022-05-29T17:34 (+2)

I'm not saying that there's a perfect correlation between levels of karma and quality of opinion. The second paragraph of my comment points to quantity of comments/posts being a distorting factor; and there are no doubt others.

Guy Raveh @ 2022-05-29T21:16 (+2)

Downvoted because I think "more active on the forum for a longer time" may be a good proxy for "well informed about what other forum posters think" (and even that's doubtful), but is a bad proxy for "well informed about reality".

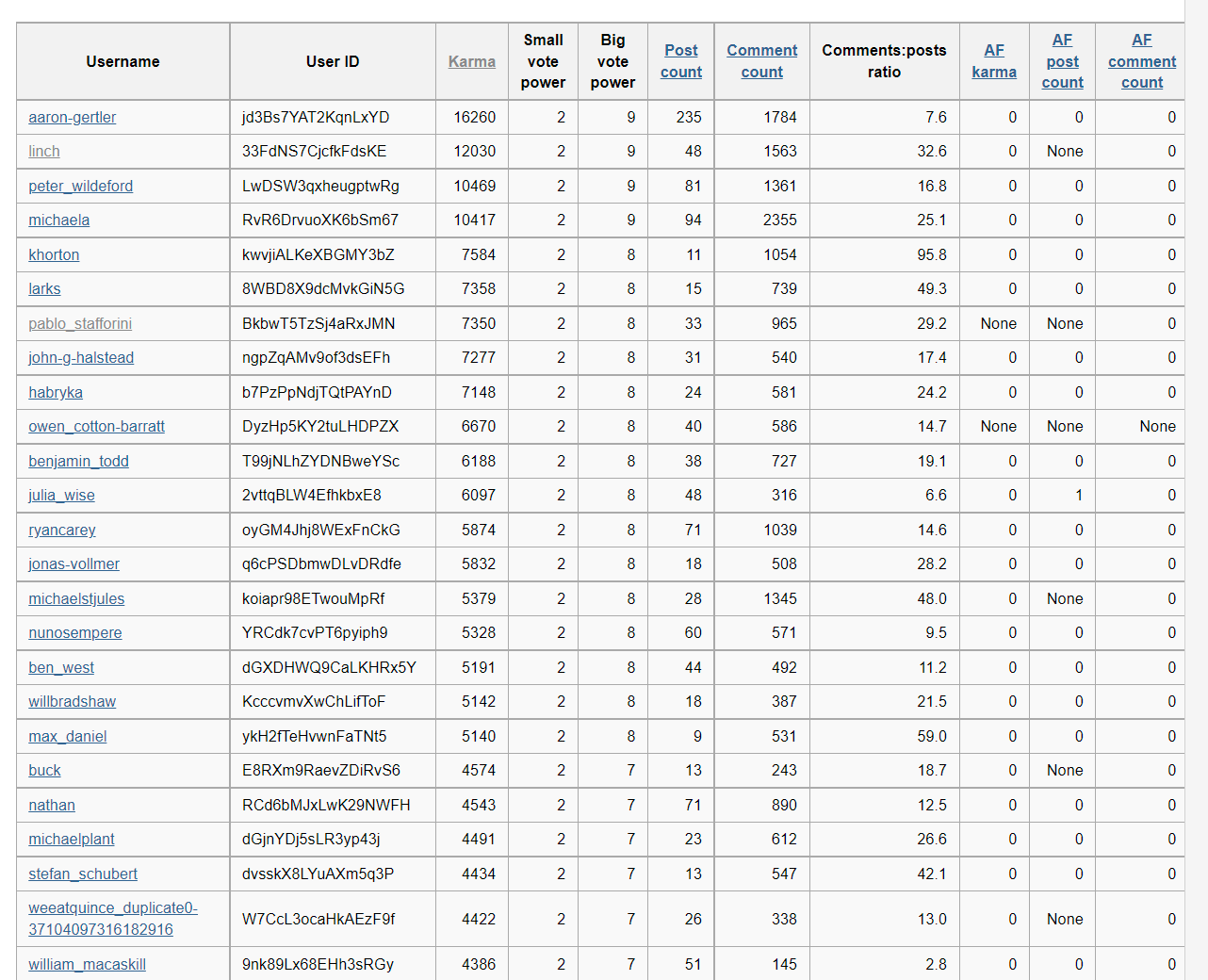

Charles He @ 2022-05-29T21:27 (+6)

Ok, this comment is ideological (but really interesting).

This comment is pushing on a lot of things; please don't take any of this personally.

(or take it personally if you're one of the top people listed here and fighting me!)

So below is the actual list of top karma users.

BIG CAVEATS:

- I am happy to ruthlessly, mercilessly attack the top-ranked person there on substantial issues, like their vision of the forum. Like, karma means nothing in the real world (but for onlookers, my choice is wildly not career optimal[1]).

- Some people listed are sort of dumb-dumbs.

- This list is missing the vast majority (95%) of the talented EAs and people just contributing in a public way, much less those who don't post or blog.

- The ranking and composition could be drastically improved

But, yes, contra you, I would say that this list of people do have better, more reasonable views of reality than the average person and probably the average EA.

More generally, I think the quality of people's views is positively correlated with karma.

Secondly, importantly, we don't need EAs on the forum to have the best "view of reality".

We need EAs to have the best views of generating impact, such as creating good meta systems, avoiding traps, driving good culture, allocating funding correctly, attracting real outside talent, and appointing EAs and others to be impactful in reality.

[1] Another issue is that there isn't really a good way to resolve "beef" between EAs right now.

Funders and other senior EAs, in the most prosocial, prudent way, are wary of this bad behavior and consequent effects (lock-in). So it's really not career optimal to just randomly fight and be disagreeable.

MichaelStJules @ 2022-05-29T23:59 (+8)

Some people listed are sort of dumb-dumbs.

I think I would disagree with this. At the very least, I think people on the list write pretty useful posts and comments.

Still, the ranking doesn't really match who I think consistently makes the most valuable comments or posts, and I think it reflects volume too much. I'm probably as high as I am mainly because of a large number of comments that got 1 or 2 regular upvotes (I've made the 5th most comments among EA Forum users).

(I don't think people should be penalized for making posts or comments that don't get much or any votes; this would discourage writing on technical or niche topics, and commenting on posts that aren't getting much attention anymore or never did. This is why I proposed dividing total karma by number of votes on your comments/posts, rather than dividing total karma by the number of your comments/posts.)

This list is missing the vast majority (95%) of the talented EAs and people just contributing in a public way, much less those who don't post or blog.

I agree.

The ranking and composition could be drastically improved

I agree that they could probably be improved substantially. I'm not sure about "drastically", but I think "substantially" is enough to do something about it.

Charles He @ 2022-05-30T02:28 (+2)

Thank you for the corrections, which I agree with. It is generous of you to graciously indulge my comment.

Guy Raveh @ 2022-05-29T22:40 (+3)

Ok, debate aside as it's 2am here, where does one get these data?

Charles He @ 2022-05-30T02:30 (+3)

It’s on Issa Rice’s site: https://eaforum.issarice.com/userlist?sort=karma

Stefan_Schubert @ 2022-05-29T21:47 (+2)

I'm not quite sure I follow your reasoning. I explicitly say in the second paragraph of my comment that "right now sheer quantity is awarded more than ideal".

MichaelStJules @ 2022-05-29T16:53 (+42)

A single user with a decent amount of karma can unilaterally decide to censor a post and hide it from the front page with a strong downvote. Giving people unilateral and anonymous censorship power like this seems bad.

IanDavidMoss @ 2022-05-29T17:58 (+19)

I would be in favor of eliminating strong downvotes entirely. If a post or comment is going to be censored or given less visibility, it should be because a lot of people wanted that to happen rather than just two or three.

MichaelStJules @ 2022-05-29T20:55 (+1)

Ya, I agree. I think the only things I strong downvote are things worth reporting as norm-breaking, like spam or hostile/abusive comments.

We could also just weaken (strong) downvotes relative to upvotes.

Habryka @ 2022-05-30T01:00 (+12)

Enough people look at the All-Posts page that this is rarely an issue, at least on LessWrong where I've looked at the analytics for this. Indeed, many of the most active voters prefer to use the all-posts page, and a post having negative karma tends to actually attract a bit more attention than a post having low karma.

MichaelStJules @ 2022-05-30T02:21 (+10)

Ah, I wasn't aware of the All-Posts page. That's helpful, but I'd wonder if it's also being used as much on the EA Forum. I'm probably relatively active on the EA Forum, and I only check Pinned Posts and Frontpage Posts, and I sometimes hit "Load more" for the latter.

Note also that one strong downvote might not put a post into negative karma, but just low positive karma, if the submitter has enough karma themself or others have upvoted the post.

Arepo @ 2022-05-30T08:47 (+4)

As a data point, I rarely look beyond the front page posts, and tbh the majority of my engagement probably comes from the EA Forum digest recommendations, which I imagine are basically a curated version of the same.

Pablo @ 2022-05-31T13:45 (+9)

Does this happen often enough for it to be a significant worry? I agree that this is a way the karma system could be abused, but what matters is whether it is in fact frequently abused in this way.

MichaelStJules @ 2022-05-31T14:27 (+4)

It's not clear people would even know it's an abuse of the system to strong downvote a post early on, rather than wait.

I don't have access to data on this, but here are a few related anecdotes, although none is a direct instance of it.

This post itself was starting to pick up upvotes and then I think either received a bunch of downvotes or a strong downvote (or people removed upvotes), so I switched my regular upvote into a strong one. I think it went from 20-25 karma to 11-16. I'd guess this wouldn't be enough to hide it from the front page, though, but if it had been hidden early on, I might not have made my comment starting this thread.

I've seen a few suffering-focused ethics-related comments get strong downvoted by users with substantial karma, in particular a few of my own comments on my shortform (some that I had shared on the 80,000 Hours website, and another in an SFE/NU FB group) and a comment about utilitarianism.net omitting discussion of negative utilitarianism. I'm not aware of this happening to posts, but I wouldn't be surprised if such users would do the same to posts, including early on. Some of these may have been around two years ago, though.

MichaelStJules @ 2022-05-31T16:14 (+2)

One option could be disabling strong downvotes (or their impact on sorting) on posts for the first X hours a post is up. Maybe 24 hours?
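A minimal sketch of this option, assuming the change applies only to the score used for front-page sorting (displayed karma untouched) and taking the suggested 24 hours as the window. `GRACE`, `sorting_score` and the vote format are hypothetical names for illustration.

```python
from datetime import datetime, timedelta

GRACE = timedelta(hours=24)  # the "X hours" from the suggestion; hypothetical value


def sorting_score(post_time, votes, now, grace=GRACE):
    """Score used for front-page sorting only (hypothetical).

    votes: list of (value, is_strong) tuples, e.g. (6, True) for a +6 strong
    upvote. While the post is younger than `grace`, strong downvotes are
    ignored for sorting; afterwards every vote counts as usual.
    """
    in_grace = now - post_time < grace
    return sum(v for v, strong in votes if not (in_grace and strong and v < 0))


posted = datetime(2022, 5, 29, 14, 19)
votes = [(2, False), (6, True), (-9, True)]
early = sorting_score(posted, votes, posted + timedelta(hours=2))
later = sorting_score(posted, votes, posted + timedelta(hours=30))
```

Here the post sorts at 8 during its first day and drops to -1 once the window closes, so a single early strong downvote can delay, but not permanently block, the effect other voters see.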

Stefan_Schubert @ 2022-05-31T16:42 (+3)

I think there are some posts that should be made invisible; and that it's good if strong downvotes make them so. Thus, I would like empirical evidence that such a reform would do more good than harm. My hunch is that it wouldn't.

Lizka @ 2022-05-29T15:25 (+30)

You can now look at Forum posts from all time and sort them by inflation-adjusted karma. I highly recommend that readers explore this view!

MichaelStJules @ 2022-06-30T19:21 (+12)

I think we need to adjust further, since most of the top posts are about 1 year old or newer, and 4 of the top 9 to 11 posts are about 3 months old or newer.

EDIT: Maybe rather than the overall average, use the average of the top N or top p% of posts from that month?

MichaelStJules @ 2022-06-30T20:46 (+2)

Or, instead of normalizing, just show the top N or p% of posts of each few months?

Aaron__Maiwald @ 2022-05-29T17:32 (+7)

Out of curiosity: how do you adjust for karma inflation?

JP Addison @ 2022-05-30T16:10 (+2)

Relative to the average of posts from roughly the same month. It's not based on standard deviation, just karma/(avg karma).
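A minimal sketch of that karma/(avg karma) adjustment, assuming "~the month" is simplified to the calendar month a post was published in and that each month's average karma is positive. The input format and function name are hypothetical; this is not the Forum's actual code.

```python
from collections import defaultdict
from statistics import mean


def inflation_adjusted(posts):
    """posts: list of (post_id, 'YYYY-MM', karma) tuples (hypothetical format).

    Returns {post_id: karma / mean karma of posts from the same month}.
    Only the posting date is used, not when the votes were cast.
    """
    by_month = defaultdict(list)
    for _, month, karma in posts:
        by_month[month].append(karma)
    avg = {m: mean(ks) for m, ks in by_month.items()}
    return {pid: karma / avg[month] for pid, month, karma in posts}


sample = [
    ("old", "2015-03", 30), ("old2", "2015-03", 10),
    ("new", "2022-05", 120), ("new2", "2022-05", 120),
]
```

With this sample, the 30-karma post from 2015 scores 1.5 (its month averaged 20), while a 120-karma post from 2022 scores only 1.0.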

MichaelStJules @ 2022-06-30T19:23 (+2)

I think we need to adjust further, since most of the top posts are about 1 year old or newer, and 4 of the top 9 to 11 posts are about 3 months old or newer.

Maybe rather than the overall average, use the average of the top N or top p% of posts from that month?
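A sketch of the "top p%" variant, assuming the baseline is the mean karma of the top `p` fraction of a month's posts (at least one post). `top_share_baseline`, `adjusted_karma` and the default `p` are hypothetical. `round` is used rather than `ceil` so that floating-point products like 0.1 × 30 do not spill into an extra post.

```python
def top_share_baseline(karmas, p=0.1):
    """Mean karma of roughly the top p fraction of a month's posts (at least one)."""
    k = max(1, round(len(karmas) * p))
    return sum(sorted(karmas, reverse=True)[:k]) / k


def adjusted_karma(karma, month_karmas, p=0.1):
    """A post's karma relative to the top-p baseline of its month (hypothetical)."""
    return karma / top_share_baseline(month_karmas, p)


month = [100, 50, 10, 5, 5, 5, 5, 5, 5, 10]
```

Normalizing against the month's best posts rather than its average makes the score insensitive to how many low-karma posts were published that month, which is one possible reason the plain average may still favour recent posts.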

Aaron__Maiwald @ 2022-05-30T21:53 (+1)

Thanks :)

karthik-t @ 2022-05-29T19:20 (+1)

I would imagine the forum has a record of the total voting power of all users, which is increasing over time, and the karma can be downscaled by this total.

Charles He @ 2022-05-29T20:14 (+2)

Yes, by the way, this is sort of immediately available.

You can see all downvotes/upvotes and whether they are weak or strong.

So a 4-tuple of votes is available for each comment/post.

IanDavidMoss @ 2022-05-30T17:06 (+4)

Sorry if I'm being dense, but where is this 4-tuple available?

Charles He @ 2022-05-30T23:27 (+2)

I sent a PM.

Tobias Häberli @ 2022-06-06T17:05 (+1)

That's very cool!

Does it adjust the karma for when the post was posted?

Or does it adjust for when the karma was given/taken?

For example:

The post with the highest inflation-adjusted karma was posted in 2014; in 2019 it had 70 karma from 69 votes, and it now sits at 179 karma from 125 votes. Does the inflation adjustment consider that the average size of a vote cast since 2019 was around 2?
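The "around 2" figure can be checked from the two snapshots, assuming votes were only added between them (no retractions). The helper name is hypothetical.

```python
def avg_vote_size(karma_before, votes_before, karma_now, votes_now):
    """Average karma per vote among votes cast between two snapshots.

    Assumes votes were only added, never retracted, between the snapshots.
    """
    return (karma_now - karma_before) / (votes_now - votes_before)


size = avg_vote_size(70, 69, 179, 125)  # (179 - 70) / (125 - 69) = 109 / 56
```

That comes to about 1.95 karma per vote since 2019, compared with roughly 1 (70 / 69) for the votes cast up to 2019.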

JP Addison @ 2022-06-06T18:59 (+3)

It's just the posted-at date of the post, and makes no attempt to adjust for when the karma was given. So if something has stayed a classic for much longer than its peers, it will rank highly on this metric.

Guy Raveh @ 2022-05-29T21:19 (+1)

I love this. I've really been noticing the upvote inflation, even though I only started being active here last November.

Arepo @ 2022-05-30T18:03 (+14)

For those who enjoy irony: the upvotes on this post pushed me over the threshold not only to 6-karma strong upvotes, but also to my 'single' upvotes now being double-weighted.

Guy Raveh @ 2022-06-01T20:08 (+3)

Meanwhile, most of my comments here "magically" went from a score ≥3 to a negative score (so, collapsed by default) over the last few hours, presumably due to someone strongly downvoting them. Including this one, which I find somewhat puzzling/worrying.

I know this comment sounds petty, but I do think it exemplifies the problem.

Edit: Charles below made this seem more reasonable.

Charles He @ 2022-06-01T20:55 (+3)

It collapses at -5, not at -1.

I didn’t downvote your comments and I think for most people, it’s bad when they go negative, like it’s a bad experience.

To be fair, the specific comment (which I didn’t downvote) says you think it’s a personal norm to downvote ideas you think are harmful (which is a subjective and complex judgement)…and they downvoted it.

Guy Raveh @ 2022-06-01T21:08 (+3)

Thanks, this makes better sense than what I thought.

DavidB @ 2024-07-11T18:48 (+10)

Thanks, Arepo. I think that this is a really important conversation. Although I've been interested in EA for quite some time, I only recently joined the EA Forum. There are some things that I would like to post, but I can't because I have no karma.

I'm not sure what a good alternative to the karma system is. I've often thought that a magazine or an academic journal on EA would be worthwhile, but I assume that it would do little to reduce group think since the peer reviewers would likely be EA's thought leaders and the same sorts of people with the most karma. It may also be that we don't need a system at all. People could then search for the content that interests them. Of course, this would cause other problems. But I think it's at least worth entertaining.

P.S. I hope this comment earns me some karma. I need it to write original posts, not just comments!

Arepo @ 2024-07-14T06:01 (+2)

I remember finding getting started really irritating. I just gave you the max karma I can on your two comments. Hopefully that will get you across the threshold.

smallsilo @ 2022-05-30T08:51 (+8)

A suggestion that might preserve the value of giving higher karma users more voting power, while addressing some of the concerns: give users with karma the option to +1 a post instead of +2/+3, if they wish.

IanDavidMoss @ 2022-05-30T17:20 (+20)

I think the issue is more that different users have very disparate norms about how often to vote, when to use a strong vote, and what to use it on. My sense (from a combination of noticing voting patterns and reading specific users' comments about how they vote) is that most are pretty low-key about voting, but a few high-karma users are much more intense about it and don't hesitate to throw their weight around. These users can then have a wildly disproportionate effect on discourse because if their vote is worth, say, 7 points, their opinion on one piece of content vs. another can be and often is worth a full 14 points.

In addition to scaling down the weight of strong votes as MichaelStJules suggested, another corrective we could think about is giving all users a limited allocation of strong upvotes/downvotes they can use, say, each month. That way high-karma users can still act in a kind of decentralized moderator role on the level of individual posts and comments, but it's more difficult for one person to exert too much influence over the whole site.
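A minimal sketch of a monthly strong-vote allowance, assuming a fixed per-user budget keyed by calendar month. The class, its methods, and the default of 10 are hypothetical illustrations, not an existing Forum feature.

```python
from collections import defaultdict


class StrongVoteBudget:
    """Hypothetical per-user monthly allowance of strong votes."""

    def __init__(self, per_month=10):
        self.per_month = per_month
        self.used = defaultdict(int)  # (user, 'YYYY-MM') -> strong votes cast

    def try_strong_vote(self, user, month):
        """Record a strong vote if allowance remains; return whether it counted.

        When this returns False, a caller could fall back to a regular vote.
        """
        if self.used[(user, month)] >= self.per_month:
            return False
        self.used[(user, month)] += 1
        return True

    def remaining(self, user, month):
        """Strong votes the user can still cast this month."""
        return self.per_month - self.used[(user, month)]


budget = StrongVoteBudget(per_month=2)
```

Because the cap is per user rather than per item, a high-karma user can still act as a decentralized moderator on the handful of posts they care most about, but cannot apply a large vote to everything they read.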

Linch @ 2022-05-30T19:02 (+4)

This feels reasonable to me. Personally, I very rarely strong-upvote (except the default strong-upvote for my own posts), and almost never strong-downvote unless it's clear spam. If there were a clearer "use it or lose it" policy, I think I'd be more inclined to ration out more strong-upvotes and strong-downvotes for my favorite/least favorite (in terms of usefulness) posts or comments each week.

Charles He @ 2022-05-31T20:37 (+3)

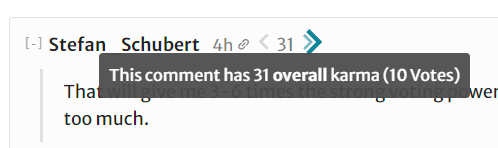

a few high-karma users are much more intense about it and don't hesitate to throw their weight around. These users can then have a wildly disproportionate effect on discourse because if their vote is worth, say, 7 points, their opinion on one piece of content vs. another can be and often is worth a full 14 points.

It would be interesting to get people's guesses/hypotheses about this specific behavior, then see how often it actually occurs.

Personally, my guess is that EA Forum accounts with large karma don't often do this behavior "negatively" (they rarely strong-downvote and negatively comment). When this does happen, I expect it to be positive and reasonable.

In case anyone is interested, my activity referred to above, begun almost half a year ago, provides data to identify instances of the behavior mentioned by IanDavidMoss, and other potentially interesting temporal patterns of voting and commenting. There are several other interesting things that can be examined too.

I would be willing to share this data, as well as provide various kinds of technical assistance to people working on any principled technical project[1] related to the forum or relevant data.

- ^

I do not currently expect to personally work on an EA associated project related to the forum or accept EA funding to do so.

rodeo_flagellum @ 2022-05-29T16:25 (+8)

This post has a fair number of downvotes but is also generating, in my mind, a valuable discussion on karma, which heavily guides how content on EAF is disseminated.

I think it would be good if more people who've downvoted shared their contentions (though it may well be that those who've already commented have raised them).

Guy Raveh @ 2022-05-29T21:25 (+1)

Can you know how many downvotes it has, beyond the boolean "does it have more votes than karma"?

Charles He @ 2022-05-29T21:34 (+4)

The post, as of 2:33 pm PST has:

- 8 big upvotes

- 7 small upvotes

- 1 "cancel" (was a vote but cancelled)

- 1 small downvote

- 1 big downvote

The above might not be perfect, I am not going to go through the codebase to figure this out.

Charles He @ 2022-05-29T19:11 (+2)

Can the OP give instances of groupthink?

The people with the highest karma naturally tend to be the most active users, who’re likely already the most committed EAs. This means we already have a natural source of groupthink (assuming the more committed you are to a social group the more likely you are to have bought into any given belief it tends to hold). So groupthinky posts would already tend to get more attention, and having these active users have greater voting power multiplies this effect.

A major argument of this post is "groupthink".

Unfortunately, most uses of "groupthink" in arguments are disappointing.

Often authors mention the issue, but don't offer any specific instances of groupthink, or how their solution solves it, even though it seems easy to do—they wrote up a whole idea motivated by it.

The simplest explanation for the above is that "groupthink" is a safe rhetorical thing to sprinkle onto arguments. Then, well, it becomes sort of a red flag for arguments without substance.

I guess I can immediately think of 3-5 instances or outcomes of groupthink off the top of my head[1], and like, if I spent more time, maybe 15 total different actual realizations of groupthink or issues.

Most of these issues probably stem from a streetlight effect, have very low tractability/EV, are politically thorny, are driven by founder/lock-in effects, or depend on another issue.

Many of these issues are not blocked by a lack of virtue or of ability to think about them, and it's unclear how voting would affect them.

I think there are several voting reforms and ways of changing the forum. In addition to the ones vaguely mentioned in this comment (admittedly the comment sort of feels like vaporware since the person won't be able to get back to it), a modification or editing of voting power or karma could be useful.

I'm mentioning this because it would be good to identify specific issues or instances of groupthink that could be solved or addressed (or perhaps made worse) by any of these reforms.

- ^

One groupthink issue is the baked-in tendency toward low-quality or pseudo-criticism.

This both crowds out and degrades real criticism (bigotry of low expectations). It is rewarded and self-perpetuates without any impact, and so seems like the definition of groupthink.

Arepo @ 2022-05-30T09:32 (+7)

Often authors mention the issue, but don't offer any specific instances of groupthink, or how their solution solves it, even though it seems easy to do—they wrote up a whole idea motivated by it.

You've seriously loaded the terms of engagement here. Any given belief shared widely among EAs and not among intelligent people in general is a candidate for potential groupthink, but qua them being shared EA beliefs, if I just listed a few of them I would expect you and most other forum users to consider them not groupthink - because things we believe are true don't qualify.

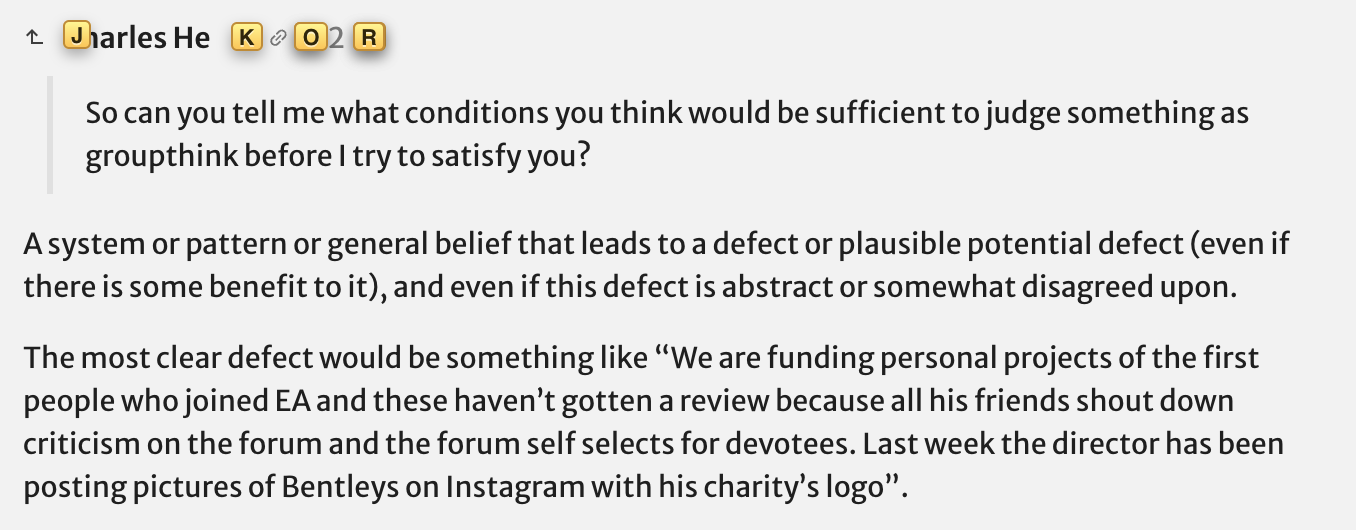

So can you tell me what conditions you think would be sufficient to judge something as groupthink before I try to satisfy you?

Also do we agree that if groupthink turns out to be a phenomenon among EAs then the karma system would tend to accentuate it? Because if that's true then unless you think the probability of EA groupthink is 0, this is going to be an expected downside of the karma system - so the argument should be whether the upsides outweigh the downsides, not whether the downsides exist.

Charles He @ 2022-05-30T12:36 (+2)

So can you tell me what conditions you think would be sufficient to judge something as groupthink before I try to satisfy you?

A system or pattern or general belief that leads to a defect or plausible potential defect (even if there is some benefit to it), and even if this defect is abstract or somewhat disagreed upon.

The most clear defect would be something like “We are funding personal projects of the first people who joined EA and these haven’t gotten a review because all his friends shout down criticism on the forum and the forum self selects for devotees. Last week the director has been posting pictures of Bentleys on Instagram with his charity’s logo”.

The most marginal defects would be "meta" ones whose consequences are abstract. A pretty tenuous but still acceptable one (I think?) is “we are only getting very intelligent people with high conscientiousness, and this isn’t adequate”.

Because if that's true then unless you think the probability of EA groupthink is 0, this is going to be an expected downside of the karma system - so the argument should be whether the upsides outweigh the downsides, not whether the downsides exist.

Right, you say this…but seem a little shy to list the downsides.

Also, it seems like you are close to implicating literally any belief?

As we both know, the probability of groupthink isn’t zero. I mentioned I can think of up to 15 instances, and gave one example.

I would expect you and most other forum users to consider them not groupthink - because things we believe are true don't qualify.

My current read is that this seems a little ideological to me and relies on sharp views of the world.

I’m worried what you will end up saying is not only that EAs must examine themselves with useful and sharp criticism that covers a wide range of issues, but all mechanical ways where prior beliefs are maintained must be removed, even without any specific or likely issue?

One pragmatic and key issue is that you might have highly divergent and low valuations of the benefits of these systems. For example, there is a general sentiment worrying about a kind of EA “Eternal September”, and your vision of karma reform is exactly the opposite of most solutions to this (and, well, has no real chance of taking place).

Another issue is systemic effects. Karma and voting are unlikely to be the root issue of any defects in EA (and IMO not even close). However, we might think they affect “systems of discussion” in pernicious ways, as you mention. Yet, since karma is not central or the root reason, deleting the current karma system without a clear reason or tight theory might lead to a reaction that is the opposite of what you intend (I think this is likely), so it’s blind and counterproductive.

The deepest and biggest issue of all is that many ideological views that involve disruption are hollow and themselves just expressions of futile dogma, e.g. the Cultural Revolution with its red books, or a tech startup with a narrative of disrupting the world that is simply deploying historically large amounts of investor money.

Wrote this on my phone, there might be bad spelling or grammar issues but if the comment has the dumbs it’s on me.

Arepo @ 2022-05-30T18:48 (+11)

Fwiw I didn't downvote this comment, though I would guess the downvotes were based on the somewhat personal remarks/rhetoric. I'm also finding it hard to parse some of what you say.

A system or pattern or general belief that leads to a defect or plausible potential defect (even if there is some benefit to it), and even if this defect is abstract or somewhat disagreed upon.

This still leaves a lot of room for subjective interpretation, but in the interests of good faith, I'll give what I believe is a fairly clear example from my own recent investigations: it seems that somewhere between 20-80% of the EA community believe that the orthogonality thesis shows that AI is extremely likely to wipe us all out. This is based on a drastic misreading of an often-cited 10-year old paper, which is available publicly for any EA to check.

Another odd belief, albeit one which seems more muddled than mistaken, is the role of neglectedness in 'ITN' reasoning. What we ultimately care about is the amount of good done per resource unit, i.e., roughly, <importance>*<tractability>. Neglectedness is just a heuristic for estimating tractability absent more precise methods. Perhaps it's a heuristic with interesting mathematical properties, but it's not a separate factor, as it's often presented. For example, in 80k's new climate change profile, they cite 'not neglected' as one of the two main arguments against working on it. I find this quite disappointing: all it gives us is a weak a priori probabilistic inference which is totally insensitive to the type of things the money has been spent on and to the scale of the problem, and which seems to tell us much less than we could learn about tractability by looking directly at the best opportunities to contribute to the field, as Founders Pledge did.

Also, it seems like you are close to implicating literally any belief?

I don't know why you conclude this. I specified 'belief shared widely among EAs and not among intelligent people in general'. That is a very small subset of beliefs, albeit a fairly large subset of EA ones. And I do think we should be very cautious about a karma system that biases towards promoting those views.

Charles He @ 2022-05-30T19:33 (+2)

I don't know why you conclude this. I specified 'belief shared widely among EAs and not among intelligent people in general'. That is a very small subset of beliefs, albeit a fairly large subset of EA ones. And I do think we should be very cautious about a karma system that biases towards promoting those views.

You are right. My mindset while writing this comment was bad. I remember thinking the reply seemed vague and general, and I reacted harshly. This was unnecessary and wrong.

This still leaves a lot of room for subjective interpretation, but in the interests of good faith, I'll give what I believe is a fairly clear example from my own recent investigations: it seems that somewhere between 20-80% of the EA community believe that the orthogonality thesis shows that AI is extremely likely to wipe us all out. This is based on a drastic misreading of an often-cited 10-year old paper, which is available publicly for any EA to check.

I do not know the details of the orthogonality thesis and can't speak to this very specific claim (but this is not at all refuting you, I am just literally clueless and can't comment on something I don't understand).

To be both truthful and agreeable, it's clear that the beliefs in AI safety come from EAs following the opinions of a group of experts. This just comes from people's outright statements.

In reality, those experts are not the majority of AI people, and it's unclear exactly how EA would update or change its mind.

Furthermore, I see things like the passage below, which, without further context, could be wild violations of "epistemic norms", or just of common sense.

For background, I believe this person is interviewing or speaking to researchers in AI, some of whom are world experts. Below is how they seem to represent their processes and mindset when communicating with these experts.

One of my models about community-building in general is that there’s many types of people, some who will be markedly more sympathetic to AI safety arguments than others, and saying the same things that would convince an EA to someone whose values don’t align will not be fruitful. A second model is that older people who are established in their careers will have more formalized world models and will be more resistant to change. This means that changing one’s mind requires much more of a dialogue and integration of ideas into a world model than with younger people. The thing I want to say overall: I think changing minds takes more careful, individual-focused or individual-type-focused effort than would be expected initially.

I think one’s attitude as an interviewer matters a lot for outcomes. Like in therapy, which is also about changing beliefs and behaviors, I think the relationship between the two people substantially influences openness to discussion, separate from the persuasiveness of the arguments. I also suspect interviewers might have to be decently “in-group” to have these conversations with interviewees. However, I expect that that in-group-ness could take many forms: college students working under a professor in their school (I hear this works for the AltProtein space), graduate students (faculty frequently do report their research being guided by their graduate students) or colleagues. In any case, I think the following probably helped my case as an interviewer: I typically come across as noticeably friendly (also AFAB), decently-versed in AI and safety arguments, and with status markers. (Though this was not a university-associated project, I’m a postdoc at Stanford who did some AI work at UC Berkeley).

The person who wrote the above is concerned about image, PR and things like initial conditions, and this is entirely justified, reasonable and prudent for any EA intervention or belief. Also, the person who wrote the above is conscientious, intellectually modest, and highly thoughtful, altruistic and principled.

However, at least from their writing above, their entire attitude seems to be based on conversion. Yet their conversations are not with students or with laypeople such as important public figures, but with the actual experts in AI.

So if you're speaking with the experts in AI while treating them as pre-converts and focusing on working around their beliefs, one reading is that you are cutting off criticism and outside thought. On this ungenerous view, the fact that you have to be so careful is a further red flag, and an issue in itself.

For context, in any intervention, getting the opinion of, and updating from, experts is sort of the whole game (maybe once you're at "GiveWell levels" and working with dozens of experts it's different, but even then I'm not sure: EA has updated heavily on cultured meat from almost a single expert).

Pablo @ 2022-05-30T19:06 (+4)

My apologies: I now see that earlier today I accidentally strongly downvoted your comment. What happened was that I followed the wrong Vimium link: I wanted to select the permalink ("k") but selected the downvote arrow instead ("o"), and then my attempt to undo this mistake resulted in an unintended strong downvote. Not sure why I didn't notice this originally.

Charles He @ 2022-05-30T19:07 (+1)

Ha.

Honestly, I was being an ass in the comment and I was updating based on your downvote.

Now I'm not sure anymore!

Bayes is hard.

(Note that I cancelled the upvote on this comment so it doesn't show up in the "newsfeed")