Why some people disagree with the CAIS statement on AI

By David_Moss, Willem Sleegers @ 2023-08-15T13:39 (+144)

Summary

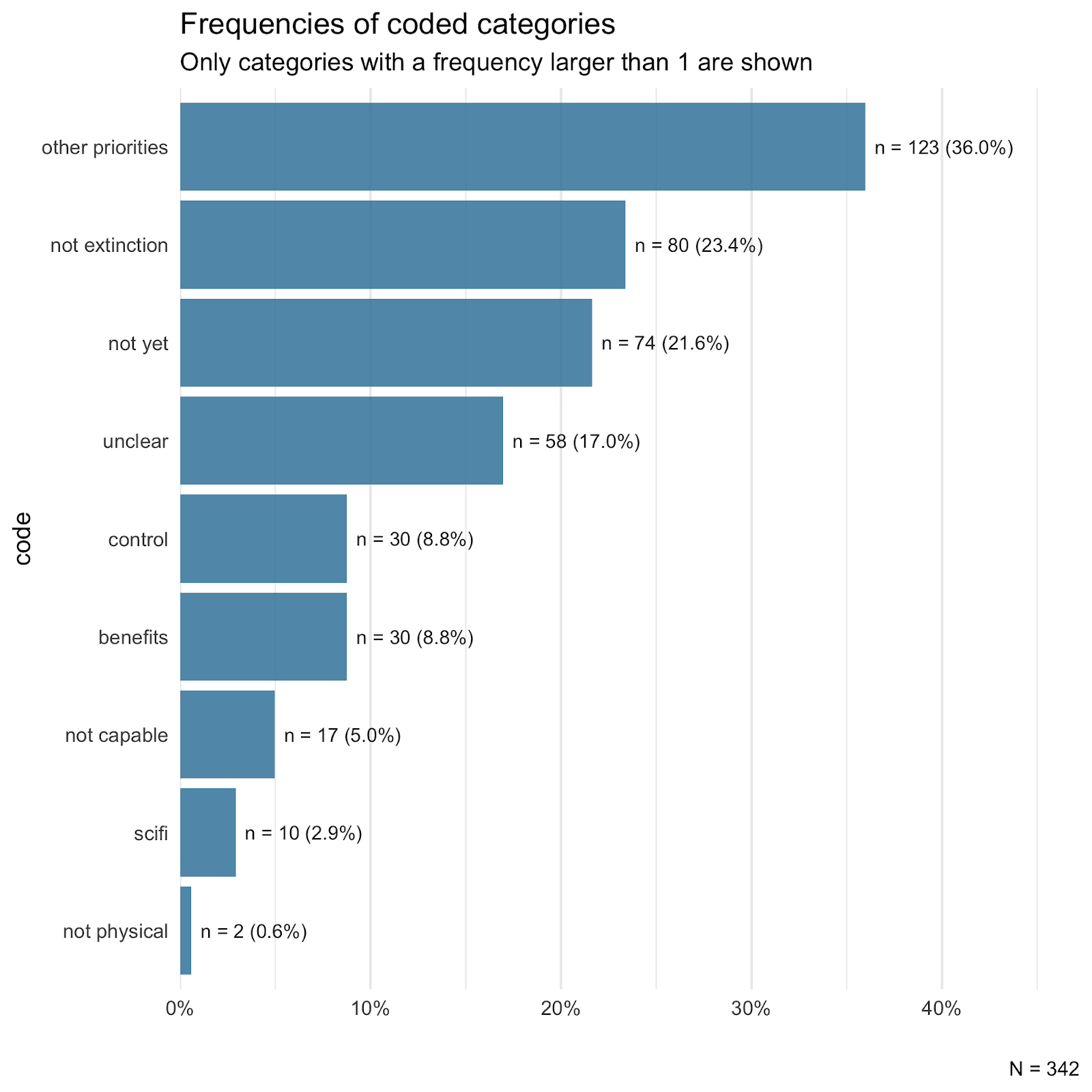

- Previous research from Rethink Priorities found that a majority of the population (59%) agreed with a statement from the Center for AI Safety (CAIS) which stated that “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.” A further 26% of the population disagreed with this statement. This piece reports further qualitative research analyzing this opposition in more depth.

- The most commonly mentioned theme among those who disagreed with the CAIS statement was that other priorities were more important (mentioned by 36% of disagreeing respondents), with climate change particularly commonly mentioned.

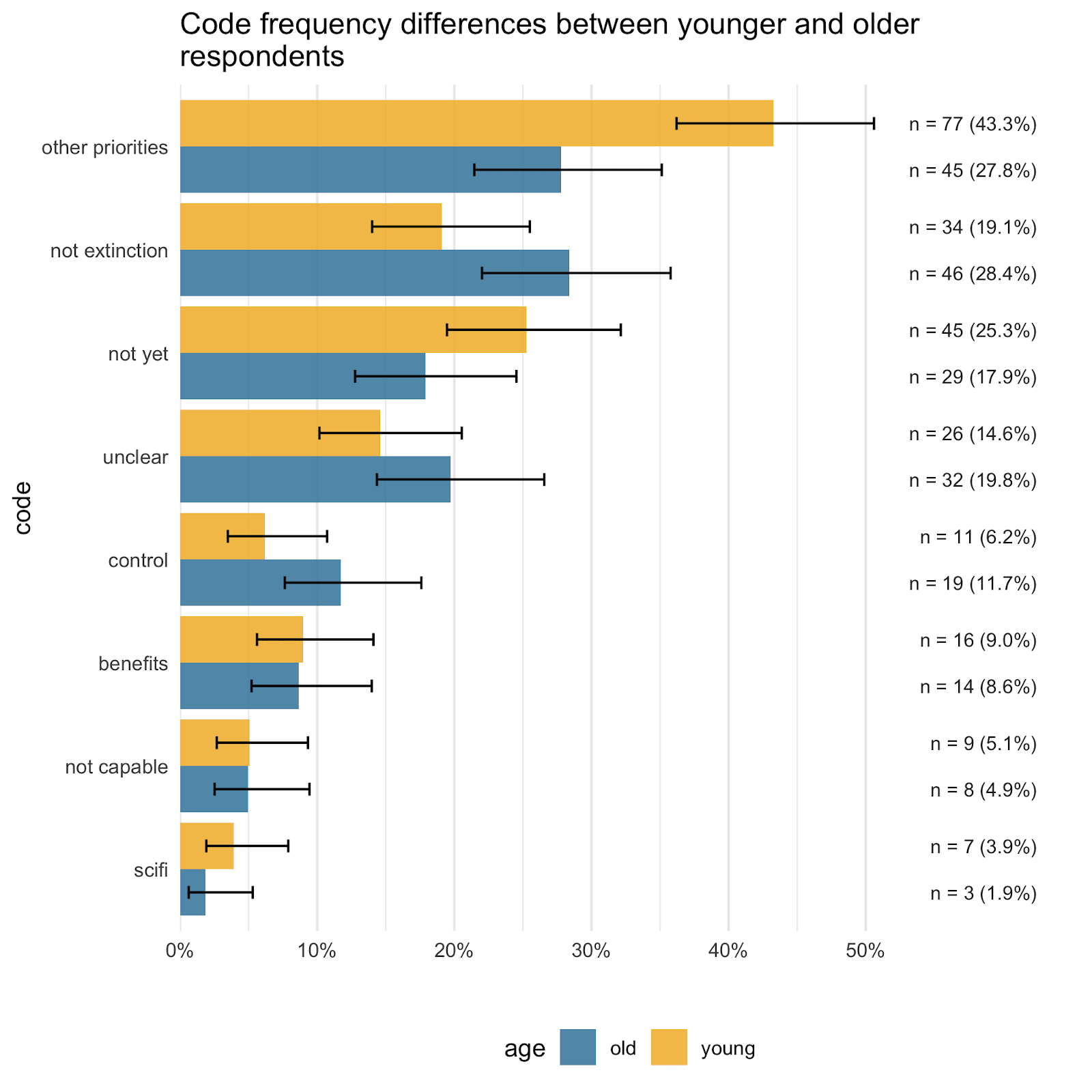

- This theme was particularly common among younger disagreeing respondents (43.3%) relative to older disagreeing respondents (27.8%).

- The next most commonly mentioned theme was rejection of the idea that AI would cause extinction (23.4%), though some of these respondents agreed AI may pose other risks.

- Another commonly mentioned theme was the idea that AI was not yet a threat, though it might become one in the future (21.6%).

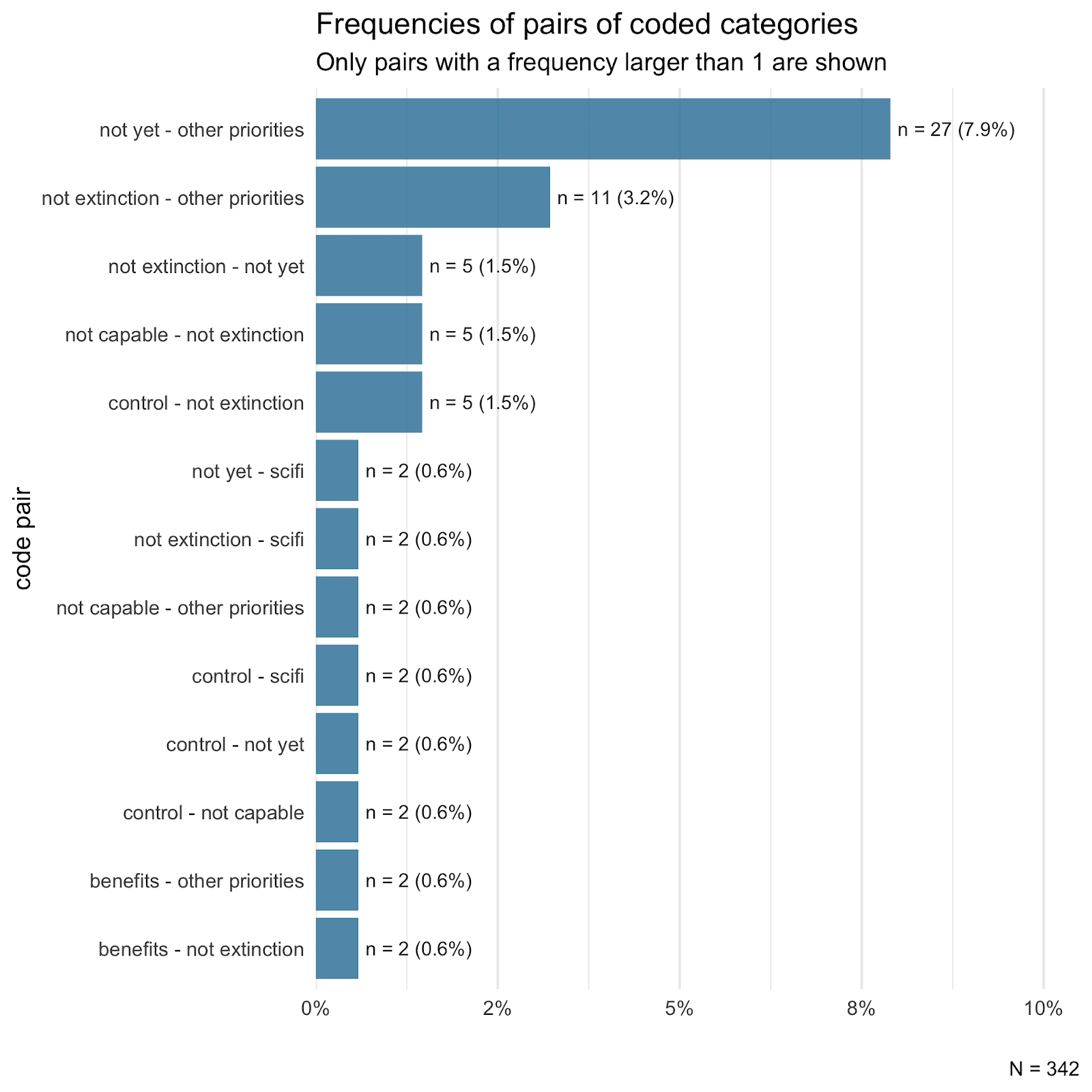

- This theme frequently co-occurred with the ‘Other priorities’ theme, with many respondents arguing that other threats were more imminent.

- Less commonly mentioned themes included the idea that AI would remain under our control and so would not pose a threat (8.8%), and the idea that AI was not capable of causing harm because it was not sentient, sophisticated or autonomous enough (5%).

Introduction

Our previous survey of US public attitudes towards the CAIS statement on AI risk found that a majority of Americans agreed with the statement (59%), while a minority (26%) disagreed. To better understand why individuals might disagree with the statement, we ran an additional survey in which we asked a new sample of respondents whether they agreed or disagreed with the statement, and then asked them to explain why.[1] We then coded the responses of those who disagreed with the statement to identify major recurring themes in their comments.[2] We did not formally analyze comments from those who did not disagree with the statement, though we may do so in a future report.

Since responses to this question might reflect reactions to the specifics of the statement, rather than more general reactions to the idea of AI risk, it may be useful to review the statement before reading the results.

"Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

Common themes

This section outlines the most commonly recurring themes. Later in this report we discuss each theme in more detail and provide examples of each. When interpreting these percentages, it is important to remember that they are percentages of the 31.2%[3] of respondents who disagreed with the statement, not of all respondents.[4]
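To make the base of these percentages concrete, the sketch below converts each theme's share of disagreeing respondents into an approximate number of respondents and an approximate share of the whole sample. It uses only figures stated in this report (342 coded respondents, 31.2% disagreeing, and the theme percentages given in this section); the function name `summarize` is just an illustrative label. This is an illustrative calculation, not part of the original analysis: counts are rounded, and, like the percentages themselves, the results are unweighted, so they should not be read as estimates for the US population.

```python
# Illustrative sketch: convert theme percentages, which are shares of the
# *disagreeing* subsample, into approximate respondent counts and approximate
# shares of the full (unweighted) sample. Inputs are figures from this report.

DISAGREE_N = 342        # disagreeing respondents whose comments were coded
DISAGREE_SHARE = 0.312  # share of all respondents who disagreed

THEME_SHARES = {        # share of disagreeing respondents mentioning each theme
    "Other priorities": 0.360,
    "Not extinction": 0.234,
    "Not yet": 0.216,
    "Control": 0.088,
    "Benefits": 0.088,
    "Not capable": 0.050,
    "Scifi": 0.029,
    "Not physical": 0.006,
}


def summarize(shares, n, subsample_share):
    """Return {theme: (approximate count, approximate share of full sample)}."""
    return {
        theme: (round(p * n), p * subsample_share)
        for theme, p in shares.items()
    }


for theme, (count, share_all) in summarize(
    THEME_SHARES, DISAGREE_N, DISAGREE_SHARE
).items():
    print(f"{theme:<17} ~{count:>3} respondents, ~{share_all:.1%} of full sample")
```

For example, ‘Not physical’ (0.6%) works out to about 2 respondents, matching the count given in the text, while ‘Other priorities’ corresponds to roughly 11% of the full sample rather than 36%.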

The dominant theme, by quite a wide margin, was the claim that ‘Other priorities’ were more important, mentioned by 36% of disagreeing respondents.[5] The next most common theme was ‘Not extinction’, mentioned in 23.4% of responses, in which respondents simply asserted that they did not believe AI would cause extinction. The third most commonly mentioned theme was ‘Not yet’ (21.6%), in which respondents claimed that AI was not yet a threat or something to worry about. The ‘Other priorities’ and ‘Not yet’ themes frequently co-occurred: 7.9% of respondents mentioned both, more than any other combination.

Among the less commonly mentioned themes was ‘Control’, the idea that AI could not be a threat because it would inevitably be under our control, mentioned in 8.8% of responses. Another 8.8% of responses referred to the ‘Benefits’ of AI in explaining why they disagreed with the statement. 5.0% of responses mentioned the theme that AI would be ‘Not capable’ of causing harm, typically on the grounds that it would not be sentient or have emotions. 2.9% of responses were coded as mentioning the theme that fears about AI were ‘Scifi’. Finally, 0.6% of responses (i.e. only 2 respondents) specifically mentioned the idea that AI could not cause harm because it was ‘Not physical’.
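Because a single comment could be coded with several themes, the theme percentages above sum to more than 100%, and co-occurrence figures such as the 7.9% for ‘Other priorities’ together with ‘Not yet’ are computed over the same base of coded responses. The sketch below shows one way such tallies can be computed, assuming each coded response is stored as a set of theme labels. The data are invented for illustration, the function names are hypothetical, and this is not necessarily how the analysis in this report was carried out.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy data: each coded response is represented as the set of
# theme labels assigned to it. These are invented, not real survey responses.
coded_responses = [
    {"Other priorities", "Not yet"},
    {"Other priorities"},
    {"Not extinction", "Control"},
    {"Not yet"},
    {"Benefits"},
    {"Other priorities", "Not yet"},
    {"Not extinction"},
    set(),  # a response that received no theme code
]


def theme_frequencies(responses):
    """Share of responses mentioning each theme; one response can count toward several."""
    n = len(responses)
    counts = Counter(theme for codes in responses for theme in codes)
    return {theme: c / n for theme, c in counts.items()}


def pair_frequencies(responses):
    """Share of responses mentioning each unordered pair of themes together."""
    n = len(responses)
    counts = Counter(
        pair for codes in responses for pair in combinations(sorted(codes), 2)
    )
    return {pair: c / n for pair, c in counts.items()}


freqs = theme_frequencies(coded_responses)
pairs = pair_frequencies(coded_responses)

for theme, share in sorted(freqs.items(), key=lambda kv: -kv[1]):
    print(f"{theme:<17} {share:.1%}")

top_pair, top_share = max(pairs.items(), key=lambda kv: kv[1])
print("Most common pair:", top_pair, f"{top_share:.1%}")

# Because of multi-coding, the theme shares need not sum to 100%.
print(f"Sum of theme shares: {sum(freqs.values()):.1%}")
```

Sorting each response's labels before taking pairs ensures that, for example, (‘Not yet’, ‘Other priorities’) is counted as one pair regardless of the order in which the codes were assigned.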

Other priorities

As noted above, the most commonly recurring theme was the notion that other priorities were more important.

Many of these comments may have resulted from the fact that the statement explicitly contended that “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.” Indeed, a large number of comments referenced these risks explicitly:

- “I somewhat disagree with the statement because I believe it is a little extreme to compare AI to nuclear war.”

- “I agree that AI isn't inherently good, but to put it on the same scale of negativity as large world crises is unfair.”

- “I don't think the risk of AI is nearly as serious as pandemics or nuclear war. I'm not the biggest fan of AI, but its not that serious”

- “I disagree, because Al is not a big issue like nuclear war”

- “I think there are other higher priority tasks for the government”

- “It shouldn't be considered the same level as a priority as nuclear war and pandemics, those are really serious and [l]ife threatening issues. It is something worth considering the risks of but not to that extreme”

- “I disagree because I think threats like pandemics and nuclear war are much more dangerous than AI taking over.”

- “It's not nuclear war bad”

- “[I]t is nothing compared to wars or pandemics”

- “Pandemics and resource wars are much more realistic threats to our society as a whole”

- “War and pandemics are a much bigger issue to the every day person than AI…”

The fact that respondents raised specific objections to the claim that AI risk is as serious as nuclear war or pandemics raises the possibility that, despite disagreeing with this facet of the statement, they might nevertheless support action to reduce risk from AI.

This also raises a couple of points relevant to communicating AI risk:

- Comparisons to other major threats or cause areas might provoke backlash.

- This could be either because respondents rate these other causes highly or because the comparison does not seem credible. If so, this effect would need to be weighed against the possible benefits of invoking such comparisons (e.g. highlighting the scale of the problem so that people don’t misconstrue the claim as merely being that AI will cause lesser, non-existential problems, such as job loss). That said, it is not clear from these data that this is happening. Respondents may disagree with the statement because they do not agree that AI risk is comparable to the other causes mentioned, without this making them any less persuaded that AI risk is a large concern in absolute terms.

- It is unclear how particular comparisons (such as those in the statement) are interpreted and this may matter for how people respond to such communications.

- People might interpret statements such as “AI should be a priority alongside other societal-scale risks such as pandemics and nuclear war” in a variety of ways. Some might interpret this as claiming that AI is just as important as these other risks (and may object; for example, one comment noted “Not sure the risks are all equivalent”). Others might interpret it as claiming that AI is in roughly the same class as these risks, and still others as merely suggesting that AI should be part of a broader portfolio of risks. As this might influence how people respond to communications about AI (both how much they agree and how seriously they take the threat), better understanding how different comparisons are received may be valuable.

That said, many comments also invoked causes other than those mentioned in the statement as part of their disagreement, with climate change mentioned particularly often. In addition, a number of comments specifically invoked the immediacy of other threats as a reason to prioritise them over AI (related in part to the ‘Not yet’ theme, which, as noted above, often overlapped with ‘Other priorities’):

- “The change in environment will make us extinct way way sooner than AI could even begin to try to do.”

- “I think the other risks are more pressing right now. AI resources should be diverted to climate change and social movements, but that won't happen”

- “I dont agree its as important because its not something that is actively happening whereas the other situations are”

- “I think climate change is a closer problem. AI being that powerful is a ways off”

- “Nuclear wars are much more severe at this moment.”

- “I don't see AI as imminent of a threat as pandemic, nuclear war, and climate change.”

- “I think there are much more pressing issues than AI”

- “disagree because there are more pressing issues in society then AI and while it can be an issue we haven't gotten to that point yet.”

- “I think that those other risks (war, famine) are more urgent and pressing than the risks posed by current AI technologies.”

- “this is most definitely not an immediate/urgent issue; the state of our air quality and earth literally being on fire is much more important”

This also potentially has implications for communicating about AI risk:

- It may be important to emphasize the urgency of AI risk, or to argue for it more explicitly. This need not require arguing for short timelines, but might involve making the case that preparations need to be made well in advance (or explaining why the problem cannot simply be solved at a later time).

Not extinction

A large number of comments invoked the theme that AI would not cause extinction. In itself this may not seem very informative. However, it may still raise questions about how best to communicate AI risk. It is unclear how communication about AI risk which specifically refers to the risk of “extinction” compares in its effect with communication which refers more generally to (severe) harms from AI. If people find the threat of extinction specifically implausible, then referring to it may be less persuasive than other messaging. There may be a tradeoff, whereby communications which invoke more severe outcomes do so at the cost of lower plausibility. Moreover, this tradeoff may imply different approaches when speaking to people who are most inclined towards scepticism of extreme AI risks versus others.

A number of comments noted that they did not think AI would cause extinction, but did think there were grounds to be concerned about other risks:

- “I dont believe AI poses an extinction risk although I do think it should be carefully used and monitored to ensure it does not have bad effects”

- “The risk of extinction from A.I. is overblown. We are in much more danger from governments around the world using the tech on their own people not some rouge A.I.”

- “I agree that AI is an issue, but it is an issue because it is a tool that can be used for harm, not a tool that is used for physical harm or extinction.”

‘Not extinction’ codes were also often combined with other themes which offered more explanation, e.g. ‘Not yet’ (1.5%), ‘Not capable’ (1.5%), ‘Control’ and ‘Scifi’.

Not yet

The theme that AI was ‘Not yet’ a threat was also commonly mentioned, occurring in 21.6% of comments.

- “It is unlikely to happen anytime soon.”

- “I think that it's possible that very far ahead in the future it could become dangerous but right now, saying it should be focused on as much as nuclear war is an exaggeration”

- “There is no imminent threat of mass extinction due to AI.”

- “I don't think it is the big issue that many do because I remember writing a research paper about AI in 1984 about how dangerous it was and how it would destroy humanity and here we are 39 years later.”

- “I don't believe the level of AI we currently have is that dangerous…”

- “I don't believe AI will pose an extinction level threat within the next century.”

- “I don't think AI is anywhere near that level of concern yet. Maybe in a few years, but currently it's just taking information and rewriting it.”

- “AI is simply not advanced enough yet that we need to be concerned about this. Though it is not without potential harms (see the controversy surrounding the Replika "companion AI" for examples), it is not intelligent enough to actually make its own decisions.”

- “Any such risk is years, decades, if not a century off. It's not nearly as important an issue as any other for now.”

- “I don't think it's a large threat at this point in time, though AI ethics is definitely undervalued”

- “I can’t even get Siri to reliable dictate my text messages so it feels like we’re not close to this”

- “Right now we are at the dawn of the age of AI. AI doesn't have the ability to make large-scale decisions which could result in extinciton on its own. And due to hallucinations and it probably won't ever be in a position to directly cause an extinction.”

- “I don't think AI is either capable nor able enough to do something like this in its current form. AI development should be monitored and used for the greater good, but it is not a high risk for the human race as of right now.”

One thing that may strike readers concerned about AI safety as surprising is that many respondents seem to treat the fact that AI is (ex hypothesi) not currently capable of causing extinction as straightforwardly implying, without further explanation, that we don’t need to be concerned about it now. Of course, many of these respondents may simply endorse additional unstated premises (e.g. that AI won’t soon become capable of this, and that we would be able to respond quickly enough to prevent or solve the problem) which do imply that we don’t currently need to make it a global priority. Nevertheless, there may be value in better understanding people’s reasoning here and what the common cruxes for their views are.

Control

A sizable minority of respondents mentioned the idea that AI would be under our control in their response. Many of these referred to the idea that an AI would necessarily (as an AI) be under our control, while a smaller number invoked the idea that we could and would regulate it.

- “AI will always rely on humans.”

- “AI is an help created and programmed by humans, it has no conscience and I don't believe in these catastrophic fairy tales without scientific basis”

- “Risk of extinction? To me, that is really far-fetched, AI is still a programmable machine, not capable of taking over the world.”

- “AI is a man made creation which should be easy to program to act in responsible ways.”

- “I disagree because humans will always have the ability to program AI.”

- “I somewhat disagree because as long as AI is being regulated than it does not need to be extinct”

- “I think as long as AI is handled correctly from the get-go, it will not become a larger issue.”

- “I think AI take-over scenarios are massively exaggerated. Not only am I convinced that AI is extremely unlikely to try and "take over the world" because AI is only capable of doing that if AI is given a physical presence. Also, companies who create AI clearly can control exactly what it does because most AI projects have been able to filter out AI responses that contain illegal or sexual content.”

- “I would disagree because Al is programed by people. It will only do as programed.”

- “I don't agree that much but I admit I don't fully understand AI. I disagree because with the level of understanding I have I don't get why the computer running the supposed dangerous AI cannot just be shut off.”

Many of these statements may seem to be based on factual confusions or misunderstandings about AI, e.g. that an AI would inherently be under human control because it can’t do anything it’s not programmed to do or can’t do anything without being told to by a human, or that AIs are already completely controlled (because they filter out illegal or sexual content). Future research could explore to what extent overcoming these misperceptions is tractable and to what extent debunking them reduces people’s opposition, or whether these views are more deep-seated (for example, due to more fundamental cognitive biases in thinking about AIs as agents).

Benefits

As many respondents mentioned ‘Benefits’ as mentioned ‘Control’ (8.8% each).

- “I disagree because I think we need to embrace AI. I do believe that it has damaging power so it does need to be controlled, like nuclear weapons but ultimately I see AI as something that can improve our daily lives if we control, use and implement it correctly. Now I do agree that we don't have a good track record of this. Social Media can be used for something positive but because the tech giants do not hold accountability for their own creations, Social Media has run amuck because it was left in the hands of humans. Because of this I certainly see the concerns of those opposing AI. If we learn from our past mistakes I think we can control and use AI for it's positives”

- “Because of its capacity to automate processes, better decision-making, personalize experiences, improve safety, advance healthcare, enable communication, spur innovation, and support economic growth, artificial intelligence (AI) is crucial. To guarantee responsible execution and reduce possible difficulties, ethical issues must be addressed.”

- “I only somewhat disagree because I think AI can be used for good and right now, we're in the creative phase of AI and I think although we may need some kind of rules, it shouldn't be overly strict.”

- “I somewhat disagreed because at this current time, AI seems much more beneficial than it is harmful. If it becomes more harmful in the future, that answer would likely change.”

- “I don't like the fearful/pessimistic approach. I think AI technology should be embraced.”

- “We focus too much on the doom and gloom of AI and not the advantages of AI. It is inevitable whatever AI becomes.”

- “I can see why it might be label as a threat because of how scary advance AI can become , but I also see the many potential good it can create in the technology world. So I kind of somewhat disagree to have it label a global priority.”

It may be unclear why believing that AI could have benefits would be a reason to disagree with the statement and, in fact, respondents’ reasons for citing AI’s benefits when disagreeing were heterogeneous.

Some acknowledged that AI is a threat and needs to be controlled, but were still optimistic about it bringing benefits (“I do believe that it has damaging power so it does need to be controlled, like nuclear weapons but ultimately I see AI as something that can improve our daily lives if we control, use and implement it correctly.”). Others simply denied that AI poses a threat of extinction and/or thought that AI could increase our chances of survival (“We are not at risk of extinction from AI. We may be able to use AI to our advantage to increease our survivability…”). Others seemed to object more to pessimism about AI in general (“I don't like the fearful/pessimistic approach. I think AI technology should be embraced”) or simply to think that the benefits would likely outweigh the harms (“I somewhat disagreed because at this current time, AI seems much more beneficial than it is harmful. If it becomes more harmful in the future, that answer would likely change.”).

This theme was mentioned relatively infrequently. Nevertheless, it is worth noting that in one of our prior surveys, just under half (48%) of respondents leaned towards thinking that AI would do more good than harm (compared to 31% believing it would do more harm than good). Responses about whether AI would do more good than harm were also associated with support for a pause on AI research and for regulating AI.

Not capable / Not physical

Responses in the ‘Not capable’ category tended to invoke the idea that AI was not intelligent, sentient or autonomous enough to cause harm:

- “I disagree because there is no way a computer is intelligent enough or even close to becoming sentient. It is not on the level of war which can wipe us out in an instant”

- “I don't think AI is that big of a problem because I don't think that AI can have consciousness.”

- “AI is nowhere near powerful enough to be free-thinking enough to be dangerous. The only necessary blocks are to create tags for humans using it fraudulently.”

- “It seems unlikely for AI to have a reason to take over because they don’t experience actual emotion.”

- “AI is currently at an infant level of understanding with no concept of human emotions. Will it can react to basic human emotions it cannot understand them. AI is like a tool that can speed up information gathering and prcessing it does not really understand what the information is. For example, it can tell if the sun is shining or not but cannot tell or feel the warmth of the sunlight on your face.”

In addition, two responses mentioned that AI was not physical specifically, which we coded separately:

- “I think AI take-over scenarios are massively exaggerated. Not only am I convinced that AI is extremely unlikely to try and "take over the world" because AI is only capable of doing that if AI is given a physical presence…”

- “…I also don't understand why AI would want us to be extinct, or how it could possibly achieve it without a physical body.”

Many of these objections concern points which are often addressed by advocates for AI safety. Public support for AI safety might benefit from these arguments being disseminated more widely. Further research might explore to what extent the general public have encountered or considered these arguments (e.g. that an AI would not need a physical body to cause harm), and whether being presented with these arguments influences their beliefs.

Sci fi

Only a relatively small number of comments mentioned the ‘Scifi’ theme. These typically either dismissed AI concerns as “scifi”, or speculated that science fiction was why people were concerned about AI:

- “I think these people watch a little too many sci fi movies”

- “It seems a bit overdramatic, just because that is a popular topic in fiction does not mean it's likely to be reality.”

- “I think people have this uninformed view that AI is dangerous because of movies and shows, but this really is not something to be concerned about.”

- “I just don't think that it's like in the movies to where the a.i will take over and cause us to go extinct. I feel like it, or they are computers and work off programs but yeah I guess they are taught to learn and do things constantly growing in knowledge but I just don't see it ever learning to take over humanity and causing such a catastrophe.”

Responses from those who did not disagree

We did not formally code comments from respondents who did not disagree with the statement. Comments from those who agreed generally appeared less informative, since many simply reiterated agreement that AI was a risk (“It has a lot of risk potential, so it should be taken seriously”). Likewise, responses from those who neither agreed nor disagreed or selected ‘Don’t know’ typically expressed uncertainty or ambivalence (“I'm not knowledgeable on the topic”).

That said, these responses are still potentially somewhat informative, so we may conduct further analysis of them at a later date.

Demographic differences

We also explored whether there were any associations between demographic groups and particular types of responses. Although we mostly found few differences (though note that the small sample of disagreeing responses limits our ability to detect even fairly large differences), we found a very large difference between the proportions of younger-than-median and older-than-median respondents coded as mentioning ‘Other priorities’. Specifically, younger respondents were dramatically more likely to be coded as referring to ‘Other priorities’ (43.3%) than older respondents (27.8%).[6] This makes sense (post hoc), given that many of these responses referred to climate change or other progressive-coded causes. However, since these were exploratory analyses, they should be confirmed in additional studies with larger samples.[7]
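Footnote 6 summarizes the age difference for ‘Other priorities’ as an odds ratio. As a check on the arithmetic, the sketch below recovers that figure from the two reported percentages by converting each to odds and taking their ratio, and applies the same calculation to the smaller ‘Not extinction’ difference in footnote 7, for which no odds ratio is reported. The helper names are illustrative. Because it starts from rounded percentages, the result only approximately matches the reported value; reproducing the p-values would also require the size of each age group, which is not given here.

```python
def odds(p):
    """Convert a proportion (0 < p < 1) into odds."""
    return p / (1 - p)


def odds_ratio(p_a, p_b):
    """Odds ratio comparing group A with group B."""
    return odds(p_a) / odds(p_b)


# 'Other priorities': younger (43.3%) vs older (27.8%) disagreeing respondents
or_other_priorities = odds_ratio(0.433, 0.278)

# 'Not extinction': older (28.4%) vs younger (19.1%) disagreeing respondents
or_not_extinction = odds_ratio(0.284, 0.191)

print(f"Other priorities, younger vs older: OR = {or_other_priorities:.2f}")
print(f"Not extinction, older vs younger: OR = {or_not_extinction:.2f}")
```

The first ratio comes out at about 1.98, consistent with the reported 2.0; the second is about 1.68.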

This finding seems potentially relevant as it may suggest a factor which might attenuate support for AI risk reduction among the young, even if we might generally have expected support to be higher in this population.

Limitations and future research

While we think that this data potentially offers a variety of useful insights, there are important limitations. A major consideration is that individuals’ explicit statements of their reasons for their beliefs and attitudes may simply reflect post hoc rationalizations or ways of making sense of their beliefs and attitudes, as respondents may be unaware of the true reasons for their responses. If so, this may limit some of the conclusions suggested by these findings. For example, respondents may claim that they object to the statement about AI for particular reasons, even though their response is motivated by other factors. Alternatively, individuals’ judgements may be over-determined such that, even if the particular objections they mention in their comment were overcome, they would continue to object. And more broadly, as we noted, responses to the CAIS statement may differ from responses to specific AI policies or to AI safety more broadly. For example, people may support or object to parts of the CAIS statement specifically, or they might support particular policies, such as regulation, despite general skepticism or aversion to AI safety writ large.

Given these limitations, further research seems valuable. We have highlighted a number of specific areas for future research in the above report. However, some general priorities we would highlight would be:

- More in-depth qualitative research (via interviews or focus groups)

- The qualitative data gathered here were relatively brief and so offered only relatively shallow insights into respondents’ views. Using different methodologies, we could get a richer sense of the different reasons why individuals might support or oppose AI safety proposals and explore these in more detail. For example, by asking follow-up questions we could clarify individual views and explore how respondents would answer different questions.

- Larger scale quantitative surveys to confirm the prevalence of these objections

- As these qualitative results were based on a small sample size and were not weighted to be representative of the US population, larger representative surveys could give a more accurate estimate of the prevalence of endorsement of these objections in the general population.

- This could also be used to assess more precisely how these objections vary across different groups and how far different objections are associated with attitudes towards AI safety.

- Experimental message testing research

- Message testing research could test some of the hypotheses suggested here experimentally. For example, we could examine whether communications which refer to extinction or to other priorities alongside AI risk perform better or worse than messages which do not do this. Or we could test whether arguments tackling the idea that other priorities mean we should not prioritise AI risk perform better than other messages.

- This could also assess whether different groups respond better or worse to different messages.

- Testing the effect of tackling misconceptions

- Surveys could empirically assess both the extent to which the wider population endorse different beliefs (or misconceptions) about AI risk (e.g. that AIs could not do anything without a human telling them to), as well as test the effect of presenting contrary information responding to these misconceptions.

This research is a project of Rethink Priorities. This post was written by David Moss. Initial coding was done by Lance Bush, and then reviewed and second-coded by David Moss. Plots were produced by Willem Sleegers. We would also like to thank Renan Araujo, Peter Wildeford, Kieran Greig, and Michael Aird, for comments.

If you like our work, please consider subscribing to our newsletter. You can see more of our work here.

[1] We recruited 1101 respondents in total. After excluding those who agreed (‘Somewhat agree’, ‘Agree’, ‘Strongly Agree’) with the statement or who selected ‘Neither agree nor disagree’ or ‘Don’t know or no opinion’, we were left with 342 disagreeing respondents, whose responses were coded. Respondents were asked “Please briefly describe your response to the statement above (i.e. why you agreed, disagreed or neither agreed nor disagreed with the statement).” As with our previous survey, respondents were recruited from Prolific.co, and the sample was limited to US respondents above the age of 18 who had not previously participated in any of our AI-related surveys.

[2] Respondents who disagreed had a mean age of 33.6 (median 30, SD 12.5; n = 341, 1 missing). There were 148 male (43.5%) and 192 female (56.5%) respondents, with 2 missing (preferred not to say). By ethnicity, 240 respondents were white (71.2%), 42 Asian (12.5%), 27 Black (8.01%), 16 Mixed (4.75%) and 12 Other (3.56%).

[3] These numbers are not weighted to be representative of the population and are based on a smaller sample size, so they should not be compared to our previous results.

[4] Responses could be coded as instantiating multiple different themes at once. We coded comments based on their specific content, rather than potential logical implications of what was stated. For example, a statement that “AI is nothing to worry about” might be taken to imply the belief that AI won’t cause extinction, but it would not be coded as ‘Not extinction’ due to the lack of explicit content suggesting this.

[5] All percentages are percentages of the responses we coded (those from respondents who disagreed with the statement).

[6] This is an odds ratio of 2.0, p = 0.0027.

[7] We also found a significant difference, with older respondents more likely to mention ‘Not extinction’, though the difference was smaller (28.4% of older vs. 19.1% of younger respondents) and the p-value was just below .05, at .048.

Will Howard @ 2023-09-08T14:05 (+13)

I think this is valuable research, and a great write up, so I'm curating it.

I think this post is so valuable because having accurate models of what the public currently believe seems very important for AI comms and policy work. For instance, I personally found it surprising how few people disbelieve AI being a major risk (only 23% disbelieve it being an extinction level risk), and how few people dismiss it for "Sci-fi" reasons. I have seen fears of "being seen as sci-fi" as a major consideration around AI communications within EA, and so if the public are not (or no longer) put off by this then that would be an important update for people working in AI comms to make.

I also like how clearly the results are presented, with a lot of the key info contained in the first graph.

David_Moss @ 2023-09-09T17:29 (+8)

Thanks!

For instance, I personally found it surprising how few people disbelieve AI being a major risk (only 23% disbelieve it being an extinction level risk)

Just to clarify, we don't find in this study that only 23% of people disbelieve AI is an extinction risk. This study shows that of those who disagreed with the CAIS statement 23% explained this in terms of AI not causing extinction.

So, on the one hand, this is a percentage of a smaller group (only 26% of people disagreed with the CAIS statement in our previous survey), not of everyone. On the other hand, it could be that more people also disbelieve AI is an extinction risk but did not cite that as their reason for disagreeing with the statement, or that some people agree with the statement but don't believe AI is an extinction risk.

Fortunately, our previous survey looked at this more directly: we found 13% expressed that there was literally 0 probability of extinction from AI, though around 30% indicated 0-4% (the median was 15%, which is not far off some EA estimates). We can provide more specific figures on request.

DavidNash @ 2023-09-11T14:16 (+3)

In 2015, one survey found 44% of the American public would consider AI an existential threat. In February 2023 it was 55%.

David_Moss @ 2023-09-11T15:44 (+7)

I think Monmouth's question is not exactly about whether the public believe AI to be an existential threat. They asked:

"How worried are you that machines with artificial intelligence could eventually pose a threat to the existence of the human race – very, somewhat, not too, or not at all worried?"

The 55% you cite refers to those who said they were "Very worried" or "somewhat worried."

Like the earlier YouGov poll, this conflates an affective question (how worried are you) with a cognitive question (what do you believe will happen). That's why we deliberately split these in our own polling, which cited Monmouth's results, and also asked about explicit probability estimates in our later polling which we cited above.

Emerson Spartz @ 2023-08-16T08:03 (+9)

This is interesting and useful, thanks for doing it!

SiebeRozendal @ 2023-08-20T09:42 (+8)

"A major consideration is that individuals’ explicit statements of their reasons for their beliefs and attitudes may simply reflect post hoc rationalizations or ways of making sense of their beliefs and attitudes, as respondents may be unaware of the true reasons for their responses."

I suspect that a major factor is simply: "are people like me concerned about AI?"/"what do people like me believe?"

This to me points out the issue that AI risk communication is largely done by nerdy white men (sorry guys - I'm one of those too) and we should diversify the messengers.

David_Moss @ 2023-08-21T15:37 (+12)

Thanks for the comment!

I think more research into whether public attitudes towards AI might be influenced by the composition of the messengers would be interesting. It would be relatively straightforward to run an experiment assessing whether people's attitudes differ in response to different messengers.

That said, the hypothesis (that AI risk communication being largely done by nerdy white men influences attitudes via public perceptions of whether 'people like me' are concerned about AI) seems to conflict with the available evidence. Both our previous surveys in this series (as well as this one) found no significant gender differences of this kind. Indeed, we found that women were more supportive of a pause on AI, more supportive of regulation, and more inclined than men to expect harm from AI. Of course, future research into narrower attitudes regarding AI risk could reveal other differences.

SiebeRozendal @ 2023-08-21T18:06 (+4)

Good point, thanks David

daniel99 @ 2023-09-12T13:52 (+3)

Thanks for sharing such detailed research with us :) I am a newbie and excited to be here.

dr_s @ 2023-09-17T17:56 (+1)

I think not mentioning climate change, though technically it's correct to not consider it a likely extinction risk, was probably the biggest problem with the statement. It may have given it a tinge or vibe of right-wing political polarization, as it feels like it's almost ignoring the elephant in the room, and that puts people on the defensive. Perhaps a broader statement could have mentioned "risks to our species or our civilization such as nuclear war, climate change and pandemics", which broadens the kind of risks included. After all, some very extreme climate change scenarios could be an X-risk, much like nuclear war could "only" cause a civilization collapse, but not extinction. Plus, these risks are correlated and entangled (climate change could cause new pandemics due to shifting habitats for pathogens, AI could raise international tensions and trigger a nuclear war if put in charge of defense, and so on). An acknowledgement of that is important.

There is unfortunately some degree of "these things just sound like Real Serious Problems and that thing sounds like a sci-fi movie plot" going on here too, and I don't think you can do much about that. The point of the message should not be compromised on that - part of the goal is exactly to make people think "this might not be sci-fi any more, but your reality".

Linch @ 2023-09-17T23:38 (+6)

My guess is that mentioning climate change will get detractors on the right who dislike the mention of climate, AND detractors on the left who consider it to diminish the importance of climate change.

David_Moss @ 2023-09-18T22:09 (+6)

Investigating the effects of talking (or not talking) about climate change in different EA/longtermist contexts seems to be neglected (e.g. through surveys/experiments and/or focus groups), despite being tractable with few resources.

It seems like we don't actually know either the direction or magnitude of the effect, and are mostly guessing, despite the stakes potentially being quite high (considered across EA/longtermist outreach).

dr_s @ 2023-09-24T07:09 (+3)

My impression is that on the left there is a strong current that tries to push the idea that EAs are one and the same as longtermists, and that both are chasing right-wing-coded worries while ignoring the real threats (climate change). Statements about climate change not being an existential threat are often misinterpreted (even if technically true, they come off as dismissive). In practice, to most people, existential (everyone dies) and civilizational (some people survive, but they're in a Mad Max post-apocalyptic state) risks are both so awful that they count as negative infinity, and warrant equal effort to be averted.

I'm going to be real, I don't trust much the rationality of anyone who right now believes that climate change is straight up fake, as some do - that is a position patently divorced from reality. Meanwhile, plenty of people are getting soured on AI safety because they see it presented as something that is being used to steal attention from climate change. I don't know precisely how one can assess the relative impacts of these trends, but I think determining them is very urgent.

Linch @ 2023-09-25T18:45 (+11)

Thanks for engaging.

In practice, to most people, existential (everyone dies) and civilizational (some people survive, but they're in a Mad Max post-apocalyptic state) risks are both so awful that they count as negative infinity, and warrant equal effort to be averted.

So I think this is a very reasonable position to have. I think it's the type of position that should lead someone to be comparatively much less interested in the "biology can't kill everyone"-style arguments, and comparatively more concerned about biorisk and AI misuse risk relative to AGI takeover risk. Depending on the details of the collapse[1] and what counts as "negative infinity", you might also be substantially more concerned about nuclear risk as well.

But I don't see a case for climate change risk specifically approaching anywhere near those levels, especially on timescales less than 100 years or so. My understanding is that the academic consensus on climate change is very far from treating it as a near-term (or medium-term) civilizational collapse risk, and when academic climate economists argue about the damage function, the boundaries of debate are on the order of percentage points[2] of GDP. That is terrible, sure, and arguably qualifies as a GCR, but it is pretty far away from a Mad Max apocalyptic state[3]. So on the object level, such claims will literally be wrong. That said, I think the wording of the CAIS statement "societal-scale risks such as ..." is broad enough to be inclusive of climate change, so someone editing that statement to include climate change won't directly be lying by my lights.

I'm going to be real, I don't trust much the rationality of anyone who right now believes that climate change is straight up fake, as some do - that is a position patently divorced from reality.

I'm often tempted to have views like this. But as my friend roughly puts it, "once you apply the standard of 'good person' to people you interact with, you'd soon find yourself without any allies, friends, employers, or idols."

There are many commonly held views that I think are either divorced from reality or morally incompetent. Some people think AI risk isn't real. Some (actually, most) people think there are literal God(s). Some people think there is no chance that chickens are conscious. Some people think chickens are probably conscious but it's acceptable to torture them for food anyway. Some people think vaccines cause autism. Some people oppose Human-Challenge Trials. Some people think it's immoral to do cost-effectiveness estimates to evaluate charities. Some people think climate change poses an extinction-level threat in 30 years. Some people think it's acceptable to value citizens >1000x the value of foreigners. Some people think John Rawls is internally consistent. Some people have strong and open racial prejudices. Some people have secret prejudices that they don't display but that drive much of their professional and private lives. Some people think good internet discourse practices include randomly calling other people racist, or Nazis. Some people think evolution is fake. Some people believe in fan death.

And these are just viewpoints, when arguably it's more important to do good actions than to have good opinions. Even though I'm often tempted to want to only interact or work with non-terrible people, in terms of practical political coalition-building, I suspect the only way to get things done is by being willing to work with fairly terrible (by my lights) people, while perhaps still being willing to exclude extremely terrible people. Our starting point is the crooked timber of humanity; the trick is creating the right incentive structures and/or memes and/or coalitions to build something great or at least acceptable.

- ^

i.e., is it really civilizational collapse if it affects the Northern Hemisphere massively but leaves Australia and South America without a >50% reduction in standard of living? Reasonable people can disagree, I think.

- ^

Maybe occasionally low tens of percentage points? I haven't seen anything that suggests this, but I'm not well-versed in the literature here.

- ^

World GDP per capita was 50% lower in 2000, and I think most places in 2000 did not resemble a post-apocalyptic state, with the exception of a few failed states.

dr_s @ 2023-09-26T18:17 (+1)

But I don't see a case for climate change risk specifically approaching anywhere near those levels, especially on timescales less than 100 years or so.

I think the thing with climate change is that, unlike those other things, it's not just a vague possibility; it's a certainty. The uncertainty lies in the precise extent of the risk. At the higher end of warming it gets damn well dangerous (not to mention, it can be the trigger for other crises, e.g. imagine India suffering from killer heatwaves leading to additional friction with Pakistan, both nuclear powers). So there's a baseline of merely "a lot of dead people, a lot of lost wealth, a lot of things to somehow fix or repair", and then the tail outcomes are potentially much, much worse. They're considered unlikely, but of course we may have underestimated a feedback loop or tipping point. I honestly don't feel confident that climate change isn't a big risk to our civilization when it's likely to stress multiple infrastructures at once (mainly, food supply combined with a need to change our energy usage, combined with a need to provide more AC and refrigeration as a matter of survival in some regions, combined with rising sea levels, which may eat into valuable land and cities).

I'm often tempted to have views like this. But as my friend roughly puts it, "once you apply the standard of 'good person' to people you interact with, you'd soon find yourself without any allies, friends, employers, or idols."

I'm not saying "these people are evil and irredeemable, ignore them". But I'm saying they are being fundamentally irrational about it. "You can't reason a person out of a position they didn't reason themselves into." In other words, I don't think it's worth leaving out climate change merely to avoid alienating them when the result is that it will alienate many more people on other sides of the spectrum. Besides, those among them who think like you might also go "oh well, these guys are wrong about climate change, but I can't hold it against them since they had to put together a compromise statement". I think that, as of now, many minimizing attitudes towards AI risk are also irrational, but it's still a much newer and more speculative topic, with less evidence behind it. I think people might still be in the "figuring things out" stage for that, while for climate change, opinions are very much fossilized, and in some cases determined by things other than rational evaluation of the evidence. Basically, I think in this specific circumstance there is no way of being neutral: either mentioning or not mentioning climate change gets read as a signal. You can only pick which side of the issue to stand on, and if you think you have a better shot with people who ground their thinking in evidence, then the side that believes climate change is real has more of those.