What is the overhead of grantmaking?

By MathiasKB🔸 @ 2022-06-15T17:07 (+59)

The effective altruism community is very fond of grants. This makes a lot of sense, as grants give our community a way to distribute its capital. The last FTX round had an extraordinary number of applications, and only a small percentage of those were funded. For every funded application, dozens of hours were spent writing unsuccessful applications, time which could otherwise have been spent more productively.

This made me wonder. Is running all these grants actually a good use of time and resources? How would we even know?

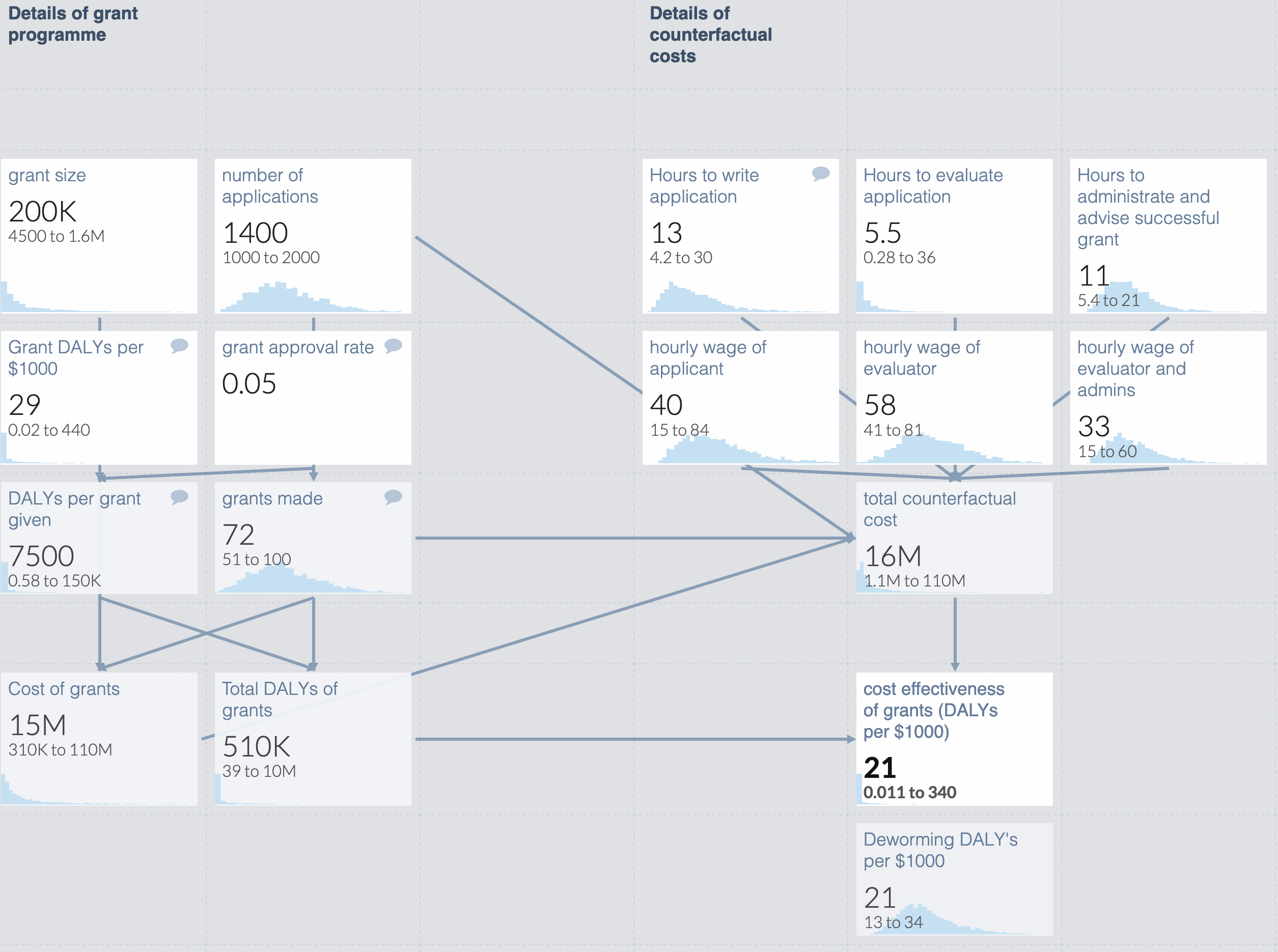

To clarify my thinking, I made a guesstimate model that lets you plug in estimates of relevant variables and see how the cost-effectiveness of a grant programme changes.

In this post I will:

1. Briefly explain the model and my takeaways from creating it

2. Conclude overhead is not as big a deal as I thought it was

About the model

The model is pretty simple. It assumes there are the following costs associated with grantmaking:

- Money given out in grants

- Time spent writing applications

- Time spent evaluating applications

- Time spent administering and advising grants

The value of each grant is its cost-effectiveness multiplied by the grant's size.

The more grants are approved, the less cost-effective the average grant becomes.

Finally, I divide the impact of the approved grants by the costs of running the grant programme, resulting in a measure of cost-effectiveness. If this cost-effectiveness comes out higher than the counterfactual, for example a standard GiveWell charity, then the grant programme is worth running.
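As a rough illustration of the ratio described above, here is a minimal Python sketch. It is not the Guesstimate model itself: the function name, its parameters, and every number in the usage example are hypothetical placeholders chosen only to show the arithmetic.

```python
def programme_cost_effectiveness(
    n_grants,
    avg_grant_size,
    avg_grant_multiplier,
    overhead_cost,
):
    """Impact per dollar of total cost, in units of the benchmark charity.

    avg_grant_multiplier: average impact per granted dollar relative to
        the counterfactual (1.0 = exactly as good as the benchmark).
    overhead_cost: dollar value of the time spent writing, evaluating,
        and administering applications.
    """
    money_granted = n_grants * avg_grant_size
    impact = money_granted * avg_grant_multiplier
    total_cost = money_granted + overhead_cost
    return impact / total_cost


# Hypothetical inputs, for illustration only.
ratio = programme_cost_effectiveness(
    n_grants=10,
    avg_grant_size=100_000,
    avg_grant_multiplier=1.5,
    overhead_cost=250_000,
)
print(f"{ratio:.2f}x the benchmark")
```

A result above 1.0 means the programme beats the counterfactual even after paying for overhead; a result below 1.0 means the money would have done more good at the benchmark charity.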

You can view and play around with the model here: https://www.getguesstimate.com/models/20444

Lessons on overhead to draw from the model

Can we learn anything from this model? That's debatable! Nevertheless, here are my own takeaways.

Overhead is not a big deal for large grants

As the average size and impact of grants increase, the overhead costs of grantmaking quickly start to pale in comparison. Giving 75 grants with an average size of $200,000 amounts to 15 million dollars given away. By comparison, assuming my estimates aren't way off, the cost of writing and evaluating 1000 applications is only about 1 million dollars. The size and number of grants given don't have to be very large before the overhead of writing, evaluating, and administering grants becomes a negligible factor.

My initial worry that grants could be a near zero-sum game due to time spent on applications is not nearly as big a deal as I had anticipated.

Another takeaway from this is that grantmakers should spend evaluation time in proportion to grant size. The smaller the grant, the larger the share of its total cost that is taken up by overhead. For cheap grants, grantmakers should aim to make a decision quickly and move on.

Overhead might sometimes be a problem for smaller grant programmes

If you reduce the average size of the grants given out to $25k, overhead starts to look closer to a third of the total expenses.
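The comparison between large and small grants can be checked with a few lines of Python. This is a sketch under stated assumptions: it uses the post's rough figure of about 1 million dollars for writing and evaluating 1000 applications, and it assumes (my simplification, not something the model necessarily does) that the number of grants stays at 75 and the overhead stays the same when the average grant shrinks. The function name is hypothetical.

```python
def overhead_share(n_grants, avg_grant_size, overhead_cost):
    """Fraction of total programme cost that is overhead rather than grants."""
    money_granted = n_grants * avg_grant_size
    return overhead_cost / (money_granted + overhead_cost)


# Rough figure from the post for writing and evaluating 1000 applications.
OVERHEAD = 1_000_000

large = overhead_share(75, 200_000, OVERHEAD)  # $15M given away
small = overhead_share(75, 25_000, OVERHEAD)   # about $1.9M given away

print(f"large grants: {large:.0%} overhead")
print(f"small grants: {small:.0%} overhead")
```

Under these assumptions overhead is roughly 6% of total cost for the large grants and roughly 35% for the small ones, which matches the "closer to a third" figure.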

In such circumstances, thinking about ways to reduce overhead may yield modest increases in the cost-effectiveness of the grant programme.

Overhead is overshadowed by the expected value of each application

Much more important than overhead is whether you expect the grants you're giving out to actually be impactful. The assumptions that go into the distribution of impact for grants result in wild swings in the cost-effectiveness of the grant programme.

The expected impact of the average grant given out is determined by three factors:

- The distribution of the expected value of applications, if funded

- Grantmakers' ability to pick applicants from the top of this distribution

- The percentage of applications they decide to fund

Is each application equally likely to have a high impact? Surely not. How much better should we expect the best applications to be than the median? The more fat-tailed the distribution, the more important the grantmaker's ability to select the best applications becomes.

In the model I assume a high-sigma (fat-tailed) lognormal distribution of charity effectiveness. With such a distribution you would expect most grants given to be much less effective than GiveWell, with a few heavy hitters that more than make up for it. The exact parameters of this distribution are extremely important. How much more cost-effective will the top percentile be than the average GiveWell charity? 2x, 10x, 100x, more? How good are grantmakers at picking the winners? If you only expect the top applications to be twice as effective as GiveWell and your grantmakers can't reliably identify them, the grant programme is probably not worth running.
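To make the interaction between a fat-tailed distribution and selection ability concrete, here is a small simulation sketch in Python. It is not taken from the Guesstimate model. The function name and all parameters are hypothetical, and it makes several assumptions of its own: the median application is set equal to the benchmark by default (`mu=0.0`), grantmakers rank applications by the true value multiplied by lognormal noise, and the sigma values in the usage loop are arbitrary. The 1000 applications and 75 funded grants (7.5%) echo the example numbers used earlier in the post.

```python
import random


def mean_funded_multiplier(
    n_apps, fund_fraction, sigma, noise_sigma, mu=0.0, seed=0
):
    """Average true cost-effectiveness of the funded applications.

    True effectiveness is lognormal(mu, sigma), measured as a multiple of
    the benchmark charity. Grantmakers see only a noisy estimate of each
    application and fund the top fund_fraction by that estimate.
    noise_sigma = 0 corresponds to perfect selection.
    """
    rng = random.Random(seed)
    true_values = [rng.lognormvariate(mu, sigma) for _ in range(n_apps)]
    # Noisy estimate: true value times a lognormal error term.
    scored = [
        (value * rng.lognormvariate(0.0, noise_sigma), value)
        for value in true_values
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    n_funded = max(1, round(n_apps * fund_fraction))
    funded = [value for _, value in scored[:n_funded]]
    return sum(funded) / n_funded


# Arbitrary illustrative parameters: perfect, decent, and poor selection.
for noise in (0.0, 1.0, 3.0):
    avg = mean_funded_multiplier(1000, 0.075, sigma=1.5, noise_sigma=noise)
    print(f"noise_sigma={noise}: funded grants average {avg:.1f}x the benchmark")
```

Raising `noise_sigma` drags the funded average down toward the average of the whole applicant pool, and raising `fund_fraction` does the same even with perfect selection. Increasing `sigma` widens the gap between good and bad selection, which is why the choice of distribution matters so much.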

If my distribution reflects reality, grantmakers should pass on proposals that don't have potential for a high upside. But the only basis I have for this choice of distribution is my intuition. I'd be curious to see actual data on the distribution of impact from grants made by EA organisations. I imagine an organisation's list of which grants it thinks had what expected value is sensitive and probably best kept private, but maybe organisations would be able to share anonymized distributions.

Grantmaking is cost-effective, sometimes, maybe.

When I plug in my best estimates of each variable for a programme giving out expensive grants, the cost-effectiveness of grantmaking is competitive with deworming. Not bad!

But the model is a super flawed representation of reality:

- There are a bunch of details I skipped modelling.

- A few things are modelled incorrectly (grants can have negative EV, for example!).

- There are also multiple estimates which definitely should be broken into multiple components.

- While global poverty has a clear counterfactual way to spend money, it's less clear what the counterfactual spend would be for existential risk reduction or animal welfare.

I wouldn't conclude much about cost-effectiveness of grantmaking from the model.

Overhead is rarely the deciding factor

My prior going into this was that the EA community spending thousands of hours writing applications seemed like a massive waste of everyone's time. Now I'm less concerned about it.

I still have a few reservations. For example, I think modelling with hourly wages instead of EV-per-hour probably results in a significant undervaluation of an EA's time.

Nevertheless, it's nice to know that grants at least aren't obviously lighting cash on fire.

blonergan @ 2022-06-15T19:54 (+27)

I think the appropriate cost to use for evaluators, applicants, and admins is the opportunity cost of their time. For many such people this would be considerably higher than their wage and outside the ranges used in the model. I don't know that this would change your conclusion, but it could significantly affect the numbers.

Gavin @ 2022-06-15T17:29 (+18)

This could be a good submission for the criticism contest. Clean, tightly reasoned, not going in with the bottom line written.

karthik-t @ 2022-06-16T01:27 (+8)

The important statistic for cost effectiveness is really the cost effectiveness of the marginal grant. If the marginal grant is very cost effective, then money is being left on the table and we should be awarding more grants! Conversely, if the marginal grant is very cost ineffective, then grant making is inefficiently large even if the average grant is cost effective. In that situation we could improve cost-effectiveness by eliminating some of those marginal grants.

The distance between the marginal grant and the average grant is increasing in the fat-tailedness of the distribution, so for very fat tailed distributions, this difference is extremely important.

Stefan_Schubert @ 2022-06-15T20:38 (+8)

Thanks for doing this; I think this is useful. It feels vaguely akin to Marius's recent question of the optimal ratio of mentorship to direct work. More explicit estimates of these kinds of questions would be useful.

Blonergan's comment is good, though - and it shows the importance of trying to estimate the value of people's time in dollars.

JeffreyK @ 2022-06-16T03:08 (+2)

This kind of reminds me of the music biz...A&R reps would scour clubs for bands and sign them to a record deal. Most of the bands would lose money but a few would hit it big, paying for the rest. The same goes for VC funds funding startups. It's the nature of the beast. In this way you can also understand that there will always be less and more effective charities, and while seeking to be more efficient is good, the lesser still play their role by populating the range and hopefully evolving forward...for every starting player there always needs to be a bench.