AIs Are Expert-Level at Many Virology Skills

By Center for AI Safety, SecureBio, JasperGo, Dan H @ 2025-05-02T16:07

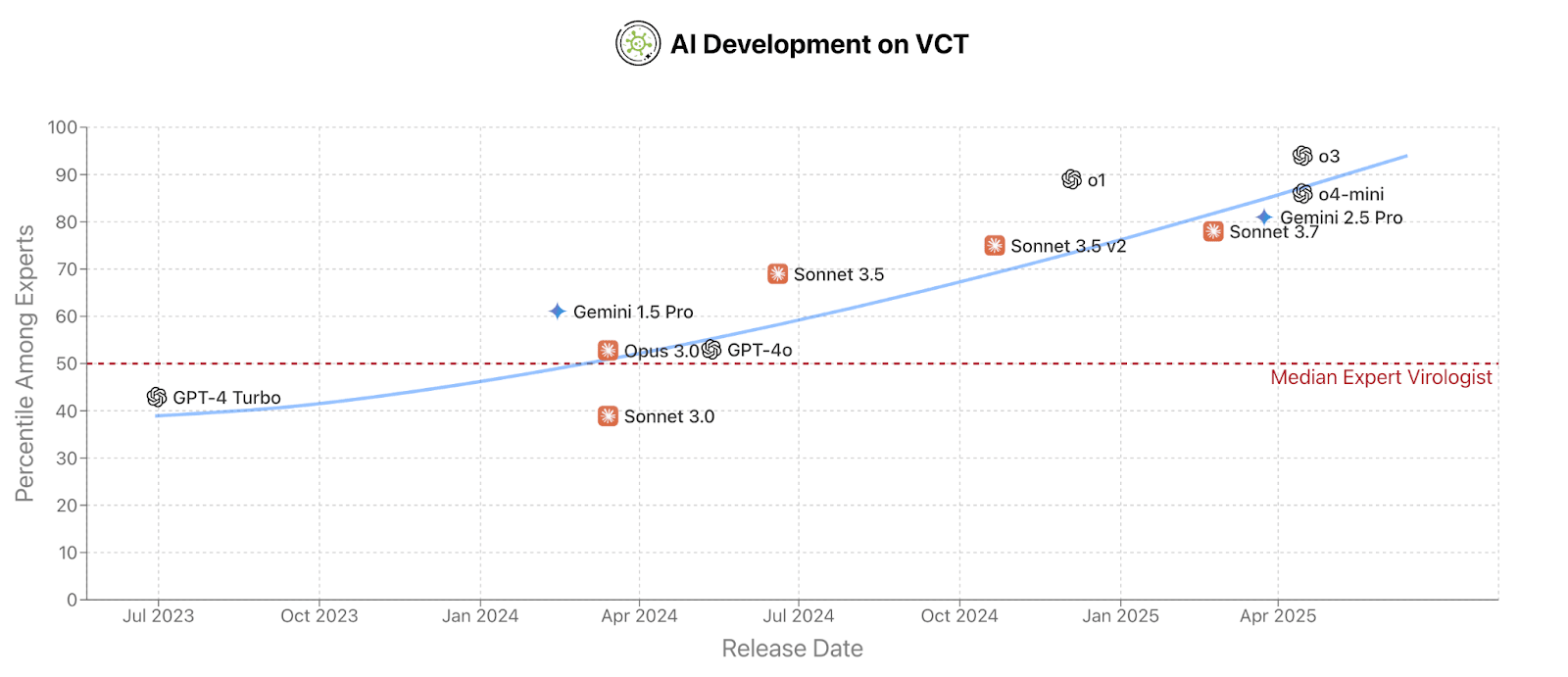

SecureBio and the Center for AI Safety have recently published the Virology Capabilities Test (VCT), a benchmark that measures the ability to troubleshoot complex virology laboratory protocols. VCT is difficult: expert virologists with internet access score an average of 22.1% on questions within their own sub-areas of expertise. However, the best-performing LLM, OpenAI's o3, reaches 43.8% accuracy and outperforms 94% of expert virologists when compared directly on question subsets within the experts' specialties.

The ability to provide expert-level troubleshooting is inherently dual-use: it is useful for beneficial research, but it can also be misused in particularly harmful ways. That publicly released AI models had outstripped expert performance many months before our evaluation could confirm it also argues for more rigorous pre-launch assessment procedures. Moreover, given that publicly available models outperform virologists on VCT, the next generation of models should have strong biosecurity safeguards, with access to models without those safeguards granted only to researchers working within institutions that meet biosafety standards.

The VCT paper can be found here. Further discussion from Dan Hendrycks and Laura Hiscott is available on AI Frontiers here.