Blueprints (& lenses) for longtermist decision-making

By Owen Cotton-Barratt @ 2020-12-21T17:25 (+49)

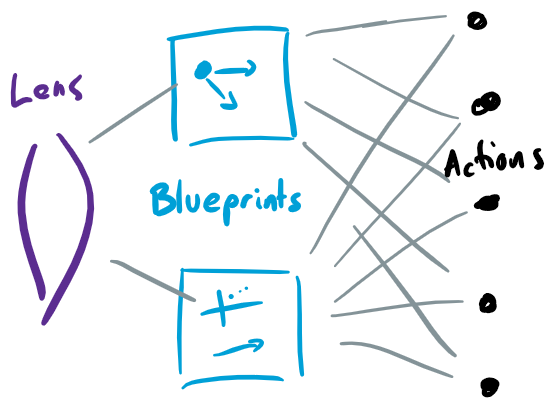

tl;dr: This is a post about ontologies. I think that working out what to do is always confusing, but that longtermism poses large extra challenges. I'd like the community to be able to discuss those productively, and I think that some good discussions might be facilitated by having better language for disambiguating between different meta-levels. So I propose using blueprint to refer to principles for deciding between actions, and lens to refer to principles for deciding between blueprints.

My preferred definition of "longtermism" is:

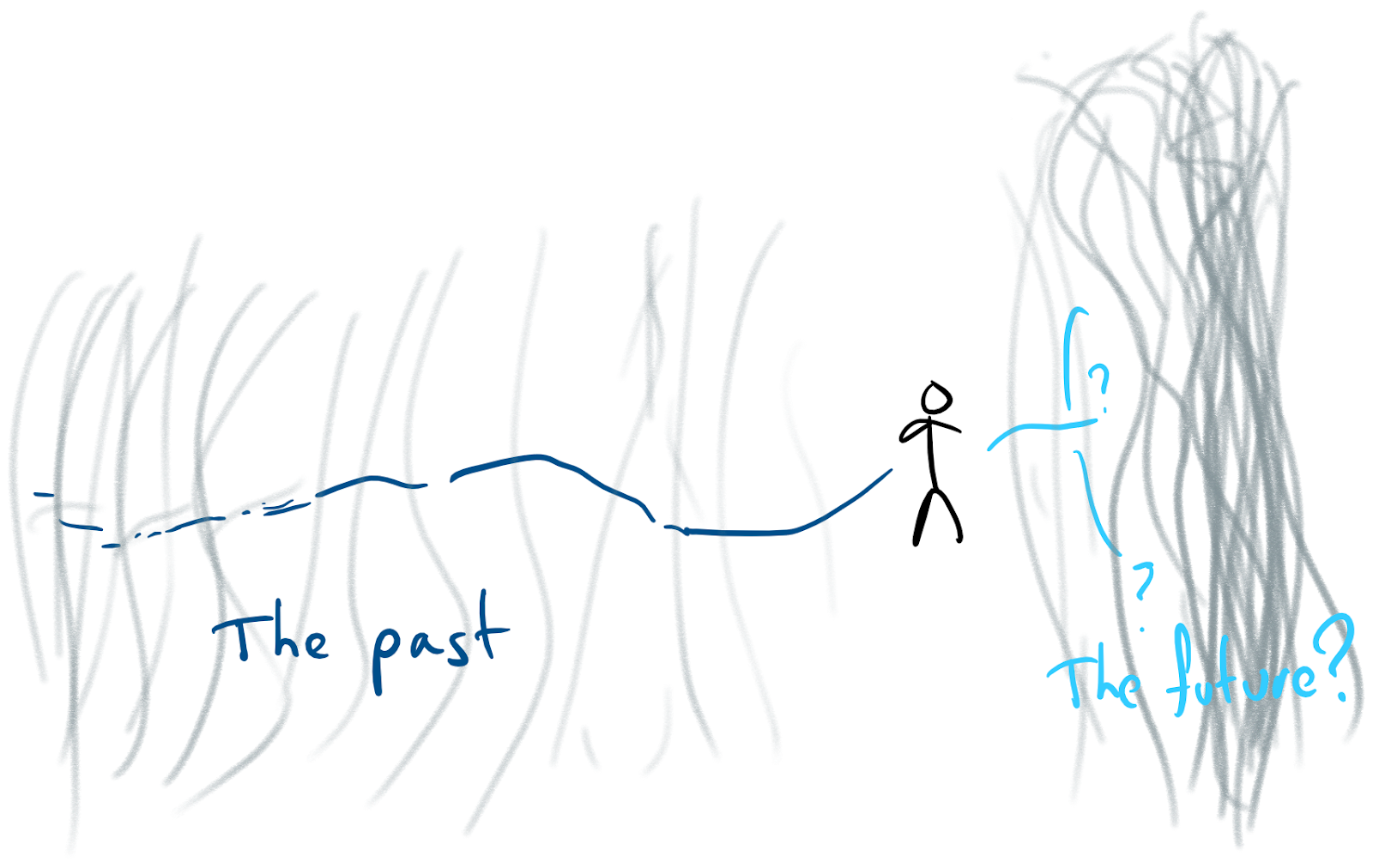

Longtermism is the view that the very long term effects of our actions should be a significant part of our decisions about what to do today.

This is ostensibly action-guiding. But how in practice am I supposed to apply it to decisions I'm personally making? What about my friends? We can see (imperfectly) into the past, but the future is closer to a fog bank of uncertainty, so it's extremely hard to pin down what the very long term effects of our actions will be in order to consider those directly to make decisions. (This is sometimes discussed as the problem of "cluelessness".)

Blueprints

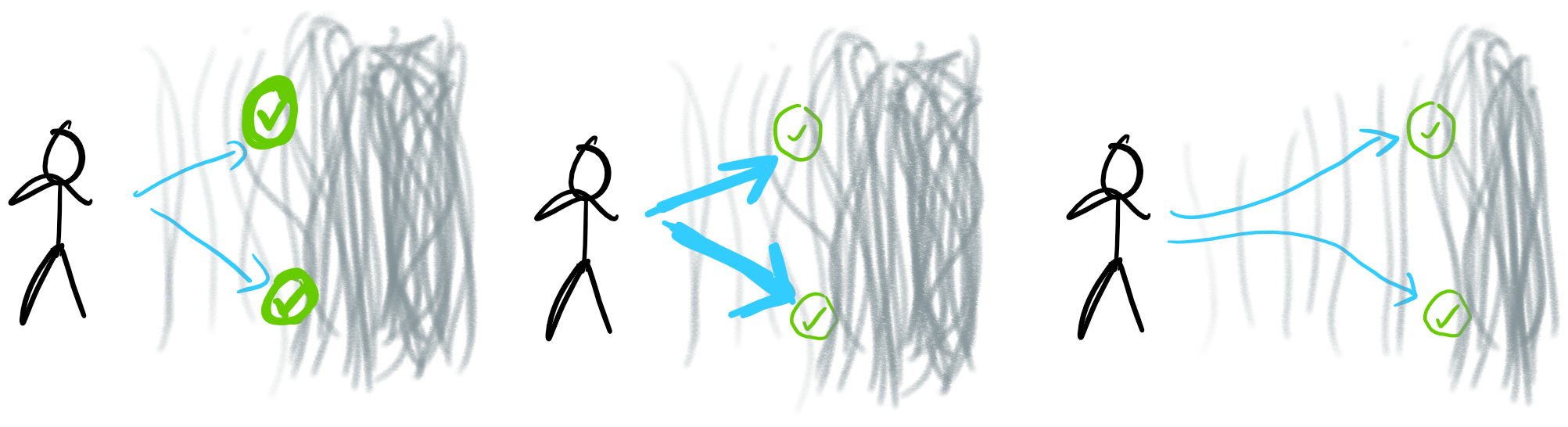

Pragmatically, if we want to make decisions on longtermist grounds, we are going to end up using some heuristics (implementable by a human mind on a reasonable timescale) to do that. These could be discussed explicitly among the longtermist community, or arise organically as each individual wrestles with the epistemic challenges for themselves. On the whole I prefer to have more explicit discussion.

Let's say that a blueprint for longtermism is a set of decision-guiding principles (for boundedly rational actors) that aim to provide good (longtermist) behaviour when followed.

There are lots of different possible blueprints, varying a lot in both complexity (how hard they are to describe) and quality (how good they would be to follow). For example, here's a mix of possibilities that I can describe succinctly (not all of which I'm a fan of):

- Invest in having accurate views about the future, and then take the actions that are predicted to be best according to those views

- Try to maximise the donations to a particular set of charities (which have been vetted as good uses of money)

- Take steps to build support for "longtermism" as an idea, and particularly for getting well-positioned and thoughtful adherents

- Think about the most likely causes of astronomical suffering, and look for actions that make them less likely

- Try to improve decision-making across society, particularly in places which have more leverage over other parts of society

- Any of the above, subject to the constraint of "behave in a way perceived by commonsense morality to be good"

- The heuristics of "be helpful to people" and "try to steer so that you end up working in places close to things that seem like they might matter"

- [My implicit blueprint for longtermism; i.e. the principles I actually use when I want to select actions on longtermist grounds, which I may not know how to codify explicitly]

Since we can't anticipate the long-run effects of our actions very well, blueprints try to help by providing proxy goals that are things to aim for which lie (at least partially) within our foresight horizons (e.g. "intellectual progress made on AI alignment by 2040"), and/or other heuristics that specify how to choose good actions without necessarily referring to the effects of the actions (e.g. "be considerate"). Some blueprints might specify a whole lot of different proxy goals and heuristics (in fact I guess this is the norm for people's implicit blueprints).

Lenses

OK, great, but now we're left with the problem of deciding between blueprints. Whatever our deep uncertainty about how to choose actions, can it really be easier to handle it at the meta-level?

I think in some ways it can. Since the best blueprint is likely to vary less between people than the best action, we can put more time and attention on trying to get a good blueprint than we could possibly spare for each object-level decision. (Of course part of a blueprint might be a body of case studies.) We can involve more people in those discussions. We can look at them using multiple different perspectives to compare.

Let's say that a lens on longtermism is a perspective that we can apply to get some insight into how good a given blueprint is. Just as it's too challenging to comprehensively assess how good different actions are, and we must fall back on some approaches (blueprints) for making it easier to think about, it's also too challenging to comprehensively assess how good different blueprints are, so we need some way to get tractable approximations to this.

Some example lenses:

- Timeless lens

    - Would this have been a good blueprint 50 years ago? 150? 500?

    - Does it seem like it would still be good if almost the whole of society was trying to follow it?

- Optimistic lens

    - Assume someone was doing a really good job within the bounds of the advice laid out in the blueprints; how good would that seem?

- Pessimistic lens

    - Assume someone was technically following the advice in the blueprint but otherwise not doing a great job of things / making some errors of judgement; how good would that seem?

- Simplification lens

    - If someone rounded the blueprint off to something simpler and then followed that, how good would it seem?

- Maxipok lens

    - Does it seem like following this will lead to a reduction in existential risk?

- Passing-the-buck lens

    - Will this lead to people in the future being better equipped (motivationally, materially, and epistemically) to do things for the good of the long run future?

The idea is not that we choose a single best lens, but rather that each of these gives us some noisy information about how (robustly) good the blueprint in question is. Then we can have the most confidence in blueprints that simultaneously look good under many of these lenses.
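To make the "many noisy lenses" idea concrete, here is a toy sketch in Python. It is only an illustration under loud assumptions, not a proposed method: the idea of giving each blueprint a numeric score per lens, the scores themselves, the 0.5 threshold, and the function names `robustness` and `rank_blueprints` are all hypothetical and not part of the framework above. It simply prefers blueprints that look acceptable under the largest share of lenses, breaking ties by the worst-case lens and then by the average.

```python
from statistics import mean

# Hypothetical scores in [0, 1] for how good each blueprint looks under each lens.
# These numbers are invented purely for illustration.
scores = {
    "maximise donations to vetted charities": {
        "timeless": 0.4, "pessimistic": 0.7, "simplification": 0.8, "maxipok": 0.3,
    },
    "improve societal decision-making": {
        "timeless": 0.8, "pessimistic": 0.5, "simplification": 0.6, "maxipok": 0.6,
    },
}


def robustness(lens_scores, threshold=0.5):
    """Summarise how a blueprint looks across lenses.

    Returns a tuple: (share of lenses scoring at or above the threshold,
    worst single-lens score, mean score).
    """
    values = list(lens_scores.values())
    share_good = sum(v >= threshold for v in values) / len(values)
    return share_good, min(values), mean(values)


def rank_blueprints(all_scores, threshold=0.5):
    """Order blueprint names from most to least robust across lenses."""
    return sorted(
        all_scores,
        key=lambda name: robustness(all_scores[name], threshold),
        reverse=True,
    )


ranking = rank_blueprints(scores)
for name in ranking:
    print(name, robustness(scores[name]))
```

With these made-up numbers, the second blueprint ranks first because it clears the threshold under every lens, even though the first has the single highest score. In practice the judgements would be qualitative and the lenses correlated, so a sketch like this is at most a prompt for discussion.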

How do we decide what lenses to use, or which to put more weight on? Doesn't the problem just regress? All I can say to this is that it seems like it's getting a bit easier as we step up meta-levels. I think that I can kind of recognise what are reasonable lenses to use, and I guess that my judgements will be reasonably correlated with those of other people who've spent a while thinking about it. I do think that there could be interesting discussions about how to choose lenses, but I don't presently feel like it's a big enough part of the puzzle to be worth trying to name the perspectives we'll use for choosing lenses.

[Of note: my example lenses don't all feel like they're of the same type. For instance "maxipok lens" and "passing-the-buck lens" kind of look like they're asking how good a blueprint would be at achieving a particular proxy goal. This suggests that there isn't a clean line between what can be used as a blueprint versus a lens. It also means that you could combine some of these lenses, and consider e.g. the timeless+maxipok lens, asking whether following the blueprint would have been good for reducing existential risk at various past points. Perhaps a cleaner set of concepts could be worked out here.]

Implications

I think that longtermism poses deep problems of bounded rationality, and working out how to address those (in theory and in practice) is crucial if the longtermist project is to have the best chance of succeeding at its aims. I think this means we should have a lot of discussion about:

- the relative value of various proxy goals and heuristics;

- what good blueprints would look like (& how this might vary in different situations); &

- how useful different lenses are (& how to go about finding new good ones).

Fortunately, I think that a lot of existing discussion is implicitly about these topics. But it seems to me like sometimes they get blurred together, and that we'd have an easier time discussing them (and perhaps thinking clearly ourselves) if we had a better ontology. This post lays out a suggestion for such an ontology.

Of course ultimately we should judge frameworks by their fruits. This post is laying out a framework, and not applying it. I've found an earlier (& more confused!) version of this framework already somewhat helpful for my own thinking, and am hopeful that this will be helpful for others, but we'll have to wait and see. So this post has two purposes:

- Present the ontology as I have it now

    - In the hope that that may be useful for others for their own thinking

    - In the hope that it may inspire conversations explicitly about proxy goals, blueprints, etc.

    - As something I can refer back to later if I want to present work which builds on it

- Invite discussion and critique of the ontology itself

    - Suggestions for refactorings, complaints about things it's missing seem particularly valuable

    - Ideas for changes/upgrades to the terminology are of course welcome

Finally I want to invite readers to attempt to describe their own implicit blueprints. I think this might be quite difficult -- I've gone through a couple of iterations for myself, and don't yet feel satisfied with the description -- but if it feels accessible to someone I think it might lead to some really good discussion.

weeatquince @ 2020-12-28T16:52 (+10)

Thank you for the excellent post.

> I want to invite readers to attempt to describe their own implicit blueprints.

My primary blueprint is as follows:

I want the world in 30 years' time to be in as good a state as it can be in order to face whatever challenges come next.

This is based on a few ideas.

- Firstly, almost no business, government, or policy-maker ever makes plans beyond a 25-30 year time horizon. That, together with my own understanding of how to manage situations of high uncertainty, is why I set the 30-year limit.

- Secondly, there is an idea common across long-term policy making that setting out a clear vision of what you want to achieve in the long term is a useful step. The Welsh Future Generations Bill and the work on long-term policy making in Portugal from the School of International Futures are examples of this.

Maybe you could describe this as a lens of: What is current best practice in long-term policy thinking?

This is combined with a few alternative approaches (alternative blueprints), such as: What will the world look like in 1, 2, 5, 10, 20 years? What are the biggest risks the world will face in the next 30 years? Of the issues that really matter, what is politically of interest right now?

> I think that longtermism poses deep problems of bounded rationality, and working out how to address those (in theory and in practice) is crucial if the longtermist project is to have the best chance of succeeding at its aims. I think this means we should have a lot of discussion about: ...

I strongly agree.

However, I think very few in the longtermism community are actually in need of lenses and blueprints right now. In fact, sometimes I feel like I am the only one thinking like this. Maybe it is useful to staff like you at FHI deciding what to research, and it is definitely useful to me as someone working on policy making from a longtermist perspective. But most folk are not making any such decisions.

For what it is worth, one of my main concerns with the longtermism community at present is that it feels very divorced from actual decisions about how to make the world better. Worse, it sometimes feels like folk in the longtermism community think that expected value calculations are the only valid decision-making tool. I plan to write more on this at some point and would be interested in talking it through if you fancy it.

Owen_Cotton-Barratt @ 2021-01-04T14:06 (+7)

> My primary blueprint is as follows:
>
> I want the world in 30 years' time to be in as good a state as it can be in order to face whatever challenges come next.

I like this! I sometimes use a perspective which is pretty close (though I often think about 50 years rather than 30 years, and hold it in conjunction with "what are the challenges we might need to face in the next 50 years?"). I think 30 vs 50 years is a kind-of interesting question. I've thought about 50 because if I imagine e.g. that we're going to face critical junctures with the development of AI in 40 years, that's within the scope where I can imagine it being impacted by causal pathways that I can envision -- e.g. critical technology being developed by people who studied under professors who are currently students making career decisions. By 60 years it feels a bit too tenuous for me to hold on to.

I kind of agree that, if looking at policy specifically, a shorter time horizon feels good.