EA Forum Prize: Winners for December 2020

By Aaron Gertler 🔸 @ 2021-02-16T08:46 (+26)

CEA is pleased to announce the winners of the December 2020 EA Forum Prize!

- In first place*: “"Patient vs urgent longtermism" has little direct bearing on giving now vs later,” by Owen Cotton-Barratt.

- Second place ($500): “My mistakes on the path to impact,” by Denise Melchin.

- Third place ($300): “Health and happiness research topics — Part 1: Background on QALYs and DALYs,” by Derek Foster.

- Fourth place ($200): “Big List of Cause Candidates,” by Nuño Sempere.

- Fifth place ($200): “Improving Institutional Decision-Making: a new working group,” by Ian David Moss, Laura Green, and Vicky Clayton.

*Because Owen is a trustee of CEA, he won't receive the $500 prize associated with first place. We've bumped up the second and third-place prizes to match the typical first- and second-place prizes, respectively.

The following users were each awarded a Comment Prize ($75):

- Alexander Gordon-Brown on comparing the cost-effectiveness of GiveWell’s top charities and charities focused on “big-picture issues”

- tamgent on paths to impact for civil servants

- Ross Rheingans-Yoo on why funds being “funged” might not be so bad for grantmakers

- Arepo on “minor” global catastrophic risks

- Asya Bergal on some reasons that applications to the Long-Term Future Fund have been rejected

See here for a list of all prize announcements and winning posts.

What is the EA Forum Prize?

Certain posts and comments exemplify the kind of content we most want to see on the EA Forum. They are well-researched and well-organized; they care about informing readers, not just persuading them.

The Prize is an incentive to create content like this. But more importantly, we see it as an opportunity to showcase excellent work as an example and inspiration to the Forum's users.

About the winning posts and comments

Note: I write this section in first person based on my own thoughts, rather than by attempting to summarize the views of the other judges.

"Patient vs urgent longtermism" has little direct bearing on giving now vs later

This post is a response to having heard multiple people express something like "I'm persuaded by the case for patient longtermism, so I want to save money rather than give now" [...] there is no direct implication that "patient longtermists" should be less willing to spend money now than "urgent longtermists".

I love that the very first sentence of the post explicitly states its purpose, and that the purpose is to make progress in a long-running discussion with practical implications for hundreds or thousands of people in the community. Posts in that category tend to be among the very best on the Forum.

Other things I like:

- Owen uses bold text for major points! This is easy to do and very helpful. More people should do it.

- He also discusses many nuances to his conclusions, and points out specific types of people/investments for whom these nuances might apply.

- Finally, he ends the post with a discussion of a thought experiment — this helped me link my understanding of his conceptual points into an actual model of the world. I also found it useful to compare how our intuitions differed around the thought experiment, and how that might lead me to a different set of conclusions.

My mistakes on the path to impact

Doing a lot of good has been a major priority in my life for several years now. Unfortunately I made some substantial mistakes which have lowered my expected impact a lot, and I am on a less promising trajectory than I would have expected a few years ago. In the hope that other people can learn from my mistakes, I thought it made sense to write them up here!

This is now the highest-karma Forum post of all time — this isn’t a perfect measure of quality, but it’s still a good indicator of how many people found the author’s story useful or insightful. Another indicator: The many excellent comments, some of which could have been strong posts unto themselves.

Things I appreciated in this post:

- The way the post was split up into background/context, a list of specific mistakes, and a discussion of “systematic errors” that led to the mistakes being made. This combines a personal story (a naturally interesting “hook”) with multiple forms of advice — some aimed at people seeking EA jobs, some with much broader applications (e.g. “I underestimated the cost of having too few data points”).

- If I ever publish a similar post (which is much more likely thanks to this post), I’m likely to steal this format and produce better writing as a result.

- Denise’s open invitation for people to criticize/re-evaluate her story: “Please point out if you think I forgot some mistakes or misanalysed them.” When a post is this personal, it can be hard for people to comment on it “neutrally”, as they might if they read the story somewhere other than an online forum where the author would see their comments. The aforementioned line does a lot to counteract this.

- That said, I wouldn’t hold it against an author who wanted to share their story without inviting “critical assessment” — but given that Denise was interested in seeing such assessment, I’m glad she specified this.

- In the conclusion, Denise discusses how she’s found a more productive way to engage with effective altruism, even after her difficult experience. It would have been easy for this post to focus entirely on mistakes and misery; instead, it shares a positive vision for how someone with similar concerns can approach the search for impact.

Health and happiness research topics — Part 1: Background on QALYs and DALYs

HALYs have a number of major shortcomings in their current form. In particular, they:

- neglect non-health consequences of health interventions

- rely on poorly-informed judgements of the general public

- fail to acknowledge extreme suffering (and happiness)

- are difficult to interpret, capturing some but not all spillover effects

- are of little use in prioritising across sectors or cause areas

This can lead to inefficient allocation of resources, in healthcare and beyond.

This is a deeply-researched introduction to a crucial question in EA, and one that I expect to recommend to many, many people. In college, I read a long book on the science of subjective well-being; if I could, I’d take back the time I spent on that, read every word of this post, and end up equally well-informed with hours to spare.

Features of the post that I liked:

- Derek’s use of a sequence to hold this post and the rest of the series. If you have a collection of related posts (or know of someone else’s collection), please consider sequencing it!

- The use of many tables, diagrams, and images, even for things like questionnaires which could conceivably have been rendered in text.

- The beautiful “Key takeaways” section, which effectively captures the most important points/elements of the post.

- The discussion of further research priorities in this field — I’ve mentioned this in many other prize posts, but it’s really good when a post gives interested readers further questions they can explore. This helps the Forum serve as an engine of intellectual progress.

- I’d have been even happier to see a tidy list of research questions all in one section — though I can’t really fault the author for publishing 98% of the post I wanted, rather than 100% (and that list of questions might still happen in one of the seven forthcoming posts in this series).

Big List of Cause Candidates

In the last few years, there have been many dozens of posts about potential new EA cause areas, causes and interventions. Searching for new causes seems like a worthy endeavour, but on their own, the submissions can be quite scattered and chaotic. Collecting and categorizing these cause candidates seemed like a clear next step.

This certainly is a big list of cause candidates. Mostly, it seems really good that someone has produced a near-comprehensive list that can be used for reference (I’ve linked to it a few times already). Specific things that are nice about the way the list was made:

- The authors often share post excerpts and/or summaries for their categories, rather than simply linking to related posts. This gives readers a chance to explore EA’s broad collection of causes without having to read dozens of different articles.

- The links to relevant EA Forum tags, rather than only lists of articles. The tags will be naturally updated over time, which gives this post additional longevity as a resource.

- The relative lack of filtering. I don’t think some of the areas listed here are very plausible as EA cause areas — but I also suspect the authors felt the same way, and I’m glad they chose to present (almost?) everything rather than being more selective. After all, causes they excluded might have been causes that I, or any number of other readers, would have wanted on the list.

- The authors’ detailed description of their search method — if someone wants to replicate this post in five years to produce an even bigger list, they can just read the instructions.

Improving Institutional Decision-Making: a new working group

This post starts with a summary section that includes bold text. It was love at first sight. In fact, I’m going to just write the bold text and nothing else:

Recent and planned efforts to develop improving institutional decision-making (IIDM) as a cause area [...] IIDM remains underexplored [...] a working definition of IIDM [...] a new meta initiative aiming to disentangle and make intellectual progress on IIDM [...] you can get involved.

This is a good summary of what the post is about! Excellent work, team.

...anyway, this is exactly what I’d hope to see in a post introducing a new project. There are links to related resources and organizations, descriptions of the project’s plans and goals, and specific requests aimed at people who want to help (questions to answer, a form to fill out, and a description of the experience they’d find most valuable).

As a bonus, the post includes the best breakdown I’ve seen of what “improving institutional decision-making” might actually entail (from aligning institutions’ values to improving the accuracy of their forecasts).

The winning comments

I won’t write up an analysis of each comment. Instead, here are my thoughts on selecting comments for the prize.

I recently made an update to the linked post — it might be worth reading again, even if you’ve seen it before.

The voting process

The current prize judges are:

- Aaron Gertler

- Peter Hurford (chose not to vote this month)

- Rob Wiblin

- Larks

- Vaidehi Agarwalla

- Luisa Rodriguez (our newest member!)

All posts published in the titular month qualified for voting, save for those in the following categories:

- Procedural posts from CEA and EA Funds (for example, posts announcing a new application round for one of the Funds)

- Posts linking to others’ content with little or no additional commentary

- Posts which got fewer than five additional votes after being posted (not counting the author’s automatic vote)

Voters recused themselves from voting on posts written by themselves or their colleagues. Otherwise, they used their own individual criteria for choosing posts, though they broadly agree with the goals outlined above.

Judges each had ten votes to distribute between the month’s posts. They also had a number of “extra” votes equal to [10 - the number of votes made last month]. For example, a judge who cast 7 votes last month would have 13 this month. No judge could cast more than three votes for any single post.
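As a rough illustration of the vote arithmetic described above, here is a minimal Python sketch. The function and constant names are hypothetical and not part of any real Forum tooling. The sketch applies the stated formula literally; the rules above don't say what happens if a judge cast more than ten votes in the previous month, so that case is not treated specially.

```python
# Hypothetical names; the rules above only give the arithmetic.
BASE_VOTES = 10          # each judge starts with ten votes
MAX_VOTES_PER_POST = 3   # no judge may cast more than three votes for one post


def votes_available(votes_cast_last_month: int) -> int:
    """Base votes plus "extra" votes, where extra = 10 - votes cast last month."""
    extra_votes = BASE_VOTES - votes_cast_last_month
    return BASE_VOTES + extra_votes


def is_valid_ballot(ballot: dict[str, int], votes_cast_last_month: int) -> bool:
    """Check the per-post cap and the judge's total vote budget."""
    within_cap = all(0 <= n <= MAX_VOTES_PER_POST for n in ballot.values())
    within_budget = sum(ballot.values()) <= votes_available(votes_cast_last_month)
    return within_cap and within_budget


# The example from the text: 7 votes cast last month leaves 13 this month.
print(votes_available(7))
```

A ballot here is just a mapping from post title to the number of votes a judge assigns to that post; any other representation would work equally well.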

The winning comments were chosen by Aaron Gertler, though other judges had the chance to nominate comments and veto comments they didn’t think should win.

Feedback

If the Prize has changed the way you read or write on the Forum, or you have an idea for how we could improve it, please leave a comment or contact me.

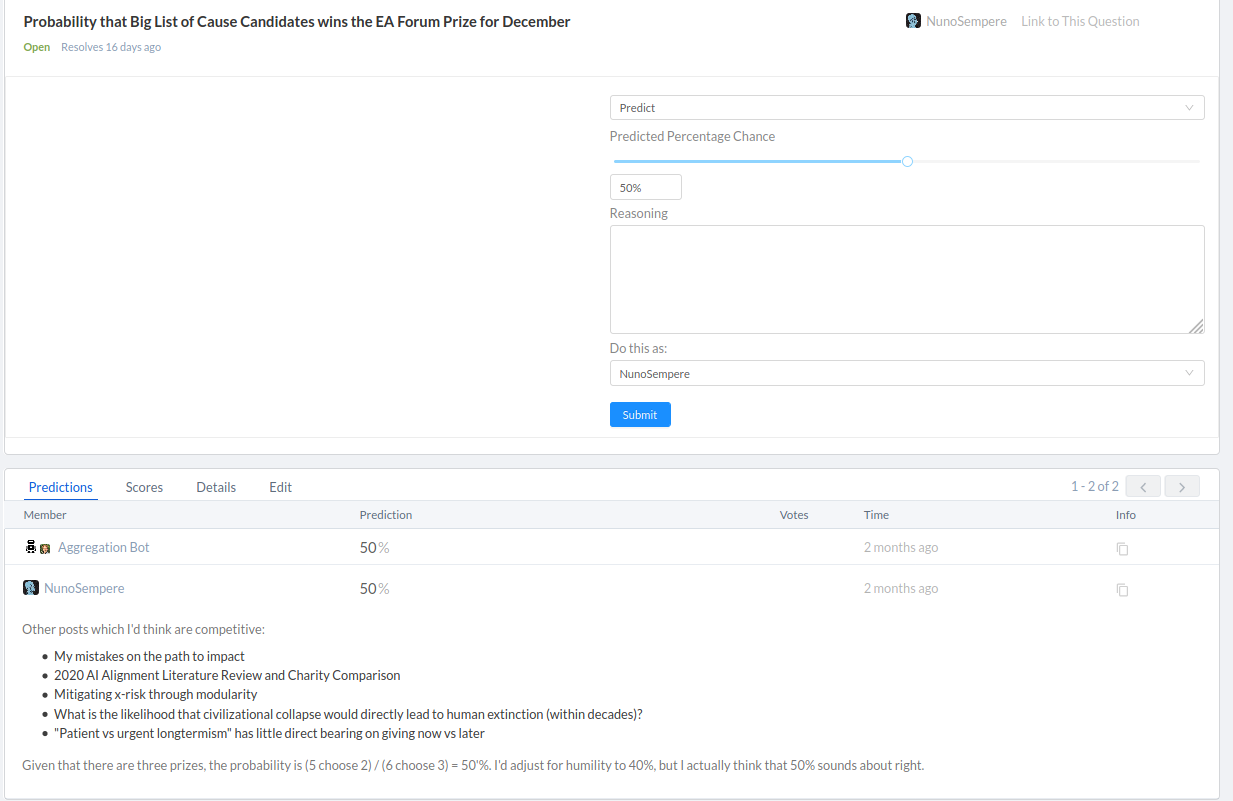

NunoSempere @ 2021-02-16T11:04 (+7)

Nice! One other cool thing about the Big List of Cause Candidates is that people have been coming up with suggestions, and I have been updating the list as they do so.

Incidentally, the Big List of Cause Candidates post was selected as a project by using a very rudimentary forecasting/evaluation system, similar to the ones here and here. If you want to participate in that kind of thing by suggesting, carrying out or evaluating potential projects, you can sign up here.

In particular, as a novelty, I assigned a 50% chance that it would in fact get an EA Forum Prize.

Note that the forecast assumed that I was competing against fewer posts, but also that there would be fewer prizes, so the errors happily cancelled out.

I think that that kind of forecast/comment:

- Makes me look arrogant/not humble/unvirtuous, at least to some people. In particular, I strongly take the stance that the characters in In praise of unhistoric heroism who are ~"contented by sweeping offices instead of chasing the biggest projects they can find" are in fact making a mistake by not asking the question "but what are the most valuable things I could be doing?" (or, by using a forecasting system/setup to explore that question)

- Is still really interesting because I think that forecasting funding decisions might be a workable method to amplify them, which is particularly valuable given that EA might be vetting-constrained. Ideally I (or other forecasters) would get to do that with EA Funds or OP grants, but I thought that the Forum Prize could be a nice beginning.

The other posts I thought were particularly strong are:

- 2020 AI Alignment Literature Review and Charity Comparison. Note that Larks is one of the judges and last year withdrew from the prize.

- What is the likelihood that civilizational collapse would directly lead to human extinction (within decades)? Also by one of the judges now.

- Mitigating x-risk through modularity.

I correctly guessed My mistakes on the path to impact and "Patient vs urgent longtermism" has little direct bearing on giving now vs later.