The winners of the Change Our Mind Contest—and some reflections

By GiveWell @ 2022-12-15T19:33 (+192)

This is a linkpost to https://blog.givewell.org/2022/12/15/change-our-mind-contest-winners

Author: Isabel Arjmand, GiveWell Special Projects Officer

In September, we announced the Change Our Mind Contest for critiques of our cost-effectiveness analyses. Today, we're excited to announce the winners!

We're very grateful that so many people engaged deeply with our work. This contest was GiveWell's most successful effort so far to solicit external criticism from the public, and it wouldn't have been possible without the participation of people who share our goal of allocating funding to cost-effective programs.

Overall, we received 49 entries engaging with our prompts. We were very happy with the quality of entries we received—their authors brought a great deal of thought and expertise to engaging with our cost-effectiveness analyses.

Because we were impressed by the quality of entries, we've decided to award two first-place prizes and eight honorable mentions. (We stated in September that we would give a minimum of one first-place, one runner-up, and one honorable mention prize.) We also awarded $20,000 to the piece of criticism that inspired this contest.

Winners are listed below, followed by our reflections on this contest and responses to the winners.

The prize-winners

Given the overall quality of the entries we received, selecting a set of winners required a lot of deliberation.

We're still determining which critiques to incorporate into our cost-effectiveness analyses and how much they'll change the bottom line. We don't agree with all the critiques in the first-place and honorable mention entries, but each prize-winner raised issues that we believe were worth considering. In several cases, we plan to further investigate the questions raised by these entries.

Within categories, the winners are listed alphabetically by the last name of the author who submitted the entry.

First-place prizes – $20,000 each[1]

- Noah Haber for "GiveWell's Uncertainty Problem." The author argues that without properly accounting for uncertainty, GiveWell is likely to allocate its portfolio of funding suboptimally, and proposes methods for addressing uncertainty.

- Matthew Romer and Paul Romer Present for "An Examination of GiveWell’s Water Quality Intervention Cost-Effectiveness Analysis." The authors suggest several changes to GiveWell's analysis of water chlorination programs, which overall make Dispensers for Safe Water's program appear less cost-effective.

To give a general sense of the magnitude of the changes we currently anticipate, our best guess is that Matthew Romer and Paul Romer Present's entry will change our estimate of the cost-effectiveness of Dispensers for Safe Water by very roughly 5 to 10% and that Noah Haber's entry may lead to an overall shift in how we account for uncertainty (but it's too early to say how it would impact any given intervention). Overall, we currently expect that entries to the contest may shift the allocation of resources between programs but are unlikely to lead to us adding or removing any programs from our list of recommended charities.

Honorable mentions – $5,000 each

- Alex Bates for a critical review of GiveWell's 2022 cost-effectiveness model

- Dr. Samantha Field and Dr. Yannish Naik for "A critique of GiveWell’s CEA model for Conditional Cash Transfers for vaccination in Nigeria (New Incentives)"

- Akash Kulgod for "Cost-effectiveness of iron fortification in India is lower than GiveWell's estimates"

- Sam Nolan, Hannah Rokebrand, and Tanae Rao for "Quantifying Uncertainty in GiveWell Cost-Effectiveness Analyses"

- Isobel Phillips for "Improving GiveWell's modelling of insecticide resistance may change their cost per life saved for AMF by up to 20%"

- Tanae Rao and Ricky Huang for "Hard Problems in GiveWell's Moral Weights Approach"

- Dr. Dylan Walters, Alison Greig, Steve Gilbert, Dr. Mandana Arabi, and Sameen Ahsan for critiques of GiveWell's overall methodology and conceptual approach as well as critiques of the methodology in our vitamin A supplementation analysis

- Trevor Woolley and Ethan Ligon for "GiveWell’s Moral Weights Underweight the Value of Transfers to the Poor"

Participation prizes – $500 each

39 entries, not individually listed here.

All entries that met our criteria will receive participation prizes if they didn't win a larger prize. To meet our requirements, authors had to share a critique that addressed our cost-effectiveness analysis and proposed a change that could make a material difference to our bottom line—this is no small feat, and we really appreciate everyone who took the time to do so!

Prize for inspiring the Change Our Mind Contest – $20,000

Joel McGuire, Samuel Dupret, and Michael Plant for "Deworming and decay: replicating GiveWell’s cost-effectiveness analysis."

In July 2022, these three researchers at the Happier Lives Institute shared a critique of how GiveWell models the long-term benefits of deworming; they argue we should treat those benefits as decaying over time rather than remaining constant. We responded to their critique here. We're in the process of incorporating this critique, and our best guess is that it will lead to a 10% to 30% decrease in our estimate of the cost-effectiveness of deworming, which we roughly estimate would have influenced $2 to $8 million in funding.

Because this work influenced our thinking and played a role in prompting the Change Our Mind Contest, we decided to make a grant of $20,000 to the Happier Lives Institute.
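To make the decay argument concrete, here is a minimal Python sketch comparing the present value of a benefit that stays constant with one that shrinks geometrically each year. The 40-year horizon, 4% discount rate, and 5% annual decay are hypothetical placeholders chosen only for illustration; they are not GiveWell's or the Happier Lives Institute's parameters, and geometric decay is an assumed functional form.

```python
# Illustrative only: parameter values are hypothetical, not GiveWell's or HLI's.


def present_value(annual_benefit, years, discount_rate, decay_rate=0.0):
    """Discounted sum of a yearly benefit stream.

    decay_rate=0.0 models a benefit that stays constant; a positive
    decay_rate shrinks the benefit geometrically each year (an assumed form).
    """
    total = 0.0
    for t in range(years):
        benefit_t = annual_benefit * (1 - decay_rate) ** t
        total += benefit_t / (1 + discount_rate) ** t
    return total


constant = present_value(annual_benefit=1.0, years=40, discount_rate=0.04)
decaying = present_value(annual_benefit=1.0, years=40, discount_rate=0.04, decay_rate=0.05)
print(f"Decaying benefits are worth {decaying / constant:.0%} of constant benefits")
```

Because any decay reduces every year's benefit after the first, the gap between the two totals widens with the horizon and the decay rate; how far the bottom line actually moves depends on parameters this sketch does not try to estimate.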

Logistics for prize-winners

We will be emailing the author who submitted each prize-winning entry, including those that won participation prizes. If you and your co-authors have not received an email by early January, please feel free to reach out to change-our-mind@givewell.org.

Reflections on this contest

There's a robust community of people who are excited to engage with our work.

We received 49 entries that met the contest criteria, all of which represented meaningful engagement with our work. These entries came from a wide range of people—from health economists and from people in entirely unrelated fields, from the global health community and from the effective altruism community, from students and from professionals with years of work experience.

People submitted entries on many different topics. We received at least two entries on each of the six cost-effectiveness analyses we pointed people toward, plus some entries on other programs, and many cross-cutting entries on issues like uncertainty, the discount rate for future benefits, our moral weights, and more.

In order to manage all the suggestions we received, one of our researchers reviewed all 49 entries and created a dashboard for tracking the 100 discrete suggestions we identified. For each of those, we're tracking whether we plan to do additional work to address the suggestion and how high-priority that work is.

We're so glad that people were excited to contribute to our decision-making, and we'll be continuing to look for ways to collaborate with the public to improve our work.

We have room for improvement, particularly on transparency.

We’re proud of being an unusually transparent research organization; transparency is one of our core values. Transparency has two facets: making information publicly available, and also making it easy to understand. We generally succeed at publishing the information that drives our decisions. But we could do more to help people understand why we believe what we believe.

Some entries proposed changes that are actually very similar to what we’re already doing, but where the authors didn’t realize that because of the way our work is presented (e.g., a calculation takes place in a separate spreadsheet, or the name of a parameter doesn’t clearly represent its purpose). In other cases, entries flagged areas where the assumptions underlying our judgments aren't apparent (e.g., in the case of development effects from averted cases of malaria). We appreciate these authors bringing those issues to our attention, and we hope to improve the clarity of our work!

People brought us new ideas—and old ones we hadn’t implemented.

Some entries covered ideas we hadn't considered but found worth pursuing. For example, an entry arguing that iron fortification might be less cost-effective than we think inspired us to dig into the questions it raised about the prevalence of iron deficiency anemia in India. In this case, our current best guess is that our view on iron fortification won't change much, but we believe it's worthwhile for us to consider this issue.

Other entries covered issues we were aware of but hadn't resolved. For example, we've known for a while that a calculation in our cost-effectiveness analysis for the Against Malaria Foundation is both poorly structured and presented in a confusing way. A few entries flagged perceived issues with how we calculate mortality in that analysis, and some authors thought the calculations were mistaken in a way that would have a significant impact on our bottom line (e.g., some entries understandably believed we'd failed to account for indirect deaths from malaria). We don't think any of these entries captured the precise problem with the current calculation, but they homed in on a weak point in our analysis. Several months ago we created a revised version of our internal analysis that fixes the issue, but we haven't yet finalized and published it. We're likely to publish this revision in the next few months. In general, people flagging a known issue can help us prioritize changes.

This contest was worth doing.

We haven't done anything like this before, and we weren't really sure what to expect. We saw this as an opportunity to lean into our values, particularly transparency and truth-seeking, in service of helping people as much as we can with our funding decisions. The contest succeeded in that goal; we identified improvements we can make to our cost-effectiveness analyses in terms of both accuracy and clarity. And beyond that, this contest established that there are people who care deeply about our work and want to help us improve it. To everyone who participated—thank you!

Appendix: Discussion of winning entries

In this section, we share our initial thoughts on the two first-place entries.

Noah Haber on uncertainty

Several entries focused on how GiveWell could improve its approach to uncertainty. This entry stood out for its clear demonstration of how failing to account for uncertainty can lead to suboptimal allocations, even in a risk-neutral framework.

In brief, this entry argues that when prioritizing by estimated expected value, one will sometimes select more uncertain programs whose true values are lower over less uncertain programs whose true values are higher. This "optimizer's curse" or "winner's curse" can create a portfolio that is systematically less valuable than it could be if uncertainty were properly accounted for. This issue has been raised before, but we haven't ever fully addressed it.[2]
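The mechanism can be seen in a small simulation. The Python sketch below is not the method from Noah Haber's entry; it is an assumed toy setup with two hypothetical programs whose estimates are unbiased but differ in noise. Funding whichever program has the higher estimate (a risk-neutral rule) sometimes picks the program with the lower true value, and the winning estimate is, on average, too optimistic.

```python
import random

# Two hypothetical programs; all numbers are illustrative assumptions.
TRUE_A, NOISE_A = 1.0, 0.1  # higher true value, precisely estimated
TRUE_B, NOISE_B = 0.9, 0.5  # lower true value, noisily estimated


def simulate_selection(n_trials=10_000, seed=0):
    """Fund whichever program has the higher noisy estimate, many times over.

    Returns the share of trials that fund the lower-value program B, the
    average true value actually funded, and the average amount by which the
    winning estimate overstates that program's true value.
    """
    rng = random.Random(seed)
    picks_b = 0
    realized = 0.0
    overstatement = 0.0
    for _ in range(n_trials):
        est_a = rng.gauss(TRUE_A, NOISE_A)  # unbiased estimate of A
        est_b = rng.gauss(TRUE_B, NOISE_B)  # unbiased estimate of B
        if est_b > est_a:
            picks_b += 1
            realized += TRUE_B
            overstatement += est_b - TRUE_B
        else:
            realized += TRUE_A
            overstatement += est_a - TRUE_A
    return picks_b / n_trials, realized / n_trials, overstatement / n_trials


share_b, avg_value, bias = simulate_selection()
print(f"Trials funding the lower-value program: {share_b:.0%}")
print(f"Average true value funded: {avg_value:.3f} (best possible: {TRUE_A})")
print(f"Average overstatement of the chosen program: {bias:.3f}")
```

Each estimate is individually unbiased, but selecting the maximum is not: the noisier program wins disproportionately often when its estimate happens to be inflated. That is why the problem arises even without any risk aversion.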

We'd like to consider the issues presented in this post and other recent criticisms of our approach to uncertainty in more depth. In the meantime, we'll share some initial thoughts:

- We really appreciated that this piece drew a clear link between incorporating uncertainty and the ranking of programs. It shows that if we don’t account for uncertainty explicitly, we may be allocating too much funding to more uncertain programs, which lowers the value of our overall funding allocation.

- Currently, we make ad hoc adjustments for uncertainty, such as our strict internal validity adjustment for deworming. However, we haven’t adopted any rules for penalizing more uncertain programs, either quantitatively or qualitatively. This entry updates us toward believing we should consider a more systematic approach.

- We’re not sure if conducting the full uncertainty modeling recommended by the entry is the right approach for GiveWell, and we’d like to explore alternative approaches to addressing this issue.

- The entry argues the best approach would be to model uncertainty using a probabilistic sensitivity analysis (PSA). This would involve selecting and parameterizing probability distributions for key parameters; running repeat simulations to obtain a distribution of potential outcomes; and using this distribution as the basis for decision-making.

- We’re not sure if this is the right approach because we think there could be some important drawbacks. It could make our models less accessible to outside readers, make it difficult to compare across models if we’re unsure whether uncertainty is being accounted for consistently across programs, and make it harder to understand intuitively what’s driving our bottom line on which programs are more cost-effective. We would want to weigh those downsides against the benefits of PSA.

- On the other hand, if we find that this problem leads to a sufficiently large impact on the value of our allocations, it might be worth the costs of a more complicated modeling approach. We’d like to do more work to explore how large an impact this problem has on our portfolio.
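The first two PSA steps described in the entry (parameterizing distributions for key inputs and running repeat simulations) can be sketched in Python. The three-parameter model, its distributions, and every number are hypothetical stand-ins, not taken from any GiveWell analysis; a real PSA would propagate uncertainty through the full cost-effectiveness model.

```python
import math
import random
import statistics

# A toy cost-effectiveness model; every input and distribution is hypothetical.


def deaths_averted_per_1000_usd(cost_per_person, baseline_mortality, risk_reduction):
    """Deaths averted per $1,000 = people reached x baseline risk x relative reduction."""
    people_reached = 1000 / cost_per_person
    return people_reached * baseline_mortality * risk_reduction


def run_psa(n_draws=20_000, seed=1):
    """Draw each uncertain parameter from its distribution and rerun the model."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_draws):
        cost = rng.lognormvariate(math.log(5.0), 0.2)  # USD per person reached
        mortality = rng.uniform(0.005, 0.015)  # baseline risk of death
        reduction = min(max(rng.gauss(0.15, 0.05), 0.0), 1.0)  # clipped to [0, 1]
        results.append(deaths_averted_per_1000_usd(cost, mortality, reduction))
    return results


results = run_psa()
cuts = statistics.quantiles(results, n=20)  # 19 cut points: 5th ... 95th percentile
print(f"Mean: {statistics.fmean(results):.3f} deaths averted per $1,000")
print(f"90% interval: {cuts[0]:.3f} to {cuts[-1]:.3f}")
```

Instead of a single point estimate, the output is a distribution. Comparing such distributions across programs is where the third step, decision-making, would come in, and it is also where the accessibility and comparability concerns noted above would come into play.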

Overall, we think handling uncertainty is an important issue, and we appreciate the nudge to consider it more deeply!

Matthew Romer and Paul Romer Present on water

This entry clearly presented a series of plausible changes to how we estimate the cost-effectiveness of water quality interventions, specifically Dispensers for Safe Water (DSW) and in-line chlorination (ILC). We believe the authors understand our analysis well and were able to identify some weak points in our cost-effectiveness analysis; we expect to make some but not all of the changes they propose.

To briefly summarize in our own words, this entry suggests that GiveWell should:

- Include Haushofer et al. (2021) in its meta-analysis on the effect of water chlorination on all-cause mortality.

- Use a formal Bayesian approach rather than a "plausibility cap" to estimate the effect of water chlorination on all-cause mortality.

- Revise our estimate for the age distribution of deaths averted by water chlorination, as well as for the medical costs averted.

- Discount both future costs and future benefits to account for changes over time.

- Revise the cost estimates for ILC.

- Review a calculation in our leverage and funging adjustment that they believe may contain an error.

We share some initial thoughts here, noting that we're still in the process of deciding whether and how to incorporate these suggestions:

- Including Haushofer et al.: The choice to exclude Haushofer et al. was difficult, but we're currently comfortable with our decision to exclude it. See more on this page, including in footnote 39. For our practical decision-making (versus a context like Cochrane meta-analyses where stricter decision rules might be needed), we think it makes sense to exclude it given (a) the fact that we find the implied intervention effect implausible across the 95% confidence interval; (b) the divergence of these results from the other strongest pieces of evidence we have; and (c) the large effect that including it would have on the pooled result.

- Using a formal Bayesian approach: We're planning to consider this in more depth. Estimating effect sizes is difficult in cases where the point estimates from available evidence seem implausibly high to us. As the authors note, we've used a Bayesian approach in some of our other analyses (e.g., deworming), and it might be reasonable to use here. The plausibility cap we're currently using seems like one reasonable approach in this context, but we haven't fully explored other approaches. If we don't move to a Bayesian approach, we may still make other changes inspired by this point.

- Revising the ages of deaths averted and medical costs averted:

  - On the ages of deaths averted: We think it's reasonable to use the age structure of direct deaths from enteric infections for indirect deaths as well because those deaths are still linked to enteric infections, based on the idea that enteric infections increase the risk of other diseases.

  - On medical costs averted: Our published cost-effectiveness analysis uses an outdated method to estimate medical costs averted by water quality interventions, and we're now internally using a method that we believe is better aligned with our analyses for interventions like those conducted by our top charities.

- Discounting future benefits and costs: We hadn't realized that we're treating future deaths differently in the New Incentives analysis—thank you for flagging that. For grants where the benefits occur more than a few years in the future (like this Dispensers for Safe Water grant), we generally want to account for both changing disease burdens (in this case, a decline in deaths from diarrhea) and general uncertainty over time, and we didn't do that in this case. We're less sure that we'd want to discount costs (versus benefits) in the future, given (a) consistency with our other analyses and (b) the fact that from our perspective, the costs are "spent" when we decide to make a grant.

- Revising costs of ILC: It's true that we're using very rough cost figures for ILC in our published analysis. We expect to learn more over time and incorporate that in future analyses we publish.

- Reviewing funging calculation: This seems like a likely error (that makes a small difference to the bottom line). We'll correct it if upon further review we confirm that it's an error!
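On the Bayesian point, one common textbook version is a conjugate normal-normal update, in which the posterior is a precision-weighted average of a prior and the study estimate. The Python sketch below contrasts that with a simple cap. It is not GiveWell's model or the authors' proposed specification; the prior, the estimate, its standard error, and the cap are all hypothetical numbers.

```python
import math

# Hypothetical inputs: proportional reduction in all-cause mortality.
PRIOR_MEAN, PRIOR_SD = 0.05, 0.05  # assumed skeptical prior
ESTIMATE, STANDARD_ERROR = 0.25, 0.08  # assumed pooled study estimate
CAP = 0.10  # assumed plausibility cap


def normal_posterior(prior_mean, prior_sd, estimate, standard_error):
    """Conjugate normal-normal update: weight prior and data by their precisions."""
    prior_precision = 1 / prior_sd ** 2
    data_precision = 1 / standard_error ** 2
    posterior_var = 1 / (prior_precision + data_precision)
    posterior_mean = posterior_var * (prior_precision * prior_mean + data_precision * estimate)
    return posterior_mean, math.sqrt(posterior_var)


def plausibility_cap(estimate, cap):
    """Use the estimate unless it exceeds the cap, regardless of its precision."""
    return min(estimate, cap)


post_mean, post_sd = normal_posterior(PRIOR_MEAN, PRIOR_SD, ESTIMATE, STANDARD_ERROR)
print(f"Bayesian posterior: {post_mean:.3f} (sd {post_sd:.3f})")
print(f"Capped estimate:    {plausibility_cap(ESTIMATE, CAP):.3f}")
```

With these made-up inputs the two approaches land close together, but they behave differently: the posterior moves further toward the study estimate as its standard error shrinks and pulls even modest estimates toward the prior, while the cap ignores precision and leaves any estimate below the cap untouched.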

Our best guess overall is that after more thoroughly reviewing these suggestions, we'll revise our water quality cost-effectiveness analysis and our estimate of the cost-effectiveness of Dispensers for Safe Water will change by very roughly 5 to 10%.

Notes

[1] Both of these entries were outstanding, and they represent very different approaches. Because they are similarly excellent, we are naming two winners rather than one winner and one runner-up.

[2] For example, a former GiveWell researcher wrote this post, which makes a different argument from Noah Haber's piece but addresses a similar problem.

NickLaing @ 2022-12-15T19:49 (+20)

Thanks so much for this. I'm not sure I've heard of another organisation paying people to criticise it in a constructive way. This kind of thing should be positive media for effective altruism, but of course it won't get out there ;).

And most wholesome of all, giving $20,000 to one of your biggest critics and even competitors. Amazing!

Kevin Lacker @ 2022-12-15T21:49 (+12)

I'm glad this contest happened, but I was hoping to see some deeper reflection. To me it seems like the most concerning criticisms of the GiveWell approach are criticisms along more fundamental lines, such as:

- It's more effective to focus on economic growth than one-off improvements to public health. In the long run no country has improved public health problems via a charitable model, but many have done it through economic growth.

- NGOs in unstable or war-torn countries are enabling bad political actors. By funding public health in a dictatorship you are indirectly funding the dictator, who can reduce their own public health spending to counteract any benefit of NGO spending.

This might be too much to expect from any self-reflection exercise, though.

Lorenzo Buonanno @ 2022-12-15T22:28 (+9)

You might be interested in the response to a similar comment here

Jason @ 2022-12-15T23:26 (+8)

#2 would have been largely in scope, I think. GiveWell's analyses usually include an adjustment for estimated crowding out of other public health expenditures.

#1 is more a cause-prioritization argument in my book. The basic contours of that argument are fairly clear and well-known; the added value would be in identifying a specific program that can be implemented within the money available to EA orgs for global health/development and running a convincing cost-benefit analysis on it. That's a much harder thing to model than GiveWell-type interventions and would need a lot more incentive/resources than $20K.

NickLaing @ 2022-12-16T05:56 (+3)

Hey Kevin, I do like those points, and I think number 2 especially is worth a lot of consideration, not only in unstable and war-torn countries but also in stable, stagnant countries like Uganda, where I work. Jason's answer is also excellent.

Agree that number 2 is a very awkward situation, and working in health in a low-income country myself, I ask myself this all the time. The worst-case scenario in terms of propping up a dictator, though, I think is funding them directly, which a LOT of government-to-government aid does. Fortunately, the Against Malaria Foundation doesn't give a high proportion of its money to evil dictatorships, but it does give some. The same goes for Deworm the World. I think there should be some kind of small negative adjustment (even if token) from GiveWell on this front.

I find the "economic growth" argument a tricky one, as my big issue here is "tractability." I'm not sure we know at all well how to actually stimulate economic growth consistently. There are a whole lot of theories, but the solid empirical research base is very poor. I'd be VERY happy to fund economic growth if I had a moderate degree of certainty that the intervention would work.

Kevin Lacker @ 2022-12-16T17:46 (+2)

"against malaria foundation don't give a high proportion of money to evil dictatorships but they do give some. Same goes for deworm the world."

I was wondering about this, because I was reading a book about the DRC - Dancing in the Glory of Monsters - which was broadly opposed to NGO activity in the country as propping up the regime. And I was trying to figure out how to square this criticism with the messages from the NGOs themselves. I am not really sure, though, because the pro-NGO side of the debate (like EA) and the anti-NGO side of the debate (like that book) seem to mostly be ignoring each other.

"I think there should be some kind of small negative adjustment (even if token) from GiveWell on this front."

Yeah, I don't even know if it's the sort of thing that you can adjust for. It's kind of unmeasurable, right? Or maybe you can measure something like, the net QALYs of a particular country being a dictatorship instead of a democracy, and make an argument that supporting a dictator is less bad than the particular public health intervention is good.

I would at least like to see people from the EA NGO world engage with this line of criticism, from people who are concerned that "the NGO system in poor countries, overall, is doing more unmeasurable harm than measurable good".

Kirsten @ 2022-12-16T18:21 (+4)

The argument I've found most persuasive is "it's easier to fight back against an unjust government if you're healthy/have more money".

Jack_S @ 2022-12-17T12:49 (+1)

I definitely don't think it's too much to expect from a self-reflection exercise, and I'm sure they've considered these issues.

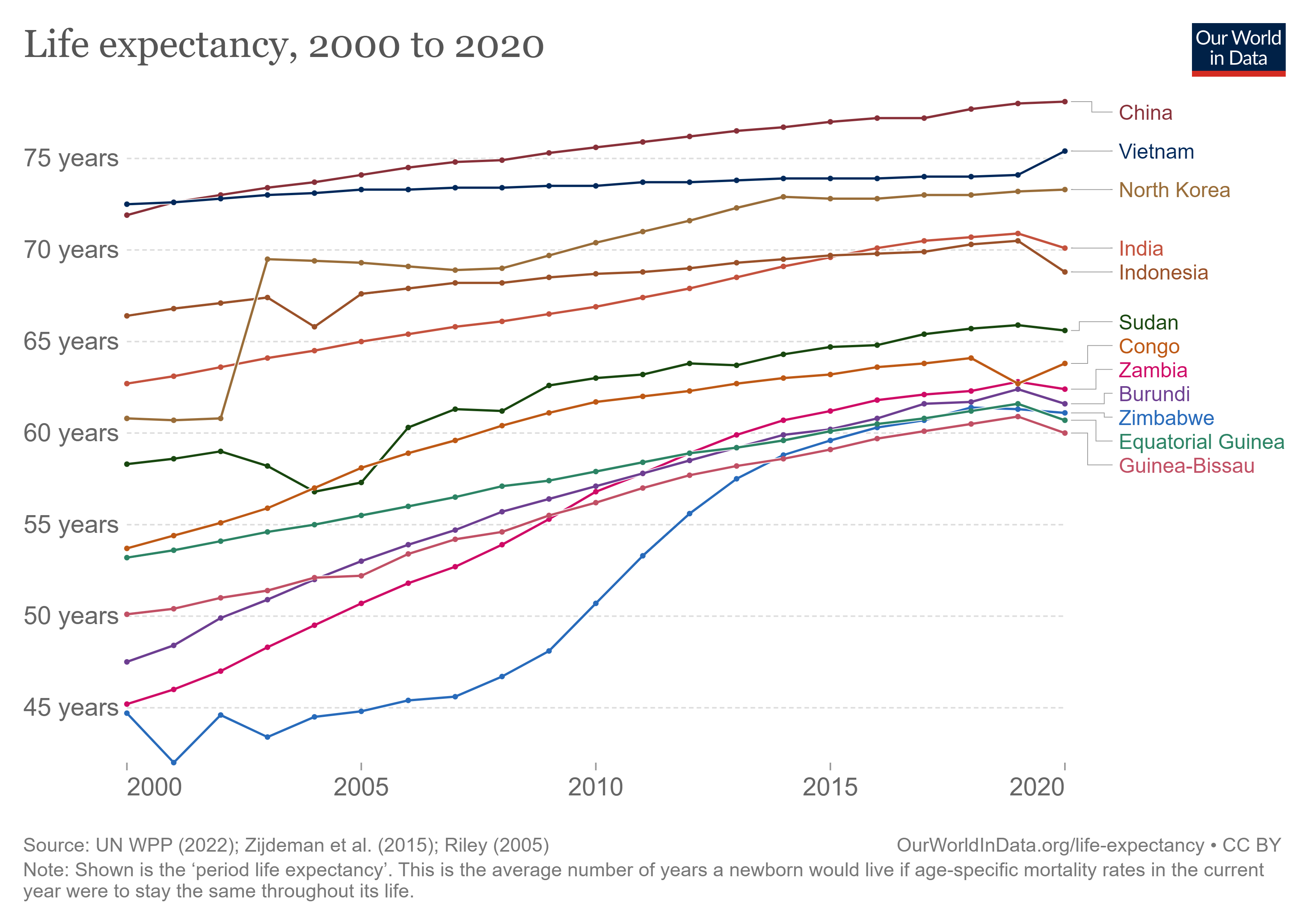

For no. 1, I wouldn't actually credit growth so much. Most of the rapid increases in life expectancy in poor countries over the last century have come from factors not directly related to economic growth (edit: growth in the countries themselves), including state capacity, access to new technology (vaccines), and support from international orgs/NGOs. China pre- and post-1978 seems like one clear example here: the most significant health improvements came before economic growth. Can you identify the 'growth miracles' vs. countries that barely grew over the last 20 years in the graph?

I'd also say that reliably improving growth (or state capacity) is considerably more difficult than reliably providing a limited slice of healthcare. Even if GiveWell had a more reliable theory of change for charitably funded growth interventions, they probably wouldn't attract donations: donating to lobby African governments to remove tariffs doesn't sound like an easy sell, even for an EA-aligned donor.

For 2, I think you're making two points- supporting dictators and crowding out domestic spending.

On the dictator front, there is a trade-off, but there are a few factors:

- I'm very confident that countries with very weak state capacity (Eritrea?) would not be providing noticeably better health care if there were fewer NGOs.

- NGOs probably provide some minor legitimacy to dictators, but I doubt any of these regimes would be threatened by their departure, even if all NGOs simultaneously left (which isn't going to happen). So the marginal negative impact of increased legitimacy from a single NGO must be very small.

On the 'crowding out' front, I don't have a good sense of the data, but I'd suspect that the issue might be worse in non-dictatorships: countries or regions that are easier or more desirable for Western NGOs to set up shop in, but where local authorities might provide semi-decent care in the absence of NGOs. This article illustrates some of the problems in rural Kenya and Uganda (where I think there's a particularly high ratio of NGOs to local people).

I suspect GiveWell's response to this is that the GiveWell-supported charities target a very specific health problem. They may sometimes try to work with local healthcare providers to make both actors more effective, but if they don't, the interventions should be so much more effective per marginal dollar than domestic healthcare spending that any crowding effect is more than canceled out. Many crowding problems are more macro than micro (affecting national policy), so the marginal impact of a new effective NGO on, say, a decision whether or not to increase healthcare spending is probably minimal. When you've got major donors (UN, Gates) spending billions in your country, AMF spending a few extra million is unlikely to have a major effect. But I'm open to arguments here.

DMMF @ 2022-12-15T21:47 (+4)

Very curious to learn if first-prize-winning Paul Romer is Nobel Prize-winning economist Paul Romer.

MHR @ 2022-12-15T23:01 (+10)

No relation!