Existential risk pessimism and the time of perils

By David Thorstad @ 2022-08-12T14:42

Note: This post is adapted from a GPI working paper. We like hearing what you think about our work, and we hope that you like hearing from us too!

1 Introduction

Many EAs endorse two claims about existential risk. First, existential risk is currently high:

(Existential Risk Pessimism) Per-century existential risk is very high.

For example, Toby Ord (2020) puts the risk of existential catastrophe by 2100 at 1/6, and participants at the Oxford Global Catastrophic Risk Conference in 2008 estimated a median 19% chance of human extinction by 2100 (Sandberg and Bostrom 2008). Let’s ballpark Pessimism using a 20% estimate of per-century risk.

Second, many EAs think that it is very important to mitigate existential risk:

(Astronomical Value Thesis) Efforts to mitigate existential risk have astronomically high expected value.

You might think that Existential Risk Pessimism supports the Astronomical Value Thesis. After all, it is usually more important to mitigate large risks than to mitigate small risks.

In this post, I extend a series of models due to Toby Ord and Tom Adamczewski to do five things:

- I show that across a range of assumptions, Existential Risk Pessimism tends to hamper, not support, the Astronomical Value Thesis.

- I argue that the most plausible way to combine Existential Risk Pessimism with the Astronomical Value Thesis is through the Time of Perils Hypothesis.

- I clarify two features that the Time of Perils Hypothesis must have if it is going to vindicate the Astronomical Value Thesis.

- I suggest that arguments for the Time of Perils Hypothesis which do not appeal to AI are not strong enough to ground the relevant kind of Time of Perils Hypothesis.

- I draw implications for existential risk mitigation as a cause area.

Proofs are in the appendix if that’s your jam.

2 The Simple Model

Let’s start with a Simple Model of existential risk mitigation due to Toby Ord and Tom Adamczewski. On this model, it will turn out that Existential Risk Pessimism has no bearing on the Astronomical Value Thesis, and also that the Astronomical Value Thesis is false.

Many of the assumptions made by this model are patently untrue. The question I ask in the rest of the post will be which assumptions a Pessimist should challenge in order to bear out the Astronomical Value Thesis. We will see that recovering the Astronomical Value Thesis by changing model assumptions is harder than it appears.

The Simple Model makes three assumptions.

- Constant value: Each century of human existence has some constant value $v$.

- Constant risk: Humans face a constant level of per-century existential risk $r$.

- All risk is extinction risk: All existential risks are risks of human extinction, so that no value will be realized after an existential catastrophe.

Under these assumptions, we can evaluate the expected value $V[W]$ of the current world $W$, incorporating possible future continuations, as follows:

(Simple Model)

$$V[W] = \sum_{i=1}^{\infty} v(1-r)^i = \frac{v(1-r)}{r}$$

On this model, the value of our world today depends on the value $v$ of a century of human existence as well as the risk $r$ of existential catastrophe. Setting $r$ at a pessimistic 0.2 values the world at a mere four times the value of a century of human life ($V[W] = 4v$), whereas an optimistic risk of $r = 0.001$ values the world at the value of nearly a thousand centuries ($V[W] = 999v$).
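These figures are easy to check numerically. The Python sketch below is a minimal illustration that assumes $v = 1$ and uses hypothetical helper names. It compares a long truncation of the (Simple Model) sum with the closed form $v(1-r)/r$. Both should come out at 4 for $r = 0.2$ and at 999 for $r = 0.001$.

```python
def world_value_sum(r: float, v: float = 1.0, centuries: int = 200_000) -> float:
    """Truncated Simple Model sum: v * (1 - r)**i for i = 1..centuries."""
    total, survival = 0.0, 1.0
    for _ in range(centuries):
        survival *= 1 - r  # chance of surviving one more century
        total += v * survival
    return total


def world_value_closed(r: float, v: float = 1.0) -> float:
    """Closed form of the Simple Model: v * (1 - r) / r."""
    return v * (1 - r) / r


for r in (0.2, 0.001):
    print(f"r={r}: sum={world_value_sum(r):.3f}, closed form={world_value_closed(r):.3f}")
```

The truncation at 200,000 centuries is an assumption. It is harmless here because $(1-0.001)^{200000}$ is vanishingly small.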

But we're not interested in the value of the world. We care about the value of existential risk mitigation. Suppose you can act to reduce existential risk in your own century. More concretely, you can take some action $X$ which will reduce risk this century by some fraction $f$, from $r$ to $(1-f)r$. How good is this action?

On the Simple Model, it turns out that $V[X] = fv$. This result is surprising for two reasons.

- Pessimism is irrelevant: The value of existential risk mitigation is entirely independent of the starting level of risk $r$.

- Astronomical value begone!: The value of existential risk mitigation is capped at the value of the current century. Nothing to sneeze at, but hardly astronomical.
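The first point can be seen directly in a minimal sketch, again with hypothetical helper names and $v = 1$. Performing $X$ means surviving this century with probability $1-(1-f)r$ and collecting $v$. After that, you face an unchanged future worth $V[W] = v(1-r)/r$. Subtracting $V[W]$ leaves $fv$, whatever the starting risk $r$.

```python
def simple_world_value(r: float, v: float = 1.0) -> float:
    """Simple Model value of the world, v * (1 - r) / r."""
    return v * (1 - r) / r


def relative_reduction_value(r: float, f: float, v: float = 1.0) -> float:
    """V[X] for cutting this century's risk from r to (1 - f) * r."""
    baseline = simple_world_value(r, v)
    # Survive this century at the reduced risk, collect v, then face the
    # unchanged future, which is worth V[W] from the next century on.
    with_x = (1 - (1 - f) * r) * (v + baseline)
    return with_x - baseline


for r in (0.2, 0.01, 0.001):
    print(r, round(relative_reduction_value(r, f=0.1), 6))
```

Each printed value should be 0.1, which is $fv$ with $f = 0.1$.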

That’s not good for the Pessimist. She’d like to do better by challenging assumptions of the model. Which assumptions would she have to challenge in order to square Pessimism with the Astronomical Value Thesis?

3 Modifying the Simple Model

In this section, I extend an analysis by Toby and Tom to consider four ways we might change the Simple Model. I argue that the last, adopting a Time of Perils Hypothesis, is the most viable. I also place constraints on the versions of the Time of Perils Hypothesis that are strong enough to do the trick.

3.1 Absolute versus relative risk reduction

In working through the Simple Model, we considered the value of reducing existential risk by some fraction of its original amount. But this might seem like comparing apples to oranges. Reducing existential risk from 20% to 10% may be harder than reducing existential risk from 2% to 1%, even though both involve reducing existential risk to half of its original amount. Wouldn’t it be more realistic to compare the value of reducing existential risk from 20% to 19% with the value of reducing risk from 2% to 1%?

More formally, we were concerned about relative reduction of existential risk from its original level $r$ by the fraction $f$, to $(1-f)r$. Instead, the objection goes, we should have been concerned with the value of absolute risk reduction from $r$ to $r - f$. Will this change help the Pessimist?

It will not! On the Simple Model, the value of absolute risk reduction is $V[X] = fv/r$. Now we have:

- Pessimism is harmful: The value of existential risk mitigation varies inversely with the starting level of existential risk. If you are 100 times as pessimistic as I am, you should be 100 times less enthusiastic than I am about absolute risk reduction of any fixed magnitude.

- Astronomical value is still gone: Since an absolute reduction cannot exceed the starting risk ($f \leq r$), the value of existential risk mitigation remains capped at the value $v$ of the current century.
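A sketch in the same style shows both effects for absolute reduction. It uses a hypothetical helper name and $v = 1$. An optimist at $r = 0.002$ gets 100 times as much value from the same cut $f = 0.001$ as a pessimist at $r = 0.2$. The value reaches its ceiling $v$ only when $f = r$, that is, when this century's risk is eliminated entirely.

```python
def absolute_reduction_value(r: float, f: float, v: float = 1.0) -> float:
    """V[X] for cutting this century's risk from r to r - f (requires 0 <= f <= r)."""
    if not 0 <= f <= r:
        raise ValueError("an absolute reduction must satisfy 0 <= f <= r")
    baseline = v * (1 - r) / r  # Simple Model value V[W]
    # Survive this century at risk r - f, collect v, then face the unchanged future.
    with_x = (1 - (r - f)) * (v + baseline)
    return with_x - baseline


optimist = absolute_reduction_value(r=0.002, f=0.001)
pessimist = absolute_reduction_value(r=0.2, f=0.001)  # 100 times as pessimistic
print(optimist, pessimist, optimist / pessimist)
print(absolute_reduction_value(r=0.2, f=0.2))  # the cap: eliminating all risk is worth v
```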

That didn’t help. What might help the Pessimist?

3.2 Value growth

The Simple Model assumed that each additional century of human existence has some constant value $v$. That’s bonkers. If we don’t mess things up, future centuries may be better than the current century. These centuries may support higher populations, with longer lifespans and higher levels of welfare. What happens if we modify the Simple Model to build in value growth?

Value growth will certainly boost the value of existential risk mitigation. But it turns out that value growth alone is not enough to square Existential Risk Pessimism with the Astronomical Value Thesis. We’ll also see that the more value growth we assume, the more antagonistic Pessimism becomes to the Astronomical Value Thesis.

Let $v$ be the value of the present century. Toby and Tom consider a model on which value grows linearly over time, so that the value of the $N$th century from now will be $N$ times as great as the value of the present century, if we live to reach it.

(Linear Growth)

$$V[W] = \sum_{i=1}^{\infty} (1-r)^i \, iv = \frac{v(1-r)}{r^2}$$

On this model, the value of reducing existential risk by some (relative) fraction $f$ is $V[X] = fv/r$. Perhaps you think Toby and Tom weren’t generous enough. Fine! Let’s square it. Consider quadratic value growth, so that the $N$th century from now is $N^2$ times as good as this one.

(Quadratic Growth)

$$V[W] = \sum_{i=1}^{\infty} (1-r)^i \, i^2 v = \frac{v(1-r)(2-r)}{r^3}$$

On this model, the value of reducing existential risk by $f$ is $V[X] = fv(2-r)/r^2$. How do these models behave?
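The two closed forms for $V[X]$ can be cross-checked against brute-force truncations of the series. This minimal sketch uses hypothetical helper names and sets $v = 1$. The intervention changes only the probability of surviving the first century. Each series is cut off after 20,000 centuries. That cut-off is an assumption: it is harmless for the risk levels tried here ($r \geq 0.01$) but would not be for much lower risks.

```python
def growth_world_value(r, f=0.0, power=1, v=1.0, centuries=20_000):
    """Truncated value of a world whose i-th century is worth v * i**power.

    f is a relative cut to this century's risk only (f = 0 means no intervention).
    """
    total = 0.0
    survival = 1 - (1 - f) * r  # probability of surviving the first century
    for i in range(1, centuries + 1):
        total += survival * v * i**power
        survival *= 1 - r  # later centuries keep the baseline risk r
    return total


def brute_force_value(r, f, power):
    """V[X] = V[W_X] - V[W], computed from the truncated sums."""
    return growth_world_value(r, f, power) - growth_world_value(r, 0.0, power)


def linear_value(r, f, v=1.0):
    return f * v / r  # closed form under linear growth


def quadratic_value(r, f, v=1.0):
    return f * v * (2 - r) / r**2  # closed form under quadratic growth


for r in (0.2, 0.1, 0.01):
    print(r, brute_force_value(r, 0.1, 1), linear_value(r, 0.1),
          brute_force_value(r, 0.1, 2), quadratic_value(r, 0.1))
```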

To see the problem, consider the value of a 10% (relative) risk reduction in this century (Table 1).

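Table 1: Value of 10% relative risk reduction across growth models and risk levels (in multiples of $v$)

| Growth model | $r = 0.2$ | $r = 0.1$ | $r = 0.01$ | $r = 0.001$ |
|---|---|---|---|---|
| Constant ($fv$) | 0.1 | 0.1 | 0.1 | 0.1 |
| Linear ($fv/r$) | 0.5 | 1 | 10 | 100 |
| Quadratic ($fv(2-r)/r^2$) | 4.5 | 19 | 1,990 | 199,900 |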

This table reveals two things:

- Pessimism is (very) harmful: The value of existential risk mitigation varies inversely with the starting level of existential risk (linear growth), or roughly with the inverse of its square (quadratic growth). This means that Existential Risk Pessimism emerges as a major antagonist to the Astronomical Value Thesis. If you are 100 times as pessimistic as I am about existential risk, on the quadratic model you should be roughly 10,000 times less enthusiastic about existential risk reduction!

- Astronomical Value Thesis is false given Pessimism: On some growth models (e.g. quadratic growth) we can get astronomically high values for existential risk reduction. But we have to be less pessimistic to do it. If you think per-century risk is at 20%, existential risk reduction doesn’t provide more than a few times the value of the present century.
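The closed forms make it easy to tabulate a 10% relative cut ($f = 0.1$) across growth models and risk levels. They also let us check the "100 times as pessimistic" comparison. This sketch assumes $v = 1$ and compares $r = 0.1$ with $r = 0.001$. The helper names are hypothetical.

```python
def constant_value(r, f, v=1.0):
    return f * v  # Simple Model: independent of r


def linear_value(r, f, v=1.0):
    return f * v / r  # linear value growth


def quadratic_value(r, f, v=1.0):
    return f * v * (2 - r) / r**2  # quadratic value growth


models = {"constant": constant_value, "linear": linear_value, "quadratic": quadratic_value}
risks = (0.2, 0.1, 0.01, 0.001)
for name, model in models.items():
    print(name, [round(model(r, 0.1), 1) for r in risks])

# How much less valuable is the same 10% cut for someone 100 times as pessimistic?
for name, model in models.items():
    print(name, round(model(0.001, 0.1) / model(0.1, 0.1)))
```

On these numbers, the linear ratio is exactly 100. The quadratic ratio is a little above 10,000 because of the $(2-r)$ factor.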

Now it looks increasingly likely that Existential Risk Pessimism, far from supporting the Astronomical Value Thesis, could well scuttle it. Let’s consider one more change to the Simple Model, just to make sure we’ve correctly diagnosed the problem.

3.3 Global risk reduction

The Simple Model assumes that we can only affect existential risk in our own century. This may seem implausible. Our actions affect the future in many ways. Why couldn’t our actions reduce future risks as well?

Now it’s not implausible to assume our actions could have measurable effects on risk in nearby centuries. But this will not be enough to save the Pessimist. On the Simple Model, cutting risk over the next $N$ centuries all the way to zero gives only $N$ times the value of the present century. To salvage the Astronomical Value Thesis, we would need to imagine that our actions today can significantly alter levels of existential risk across very distant centuries. That is less plausible. Are we to imagine that institutions founded to combat risk today will stand or spawn descendants millions of years hence?
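Here is a brute-force check of the $N$-century claim, as a minimal sketch with a hypothetical helper name and $v = 1$. Risk is set to zero for the first $N$ centuries and returns to $r$ afterwards. The gain over the Simple Model baseline is exactly $N$ centuries' worth of value, because the future after the safe stretch is the same as before.

```python
def value_with_safe_centuries(r, n_safe, v=1.0, horizon=10_000):
    """Simple Model value when risk is zero for the first n_safe centuries."""
    total, survival = 0.0, 1.0
    for i in range(1, horizon + 1):
        if i > n_safe:
            survival *= 1 - r  # ordinary risk resumes after the safe stretch
        total += v * survival
    return total


baseline = value_with_safe_centuries(0.2, n_safe=0)
for n in (1, 10, 100):
    print(n, round(value_with_safe_centuries(0.2, n_safe=n) - baseline, 6))
```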

More surprisingly, even if we assume that actions today can significantly lower existential risk across all future centuries, this assumption may still not be enough to ground an astronomical value for existential risk mitigation. Consider an action $X$ which reduces per-century risk by the fraction $f$ in all centuries, from $r$ to $(1-f)r$ each century. On the Simple Model, the value of $X$ is then $V[X] = \frac{fv}{(1-f)r}$. What does this imply?

First, the good news. In principle the value of existential risk reduction is unbounded in the fraction $f$ by which risk is reduced. No matter how small the value $v$ of a century of life, and no matter how high the starting risk $r$, a 100% reduction of risk across all centuries carries infinite value, and more generally we can drive value as high as we like if we reduce risk by a large enough fraction.

Now, the bad news.

- The Astronomical Value Thesis is still probably false: Even though the value of existential risk reduction is in principle unbounded, in practice it is unlikely to be astronomical. To illustrate, setting risk to a pessimistic 20% values a 10% reduction in existential risk across all centuries at once at a modest $\frac{0.1v}{0.9 \times 0.2} \approx 0.56v$. Even a 90% reduction across all centuries at once is worth only 45 times as much as the present century.

- Pessimism is still a problem: At the risk of beating a dead horse, the value of existential risk reduction varies inversely with $r$. If you’re 100 times as pessimistic as I am, you should be 100 times less hot on existential risk mitigation.
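The formula $V[X] = fv/((1-f)r)$ is simple enough to evaluate directly. This sketch uses a hypothetical helper name and $v = 1$. It computes $V[X]$ as the difference between two Simple Model world values, one of them at the reduced risk $(1-f)r$ in every century. For $r = 0.2$ it reproduces the figures above: roughly $0.56v$ for $f = 0.1$ and $45v$ for $f = 0.9$.

```python
def global_reduction_value(r, f, v=1.0):
    """V[X] when every century's risk falls from r to (1 - f) * r (Simple Model)."""
    reduced = (1 - f) * r
    # Difference between the Simple Model world values at the two risk levels.
    return v * (1 - reduced) / reduced - v * (1 - r) / r


for f in (0.1, 0.5, 0.9, 0.99):
    print(f, round(global_reduction_value(0.2, f), 2))
```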

Now it’s starting to look like Existential Risk Pessimism is the problem. Is there a way to tone down our pessimism enough to make the Astronomical Value Thesis true? Why yes! We should be Pessimists about the near future, and optimists about the long-term future. Let’s see how this would work.

3.4 The Time of Perils

Pessimists often think that humanity is living through a uniquely perilous period. Rapid technological growth has given us the ability to quickly destroy ourselves. If we learn to manage the risks posed by new technologies, we will enter a period of safety. But until we do this, risk will remain high.

Here’s how Carl Sagan put the point:

It might be a familiar progression, transpiring on many worlds … life slowly forms; a kaleidoscopic procession of creatures evolves; intelligence emerges … and then technology is invented. It dawns on them that there are such things as laws of Nature … and that knowledge of these laws can be made both to save and to take lives, both on unprecedented scales. Science, they recognize, grants immense powers. In a flash, they create world-altering contrivances. Some planetary civilizations see their way through, place limits on what may and what must not be done, and safely pass through the time of perils. Others [who] are not so lucky or so prudent, perish. (Sagan 1997, p. 173).

Following Sagan, let the Time of Perils Hypothesis be the view that existential risk will remain high for several centuries, but drop to a low level if humanity survives this Time of Perils. Could the Time of Perils Hypothesis save the Pessimist?

To math it up, let $N$ be the length of the perilous period: the number of centuries for which humanity will experience high levels of risk. Assume we face constant risk $r$ throughout the perilous period, with $r$ set to a pessimistically high level. If we survive the perilous period, existential risk will drop to the level $r_l$ of post-peril risk, where $r_l$ is much lower than $r$.

On this model, the value of the world today is:

(Time of Perils)

$$V[W] = \sum_{i=1}^{N} v(1-r)^i + (1-r)^N \sum_{i=1}^{\infty} v(1-r_l)^i$$

That works out to a mouthful:

$$V[W] = \frac{v(1-r)\left(1-(1-r)^N\right)}{r} + (1-r)^N \, \frac{v(1-r_l)}{r_l}$$

but it’s really not so bad.

Let $W_H = v(1-r)/r$ be the value of living in a world forever stuck at the perilous level of risk, and $W_L = v(1-r_l)/r_l$ be the value of living in a post-peril world. Let SAFE be the proposition that humanity will reach a post-peril world and note that $\Pr(\text{SAFE}) = (1-r)^N$. Then the value of the world today is a probability-weighted average of the values of the safe and perilous worlds:

$$V[W] = \left(1 - \Pr(\text{SAFE})\right) W_H + \Pr(\text{SAFE}) \, W_L$$
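The probability-weighted form can be checked against a brute-force truncation of the (Time of Perils) sum. This minimal sketch uses hypothetical helper names and $v = 1$, with $\Pr(\text{SAFE}) = (1-r)^N$, $W_H = v(1-r)/r$ and $W_L = v(1-r_l)/r_l$. The brute-force version simply applies risk $r$ for the first $N$ centuries and $r_l$ thereafter.

```python
def perils_world_value(r, r_low, n, v=1.0):
    """V[W] as a probability-weighted average of the perilous and safe worlds."""
    p_safe = (1 - r) ** n            # Pr(SAFE): survive all n perilous centuries
    w_high = v * (1 - r) / r         # world stuck at perilous risk forever
    w_low = v * (1 - r_low) / r_low  # post-peril world
    return (1 - p_safe) * w_high + p_safe * w_low


def perils_world_value_sum(r, r_low, n, v=1.0, horizon=200_000):
    """Brute-force truncation of the (Time of Perils) sum."""
    total, survival = 0.0, 1.0
    for i in range(1, horizon + 1):
        survival *= 1 - (r if i <= n else r_low)  # perilous, then post-peril risk
        total += v * survival
    return total


print(perils_world_value(0.2, 0.001, 5), perils_world_value_sum(0.2, 0.001, 5))
```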

As the length of the perilous period and the perilous risk level trend upwards, the value of the world tends towards the low value of the perilous world envisioned by the Simple Model. But as the perilous period shortens and the perilous risk decreases, the value of the world tends towards the high value of a post-peril world. We’ll see the same trends when we think about the value of existential risk mitigation.

Let $X$ be an action which reduces existential risk in this century by the fraction $f$, and assume that the perilous period lasts at least one century ($N \geq 1$). Then we have:

$$V[X] = fv\left(1-(1-r)^N\right) + fr(1-r)^{N-1} \, \frac{v(1-r_l)}{r_l}$$

This equation decomposes the value of $X$ into two parts, corresponding to the expected increase in value (if any) that will be realized during the perilous and post-peril periods. The first term, $fv(1-(1-r)^N)$, is bounded above by $v$, so it won’t matter much. The Astronomical Value Thesis needs to pump up the second term, $fr(1-r)^{N-1} \frac{v(1-r_l)}{r_l}$, representing the value we could get after the Time of Perils. Call this the crucial factor.

How do we make the crucial factor astronomically large? First, we need the perilous period to be short. The crucial factor decays exponentially in $N$, so a long perilous period will make the crucial factor quite small. Second, we need a very low post-peril risk $r_l$. The value of a post-peril future is determined entirely by the level of post-peril risk, and we saw in Section 2 that this value cannot be high unless risk is very low.
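The decomposition can be inspected numerically. This sketch uses hypothetical helper names, $v = 1$, and the illustrative values $r = 0.2$, $r_l = 0.0001$ and $f = 0.1$. It computes the two terms $fv(1-(1-r)^N)$ and $fr(1-r)^{N-1}v(1-r_l)/r_l$ separately. It then cross-checks their sum against a second route to $V[X]$. Since $X$ only changes survival in the first century, it scales the whole value of the world by $(1-(1-f)r)/(1-r)$, which gives $V[X] = \frac{fr}{1-r} V[W]$.

```python
def perils_world_value(r, r_low, n, v=1.0):
    """Time of Perils value of the world (n perilous centuries at risk r, then r_low)."""
    p_safe = (1 - r) ** n
    return (1 - p_safe) * v * (1 - r) / r + p_safe * v * (1 - r_low) / r_low


def perils_intervention_terms(r, r_low, n, f, v=1.0):
    """The two terms of V[X] for a relative cut f to this century's risk (n >= 1)."""
    perilous_term = f * v * (1 - (1 - r) ** n)  # never exceeds f * v <= v
    crucial_factor = f * r * (1 - r) ** (n - 1) * v * (1 - r_low) / r_low
    return perilous_term, crucial_factor


def intervention_value_by_scaling(r, r_low, n, f, v=1.0):
    """Cross-check: X multiplies every survival path by (1 - (1 - f) r) / (1 - r)."""
    return f * r / (1 - r) * perils_world_value(r, r_low, n, v)


for n in (2, 10, 50):
    first, crucial = perils_intervention_terms(0.2, 0.0001, n, f=0.1)
    print(n, round(first, 4), round(crucial, 4),
          round(intervention_value_by_scaling(0.2, 0.0001, n, f=0.1), 4))
```

The crucial factor shrinks by a factor of $1-r = 0.8$ for each extra perilous century. That is why it collapses as $N$ grows from 2 to 50.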

To see the point in practice, assume a pessimistic 20% level of risk during the perilous period. Table 2 looks at the value of a 10% reduction in relative risk across various assumptions about the length of the perilous period and the level of post-peril risk.

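Table 2: Value of 10% relative risk reduction against post-peril risk and perilous period length (perilous risk $r = 0.2$; values in multiples of $v$)

| Post-peril risk $r_l$ | $N = 2$ | $N = 5$ | $N = 10$ | $N = 20$ | $N = 50$ |
|---|---|---|---|---|---|
| 0.01 | 1.6 | 0.9 | 0.4 | 0.1 | 0.1 |
| 0.001 | 16.0 | 8.3 | 2.8 | 0.4 | 0.1 |
| 0.0001 | 160.0 | 82 | 26.9 | 3.0 | 0.1 |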

Note that:

- Short is sweet: With a short 2-century perilous period, the value of $X$ ranges from $1.6v$ to $160v$, and may be astronomical once we build in value growth. But with a long 50-century perilous period, there isn’t much we can do to make the value of $X$ large.

- Post-peril risk must be very low: Even if post-peril risk drops twentyfold, from 20% to 1% per century, the Astronomical Value Thesis is in trouble. We need risk to drop very low, towards something like 0.01%. Note that for the Pessimist, this is a two-thousand-fold reduction!
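The entries of Table 2 can be regenerated from the formula $V[X] = fv(1-(1-r)^N) + fr(1-r)^{N-1} v(1-r_l)/r_l$ with $r = 0.2$ and $f = 0.1$. This is a minimal sketch with $v = 1$ and a hypothetical helper name. Each row fixes a post-peril risk $r_l$, each column fixes a perilous-period length $N$, and values are rounded to one decimal place.

```python
def perils_intervention_value(r, r_low, n, f, v=1.0):
    """V[X] in the Time of Perils model: perilous-period term plus crucial factor."""
    return (f * v * (1 - (1 - r) ** n)
            + f * r * (1 - r) ** (n - 1) * v * (1 - r_low) / r_low)


PERIL_LENGTHS = (2, 5, 10, 20, 50)        # N, in centuries
POST_PERIL_RISKS = (0.01, 0.001, 0.0001)  # r_l

table = {
    r_low: [f"{perils_intervention_value(0.2, r_low, n, 0.1):.1f}" for n in PERIL_LENGTHS]
    for r_low in POST_PERIL_RISKS
}
for r_low, row in table.items():
    print(r_low, " | ".join(row))
```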

What does this mean for Pessimism and the Astronomical Value Thesis? It’s time to take stock.

3.5 Taking stock

So far, we’ve seen three things:

- Across a range of assumptions, Existential Risk Pessimism tends to hamper, not support, the Astronomical Value Thesis: The best-case scenario is the Simple Model, on which Pessimism is merely irrelevant to the Astronomical Value Thesis. On other models the value of risk reduction falls in inverse proportion to our level of pessimism, or even to its square.

- The most plausible way to combine Existential Risk Pessimism with the Astronomical Value Thesis is through the Time of Perils Hypothesis: The Simple Model doesn’t bear out the Astronomical Value Thesis. It’s not enough to think about absolute risk reduction, value growth, or global risk reduction. We need to temper our pessimism somehow, and the best way to do that is the Time of Perils Hypothesis: we’ll be Pessimists for a few centuries, then optimists thereafter.

- Two features that a Time of Perils Hypothesis must have if it is going to vindicate the Astronomical Value Thesis: We need (a) a fairly short perilous period, and (b) a very low level of post-peril risk.

New question: why would you believe a Time of Perils Hypothesis with the required form?

One reason you might believe this is because you think that superintelligence will come along, and that if superintelligence doesn’t kill us all, it would radically and permanently lower the level of existential risk. Let’s set that aside as part of our conclusion and ask a weaker question: is there any other good way to ground a strong enough Time of Perils Hypothesis? I want to walk through two popular arguments and suggest that they aren’t enough to do the trick.

4 Space: The final frontier?

You might think that the Time of Perils will end as humanity expands across the stars. So long as humanity remains tied to a single planet, we can be wiped out by a single catastrophe. But if humanity settles many different planets, it may take an unlikely series of independent calamities to wipe us all out.

The problem with space settlement is that it’s not fast enough to help the Pessimist. To see the point, distinguish two types of existential risks:

- Anthropogenic risks: Risks posed by human activity, such as greenhouse gas emissions and bioterrorism.

- Natural risks: Risks posed by the environment, such as asteroid impacts and naturally occurring diseases.

It’s quite right that space settlement would quickly drive down natural risk. It’s highly unlikely for three planets in the same solar system to be struck by planet-busting asteroids in the same century. But the problem is that Pessimists weren’t terribly concerned about natural risk. For example, Toby Ord estimates natural risk in the next century at 1/10,000, but overall risk at 1/6. So Pessimists shouldn’t think that a drop in natural risk will do much to bring the Time of Perils to a close.

Could settling the stars drive down anthropogenic risks? Recall that the Pessimist needs a (a) quick and (b) very sharp drop in existential risk to bring the Time of Perils to an end. The problem is that although space settlement will help with some anthropogenic risks, it’s unlikely to drive a quick and very sharp drop in the risks that count.

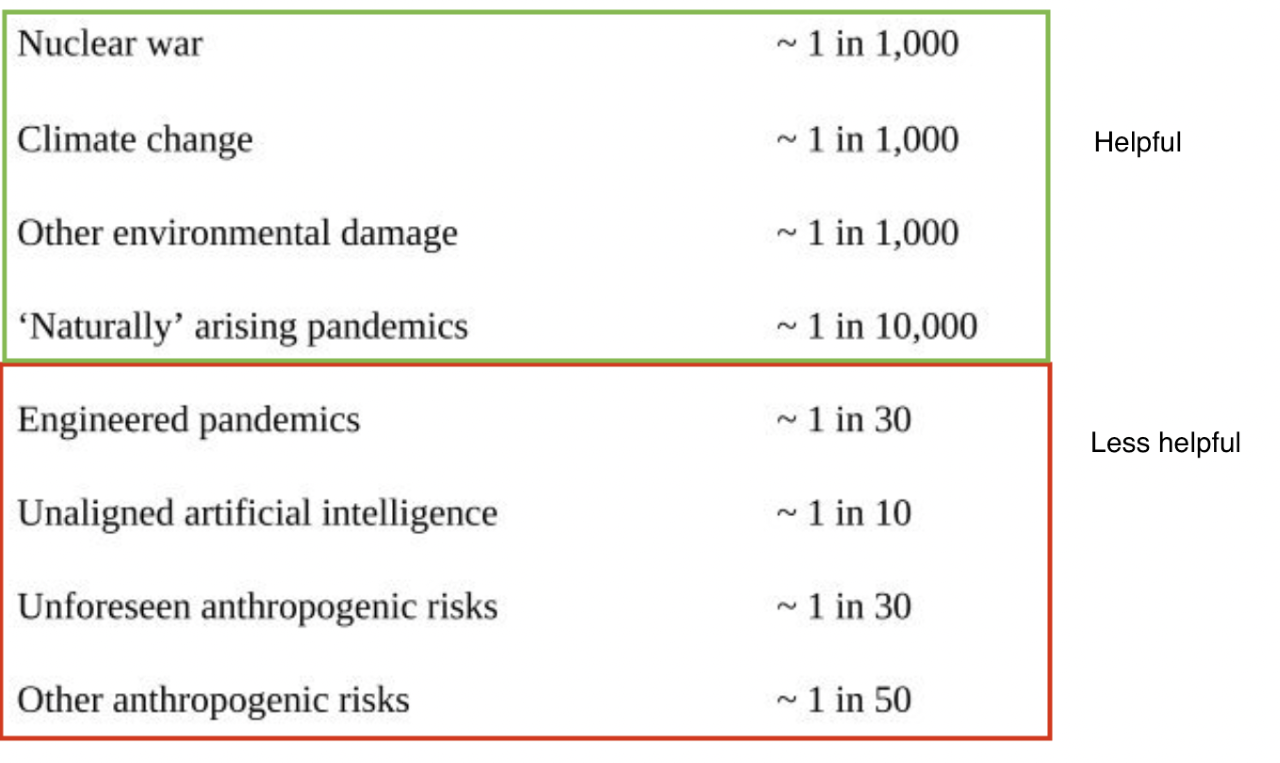

Figure 1: Toby Ord’s (2020) estimates of anthropogenic risk

To see the point, consider Toby Ord’s estimates of the risk posed by various anthropogenic threats (Figure 1). Short-term space settlement would doubtless bring relief for the threats in the green box. After we’re done destroying this planet, we can always find another. And it is much easier to nuke Russia than it is to nuke Mars. But the Pessimist thinks that the threats in the green box are inconsequential compared to those in the red box. So we can’t bring the Time of Perils to an end by focusing on the green box.

What about the threats in the red box, such as AI risk and engineered pandemics? Perhaps long-term space settlement will help with these threats. It is not so easy to send a sleeper virus to Alpha Centauri. But we saw that the Pessimist needs a fast end to the Time of Perils, within the next 10-20 centuries at most. Could near-term space settlement reduce the threats in the red box?

Perhaps it would help a bit. But it’s hard to see how we could chop 3-4 orders of magnitude off these threats just by settling Mars. Are we to imagine that a superintelligent machine could come to control all life on earth, but find itself stymied by a few stubborn Martian colonists? That a dastardly group of scientists designs and unleashes a pandemic which kills every human living on earth, but cannot manage to transport the pathogen to other planets within our solar system? Perhaps there is some plausibility to these scenarios. But if you put 99.9% probability or better on such scenarios, then boy do I have a bridge to sell you.

So far, we have seen that banking on space settlement won’t be enough to ground a Time of Perils Hypothesis of the form the Pessimist needs. How else might she argue for the Time of Perils Hypothesis?

5 An existential risk Kuznets curve?

Consider the risk of climate catastrophe. Climate risk increases with growth in consumption, which burns fossil fuels. Climate risk decreases with spending on climate safety, such as reforestation and other forms of carbon capture.

Economists have noted that two dynamics push towards reduced climate risk in sufficiently wealthy societies. First, the marginal utility of additional consumption decreases, reducing the benefits of fossil fuel emissions. Second, as society becomes wealthier we have more to lose by destroying our climate. These dynamics exert pressure towards an increase in safety spending relative to consumption.

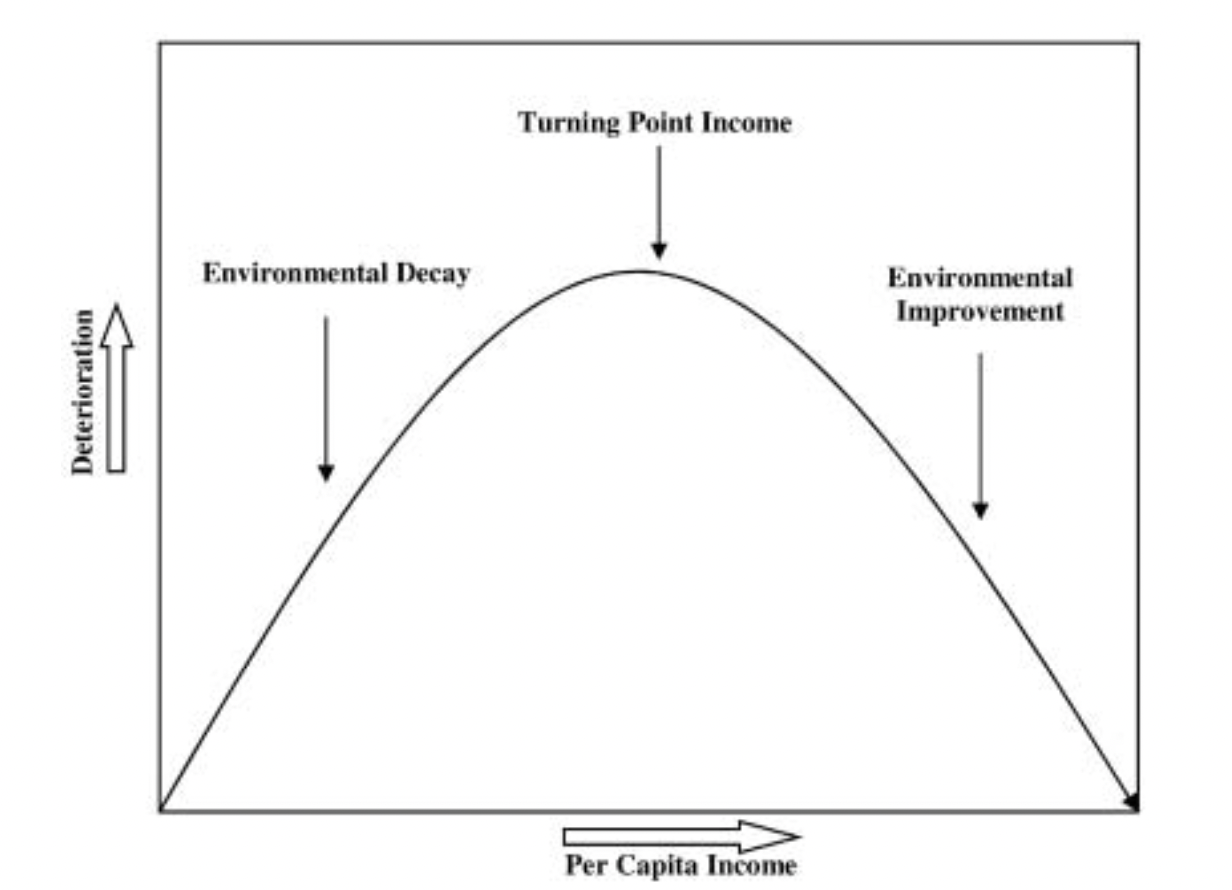

Some economists think that this dynamic is sufficient to generate an environmental Kuznets curve (Figure 2): an inverse U-shaped relationship between per-capita income and environmental degradation. Societies initially become wealthy by polluting their environments. But past a high threshold of wealth, rational societies should be expected to improve the environment more quickly than they destroy it, due to the diminishing marginal utility of consumption and the increasing importance of climate safety.

Figure 2: The environmental Kuznets curve. Reprinted from (Yandle et al. 2002).

Now everyone admits that this dynamic is not fast enough to stop the world from causing irresponsible levels of environmental harm. We’ve already done that. But it may well be enough to prevent the most catastrophic warming scenarios, where 10-20°C warming may lead to human extinction or permanent curtailment of human potential.

Leopold Aschenbrenner (2020) argues that the same dynamic repeats for other existential risks. In Leopold’s model, society is divided into separate consumption and safety sectors. At time $t$, the consumption sector produces consumption outputs $C_t$ as a function of the current level of consumption technology $A_t$, and the labor force producing consumption goods $L^C_t$:

$$C_t = A_t^{\alpha} L^C_t \qquad (1)$$

Here $\alpha$ is a constant determining the influence of technology on production.

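Similarly, the safety sector produces safety outputs $S_t$ as a function of safety technology $B_t$ and the labor force producing safety outputs $L^S_t$:

$$S_t = B_t^{\alpha} L^S_t \qquad (2)$$

As in the environmental case, Leopold takes existential risk to increase with consumption outputs and decrease with safety outputs. In particular, he assumes that the per-period level of existential risk $\delta_t$ satisfies:

$$\delta_t = \bar{\delta} \, C_t^{\epsilon} S_t^{-\beta} \qquad (3)$$

for constants $\bar{\delta}, \epsilon, \beta > 0$.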
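To see how the three equations interact, here is a toy sketch. Consumption output is $C_t = A_t^{\alpha} L^C_t$, safety output is $S_t = B_t^{\alpha} L^S_t$, and risk is $\delta_t = \bar{\delta} C_t^{\epsilon} S_t^{-\beta}$. The parameter values ($\alpha = 1$, $\bar{\delta} = 0.01$, $\epsilon = 1$, $\beta = 1.5$) and the technology and labor levels are arbitrary assumptions chosen for illustration, not Leopold's calibration. The helper names are hypothetical too. The sketch only shows one thing: holding technology and total labor fixed, moving workers from the consumption sector to the safety sector lowers per-period risk.

```python
def sector_outputs(tech_c, tech_s, labor, safety_share, alpha=1.0):
    """C_t = A_t**alpha * L^C_t and S_t = B_t**alpha * L^S_t for a given labor split."""
    labor_s = safety_share * labor  # workers in the safety sector
    labor_c = labor - labor_s       # everyone else produces consumption goods
    return tech_c**alpha * labor_c, tech_s**alpha * labor_s


def hazard(consumption, safety, delta_bar=0.01, epsilon=1.0, beta=1.5):
    """Per-period risk delta_t = delta_bar * C_t**epsilon * S_t**(-beta)."""
    return delta_bar * consumption**epsilon * safety ** (-beta)


# Illustrative values only: A_t = B_t = 10 and a labor force of 1.
for share in (0.1, 0.3, 0.5, 0.7):
    c, s = sector_outputs(tech_c=10.0, tech_s=10.0, labor=1.0, safety_share=share)
    print(f"safety share {share:.1f}: C={c:.1f}, S={s:.1f}, risk={hazard(c, s):.4f}")
```

This static snapshot is not the existential risk Kuznets curve itself. The curve comes from how the optimal labor split and the technologies evolve over time in Leopold's full model, which this sketch does not attempt to reproduce.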

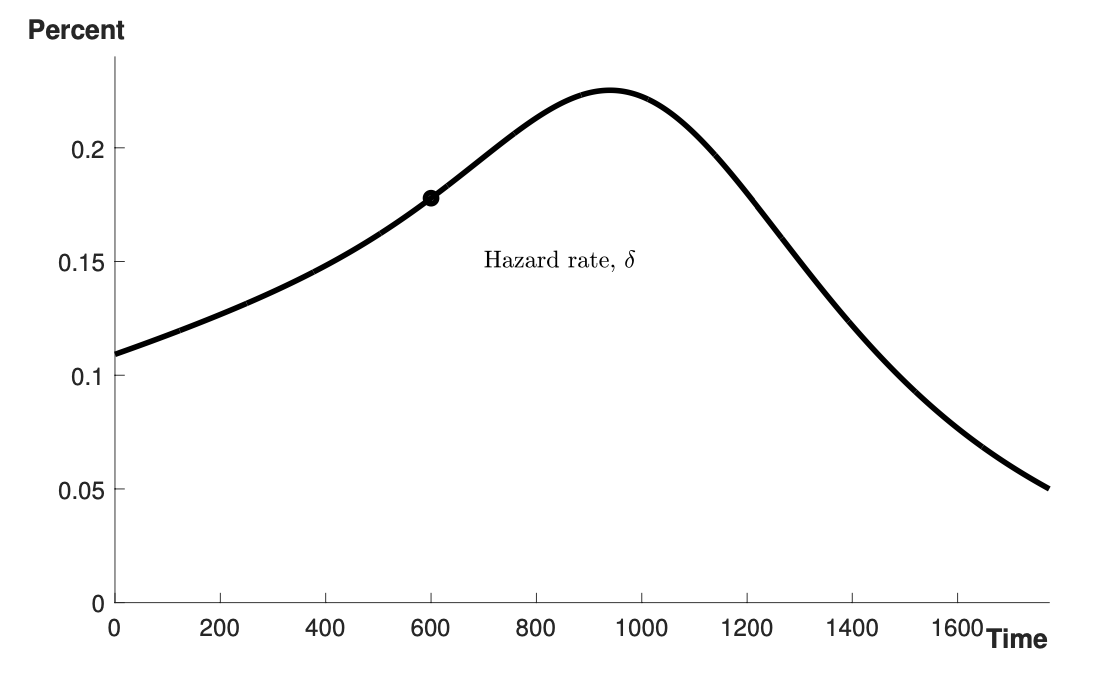

Leopold proves that under a variety of conditions, optimal resource allocation should lead society to invest quickly enough in safety over consumption to drive existential risk towards zero. Leopold also shows that under a range of assumptions, his model grounds an existential risk Kuznets curve: an inverse U-shaped relationship between time and existential risk (Figure 3). Although existential risk remains high today and may increase for several centuries, eventually the diminishing marginal utility of consumption and the increasing importance of safety should chase risk exponentially towards zero. Until that happens, humanity remains in a Time of Perils, but afterwards, we should expect low levels of post-peril risk continuing indefinitely into the future.

Figure 3: The existential risk Kuznets curve. Reprinted from (Aschenbrenner 2020).

I think this is among the best arguments for the Time of Perils hypothesis. But I don’t think the model will save the Pessimist, because it disagrees with the Pessimist about the source of existential risk.

Leopold’s model treats consumption as the source of existential risk. But most Pessimists do not think that consumption is even the primary determinant of existential risk. In the special case of climate risk, consumption does indeed drive risk by burning fossil fuels and causing other forms of environmental degradation. But Pessimists think most existential risk comes from things like rogue AI and engineered pandemics. These risks aren’t caused by consumption. They’re caused by technological growth. Risks from superintelligence grow with advances in technologies such as machine learning, and bioterrorism risks grow with advances in our capacity to synthesize, analyze and distribute biological materials. So a reduction in existential risk may be largely achieved through slowing growth of technology rather than slowing consumption.

We could revise (3) to let technologies A and B replace consumption outputs C as the main drivers of existential risk. But if we did this, we would lose all of the main theorems of the model, and hence we would not get a Time of Perils Hypothesis of the form we need.

6 What we’ve seen so far

Previously, we saw that:

- Across a range of assumptions, Existential Risk Pessimism tends to hamper, not support, the Astronomical Value Thesis.

- The most plausible way to combine Existential Risk Pessimism with the Astronomical Value Thesis is through the Time of Perils Hypothesis.

- The right Time of Perils Hypothesis must have (a) a fairly short perilous period, and (b) a very low level of post-peril risk.

Then we asked: is this form of the Time of Perils Hypothesis true? In the previous two sections, we saw that:

- Arguments for the Time of Perils Hypothesis which do not appeal to AI are not strong enough to ground the relevant kind of Time of Perils Hypothesis: It won’t do to bank on space settlement, because that won’t do enough to solve the most pressing anthropogenic risks. And we can’t give a Kuznets-style economic argument for the Time of Perils Hypothesis without disagreeing with the Pessimist about the root causes of existential risk.

If that is right, then what might it imply for existential risk as a cause area?

7 Implications for existential risk mitigation as a cause area

To a large extent, these implications are up for discussion. But here are some things I think we might conclude from the discussion in this post.

- Optimism helps: If you’re an optimist about current levels of existential risk, you should think it’s even more important to reduce existential risk than if you’re a Pessimist. This has two important implications.

  - Mistargeted pushback: A common reaction to existential risk mitigation is that it can’t be very important, because levels of existential risk are actually much lower than EAs take them to be. All of the models in this post strongly suggest that this reaction misses the mark. Within reason, the lower you think that existential risk is, the more enthusiastic you should be about reducing it.

  - Danger for Pessimists: On the other hand, there is a live danger that existential risk mitigation may not be so valuable for Pessimists.

- Cooling (slightly) on the Astronomical Value Thesis: Across the board, the models in this post suggest that it is harder than many EAs might think to support the Astronomical Value Thesis. The Astronomical Value Thesis could well be true, but it isn’t obvious.

  - Warming (slightly) on short-termist cause areas: If that is right, then it is less obvious than it may seem that existential risk mitigation is always more valuable than short-termist causes such as global health and poverty.

- The Time of Perils Hypothesis is very important: Pessimists should think that settling the truth value of the Time of Perils Hypothesis is one of the most crucial outstanding research questions facing humanity today. If the Time of Perils Hypothesis is false, then Pessimists may have to radically change their opinion of existential risk mitigation as a cause area.

- But what about AI?: One way that EAs often argue for the Time of Perils Hypothesis is by arguing that superintelligent AI will soon be developed, and once superintelligence arrives it will have the foresight to drive existential risk down to a permanently low level. If I am right that other prominent ways of arguing for the Time of Perils Hypothesis are not successful, then the story about superintelligence becomes more important. This suggests that it is even more important than before to make sure we have the correct view about the likelihood of superintelligence bringing an end to the Time of Perils.

8 Key uncertainties

In the spirit of humility, I think it is important to end with a discussion of some uncertainties in my post.

- Modeling assumptions: I’ve tried to be as generous as possible in thinking of ways to square Pessimism with the Astronomical Value Thesis. I’ve only found one model that works, the Time of Perils model. But I could well have missed other models that work. I’d be very interested to see them!

- Fancier math: I’ve kept the models fairly simple, for example using discrete rather than continuous models, and treating quantities like risk as constants rather than variables. I think my conclusions are robust to some ways of making the models fancier, but I’m not sure if they’re robust to all ways of making the models fancier. If they’re not, that could be bad.

- Arguments for the Time of Perils Hypothesis: I’ve only considered two arguments for the Time of Perils Hypothesis. There are others (wisdom, anyone?) that I didn’t address. Perhaps these are very good arguments.

- Drawing conclusions: Are the implications that I draw for existential risk mitigation as a cause area well supported by the models? Are there other implications that might be worth considering?

- Unknown unknowns: No author is a perfect judge of where they might have gone wrong. Most of us are quite poor judges of this. I am sure that I made, or could have made, mistakes that I have not even considered.

What do you think?

9 Acknowledgments

Thanks to Tom Adamczewski, Gustav Alexandrie, Tom Bush, Tomi Francis, David Holmes, Toby Ord, Sami Petersen, Carl Shulman, and Phil Trammell for comments on this work. Thanks to audiences at the Center for Population-Level Bioethics, EAGxOxford and the Global Priorities Institute for comments and discussion. Thanks to Anna Ragg, Rhys Southan and Natasha Oughton for research assistance.

Appendix (Proofs)

The Simple Model

(Simple Model)

$$V[W] = \sum_{i=1}^{\infty} v(1-r)^i$$

Note that $V[W]$ is a truncated geometric series, so that:

$$V[W] = \frac{v(1-r)}{r}$$

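Let $X$ be an intervention reducing risk in this century to $(1-f)r$, and let $W_X$ be the result of performing $X$. Then

$$V[W_X] = \left(1-(1-f)r\right)\left(v + V[W]\right) = \left(1-(1-f)r\right)\frac{v}{r}$$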

And hence:

$$V[X] = V[W_X] - V[W] = \frac{v}{r}\left[\left(1-(1-f)r\right) - (1-r)\right] = fv$$

Absolute risk reduction

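Let $X$ be an intervention reducing risk in this century to $r - f$ for $0 < f \leq r$. Then:

$$V[W_X] = \left(1-(r-f)\right)\left(v + V[W]\right) = \left(1-r+f\right)\frac{v}{r}$$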

So that:

$$V[X] = V[W_X] - V[W] = \frac{fv}{r}$$

Linear growth

(Linear Growth)

$$V[W] = \sum_{i=1}^{\infty} (1-r)^i \, iv$$

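Note that $\sum_{i=1}^{\infty} i(1-r)^i$ is a polylogarithm $\mathrm{Li}_{-1}(1-r)$ with order $-1$. Recalling that

$$\mathrm{Li}_{-1}(x) = \frac{x}{(1-x)^2}$$

we have:

$$V[W] = \frac{v(1-r)}{r^2}$$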

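If $X$ produces a relative reduction of risk by $f$ in this century, then every term of the sum has its first-century survival factor $(1-r)$ replaced by $1-(1-f)r$, so:

$$V[W_X] = \frac{1-(1-f)r}{1-r}\,V[W] = \frac{v\left(1-(1-f)r\right)}{r^2}$$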

So that:

$$V[X] = V[W_X] - V[W] = \frac{fv}{r}$$

Quadratic growth

(Quadratic Growth)

$$V[W] = \sum_{i=1}^{\infty} (1-r)^i \, i^2 v$$

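Note that $\sum_{i=1}^{\infty} i^2(1-r)^i$ is a polylogarithm $\mathrm{Li}_{-2}(1-r)$ with order $-2$. Recalling that

$$\mathrm{Li}_{-2}(x) = \frac{x(1+x)}{(1-x)^3}$$

we have

$$V[W] = \frac{v(1-r)(2-r)}{r^3}$$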

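With $X$ as before, only the first-century survival factor changes, so we have:

$$V[W_X] = \frac{1-(1-f)r}{1-r}\,V[W] = \frac{v\left(1-(1-f)r\right)(2-r)}{r^3}$$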

Giving:

$$V[X] = V[W_X] - V[W] = \frac{fv(2-r)}{r^2}$$
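The two polylogarithm identities used in this appendix are $\mathrm{Li}_{-1}(x) = x/(1-x)^2$ and $\mathrm{Li}_{-2}(x) = x(1+x)/(1-x)^3$. Both can be spot-checked numerically at $x = 1 - r$. This minimal sketch uses hypothetical helper names and compares partial sums of $\sum_{i \geq 1} i^k x^i$ for $k = 1, 2$ with the closed forms. The 20,000-term cut-off is an assumption that is adequate for $r \geq 0.01$.

```python
def polylog_negative(order, x, terms=20_000):
    """Partial sum of Li_{-order}(x) = sum_{i>=1} i**order * x**i, for 0 < x < 1."""
    total, power = 0.0, 1.0
    for i in range(1, terms + 1):
        power *= x  # power == x**i
        total += i**order * power
    return total


def li_minus_1(x):
    return x / (1 - x) ** 2


def li_minus_2(x):
    return x * (1 + x) / (1 - x) ** 3


for r in (0.2, 0.05, 0.01):
    x = 1 - r
    print(r, polylog_negative(1, x), li_minus_1(x), polylog_negative(2, x), li_minus_2(x))
```

With $x = 1 - r$, $\mathrm{Li}_{-2}(1-r) = (1-r)(2-r)/r^3$, which is the factor multiplying $v$ in the quadratic-growth value of the world.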

Global risk reduction

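If $X$ produces a global (relative) reduction in risk by $f$, then

$$V[W_X] = \sum_{i=1}^{\infty} v\left(1-(1-f)r\right)^i = \frac{v\left(1-(1-f)r\right)}{(1-f)r}$$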

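so that

$$V[X] = V[W_X] - V[W] = \frac{v\left(1-(1-f)r\right)}{(1-f)r} - \frac{v(1-r)}{r} = \frac{fv}{(1-f)r}$$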

The Time of Perils

(Time of Perils)

$$V[W] = \sum_{i=1}^{N} v(1-r)^i + (1-r)^N \sum_{i=1}^{\infty} v(1-r_l)^i$$

Note that:

$$V[W] = \frac{v(1-r)\left(1-(1-r)^N\right)}{r} + (1-r)^N \, \frac{v(1-r_l)}{r_l}$$

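If $X$ leads to a relative reduction of risk by $f$ in the next century, then the first-century survival factor $(1-r)$ in every term becomes $1-(1-f)r$, so:

$$V[W_X] = \frac{v\left(1-(1-f)r\right)\left(1-(1-r)^N\right)}{r} + \left(1-(1-f)r\right)(1-r)^{N-1} \, \frac{v(1-r_l)}{r_l}$$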

Subtracting term-wise gives:

$$V[X] = fv\left(1-(1-r)^N\right) + fr(1-r)^{N-1} \, \frac{v(1-r_l)}{r_l}$$

References

Alvarez, Luis W., Alvarez, Walter, Asaro, Frank, and Michel, Helen V. 1980. “Extraterrestrial cause for the Cretaceous-Tertiary extinction.” Science 208:1095–1108.

Aschenbrenner, Leopold. 2020. “Existential risk and growth.” Global Priorities Institute Working Paper 6-2020.

Bostrom, Nick. 2013. “Existential risk prevention as a global priority.” Global Policy 4:15–31.

—. 2014. Superintelligence. Oxford University Press.

Ćirković, Milan. 2019. “Space colonization remains the only long-term option for humanity: A reply to Torres.” Futures 105:166–173.

Dasgupta, Susmita, Laplante, Benoit, Wang, Hua, and Wheeler, David. 2002. “Confronting the environmental Kuznets curve.” Journal of Economic Perspectives 16:147–168.

Deudney, Daniel. 2020. Dark skies: Space expansionism, planetary geopolitics, and the ends of humanity. Oxford University Press.

Gottlieb, Joseph. 2019. “Space colonization and existential risk.” Journal of the American Philosophical Association 5:306–320.

Grossman, Gene and Krueger, Alan. 1995. “Economic growth and the environment.” Quarterly Journal of Economics 110:353–377.

John, Tyler and MacAskill, William. 2021. “Longtermist institutional reform.” In Natalie Cargill and Tyler John (eds.), The long view. FIRST.

Jones, Charles. 2016. “Life and growth.” Journal of Political Economy 124:539–578.

Kaul, Inge, Grunberg, Isabelle, and Stern, Marc (eds.). 1999. Global public goods: International cooperation in the 21st century. Oxford University Press.

Mogensen, Andreas. 2019. “Doomsday rings twice.” Global Priorities Institute Working Paper 1-2019.

Musk, Elon. 2017. “Making humans a multi-planetary species.” New Space 5:46–61.

Ord, Toby. 2020. The precipice. Bloomsbury.

Parfit, Derek. 2011. On what matters, volume 1. Oxford University Press.

Rees, Martin. 2003. Our final hour. Basic Books.

Sagan, Carl. 1997. Pale blue dot: A vision of the human future in space. Ballantine Books.

Sandberg, Anders and Bostrom, Nick. 2008. “Global catastrophic risks survey.” Technical Report 2008-1, Future of Humanity Institute.

Schulte, Peter et al. 2010. “The Chicxulub asteroid impact and mass extinction at the Cretaceous-Paleogene boundary.” Science 327:1214–1218.

Schwartz, James. 2011. “Our moral obligation to support space exploration.” Environmental Ethics 33:67–88.

Shulman, Carl and Thornley, Elliott. forthcoming. “Tradeoffs between longtermism and other social metrics: Is longtermism relevant to existential risk in practice?” In Jacob Barrett, Hilary Greaves, and David Thorstad (eds.), Longtermism. Oxford University Press.

Solow, Robert. 1956. “A contribution to the theory of economic growth.” Quarterly Journal of Economics 70:65–94.

Stokey, Nancy. 1998. “Are there limits to growth?” International Economic Review 39.

Thompson, Dennis. 2010. “Representing future generations: Political presentism and democratic trusteeship.” Critical Review of International Social and Political Philosophy 13:17–37.

Tokarska, Katarzyna, Gillett, Nathan, Weaver, Andrew, Arora, Vivek, and Eby, Michael. 2016. “The climate response to five trillion tonnes of carbon.” Nature Climate Change 6:851–855.

Torres, Phil. 2018. “Space colonization and suffering risks: Reassessing the ‘maxipok rule’.” Futures 100:74–85.

Yandle, Bruce, Bhattarai, Madhusudan, and Vijayaraghavan, Maya. 2002. “The environmental Kuznets curve: A primer.” Technical report, Property and Environment Research Center.

Mark Xu @ 2022-08-14T03:24 (+105)

I think this model is kind of misleading, and that the original astronomical waste argument is still strong. It seems to me that a ton of the work in this model is being done by the assumption of constant risk, even in post-peril worlds. I think this is pretty strange. Here are some brief comments:

- If you're talking about the probability of a universal quantifier, such as "for all humans x, x will die", then it seems really weird to say that this remains constant, even when the thing you're quantifying over grows larger.

- For instance, it seems clear that if there were only 100 humans, the probability of x-risk would be much higher than if there were 10^6 humans. So it seems like if there are 10^20 humans, it should be harder to cause extinction than if there are 10^10 humans.

- Assuming constant risk has the implication that human extinction is guaranteed to happen at some point in the future, which puts sharp bounds on the goodness of existential risk reduction.

- It's not that hard to get exponentially decreasing probability on universal quantifiers if you assume independence in survival amongst some "unit" of humanity. In computing applications, it's not that hard to drive down the probability of error exponentially in the resources allocated, because each unit of resource can ~halve the probability of error. Naively, each human doesn't want to die, so there are as many rolls for surviving/solving x-risk as there are humans.

- It seems like the probability of x-risk ought to be inversely proportional to the current estimated amount of value at stake. This seems to follow if you assume that civilization acts as a "value maximizer" and it's not that hard to reduce x-risk. Haven't worked it out, so wouldn't be surprised if I was making some basic error here.

- Generally, it seems like most of the risk is going to come from worlds where the chance of extinction isn't actually a universal quantifier, and there's some correlation amongst seemingly independent rolls for survival. In particularly bad cases, humans go extinct if there exists anyone who wants to destroy the universe, so the probability of extinction actually increases extremely rapidly as we get more humans. These worlds would require extremely strong coordination and governance solutions.

- These worlds are also slightly physically impossible because parts of humanity will rapidly become causally isolated from each other. I don't know enough cosmology to have an intuition for which way the functional form will ultimately go.

- Generally, it seems like the naive view is that as humans get richer/smarter, they'll allocate more and more resources towards not dying. At equilibrium, it seems reasonable to first-order-assume we'll drive existential risk down until the marginal cost equals the marginal benefit, so the key question is how this equilibrium behaves. My guess is that it will depend heavily on the total amount of value available in the future, determined by physical constraints (and potentially more galaxy-brained considerations).

- This view seems to let you recover more of the naive astronomical waste perspective.

- This makes me feel like the model makes kind of strong assumptions about how much it will ultimately cost to drive down existential risk. E.g. you seem to imply that r_l = 0.0001 is small, but an independent chance that large each century implies that the probability humanity survives for ~10^10 years is ~0. This feels quite absurd to me.

- On the sentence "Note that for the Pessimist, this is a reduction of 200,000%": humans routinely reduce the probabilities of failures by more than 200,000% via engineering efforts, and produce highly complex artifacts like computers, airplanes, rockets, satellites, etc. It feels like you should naively expect "breaking" human civilization to be harder than breaking an airplane, especially when civilization is actively trying to ensure that it doesn't go extinct.

- Also, you seem to assume each century has some constant value v eventually, which seems reasonable to me, but the implication "Warming (slightly) on short-termist cause areas" relies on an assumption that the current century is close to value v, when it seems like even pretty naive bounds (e.g. percent of sun's energy), suggest that the current century is not even within a factor of 10^9 of the long-run value-per-century humanity could reach.

- Assuming that value grows quadratically also seems quite weird, because of analyses like "Eternity in Six Hours", which seem to imply that a resource-maximizing civilization will undergo a period of incredibly rapid expansion to achieve per-century rates of value much higher than the current century, and then have nowhere else to go. A better model from my perspective is logistic growth of value, with the upper bound given by some weak proxy like "suppose that value is linear in the amount of energy a civilization uses, then take the total amount of value in the year 2020", with the ultimate unit being "value in 2020". This would produce much higher numbers, and give a more intuitive sense of "astronomical waste."
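
The arithmetic behind two of the points above can be checked directly. A minimal sketch, assuming independent centuries at a constant post-peril risk $r_l = 0.0001$, reading "~10^10 years" as $10^8$ centuries, and taking the Pessimist's 20% per-century ballpark; the 2,000-fold ratio is presumably where the quoted "200,000%" figure comes from.

```python
import math


def log10_survival(risk_per_century: float, centuries: int) -> float:
    """log10 of P(no catastrophe in any of `centuries` independent centuries)."""
    return centuries * math.log1p(-risk_per_century) / math.log(10)


r_h, r_l = 0.2, 0.0001      # Pessimist's current risk, post-peril risk
centuries = 10**10 // 100   # ~10^10 years expressed in centuries

# 0.2 / 0.0001 is a 2,000-fold cut in per-century risk.
print(f"r_h / r_l = {r_h / r_l:.0f}")

# Working in logs avoids underflow: the probability itself is below 1e-4000.
print(f"log10 P(survive ~10^10 years at r_l) = {log10_survival(r_l, centuries):.1f}")
print(f"as a float: {10 ** log10_survival(r_l, centuries)}")  # underflows to 0.0
```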

I like the process of proposing concrete models for things as a substrate for disagreement, and I appreciate that you wrote this. It feels much better to articulate objections like "I don't think this particular parameter should be constant in your model" than to have abstract arguments. I also like how it's now more clear that if you do believe that risk in post-peril worlds is constant, then the argument for longtermism is much weaker (although I think still quite strong because of my comments about v).

David Thorstad @ 2022-08-14T07:03 (+15)

Thanks Mark! This is extremely helpful.

I agree that it's important to look in detail at models to see what is going on. We can't settle debates about value from the armchair.

I'll try to type up some thoughts in a few edits, since I want to make sure to think about what to say.

Population growth: It's definitely possible to decompose the components of the Ord/Adamczewski/Thorstad model into their macroeconomic determinants (population, capital, technology, etc.). Economists like to do this. For example, Leopold does this.

It can also be helpful to decompose the model in other ways. Sociologists might want to split up things like v and r into a more fine-grained model of their social/political determinants, for example.

I tend to think that population growth is not going to be enough to substantially reverse the conclusions of the model, although I'd be really interested to see if you wanted to work through the conclusions here. For example, my impression with Leopold's model is that if you literally cut population growth out of the model, the long-term qualitative behavior and conclusions wouldn't change that much: it would still be the case that the driving assumptions are (a) research in safety technologies exponentially decreases risk, (b) the rate at which safety research exponentially decreases risk is "fast", in the sense specified by Leopold's main theorem.

(I didn't check this explicitly. I'd be curious if someone wanted to verify this).

I also think it is important to think about some ways in which population growth can be bad for the value of existential risk mitigation. For example, the economist Maya Eden is looking at non-Malthusian models of population growth on which there is room for the human population to grow quite substantially for quite some time. She thinks that these models can often make it very important to do things that will kickstart population growth now, like staying at home to raise children or investing in economic growth. Insofar as investments and research into existential risk mitigation take us away from those activities, it might turn out that existential risk mitigation is relatively less valuable -- quite substantially so, on some of Maya's models. We just managed to talk Maya into contributing a paper to an anthology on longtermism that GPI is putting together, so I hope that some of these models will be in print within 1-2 years.

In the meantime, maybe some people would like to play around with population in Leopold's model and report back on what happens?

First edit: Constant risk and guaranteed extinction: A nitpick: constant risk doesn't assume guaranteed extinction, but rather extinction with probability 1. Probability 0 events are maximally unlikely, but not impossible. (Example: for every point in 3-D space there's probability 0 that a dart lands centered on that point. But it does land centered on one.)

More to the point, it's not quite the assumption of constant risk that gives probability 1 to (eventual) extinction. To get probability <1 of eventual extinction, in most models you need something stronger, like (a) indefinitely sustained decay in extinction risk of exponential or higher speed, and (b) a "fast" rate of decay in your chosen functional form. This is pretty clear, for example, in Leopold's model.

I think that claims like (a) and (b) can definitely be true, but that it is important to think carefully about why they are true. For example, Leopold generates (a) explicitly through a specified mechanism: producing safety technologies exponentially decreases risk. I've argued that (a) might not be true. Leopold doesn't generate (b). Leopold just put (b) as part of his conclusion: if (b) isn't true, we're toast.
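
Conditions (a) and (b) can be illustrated with per-century risk schedules. The sketch assumes independent centuries and hypothetical decay schedules $r_t = 0.2 \cdot q^t$; the values $q = 0.5$ and $q = 0.999$ are arbitrary choices for illustration, not parameters of Leopold's model. Constant risk drives long-run survival to zero; fast exponential decay leaves a substantial chance of surviving indefinitely; slow exponential decay satisfies (a) but still leaves survival astronomically small, which is why (b) matters.

```python
import math
from typing import Callable


def survival_probability(risk_at: Callable[[int], float], centuries: int) -> float:
    """P(survive centuries 0..centuries-1) when risk in century t is risk_at(t).
    Summing log1p(-r_t) keeps the product numerically stable."""
    return math.exp(sum(math.log1p(-risk_at(t)) for t in range(centuries)))


HORIZON = 100_000  # centuries; long enough for both decaying schedules to settle

schedules = {
    "constant 0.2": lambda t: 0.2,
    "fast decay 0.2 * 0.5**t": lambda t: 0.2 * 0.5**t,
    "slow decay 0.2 * 0.999**t": lambda t: 0.2 * 0.999**t,
}
for name, risk_at in schedules.items():
    print(f"{name:>28}: P(survive {HORIZON:,} centuries) = "
          f"{survival_probability(risk_at, HORIZON):.3g}")
```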

Second edit: Allocating resources towards safety: I think it would be very valuable to do more explicit modelling about the effect of resources on safety.

Leopold's model discusses one way to do this. If you assume that safety research drives down risk exponentially at a reasonably quick rate, then we can just spend a lot more on safety as we get richer. I discuss this model in Section 5.

That's not the only mechanism you could propose for using resources to provide safety. Did you have another mechanism in mind?

I think it would be super-useful to have an alternative to Leopold's model. More models are (almost) always better.

In the spirit of our shared belief in the importance of models, I would encourage you to write down your model of choice explicitly and study it a bit. I think it could be very valuable for readers to work through the kinds of modelling assumptions needed to get a Time of Perils Hypothesis of the needed form, and why we might believe/disbelieve these assumptions.

Third edit: Constant value: It would definitely be bad to assume that all centuries have constant value. I think the exact word I used for that assumption was "bonkers"! My intention with the value growth models in Section 3 was to show that the conclusions are robust to some models of value growth (linear, quadratic) and in another comment I've sketched some calculations to suggest that the model's conclusions might even be robust to cubic, quartic (!) or maybe even quintic (!!) growth.

I definitely didn't consider logistic growth explicitly. It could be quite interesting to think about the case of logistic growth. Do you want to write this up and see what happens?

I don't think that the warming on short-termist cause areas should rely on an assumption of constant v. This is the case for a number of reasons. (a) Anything we can do to decrease the value of longtermist causes translates into a warming in our (relative) attitudes towards short-termist cause areas; (b) Short-termist causes can often have quite good longtermist implications, as for example in the Maya Eden models I mentioned earlier, or in some of Tyler Cowen's work on economic growth. (c) As we saw in the model, probabilities matter. Even if you think there is an astronomical amount of value out there to be gained, if you think we're sufficiently unlikely to gain it then short-termist causes can look relatively more attractive.

A concluding thought: I do very much like your emphasis on concrete models as a substrate for disagreement. Perhaps I could interest you in working out some of the models you raised in more detail, for example by modeling the effects of population growth using a standard macroeconomic model (Leopold uses a Solow-style model, but you could use another, and maybe go more endogenous)? I'd be curious to see what you find!

MichaelStJules @ 2022-08-14T14:11 (+2)

Another risk is replacement by aliens (life or AI): either they get to where we want to expand to first and we're prevented from generating much value there, or we have to leave regions we previously occupied, or even have it all taken over. If they are expansive like "grabby aliens", we might not be left with much or anything. We might expect aliens arriving from multiple directions to effectively box us into a bounded region of space.

A nonzero lower bound for the existential risk rate would be reasonable on this account, although I still wouldn't assign full weight to this model, and might still assign some weight to decreasing risk models with risks approaching 0. Maybe we're so far from aliens that we will practically never encounter them.

On the other hand, there seem to be some pretty hard limits on the value we can generate set by the accelerating expansion of the universe, but this is probably better captured with a bound on the number of terms in the sum and not an existential risk rate. This would prevent the sum from becoming infinite with high probability, although we might want to allow exotic possibilities of infinities.

Linch @ 2023-04-13T20:15 (+53)

Tl;dr is that if you enumerate all the hypotheses:

a) the conjunction "x-risk will be high this century" + "it will be low again some time in the future" is not that surprising compared to the space of all possibilities, because all other hypotheses are also surprising.

b) (weaker claim) almost all of the surprise of the conjunction "x-risk will be high this century" + "it will be low again some time in the future" comes from the first claim that x-risk will be high, so we can't say Time of Perils is uniquely surprising if people already buy the first claim.

Your paper talks about the lack of strong positive arguments for Time of Perils that do not route through AI. But if I'm correct, the lack of strong positive arguments for Time of Perils can't by itself get your credence in Time of Perils below 10% without strong positive arguments for alternative hypotheses.

____

Longer argument:

Thorstad positions priors of x-risk as high vs. low as an abstract choice, and then treats the claim that x-risk is currently high but will be very low in the future as surprising.

We know that the prior rate of x-risk cannot be very high (evidence: humanity's history[1]). We have some empirical observations and speculations (eg Ord's book) that it might be very high this century. What does this tell us about x-risk 1000 years from now?

Framed this way, you can do one of the following:

1. Deny evidence like Ord's book and just stick with your priors about x-risk this century.

2. Think x-risk has always been high, the evidence in Ord's book is consistent with it being high, it will continue being high this century, and it will be high forever (until we die).

3. (step-change hypothesis) Think x-risk has historically been low, the evidence in Ord's book updates us towards it being high now, and it will be high forever after.

4. (time of perils hypothesis) Think x-risk has historically been low, the evidence in Ord's book updates us towards it being high now, and it will go back to being low later.

Thorstad wants to position (4) as really surprising compared to the other hypotheses. But framed this way, I don't think any of the other hypotheses is very strong either.

As the paper is primarily about x-risk pessimism, we can rule (1) out by assumption (also I personally think it's pretty wild to not update hugely on the observed evidence).

(2) is kinda crazy because we exist. You need to appeal to extraordinarily good luck or anthropics to explain why (2) is consistent with us being around, and we also have observed evidence against it (e.g. I don't think there were many near misses pre-1900).

So we're left to debate between (3) and (4). I don't think you should think of (3) as significantly more likely than (4) in either the abstract or the particulars, and I'd want strong positive arguments for (3) over (4) before assigning (4) <10% credence, never mind <1 in 100,000.

[1]

Plausible choices of prior include backing out survival rates from the timeline of humanity (3 million years), the timeline of Homo sapiens (300,000 years), the length of human civilization (6,000 years), the lifespan of a typical species (1-10 million years), the timeline of multicellular life (600 million years), etc.
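
The footnote's point, that a high constant rate of existential risk is hard to square with humanity's track record, can be illustrated with a back-of-the-envelope likelihood. A minimal sketch, assuming a constant, independent per-century risk and ignoring the anthropic selection effects mentioned above; the risk levels are illustrative, and the timelines are the footnote's.

```python
import math


def survival_probability(risk_per_century: float, years: float) -> float:
    """P(surviving `years` years) at a constant, independent per-century risk."""
    return math.exp((years / 100) * math.log1p(-risk_per_century))


track_records = {
    "human civilization": 6_000,
    "Homo sapiens": 300_000,
    "humanity": 3_000_000,
}
for label, years in track_records.items():
    for risk in (0.2, 0.01, 0.0001):
        print(f"{label:>18} ({years:,} yrs), risk {risk:<6}: "
              f"P(survive) = {survival_probability(risk, years):.3g}")
```

At a 20% per-century risk, even the 6,000-year record of civilization has a likelihood of about $1.5 \times 10^{-6}$, while a risk of $10^{-4}$ per century makes a 300,000-year record unsurprising.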

David Johnston @ 2023-04-17T11:31 (+25)

I don't see this. First, David's claim is that a short time of perils with low risk thereafter seems unlikely - which is only a fraction of hypothesis 4, so I can easily see how you could get H3+H4_bad:H4_good >> 10:1

I don't even see why it's so implausible that H3 is strongly preferred to H4. There are many hypotheses we could make about time varying risk:

- Monotonic trend (many varieties)

- Oscillation (many varieties)

- Random walk (many varieties)

- ...

If we aren't trying to carefully consider technological change (and ignoring AI seems to force us not to do this carefully) then it's not at all clear how to weigh all the different options. Many possible weightings do support hypothesis 3 over hypothesis 4:

- If we expect regular oscillation or time symmetric random walks, then I think we usually get H3 (integrated oscillation = high risk; the lack of risk in the past suggests that period of oscillation is long)

- If we expect rare, sudden changes then we get H3

- Monotonic trend obviously favours H3

If I imagine going through this exercise, I wouldn't be that surprised to see H3 strongly favoured over H4 - but I don't really see it as a very valuable exercise. The risk under consideration is technologically driven, so not considering technology very carefully seems to be a mistake.

Linch @ 2023-04-17T13:39 (+10)

Fair overall. I talked to some other people, and I think I missed the oscillation model when writing my original comment, which in retrospect is a pretty large mistake. I still don't think you can buy that many 9s on priors alone, but sure, if I think about it more maybe you can buy 1-3 9s. :/

First, David's claim is that a short time of perils with low risk thereafter seems unlikely.

Suppose you were put to cryogenic sleep. You wake up in the 41st century. Before learning anything about this new world, is your prior really[1] that the 41st century world is as (or more) perilous as the 21st century?

If we aren't trying to carefully consider technological change (and ignoring AI seems to force us not to do this carefully) then it's not at all clear how to weigh all the different options. Many possible weightings do support hypothesis 3 over hypothesis 4

[...]

Yeah I need to think about this more. I'm less used to thinking about mathematical functions and more used to thinking about plausible reference classes. Anyway, when I think about (rare but existent) other survival risks that jump from 1 in 10,000 in a time period to 1 in 5, I get the following observations:

Each of the following seems plausible:

- risk goes up and stays up for a while before going back down to background levels

  - Concrete example: some infectious diseases

- risk goes down to baseline almost immediately

  - Concrete example: somebody shoots me. (If they miss and I get away, or some time after I recover, I assume my own mortality risk is back to baseline.)

- risk (quickly) monotonically goes up until either you die or it goes back down again

  - Concrete example: Russian roulette

- risk starts going down but never quite goes back to baseline

  - Concrete example: "curable" cancer, infectious disease with long sequelae

On the other hand, the following seems rarer/approximately never happens (at least of the limited set of things I've considered):

- risks constantly stay as high as 1:4 for many timesteps

  - Like this just feels like a pretty absurd risk profile.

  - I don't think even death row prisoners, kamikaze pilots, etc., have this profile, for context.

  - Which makes me a bit confused about long "time of perils" or continuously elevated constant-risk models.

- risks go back to much lower than background levels

  - Convoluted stories are possible but it's hard to buy even one OOM I think.

  - Which makes me (somewhat) appreciate that arguments for existentially stable utopia (ESU) are disadvantaged in the prior.

Now maybe I just lack creativity, and I'm definitely not thinking about things exhaustively.

And of course I'm cheating some by picking a reference class I actually have an intellectual handle on (humans have mostly figured out how to estimate amortized individual risks; life insurance exists). Trying to figure out x-risk 100,000 years from now is maybe less like modeling my prognosis after I get a rare disease and more like modeling my 10-year survival odds after being kidnapped by aliens. But in a way this is sort of my point: the whole situation is just really confusing, so your priors should have a bunch of reference classes and some plausible ignorance priors, not the type of thing you have 10^-5 to 10^-9 odds against before you see the evidence.

[1]

tbc this thought experiment only works for people who think current x-risk is high.

David Johnston @ 2023-04-18T03:12 (+9)

I'm writing quickly because I think this is a tricky issue and I'm trying not to spend too long on it. If I don't make sense, I might have misspoken or made a reasoning error.

One way I thought about the problem (quite different to yours, very rough): variation in existential risk rate depends mostly on technology. At a wide enough interval (say, 100 years of tech development at current rates), change in existential risk with change in technology is hard to predict, though following Aschenbrenner and Xu's observations it's plausible that it tends to some equilibrium in the long run. You could perhaps model a mixture of a purely random walk and walks directed towards uncertain equilibria.

Also, technological growth probably has an upper limit somewhere, though quite unclear where, so even the purely random walk probably settles down eventually.

There's uncertainty over a) how long it takes to "eventually" settle down, b) how much "randomness" there is as we approach an equilibrium c) how quickly equilibrium is approached, if it is approached.

I don't know what you get if you try to parametrise that and integrate it all out, but I would also be surprised if it put an overwhelmingly low credence in a short sharp time of troubles.

I think "one-off displacement from equilibrium" probably isn't a great analogy for tech-driven existential risk.

I think "high and sustained risk" seems weird partly because surviving for a long period under such conditions is weird, so conditioning on survival usually suggests that risk isn't so high after all - so in many cases risk really does go down for survivors. But this effect only applies to survivors, and the other possibility is that we underestimated risk and we die. So I'm not sure that this effect changes conclusions. I'm also not sure how this affects your evaluation of your impact on risk - probably makes it smaller?

I think this observation might apply to your thought experiment, which conditions on survival.

Linch @ 2023-04-18T03:48 (+9)

Appreciate your comments!

(As an aside, it might not make a difference mathematically, but numerically one possible difference between us is that I think of the underlying unit to be ~logarithmic rather than linear)

Also, technological growth probably has an upper limit somewhere, though quite unclear where, so even the purely random walk probably settles down eventually.

Agreed, an important part of my model is something like nontrivial credence in a) the technological completion conjecture and b) there not being "that many" technologies lying around to be discovered. So when I zoom in and think about technological risks, a lot of my (proposed) model is about a) the underlying distribution of scary vs. world-saving technologies, b) whether/how much the world is prepared for each scary technology as it appears, and c) how sharply the lethality of each new scary technology drops off, conditional upon survival in the previous timestep.

I think "high and sustained risk" seems weird partly because surviving for a long period under such conditions is weird, so conditioning on survival usually suggests that risk isn't so high after all - so risk really does go down for survivors. But this effect only applies to the fraction who survive, so I'm not sure that it changes conclusions

I think I probably didn't make the point well enough, but roughly speaking, you only care about worlds where you survive, so my guess is that you'll systematically overestimate longterm risk if your mixture model doesn't update on survival at each time step to be evidence that survival is more likely on future time steps. But you do have to be careful here.
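
The point about updating on survival can be sketched with the simplest possible mixture: two hypothetical worlds with constant per-century risk, $r_{\text{high}} = 0.2$ and $r_{\text{low}} = 0.001$, and a 50/50 prior. These numbers are assumptions for illustration only. Each century survived is evidence for the low-risk world, so the forecast risk for the next century falls, though, as noted above, this describes only the worlds in which we survive.

```python
def posterior_high_risk(prior_high: float, r_high: float, r_low: float,
                        centuries_survived: int) -> float:
    """P(high-risk world | survived k centuries) in a two-world mixture."""
    weight_high = prior_high * (1 - r_high) ** centuries_survived
    weight_low = (1 - prior_high) * (1 - r_low) ** centuries_survived
    return weight_high / (weight_high + weight_low)


def forecast_risk(prior_high: float, r_high: float, r_low: float,
                  centuries_survived: int) -> float:
    """Mixture-weighted risk for the next century after k survived centuries."""
    p = posterior_high_risk(prior_high, r_high, r_low, centuries_survived)
    return p * r_high + (1 - p) * r_low


for k in (0, 1, 5, 10, 20):
    print(f"after {k:>2} centuries survived: P(high-risk world) = "
          f"{posterior_high_risk(0.5, 0.2, 0.001, k):.3f}, "
          f"next-century risk = {forecast_risk(0.5, 0.2, 0.001, k):.4f}")
```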

I'm also not sure how this affects your evaluation of your impact on risk - probably makes it smaller?

Yeah I think this is true. A friend brought up this point; roughly, the important parts of your risk reduction come from temporarily vulnerable worlds. But if you're not careful, you might "borrow" your risk reduction from permanently vulnerable worlds (giving yourself credit for high microextinctions averted), and also "borrow" your EV_of_future from permanently invulnerable worlds (giving yourself credit for a share of an overwhelmingly large future). But to the extent those are different and anti-correlated worlds (which accords with David's original point, just a bit more nuanced), your actual EV can be a noticeably smaller slice.

Vasco Grilo @ 2023-05-22T15:36 (+7)

Hi David,

- If we expect regular oscillation or time symmetric random walks, then I think we usually get H3 (integrated oscillation = high risk; the lack of risk in the past suggests that period of oscillation is long)

We can still get H4 if the amplitude of the oscillation or random walk decreases over time, right?

- If we expect rare, sudden changes then we get H3

Only if the sudden change has a sufficiently large magnitude, right?

David Johnston @ 2023-05-23T11:47 (+3)

We can still get H4 if the amplitude of the oscillation or random walk decreases over time, right?

The average needs to fall, not the amplitude. If we're looking at risk in percentage points (rather than, say, logits, which might be a better parametrisation), small average implies small amplitude, but small amplitude does not imply small average.

Only if the sudden change has a sufficiently large magnitude, right?

The large magnitude is an observation - we have seen risk go from quite low to quite high over a short period of time. If we expect such large magnitude changes to be rare, then we might expect the present conditions to persist.

Vasco Grilo @ 2023-05-24T16:27 (+2)

Thanks for the clarifications!

The average needs to fall, not the amplitude. If we're looking at risk in percentage points (rather than, say, logits, which might be a better parametrisation), small average implies small amplitude, but small amplitude does not imply small average.

Agreed. I meant that, if the risk is usually quite low (e.g. 0.001 % per century), but sometimes jumps to a high value (e.g. 1 % per century), the cumulative risk (over all time) may still be significantly below 100 % (e.g. 90 %) if the magnitude of the jumps decreases quickly, and risk does not stay high for long.

The large magnitude is an observation - we have seen risk go from quite low to quite high over a short period of time. If we expect such large magnitude changes to be rare, then we might expect the present conditions to persist.

Why should we expect the present conditions to persist if we expect large magnitude changes to be rare?

David Johnston @ 2023-05-24T22:17 (+3)

Because we are more likely to see no big changes than to see another big change.

if the risk is usually quite low (e.g. 0.001 % per century), but sometimes jumps to a high value (e.g. 1 % per century), the cumulative risk (over all time) may still be significantly below 100 % (e.g. 90 %) if the magnitude of the jumps decreases quickly, and risk does not stay high for long.

I would call this model “transient deviation” rather than “random walk” or “regular oscillation”

t_adamczewski @ 2022-08-17T12:05 (+28)

Thanks for this article! In my view, it's a really good contribution to the debate, and the issues it raises are under-rated by longtermists.

I do feel uneasy about being given joint credit with Toby Ord for the model (and since this is now public I want to say so publicly). Though I may have made an expository contribution, the model is definitely the work of Ord.

In my undergraduate days of 2017, based on Ord's unpublished draft, I wrote this article. I think my contributions were:

- A more explicit (or, depending on your perspective, plodding and equation-laden) exposition of the Ord model

- Longer discussion (around 7,000 words vs Ord's 3,000)

- Some extensions of the model

I now view the extensions as far less useful than the exposition of the core model proposed by Ord; the extensions were not worth mathematising. At the time I had a fondness for them -- today I look back upon that as somewhat sophomoric. Indeed, this article uses none of my extensions (at least as of the last time I read a draft). So I don't think it's correct to say the model is even in part due to me.

By the way, Ord's model has now been published as appendix E of The Precipice, but in even more summary form than the unpublished document I looked at in 2017. This shortening might lead someone looking at the published record today to underestimate Ord's contribution and overestimate mine. My article does credit him for everything that is his, and so is a good source for the genealogy of the idea.

David Thorstad @ 2022-08-17T12:23 (+10)

Thanks Tom! It's really good to hear from you.

I just wanted to express a perspective that perhaps Tom is being overly modest about his own contributions.

As someone who has read carefully through both Tom and Toby's work, I can say that I found both Tom and Toby's work stimulating in a complementary way, and that I would not have learned as much as I have by reading either article alone.

MichaelStJules @ 2022-08-13T14:57 (+24)

Many EAs endorse two claims about existential risk. First, existential risk is currently high:

"(Existential Risk Pessimism) Per-century existential risk is very high."

For example, Toby Ord (2020) puts the risk of existential catastrophe by 2100 at 1/6, and participants at the Oxford Global Catastrophic Risk Conference in 2008 estimated a median 19% chance of human extinction by 2100 (Sandberg and Bostrom 2008). Let’s ballpark Pessimism using a 20% estimate of per-century risk.

This isn't really important for the implications of the post, but is it really true that many EAs endorse Existential Risk Pessimism (rather than something like Time of Perils)? I rarely see discussion of per-period existential risk estimates. The estimates you cite here are for existential risk this century (by 2100), not per century. I doubt those coming up with the figures you cite believe per century risk is about 20% on average (median), where this represents the probability of a new existential catastrophe (or multiple) starting in the given century, conditional on none before.
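The distinction matters because a constant per-century risk compounds. Under a constant risk r, the chance of surviving N centuries is (1 - r)^N. The expected wait until the first catastrophe is 1/r centuries, which is the mean of a geometric distribution. The sketch below applies this to the post's ballpark of r = 20 %. It illustrates what Existential Risk Pessimism would imply if read as a per-century claim. It is not a claim about what the cited authors believe.

```python
def survival_probability(per_century_risk, centuries):
    """Chance of no catastrophe over `centuries`, assuming the same
    conditional risk applies in every century."""
    return (1.0 - per_century_risk) ** centuries


def expected_centuries(per_century_risk):
    """Mean number of centuries up to and including the first catastrophe
    (geometric distribution on 1, 2, 3, ...)."""
    return 1.0 / per_century_risk


r = 0.2  # the post's ballpark figure, read as a constant per-century risk
for n in (1, 5, 10, 50):
    print(n, round(survival_probability(r, n), 6))
print("expected centuries:", expected_centuries(r))
```

On this reading, humanity could expect only about five more centuries, and the probability of never suffering a catastrophe is zero. A one-century estimate such as Ord's 1/6 by 2100 does not by itself commit anyone to that view.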

There was some discussion of per-period risks here and here.

matthew.vandermerwe @ 2022-08-15T14:28 (+24)

I doubt those coming up with the figures you cite believe per century risk is about 20% on average

Indeed! In The Precipice, Ord estimates a 50% chance that humanity never suffers an existential catastrophe (p.169).

David Thorstad @ 2022-08-13T16:02 (+4)

Thanks Michael -- you've found the terminological bane of my existence! (And the links are very helpful).

Of course it's quite true that many Pessimists hold a Time of Perils View. One way to read the upshot of the paper is that Pessimists should probably hold a Time of Perils View.

The terminological problem is that, as you've said, my statement of Existential Risk Pessimism implies (or at least sneakily fails to rule out) that I'm talking about risk in all centuries, in which case Existential Risk Pessimism is strictly speaking incompatible with the Time of Perils View.

The natural solution to this problem is to let Existential Risk Pessimism be defined as a view about risk in this century. I tried that in the first draft. The problem I ran into with this terminology is that I kept having to mention the assumption of constant risk throughout Sections 2-3.3. And it made the paper very clunky to say this so many times. It also kinda ruined the punchline, because by the time I said the words "constant risk" a few times, everyone knew what the solution in Section 3.4 would be.

Readers complained so much about this that I changed it to the current terminology. As you mention, the current terminology is ... a bit misleading.

I really don't know how to fix this. I feel like both ways of defining Existential Risk Pessimism have drawn a lot of complaints from readers.

Surely there is a better third option, but I don't know what it is. Do you have any thoughts?

MichaelStJules @ 2022-08-13T16:37 (+6)

I would just change the part of the intro I quoted to speak of this view as a possible view someone might hold, without attributing the view to Ord or those others currently cited, and without claiming many EAs actually hold it. If you could find others to attribute the view to, that would be good.

You could continue to cite the same people, but clearly distinguish the hypotheses: "While these are claims only about risks this century, what if a high risk probability persisted and compounded each century? Let's call this hypothesis Existential Risk Pessimism:"

matthew.vandermerwe @ 2022-09-05T08:55 (+19)

Crossposting Carl Shulman's comment on a recent post 'The discount rate is not zero', which is relevant here:

It's quite likely the extinction/existential catastrophe rate approaches zero within a few centuries if civilization survives, because:

- Riches and technology make us comprehensively immune to natural disasters.

- Cheap ubiquitous detection, barriers, and sterilization make civilization immune to biothreats

- Advanced tech makes neutral parties immune to the effects of nuclear winter.

- Local cheap production makes for small supply chains that can regrow from disruption as industry becomes more like information goods.

- Space colonization creates robustness against local disruption.

- Aligned AI blocks threats from misaligned AI (and many other things).

- Advanced technology enables stable policies (e.g. the same AI police systems enforce treaties banning WMD war for billions of years), and the world is likely to wind up in some stable situation (bouncing around until it does).

If we're more than 50% likely to get to that kind of robust state, which I think is true, and I believe Toby does as well, then the life expectancy of civilization is very long, almost as long on a log scale as with 100%.
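The "almost as long on a log scale" claim follows because expected lifetime is a probability-weighted mixture. When the long-lived branch dominates, a credence p in it lowers the expectation by at most a factor of about 1/p relative to the 100 % case. On a log10 scale that is a drop of about log10(2), or roughly 0.3, at p = 0.5. The two lifetimes below are hypothetical placeholders, chosen only to make the orders of magnitude visible.

```python
import math


def expected_lifetime(p_robust, robust_years, fragile_years):
    """Expected lifetime of civilization as a two-branch mixture."""
    return p_robust * robust_years + (1.0 - p_robust) * fragile_years


# Hypothetical lifetimes chosen only for illustration.
ROBUST_YEARS = 1e9   # reach a stable, low-risk state
FRAGILE_YEARS = 1e3  # never reach it

for p in (1.0, 0.5, 0.1):
    years = expected_lifetime(p, ROBUST_YEARS, FRAGILE_YEARS)
    print(f"p = {p:.1f}: log10(expected years) = {math.log10(years):.2f}")
```

With these numbers, the log10 expectation drops by about 0.3 at p = 0.5 and by about 1 at p = 0.1. Both drops are small next to the six orders of magnitude separating the two branches.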

Your argument depends on 99%+++ credence that such safe stable states won't be attained, which is doubtful for 50% credence, and quite implausible at that level. A classic paper by the climate economist Martin Weitzman shows that the average discount rate over long periods is set by the lowest plausible rate (as the possibilities of high rates drop out after a short period and you get a constant factor penalty for the probability of low discount rates, not exponential decay).
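The Weitzman result can be illustrated numerically. Suppose the true continuous hazard rate per century is one of several candidate rates r_i, held with credences p_i. The expected survival factor after t centuries is then A(t) = sum of p_i * exp(-r_i * t), and the implied certainty-equivalent rate is R(t) = -ln A(t) / t. As t grows, the high-rate terms vanish, and R(t) approaches the lowest rate that has non-zero credence. The sketch reads the discount rate as such a hazard rate. The rates and credences are hypothetical.

```python
import math


def certainty_equivalent_rate(rates, credences, t):
    """Effective constant rate implied by averaging survival factors
    exp(-r * t) over uncertain rates r (Weitzman-style)."""
    factor = sum(p * math.exp(-r * t) for r, p in zip(rates, credences))
    return -math.log(factor) / t


# Hypothetical: 50 % credence in a persistent hazard rate of 0.2 per century,
# 50 % credence in reaching a state with a hazard rate of 0.001 per century.
RATES = [0.2, 0.001]
CREDENCES = [0.5, 0.5]

for t in (1, 10, 100, 1_000, 10_000):
    rate = certainty_equivalent_rate(RATES, CREDENCES, t)
    print(f"t = {t:>6} centuries: effective rate {rate:.5f} per century")
```

The effective rate falls from about 0.1 per century at t = 1 toward 0.001, the lowest candidate rate. This illustrates the comment's point that a 50 % credence in a stable low-risk state dominates the long-run calculation.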

Owen Cotton-Barratt @ 2022-08-13T15:43 (+16)

You say:

Arguments for the Time of Perils Hypothesis which do not appeal to AI are not strong enough to ground the relevant kind of Time of Perils Hypothesis

What you've shown is that some very specific alternate arguments aren't enough to ground the relevant kind of Time of Perils hypothesis. But the implicature of your statement here is that one needs to appeal to AI to ground the relevant hypothesis, which I think (1) you haven't shown, and (2) is most likely false (though I think it's easier to ground with AI).

I guess that with a broad enough conception of "AI", the statement would be true. I think to get stability against political risks one needs systems that are extremely robust / internally error-correcting. It's my view that one could likely build such systems out of humans organized in novel ways with certain types of information flow in the system, but I think that's so far out of reach at the moment that the social technology to enable it could conceivably be called "AI".

David Thorstad @ 2022-08-13T15:53 (+6)

Thanks Owen! It's good to hear from you.

I'd definitely like to make sure that I consider the best arguments for the Time of Perils Hypothesis. Did you have some other arguments in mind?

Owen Cotton-Barratt @ 2022-08-13T16:10 (+18)

I haven't laid out a particular argument because I think the AI argument is by some way the strongest, and I haven't done the work to flesh out the alternatives.

I guess I thought you were making a claim like "the only possible arguments that will work need to rest on AI". If instead you're making a claim like "among the arguments people have cleanly articulated to date, the only ones that appear to work are those which rest on AI" that's a much weaker claim (and I think it's important to disambiguate that that's the version you're making, as my guess is that there are other arguments which give you a Time of Perils with much lower probability than AI but still significant probability; but that AFAIK nobody's worked out the boundaries of what's implied by the arguments).

Arepo @ 2022-08-13T13:02 (+15)

I think this was a fantastic post - interesting and somehow, among all that maths, quite fun to read :P I'm about to start working on a related research project, so if you'd be willing to talk through this in person I'd love to arrange something. I'll PM you my contact details.

A couple of stylistic suggestions:

Change the title! My immediate response was 'oh god, another doom and gloom post', and one friend already mentioned to me he was going to put off reading it 'until he was in too good a mood'. I think that's doing it an injustice.

Label the table axes explicitly - I found them hard to parse, even having felt like I grasped the surrounding discussion.

Substantively, my main disagreement is on the space side. Firstly, I agree with weeatquince that in 20 centuries we could get way beyond one offworld colony. Elon Musk's goal last I heard was to get a self-sustaining Mars settlement by the 2050s, and if we get that far I'd expect the 'forcing function' he/Zubrin describe to incentivise faster colonisation of other habitats. Even assuming Musk is underestimating the challenge by a factor of 2-3, such that it will take a century, then, if there aren't any hard limits like insuperable health problems associated with microgravity, a colonisation rate of 1 new self-sustaining colony per colony per century, or $2^n$ total colonies, where $n$ is the number of centuries from now, seems quite plausible to me! At least, that holds up to the point where we've started using up all the rocky bodies in the solar system (but we'll hopefully be sending colonisation missions to other star systems by then, which would necessarily be capable of self-sufficiency even if our whole system is gone by the time they get there).

Secondly, I think even in the short term it's a better defence than you give it credit for. Engineered pandemics would have a much tougher time both reaching Mars without advance warning from everyone dying on Earth, and then spreading around a world which is presumably going to be heavily airlocked and will generally have much more active monitoring and control of its environment. Obviously it's hard to say much about 'other/unforeseen anthropogenic risks', but we should presumably discount some of their risk for similar reasons. More importantly IMO, the 'green' probability estimates you cite are for those things directly wiping out humanity, not the risk of them causing some major catastrophe, the latter of which I would guess is in total much higher than the risk of catastrophe from the red box. And IMO the EA movement tends to overestimate the probability that we can fully rebuild (and then reach the stars) from civilisational collapse. If you put that probability at 90-99%, then such catastrophes are essentially a 0.01-0.1x multiplier on the loss of value of collapse, so they could still end up being a major x-risk factor if the triggering events are sufficiently likely (this is the focus of the project I mentioned).
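The multiplier comes from a simple expected-value step. Suppose a civilisational collapse is followed by full recovery with probability q. As a simplifying assumption, suppose also that non-recovery is as bad as extinction and that recovery costs nothing in the long run. A collapse risk c then counts as c(1 - q) of extinction-equivalent risk. The function name and the collapse probability below are hypothetical.

```python
def extinction_equivalent_risk(p_collapse, p_recover):
    """Collapse risk converted into extinction-equivalent risk, assuming
    non-recovery is as bad as extinction and recovery loses nothing."""
    return p_collapse * (1.0 - p_recover)


P_COLLAPSE = 0.1  # hypothetical chance of a civilisation-ending catastrophe

for q in (0.9, 0.99):
    multiplier = 1.0 - q
    print(f"recovery {q:.0%}: multiplier {multiplier:.2f}, "
          f"extinction-equivalent risk {extinction_equivalent_risk(P_COLLAPSE, q):.4f}")
```

A 90-99 % recovery probability therefore scales collapse risk by 0.1 to 0.01. If collapse is much likelier than direct extinction, the scaled figure can still be comparable to direct extinction risk.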

David Thorstad @ 2022-08-13T13:49 (+13)

Thanks! Super-interesting. Please do PM me your contact details.

I'll try to type up a proper reply within a couple of hours. I'm currently being chased out of my house by the 90+-degree heat in England. Not good with no A/C. I think I'll be able to add something to the discussion once I've gone somewhere cool and downed a pint of ice cream.

Edit: Okay, semi-proper response below.

The presentational suggestions are extremely helpful. Many thanks!

1. How far could we go?: I'm really curious to hear more about how quickly you think we might settle the galaxy, and how we could go about doing that. I've heard similar views to yours from some people at GPI, for example Tomi Francis.

I guess I tend to think that settling $2^n$ planets in $n$ centuries might be a bit fast, especially in the short-term. This would put us at just over a million planets in twenty centuries. And while that might not sound like a lot, let's remember that the closest nearby star (Alpha Centauri) is more than four light years away. So if we're going to make it to even a few dozen planets in 2,000 years, we need to travel several light years, and drop enough people and supplies to make the settlement viable and worthwhile. And to make it to a million planets, we'd have to rinse and repeat many times.
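The figures here can be reproduced directly. Doubling once per century, the hypothetical rate from the comment above, gives 2^20 = 1,048,576 colonies after twenty centuries. Separately, a ship moving at a constant fraction f of light speed needs at least d / f years to cover d light years. The sketch ignores acceleration, relativistic effects, and the time needed to found each colony. The speed of 10 % of light speed is purely hypothetical.

```python
def colonies_after(centuries, doublings_per_century=1):
    """Total colonies if every colony founds one new colony per doubling
    period, starting from a single world."""
    return 2 ** (centuries * doublings_per_century)


def min_travel_years(distance_ly, fraction_of_c):
    """Lower bound on trip time at a constant speed (no acceleration phase)."""
    return distance_ly / fraction_of_c


print(f"{colonies_after(20):,} colonies after 20 centuries")
print(f"{min_travel_years(4.0, 0.1):.0f}+ years to go 4 light years at 0.1c")
```

Even at that speed, each hop to a nearby star takes decades. Sustaining one doubling per century would therefore require every colony to complete a multi-light-year voyage and found a new self-sustaining settlement roughly every hundred years.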

I certainly wouldn't want to rule out that possibility in principle. And I have to admit that as a philosopher, I'm a bit under-qualified to pronounce on the future of space travel. But do you have some thoughts about how we might get this far in the near future?