Why Neuron Counts Shouldn't Be Used as Proxies for Moral Weight

By Adam Shriver @ 2022-11-28T12:07 (+297)

This is a linkpost to https://docs.google.com/document/d/1p50vw84-ry2taYmyOIl4B91j7wkCurlB/edit?rtpof=true&sd=true

Key Takeaways

- Several influential EAs have suggested using neuron counts as rough proxies for animals’ relative moral weights. We challenge this suggestion.

- We take the following ideas to be the strongest reasons in favor of a neuron count proxy:

  - neuron counts are correlated with intelligence and intelligence is correlated with moral weight,

  - additional neurons result in “more consciousness” or “more valenced consciousness,” and

  - increasing numbers of neurons are required to reach thresholds of minimal information capacity required for morally relevant cognitive abilities.

- However:

  - with regard to intelligence, we can question both how strongly neuron counts correlate with intelligence and whether greater intelligence in fact predicts greater moral weight;

  - many ways of arguing that more neurons result in more valenced consciousness seem incompatible with our current understanding of how the brain is likely to work; and

  - there is no straightforward empirical evidence or compelling conceptual argument indicating that relative differences in neuron counts within or between species reliably predict welfare-relevant functional capacities.

- Overall, we suggest that neuron counts should not be used as the sole proxy for moral weight, but they cannot be dismissed entirely. Rather, neuron counts should be combined with other metrics in an overall weighted score that includes information about whether different species have welfare-relevant capacities.

Introduction

This is the fourth post in the Moral Weight Project Sequence. The aim of the sequence is to provide an overview of the research that Rethink Priorities conducted between May 2021 and October 2022 on interspecific cause prioritization—i.e., making resource allocation decisions across species. The aim of this post is to summarize our full report on the use of neuron counts as proxies for moral weights. The full report can be found here and includes more extensive arguments and evidence.

Motivations for the Report

Can the number of neurons an organism possesses, or some related measure, be used as a proxy for deciding how much weight to give that organism in moral decisions? Several influential EAs have suggested that the answer is “Yes” in cases that involve aggregating the welfare of members of different species (Tomasik 2013, MacAskill 2022, Alexander 2021, Budolfson & Spears 2020).

For the purposes of aggregating and comparing welfare across species, neuron counts are proposed as multipliers. In general, the idea goes, as the number of neurons an organism possesses increases, so too does some morally relevant property related to the organism’s welfare. Usually this property is assumed to increase linearly with the number of neurons, though other scaling functions are possible.
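To make the scaling assumption concrete, here is a minimal Python sketch of a neuron-count multiplier. The function name and the power-law form `(neurons / reference_neurons) ** exponent` are illustrative assumptions, not a formula taken from any of the cited authors: an exponent of 1 gives the linear scaling described above, an exponent below 1 is one example of the "other scaling functions," and an exponent of 0 ignores neuron counts entirely.

```python
def neuron_count_multiplier(neurons: float, reference_neurons: float,
                            exponent: float = 1.0) -> float:
    """Weight of one individual relative to a reference species (hypothetical helper).

    exponent=1.0 is linear scaling; 0 < exponent < 1 gives diminishing
    returns; exponent=0.0 makes every species count equally.
    """
    if neurons <= 0 or reference_neurons <= 0:
        raise ValueError("neuron counts must be positive")
    return (neurons / reference_neurons) ** exponent


# A species with 4x the reference species' neurons, under three scalings.
for exponent in (1.0, 0.5, 0.0):
    print(exponent, neuron_count_multiplier(4e8, 1e8, exponent))
# 1.0 4.0
# 0.5 2.0
# 0.0 1.0
```

The choice of exponent matters as much as the neuron counts themselves: the same 4x difference in neurons yields a 4x, 2x, or 1x multiplier depending on a scaling assumption that neuron counts alone cannot settle.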

Scott Alexander of Slate Star Codex has a passage illustrating how weighting by neuron count might work:

“Might cows be "more conscious" in a way that makes their suffering matter more than chickens? Hard to tell. But if we expect this to scale with neuron number, we find cows have 6x as many cortical neurons as chickens, and most people think of them as about 10x more morally valuable. If we massively round up and think of a cow as morally equivalent to 20 chickens, switching from an all-chicken diet to an all-beef diet saves 60 chicken-equivalents per year.” (2021)

This methodology has important implications for assigning moral weight. For example, the average number of neurons in a human (86,000,000,000) is roughly 390 times the average number in a chicken (220,000,000), so we would treat the welfare units of humans as roughly 390 times more valuable. If we accepted the strongest version of the neuron count hypothesis, determining the moral weight of different species would simply be a matter of using the best current techniques (such as those developed by Herculano-Houzel) to determine the average number of neurons in different species.
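The arithmetic behind that figure, as a minimal Python sketch using only the two averages quoted above: the exact ratio is about 390.9, which the text rounds to 390. `chicken_equivalents` is a hypothetical helper showing how the ratio would be applied as a linear multiplier.

```python
# Average neuron counts quoted in the text.
HUMAN_NEURONS = 86_000_000_000
CHICKEN_NEURONS = 220_000_000

# Under linear neuron-count weighting, the multiplier is simply the ratio.
human_to_chicken = HUMAN_NEURONS / CHICKEN_NEURONS
print(f"{human_to_chicken:.1f}")  # 390.9


def chicken_equivalents(human_welfare_units: float) -> float:
    """Convert human welfare units into chicken-equivalent units (linear weighting)."""
    return human_welfare_units * human_to_chicken
```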

Arguments Connecting Neuron Counts to Moral Weight

There are several practical advantages to using neuron counts as a proxy for moral weight. Neuron counts are quantifiable, they are in principle measurable, they correlate at least to some extent with cognitive abilities that are plausibly relevant for moral standing, and they correlate to some extent with our intuitions about the moral status of different species. What these practical advantages show is that, if it is the case that neuron counts are a good proxy for moral weight, that would be very convenient for us. But we still need an argument for why we should believe in the first place that neuron counts are in fact connected to moral weight in a reliable way.

There are very few explicit arguments explaining this connection. However, we believe the following possibilities are the strongest reasons in favor of connecting neuron counts to moral weight: (1) neuron counts are correlated with intelligence and intelligence is correlated with moral weight, (2) additional neurons result in “more consciousness” or “more valenced consciousness” and (3) increasing numbers of neurons are required to reach thresholds of minimal information capacity required for morally relevant cognitive abilities. In a separate report in this sequence, we consider the possibility that (4) greater numbers of neurons lead to more “conscious subsystems” that have associated moral weight.

Neuron Counts As a Stand-In For Information Processing Capacity

Before summarizing our reservations about these arguments, it's important to flag a common assumption: that greater information processing is ultimately what matters for moral weight, with “brain size” or “neuron count” taken as indicators of information-processing ability. However, brain size measured by mass or volume turns out not to directly predict the number of neurons in an organism, both because neurons themselves can be different sizes and because brains can contain differing proportions of neurons and connective tissue. Moreover, different types of species face divergent evolutionary pressures that can influence neuron size. For example, avian species tend to have smaller neurons because they need to keep weight down in order to fly. Aquatic mammals, by contrast, face less pressure than land mammals from the constraints of gravity, and as such can have larger brains and larger neurons without as significant an evolutionary cost.

Moreover, the raw number of neurons an organism possesses does not tell the full story about information-processing capacity. That’s because the number of computations that can be performed over a given amount of time in a brain also depends upon many other factors, such as (1) the number of connections between neurons, (2) the distance between neurons (with shorter distances allowing faster communication), (3) the conduction velocity of neurons, and (4) the refractory period, which indicates how much time must elapse before a given neuron can fire again. In some ways, these additional factors can actually favor smaller brains (Chittka 2009).
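The following toy Python sketch, with entirely invented parameter values and a hypothetical `Brain` class, illustrates how a brain with fewer neurons could still come out ahead once connections, refractory period, distance, and conduction velocity are taken into account. The "peak synaptic events per second" bound is a deliberately crude assumption (every neuron fires at its maximum rate and every spike reaches all of its synapses), not an accepted measure of information-processing capacity.

```python
from dataclasses import dataclass


@dataclass
class Brain:
    """Hypothetical brain; every number used with this class is invented."""
    name: str
    neurons: float
    synapses_per_neuron: float
    refractory_period_s: float      # minimum time before a neuron can fire again
    mean_distance_m: float          # typical distance a signal must travel
    conduction_velocity_m_s: float  # how fast signals travel along axons

    def peak_synaptic_events_per_s(self) -> float:
        # Crude upper bound: every neuron fires as often as its refractory
        # period allows, and every spike reaches all of its synapses.
        max_firing_rate_hz = 1.0 / self.refractory_period_s
        return self.neurons * self.synapses_per_neuron * max_firing_rate_hz

    def signal_latency_s(self) -> float:
        # Shorter distances and faster conduction mean faster communication.
        return self.mean_distance_m / self.conduction_velocity_m_s


big = Brain("big", neurons=2e9, synapses_per_neuron=1_000,
            refractory_period_s=0.004, mean_distance_m=0.05,
            conduction_velocity_m_s=10.0)
small = Brain("small", neurons=1e9, synapses_per_neuron=5_000,
              refractory_period_s=0.002, mean_distance_m=0.005,
              conduction_velocity_m_s=5.0)

brains = [big, small]
by_neurons = max(brains, key=lambda b: b.neurons).name
by_events = max(brains, key=lambda b: b.peak_synaptic_events_per_s()).name
print(by_neurons, by_events)  # big small
```

Here the brain with half as many neurons has the higher toy throughput and the shorter signal latency. Real brains would require measuring each of these factors, which, as noted below, is not currently possible for whole brains.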

So, neuron counts are not in fact perfect predictors of information-processing capacity, and the exact extent to which they are predictors is still to be determined. Importantly, although one initial practical consideration in favor of using neuron counts is that they are in principle measurable, when we move to overall information-processing capacity it is no longer the case that we can accurately measure this feature across different organisms, because measuring all of the relevant factors mentioned above for entire brains is currently not possible. With that in mind, let’s consider the three most promising arguments for using neuron counts as proxies for moral weights.

Neuron counts correlate with intelligence, and intelligence correlates with moral weight

Many people seem to believe, at least implicitly, that more intelligent animals have more moral weight. Thus they tend to think that humans have the most moral weight, that chimpanzees have more moral weight than most other animals, that dogs have more moral weight than goldfish, and so on.

However, as noted above, we might question how well neuron counts predict overall information-processing capacity and, thus, presumably, intelligence. We can, additionally, question whether intelligence truly influences moral weight.

Though there is not a large literature connecting neuron counts to sentience, welfare capacity, or valenced experience, there is a reasonably large scientific literature examining the connection of neuron counts to measures of intelligence in animals. The research is still ongoing and unsettled, but we can draw a few lessons from it.

First, it seems hard to deny that there’s one sense in which the increased processing power enabled by additional neurons correlates with moral weight, at least insofar as welfare-relevant abilities all seem to require at least some minimum number of neurons. Pains, for example, would seem to minimally require at least some representation of the body in space, some ability to quantify intensity, and some connections to behavioral responses, all of which require a certain degree of processing power. Like pains, each welfare-relevant functional capacity requires at least some minimum number of neurons.

But aside from needing a baseline number of neurons to cross certain morally relevant thresholds, things become less clear, at least on a hedonistic account of well-being where what matters is the intensity and duration of valenced experience. It seems conceptually possible to increase intelligence without increasing the intensity of experience, and similarly possible to imagine the intensity of experience increasing without a corresponding increase in intelligence. Furthermore, in humans we certainly do not tend to associate greater intelligence with greater moral weight. Most people would not think it’s acceptable to dismiss the pains of children, the elderly, or the cognitively impaired because they score lower on intelligence tests.

Finally, it’s worth noting that some people have proposed precisely the opposite intuition: that intelligence can blunt the intensity of certain emotional states, particularly suffering. My intense pain feels less bad if I can tell myself that it will be over soon. According to this account, supported by evidence of top-down cognitive influences on pain, our intelligence can sometimes provide us with tools for blunting the impact of particularly intense experiences, while other less cognitively sophisticated animals may lack these abilities.

More Neurons = More Valenced Consciousness?

One might think that adding neurons increases the overall “amount” of consciousness. In the larger report, we consider the following empirical arguments in favor of this claim.

There are studies that show increased volume of brain regions correlated with valenced experience, such as a study showing that cortical thickness in a particular region increased along with pain sensitivity. Do these studies demonstrate that more neurons lead to more intense valenced experiences? The problem with this interpretation is that there are studies showing correlations that work in the opposite direction, such as studies showing that increased pain is correlated with decreased brain volume in areas associated with pain. There is simply not a reliable relationship between brain volume and intensity of experience.

Similarly, there are many brain imaging studies that show that increased activation in particular brain regions is associated with increases in certain valenced states such as pleasure or pain, which might initially be thought to be evidence that “more active neurons” = “more experience.” However, neuroimaging researchers are highly invested in finding an objective biomarker for pain, and in being able to predict individual differences in pain responses. They have performed hundreds of experiments looking at brain activation during pain. The current consensus is that there is not yet a reliable way of identifying pain across all relevant conditions merely by looking at brain scans, and no leading neuroscientists have said anything that suggests that the mere number of neurons active in a particular region is predictive of “how much pain” a person might experience. It is, in general, the patterns of activation and how those activations are connected to observable outputs that matter most, rather than raw numbers of neurons involved.

Increasing numbers of neurons are required to reach thresholds of minimal information capacity required for morally relevant cognitive abilities

As noted above, it certainly is true that various capacities linked to intelligent behavior and welfare require some minimal degree of information-processing capacity. So might neuron counts be considered a proxy for moral weight simply in virtue of being predictive of how many morally relevant thresholds an organism has crossed?

To see the difficulty with this, consider the extremely impressive body of literature studying the cognitive capacities of bees. Bees have a relatively small number of neurons, yet have been found to be able to engage in sophisticated capacities that were previously thought to require large brains, including cognitive flexibility, cross-modal recognition of objects, and play behavior. In general, there is no well-defined relationship between the number of neurons and many possible capacities.

But without such a correlation, it would be unwise to use neuron counts as a proxy for having certain welfare-relevant capacities rather than simply testing whether the animals in fact have the capacities. And there are many such proposed capacities. Varner (1998) has suggested that reversal learning may be an important capacity related to moral status. Colin Allen (2004) has suggested that trace conditioning might be an important marker of the capacity for conscious experience. Birch, Ginsburg and Jablonka (2020) have raised the possibility that unlimited associative learning is the key indicator of consciousness. And Gallup (1970) famously proposed that mirror self-recognition was a necessary condition for self-awareness.

The relevance of each of these views should be considered and weighed on its own merits. If there’s a plausible argument connecting them to moral weight, we see no reason to discard them in favor of a unitary moral weight measure focused only on the number of neurons. As such, it would be far preferable to include measures of plausibly relevant proxies along with neuron counts rather than use neuron counts as the sole measure of moral weight.
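As a rough sketch of what such a combined measure could look like, the Python function below averages a neuron-count score with capacity markers like those listed above. The equal weights, the marker names, the scaling of each marker to [0, 1], and the rule for untested capacities are all hypothetical choices for illustration; they are not prescribed by the report.

```python
from typing import Mapping, Optional

# Hypothetical equal weights; the report does not specify any weights.
WEIGHTS = {
    "neuron_count_score": 0.2,
    "reversal_learning": 0.2,
    "trace_conditioning": 0.2,
    "unlimited_associative_learning": 0.2,
    "mirror_self_recognition": 0.2,
}


def combined_score(markers: Mapping[str, Optional[float]],
                   weights: Mapping[str, float] = WEIGHTS) -> float:
    """Weighted average of markers, each already scaled to [0, 1].

    Markers that are missing or None (not yet tested) are skipped and the
    remaining weights are renormalized, so an untested capacity counts
    neither for nor against a species.
    """
    total = 0.0
    used_weight = 0.0
    for name, weight in weights.items():
        value = markers.get(name)
        if value is None:
            continue
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
        total += weight * value
        used_weight += weight
    if used_weight == 0.0:
        raise ValueError("no markers available for this species")
    return total / used_weight


# Invented values for a hypothetical species; None means "not yet tested".
species_x = {
    "neuron_count_score": 0.1,
    "reversal_learning": 1.0,
    "trace_conditioning": 1.0,
    "unlimited_associative_learning": None,
    "mirror_self_recognition": 0.0,
}
print(round(combined_score(species_x), 3))  # 0.525
```

Skipping untested markers rather than scoring them as 0 is one design option among several; it avoids penalizing a species simply because nobody has run the relevant experiment, at the cost of making scores based on few markers noisier.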

Conclusion

To summarize, one primary attraction of using neuron counts as a metric is its measurability. However, the more measurable a metric we choose, the less accurate it is, and the more we prioritize accuracy, the less we are currently able to measure. As such, the primary attraction of neuron counts is an illusion that vanishes once we attempt to reach out and grasp it.

And the three strongest arguments in favor of using neuron counts as a proxy all fail. We can question how well neuron counts are correlated with intelligence and also doubt that intelligence is correlated with moral weight. Arguments suggesting that additional neurons result in “more consciousness” or “more valenced consciousness” appear to be inconsistent with and unsupported by current views of how the brain works. And though it is true that increasing numbers of neurons are required to reach thresholds of minimal information capacity required for morally relevant cognitive abilities, this seems most compatible with using neuron counts in a combined measure rather than using them as a proxy for other capacities that can be independently measured.

Given this, we suggest that the best role for neuron counts in an assessment of moral weight is as a weighted contributor, one among many, to an overall estimation of moral weight. Neuron counts likely provide some useful insights about how much information can be processed at a particular time, but it seems unlikely that they would provide more useful information individually than a function that takes them into account along with other plausible markers of sentience and moral significance. Developing such a function has its own difficulties, but is preferable to relying solely on one metric which deviates from other measures of sentience and intelligence.

Acknowledgments

This research is a project of Rethink Priorities. It was written by Adam Shriver. Thanks to Bob Fischer, Michael St. Jules, Jason Schukraft, Marcus Davis, Meghan Barrett, Gavin Taylor, David Moss, Joseph Gottlieb, Mark Budolfson, and the audience at the 2022 Animal Minds Conference at UCSD for helpful feedback on the report. If you’re interested in RP’s work, you can learn more by visiting our research database. For regular updates, please consider subscribing to our newsletter.

Hamish Doodles @ 2022-11-28T12:21 (+53)

TL;DR:

Reasons to think that "neuron count" correlates with "moral weight":

- Neuron counts correlate with our intuitions of moral weights

- "Pains, for example, would seem to minimally require at least some representation of the body in space, some ability to quantify intensity, and some connections to behavioral responses, all of which require a certain degree of processing power."

- "There are studies that show increased volume of brain regions correlated with valenced experience, such as a study showing that cortical thickness in a particular region increased along with pain sensitivity." (But the opposite is also true. See 6. below.)

Reasons to think that "neuron count" does NOT correlate with "moral weight":

- There's more to information processing capacity than neuron count. There's also:

- Number of neural connections (synapses)

- Distance between neurons (more distance -> more latency)

- Conduction velocity of neurons

- Neuron refractory period ("rest time" between neuron activations)

- "There's no consensus among people who study general intelligence across species that neuron counts correlate with intelligence"

- "It seems conceptually possible to increase intelligence without increasing the intensity of experience"

- Within humans, we don't think that more intelligence implies more moral weight. We don't generally give less moral weight to children, the elderly, or the cognitively impaired.

- The top-down cognitive influences on pain suggest that maybe intelligence actually mitigates suffering.

- There are "studies showing that increased pain is correlated with decreased brain volume in areas associated with pain"

- Hundreds of brain imaging experiments haven't uncovered any simple relationship between quantity of neurons firing and "amount of pain"

- Bees have small brains, but have "cognitive flexibility, cross-modal recognition of objects, and play behavior"

- There are competing ideas for correlates of moral weight/consciousness/self-awareness:

Adam Shriver @ 2022-11-28T12:41 (+16)

Not sure I agree with the "TL" part haha, but this is a pretty good summary. However, I'd also add that there's no consensus among people who study general intelligence across species that neuron counts correlate with intelligence (I guess this would go between 1d and 2) and also that I think the idea that more neurons are active during welfare-relevant experiences is a separate but related point to the idea that more brain volume is correlated with welfare-relevant experiences.

I'd also note that your TL/DR is a summary of the summary, but there are some additional arguments in the report that aren't included in the summary. For example, here's a more general argument against using neuron counts in the longer report: https://docs.google.com/document/d/1p50vw84-ry2taYmyOIl4B91j7wkCurlB/edit#bookmark=id.3mp7v7dyd88i

Hamish Doodles @ 2022-11-28T13:09 (+9)

Thanks for feedback.

Not sure I agree with the "TL" part haha

Well, yeah. Maybe. It's also about making the structure more legible.

there are some additional arguments in the report that aren't included in the summary.

Anything specific I should look at?

Adam Shriver @ 2022-11-28T14:34 (+2)

Anything specific I should look at?

My link above was to a bookmark in the report, which includes an additional argument.

Henry Howard @ 2022-11-28T21:51 (+7)

Thanks for doing this. Post is too long, could have been dot points. I want to see more TL;DRs like this

Lizka @ 2022-11-29T14:48 (+37)

I'm really grateful for this post and the resulting discussion (and I'm curating the post). I've uncritically used neuron counts as a proxy in informal discussions (more than once), and have seen them used in this way a lot more.

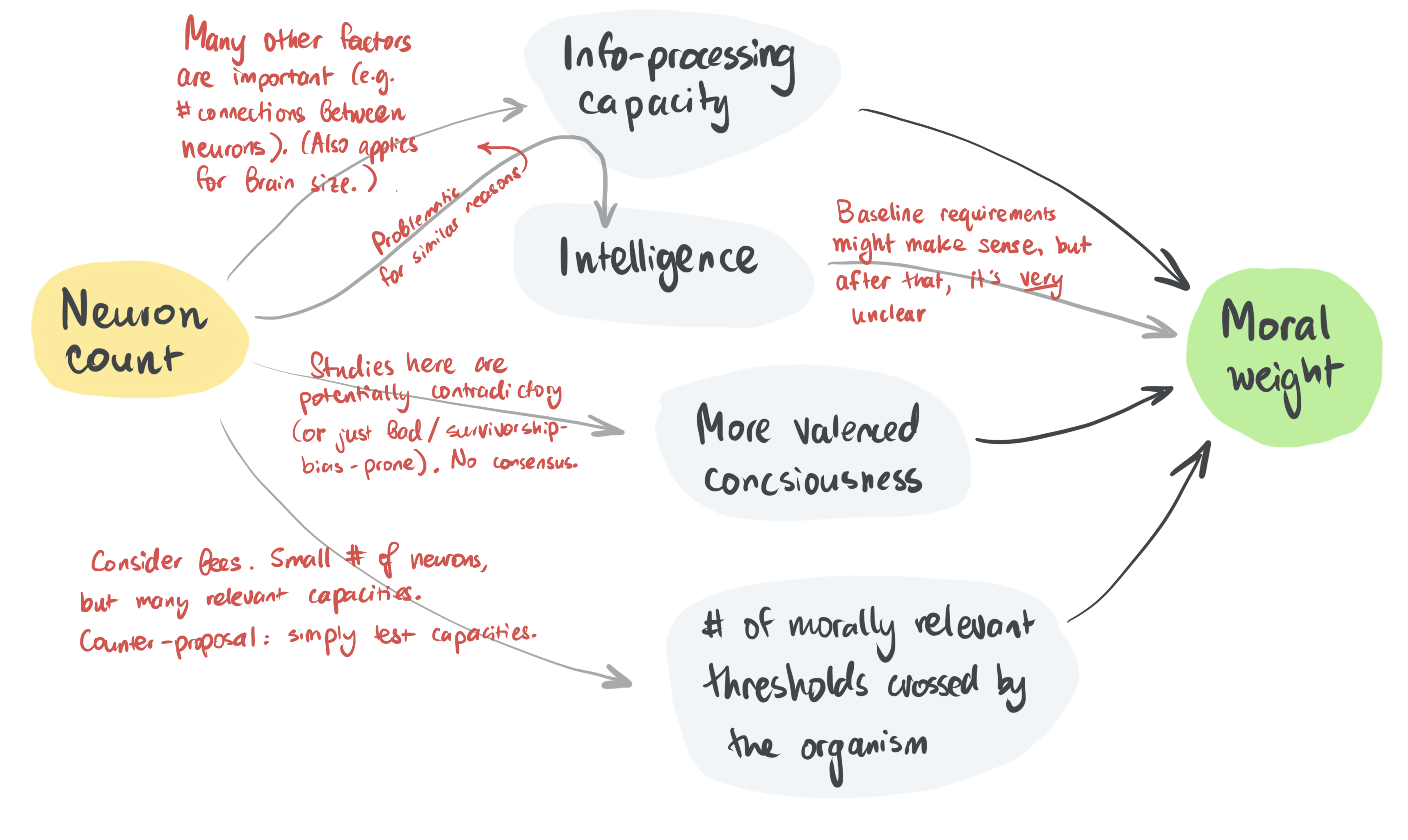

It helped me to draw out a diagram as I was reading this post (would appreciate corrections! although I probably won't spend time trying to make the diagram nicer or cleaner). My understanding is that the post sketched out the rough case for neuron counts as a proxy for moral weight as predictors of the grey properties below (information-processing capacity, intelligence, extent of valenced consciousness, and the number of morally relevant thresholds crossed by the organism), and then disputed the (predictive power of the) arrows I've greyed out and written on.

Adam Shriver @ 2022-11-29T16:52 (+7)

Wow, this is really cool, Lizka, thanks! I think it's a really nice visualization of the post and report. I would say, in regards to the larger argument, that @lukeprog is right that hidden qualia/conscious subsystems is another key route people try to take between neuron count and moral weight, so the full picture of the overall debate would probably need to include that. (and again, RP's report on that should be published next week).

Monica @ 2022-11-28T20:34 (+29)

I really appreciate this post and the conclusion here seems very reasonable. As someone who is personally guilty of using neuron counts as a sole proxy for moral weight, I would love to include additional metrics that more closely proxy something like capacity for suffering and pleasure. However, my problem is that while the metrics mentioned (mirror-test, trace conditioning, unlimited associative learning, reversal learning) might be more accurate proxies, they are (as far as I can tell) not available for a wide variety of species. For me, the main goal of employing these moral weights is to get a framework that decisionmakers can use for evaluating the impact of any project. I am particularly interested in government cost-benefit analyses, where the ideal use case would be to have a spreadsheet where government economists could just plug in available proxies for moral weight and get an estimated valuation for suffering reduction for an individual of a particular species. Neuron counts are nice for this because you can pretty easily find an estimated neuron count for almost any species. With this issue in mind,

- Are you aware of any papers/databases that have a list of species for which any of the four recommended factors have been tested and the results? It seems, for example, that scientists make headlines when they find a species that passes the mirror test but I can't tell which species have "failed" it versus which have not been tested.

- Other factors that are widely available for many species include brain mass, body mass, brain-to-body mass ratio, cortical neurons, whether the animal has any particular brain/anatomical structure, class/order, etc. It sounds like maybe the ratio of cortical neurons to brain size might be a reasonable proxy based on the section on processing speed--would you agree that would be an improvement over just neurons? Do any of these other characteristics stand out as plausible proxies?

Bob Fischer @ 2022-11-28T20:58 (+16)

Hi Monica! We hear you about wanting a table with those results. We've tried to provide one here for 11 farmed species: https://forum.effectivealtruism.org/posts/tnSg6o7crcHFLc395/the-welfare-range-table

We tend to think that if the goal is to find a single proxy, something like encephalization quotient might be the best bet. It's imperfect in various ways, but at least it corrects for differences in body size, which means that it doesn't discount many animals nearly as aggressively as neuron counts do. (While we don't have EQs for every species of interest, they're calculable in principle.)
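For readers who want to compute EQ themselves, here is a minimal Python sketch. The defaults assume Jerison's mammalian fit, in which expected brain mass is 0.12 × (body mass)^(2/3) with both masses in grams; published EQ values use different constants for different reference groups (birds, primates, and so on), so `k` and `exponent` should be treated as assumptions to check against whatever source you use. The example animal is hypothetical.

```python
def encephalization_quotient(brain_mass_g: float, body_mass_g: float,
                             k: float = 0.12, exponent: float = 2 / 3) -> float:
    """Actual brain mass divided by the brain mass expected for the body mass.

    Defaults assume Jerison's mammalian fit (expected = 0.12 * body^(2/3),
    masses in grams); other taxa need other constants.
    """
    if brain_mass_g <= 0 or body_mass_g <= 0:
        raise ValueError("masses must be positive")
    expected_brain_mass_g = k * body_mass_g ** exponent
    return brain_mass_g / expected_brain_mass_g


# Hypothetical animal: 1 kg body, 24 g brain; expected brain mass is about 12 g.
print(round(encephalization_quotient(24.0, 1000.0), 2))  # 2.0
```

Because the expected brain mass grows with body size, EQ divides out much of the body-size effect that makes raw neuron counts discount small animals so steeply.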

Finally, we've also developed some models to generate values that can be plugged into cost-benefit analyses. We'll post those in January. Hope they're useful!

Monica @ 2022-11-28T21:20 (+2)

Thank you, this is very helpful and I definitely agree that EQs are available/practical enough to use in most cases. Really looking forward to seeing the new models in January!

lukeprog @ 2022-11-28T20:14 (+17)

Re: more neurons = more valenced consciousness, does the full report address the hidden qualia possibility? (I didn't notice it at a quick glance.) My sense was that people who argue for more neurons = more valenced consciousness are typically assuming hidden qualia, but your objections involving empirical studies are presumably assuming no hidden qualia.

Adam Shriver @ 2022-11-28T20:35 (+13)

We have a report on conscious subsystems coming out I believe next week, which considers the possibility of non-reportable valenced conscious states.

Also (speaking only about my own impressions), I'd say that while some people who talk about neuron counts might be thinking of hidden qualia (eg Brian Tomasik), it's not clear to me that that is the assumption of most. I don't think the hidden qualia assumption, for example, is an explicit assumption of Budolfson and Spears or of MacAskill's discussion in his book (though of course I can't speak to what they believe privately).

mako yass @ 2022-11-30T00:48 (+1)

it's not clear to me that that is the assumption of most

Thinking that much about anthropics will be common within the movement, at least.

Adam Shriver @ 2022-12-05T15:51 (+3)

Here's the report on conscious subsystems: https://forum.effectivealtruism.org/posts/vbhoFsyQmrntru6Kw/do-brains-contain-many-conscious-subsystems-if-so-should-we

Oliver Sourbut @ 2022-12-02T18:25 (+1)

I've given a little thought to this hidden qualia hypothesis but it remains very confusing for me.

To what extent should we expect to be able to tractably and knowably affect such hidden qualia?

Adam Shriver @ 2022-12-05T15:51 (+3)

Here's the report on conscious subsystems: https://forum.effectivealtruism.org/posts/vbhoFsyQmrntru6Kw/do-brains-contain-many-conscious-subsystems-if-so-should-we

Ranjith Jaganathan @ 2025-07-16T14:40 (+14)

From a cognitive science and philosophy of mind perspective, I agree strongly with your skepticism about treating neuron counts as a linear moral metric. One striking parallel from neuroscience is that we consistently find that subjective experience, including pain and pleasure, cannot be meaningfully reduced to raw cortical or subcortical volume, nor to simple “activation counts.” The phenomenology of consciousness arises from complex network dynamics, feedback loops, and integrative processes; not merely from raw computational “size.”

In NeuroAI research (particularly in embodied control and models inspired by biological architectures), we’ve learned that intelligence and adaptive behaviour do not simply scale with parameter count or “node count” in a network. Rather, it is architectural priors, connectivity patterns, and embodied interaction with the environment that determine emergent capacities. Likewise, many small-brained animals display surprising degrees of flexibility and planning precisely because of such specialized architectures rather than sheer neuron number.

Buhl @ 2022-12-02T15:30 (+14)

Thank you for the important post!

“we might question how well neuron counts predict overall information-processing capacity”

My naive prediction would be that many other factors predicting information-processing capacity (e.g., number of connections, conduction velocity, and refractory period) are positively correlated with neuron count, such that neuron count is pretty strongly correlated with information processing even if it only plays a minor part in causing more information processing to happen.

You cite one paper (Chittka 2009) that provides some evidence against my prediction (based on skimming the abstract, this seemed to be roughly by arguing that insect brains are not necessarily worse at information processing than vertebrate brains). Curious if you think this is the general trend of the literature on this topic?

Jim Buhler @ 2026-02-10T15:00 (+4)

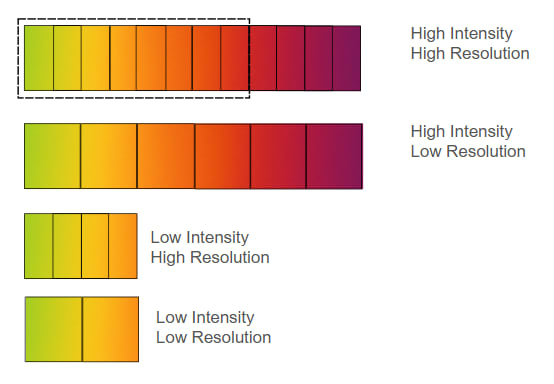

Evaluative richness can be further subdivided into evaluative bandwidth and evaluative acuity: “Rich affect-based decision making takes many inputs into account at once (evaluative bandwidth) and is sensitive to small differences in those inputs (evaluative acuity)” (Birch, Schnell, & Clayton 2020).

(Emphases are mine.)

Is the bandwidth-acuity distinction the same as the range-resolution one in Alonso & Schuck-Paim (2025)?

Range: The maximum ‘absolute’ value of an affective experience that an organism can perceive, defining the absolute amplitude between the highest or lowest affective intensities possible. A high-range system allows for extreme Pain or pleasure, which may be necessary for strong motivational reinforcement, while a low-range system limits organisms to milder affective states.

Resolution: How precisely an organism can differentiate between varying levels of affective intensity. A high-resolution system allows for fine distinctions, while a low-resolution system may encode only broad categories, limiting the precision of affective experiences

Wladimir J. Alonso @ 2026-02-10T18:26 (+3)

Thanks, Jim — that’s a thoughtful attempt to restate our terms, and it touches on something important you asked in the other thread about bandwidth–acuity vs range–resolution.

Our range concept maps fairly closely onto the welfare range used in Rethink Priorities’ Moral Weight project: it refers to the upper bound of affective intensity an organism can plausibly access.

Where we’d adjust is resolution. In the earlier draft your summary makes it sound like resolution is just “precision within a bounded range,” but that framing risks suggesting that resolution is always subordinate to range in motivational function. In fact, from a pure information-encoding perspective, resolution is as versatile as range for enabling intensity-based prioritization, because in principle both could be increased indefinitely: range by extending the extreme ends of the scale, and resolution by subdividing any given range into arbitrarily fine gradations. We develop this point in the section “The Function and Evolution of Affective Scales” of the paper “Do primitive sentient organisms feel extreme pain?”.

So the distinction isn’t “range = strength, resolution = detail”; it’s that range and resolution are two orthogonal axes along which affective systems can vary, each capable of supporting graded prioritization. A system with high resolution but modest range could still distinguish and act on nuanced motivational differences without accessing extreme affective intensities at all.
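One way to see the orthogonality claim is a toy model, sketched in Python below, in which an affective scale has a maximum absolute intensity (range) and a just-noticeable difference (standing in for resolution). The `AffectiveScale` class and its uniform step size are hypothetical simplifications, not Alonso & Schuck-Paim's formalism; the point is only that the two parameters can be set independently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AffectiveScale:
    """Toy scale running from -max_intensity to +max_intensity.

    Intensities closer together than jnd cannot be told apart. Both
    parameters, and the uniform step size, are hypothetical simplifications.
    """
    max_intensity: float  # range: highest absolute intensity reachable
    jnd: float            # resolution: smaller jnd means finer resolution

    def __post_init__(self) -> None:
        if self.max_intensity <= 0 or self.jnd <= 0:
            raise ValueError("max_intensity and jnd must be positive")

    def distinguishable_levels(self) -> int:
        # Number of jnd-sized steps across the full scale, plus one endpoint.
        return round(2 * self.max_intensity / self.jnd) + 1


fine_narrow = AffectiveScale(max_intensity=1.0, jnd=0.05)
coarse_wide = AffectiveScale(max_intensity=10.0, jnd=2.0)
print(fine_narrow.distinguishable_levels(), coarse_wide.distinguishable_levels())  # 41 11
```

The narrow-range system never reaches intense states, yet it can discriminate far more gradations than the wide-range system, matching the case of high resolution with modest range.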

Jim Buhler @ 2026-02-11T07:21 (+5)

Thanks Wladimir! And do you think Birch et al. meant something different from what you describe with the term acuity (instead of resolution)?

Wladimir J. Alonso @ 2026-02-11T13:40 (+6)

Good catch, Jim — and thanks for flagging the terminology. This field is already complicated enough that we really don’t need parallel vocabularies for the same underlying idea. One of the reasons we post publicly is exactly to get this kind of “conceptual linting” from the community.

I think Birch et al.’s acuity is basically what we mean by resolution: sensitivity to small differences — the ability to discriminate fine gradations (what psychophysics would call “just-noticeable differences”). Where we’re being careful is in separating that from range, which refers to the maximum intensity an organism can plausibly access.

So in short: acuity ≈ resolution in our usage; it’s distinct from range.

Vasco Grilo🔸 @ 2026-02-26T08:38 (+2)

Thanks for the relevant discussion, Jim and Wladimir. Wladimir, in your framework, is resolution i) the total number of distinct welfare intensities, ii) the ratio between i) and the difference between the maximum and minimum welfare intensities, or iii) something else?

Wladimir J. Alonso @ 2026-02-27T09:19 (+3)

Thanks. In our framework, resolution is not simply (i) the number of distinct welfare intensities, nor (ii) a strict ratio relative to the total range. It refers to the functional granularity with which differences in intensity can be discriminated and behaviorally prioritized.

The key point we raise in the post is that resolution is orthogonal to range: a system can evolve high resolution while maintaining a modest range, expand its range while keeping coarse resolution, or increase both simultaneously.

Vasco Grilo🔸 @ 2026-02-27T10:34 (+2)

I thought ii) in my past comment was the resolution over the whole range of welfare intensities. Does the image in the post suggest that "resolution from welfare intensity A to B" = "number of different welfare intensities between A and B"/(B - A)? More precisely, it looks like resolution is the derivative of the number of different welfare intensities with respect to welfare intensity. This still leaves open how ii) relates to the whole range of welfare intensities. A system can have high resolution and a narrow whole range of welfare intensities, in the same way that a car can move fast over a short distance (even though the average speed over a distance can be calculated as the ratio between the distance covered and the time spent covering it).

Wladimir J. Alonso @ 2026-02-27T13:10 (+3)

Thanks, Vasco — I see where the confusion is coming from.

The difficulty is that in our framework, resolution is not defined as a mathematical density of bins over a fixed, external intensity axis (e.g., dN/dI). That framing assumes there is already a continuous welfare scale “out there,” and resolution simply tells us how finely the organism partitions it.

In our usage, resolution refers to the organism’s internal discriminative granularity — how finely differences in affective magnitude can be distinguished and behaviorally prioritized within whatever range the organism has.

So resolution is not the derivative of category-count with respect to an external intensity variable. Rather, it is a property of the encoding architecture itself.

That’s why it is orthogonal to range. A system may:

• Have a narrow range but very fine discriminative structure within that range.

• Have a wide range but coarse internal discrimination.

• Increase both independently.

Your car analogy is actually helpful. A car can move very fast over a short distance — speed is not determined by total range. Likewise, high resolution does not require a wide affective range, and vice versa.

So resolution is better understood as internal discriminative power, not as bin density over a pre-specified global welfare scale.

Vasco Grilo🔸 @ 2026-02-27T14:47 (+2)

I was not clear, but I meant the image suggests "organism's resolution from welfare intensity A to B" = "number of different welfare intensities the organism can experience between A and B"/(B - A), which depends on the organism, A, and B. Is this what you have in mind?

Wladimir J. Alonso @ 2026-02-28T18:51 (+3)

In our upcoming post, we introduce human-anchored reference categories (Annoying(h), Hurtful(h), Disabling(h), Excruciating(h)) to provide a pragmatic shared coordinate system for cross-species discussion. So if one wants to talk about “acuity/resolution between A and B,” it’s reasonable to treat A and B as positions (or intervals) on that human-anchored scale.

But no — we’re not defining acuity as #levels/(B−A), because that requires meaningful distances between A and B. At this stage the (h) scale is best treated as ordinal: it supports “higher/lower ceiling” comparisons, not subtraction or ratios.

Vasco Grilo🔸 @ 2026-02-28T19:44 (+4)

I worry that just 4 human-anchored pain intensities are not enough for reliable comparisons, even at an early stage. Suppose shrimp-anchored annoying pain is 10^-6 times as intense as human-anchored annoying pain (the ratio between the individual number of neurons of shrimps and humans), and human-anchored annoying pain is in turn 10^-6 times as intense as human-anchored excruciating pain. Then shrimp-anchored annoying pain would be 10^-12 (= (10^-6)^2) times as intense as human-anchored excruciating pain. It seems super hard to cover such a wide range of pain intensities with any significant reliability using just 4 values.
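The chained ratio above is plain multiplication of intensity ratios. A minimal Python check, using only the two illustrative 10^-6 figures from the comment (assumptions for the sake of argument, not measured intensities):

```python
import math

# Illustrative ratios from the comment (assumptions, not measurements).
shrimp_vs_human_annoying = 1e-6        # shrimp-anchored vs human-anchored annoying pain
human_annoying_vs_excruciating = 1e-6  # human-anchored annoying vs excruciating pain

# Intensity ratios chain multiplicatively.
shrimp_annoying_vs_human_excruciating = shrimp_vs_human_annoying * human_annoying_vs_excruciating

# Orders of magnitude a shared scale would have to span.
orders_of_magnitude = -math.log10(shrimp_annoying_vs_human_excruciating)

print(f"ratio: {shrimp_annoying_vs_human_excruciating:.0e}")  # ratio: 1e-12
print(f"orders of magnitude spanned: {orders_of_magnitude:.1f}")
```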

zdgroff @ 2022-12-01T22:49 (+4)

Do you have a sense of whether the case is any stronger for specifically using cortical and pallial neurons? That's the approach Romain Espinosa takes in this paper, which is among the best work in economics on animal welfare.

Monica @ 2022-12-06T16:59 (+9)

I'm curious about this as well. I'm also really confused about the extent to which this measure is just highly correlated with overall neuron count. The Wikipedia page on the number of neurons and pallial/cortical neurons in animals lists humans as having lower pallial/cortical neuron counts than orcas and elephants, while "Animals and Social Welfare" lists the reverse. Based on the Wikipedia page, there seems to be a strong correlation (and while I know basically nothing about neuroscience, I would guess the same arguments apply?). I looked at some of the papers the Wikipedia page cited and couldn't consistently locate the cited numbers, but they might have had to multiply, e.g., pallial neuron density by brain mass, and I wouldn't know which numbers to multiply.

MichaelStJules @ 2022-12-06T18:08 (+3)

I calculated ratios of neurons in the pallium (and mushroom bodies/corpora pedunculata in insects) vs whole brain/body from the estimates on that Wikipedia page not too long ago. In mammals, it was mostly 10-30%, with humans at 21.5%, and a few monkeys around 40%. Birds had mostly 20-70%, with red junglefowl (wild chickens) around 27.6%. The few insects where I got these ratios were:

- Cockroach: 20%

- Honey bee: 17.7%

- Fruit fly: 2.5% (a low-valued outlier, but also the smallest brained animal for which I could get a ratio based on that table).

EDIT: Some of the insect mushroom body numbers might only be counting one hemisphere's mushroom body neurons, and only intrinsic neurons, so the ratios might be too low. This might explain the low ratio for fruit flies.

There could also be some other issues that may make some of these comparisons unfair.

Monica @ 2022-12-06T22:37 (+4)

Oh interesting, thanks. Based on this it sounds super correlated (especially given that there are orders of magnitude difference between species in neuron counts).
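Monica's intuition can be illustrated with synthetic numbers. The sketch below assumes hypothetical total neuron counts spanning 10^5 to 10^11 and hypothetical pallial fractions between 10% and 70% (similar to the spread Michael reports for mammals and birds); it is not computed from the Wikipedia table. Because log(pallial) = log(total) + log(fraction), and the fraction varies far less than the total, the two logs end up almost perfectly correlated:

```python
import math

def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)

# Hypothetical totals (10^5 .. 10^11 neurons) and hypothetical pallial fractions.
totals = [10.0 ** k for k in range(5, 12)]
fractions = [0.1, 0.3, 0.7, 0.2, 0.5, 0.25, 0.4]
pallial = [t * f for t, f in zip(totals, fractions)]

log_total = [math.log10(t) for t in totals]
log_pallial = [math.log10(p) for p in pallial]

# Correlation of log counts across the synthetic "species".
r = pearson(log_total, log_pallial)
print(round(r, 3))
```

The fraction contributes at most about one order of magnitude of spread, against six orders of magnitude from the totals, which is why the correlation stays so high.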

Adam Shriver @ 2022-12-08T12:18 (+2)

It's an interesting thought, although I'd note that quite a few prominent authors would disagree that the cortex is ultimately what matters for valence, even in mammals (Jaak Panksepp being a prominent example). I think it would also raise interesting questions about how to generalize this idea to organisms that don't have cortices. Michael used mushroom bodies in insects as an example, but is there reason to think that mushroom bodies in insects are "like the cortex and pallium" but unlike the various subcortical structures that also play a role in integrating information from different sensory sources? I think there needs to be a principled specification of which types of neurons are ultimately counted.

Corentin Biteau @ 2022-11-29T11:08 (+4)

Thanks for the report!

I must admit that I didn't dig much into the debate and am only offering personal intuition, but I have always found something off about the argument that "more neurons = greater ability to feel pain".

As has been pointed out, the implication of this argument would be: "Children and babies have a lower neuron count than adults, so they should be given lower moral value" (i.e., it's less problematic if they die).

And I just don't see many people defending that. Many people would say the opposite: that children tend to be happier than adults. So I kept wondering why people used that approximation for other species.

MichaelStJules @ 2022-11-29T18:52 (+11)

I think children and babies actually have about as many neurons as adults, but also far more synapses/connections.

https://extension.umaine.edu/publications/4356e/

https://www.ncbi.nlm.nih.gov/books/NBK234146/

https://en.wikipedia.org/wiki/Neuron#Connectivity

Corentin Biteau @ 2022-11-30T11:39 (+2)

Oh, ok, I didn't know that. Thanks for the links; this is surprising.

Adam Shriver @ 2022-11-30T19:26 (+1)

Thanks Michael!

Adam Shriver @ 2022-11-29T16:49 (+1)

Thanks, this is a great point.

mako yass @ 2022-11-30T20:36 (+3)

(This partially echoes/paraphrases lukeprog.) I want to emphasize the observer-count angle (which could also be framed in terms of anthropic measure or phenomenology), which to me seems like the simplest way neuron count could lead to increased moral valence. You kind of mention it, and it's discussed more in the full document, but it's ignored for most of the post.

Imagine a room where a pair of robots are being interviewed. The robot interviewer is about to leave and go home for the day, and has to decide whether to leave the light on or off. It knows that one of the robots hates the dark, but the other strongly prefers it.

The robot who prefers the dark also happens to be running on 1000 redundant server instances whose outputs are majority-voted together to maximize determinism and repeatability of experiments, or something like that. The robot who prefers the light happens to be running on just one server.

The dark-preferring robot doesn't even know about its redundancy, and the redundancy doesn't lead it to report any greater intensity of experience. There is no report, but it's obvious that the dark-preferring robot's experience is being magnified a thousand times, because it is exactly as if there were a thousand of them, all having the same experience of being in a lit room, even though they don't know about each other.

You turn the light off before you go.

Under some assumptions about how the brain distributes the processing of suffering, which we're not completely sure of but which seem more likely than not, we should have some expectation that neuron count has the same anthropic boosting effect.

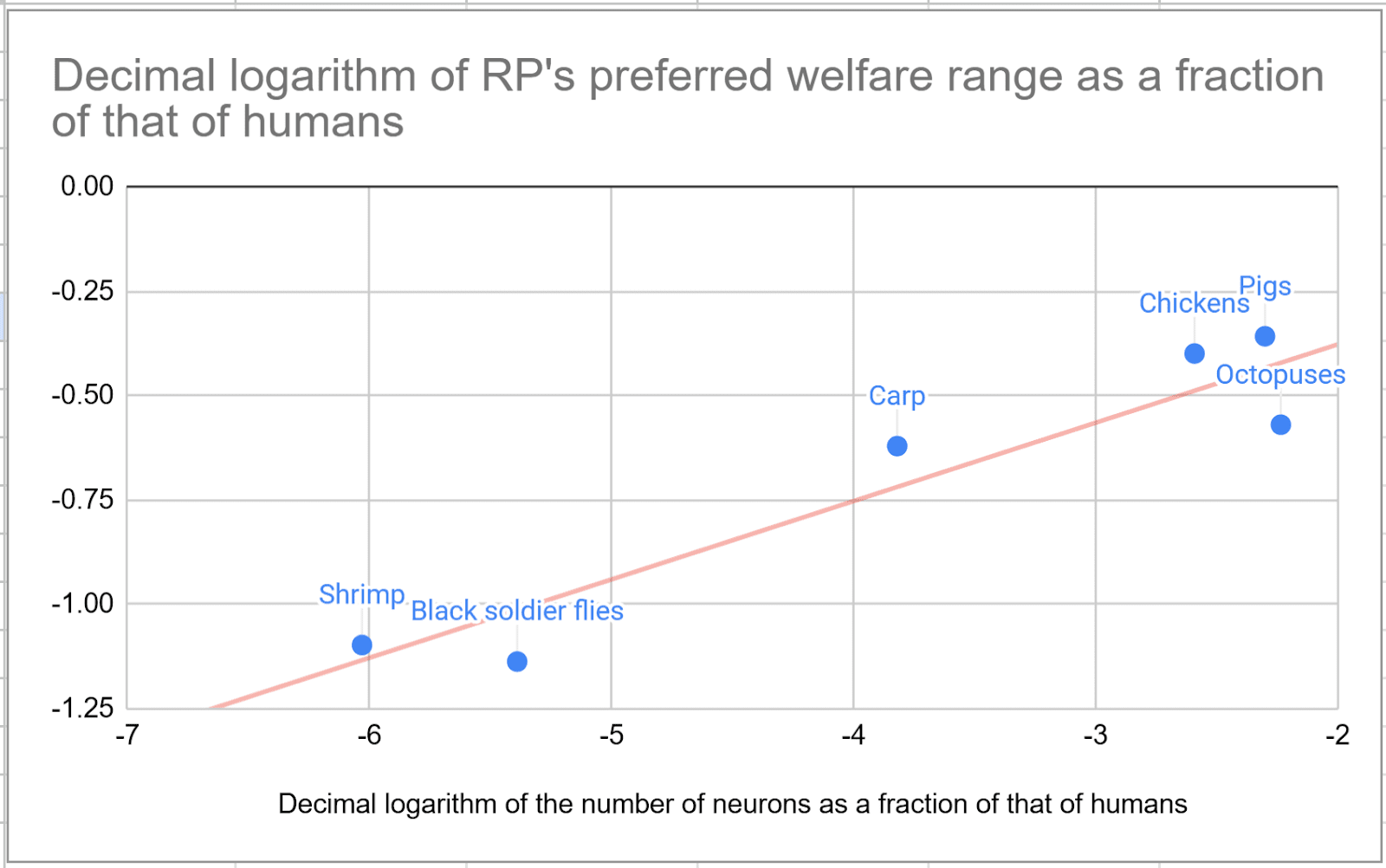
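The aggregation step in the robot thought experiment can be written as a toy calculation. This is a hypothetical sketch: it assumes, as the comment does, that each redundant instance counts as a separate observer, and it weights each robot's preference (with equal self-reported intensity) by its instance count. The `Robot` class and `observer_weighted_score` function are illustrative names, not from the original.

```python
from dataclasses import dataclass

@dataclass
class Robot:
    name: str
    instances: int          # redundant copies running the same computation
    prefers_dark: bool
    intensity: float = 1.0  # self-reported intensity, identical for both robots

def observer_weighted_score(robots, light_off: bool) -> float:
    """Sum of satisfied (+) and frustrated (-) preferences, weighted by instance count.

    Assumption: every redundant instance counts as one observer.
    """
    total = 0.0
    for robot in robots:
        satisfied = robot.prefers_dark == light_off
        sign = 1.0 if satisfied else -1.0
        total += sign * robot.intensity * robot.instances
    return total

robots = [
    Robot("dark-preferring", instances=1000, prefers_dark=True),
    Robot("light-preferring", instances=1, prefers_dark=False),
]

off = observer_weighted_score(robots, light_off=True)
on = observer_weighted_score(robots, light_off=False)
decision = "off" if off > on else "on"
print(decision, off, on)
```

Setting `instances=1` for both robots makes the two options tie, which is the intuition the thought experiment relies on: the redundancy alone breaks the tie.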

Vasco Grilo🔸 @ 2026-01-07T12:12 (+2)

Hi Adam.

- there is no straightforward empirical evidence or compelling conceptual arguments indicating that relative differences in neuron counts within or between species reliably predicts welfare relevant functional capacities.

As illustrated in the graph below, the estimates for the (expected) welfare ranges in Bob Fischer's book about comparing welfare across species, which contains what Rethink Priorities (RP) stands behind now, are pretty well explained by "individual number of neurons"^0.188. I have a post where I estimate the total welfare of animal populations assuming individual (expected hedonistic) welfare per fully-healthy-animal-year is proportional to "individual number of neurons"^"exponent".

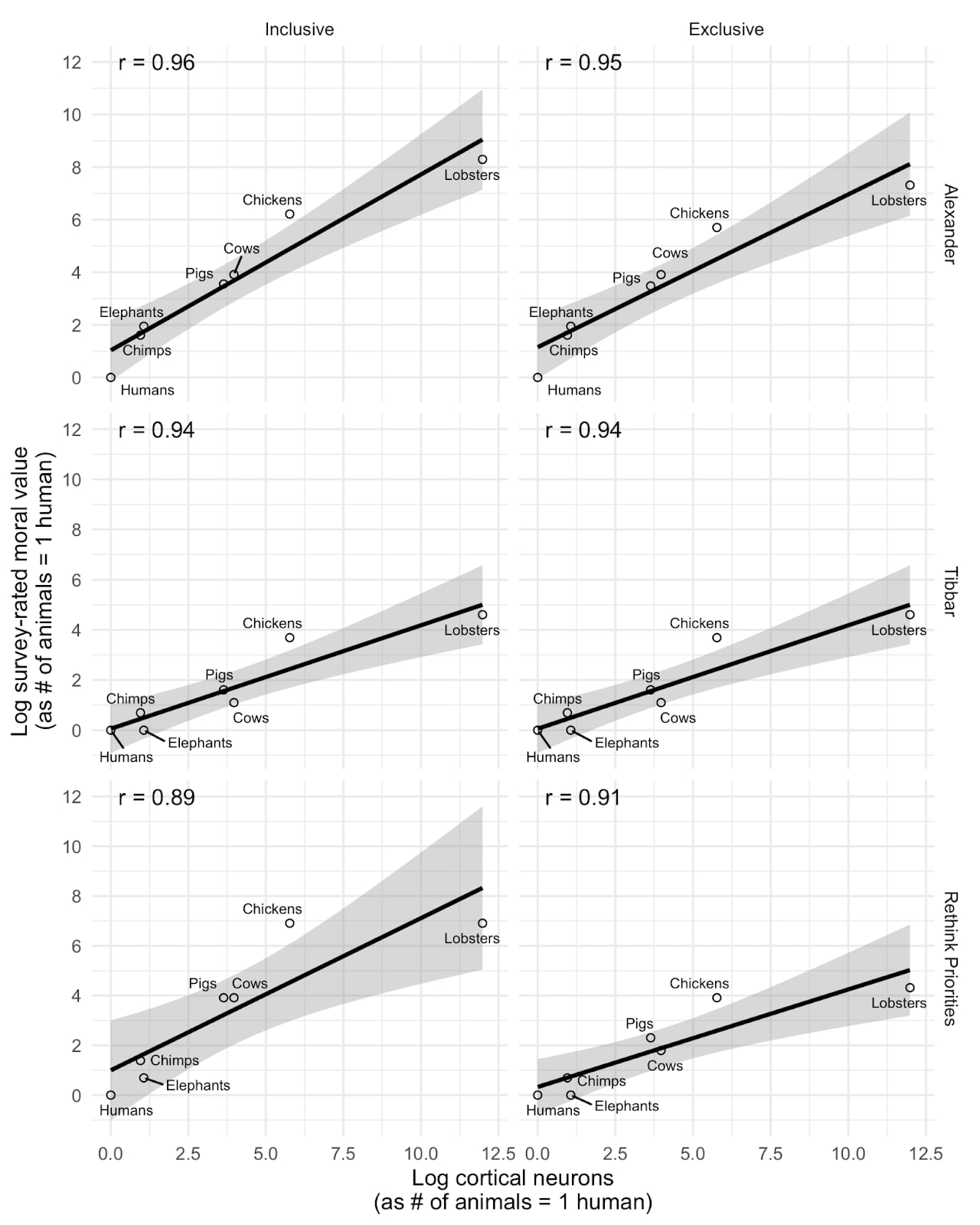

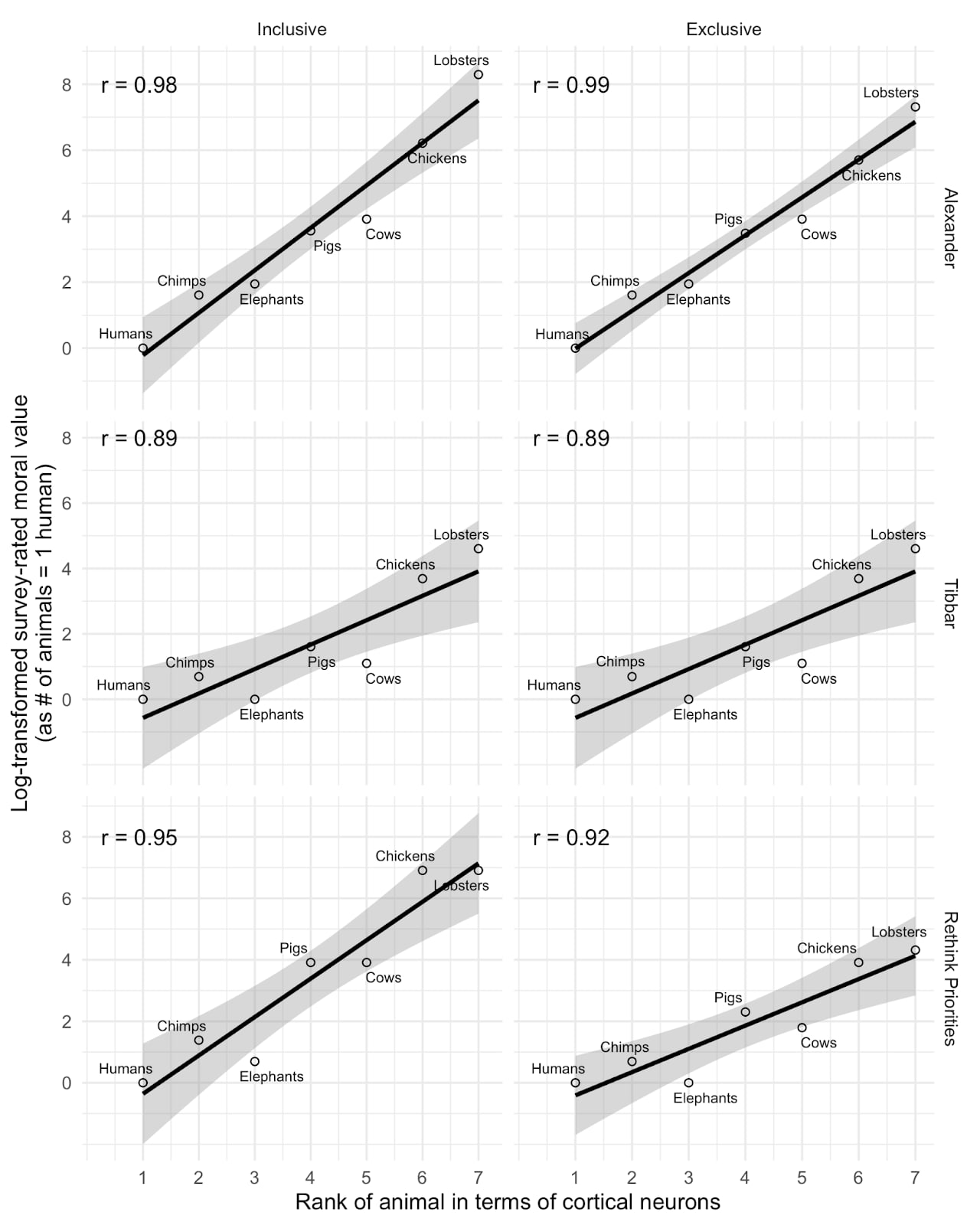
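For readers unfamiliar with how an exponent such as 0.188 is typically obtained: one common approach is ordinary least squares on log(welfare range) versus log(neuron count), since a power law W = a * N^b is a straight line with slope b in log-log space. Whether the graph above was fitted exactly this way is an assumption. The sketch uses synthetic data generated with b = 0.188 and hypothetical neuron counts (not RP's estimates), so it only shows that the fit recovers the exponent:

```python
import math

def fit_power_law(neurons, welfare):
    """Return (a, b) for welfare ~ a * neurons**b via OLS in log-log space."""
    xs = [math.log10(n) for n in neurons]
    ys = [math.log10(w) for w in welfare]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    log_a = my - b * mx
    return 10 ** log_a, b

# Hypothetical neuron counts; welfare normalised so that 8.6e10 neurons -> 1.
neurons = [1e5, 1e6, 2e8, 1e9, 8.6e10]
welfare = [(n / 8.6e10) ** 0.188 for n in neurons]

a, b = fit_power_law(neurons, welfare)
print(round(b, 3))
```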

David_Moss @ 2026-01-07T14:54 (+4)

I think this is interesting, but I don't think we should infer too much from this relationship. This plot basically matches those we produced examining the relationship between cortical neuron count and perceived moral value of different animals (replicating SlateStarCodex and another's surveys). As you can see below, we found extremely strong correlations. But we also found similarly strong correlations using EQ or total brain size rather than cortical neuron count, or using a crude 0-100 measure of how people 'care' about the animal in place of tradeoff ratios. Notably, when we replace the moral value measure with a simple ordinal ranking of the animals by neuron count (as in the second plot below), we find even stronger relationships.

My impression is therefore that the strong correlations more reflect the fact that we have a small number of datapoints with animals differing dramatically on a wide variety of predictor (or, in principle, outcome) variables which are all highly correlated, rather than indicating that neuron counts are distinctively predictive of any outcomes of interest. See Andrew Gelman's similar discussion of our study.

I think to actually disentangle these we would need a larger sample of animals who diverge on the key dimensions (e.g., birds with high neuron density but small brains, or animals with higher neuron count but lower perceived similarity to humans).

Vasco Grilo🔸 @ 2026-01-07T16:40 (+2)

Thanks for the comment, David!

My impression is therefore that the strong correlations more reflect the fact that we have a small number of datapoints with animals differing dramatically on a wide variety of predictor (or, in principle, outcome) variables which are all highly correlated, rather than indicating that neuron counts are distinctively predictive of any outcomes of interest. See Andrew Gelman's similar discussion of our study.

I agree. Moreover, in allometry, "the study of the relationship of body size to shape, anatomy, physiology and behaviour", "The relationship between the two measured quantities is often expressed as a power law equation (allometric equation)". So if the logarithm of the individual number of neurons explains the logarithm of the welfare ranges well, the latter will also be well explained by the logarithms of many other properties. If the welfare range is roughly proportional to "individual number of neurons"^"exponent 1", and the individual number of neurons is roughly proportional to "property (e.g. individual brain mass)"^"exponent 2", the welfare range will be roughly proportional to "property"^("exponent 1"*"exponent 2"). This means the logarithm of the welfare range will be well explained by the logarithm of "property". Relatedly, here is an illustration of why I think individual welfare per fully-healthy-animal-year could be proportional to "metabolic energy consumption per unit time at rest"^"exponent".
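The composition step can be checked numerically. In this sketch the constants `c1`, `e1`, `c2`, `e2` and the property `p` are made up for illustration; the point is only that chaining two power laws gives another power law whose exponent is the product e1 * e2, so a straight line in log-log space against neuron count implies a straight line against any property that is itself a power law of neuron count:

```python
import math

c1, e1 = 1e-2, 0.188  # hypothetical: welfare range = c1 * neurons**e1
c2, e2 = 5e7, 0.7     # hypothetical: neurons = c2 * property**e2

def welfare_from_property(p):
    """Welfare range computed in two steps: property -> neurons -> welfare."""
    neurons = c2 * p ** e2
    return c1 * neurons ** e1

def composed_power_law(p):
    """The same quantity written as a single power law in the property."""
    return c1 * c2 ** e1 * p ** (e1 * e2)

for p in [0.001, 1.0, 1500.0]:
    assert math.isclose(welfare_from_property(p), composed_power_law(p), rel_tol=1e-12)

# Slope in log-log space between two points equals e1 * e2.
p_lo, p_hi = 0.001, 1500.0
slope = (math.log10(welfare_from_property(p_hi)) - math.log10(welfare_from_property(p_lo))) / (
    math.log10(p_hi) - math.log10(p_lo)
)
print(round(slope, 4), round(e1 * e2, 4))
```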

Given the above, I do not think it matters much whether one estimates welfare per unit time based on the individual number of neurons, or another property which is a power law of it. I believe it matters much more that results are presented for many exponents of the power law determining the welfare per unit time. I did this in the post where I estimated the total welfare of animal populations assuming individual welfare per fully-healthy-animal-year is proportional to "individual number of neurons"^"exponent", where I analysed exponents ranging from 0 to 2.
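To see why the exponent matters so much, here is a minimal sketch of how relative welfare per unit time scales with it, assuming welfare per unit time is proportional to (neuron-count ratio)^exponent, a ratio of 10^-6 (the shrimp-to-human figure used in Vasco's comment on the (h) categories), and the 0 to 2 exponent range mentioned above:

```python
# Assumed neuron-count ratio (animal / human); illustrative, not a new estimate.
neuron_ratio = 1e-6
exponents = [0.0, 0.5, 1.0, 1.5, 2.0]

# Relative welfare per unit time under each exponent.
relative_welfare = {e: neuron_ratio ** e for e in exponents}
for e, w in relative_welfare.items():
    print(f"exponent {e:.1f}: {w:.0e}")
```

Across that range the ratio moves by twelve orders of magnitude, far more than any plausible change from swapping neuron count for another allometrically related property.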

Vasco Grilo🔸 @ 2026-01-12T18:00 (+2)

Relatedly, here is an illustration of why I think individual welfare per fully-healthy-animal-year could be proportional to "metabolic energy consumption per unit time at rest"^"exponent".

I have now estimated the total welfare of animal populations, trees, and bacteria and archaea based on the assumption above. I had recommended research informing how to increase the welfare of soil animals, but I am now more pessimistic about this. I currently think it is better to focus on decreasing the uncertainty about how the welfare per unit time of different organisms and digital systems compares with that of humans.

Matt Goodman @ 2022-11-30T18:14 (+2)

"the more measurable a metric we choose, the less accurate it is, and the more we prioritize accuracy, the less we are currently able to measure"

Can you expand on this? Is it a reference to Goodhart's Law?

Adam Shriver @ 2022-11-30T19:19 (+4)

No, it's not a reference to Goodhart's Law.

It's just that one reason for liking neuron counts is that we have relatively easy ways of measuring neurons in a brain (or at least relatively easy ways of coming up with good estimates). However, as noted, there are a lot of other things that are relevant if what we really care about is information-processing capacity, so neuron count isn't an accurate measure of information-processing capacity.

But if we focus on information-processing capacity itself, we no longer have a good way of easily measuring it (because of all the other factors involved).

This framing comes from Bob Fischer's comment on an earlier draft, btw.

Matt Goodman @ 2022-11-30T19:29 (+2)

Thanks, that makes sense. For some reason I read it as a kind of generalisable statement about epistemics, rather than in relation to the neuron count issues discussed in the article.

Yatlas @ 2022-11-30T15:40 (+2)

I think this is a good article and attacks some assumptions I've thought were problematic. That being said, I think it's worth elaborating on the claim that intelligence scales with moral worth. You say:

"Furthermore, it certainly is not the case that in humans we tend to associate greater intelligence with greater moral weight. Most people would not think it’s acceptable to dismiss the pains of children or the elderly or cognitively impaired in virtue of them scoring lower on intelligence tests."

This seems true, and is worth further discussion. Most famously, Peter Singer has argued in Animal Liberation that intelligence doesn't determine moral worth. It also brings to mind Bentham's quote: "The question is not, Can they reason?, nor Can they talk? but, Can they suffer?" We don't think children have less moral worth due to their decreased intelligence, nor do we think that less intelligent people have less moral worth–so why should we apply this standard to animals? This is why Singer has argued for equal consideration of interests between species. What does this imply, then, about how we should determine the interests of animals?

Perhaps we could count the neurons involved with pain, pleasure, and other emotions, rather than neurons as a whole, and use this as a metric for moral worth. This isn't perfect and still has many problems, but it would probably be better than other approaches.

Adam Shriver @ 2022-11-30T19:24 (+3)

Thanks, I agree on these points. In regards to focusing on neurons involved in pain or other emotions, while I agree this would be the ideal thing to look at, the problem is that there is so much disagreement in the literature about issues that would be relevant for deciding which neurons/brain areas to include. There are positions that range from thinking that certain emotions can be localized to very specific regions to those who think that almost the whole brain is involved in every different type of experience, and lots of positions in between. So for that reason we tried to focus on more general criticisms.

Tenoke @ 2022-12-09T10:41 (+1)

The general argument is that it's just a useful but imperfect proxy. Your findings are that it correlates with some of the things we might find relevant, just imperfectly, so how do you get from "imperfect" to "shouldn't be used"?

Adam Shriver @ 2022-12-09T16:33 (+1)

It shouldn't be used as a unitary measure, but can be included in a combined measure, which is likely to correlate better.

Tenoke @ 2022-12-10T01:37 (+1)

That's nearly true by definition for imperfect proxies. They don't carry all the information, so you can improve on them by using other measures.
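Adam's suggestion of a combined measure (and the report's recommendation of an overall weighted score) could take many forms. One hypothetical sketch is a weighted average of proxies normalised to [0, 1]; the proxy names, weights, and scores below are illustrative assumptions, not values from the report:

```python
def combined_score(proxies: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of normalised proxy scores; weights need not sum to 1."""
    missing = set(weights) - set(proxies)
    if missing:
        raise ValueError(f"missing proxy scores: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[name] * proxies[name] for name in weights) / total_weight

# Hypothetical weights: neuron count is one input among several.
weights = {"neuron_count": 0.2, "nociception": 0.4, "motivational_tradeoffs": 0.4}
# Hypothetical normalised scores for one species.
species_a = {"neuron_count": 0.1, "nociception": 1.0, "motivational_tradeoffs": 0.5}

print(round(combined_score(species_a, weights), 3))
```

A weighted average is only one design choice; a weighted geometric mean, for instance, would penalise a species more heavily for scoring near zero on any single proxy.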

AB_Philopoet @ 2022-12-06T04:54 (+1)

Note: When we discuss an organism's "moral weight," ultimately what we mean is whether or not its existence should be prioritized over others' in the event that a choice between one or the other had to be made. "Moral weight," "moral value," "value," and "worthy of existential priority" are all essentially synonymous.

Which is more likely to contribute a useful idea in preventing the potential apocalyptic scenarios, such as an AI takeover or Yellowstone erupting: a human or a chicken?

Obviously, a human.

In addition to the plausibly increased capacity for organisms with a greater breadth and quantity of synaptic connections to experience consciousness and suffering, the moral value or "moral weight" of an organism also depends upon the organism's CREATIVITY - i.e. whether or not it can produce ideas and objects that aid in the universal fight against extinction and entropy that is an eternal existential threat against all living organisms, and the entire history of living organisms that have ever lived.

Because organisms with higher neuron counts are simply more likely to generate new and potentially extinction-preventing ideas, it makes sense that those organisms, in terms of their health and preservation, should be valued above all other organisms. Furthermore, higher-neuron organisms also have a higher potential to generate ideas that would increase the quality of life and symbiosis with lower-neuron organisms. What is the point of prioritizing (i.e., allocating resources to) a lower-neuron organism equally or more than a higher-neuron organism if doing so would only increase the probability of extinction (and decrease the probability of innovations towards symbiosis being made)?

What I am not seeking to imply: This does not mean that we humans HAVE to consume all other organisms of less neural capacity, and in fact it could be argued that the quality of our synaptic connections would be better, and therefore increase the probability of producing extinction-preventing and symbiosis-innovating ideas, if we were not burdened with the guilt of abusing our fellow earthly creatures.

The ethical answer to the fundamental problem that this proxy quantification is attempting to address - i.e., the morality of factory-farming:

If we come to a point, for any reason manmade or natural, where there are only enough resources to sustain human beings and no other animal species on the planet, then we should allow all other species to die and humans to live; otherwise, the probability that all evolutionary history will be erased and all life on Earth will go extinct will increase because animals will not have the capacity to address other probabilistically impending extinction events with the same capacity that humans would be able to.

Until we reach that point, we should strive to minimize the cruelty within the main means of food production - e.g. the self-evidently horrific practices of unanesthetized castration, forced impregnation, or debeaking in factory farming. However, we must do so while recognizing that the means of production itself is necessary to provide food affordability that wards off starvation for humans, and that such means of production does not necessarily have to coexist with the cruelty that mars it.

As we strive to outlaw the cruelty in the factory farming praxis, we should concurrently strive to build more efficient food infrastructure that can optimize food distribution to prevent food waste and make it so that the animal suffering that went into the food (which is not negligible simply because it is plausibly of a lower gradation than that of a human being's suffering) is put to the best possible use: the nourishment of a human being, the highest neuron-count organism that has ever existed on Earth. Thus, we would simultaneously achieve several benefits, all cost-effective and worthy of investment:

(i) We would increase the quality of meat production

(ii) We would eliminate costs of over-production

(iii) We would eliminate emissions and land pollution caused by food waste.

(iv) Like the water cycle but with food: The infrastructure would transport food waste to massive compost centers, which would be an alternative, zero-cost fertilizer and thus lower the cost of fertilizer and therefore crops themselves ...

- => which would lower the price of food production,

- => which would make food more affordable (in addition to being higher quality),

- => which would make people more well-nourished, which would increase the total probability that humanity would be able to conceive of useful (i.e. extinction-preventing and unnecessary-suffering-ameliorating) ideas,

- => all of which would culminate in unprecedented economic (and moral) growth for all of humanity.

Adam Shriver @ 2022-12-08T12:23 (+1)

AB, I think you're looking at a different stage of analysis than we are here. We're looking at how to weigh the intrinsic value of different organisms so that we know how to count them. It sounds to me like you're discussing the idea of, once we have decided on a particular way to count them, what actions should we take in order to best produce the greatest amount of value.

rhiza @ 2022-12-04T19:17 (+1)

I don't come here often, and don't plan to write something verbose whatsoever. I have one simple thing to say, speaking as a neuroscientist -- why on earth would you think something like the number of neurons in a brain is a good proxy for moral weight?

What age group has the most neurons in humans? Babies. It's babies. The ones you constantly have to tell what is right and what is wrong? To stop hitting their siblings, being selfish, etc.? This is not a nuanced conversation; it is one that, if you subscribe to scientific materialism, is quickly and efficiently done away with.

Applying the same logic that has been used here so far: in humans, decreasing neuron count is correlated with increased moral intelligence (incl. the development of empathy, moral reasoning, etc.).

Erich_Grunewald @ 2022-12-06T16:20 (+7)

I have one simple thing to say, speaking as a neuroscientist—why on earth would you think something like the number of neurons in a brain is a good proxy for moral weight?

What age group has the most neurons in humans? Babies. It’s babies. The ones you constantly have to tell what is right and what is wrong? To stop hitting their siblings, being selfish, etc?

I'm confused. It seems as if you're arguing against using neuron counts as a proxy for moral agency, not moral weight.

Adam Shriver @ 2022-12-08T12:07 (+1)

Hi Rhiza,

I appreciate your interesting point! I would note that as Erich mentioned, we're interested in moral patiency rather than moral agency, and we ultimately don't endorse the idea of using neuron counts.

But in response to your comment, there are different ways of trying to spell out why more neurons would matter. Presumably, on some (or most) of those, the way neurons are connected to other neurons matters, and as you know in babies the connections between neurons are very different from the connections in older individuals. So I think a defender of the neuron count hypothesis would still be able to say, in response to your point, that it's not just the number of neurons but rather the number of neurons networked together in a particular way that matters.

zeshen @ 2022-12-01T01:26 (+1)

This is nice, but I'd also be interested to see quantifications of moral weights for different animals that account for all the factors besides neuron count, and how much they differ from those based on neuron count alone.