The Precipice - Summary/Review

By Nikola @ 2022-10-11T00:06

This is a linkpost for https://blog.drustvo-evo.hr/en/2021/05/23/what-lies-in-humanitys-future/ because people might find it useful. My views have evolved since then.

Our planet is a lonely speck in the great enveloping cosmic dark. In our obscurity, in all this vastness, there is no hint that help will come from elsewhere to save us from ourselves.

Carl Sagan, Pale Blue Dot

Humanity stands at a precipice.

In the centuries and millennia to come, we have the opportunity to eradicate disease, poverty, and unnecessary suffering. We can discover the secrets of the universe, create beautiful art, and do good on a cosmic scale. It would be a tragedy of unimaginable scale to lose this opportunity.

Humanity has had close calls with extinction before, and existential risk today is very likely the highest it has ever been, driven by anthropogenic risks such as climate change, unaligned artificial intelligence, nuclear war, and engineered pandemics.

In his sobering magnum opus, The Precipice, Toby Ord argues that reducing existential risk is among the most pressing moral issues of our time, identifies which risks are most significant, and proposes a path forward.

The Stakes

We have made great progress in human well-being over the past decades. Advances in medicine, agriculture, and technology have reduced poverty and hunger and raised our general level of well-being. It’s not unimaginable that we will one day eradicate problems like hunger and poverty entirely. The future could be a time of flourishing for sentient beings across the universe, and we have the chance to make this come true.

The scale of the good we can achieve in the future is mind-boggling. The Milky Way alone is vast, and could house orders of magnitude more sentient beings than currently exist on Earth. Around 100 billion people have lived before us, but the number of people who could live after us is enormous (a conservative estimate from Nick Bostrom’s Superintelligence is 10⁴³. That’s a one followed by 43 zeros.)
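As a rough back-of-the-envelope comparison, taking at face value the two figures cited above (about 100 billion, i.e. 10¹¹, people who have lived so far, and Bostrom’s estimate of 10⁴³ potential future people), the ratio works out as follows. The labels N_future and N_past are introduced here only for illustration:

$$\frac{N_{\text{future}}}{N_{\text{past}}} \approx \frac{10^{43}}{10^{11}} = 10^{32}$$

On these numbers, potential future people would outnumber everyone who has ever lived by a factor of roughly 10³², which is the intuition behind the longtermist argument.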

If there are so many people in the future, and future people have moral worth, then most moral worth lies in the future, and the most important thing we can do is maximize our positive impact on the long-term future. This line of thought is called longtermism. Of course, it is hard to predict how our actions will affect the future, but that doesn’t mean we can’t at least do our best. As Ord puts it,

A willingness to think seriously about imprecise probabilities of unprecedented events is crucial to grappling with risks to humanity’s future.

We grossly neglect these existential risks. One especially striking example:

The international body responsible for the continued prohibition of bioweapons (the Biological Weapons Convention) has an annual budget of just $1.4 million—less than the average McDonald’s restaurant.

The Risks

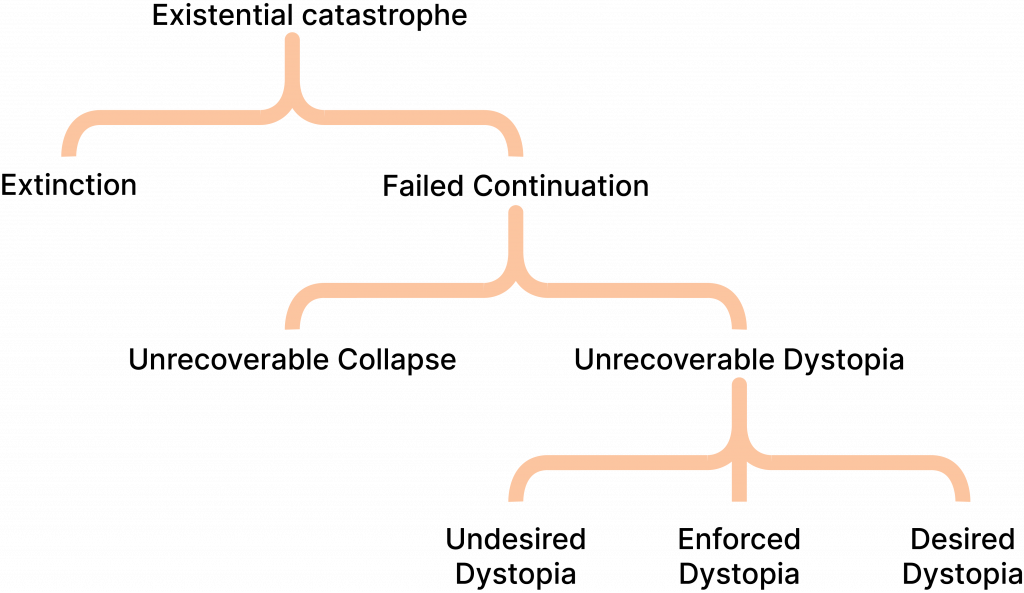

Toby Ord defines existential risk (x-risk for short) as risk that threatens the destruction of humanity’s long-term potential.

Various groups of the x-risk landscape

For most of its history, humanity, like every other species on Earth, has faced only natural risks, such as supervolcanic eruptions, asteroid impacts, and supernovae. These are likely here to stay for a while, but because their probability is low compared to other risks, they shouldn’t be our top priority right now.

Ord makes an interesting point: the technologies needed to prevent some of these risks could themselves pose existential risks. Far more asteroids narrowly miss Earth than actually hit it. Asteroid deflection technology could stop the few that are headed our way, but in the wrong hands it could also steer near-miss asteroids toward Earth, turning them into weapons more destructive than the world’s most powerful nuclear bombs.

Risks that are caused by human action are called anthropogenic risks.

Nuclear risk is the anthropogenic risk whose shadow loomed over the second half of the 20th century. There were far too many close calls during the Cold War, moments when the fate of the world rested on the hasty decision of a single person. We have been lucky so far, but there is no guarantee that our luck will hold.

Climate change might be the anthropogenic risk that defines the coming decades. Although Ord’s assessment of climate change is grim, he points out that it is not, in itself, a significant existential risk, but rather a risk factor: a catalyst for other existential risks.

Future risks are existential risks that will be enabled by upcoming developments in technology.

Engineered pandemics are among the most dangerous and, at present, most plausible existential risks. Current laboratory safety standards are neither stringent enough nor adequately enforced, and there have been numerous accidental outbreaks of disease from laboratories that came close to causing pandemics.

Ord’s main concern isn’t natural pathogens leaking from laboratories, but engineered pathogens, which could be made extremely contagious and lethal. Even with its relatively low mortality, the coronavirus has deeply affected humanity; an engineered pandemic could be far worse.

Unaligned artificial intelligence is the existential risk that Ord considers the most dangerous. He points to the alignment problem: the problem of creating an advanced artificial intelligence whose goals are aligned with the interests of humanity. We do not yet have a solution to the AI alignment problem, and the gap between AI safety research and advances in AI capabilities only seems to be widening. Ord describes what could happen if such a system took control:

Such an outcome needn’t involve the extinction of humanity. But it could easily be an existential catastrophe nonetheless. Humanity would have permanently ceded its control over the future. Our future would be at the mercy of how a small number of people set up the computer system that took over. If we are lucky, this could leave us with a good or decent outcome, or we could just as easily have a deeply flawed or dystopian future locked in forever.

The Path Forward

In the last part of the book, Toby Ord lays out a rough plan for humanity’s future, divided into three phases:

1. Reaching Existential Security

This step requires us to face existential risks and to reach a state of existential security, where existential risk is low and stays low.

2. The Long Reflection

After having reached existential security, we should take time to think about which decisions we ought to make in the future.

The Long Reflection is portrayed as a period in which most of our needs are met, society is stable, and much discussion and thought is invested in important questions, such as:

- How much control should artificial intelligence have over human lives?

- How much should we change what it means to be human?

- How important is the suffering of non-human animals and aliens?

- Should we colonize the whole galaxy (or universe)?

- Should we allow aging to kill humans?

It’s important to think carefully before making moral decisions of this magnitude. Getting such a question wrong could cause immense harm with no easy way to undo it (for instance, populating the galaxy with septillions of wild animals whose lives are filled with suffering).

3. Achieving Our Potential

This step can wait until we’ve figured out what we should actually try to achieve. We still don’t know the best way to shape the future of the universe, and our priority should be paving the way for others to figure this out. We might have been born too soon to witness the wonders humanity could achieve, but we are alive at a time when we can affect the probability of those wonders coming true.

The Precipice is, in many ways, a spiritual successor to Carl Sagan’s Pale Blue Dot, written at a time when we are much more certain about the dangers Sagan could only speculate about, and aware of dangers that were unimaginable in his time.

We stand on the shoulders of giants: people who, in a world filled with dragons and spectres, tried to build a better future. The torch has been passed to us, and with it a great responsibility, a duty towards those who came before us and those who will come after us, who will be to us as a butterfly is to a caterpillar.