Thoughts on EA Epistemics Projects

By hbesceli @ 2024-07-31T08:31 (+32)

I’m excited to see more projects that are focused on improving EAs’ epistemics.

I work at the EA Infrastructure Fund, and I’m wanting to get a better sense of whether it’s worth us doing more grantmaking in this area, and what valuable projects in this area would look like. (These are my own opinions and this post isn’t intended to reflect the opinions of EAIF as a whole.)

I’m pretty early on in thinking about this. With this post I’m aiming to spell out a bit of why I’m currently excited about this area, and what kinds of projects I’m excited about in particular.

Why EA epistemics?

Basically, it seems to me that having good epistemics is pretty important for effectively improving the world. And I think EA could do better on this front.

I think EA already does well on this front. Relative to other communities I’m aware of, I think EA has pretty good epistemics. And I think we could do better, and if we did, that would be pretty valuable.

I don’t have a great sense of how tractable 'improving EA's epistemics' is, though I suspect that there’s some valuable work to be done here. In part this is because I think that EA and the world in general already contain a lot of good epistemic practices, tools and culture, and that there are significant gains to be had from more widespread adoption of these within EA. I also think that there’s a lot of room for innovation here, or pushing the forefront of good epistemics - I think a bunch of this has happened within EA already, and I’d be surprised if there wasn’t more progress we could make.

My impression is that this area is somewhat neglected. I don’t know of many strongly epistemics focused projects, though it’s possible that there’s a bunch of things that are happening here that I’m unaware of. I’d find it particularly useful to hear any examples of things related to the following areas.

The kinds of projects I currently feel excited about

Projects which support the epistemics of EA related organisations

- Most EA work (eg. people working towards effectively improving the world) happens within organisations. And so being able to improve the epistemics of such organisations seems particularly valuable.

- I think having good epistemic culture and practices within an organisation can be hard. Often most of the focus of an organisation is on their object level work, and it can be easy for the project of improving the organisation’s epistemic culture and practices to fall by the wayside.

- The bar for providing value here is probably going to be pretty high. The opportunity cost of organisations’ time will be pretty high, and I could see it being difficult to provide value externally. That being said, I think if there was a project that ended up being significantly useful to EA related organisations, and ended up significantly improving the organisation's epistemics, that seems pretty good to me.

- An example of a potential project here: A consultancy which provides organisations support in improving their epistemics.

Projects which help people think better about real problems

- By real problems, I mean problems with stakes or consequences for the world, as opposed to toy problems.

- For example, epistemics coaching seems like a plausibly valuable service within the community. I’m a lot more excited about the version of this which is eg. you hire someone who is a great thinker to give you feedback on a problem that you are working on, and how to think well about it - and less excited about the version which is eg. you hire someone to generally teach you how to have good epistemics, or things which treat epistemics as a ‘school subject’ (though maybe this could be good too). Main reasons here:

- Thinking well on a toy problem can look pretty different to thinking well in a real world problem. Real world problems often have things like having stakeholders, deadlines, people with different opinions, limited information etc. And I think a lot of the value of having good epistemics is being able to think well in these kinds of situations.

- Also, I expect this to mean that any kind of epistemics service has a lot better feedback loops, and is less likely to end up promoting some kind of epistemic approach which isn’t useful (or even harmful).

Projects which treat good epistemics as an area of active ongoing development for EAs

- Here’s an unfair caricature of EA’s relationship with epistemics: Epistemic development is a project for people who are getting involved in EA. Once they’ve learnt the things (like BOTECs and what scout mindset is and so on), then job done, they are ready for the EA job market. I don’t think this is a fair characterisation, though I think EA’s relationship with epistemics resembles this more than I’d like it to.

- I’m more excited about a version of the EA community which more strongly treats having good epistemics as a value that people are continually aspiring towards (even once they are ‘working in EA’) and continues to support them in this aim.

Projects which are focused on building communities or social environments where having good epistemics is a core aspect of the community, if not the core aspect of the community.

- I think EA groups have ‘good epistemics’ as one focus of what they do, though I think they are also more focused on eg. people learning the ‘EA canon’ than I would like, and more focused on recruitment than I would like. (I’m not trying to make a claim here about what EA groups should be doing, plausibly having these focuses makes sense for other reasons, though I’d also additionally/ separately be excited about groups with a stronger focus on thinking well).

Final thoughts

In general I’m excited about EA being a great epistemic environment. Or maybe more specifically, I’m excited for EAs to be in a great epistemic environment (that environment might not necessarily be the EA community). Here’s a question:

How much do you agree with the following statement: ‘I’m in a social environment that helps me think better about the problems I’m trying to solve’?

I’m excited about a world where EAs can answer ‘yes, 10/10’ to this question, in particular EAs that are working on particularly pressing problems.

Thanks to Alejandro Ortega, Caleb Parikh and Jamie Harris for previous comments on this. They don’t necessarily (and in fact probably don’t) agree with everything I’ve written here.

NunoSempere @ 2024-07-31T16:58 (+28)

For a while, I've been thinking about the following problem: as you get better models of the world/ability to get better models of the world, you start noticing things that are inconvenient for others. Some of those inconvenient truths can break coordination games people are playing, and leave them with worse alternatives.

Some examples:

- The reason why organization X is doing weird things is because their director is weirdly incompetent

- XYZ is explained by people jockeying for influence inside some organization

- Y is the case but would be super inconvenient to the ideology du jour

- Z is the case, but our major funder is committed to believing that this is not the case

- E.g., AI is important, but a big funder thinks that it will be important in a different way and there is no bandwidth to communicate.

- Jobs in some area are very well paid, which creates incentives for people to justify that area

- Someone builds their identity on a linchpin which is ultimately false ("my pet area is important")

- "If I stopped believing in [my religion] my wife would leave me"—true story.

- Such and such a cluster of people systematically overestimate how altruistic they are, which has a bunch of bad effects for themselves and others when they interact with organizations focused on effectiveness

Poetically, if you stare into the abyss, the abyss then later stares at others through your eyes, and people don't like that.

I don't really have many conclusions here. So far when I notice a situation like the above I tend to just leave, but this doesn't seem like a great solution, or like a solution at all sometimes. I'm wondering whether you've thought about this, about whether and how some parts of what EA does are premised on things that are false.

Perhaps relatedly or perhaps as a non-sequitur, I'm also curious about what changed since your post a year ago talking about how EA doesn't bring out the best in you.

hbesceli @ 2024-08-01T09:44 (+7)

Perhaps relatedly or perhaps as a non-sequitur, I'm also curious about what changed since your post a year ago talking about how EA doesn't bring out the best in you.

This seems related to me, and I don't have a full answer here, but some things that come to mind:

- For me personally, I feel a lot happier engaging with EA than I did previously. I don't quite know why this is, I think some combination of: being more selective in terms of what I engage with and how, having a more realistic view of what EA is and what to expect from it, being part of other social environments which I get value from and make me feel less 'attached to EA' and my mental health improving. And also perhaps having a stronger view of what I want to be different with EA, and feeling more willing to stand behind that.

- I still feel pretty wary of things which I feel that EA 'brings out of me' (envy, dismissiveness, self-centredness etc.) which I don't like, and it still can feel like a struggle to avoid the pull of those things.

hbesceli @ 2024-08-01T09:51 (+5)

as you get better models of the world/ability to get better models of the world, you start noticing things that are inconvenient for others. Some of those inconvenient truths can break coordination games people are playing, and leave them with worse alternatives.

I haven't thought about this particular framing before, and it's interesting to me to think about - I don't quite have an opinion on it at the moment. Here's some of the things that are on my mind at the moment which feel related to this.

Jona @ 2024-07-31T12:00 (+13)

- Thanks for creating this post!

- I think it could be worth clarifying how you operationalize EA epistemics. In this comment, I mostly focus on epistemics at EA-related organizations and focus on "improving decision-making at organizations" as a concrete outcome of good epistemics.

- I think I can potentially provide value by adding anecdotal data points from my work on improving epistemics of EA related organizations. For context, I work at cFactual, supporting high-impact organizations and individuals during pivotal times. So far we have done 20+ projects partnering with 10+ EA adjacent organizations.

- Note that there might be several sampling biases and selection effects, e.g., organizations who work with us are likely not representing all high-impact organizations.

- So please read it as what it is: mixed-confidence thoughts based on weak anecdotal data from doing projects on important decisions for almost 2 years.

- Overall, I agree with you that epistemics at EA orgs tend to be better than what I have seen while doing for-profit-driven consulting in the private, public and social sectors.

- For example, following a simple decision document structure including: Epistemic status, RAPID, current best guess, main alternatives considered, best arguments for the best guess, best arguments against the best guess, key uncertainties and cruxes, most likely failure mode and things we would do if we had more time, is something I have never seen in the non-EA world.

- The services we list under "Regular management and leadership support for boards and executives" are gaps we see that often ultimately improve organizational decision-making.

- Note that clients pay us, hence we are not listing things which could be useful but don't have a business model (like writing a report on improving risk management by considering base rates and how risks link and compound).

- I think many of the gaps we are seeing are more about getting the basics right in the first place and don't require sophisticated decision-making methods, e.g.,

- spending more time developing goals incl. OKRs, plans, theories of change, impact measurement and risk management

- Quite often it is hard for leaders to spend time on the important things instead of the urgent and important things, e.g., more sophisticated risk management still seems neglected at some organizations even after the FTX fall-out

- improving executive- and organization-wide reflection, prioritization and planning rhythms

- asking the right questions and doing the right, time-effective analysis at a level which is decision-relevant

- getting an outside view on important decisions and CEO performance from well-run boards, advisors and coaches

- improving the executive team structure and hiring the right people to spend more time on the topics above

- Overall, I think the highest variance on whether an organization has good epistemics can be explained by hiring the right people and the right people simply spending more time on the prioritized, important topics. I think there are various tweaks on culture (e.g., rewarding if someone changes someone's mind, introducing an obligation to dissent and Watch team backup), processes (e.g., having a structured and regular retro and prioritization session, making forecasts when launching a new project) as well as targeted upskilling (e.g., there are great existing calibration tools which could be included in the onboarding process) but the main thing seems to be something simple like having the right people, in the right roles spending their time on the things that matter most.

- I think simply creating a structured menu of things organizations currently do to improve epistemics (aka a google doc) could be a cost-effective MVP for improving epistemics at organizations

- To provide more concrete, anecdotal data on improving epistemics of key organizational decisions, the comments I leave most often when redteaming google docs of high impact orgs are roughly the following:

- What are the goals?

- Did you consider all alternatives? Are there some shades of grey between Option A and B? Did you also consider postponing the decisions?

- What is the most likely failure mode?

- What are the main cruxes and uncertainties which would influence the outcome of the decision and how can we get data on this quickly? What would you do if you had more time?

- Part X doesn't seem consistent with part Y

- To be very clear,

- I also think that I am making many of these prioritization and reasoning mistakes myself! Once a month, I imagine providing advice to cFactual as an outsider and every time I shake my head due to obvious mistakes I am making.

- I also think there is room to use more sophisticated methods like forecasting for strategy, impact measurement and risk management or other tools mentioned here and here

Chris Leong @ 2024-08-01T05:27 (+9)

Three Potential Project Ideas:

Alternate Perspectives Fellowship:

A bunch of EAs explore different non-EA perspectives for X number of weeks, then each participant writes up a blog post based on something that they learned or one place where they updated. There could also be a cohort post that contains a paragraph or two from each participant about their biggest updates.

It probably wouldn't make sense for this to scale massively. Instead, you'd want to try to recruit skilled communicators or decision-makers such that this course could be impactful even with only a small number of participants.

Rethinking EA Fellowship

Get a bunch of smart young EAs from different backgrounds into the same room for two weeks. In the first week, ask them to rethink EA/the EA community from the ground up, taking into account how the situation has shifted since EA was founded.

During the second week, bring in a bunch of experienced EAs who can share their thoughts on why things are the way that they are. The young EAs can then revise their suggestions, taking this feedback into account.

Alternate Positive Vision Competition

We had a criticism competition before. Unfortunately, only a small proportion of the criticism seemed valuable (no offense to anyone!) and too much criticism creates a negative social environment. So what I'd love to propose as an alternative would be a competition to craft an alternate positive vision.

Nathan Young @ 2024-07-31T15:15 (+8)

I am happy to see this. Have you messaged people on the EA and epistemics slack?

Here are some epistemics projects I am excited about:

- Polymarket and Nate Silver - It looks to me that forecasting was 1 - 5% of the Democrats dropping Biden from their ticket. Being able to rapidly see the drop in his % chance of winning during the debate, holding focus on this poor performance over the following weeks and seeing momentum increase for other candidates all seemed powerful[1].

- X Community Notes - that one of the largest social media platforms in the world has a truth seeking process with good incentives is great. For all Musk's faults, he has pushed this and it is to his credit. I think someone should run a think tank to lobby X and other orgs into even better truth seeking

- The Swift Centre - large conflict of interest, since I forecast for them, but as a forecasting consultancy that is managing to stand largely (entirely?) without grant funding, just getting standard business gigs, if I were gonna suggest epistemics consulting, I'd probably recommend us. The Swift Centre is a professional org that has worked with DeepMind and the Open Nuclear Network.

- Discourse mapping - Many discussions happen often and we don't move forward. Personally I'm really excited about trying to find consensus positions to allow focus to be freed for more important stuff. Here is the site my team mocked up for Control AI, but I think we could have similar discourse mapping for SB 1047, different approaches to AI safety

- The Forum's AI Welfare Week - I enjoyed a week of focus on a single topic. I reckon if we did about 10 of these we might really start to get somewhere. Perhaps with clustering on different groups based on their positions on initial spectra.

- Sage's Fatebook.io - a tool for quickly making and tracking forecasts. The only tool I've found that I show to non-forecasting business people that they say "oh what's that, can I use that". I think Sage should charge for this and try and push it as a standard SaaS product.[2]

And a quick note:

An example of a potential project here: A consultancy which provides organisations support in improving their epistemics.

I think the obvious question here should be "how would you know such a consultancy has good epistemics".

As a personal note, I've been building epistemic tools for years, eg estimaker.app or casting around for forecasting questions to write on. The FTXFF was pretty supportive of this stuff, but since its fall I've not felt like big EA finds my work particularly interesting or worthy of support. Many of the people I see doing interesting tinkering work like this end up moving to AI Safety.

[1] Note that powerful and positively impactful aren't the same thing, but here people who said Biden was too old should be glad he is gone, by their own lights.

[2] Though maybe we let Adam finish his honeymoon first. Congratulations to the happy couple!

Saul Munn @ 2024-08-01T06:51 (+1)

It looks to me that forecasting was 1 - 5% of the Democrats dropping Biden from their ticket.

curious where you're getting this from?

Nathan Young @ 2024-08-01T11:43 (+4)

I made it up[1].

But, as I say in the following sentences it seems plausible to me that without betting markets to keep the numbers accessible and Silver to keep pushing on them, it would have taken longer for the initial crash to become visible, it could have faded from the news and it could have been hard to see that others were gaining momentum.

All of these changes seem to increase the chance of Biden staying in, which was pretty knife edgy for a long time.

Saul Munn @ 2024-08-02T03:25 (+3)

thanks for the response!

looks like the link in the footnotes is private. maybe there’s a public version you could share?

re: the rest — makes sense. 1%-5% doesn’t seem crazy to me, i think i would’ve “made up” 0.5%-2%, and these aren’t way off.

Nathan Young @ 2024-08-02T19:00 (+4)

How about now https://nathanpmyoung.substack.com/p/forecasting-is-mostly-vibes-so-is

Saul Munn @ 2024-08-04T04:21 (+1)

works! thx

hbesceli @ 2024-07-31T15:32 (+1)

Have you messaged people on the EA and epistemics slack?

... there is an EA and epistemics slack?? (cool!) if it's free for anyone to join, would you be able to send me an access link or somesuch?

Ozzie Gooen @ 2024-07-31T16:02 (+11)

Invited! Feel free for others who are somewhat active in the space to ping me for invites.

peterhartree @ 2024-08-01T11:06 (+6)

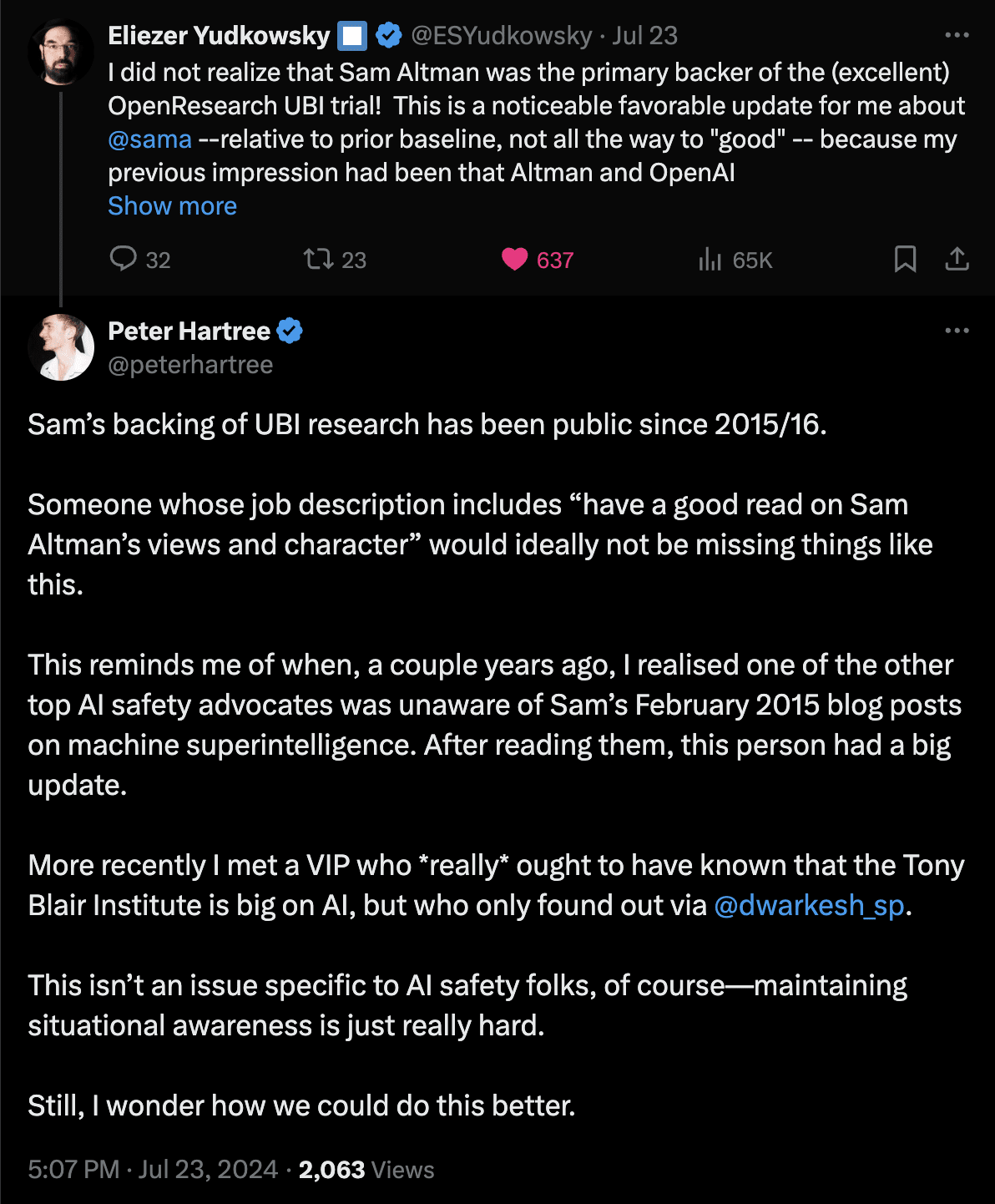

I think that "awareness of important simple facts" is a surprisingly big problem.

Over the years, I've had many experiences of "wow, I would have expected person X to know about important fact Y, but they didn't".

The issue came to mind again last week:

My sense is that many people, including very influential folks, could systematically—and efficiently—improve their awareness of "simple important facts".

There may be quick wins here. For example, there are existing tools that aren't widely used (e.g. Twitter lists; Tweetdeck). There are good email newsletters that aren't reliably read. Just encouraging people to make this an explicit priority and treat it seriously (e.g. have a plan) could go a long way.

I may explore this challenge further sometime soon.

I'd like to get a better sense of things like:

a. What particular things would particular influential figures in AI safety ideally do?

b. How can I make those things happen?

As a very small step, I encouraged Peter Wildeford to re-share his AI tech and AI policy Twitter lists yesterday. Recommended.

Happy to hear from anyone with thoughts on this stuff (p@pjh.is). I'm especially interested to speak with people working on AI safety who'd like to improve their own awareness of "important simple facts".

peterhartree @ 2024-08-01T11:24 (+4)

There are good email newsletters that aren't reliably read.

Readit.bot turns any newsletter into a personal podcast feed.

TYPE III AUDIO works with authors and orgs to make podcast feeds of their newsletters—currently Zvi, CAIS, ChinAI and FLI EU AI Act, but we may do a bunch more soon.

JamesN @ 2024-07-31T19:43 (+3)

I think this is a really important area, and it is great someone is thinking whether EAIF could expand more into this sort of area.

To provide some thoughts of my own having explored working with a few EA entities through our consultancy (https://www.daymark-di.com/) that works to help organisations improve their decision making processes, along with discussions I've had with others on similar endeavours:

- Funding is a key bottleneck, which isn't surprising. I think there is naturally an aversion to consultancy-type support in EA organisations, mostly driven by lack of funds to pay for it and partly because I think they are concerned about how it'll look in progress reports if they spend money on consultants.

- EAIF funding could make this easier, as it'll remove the entire cost above (or a large part of it).

There does appear to be a fairly frequent assumption that EA organisations suffer less from poor epistemics and decision making practices, which my experience suggests is somewhat true but unfortunately not entirely. I want to repeat what Jona from cFactual commented: there are lots of actions EA organisations take that are very positive, such as BOTECs, identifying likely failure modes, and using decision matrices. This should be praised, as many organisations don't even do these. However, the simple existence of these is too often assumed to mean good analysis and judgment will naturally occur, and the systems/processes that are needed to make them useful are often lacking. To give a concrete example: few BOTECs incorporate second-order probability/confidence correctly (or they conflate it with first-order probability), so they fail to properly account for the uncertainty of the calculation and for which comparisons between options can accurately be made.
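One way to read the BOTEC point above is sketched below. This is a minimal illustration, not the commenter's method: the two options, the Beta and uniform distributions and every number are made up for the example, and `simulate_option` and `summarize` are hypothetical helper names. Option A has a success probability of about 0.5 that we are very unsure of, and option B one of about 0.4 that we are fairly confident in.

```python
import random


def simulate_option(rng, p_alpha, p_beta, value_low, value_high, n=20_000):
    """Return n Monte Carlo draws of (success probability * value if successful)."""
    draws = []
    for _ in range(n):
        # Second-order uncertainty: the success probability is itself uncertain,
        # modelled here (as an assumption) with a Beta distribution.
        p = rng.betavariate(p_alpha, p_beta)
        # Uncertainty about the size of the payoff, modelled as uniform.
        value = rng.uniform(value_low, value_high)
        draws.append(p * value)
    return draws


def summarize(draws):
    """Mean and a 90% interval (5th to 95th percentile) of the draws."""
    ordered = sorted(draws)
    n = len(ordered)
    return {
        "mean": sum(ordered) / n,
        "p5": ordered[int(0.05 * n)],
        "p95": ordered[int(0.95 * n)],
    }


rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Hypothetical option A: Beta(2, 2) is centred on 0.5 but very wide.
option_a = simulate_option(rng, 2, 2, 80, 120)
# Hypothetical option B: Beta(40, 60) is centred on 0.4 and much narrower.
option_b = simulate_option(rng, 40, 60, 90, 110)

summary_a = summarize(option_a)
summary_b = summarize(option_b)

# Share of paired draws in which A's estimate exceeds B's.
prob_a_beats_b = sum(a > b for a, b in zip(option_a, option_b)) / len(option_a)

print(summary_a)
print(summary_b)
print(round(prob_a_beats_b, 2))
```

A point-estimate BOTEC would report roughly 50 for A against 40 for B and stop there. Carrying the uncertainty through shows that A's interval is several times wider than B's, and that A comes out ahead in only a modest majority of draws, which is a much weaker basis for preferring A than the point estimates suggest.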

It has been surprising to observe the difference between some EA organisations and non-EA institutions when it comes to interest in improving their epistemics/decision making, with large institutions (including governmental ones) being more receptive and proactive in trying to improve. Often those institutions are mostly constrained by their slow procurement processes as opposed to appetite.

When it comes to future projects, my recommendations of those with highest value add would be:

- Projects that help organisations improve their accountability, incentive, and prioritisation mechanisms. In particular helping to identify and implement internal processes that properly link workstreams/actions/decisions/judgments to the goals of the organisation and that role's objectives. This is something which is most useful to larger organisations (and especially those that make funding/granting decisions or recommendations), but can also be something which smaller organisations would benefit from.

- Projects that help organisations improve their processes to more accurately and efficiently reason under uncertainty, namely assisting with defining the prediction/diagnostic they are trying to make a judgment on, identifying the causal chain and predictors (and their relative importance) that underpin their judgment, and providing them with a framework to communicate their uncertainty and reasoning in a transparent and consistent way.

- Projects that provide external scrutiny and advanced decision modelling capabilities. I think at a low level there are some relatively easy wins that can be gained from having an entity/service which red teams and provides external assessment of big decisions that organisations are looking at making (e.g. new requests for new funding). At a greater level there should be more advanced modelling (including using tools such as Bayesian models) which can provide transparent and updatable analysis which can expose conditional relationships that we can't properly account for in our minds.

- I think funding entities like EA Funds could utilise such an entity/entities to inform their grant making decisions (e.g. if such analysis was instrumental in the decision EA Funds made on a grant request, they'd pay half of the cost of the analysis).
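As a very small illustration of what "transparent and updatable analysis" could mean at its simplest, here is a sketch of a single Bayes' rule update. The function name `bayes_update` and all the numbers are hypothetical, and a real decision model would involve many more variables and conditional relationships than this.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Posterior probability of a hypothesis after observing one piece of evidence.

    prior: probability the hypothesis is true before seeing the evidence
    p_evidence_if_true: chance of seeing this evidence if the hypothesis is true
    p_evidence_if_false: chance of seeing this evidence if it is false
    """
    numerator = prior * p_evidence_if_true
    return numerator / (numerator + (1 - prior) * p_evidence_if_false)


# Hypothetical example: prior belief that a project will hit its goal.
prior = 0.30

# A positive pilot result, assumed four times as likely if the project is on track.
after_one_pilot = bayes_update(prior, 0.8, 0.2)

# A second, independent positive result updates the new belief in the same way.
after_two_pilots = bayes_update(after_one_pilot, 0.8, 0.2)

print(round(after_one_pilot, 3), round(after_two_pilots, 3))
```

Writing the prior and the likelihoods down explicitly is what makes the analysis inspectable: a reviewer can disagree with a specific number, change it, and see how far the conclusion moves.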