My tentative best guess on how EAs and Rationalists sometimes turn crazy

By Habryka [Deactivated] @ 2023-06-21T04:11 (+164)

Epistemic status: This is a pretty detailed hypothesis that I think overall doesn’t add up to more than 50% of my probability mass on explaining datapoints like FTX, Leverage Research, the LaSota crew etc., but is still my leading guess for what is going on. I might also be really confused about the whole topic.

Since the FTX explosion, I’ve been thinking a lot about what caused FTX and, relatedly, what caused other similarly crazy- or immoral-seeming groups of people in connection with the EA/Rationality/X-risk communities.

I think there is a common thread between a lot of the people behaving in crazy or reckless ways, that it can be explained, and that understanding what is going on there might be of enormous importance in modeling the future impact of the extended LW/EA social network.

The central thesis: "People want to fit in"

I think the vast majority of the variance in whether people turn crazy (and ironically also whether people end up aggressively “normal”) depends on their desire to fit into their social environment. The forces of conformity are enormous, and most people are willing to change quite drastically how they relate to themselves, and what they are willing to do, in response to relatively weak social forces, especially in the context of social hyperstimulus (lovebombing is one central example of social hyperstimulus, but Twitter mobs and social-justice cancelling behaviors seem similar to me in that they evoke extraordinarily strong reactions in people).

My current model is that this kind of motivation in people is quite path-dependent and myopic. Even if someone could leave a social context that seems kind of crazy or abusive to them and find a better one, often with only a few weeks of effort, they rarely do this (they won't necessarily find a great social context, since social relationships do take quite a while to form, but at least when I've observed abusive dynamics, it wouldn't have taken them very long to find one that is better than the bad situation they are currently in). Instead, people are much more attached than I think rational choice theory would generally predict to the social context they end up in, and they very rarely even consider the option of leaving and joining another one.

This means that I currently think that the vast majority of people (around 90% of the population or so) are totally capable of being pressured into adopting extreme beliefs, being moved to extreme violence, or participating in highly immoral behavior, if you just put them into a social context where the incentives push in the right direction (see also Milgram and the effectiveness of military drafts).

In this model, the primary reason people are not crazy is that social institutions and groups that drive people to extreme action tend to be short-lived. The argument here is an argument from selection, not planning. Cults that drive people to extreme action die out quite quickly since they make enemies, or engage in various types of self-destructive behavior. Moderate religions that include some crazy stuff, but mostly cause people to care for themselves and not go crazy, survive through the ages and become the primary social context for a large fraction of the population.

There is still a question of how you end up with groups of people who do take pretty crazy beliefs extremely seriously. I think there are a lot of different attractors that cause groups to end up with more of the crazy kind of social pressure. Sometimes people who are more straightforwardly crazy, who have really quite atypical brains, end up in positions of power and set a bunch of bad incentives. Sometimes it’s lead poisoning. Sometimes it’s sexual competition. But my current best guess for what explains the majority of the variance here is virtue-signaling races combined with evaporative cooling.

Eliezer has already talked a bunch about this in his essays on cults, but here is my current short story for how groups of people end up having some really strong social forces towards crazy behavior.

- There is a relatively normal social group.

- There is a demanding standard that the group is oriented around, which is external to any specific group member. This can be something like “devotion to god” or it can be something like the EA narrative of trying to help as many people as possible.

- When individuals signal that they are living their life according to the demanding standard, they get status and respect. The inclusion criterion in the group is whether someone is sufficiently living up to the demanding standard, according to vague social consensus.

- At the beginning this looks pretty benign and like a bunch of people coming together to be good improv theater actors or something, or to have a local rationality meetup.

- But if group members are insecure enough, or if there is some limited pool of resources to divide up that each member really wants for themselves, then each member experiences a strong pressure to signal their devotion harder and harder, often burning substantial personal resources.

- People who don’t want to live up to the demanding standard leave, which causes evaporative cooling and raises the standards for the people who remain. Frequently this also causes the group to lose critical mass.

- The preceding steps cause a runaway signaling race in which people increasingly devote their resources to living up to the group's extreme standard, and profess more and more extreme beliefs in order to signal that they are living up to that extreme standard.

I think the central driver in this story is the same central driver that causes most people to be boring, which is the desire to fit in. Same force, but if you set up the conditions a bit differently, and add a few additional things to the mix, you get pretty crazy results.

Applying this model to EA and Rationality

I think the primary way the EA/Rationality community creates crazy stuff is by the mechanism above. I think a lot of this is just that we aren’t very conventional and so we tend to develop novel standards and social structures, and those aren’t selected for not-exploding, and so things we do explode more frequently. But I do also think we have a bunch of conditions that make the above dynamics more likely to happen, and also make the consequences of the above dynamics worse.

But before I go into the details of the consequences, I want to talk a bit more about the evidence I have for this being a good model.

- Eliezer wrote about something quite close to this 10+ years ago and derived it from a bunch of observations of other cults, before our community had really shown any of these dynamics, so it wins some “non-hindsight bias” points.

- I think this fits the LaSota crew situation in a lot of detail. A bunch of insecure people who really want a place to belong find the LaSota crew, which offers them a place to belong, but comes with (pretty crazy) high standards. People go crazy trying to demonstrate devotion to the crazy standard.

- I also think this fits the FTX situation quite well. My current best model of what happened at an individual psychological level was many people being attracted to FTX/Alameda because of the potential resources, then many rounds of evaporative cooling as anyone who was not extremely hardcore according to the group standard was kicked out, with there being a constant sense of insecurity for everyone involved that came from the frequent purges of people who seemed to not be on board with the group standard.

- This also fits my independent evidence from researching cults and other more extreme social groups, and what the dynamics there tend to be. One concrete prediction of this model is that the people who feel most insecure tend to be driven to the most extreme actions, which is borne out in a bunch of cult situations.

Now, I think a bunch of EA and Rationality stuff tends to make the dynamics here worse:

- We tend to attract people who are unwelcome in other parts of the world. This includes a lot of autistic people, trans people, atheists from religious communities, etc.

- The standards that we have in our groups, especially within EA, have signaling spirals that pass through a bunch of possibilities that sure seem really scary, like terrorism or fraud (unlike e.g. a group of monks, who might have signaling spirals that cause them to meditate all day, which can be individually destructive but does not have a ton of externalities). Indeed, many of our standards directly encourage *doing big things* and *thinking worldscale*.

- We are generally quite isolationist, which means that there are fewer norms that we share with more long-lived groups which might act as antibodies for the most destructive kinds of ideas (importantly, I think these memes are not optimized for not causing collateral damage in other ways; indeed, many stability-memes make many forms of innovation or growth or thinking a bunch harder, and I am very glad we don’t have them).

- We attract a lot of people who are deeply ambitious (and also our standards encourage ambition), which means even periods of relative plenty can induce strong insecurities because people’s goals are unbounded, they are never satisfied, and marginal resources are always useful.

Now one might think that because we have a lot of smart people, we might be able to avoid the worst outcomes here, by just not enforcing extreme standards that seem pretty crazy. And indeed I think this does help! However, I also think it’s not enough because:

Social miasma is much dumber than the average member of a group

I think a key point to pay attention to in these kinds of runaway signaling dynamics is: “how does a person know what the group standard is?”.

And the short answer to that is “well, the group standard is what everyone else believes the group standard is”. And this is the exact context in which social miasma dynamics come into play. To any individual in a group, it can easily be the case that they think the group standard seems dumb, but in a situation of risk aversion, the important part is that you do things that look to everyone like the kind of thing that others would think is part of the standard. In practice this boils down to a very limited kind of reasoning where you do things that look vaguely associated with whatever you think the standard is, often without that standard being grounded in much of any robust internal logic. And things that are inconsistent with the actual standard upon substantial reflection do not actually get punished, as long as they look like behavior generated by someone trying to follow the standard.

(Duncan gives a bunch more gears and details on this in his “Common Knowledge and Social Miasma” post: https://medium.com/@ThingMaker/common-knowledge-and-miasma-20d0076f9c8e)

How do people avoid turning crazy?

Despite me thinking the dynamics above are real and common, there are definitely things that both individuals and groups can do to make this kind of craziness less likely, and less bad when it happens.

First of all, there are some obvious things this theory predicts:

- Don’t put yourself into positions of insecurity. This is particularly hard if you do indeed have world-scale ambitions. Have warning flags against desperation, especially when that desperation is related to things that your in-group wants to signal. Also, be willing to meditate on not achieving your world-scale goals, because if you are too desperate to achieve them you will probably go insane (for this kind of reason, and also some others).

- Avoid groups with strong evaporative cooling dynamics. As part of that, avoid very steep status gradients within (or on the boundary of) a group. Smooth social gradients are better than strict in-and-out dynamics.

- Probably be grounded in more than one social group. Even being part of two different high-intensity groups seems like it should reduce the dynamics here a lot.

- To some degree, avoid attracting people who have few other options, since it makes the already high switching and exit costs even higher.

- Confidentiality and obscurity feel like they worsen the relevant dynamics a lot, since they prevent other people from sanity-checking your takes (though this is also much more broadly applicable). For example, being involved in crimes makes it much harder to get outside feedback on your decisions, since telling people what decisions you are facing now exposes you to the risk of them outing you. Or working on dangerous technologies that you can't tell anyone about makes it harder to get feedback on whether you are making the right tradeoffs (since doing so would usually involve leaking some of the details behind the dangerous technology).

- Combat general social miasma dynamics (e.g. by running surveys or otherwise collapsing a bunch of the weird social uncertainty that makes things insane). Public conversations seem like they should help a bunch, though my sense is that if the conversation ends up being less about the object-level and more about persecuting people (or trying to police what people think) this can make things worse.

There are a lot of other dynamics that I think are relevant here, and I think there are a lot more things one can do to fight against these dynamics, and there are also a ton of other factors that I haven’t talked about (willingness to do crazy mental experiments, contrarianism causing active distaste for certain forms of common sense, some people using a bunch of drugs, high price of Bay Area housing, messed up gender-ratio and some associated dynamics, and many more things). This is definitely not a comprehensive treatment, but it feels like currently one of the most important pieces for understanding what is going on when people in the extended EA/Rationality/X-Risk social network turn crazy in scary ways.

titotal @ 2023-06-21T09:27 (+98)

My theory is that while EA/rationalism is not a cult, it contains enough ingredients of a cult that it’s relatively easy for someone to go off and make their own.

Not everyone follows every ingredient, and many of the ingredients are actually correct/good, but here are some examples:

- Devoting one's life to a higher purpose (saving the world)

- High cost signalling of group membership (donating large amounts of income)

- The use of in-group shibboleths (like “in-group” and “shibboleths”)

- The use of weird rituals and breaking social norms (Bayesian updating, “radical honesty”, etc.)

- A tendency to isolate oneself from non-group members (group houses, EA orgs)

- The belief that the world is crazy, but we have found the truth (rationalist thinking)

- The following of sacred texts explaining the truth of everything (the sequences)

- And even the belief in an imminent apocalypse (AI doom)

These ingredients do not make EA/rationalism in general a cult, because it lacks enforced conformity and control by a leader. Plenty of people, including myself, have posted on Lesswrong critiquing the sequences and Yudkowsky and been massively upvoted for it. It’s decentralised across the internet; if someone wants to leave, there’s nothing stopping them.

However, what seems to have happened is that multiple people have taken these base ingredients and just added in the conformity and charismatic leader parts. You put these ingredients in a small company or a group house, put an unethical or mentally unwell leader in charge, and you have everything you need for an abusive cult environment. Now it’s far more difficult to leave, because your housing/income is on the line, and the leader can use the already established breaking of social norms as an excuse to push boundaries and consent in the name of the greater good. This seems to have happened multiple times already.

I don’t know what to do if this theory is correct, besides applying extra scrutiny to leaders of sub-groups within EA, and maybe easing up on unnecessary rituals and jargon.

Habryka @ 2023-06-21T16:41 (+38)

For what it's worth, I view the central aim for this post to be to argue against the "cult" model, which I find quite unhelpful.

In my experience the cult model tends to have relatively few mechanistic parts, and mostly seems to put people into some kind of ingroup/outgroup mode of thinking where people make a list of traits, and then somehow magically as something has more of those traits, it gets "worse and scarier" on some kind of generic dimension.

Like, FTX just wasn't that much of a cult. Early CEA just wasn't that much of a cult by this definition. I still think they caused enormous harm.

I think breaking things down into "conformity + insecurity + novelty" is much more helpful in understanding what is actually going on.

For example, I really don't think "rituals and jargon" have much to do with what drives people to do more crazy things. Basically all religions have rituals and tons of jargon! So does academia. Those things are not remotely reliable indicators, and are not even correlated at all as far as I can tell.

John G. Halstead @ 2023-06-22T13:34 (+41)

Could you elaborate on how early CEA caused enormous harm? I'm interested to hear your thoughts.

Habryka @ 2023-06-24T19:41 (+6)

I would like to give this a proper treatment, though that would really take a long time. I think I've written about this some in the past in my comments, and I will try to dig them up in the next few days (though if someone else remembers which comments those were, I would appreciate someone else linking them).

(Context for other readers, I worked at CEA in 2015 and 2016, running both EAG 2015 and 2016)

Linch @ 2023-08-29T02:52 (+11)

Did you have a chance to look at your old comments, btw?

Habryka @ 2023-08-29T05:16 (+2)

I searched for like 5-10 minutes but didn't find them. Haven't gotten around to searching again.

titotal @ 2023-06-22T11:18 (+17)

Unfortunately, I think there is a correlation between traits and rituals that are seen as "weird" and the emergence of this bad behaviour.

For example, it seems like far more of these incidents are occurring in the rationalist community than in the EA community, despite the overlap between the two. It also seems like way more is happening in the Bay Area than in other places.

I don't think your model sufficiently explains why this would be the case, whereas my theory posits the explanation that the Bay Area rats have a much greater density of "cult ingredients" than, say, the EA meetup at some university in Belgium or wherever. People there have lives and friends outside of EA, they don't fully buy into the worldview, etc.

I'm not trying to throw the word "cult" out as a pejorative conversation ender. I don't think Leverage was a full-on religious cult, but it had enough in common with them that it ended up with the same harmful effects on people. I think the rationalist worldview leads to an increased ease of forming these sorts of harmful groups, which does not mean the worldview is inherently wrong or bad, just that you need to be careful.

David_Moss @ 2023-06-23T11:01 (+4)

For example, it seems like far more of these incidents are occurring in the rationalist community than in the EA community, despite the overlap between the two. It also seems like way more is happening in the Bay Area than in other places.

I don't think your model sufficiently explains why this would be the case

One explanation could simply be that social pressure in non-rationalist EA mostly pushes people towards "boring" practices rather than "extreme" practices.

Ozzie Gooen @ 2023-06-22T02:06 (+9)

I think cults are a useful lens to understand other social institutions, including EA.

I don't like most "is a cult"/"is not a cult" binaries. There's often a wide gradient.

To me, lots of academia scores highly on several cult-like markers. Political parties can score very highly (some of the MAGA/Proud Boys stuff comes to mind).

The inner circle of FTX seemed to have some cult-like behaviors, though to me, that's also true of a lot of startups and intense situations.

I did like this test, where you can grade groups on a scale to see how "cult-like" they are.

http://www.neopagan.net/ABCDEF.html

All that said, I do of course get pretty annoyed when most people criticize EA as being a cult, where I often get the sense that they treat it as a binary, and also are implying something that I don't believe.

Michael_PJ @ 2023-06-21T16:38 (+28)

I think another contributing factor is that as a community we a) prize (and enjoy!) unconventional thinking, and b) have norms that well-presented ideas should be discussed on their merits, even if they seem strange. That means that even the non-insane members of the community often say things that sound insane from a common-sense perspective, and that many insane ideas are presented at length and discussed seriously by the community.

I think experiencing enough of this can disable your "insane idea alarm". Normally, if someone says something that sounds insane, you go "this person is insane" and run away. But in the EA/rationalist community you instead go "hm, maybe this is actually a great but out-of-the-box idea?".

And then the bad state is that someone says something like "you should all come and live in a house where I am the ruler and I impose quasi-military discipline on you" and you go "hm, maybe this is an innovative productivity technique for generating world-saving teams" instead of immediately running away screaming.

ChanaMessinger @ 2023-06-22T13:48 (+5)

I strongly resonate with this; I think this dynamic also selects for people who are open-minded in a particular way (which I broadly think is great!), so you're going to get more of it than usual.

John G. Halstead @ 2023-06-22T13:33 (+25)

Thanks for this, I agree with almost all of it. Just one observation, which I think is important. You say "Now one might think that because we have a lot of smart people, we might be able to avoid the worst outcomes here, by just not enforcing extreme standards that seem pretty crazy."

I think the main factor that could contain craziness is social judgment rather than intelligence. So, I think the prevalence of autism in the community means that EAs will be less able than other social groups to contain craziness.

Vasco Grilo @ 2023-06-22T18:00 (+10)

Hi John,

Is there concrete data on the prevalence of autism in the EA community?

David_Moss @ 2023-06-23T13:03 (+22)

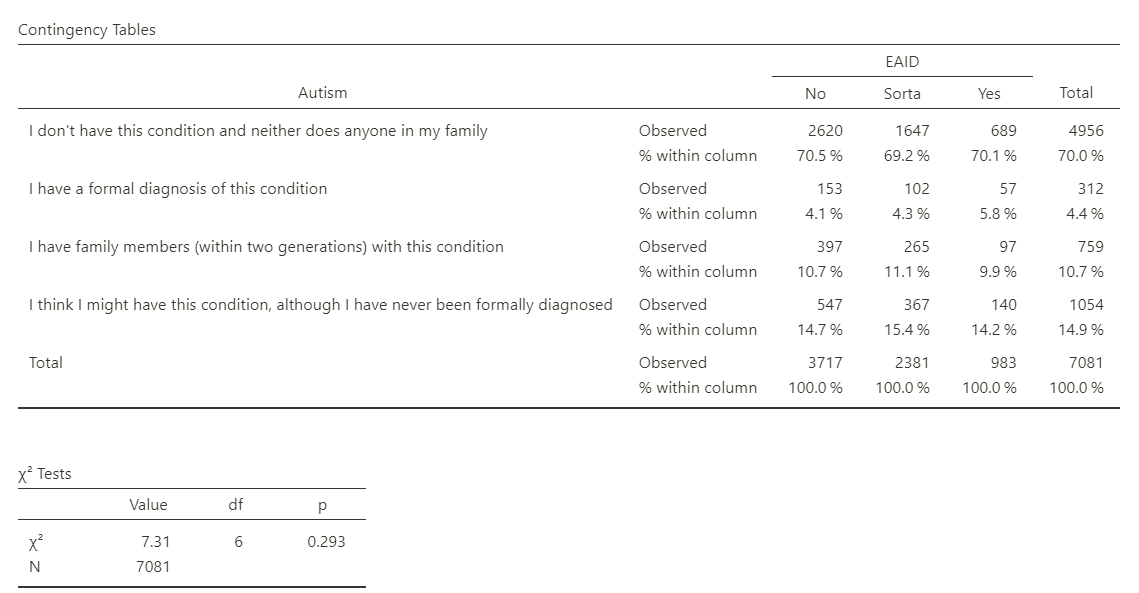

The 2020 SlateStarCodex survey has some data on this. Obviously it is quite limited by the fact that the sample is pre-selected for the (rationalist-leaning) SSC audience already, which you might also expect to be associated with autism.

This survey asked about identification as EA (No/Sorta/Yes) and autism (I don't have this condition and neither does anyone in my family/I have family members (within two generations) with this condition/I think I might have this condition, although I have never been formally diagnosed/I have a formal diagnosis of this condition). Unfortunately, these leave a lot of researcher degrees of freedom (e.g. what to do with the "Sorta" and the "never been formally diagnosed" respondents).

Just looking at the two raw measures straightforwardly, we see no significant differences, though you can see that there are more people with a formal diagnosis in the "Yes" category.

I'd be wary of p-hacking from here, though fwiw, with different, more focused analyses, the results are borderline significant (both sides of the borderline), e.g. looking at whether someone said "Yes" they are an EA * whether they are formally diagnosed with autism, there is a significant association (p=0.022).

I have previously considered whether it would be worth including a question about this in the EAS, but it seems quite sensitive and relatively low value given our high space constraints.

John G. Halstead @ 2023-06-22T19:33 (+8)

Not to my knowledge, though I think it's pretty clear.

David Mathers @ 2023-06-23T11:31 (+6)

This sounds kind of plausible to me, but couldn't you equally say that you'd expect autism to be a mitigating factor for cultiness, because cults are about conformity to group shibboleths for social reasons, which autistic people are better at avoiding? (At least, I'd have thought we are. Maybe only I have that perception?) That kind of makes me think that actually it is just easy to generate plausible-sounding hypotheses about the effects of a fairly broad and nebulous thing like "autistic traits", and maybe none of them should be taken that seriously without statistical evidence to back them up.

David Mathers @ 2023-06-21T08:58 (+22)

I think this sounds sensible in general, but FTX might have just been greed. Probably only a handful of people knew about the fraud, after all.

NickLaing @ 2023-06-21T11:18 (+2)

Yep I think this is most likely

ChanaMessinger @ 2023-06-22T13:53 (+20)

I'd be interested in more thoughts, if you have them, on evidence or predictions one could have made ahead of time that would distinguish this model from others (e.g. maybe a lot of what's going on is youth and will evaporate over time; youth would still have to be mediated by things like what you describe, but as an example).

Also, my understanding is that SBF wasn't very insecure? Does that affect your model or is the point that the leader / norm setter doesn't have to be?

Aaron Bergman @ 2023-06-24T01:24 (+14)

Seems like the forces that turn people crazy are the same ones that lead people to do anything good and interesting at all. At least for EA, a core function of orgs/elites/high-status community members is to make the kind of signaling you describe highly correlated with actually doing good. Of course it seems impossible to make them correlate perfectly, and that’s why settings with super high social optimization pressure (like FTX) are gonna be bad regardless.

But (again for EA specifically) I suspect the forces you describe would actually be good to increase on the margin for people not living in Berkeley and/or in a group house which is probably a majority of self-identified EAs but a strong minority of the people-hours OP interacts with irl.

Jobst Heitzig (vodle.it) @ 2023-09-13T05:45 (+5)

The "impossible to correlate perfectly" piece is like in AI alignment, where one could also argue that perfect alignment of a reward function to the "true" utility function is impossible.

Indeed, one might even argue that the joint cognition implemented by the EA/rationality/x-risk community as a whole is a form of "artificial" intelligence, let's call it "EI", and thus we face an "EI alignment" problem. As EA becomes more powerful in the world, we get "ESI" (effective altruism superhuman intelligence) and related risks from misaligned ESI.

The obvious solution in my opinion is the same for AI and EI: don't maximize, since the metric you might aim to maximize is most likely imperfectly aligned with true utility. Rather satisfice: be ambitious, but not infinitely so. After reaching an ambitious goal, check if your reward function still makes sense before setting the next, more ambitious goal. And have some human users constantly verify your reward function :-)

RyanCarey @ 2023-06-21T12:36 (+14)

This could explain the psychology of followers, but I'm not sure it speaks to the psychology of people who lead the cults, and how we can and should deal with these people.

Habryka @ 2023-06-21T16:41 (+18)

My current model is that the "leaders" are mostly the result of the same kind of dynamic, though I really don't feel confident in this.

At least in my experience of being in leadership positions, conformity pressures are still enormously huge (and indeed often greater than in less of a leadership position, since people apply a lot more pressure on what you believe and care a lot more about shaping your behavior).

Indeed, I would say that one of the primary predictions of my model is that solo-extremists are extremely rare, and small groups of extremists are much more common.

Karl von Wendt @ 2023-06-22T12:41 (+10)

I think a major contributing factor in almost all extremely unhealthy group dynamics is narcissism. Pathological narcissists have a high incentive to use the dynamics you describe to their advantage and are (as I have heard and have some anecdotal evidence for, but can't currently back up with facts) often found in leading positions in charities, religious cults, and all kinds of organizations. Since they hunger for being admired and are very good at manipulating people, they are attracted to charities and altruistic movements. Of course, a pathological narcissist is selfish by definition and completely unable to feel anything like compassion, so they are the antithesis of an altruist.

The problem is made worse because there are two types of narcissists: "grandiose" types who are easily recognizable, like Trump, and "covert" ones who are almost impossible to detect because they are often extremely skilled at gaslighting. My mother-in-law is of the latter type; it took me more than 40 years to figure that out and even realize that my wife had a traumatic childhood (she didn't realize this herself all that time, she just thought something was wrong with her). About 1-6% of adults are assumed to be narcissists, with the really dangerous types probably making up only a small fraction of that. Still, it means that in any group of people there's on average at least one narcissist for every 100 members, and I have personally met at least one of them in the EA community. They often end up in leading positions, which of course doesn't mean that all leaders are narcissists. But if something goes badly wrong with an altruistic movement, narcissism is one of the possible reasons I'd look into first.

It's difficult to tell from a distance, but for me, it looks like SBF may be a pathological narcissist as well. It would at least explain why he was so "generous" and supported EA so much with the money he embezzled.

Muireall @ 2023-06-21T15:06 (+10)

Social dynamics seem important, but I also think Scott Alexander in "Epistemic Learned Helplessness" put his finger on something important in objecting to the rationalist mission of creating people who would believe something once it had been proven to them. Together with "taking ideas seriously"/decompartmentalization, attempting to follow the rules of rationality itself can be very destabilizing.

Sarah Levin @ 2023-06-23T19:53 (+8)

I think trying to figure out the common thread "explaining datapoints like FTX, Leverage Research, [and] the LaSota crew" won't yield much of worth because those three things aren't especially similar to each other, either in their internal workings or in their external effects. "World-scale financial crime," "cause a nervous breakdown in your employee," and "stab your landlord with a sword" aren't similar to each other and I don't get why you'd expect to find a common cause. "All happy families are alike; each unhappy family is unhappy in its own way."

There's a separate question of why EAs and rationalists tolerate weirdos, which is more fruitful. But an answer there is also gonna have to explain why they welcome controversial figures like Peter Singer or Eliezer Yudkowsky, and why extremely ideological group houses like early Toby Ord's [EDIT: Nope, false] or more recently the Karnofsky/Amodei household exercise such strong intellectual influence in ways that mainstream society wouldn't accept. And frankly if you took away the tolerance for weirdos there wouldn't be much left of either movement.

Toby_Ord @ 2023-06-24T10:32 (+52)

extremely ideological group houses like early Toby Ord's

You haven't got your facts straight. I have never lived in a group house, let alone an extremely ideological one.

Sarah Levin @ 2023-06-24T16:46 (+15)

Huh! Retracted. I'm sorry.

DC @ 2023-06-23T21:59 (+7)

I think trying to figure out the common thread "explaining datapoints like FTX, Leverage Research, [and] the LaSota crew" won't yield much of worth because those three things aren't especially similar to each other, either in their internal workings or in their external effects.

There are many disparate factors between different cases, and the particulars of each incident really matter, lest people draw the wrong conclusions. However, I think figuring out the common threads, insofar as there are any, is what we need; otherwise we will overindex on particular cases and learn things that don't generalize. I have meditated on what the commonalities could be and think they at least share what one might call "Perverse Maximization", a label I intend to cover deontology (i.e. the sword stabbing) as well as utilitarianism. Maybe there's a better word than "maximize".

I think I discovered a commonality between the overconfidence these extremist positions exhibit and the underconfidence of EAs who are overwhelmed by analysis paralysis: a sort of "inability to terminate the closure-seeking process". For the overconfident, it's "I have found the right thing and I just need to zealously keep applying it over and over". For the underconfident, it's "this choice isn't right or perfect, I need to find something better, no, I need something better, I must find the right thing". Both share an intolerance of ambiguity, uncertainty, and shades of gray. The latter just more often ends with people quietly suffering from their perfectionism, or being the ones who get manipulated, than in any kind of outward explosion.

Nathan Young @ 2023-06-21T08:17 (+5)

And the short answer to that is “well, the group standard is what everyone else believes the group standard is”. And this is the exact context in which social miasma dynamics come into play. To any individual in a group, it can easily be the case that they think the group standard seems dumb, but in a situation of risk aversion, the important part is that you do things that look to everyone like the kind of thing that others would think is part of the standard.

- Combat general social miasma dynamics (e.g. by running surveys or otherwise collapsing a bunch of the weird social uncertainty that makes things insane). Public conversations seem like they should help a bunch, though my sense is that if the conversation ends up being less about the object-level and more about persecuting people (or trying to police what people think) this can make things worse.

My general sense is that we can do a lot to surface what we as a community actually believe and that will help a lot here.

E.g., polls like this one, where we learn what the median respondent actually thinks: https://viewpoints.xyz/polls/ea-strategy-1/analytics

ChanaMessinger @ 2023-06-22T13:50 (+4)

Yeah, I'm confused about this. Seems like some amount of "collapsing social uncertainty" is very good for healthy community dynamics, and too much (like having a live ranking of where you stand) would be wildly bad. I don't think I currently have a precise way of cutting these things. My current best guess is that the more you push to make the work descriptive, the better, and the more it becomes normative and "shape up!"-oriented, the worse, but it's hard to know exactly what ratio of descriptive:normative you're accomplishing via any given attempt at transparency or common knowledge creation.

Nathan Young @ 2023-06-22T15:38 (+1)

exactly what ratio you're accomplishing.

Sorry what do you mean here? With my poll specifically? The community in general?

ChanaMessinger @ 2023-06-22T15:39 (+2)

Ratio of descriptive: "this is how things are" to normative: "shape up"

Joseph Lemien @ 2023-06-21T14:42 (+3)

I'm nervous about using non-representative samples (such as only polling people who are active on Twitter) to strongly inform important decisions, but in general I am supportive of doing some polling to learn what people tend to think. I'd rather have some rough data on what people want than simply go by "vibes" and by what the people I read and talk to tend to say.

Evan_Gaensbauer @ 2023-06-21T04:42 (+4)

Do you intend to build on this by writing about the roles played by those other dynamics you mentioned but didn't write about in this post?

Jeffrey Kursonis @ 2023-06-22T20:58 (+1)

I have spent many years in multiple kinds of religious communities, and I can say I've seen this kind of dynamic... this is great thinking.

I remember reading about a group of people at Alameda early on, before FTX started, a good number of whom were EAs, who stood up to SBF and demanded a better contract; he dismissed them and they all left... One can't help but think that this helped create an evaporative concentration of people willing to go along with his crazy.

As an aside, for pondering: I experience the evaporative cooling dynamic in cooking almost daily, when you reduce a liquid to make a sauce with more concentrated flavors... this is always called a reduction, or a reduction sauce. In cooking it's a good thing... but knowing this dynamic from the kitchen helped me understand your argument.