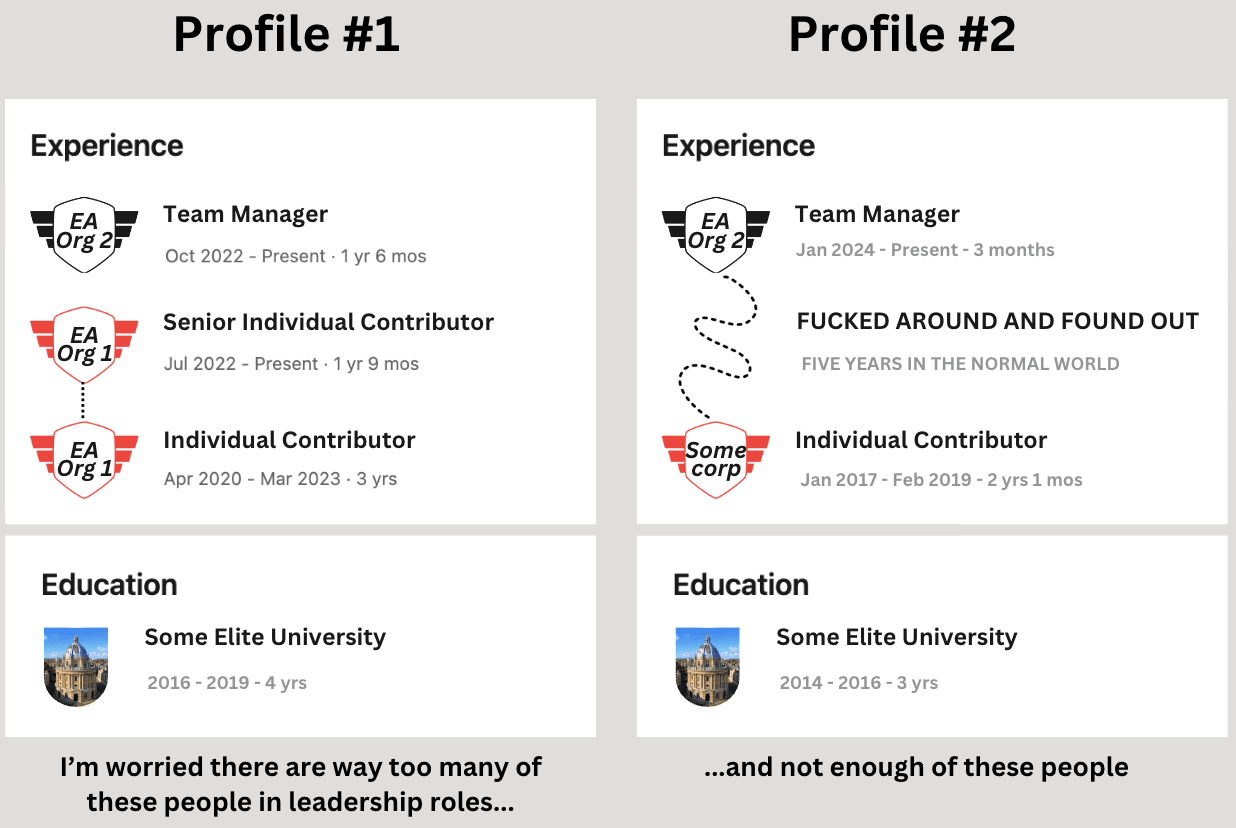

Why I worry about EA leadership, explained through two completely made-up LinkedIn profiles

By Yanni Kyriacos @ 2024-03-16T09:41 (+76)

The following story is fictional and does not depict any actual person or event...da da.

(You better believe this is a draft amnesty thingi).

Epistemic status: very low confidence, but niggling worry. Would LOVE for people to tell me this isn't something to worry about.

- - - - -

I've been around EA for about six years, and every now and then I have an old sneaky peek at the old LinkedIn profile.

Something I've noticed is that there seem to be a lot of people in leadership positions whose LinkedIn looks a lot like Profile #1 and not a lot whose LinkedIn looks like Profile #2. Allow me to spell out some of the important distinctions:

Profile #1:

- Immediately jumped into the EA ecosystem as an individual contributor

- Worked their way up through the ranks through good old fashioned hard work

- Has approximately zero experience in the non-EA workforce and definitely none managing non-EAs. Now they manage people

Profile #2:

- Like Profile #1, went to a prestigious uni, maybe did post grad, doesn't matter, not the major point of this post

- Got some grad gig in Mega Large Corporation and got exposure to normal people, probably crushed by the bureaucracy and politics at some point

- Most importantly, Fucked Around And Found Out (FAAFO) for the next five years. Did lots of different things across multiple industries. Gained a bunch of skills in the commercial world. Had their heart broken. Was not fossilized by EA norms. But NOW THEY'RE BACK BAYBEEE....

- - - - - -

If I had more time and energy I'd probably make some more evidenced claims about Meta issues, and how things like SBF, sexual misconduct cases or Nonlinear could have been helped with more of #2 than #1 but don't have the time or energy (I'm also less sure about this claim).

I also expect people in group 1 to downvote this and people in group 2 to upvote it, but most importantly, I want feedback on whether people think this is a thing, and if it is a thing, is it bad.

Lorenzo Buonanno @ 2024-03-16T17:40 (+142)

This doesn't really match my (relatively little) experience. I think it might be because we disagree on what counts as "EA Leadership": we probably have a different idea of what counts as "EA" and/or we have a different idea of what counts as "Leadership".

I think you might be considering as "EA leadership" senior people working in "meta-EA" orgs (e.g. CEA) and "only-EA experience" to also include people doing more direct work (e.g. GiveWell). So the CEO of Open Philanthropy would count as profile #1, having mostly previous experience at Open Philanthropy and GiveWell, but the CEO of GiveWell wouldn't count as profile #2 because they're not in an "EA leadership position". Is that correct?

"most importantly, I want feedback on whether people think this is a thing, and if it is a thing, is it bad."

I think the easiest way would be to compile a list of people in leadership positions and check their LinkedIn profiles.

Working on the assumption above for what you mean by "EA Leadership", while there is no canonical list of “meta-EA leaders”, a non-random sample could be this public list of some Meta Coordination Forum participants.[1]

Here's a quick (and inaccurate) short summary of what they show on LinkedIn before their roles:[2]

Edit: this is getting a lot of votes, I want to really highlight that this is not how I would compile a list of "EA Leadership".[3]

- CEO of Open Philanthropy since 2023: 6 years at Open Philanthropy before becoming co-CEO, 3 years as a research analyst at GiveWell

- CEA Head of Events since 2015: 2 years as "plaintiff’s attorney in Federal Court" at a non-EA org, 3 years at MIRI, and lots of things before

- Managing Director/co-founder at Effektiv Spenden since 2022: 3 years as Associate Director of a non-EA org, 5 years as Project Leader and Program Manager at non-EA orgs, 9 years at BCG, ...

- Website Director at 80,000 Hours since 2021: 1 year as research analyst at 80k, 5 years of PhD + University Lecturer, 2 years as content editor for a professor

- Research Analyst at Open Philanthropy since 2018: 3 years analyst at J.P. Morgan, 3 years D.Phil. at Oxford

- Division Manager at The Centre for Effective Altruism since 2020: 2 years at CEA, 1 year founding and selling a startup, 7 months recruiting at Ought (EA AI Safety org), 4 years founding and selling another startup, 8 years R&D Team Lead at Epic

- CEO at Clinton Health Access Initiative since 2022: 2 years as Managing Director of GiveWell, 8 years as CEO at IDinsight, 2 years at the World Bank, 1 year as Project Coordinator at J-PAL

- Project Lead at EA Funds since 2022: 5 months research assistant at GPI, 4 months contractor at CEA, 1 year director at EA London, 1 year founder of a Charity Entrepreneurship charity

- Community Health Analyst at CEA since 2022: 9 years mathematics teacher at three schools, 2 months instructor at two rationality camps

- Program Director at Open Philanthropy since 2023: 8 years at Open Philanthropy

- Co-Founder at BlueDot Impact since 2022: 3 years founder of Biosecurity Fundamentals/AI Safety Fundamentals/Existential Risk Alliance, 3 years Officer Cadet in the Royal Air Force, 7 years actor in a soap opera

- Senior Program Officer at Open Philanthropy since 2022: 5 years at GiveWell, 1 year at CEA, 4 years at L.E.K. Consulting.

- AI Alignment Researcher at Charles University since 2022: I assume they don't count as "Leader" according to your classification

- Co-founder at Charity Entrepreneurship/AIM since 2018: 2 years co-founder of Charity Science Health, 3 years co-founder of Charity Science

- Executive Director at Atlas Fellowship since 2022: 2 years executive director at EA Funds, 7 years co-founder at Center on Long-term Risk, in parallel 4 years board member at Polaris Ventures/Center for Emerging Risk Research

- Community Liaison at CEA since 2015: 4 years as mental health clinician, 1 year as social work intern, 2 years donor services administrative assistant at Oxfam

- Co-Founder at Cambridge Boston Alignment Initiative since 2022: 2 years program director at Stanford Existential Risks Initiative, ~2 years of research roles while in university

- Farm Animal Welfare Program Officer at Open Philanthropy since 2015: 2 years as policy advisor and litigation fellow at The Humane Society of the United States, 3 years of J.D. Law, 1 year associate consultant at Bain

- Board member and trustee of Effective Ventures: 10 years founder of Sendwave and Wave, 2 years engineer at a videogame company, 1 year cofounder at a startup

- Previous ED of CEA since 2019: 2 years at CEA, 4 months researcher at GWWC, 2 months researcher at FHI

- Senior Program Associate at Open Philanthropy since 2022: 1 year chief of staff at Forethought Foundation, 3 years at FHI, 2 years executive director at Stiftung für Effektiven Altruismus, 1 year vice president at Etudes Sans Frontières

- Director of One-on-One Programme at 80k since 2022: 4 years at 80k, 2 years head of research operations at GPI, 4 years ED at GWWC, 4 years PhD in Philosophy of which 2 as director of operations at CEA

- AI Safety and governance consultant since 2022: 1 year at Future Fund, 7 years at Open Philanthropy, 2 years at FHI, 5 years PhD in Philosophy

- Head of Community Health and Special Projects at CEA since 2021: 3 years at CEA, 3 years research analyst at Open Philanthropy, 6 months staff assistant at a hospital

- CEO of 80k since 2024: 6 years at 80k, 2 years assistant director at FHI, 4 years Co-founder and Director of Special Projects at CEA, 1 year Climate Science Advisor for the Office of the President of the Maldives, (in parallel) 4 years PhD in atmospheric, oceanic and planetary physics, 2 years Executive Director at a climate change policy analysis group

- CEO of Lightcone since 2021: 5 years project lead on LessWrong, 1 year director of strategy at CEA, 3 years co-founder and head of design at (I think) a web agency, 1 year internships at Leverage/CFAR/MIRI

- CEO of Effective Ventures since 2023: 4 years at CEA, 5 years Engagement Manager at McKinsey, 3 years Technology Consultant at Deloitte, 1 year Graduate Engineer at EDF Energy

- CEO of Longview Philanthropy since 2022: 3 years at Longview, 4 years at Goldman Sachs, 2 years teacher of mathematics

- Director of Research at Giving What We Can since 2022: 4 years researcher at Founders Pledge, 1 year cofounder and director of EA Netherlands, 2 years supporting UBI experiments

- Cofounder of CEA/GWWC/80k since 2009: (in parallel) 6 years research fellow at GPI, (in parallel) 9 years associate professor at Oxford, (in parallel) 4 years PhD in Philosophy

- CEO of CEA since 2024: 1 year CEO of EV, 3 years chief of staff/program officer/fellow at Open Philanthropy, 1 year director of product and strategy at a market research consultancy firm, 2 years associate consultant at Bain, 1 year as cowboy at a college, 1 year Interim Kitchen Manager/Butcher/Head Cook at a boarding house

I think that even in this highly biased sample there are plenty of examples of Profile #2; a quick count gives 50%. Did you have a different reference class in mind?

[1] Note that this is a list of people who:

1. Were invited and planned to attend a top "meta meta-EA" event.

2. Decided to have their name public (12 people out of 43 did not disclose their intention to attend).

I think this skews heavily in favour of #1: people less professionally close to EA are probably more likely to opt out, and to not care as much about "meta meta" things even if they were invited.

Also note, from the original post: "This is not a canonical list of “key people working in meta EA” or “EA leaders.” There are plenty of people who are not attending this event who are doing high-value work in the meta-EA space. We’ve invited people for a variety of reasons: some because they are leaders of meta EA orgs or run teams within those orgs and so have significant decision-making power or strategic influence, and others because they have expertise or work for an organization that we think it could be valuable to learn from or cooperate with."

[2] This was compiled very quickly, there will be errors. Apologies to the people mentioned, this is only meant to be a quick overview.

Also note that different people spend very different amounts of time polishing their LinkedIn profile, things are self-reported and not verified, and some might not mention their "real world" experiences or some "EA" experiences.

[3] E.g. I would include senior people at GiveWell's top charities (where most of the money goes) and at GiveWell itself

Joseph Lemien @ 2024-03-17T01:32 (+21)

Lorenzo, I want to applaud you for actually gathering some data. As I was reading this post I thought to myself "this is provable, we could just do a low-fidelity, quick-and-dirty test by looking at some LinkedIn profiles of anyone with a job title of something like Manager or Director at a sample of EA orgs." And lo and behold, the first comment I see is a beautifully done, quick gathering of data. Bravo!

James Herbert @ 2024-03-17T19:04 (+10)

I love that you actually did this Lorenzo!

However, I think some of your categorisations are quite debatable. I wouldn't give much weight to experience that has been gained part-time alongside studies. This means I would probably categorise numbers 2 and 11 differently, and I suspect a couple more.

Lorenzo Buonanno @ 2024-03-17T19:16 (+6)

I agree many of these were judgment calls, in both directions. Feels weird to mark people as having less "real world work experience" because they were studying while working instead of just working, but might be a good proxy for the work not being full-time.

For 2 I left out a ton of things that I didn't know how to summarize in the summary, I think it's more profile #2 than profile #1. I guess it might depend on whether "Personalized Medicine LLC" is another name for "MetaMed", or they were two different companies. (Edit: it probably shouldn't count, I guess only the 2 years before their last role count, will tentatively change it as closer to profile #1)

For 11, idk how to consider the experience as a teenage actor and as Officer Cadet in the air force. Maybe it's a 10-hour-a-week kind of thing and shouldn't count; I honestly have no idea.

Yanni Kyriacos @ 2024-03-22T00:06 (+1)

Just to be clear, my conception of Type 2 is that they're still involved in EA (e.g. through ETG, volunteering, meetups), but their job isn't, for some period of time.

Which is partly why I think 25%/75% is better than 50/50

Vilfredo's Ghost @ 2024-03-18T08:02 (+1)

If RAF cadets work like USAF cadets it means they went to college at some kind of military academy and were essentially full time plus in military training alongside school.

James Herbert @ 2024-03-18T10:08 (+9)

I feel icky spending this much time discussing the CVs of people I've never met (if the people concerned see this and feel weird I'm sorry!). That being said, I think what we're talking about here is quite different from the USAF cadets. I remember attending a recruitment event for a similar scheme run by the British Army and, if I recall correctly, it's basically a fun uni club thing the various forces offer to recruit uni grads. A weekly training night, some socials, some adventurous trips, etc. You can check the official website here.

Yanni Kyriacos @ 2024-03-17T09:24 (+10)

I'll try and loop back to your reply when I'm less exhausted (having a two year old is quite the thing). But off the top of my head:

1. bloody well done for going the extra mile on this ❤️

2. if you're right about the 50%, that worries me. I was hoping for something more like 30%/70%. This is a serious diversity problem IMO.

Linch @ 2024-03-16T22:09 (+53)

"If I had more time and energy I'd probably make some more evidenced claims about Meta issues, and how things like SBF, sexual misconduct cases or Nonlinear could have been helped with more of #2 than #1 but don't have the time or energy (I'm also less sure about this claim)."

At the risk of saying the obvious, literally every single person at Alameda and FTX's inner circle worked in large corporations in the for-profit sector out of college and before Alameda/FTX. (SBF: Jane Street, Gary Wang: Google, Caroline Ellison: Jane Street, Nishad Singh: Facebook/Meta). It wasn't 5 whole years though, so maybe that made a difference? But they also joined a for-profit company pretty quickly, rather than working at EA nonprofits.

(though you said you were less sure about this claim, and I don't want to harp on it)

Yanni Kyriacos @ 2024-03-17T09:19 (+6)

I wasn't clear. I was actually pointing to an intuition I have that SBF 'got away with it' by taking advantage of EAs' unusually high levels of contentiousness.

Jeff Kaufman @ 2024-03-16T10:30 (+42)

Thanks for writing this, and for liberating the draft!

As someone who's closer to category #2 than #1 (I worked in the corporate world for a long time before starting at an EA-aligned and -founded biosecurity nonprofit) I'm, as you say, naturally inclined to like this post. But considerations in the other direction:

- People who didn't spend 5+ years in the corporate world and instead spent them doing altruistically useful things probably got a bunch of altruistically useful things done.

- If you're advising young EAs on what to do after graduating this is effectively suggesting they wait 3-10y before substantially contributing, which is time during which they might drift away from EA (or AI might end the world as we know it, etc).

- People who went to grad school (ex: my PhD biosecurity coworkers) are already pretty far into their career by the time they fully finish school. But maybe you're mostly thinking about college grads?

To the extent that you're thinking about where to recruit EAs for direct work, though, if you can find people with more experience in a wide range of work that's certainly valuable!

RedTeam @ 2024-03-17T06:27 (+23)

Thank you for this post - I definitely have some similar and related concerns about experience levels in senior roles in EA organisations. And I likewise don't know how worried to be about it - in particular I'm unclear about whether what I've observed is representative or exceptional cases.

What I have observed is not from some structured analysis, but from a random selection of people and organisations I've looked up in the course of exploring job opportunities at various EA organisations. So, in line with the note above about being unsure whether it's representative, it's very unclear to me how much weight to assign to these observations.

Concerns: narrowness of skillsets/lack of diversity of experience*; limited experience of 'what good looks like'; compounding effect of this on junior staff joining now.

*By 'experience' I do not strictly mean number of years working; though there may often be a correlation, I have met colleagues who have been in the workforce 40 years and have relatively limited experience/skillset and colleagues in the workforce for 5 years who have picked up a huge amount of experience, knowledge and skills.

Observations I've been surprised by:

- Hiring managers with reasonably large teams who have only been in the workforce for a couple of years themselves and / or have no experience in the operational field they're line managing (e.g. in charge of all grant processes, but never previously worked in a grant-giving organisation).

- Entire organisations populated solely with staff fresh out of university. Appreciate these are often startups, appreciate you can be exceptionally bright and have a lot to contribute at 22/post-PhD, but also you don't know what you don't know at that very early career stage.

- EA orgs with significant budgets where some or all of the senior leadership team have been in the workforce for 3 years or less, and / or have only ever worked in startups or organisations that have a very small number of employees.

Unfortunately, it is not just limited to potential(ly unfounded concerns about) inexperience of individuals. As I've been reading job descriptions, application forms etc., specific content has also made me uneasy / feel there is a lack of experience in some of these organisations. This has included, but is not limited to: poor/entirely absent consideration of disabilities and the Equality Act in job application processes; statements of 'we are going to implement xxxx' that seem wildly unrealistic/naive; job descriptions that demonstrate a lack of knowledge of how an operational area works; and funders describing practices or desired future practices that are intentionally actively avoided by established grant funding organisations due to the amount of bias it would lead to in grant awarding decisions.

Why am I concerned?

In the case of the content that surprised me, I'm concerned both because of the risks associated with the specific issues but also that they may be indicators of the organisations as a whole having poor controls and low risk maturity - and it's very difficult for organisations to be effective and efficient when that is the case.

Also I've been in the workforce for nearly 15 years, and when I think about my own experience at different organisations, it strikes me that:

- I've learned an awful lot over that time and I see a huge difference in my professional competence and decision making now versus early in my career.

- There has been a big difference in the quality of processes, learning and experience at different organisations. And being honest, in larger organisations I learned more; working with colleagues who had 20+ years' experience and had been in charge of large, complex projects or big teams or had just been around long enough to see some issues that only happen infrequently has been very valuable. Seeing how things work in lots of different organisations and sectors has taught me a lot.

- I worry about a lack of diversity amongst employees in any organisation. For all organisations, decision-making is likely to be worse if there's a lack of diversity of opinions and experience. And in particular orgs where everyone did the same degree can have big blind spots, narrow expertise, or entrenched groupthink issues. For EA organisations in particular, in the same way as I think career politicians are problematic, I worry that people who have only worked in EA orgs may lack understanding of the experience of the vast majority of the population who don't work in the EA sector, and this could be problematic for all sorts of reasons, particularly when trying to engage the wider public.

- Many of these EA orgs are in growth mode. I've seen how difficult rapid growth can be for organisations. Periods of rapid growth are often high risk not just because of the higher stakes of strategic decision-making in these periods, but because on the operational side it is very different having a 5 person organisation vs a 20 person organisation vs a 100 person organisation vs a 1,000 person organisation - you need completely different controls, systems, processes and skillsets as you grow and it can be very challenging to make this transition at the same time as (1) trying to deliver the growth/ambitions and (2) probably being in a position where you have quite a lot of vacancies as the hiring into roles will always lag behind identification of the need for additional staff. Trying to do this with a team who also hasn't seen what an organisation of the size they are aiming for looks like is going to mean even greater challenges.

Importantly - I suspect there are advantages too:

- I can see real advantages, particularly culturally, to having a workforce where all or most staff are early career or otherwise have not experienced work outside of EA orgs. For example, lots of long-established or large organisations really struggle to shift culture and get away from misogyny or to get buy-in on prioritising mental health. Younger staff members - as a generalisation - tend to have more progressive views. And experience in the private sector can cause even progressive individuals to pick up bad habits/take on negative elements of culture.

- Though I've been alarmed by some elements of application processes, some elements of the unorthodox application processes have been positive. It's refreshing to see very honest descriptions/information, and prompts that try to minimise time from candidates in early application stages.

- Perhaps linked to culture being set by more progressive leaders, in many EA orgs I've looked into there is a focus on time and budget for learning/wellbeing that is in line with best practice and well ahead of many organisations in other sectors. E.g. I've commonly seen a commitment to £5k personal development spend from EA orgs; in many sectors, even in large organisations this type of commitment is still rare and it's much more common to see lip-service type statements like 'learning and development is a priority' without budgetary backing.

N.B. In the spirit of drafts amnesty week, this response is largely off the top of my head so is rough around the edges and could probably include better examples/explanations/expansions if I spent a long time thinking about and redrafting it. However, I took the approach of better rough and shared than unfinished and unsaid.

Alexander_Berger @ 2024-03-21T20:08 (+8)

FWIW I think I'm an example of Type 1 (literally, in Lorenzo's data) and I also agree that abstractly more of Type 2 would be helpful (but I think there are various tradeoffs and difficulties that make it not straightforwardly clear what to do about it).

Yanni Kyriacos @ 2024-03-21T23:58 (+3)

Just to be clear, my conception of Type 2 is that they're still involved in EA (e.g. through ETG, volunteering, meetups), but their job isn't, for some period of time.

Which is partly why I think 25%/75% is better than 50/50

gewind @ 2024-03-23T14:28 (+7)

Thanks for this post! To add one thought that keeps bugging me: I believe joining an EA org on average lowers career capital by a medium to large extent in comparison to alternative plausible careers.

- many jobs are less specifically relevant for the continuous development of industry-job market cash-cow skills (or whatever one wants to call that).

- joining an EA-org feels rather awkward to explain to the majority of employers with a feeling that most are not particularly moved towards "PRO-employ" by the explanation one will give.

If true, this has some severe implications which IMO are not discussed prominently enough (both in the struggle for attracting talent and in the discussion on leadership's abilities which this post addresses, see e.g. lock-in effects and biases).

In my personal career decisions, this suspicion has had a large multiplier effect on the uncertainties I am struggling with (say, the good-ness of E2G (I believe the community under-estimates), EA (..), how much to invest into career capital vs. when to start cashing in).

Rebecca @ 2024-03-17T11:03 (+7)

I agree that starting with some non-EA experience is good (and this is the approach I took), though 5 years seems too long.

Yanni Kyriacos @ 2024-03-17T23:32 (+3)

You might be right. It's long enough for values to drift and lose touch with EA. Maybe 2 as a minimum and 4 as a maximum? Very person-dependent.

Rebecca @ 2024-03-18T00:33 (+4)

2 worked well for me I think

Yanni Kyriacos @ 2024-03-17T02:10 (+6)

People are asking for data on this, and I think it is a good intuition to do so, but I know myself and the options were either write/design this in 20 minutes or it never exists at all.

Maybe it’s motivated reasoning but sometimes I hope posts like this provide the groundwork for scaffolding, whereby someone takes the tiny amount of confidence I had and builds on it.

DC @ 2024-03-19T01:22 (+5)

I feel a little alienated by the emphasis on elite education from both sides of this kind of debate. Not that there's necessarily much that can be changed there, it's probably just the nature of the game mostly. But I find it a little odd that the "be more normal [with career capital]" camp presumes normal to include being in the upper middle class of the Anglo world. That's usually the sort of person making the critique. Though I could see a blue-collar worker levying it too.

Yanni Kyriacos @ 2024-03-19T02:38 (+2)

Honestly I paused on this point for a bit as I was writing the post. The main reason I left it is because I didn't want to open up that debate, as EA so obviously has a strategy of "target elite universities".

But I TOTALLY feel you on this one.

Elizabeth @ 2024-03-17T21:15 (+4)

I don't know that I think having more profile 2s will fix the problems you list, and other people have addressed that there are more profile 2s than you would think. But I do think that having profile 2 (or a hybrid) feel like a more available option would be good for people emotionally and improve overall EA productivity.

Yanni Kyriacos @ 2024-03-22T00:05 (+1)

Just to be clear, my conception of Type 2 is that they're still involved in EA (e.g. through ETG, volunteering, meetups), but their job isn't, for some period of time.

Which is partly why I think 25%/75% is better than 50/50

lilly @ 2024-03-16T13:47 (+4)

Yeah, I think this is basically right. EA orgs probably favor Profile 1 people because they've demonstrated more EA alignment, meaning: (1) the Profile 1 people will tend to be more familiar with EA orgs than the Profile 2 people, so may be better positioned to assess their fit for any given org/role, (2) conversely, EA orgs will tend to be more familiar with Profile 1 people, since they've been in the community for a while, meaning orgs may be better able to assess a prospective Profile 1 employee's fit, and (3) if the Profile 1 employee leaves/is fired, they'll be less inclined to trash/sue the EA org.

Favoring Profile 1 people because of (3) would be bad (and I hope orgs aren't explicitly or implicitly doing this!), but favoring them because of (1) + (2) seems pretty reasonable, even though there are downsides associated with this (e.g., bad norms are less likely to get challenged, insights/innovations from other spheres won't make it into EA, etc).

That said, I think one thing your post misses is that there are a lot of people who are closer to Profile 2 people (professionally) who are pretty embedded in EA (socially, academically, extracurricularly, etc). And I think orgs also tend to favor these people, which may mitigate at least some of the aforementioned downsides of EA being an insular ecosystem (i.e., the insights/innovations from other spheres one, if not the challenging norms one).

A final piece of speculation: getting a job at an EA org is a lot more prestigious for EAs than it is for people outside of EA, and the career capital conferred by working at EA orgs has a much lower exchange rate outside of EA. As a result, it wouldn't shock me if top Profile 2 candidates are applying to EA jobs at much lower rates and are much less likely to take EA jobs they're offered. If this is the case, the discrepancy you're observing may not reflect an unwillingness of EA orgs to hire impressive Profile 2 candidates, but rather a lack of interest from Profile 2 candidates whose backgrounds are on par with the Profile 1 candidates'.

Ulrik Horn @ 2024-03-16T13:04 (+3)

Edit: For people downvoting this, please don't if you do it because that data is already available. I made this comment before Lorenzo's excellent data was published (see his comment, above).

I think having data, at least roughly, on what percentage there is of each would help me. If it's 95% and 5% it feels like we lack diversity in experience. If it's 30% and 70% I think I would be less worried.