EA is more than longtermism

By Fran @ 2022-05-03T15:18 (+160)

Acknowledgements

A big thank you to Bruce Tsai, Shakeel Hashim, Ines, and Nathan Young for their insightful notes and additions (though they do not necessarily agree with/endorse anything in this post).

Important Note

This post is a quick exploration of the question, 'is EA just longtermism?' (I come to the conclusion that EA is not). This post is not a comprehensive overview of EA priorities nor does it dive into the question from every angle - it is mostly just my brief thoughts. As such, there are quite a few things missing from this post (many of the comments do a great job of filling in some gaps). In the future, maybe I'll have the chance to write a better post on this topic (or perhaps someone else will; please let me know if you do so I can link to it here).

Also, I've changed the title from 'Is EA just longtermism now?' so my main point is clear right off the bat.

Preface

In this post, I address the question: is Effective Altruism (EA) just longtermism? I then ask, among other questions, what factors contribute to this perception? What are the implications?

1. Introduction

Recently, I’ve heard a few criticisms of Effective Altruism (EA) that hinge on the following: “EA only cares about longtermism.” I’d like to explore this perspective a bit more and the questions that naturally follow, namely: How true is it? Where does it come from? Is it bad? Should it be true?

2. Is EA just longtermism?

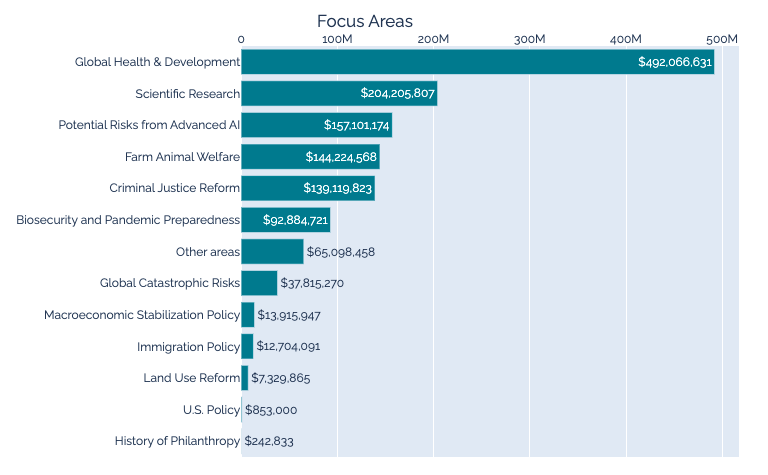

In 2021, around 60% of funds deployed by the Effective Altruism movement came from Open Philanthropy (1). Thus, we can use their grant data to try and explore EA funding priorities. The following graph (from Effective Altruism Data) shows Open Philanthropy’s total spending, by cause area, since 2012:

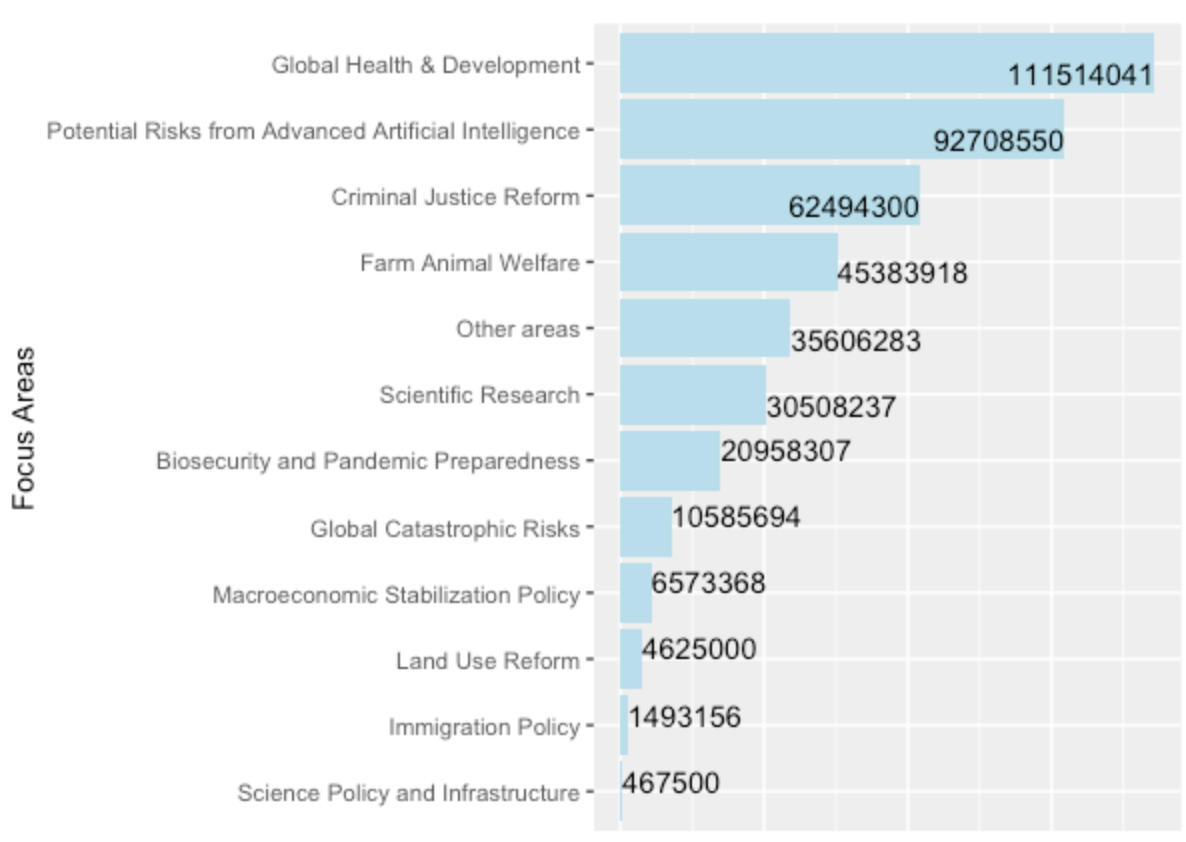

Overall, Global Health & Development accounts for the majority of funds deployed. How has that changed in recent years, as AI Safety concerns grow? We can look at this uglier graph (bear with me) showing Open Philanthropy grants deployed from January, 2021 to present (data from the Open Philanthropy Grants Database):

We see that Global Health & Development is still the leading fund-recipient; however, Risks from Advanced AI is now a closer second. We can also note that the third and fourth most funded areas, Criminal Justice Reform and Farm Animal Welfare, are not primarily driven by a goal to influence the long-term future.

With this data, I feel pretty confident that EA is not just longtermism. However, it is also true (and well-known) that funding for longtermist issues, particularly AI Safety, has increased. Additionally, the above data doesn't provide a full picture of the EA funding landscape nor community priorities. This raises a few more questions:

2.1 Funding has indeed increased, but what exactly is contributing to the view that EA essentially is longtermism/AI Safety?

(Note: this list is just an exploration and not meant to claim whether the below things are good or bad, or true)

- William MacAskill’s upcoming book, What We Owe the Future, has generated considerable promotion and discussion. Following Toby Ord’s The Precipice, published in March, 2020, I imagine this has contributed to the outside perception that EA is becoming synonymous with longtermism.

- The longtermist approach to philanthropy is different from mainstream, traditional philanthropy. When trying to describe a concept like Effective Altruism, sometimes the thing that most differentiates it is what stands out, consequently becoming its defining feature.

- Of the longtermist causes, AI Safety receives the most funding, and furthermore, has a unique ‘weirdness’ factor that generates interest and discussion. For example, some of the popular thought experiments used to explain Alignment concerns can feel unrealistic, or something out of a sci-fi movie. I think this can serve to both: 1. draw in onlookers whose intuition is to scoff, 2. give AI-related discussions the advantage of being particularly interesting/compelling, leading to more attention.

- AI Alignment is an ill-defined problem with no clear solution and tons of uncertainties: What counts as AGI? What does it mean for an AI system to be fair or aligned? What are the best approaches to Alignment research? With so many fundamental questions unanswered, it’s easy to generate ample AI Safety discussion in highly visible places (e.g. forums, social media, etc.) to the point that it can appear to dominate EA discourse.

- AI Alignment is a growing concern within the EA movement, so it's been highlighted recently by EA-aligned orgs (for example, AI Safety technical research is listed as the top recommended career path by 80,000 Hours).

- Within the AI Safety space, there is cross-over between EA and other groups, namely tech and rationalism. Those who learn about EA through these groups may only interact with EA spaces focussed on AI Safety/crossing over into other groups–I imagine this shapes their understanding of EA as a whole.

- For some, the recent announcement of the FTX Future Fund seemed to solidify the idea that EA is now essentially billionaires distributing money to protect the long-term future.

- [Edit: There are many more factors to consider that others have outlined in the comments below :)]

2.2 Is this view a bad thing? If so, what can we do?

Is it actually a problem that some people feel EA is “just longtermism”? I would say yes, insofar as it is better to have an accurate picture of an idea/movement than an inaccurate one. Beyond that, such a perception may turn away people who could be convinced to work on cause areas less related to longtermism, like farmed animal welfare, but who would disagree with longtermist arguments. If this group is large enough, then it seems important to try and promote a clearer outside understanding of EA, allowing the movement to grow in various directions and find its different target audiences, rather than having its pieces eclipsed by one cause area or worldview.

What can we do?

I’m not sure, but there are likely a few strategies (e.g. Shakeel Hashim suggested we could put in some effort to promote older EA content, such as Doing Good Better, or organizations associated with causes like Global Health and Farmed Animal Welfare).

2.3 So EA isn’t “just longtermism,” but maybe it’s “a lot of longtermism”? And maybe it’s moving towards becoming “just longtermism”?

I have no clue if this is true, but if so, then the relevant questions are:

2.4 What if EA was just longtermism? Would that be bad? Should EA just be longtermism?

I’m not sure. I think it’s true that EA being “just longtermism” leads to worse optics (though this is just a notable downside, not an argument against shifting towards longtermism). We see particularly charged critiques like,

Longtermism is an excuse to ignore the global poor and minority groups suffering today. It allows the privileged to justify mistreating others in the name of countless future lives, when in actuality, they’re obsessed with pursuing profitable technologies that result in their version of ‘utopia’–AGI, colonizing Mars, emulated minds–things only other privileged people would be able to access, anyway.

I personally disagree with this. As a counter-argument:

Longtermism, as a worldview, does not want present day people to suffer; instead, it wants to work towards a future with as much flourishing as possible, for everyone. This idea is not as unusual as it is sometimes framed - we hear something very similar with climate change advocacy (i.e. “We need climate interventions to protect the future of our planet. Future generations could stand to suffer immensely poor environmental conditions due to our choices”). An individual or elite few individuals could twist longtermist arguments to justify poor behavior, but this is true of all philanthropy.

Finally, there are many conclusions one can draw from longtermist arguments–but the ones worth pursuing will be well thought-out. Critiques can often highlight niche tech rather than the prominent concerns held by the longtermist community at large: risks from advanced Artificial Intelligence, pandemic preparedness, and global catastrophic risks. Notably, working on these issues can often improve the lives of people living today (e.g. working towards safe advanced AI includes addressing already present issues, like racial or gender bias in today’s systems).

But back to the optics–so longtermism can be less intuitively digestible, it can be framed in a highly negative way–does that matter? If there is a strong case for longtermism, should we not shift our priorities towards it? In which case, the real question is, does the case for longtermism hold?

This leads me to the conclusion: if EA were to become "just longtermism," that’s fine, conditional on the arguments being incredibly strong. And if there are strong arguments against longtermism, the EA community (in my experience) is very keen to hear them.

Conclusion

Overall, I hope this post generates some useful discussion around EA and longtermism. I posed quite a few questions, and offered some of my personal thoughts; however, I hold all these ideas loosely and would be very happy to hear other perspectives.

Citations

AnonymousEAForumAccount @ 2022-05-04T03:33 (+197)

what exactly is contributing to the view that EA essentially is longtermism/AI Safety?

For me, it’s been stuff like:

- People (generally those who prioritize AI) describing global poverty as “rounding error”.

- From late 2017 to early 2021, effectivealtruism.org (the de facto landing page for EA) had at least 3 articles on longtermist/AI causes (all listed above the single animal welfare article), but none on global poverty.

- The EA Grants program granted ~16x more money to longtermist projects than to global poverty and animal welfare projects combined. [Edit: this statistic only refers to the first round of EA Grants, the only round for which grant data has been published.]

- The EA Handbook 2.0 heavily emphasized AI relative to global poverty and animal welfare. As one EA commented: “By page count, AI is 45.7% of the entire causes sections. And as Catherine Low pointed out, in both the animal and the global poverty articles (which I didn't count toward the page count), more than half the article was dedicated to why we might not choose this cause area, with much of that space also focused on far-future of humanity. I'd find it hard for anyone to read this and not take away that the community consensus is that AI risk is clearly the most important thing to focus on.” When changes to the handbook were promised in response to this type of criticism, those changes were then deprioritized and the Handbook wasn’t updated for years.

- 80k’s “top recommended problems” skew heavily longtermist; global health and poverty and factory farming don’t make the cut and are instead listed as “other pressing problems”

- For the Community Building Grants program, “The primary metric used to assess grants at the end of the first year is the number of group members who apply for internships or graduate programs in priority areas and reach at least the interview stage… We used the 80,000 Hours list of priority paths as the basis for our list of accredited roles, but expanded it to be somewhat broader.” Since 80k’s priority paths are predominantly longtermist, groups were in large part evaluated by how many members they steered into longtermist jobs or studies.

- GPI’s research agenda focuses on, and essentially assumes, longtermism

- CFAR’s website went from emphasizing things like “turning cognitive science into cognitive practice”, to things like “we are focused on the existential win... we see AI safety as especially key here”

- Etc.

James Ozden @ 2022-05-04T18:12 (+81)

Some things from EA Global London 2022 that stood out for me (I think someone else might have mentioned one of them):

- An email to everyone promoting Will's new book (on longtermism)

- Giving out free bookmarks about Will's book when picking up your pass.

These things might feel small but considering this is one of the main EA conferences, having the actual conference organisers associate so strongly with the promotion of a longtermist (albeit yes, also one of the main founders of EA) book made me think "Wow, CEA is really trying to push longtermism to attendees". This seems quite reasonable given the potential significance of the book, I just wonder if CEA have done this for any other worldview-focused books recently (last 1-3 years) or would do so in the future e.g. a new book on animal farming.

Curious to get someone else's take on this or if it just felt important in my head.

Other small things:

- On the sidebar of the EA Forum, there's three recommended articles: Replacing Guilt, the EA Handbook (which as you mentioned here, is mostly focused on longtermism) and the most important century by Holden. Again, essentially 1.5 longtermist texts to <0.5 from other worldviews.

As the main landing page for EA discussion, this also feels like a reasonably significant nudge in a specific direction.

On a somewhat related point, I do generally think there are far fewer 'thought-leaders' for global health or animal-inclusive worldviews relative to the longtermist one. For example, we have people such as Holden, Ben Todd, Will MacAskill etc. who all produce reasonably frequent and great content on why longtermism is compelling, yet very few people (if anyone?) are doing content creation or thought leadership on that level for neartermist worldviews. This might be another reason why longtermist content is much more frequently sign-posted too, but I'm not 100% sure on this.

[FWIW I do find longtermism quite compelling, but it also seems amiss to not mention the cultural influence longtermism has in certain EA spaces]

Chris Leong @ 2022-05-08T22:20 (+7)

Having the actual conference organisers associate so strongly with the promotion of a longtermist (albeit yes, also one of the main founders of EA) book made me think "Wow, CEA is really trying to push longtermism to attendees"

I don't know, it just feels weird to focus on this point vs. other points that seem much more solid.

He's a founder of the movement, so if he wrote a book on anything, I'm pretty sure they'd be promoting it hard as well.

MichaelPlant @ 2022-05-04T11:37 (+62)

Yeah, this is an excellent list. To me, the OP seems to miss the obvious point, which is that if you look at what the central EA individuals, organisations, and materials are promoting, you very quickly get the impression that, to misquote Henry Ford, "you can have any view you want, so long as it's longtermism". One's mileage may vary, of course, as to whether one thinks this is a good result.

To add to the list, the 8-week EA Introductory Fellowship curriculum, the main entry point for students, i.e. the EAs of the future, has 5 sections on cause areas, of which 3 are on longtermism. As far as I can tell, there are no critiques of longtermism anywhere, even in the "what might we be missing?" week, which I found puzzling.

[Disclosure: when I saw the Fellowship curriculum about a year ago, I raised this issue with Aaron Gertler, who said it had been created without much/any input from non-longtermists, this was perhaps an oversight, and I would be welcome to make some suggestions. I meant to make some, but never prioritised it, in large part because it was unclear to me if any suggestions I made would get incorporated.]

MaxDalton @ 2022-05-04T14:00 (+40)

(Not a response to your whole comment, hope that's OK.)

I agree that there should be some critiques of longtermism or working on X risk in the curriculum. We're working on an update at the moment. Does anyone have thoughts on what the best critiques are?

Some of my current thoughts:

- Why I am probably not a longtermist

- This post arguing that it's not clear if X risk reduction is positive

- On infinite ethics (and Ajeya's crazy train metaphor)

MichaelPlant @ 2022-05-04T19:32 (+65)

IMO good-faith, strong, fully written-up, readable, explicit critiques of longtermism are in short supply; indeed, I can't think of any. The three you raise are good, but they are somewhat tentative and limited in scope. I think that stronger objections could be made.

FWIW, on the EA facebook page, I raised three critiques of longtermism in response to Finn Moorhouse's excellent recent article on the subject, but all my comments were very brief.

The first critique involves defending person-affecting views in population ethics and arguing that, when you look at the details, the assumptions underlying them are surprisingly hard to reject. My own thinking here is very influenced by Bader (2022), which I think is a philosophical masterclass, but is also very dense and doesn't address longtermism directly. There are other papers arguing for person-affecting views, e.g. Narveson (1967) and Heyd (2012) but both are now a bit dated - particularly Narveson - in the sense they don't respond to the more sophisticated challenges to their views that have since been raised in the literature. For the latest survey of the literature and those challenges - albeit not one sympathetic to person-affecting views - see Greaves (2017).

The second draws on a couple of suggestions made by Webb (2021) and Berger (2021) about cluelessness. Webb (2021) is a reasonably substantial EA forum post about how we might worry that, the further in the future something happens, the smaller the expected value we should assign to it, which acts as an effective discount. However, Webb (2021) is pretty non-committal about how serious a challenge this is for longtermism and doesn't frame it as one. Berger (2021), speaking on the 80k podcast, suggests that longtermist interventions are either 'narrow' (e.g. AI safety) or 'broad' ('improving politics'), where the former are not robustly good, and the latter are questionably better than existing 'near-termist' interventions such as cash transfers to the global poor. I wouldn't describe this as a worked-out thesis though and Berger doesn't state it very directly.

The third critique is that, a la Torres, longtermism might lead us towards totalitarianism. I don't think this is a really serious objection, but I would like to see longtermists engage with it and say why they don't believe it is.

I should probably disclose I'm currently in discussion with Forethought about a grant to write up some critiques of longtermism in order to fill some of this literature gap. Ideally, I'll produce 2-3 articles within the next 18 months.

SiebeRozendal @ 2022-05-12T02:55 (+7)

I strongly welcome the critiques you'll hopefully write, Michael!

AnonymousEAForumAccount @ 2022-05-04T20:08 (+9)

Why I am probably not a longtermist seems like the best of these options, by a very wide margin. The other two posts are much too technical/jargony for introductory audiences.

Also, A longtermist critique of “The expected value of extinction risk reduction is positive” isn’t even a critique of longtermism, it’s a longtermist arguing against one longtermist cause (x-risk reduction) in favor of other longtermist causes (such as s-risk reduction and trajectory change). So it doesn’t seem like a good fit for even a more advanced curriculum unless it was accompanied by other critiques targeting longtermism itself (e.g. critiques based on cluelessness.)

Reducing the probability of human extinction is a highly popular cause area among longtermist EAs. Unfortunately, this sometimes seems to go as far as conflating longtermism with this specific cause, which can contribute to the neglect of other causes.[1] Here, I will evaluate Brauner and Grosse-Holz’s argument for the positive expected value (EV) of extinction risk reduction from a longtermist perspective. I argue that the EV of extinction risk reduction is not robustly positive,[2] such that other longtermist interventions such as s-risk reduction and trajectory changes are more promising, upon consideration of counterarguments to Brauner and Grosse-Holz’s ethical premises and their predictions of the nature of future civilizations.

MichaelStJules @ 2022-05-05T20:15 (+11)

The longtermist critique is a critique of arguments for a particular (perhaps the main) priority in the longtermism community, extinction risk reduction. I don't think it's necessary to endorse longtermism to be sympathetic to the critique. That extinction risk reduction might not be robustly positive is a separate point from the claim that s-risk reduction and trajectory changes are more promising.

Someone could think extinction risk reduction, s-risk reduction and trajectory changes are all not robustly positive, or that no intervention aimed at any of them is robustly positive. The post can be one piece of this, arguing against extinction risk reduction. I'm personally sympathetic to the claim that no longtermist intervention will look robustly positive or extremely cost-effective when you try to deal with the details and indirect effects.

The case for stable very long-lasting trajectory changes other than those related to extinction hasn't been argued persuasively, as far as I know, in cost-effectiveness terms over, say, animal welfare, and there are lots of large indirect effects to worry about. S-risk work often has potential for backfire, too. Still, I'm personally sympathetic to both enough to want to investigate further, at least over extinction risk reduction.

Arjun_Kh @ 2022-05-04T20:21 (+8)

The strongest academic critique of longtermism I know of is The Scope of Longtermism by GPI's David Thorstad. Here's the abstract:

Longtermism holds roughly that in many decision situations, the best thing we can do is what is best for the long-term future. The scope question for longtermism asks: how large is the class of decision situations for which longtermism holds? Although longtermism was initially developed to describe the situation of cause-neutral philanthropic decisionmaking, it is increasingly suggested that longtermism holds in many or most decision problems that humans face. By contrast, I suggest that the scope of longtermism may be more restricted than commonly supposed. After specifying my target, swamping axiological strong longtermism (swamping ASL), I give two arguments for the rarity thesis that the options needed to vindicate swamping ASL in a given decision problem are rare. I use the rarity thesis to pose two challenges to the scope of longtermism: the area challenge that swamping ASL often fails when we restrict our attention to specific cause areas, and the challenge from option unawareness that swamping ASL may fail when decision problems are modified to incorporate agents’ limited awareness of the options available to them.

David Thorstad @ 2022-07-28T11:39 (+1)

Just saw this and came here to say thanks! Glad you liked it.

MichaelPlant @ 2022-05-04T16:32 (+37)

I obviously expected this comment would get a mix of upvotes and downvotes, but I'd be pleased if any of the downvoters would be kind enough to explain on what grounds they are downvoting.

Do you disagree with the empirical claim that central EA entities promote longtermism (the claim we should give priority to improving the longterm)?

Do you disagree with the empirical claim that there is pressure within EA to agree with longtermism, e.g. if you don't, it carries a perceived or real social or other penalty (such as, er, getting random downvotes)?

Are my claims about the structure of the EA Introductory Fellowship false?

Is it something about what I put in the disclaimer?

Charles He @ 2022-05-04T21:35 (+13)

The top comment:

- Is a huge contribution. I supported it, it's great. It's obviously written by someone who leans toward non-longtermist cause areas, but somehow that makes their impartial vibe more impressive.

- The person lays out their ideas and aims transparently, and I think even a strong longtermist "opponent" would appreciate it, maybe even gain some perspective of being sort of "oppressed".

Your comment:

- Your comment slots below this top comment, but doesn't seem to be a natural reply. It is plausible you replied because you wanted a top slot.

- You immediately slide into rhetoric with a quote that would rally supporters, but is unlikely to be appreciated by people who disagree with you ("you can have any view you want, so long as it's longtermism"). That seems like something you would say at a political rally and is objectively false. This is bad.

- As a positive and related to the top comment, you do add your fellowship point, and Max Dalton picks this up, which is productive (from the perspective of a proponent of non-longtermist cause areas).

But I think the biggest issue is that, for a moment, there was this thing where people could have listened.

You sort of just walked past the savasana, slouched a bit and then slugged longtermism in the gut, while the top comment was opening up the issue for thought.

The danger is that people would find this alienating, and scoring points on the internet isn't a good thing for EA, right?

(As a side issue, I'm unsure or ambivalent if criticism specifically needs to be prescribed into introduction materials, especially as a consequence of activism by opponents. It might be the case that more room or better content for other cause areas should exist. However, prescribing something rigid, or handing out check boxes, could just lead to an unhealthy, adversarial dynamic. But I'm really unsure and obviously the CEO of CEA takes this seriously).

MichaelPlant @ 2022-05-05T13:39 (+37)

Hmm. This is very helpful, thank you very much. I don't think we're on the same page, but it's useful for indicating where those differences may lie.

You immediately slide into rhetoric with a quote that would rally supporters, but is unlikely to be appreciated by people who disagree with you

I'm not sure what you mean by 'supporters'. Supporters of what? Supporters of 'non-longtermism'? Supporters of the view that "EA is just longtermism"? FWIW, I have a lot of respect for (very many) longtermists: I see them as seriously and sincerely engaged in a credible altruistic project, just not one I (currently?) consider the priority; I hope they would view me in the same way about my efforts to make lives happier, and that we would be able to cooperate and engage in moral trade where possible.

What I am less happy about is the (growing) sense that EA is only longtermism - it's the only credible game in town - which is the subject of this post. One can be a longtermist - indeed of any moral persuasion - and object to that if you want the effective altruism community to be a pluralistic and inclusive place.

On the other hand, one could take a different, rather sneering, arrogant, and unpleasant view that longtermism is clearly true, anyone who doesn't recognise this is just an idiot, and all those idiots should clear off. I have also encountered this perspective - far more often than I expected or hoped to.

Given all this, I find it hard to make sense of your claim I've

slugged longtermism in the gut

I've not attacked longtermism. If anything, I'm attacking the sense that only longtermists are welcome in EA - a perception based on exactly the sort of evidence raised in the top comment.

Finally, you said

As a side issue, I'm unsure or ambivalent if criticism specifically needs to be prescribed into introduction materials, especially as a consequence of activism by opponents.

Which I am almost stunned by. Criticism of EA? Criticism of longtermism? Am I an opponent of EA? That would be news to me. An introductory course on EA should include, presumably, arguments for and against the various positions one might take about how to do the most good. Everyone seems to agree that doing good is hard, and we need openness and criticism to improve what we are doing, and therefore I don't see why you want to deliberately minimise, or refuse to include, criticism - that's what you seem to be suggesting, though I don't know if that's what you mean. Even an introductory course on just longtermism would, presumably, cover objections to the view.

MaxDalton @ 2022-05-04T14:04 (+46)

Thank you for sharing these thoughts.

I can see how the work of several EA projects, especially CEA, contributed to this. I think that some of these were mistakes (and we think some of them were significant enough to list on our website). I personally caused several of the mistakes that you list, and I'm sorry for that.

Often my take on these cases is more like "it's bad that we called this thing "EA"", rather than "it's bad that we did this thing". E.g. I think that the first round of EA Grants made some good grants (e.g. to LessWrong 2.0), but that it would have been better to have used a non-EA brand for it. I think that calling things "EA" means that there's a higher standard of representativeness, which we sometimes failed to meet.

I do want to note that all of the things you list took place around 2017-2018[1], and our work and plans have changed since then. For instance, CBG evaluation criteria are no longer as you state, EA Grants changed a lot after the first round and was closed down around 2019, the EA Handbook is different, and effectivealtruism.org has a new design.

If you have comments about our current work, then please give us (anonymous) feedback!

As I noted in an earlier comment, I want CEA to be promoting the principles of effective altruism. We have been careful not to bake cause area preferences into our metrics, and instead to focus on whether people are thinking carefully and open-mindedly about how to help others the most.

Where we have to decide a content split (e.g. for EA Global or the Handbook), I want CEA to represent the range of expert views on cause prioritization. I still don't think we have amazing data on this, but my best guess is that this skews towards longtermist-motivated or X-risk work (like maybe 70-80%).

I would love someone to do a proper survey of everyone (trying to avoid one’s own personal networks) who has spent >1 year thinking about cause prioritization with a scope-sensitive and open-minded lens. I've tried to commission someone to do this a couple of times but it hasn't worked out. If someone did this, it would help to shape our content, so I’d be happy to offer some advice and could likely find funding. If anyone is interested, let me know!

[1] I acknowledge that the effectivealtruism.org design was the same in 2021 as it was in 2017, but this was mostly because we didn’t have much capacity to update the site at all, so I think the thing you’re complaining about was mostly a fact about 2017.

Linch @ 2022-05-04T14:39 (+50)

I want CEA to represent the range of expert views on cause prioritization. I still don't think we have amazing data on this, but my best guess is that this skews towards longtermist-motivated or X-risk work (like maybe 70-80%).

I would love someone to do a proper survey of everyone (trying to avoid one’s own personal networks) who has spent >1 year thinking about cause prioritization with a scope-sensitive and open-minded lens. I've tried to commission someone to do this a couple of times but it hasn't worked out. If someone did this, it would help to shape our content, so I’d be happy to offer some advice and could likely find funding. If anyone is interested, let me know!

Thank you for wanting to be principled about such an important issue. However (speaking as someone who is both very strongly longtermist and a believer of the importance of cause prioritization), a core problem with the "neutrality"/expert-views framing of this comment is selection bias. We would naively expect people who spend a lot of time on cause prioritization to systematically overrate (relative to the broader community) both the non-obviousness of the most important causes, and their esotericism.

Put another way, if you were a thoughtful, altruistic person who heard about EA in 2013 and your first instinct was to start what would become Wave or earn-to-give for global poverty, you'd be systematically less represented in such a survey.

Now, I happen to think focusing a lot on cause prioritization is correct: I think ethics is hard, in many weird and surprising ways. But I don't think I can (justifiably) get this from expert appeal/deference, it all comes down to specific beliefs I have about the world and how hard it is, and to some degree making specific bets that my own epistemology isn't completely screwed up (because if it was, I probably can't have much of an impact anyway).

Analogously, I also think we should update approximately not at all on the existence of God if we see surveys that philosophers of religion are much more likely to believe in God than other philosophers, or if ethicists are more likely to be deontologists than utilitarians.

MaxDalton @ 2022-05-04T15:18 (+4)

I agree that all sorts of selection biases are going to be at play in this sort of project: the methodology would be a minefield and I don't have all the answers.

I agree that there's going to be a selection bias towards people who think cause prio is hard. Honestly, I guess I also believe that ethics is hard, so I was basically assuming that worldview. But maybe this is a very contentious position? I'd be interested to hear from anyone who thinks that cause prio is just really easy.

More generally, I agree that I/CEA can't just defer our way out of this problem or other problems: you always need to choose the experts or the methodology or whatever. But, partly because ethics seems hard to me, I feel better about something like what I proposed, rather than just going with our staff's best guess (when we mostly haven't engaged deeply with all of the arguments).

Linch @ 2022-05-04T15:52 (+13)

I agree that there's going to be a selection bias towards people who think cause prio is hard.

To be more explicit, there's also a selection bias towards esotericism. Like how much you think most of the work is "done for you" by the rest of the world (e.g. in developmental economics or moral philosophy), versus needing to come up with the frameworks yourself.

Linch @ 2022-05-05T15:54 (+2)

As a side note, I think there's an analogous selection bias within longtermism, where many of our best and brightest people end up doing technical alignment, making it harder to have clearer thinking about other longtermist issues (including issues directly related to making the development of transformative AI go well, like understanding the AI strategic landscape and AI safety recruitment strategy).

AnonymousEAForumAccount @ 2022-05-04T18:37 (+37)

I do want to note that all of the things you list took place around 2017-2018, and our work and plans have changed since then.

My observations about 80k, GPI, and CFAR are all ongoing (though they originated earlier). I also think there are plenty of post-2018 examples related to CEA’s work, such as the Introductory Fellowship content Michael noted (not to mention the unexplained downvoting he got for doing so), Domassoglia’s observations about the most recent EAG and EAGx (James hits on similar themes), and the late 2019 event that was framed as a “Leader’s Forum” but was actually “some people who CEA staff think it would be useful to get together for a few days” (your words) with those people skewing heavily longtermist. I think all of these things “contribute to the view that EA essentially is longtermism/AI Safety?” (though of course longtermism could be “right” in which case these would all be positive developments.)

Where we have to decide a content split (e.g. for EA Global or the Handbook), I want CEA to represent the range of expert views on cause prioritization. I still don't think we have amazing data on this, but my best guess is that this skews towards longtermist-motivated or X-risk work (like maybe 70-80%).

I would love someone to do a proper survey of everyone (trying to avoid one’s own personal networks) who has spent >1 year thinking about cause prioritization with a scope-sensitive and open-minded lens. I've tried to commission someone to do this a couple of times but it hasn't worked out. If someone did this, it would help to shape our content, so I’d be happy to offer some advice and could likely find funding. If anyone is interested, let me know!

I agree with Linch’s concern about the selection bias this might entail: “a core problem with the "neutrality"/expert-views framing of this comment is selection bias. We would naively expect people who spend a lot of time on cause prioritization to systematically overrate (relative to the broader community) both the non-obviousness of the most important causes, and their esotericism.”

Also related to selection bias: most of the opportunities and incentives to work on cause prioritization have been at places like GPI or Forethought Foundation that use a longtermist lens, making it difficult to find an unbiased set of experts. I’m not sure how to get around this issue. Even trying to capture the views of the EA community as a whole (at the expense of deferring to experts) would be problematic to the extent “mistakes” have shaped the composition of the community by making EA more attractive to longtermists and less attractive to neartermists.

I appreciate that CEA is looking to “outsource” cause prioritization in some way. I just have concerns about how this will work in practice, as it strikes me as a very difficult thing to do well.

MichaelPlant @ 2022-05-04T20:05 (+35)

I also strongly share this worry about selection effects. There are additional challenges to those mentioned already: the more EA looks like an answer, rather than a question, the more inclined anyone who doesn't share that answer is simply to 'exit', rather than 'voice', leading to an increasing skew over time of what putative experts believe. A related issue is that, if you want to work on animal welfare or global development you can do that without participating in EA, which is much harder if you want to work on longtermism.

Further, it's a sort of double counting if you consider people as experts because they work in a particular organisation when they would only realistically be hired if they had a certain worldview. If FHI hired 100 more staff, and they were polled, I'm not sure we should update our view on what the expert consensus is any more than I should become more certain of the day's events by reading different copies of the same newspaper. (I mean no offence to FHI or its staff, by the way, it's just a salient example).

AnonymousEAForumAccount @ 2022-05-06T16:43 (+25)

I can see how the work of several EA projects, especially CEA, contributed to this. I think that some of these were mistakes (and we think some of them were significant enough to list on our website)… Often my take on these cases is more like "it's bad that we called this thing "EA"", rather than "it's bad that we did this thing"… I think that calling things "EA" means that there's a higher standard of representativeness, which we sometimes failed to meet.

I do want to note that all of the things you list took place around 2017-2018, and our work and plans have changed since then. For instance… the EA Handbook is different.

The EA Handbook is different, but as far as I can tell the mistakes made with the Handbook 2.0 were repeated for the 3rd edition.

CEA describing those “mistakes” around the Handbook 2.0:

“it emphasized our longtermist view of cause prioritization, contained little information about why many EAs prioritize global health and animal advocacy, and focused on risks from AI to a much greater extent than any other cause. This caused some community members to feel that CEA was dismissive of the causes they valued. We think that this project ostensibly represented EA thinking as a whole, but actually represented the views of some of CEA’s staff, which was a mistake. We think we should either have changed the content, or have presented the content in a way that made clear what it was meant to represent.”

CEA acknowledges it was a mistake for the 2nd edition to exclude the views of large portions of the community, but frame the content as representative of EA. But the 3rd edition does the exact same thing!

As Michael relates, he observed to CEA staff that the EA Introductory Fellowship curriculum was heavily skewed toward longtermist content, and was told that it had been created without much/any input from non-longtermists. Since the Intro Fellowship curriculum is identical to the EA Handbook 3.0 material, that means non-longtermists had minimal input on the Handbook.

Despite that, the Handbook 3.0 and the Intro Fellowship curriculum (and for that matter the In-Depth EA Program, which includes topics on biorisk and AI but nothing on animals or poverty) are clearly framed as EA materials, which you say should be held to “a higher standard of representativeness” rather than CEA’s views. So I struggle to see how the Handbook 3.0 (and other content) isn’t simply repeating the mistakes of the second edition; it feels like we’re right back where we were four years ago. Arguably a worse place, since at least the Handbook 2.0 was updated to clarify that CEA selected the content and other community members might disagree.

I realize CEA posted on the Forum soliciting suggestions on what should be included in the 3rd edition and asking for feedback on an initial sequence on motivation (which doesn’t seem to have made it into the final handbook). But from Michael’s anecdote, it doesn’t sound like CEA reached out to critics of the 2nd edition or the animal or poverty communities. I would have expected those steps to be taken given the criticism surrounding the 2nd edition, CEA’s response to that criticism and its characterization of how it addressed its mistakes (“we took this [community] feedback into account when we developed the latest version of the handbook”), and how the 3rd edition is still framed as “EA” vs. “CEA’s take on EA”.

MaxDalton @ 2022-05-06T18:03 (+16)

Hey, I've just messaged the people directly involved to double check, but my memory is that we did check in with some non-longtermists, including previous critics (as well as asking more broadly for input, as you note). (I'm not sure exactly what causes the disconnect between this and what Aaron is saying, but Aaron was not the person leading this project.) In any case, we're working on another update, and I'll make sure to run that version by some critics/non-longtermists.

Also, per other bits of my reply, we're aiming to be ~70-80% longtermist, and I think that the intro curriculum is consistent with that. (We are not aiming to give equal weight to all cause areas, or to represent the views of everyone who fills out the EA survey.)

Since the content is aiming to represent the range of expert opinion in EA, since we encourage people to reflect on the readings and form their own views, and since we asked the community for input into it, I think that it's more appropriate to call it the "EA Handbook" than the previous edition.

BarryGrimes @ 2022-05-06T18:24 (+34)

I don’t recall seeing the ~70-80% number mentioned before in previous posts but I may have missed it.

I’m curious to know what the numbers are for the other cause areas and to see the reasoning for each laid out transparently in a separate post.

I think that CEA’s cause prioritisation is the closest thing the community has to an EA ‘parliament’ and for that process to have legitimacy it should be presented openly and be subject to critique.

AnonymousEAForumAccount @ 2022-05-07T19:36 (+36)

Agree! This decision has huge implications for the entire community, and should be made explicitly and transparently.

AnonymousEAForumAccount @ 2022-05-06T20:25 (+7)

Thanks for following up regarding who was consulted on the Fellowship content.

And nice to know you’re planning to run the upcoming update by some critics. Proactively seeking out critical opinions seems quite important, as I suspect many critics won’t respond to general requests for feedback due to a concern that they’ll be ignored. Michael noted that concern, I’ve personally been discouraged from offering feedback because of it (I’ve engaged with this thread to help people understand the context and history of the current state of EA cause prioritization, not because I really expect CEA to meaningfully change its content/behavior), and I can’t imagine we’re alone in this.

MichaelPlant @ 2022-05-06T22:27 (+24)

I’ve engaged with this thread to help people understand the context and history of the current state of EA cause prioritization, not because I really expect CEA to meaningfully change its content/behavior

Fwiw, my model of CEA is approximately that it doesn't want to look like it's ignoring differing opinions but that, nevertheless, it isn't super fussed about integrating them or changing what it does.

This is my view of CEA as an organisation. Basically, every CEA staff member I've ever met (including Max D) has been a really lovely, thoughtful individual.

AnonymousEAForumAccount @ 2022-05-07T19:35 (+42)

I agree with your takes on CEA as an organization and as individuals (including Max).

Personally, I’d have a more positive view of CEA the organization if it were more transparent about its strategy around cause prioritization and representativeness (even if I disagree with the strategy) vs. trying to make it look like they are more representative than they are. E.g. Max has made it pretty clear in these comments that poverty and animal welfare aren’t high priorities, but you wouldn’t know that from reading CEA’s strategy page where the very first sentence states: “CEA's overall aim is to do the most we can to solve pressing global problems — like global poverty, factory farming, and existential risk — and prepare to face the challenges of tomorrow.”

MichaelPlant @ 2022-05-09T10:33 (+27)

It's possibly worth flagging that these are (sadly) quite long-running issues. I wrote an EA forum post now 5 years ago on the 'marketing gap', the tension between what EA organisations present EA as being about and what those organisations believe it should be about, and arguing they should be more 'morally inclusive'. By 'morally inclusive', I mean welcoming and representing the various different ways of doing the most good that thoughtful, dedicated individuals have proposed.

This gap has since closed a bit, although not always in the way I hoped for, i.e. greater transparency and inclusiveness. As two examples, GWWC has been spun off from CEA, rebooted, and now does seem to be cause neutral. 80k is much more openly longtermist.

I recognise this is a challenging issue, but I still think the right solution to this is for the more central EA organisations to actually try hard to be morally inclusive. I've been really impressed at how well GWWC seem to be doing this. I think it's worth doing this for the same reasons I gave in that (now ancient) blogpost: it reduces groupthink, increases movement size, and reduces infighting. If people truly felt like EA was morally inclusive, I don't think this post, or any of these comments (including this one) would have been written.

AnonymousEAForumAccount @ 2022-05-11T23:32 (+4)

Thanks for sharing that post! Very well thought out and prescient, just unfortunate (through no fault of yours) that it's still quite timely.

Chris Leong @ 2022-05-08T22:38 (+2)

Well, now that GiveWell has already put in the years of vetting work, we can reliably have a pretty large impact on global poverty just by channeling however many million to AMF + similar. And I guess, it's not exactly that we need to do too much more than that.

Aaron Gertler @ 2022-05-23T03:13 (+7)

While at CEA, I was asked to take the curriculum for the Intro Fellowship and turn it into the Handbook, and I made a variety of changes (though there have been other changes to the Fellowship and the Handbook since then, making it hard to track exactly what I changed). The Intro Fellowship curriculum and the Handbook were never identical.

I exchanged emails with Michael Plant and Sella Nevo, and reached out to several other people in the global development/animal welfare communities who didn't reply. I also had my version reviewed by a dozen test readers (at least three readers for each section), who provided additional feedback on all of the material.

I incorporated many of the suggestions I received, though at this point I don't remember which came from Michael, Sella, or other readers. I also made many changes on my own.

It's reasonable to argue that I should have reached out to even more people, or incorporated more of the feedback I received. But I (and the other people who worked on this at CEA) were very aware of representativeness concerns. And I think the 3rd edition was a lot more balanced than the 2nd edition. I'd break down the sections as follows:

- "The Effectiveness Mindset", "Differences in Impact", and "Expanding Our Compassion" are about EA philosophy with a near-term focus (most of the pieces use examples from near-term causes, and the "More to Explore" sections share a bunch of material specifically focused on animal welfare and global development).

- "Longtermism" and "Existential Risk" are about longtermism and X-risk in general.

- "Emerging Technologies" covers AI and biorisk specifically.

- These topics get more specific detail than animal welfare and global development do if you look at the required reading alone. This is a real imbalance, but seems minor compared to the imbalance in the 2nd edition. For example, the 3rd edition doesn't set aside a large chunk of the only global health + development essay for "why you might not want to work in this area".

- "What might we be missing?" covers a range of critical arguments, including many against longtermism!

- Michael Plant seems not to have noticed the longtermism critiques in his comment, though they include "Pascal's Mugging" in the "Essentials" section and a bunch of other relevant material in the "More to Explore" section.

- "Putting it into practice" is focused on career choice and links mostly to 80K resources, which does give it a longtermist tilt. But it also links to a bunch of resources on finding careers in neartermist spaces, and if someone wanted to work on e.g. global health, I think they'd still find much to value among those links.

- I wouldn't be surprised if this section became much more balanced over time as more material becomes available from Probably Good (and other career orgs focused on specific areas).

In the end, you have three "neartermist" sections, four "longtermist" sections (if you count career choice), and one "neutral" section (critiques and counter-critiques that span the gamut of common focus areas).

AnonymousEAForumAccount @ 2022-05-25T20:16 (+8)

Thanks for sharing this history and your perspective Aaron.

I agree that 1) the problems with the 3rd edition were less severe than those with the 2nd edition (though I’d say that’s a very low bar to clear) and 2) the 3rd edition looks more representative if you weigh the “more to explore” sections equally with “the essentials” (though IMO it’s pretty clear that the curriculum places way more weight on the content it frames as “essential” than content linked to at the bottom of the “further reading” section.)

I disagree with your characterization of "The Effectiveness Mindset", "Differences in Impact", and "Expanding Our Compassion" as neartermist content in a way that’s comparable to how subsequent sections are longtermist content. The early sections include some content that is clearly neartermist (e.g. “The case against speciesism”, and “The moral imperative toward cost-effectiveness in global health”). But much, maybe most, of the "essential" reading in the first three sections isn’t really about neartermist (or longtermist) causes. For instance, “We are in triage every second of every day” is about… triage. I’d also put “On Fringe Ideas”, “Moral Progress and Cause X”, “Can one person make a difference?”, “Radical Empathy”, and “Prospecting for Gold” in this bucket.

By contrast, the essential reading in the “Longtermism”, “Existential Risk”, and “Emerging technologies” section is all highly focused on longtermist causes/worldview; it’s all stuff like “Reducing global catastrophic biological risks”, “The case for reducing existential risk”, and “The case for strong longtermism”.

I also disagree that the “What we may be missing?” section places much emphasis on longtermist critiques (outside of the “more to explore” section, which I don’t think carries much weight as mentioned earlier). “Pascal’s mugging” is relevant to, but not specific to, longtermism, and “The case of the missing cause prioritization research” doesn’t criticize longtermist ideas per se, it more argues that the shift toward prioritizing longtermism hasn’t been informed by significant amounts of relevant research. I find it telling that “Objections to EA” (framed as a bit of a laundry list) doesn’t include anything about longtermism and that as far as I can tell no content in this whole section addresses the most frequent and intuitive criticism of longtermism I’ve heard (that it’s really really hard to influence the far future so we should be skeptical of our ability to do so).

Process-wise, I don’t think the use of test readers was an effective way of making sure the handbook was representative. Each test reader only saw a fraction of the content, so they’d be in no position to comment on the handbook as a whole. While I’m glad you approached members of the animal and global development communities for feedback, I think the fact that they didn’t respond is itself a form of (negative) feedback (which I would guess reflects the skepticism Michael expressed that his feedback would be incorporated). I’d feel better about the process if, for example, you’d posted in poverty and animal focused Facebook groups and offered to pay people (like the test readers were paid) to weigh in on whether the handbook represented their cause appropriately.

Aaron Gertler @ 2022-05-26T11:20 (+4)

I'll read any reply to this and make sure CEA sees it, but I don't plan to respond further myself, as I'm no longer working on this project.

Thanks for the response. I agree with some of your points and disagree with others.

To preface this, I wouldn't make a claim like "the 3rd edition was representative for X definition of the word" or "I was satisfied with the Handbook when we published it" (I left CEA with 19 pages of notes on changes I was considering). There's plenty of good criticism that one could make of it, from almost any perspective.

It’s pretty clear that the curriculum places way more weight on the content it frames as “essential” than content linked to at the bottom of the “further reading” section.

I agree.

But much, maybe most, of the "essential" reading in the first three sections isn’t really about neartermist (or longtermist) causes. For instance, “We are in triage every second of every day” is about… triage. I’d also put “On Fringe Ideas”, “Moral Progress and Cause X”, “Can one person make a difference?”, “Radical Empathy”, and “Prospecting for Gold” in this bucket.

Many of these have ideas that can be applied to either perspective. But the actual things they discuss are mostly near-term causes.

- "On Fringe Ideas" focuses on wild animal welfare.

- "We are in triage" ends with a discussion of global development (an area where the triage metaphor makes far more intuitive sense than it does for longtermist areas).

- "Radical Empathy" is almost entirely focused on specific neartermist causes.

- "Can one person make a difference" features three people who made a big difference — two doctors and Petrov. Long-term impact gets a brief shout-out at the end, but the impact of each person is measured by how many lives they saved in their own time (or through to the present day).

This is different from e.g. detailed pieces describing causes like malaria prevention or vitamin supplementation. I think that's a real gap in the Handbook, and worth addressing.

But it seems to me like anyone who starts the Handbook will get a very strong impression in those first three sections that EA cares a lot about near-term causes, helping people today, helping animals, and tackling measurable problems. That impression matters more to me than cause-specific knowledge (though again, some of that would still be nice!).

However, I may be biased here by my teaching experience. In the two introductory fellowships I've facilitated, participants who read these essays spent their first three weeks discussing almost exclusively near-term causes and examples.

By contrast, the essential reading in the “Longtermism”, “Existential Risk”, and “Emerging technologies” section is all highly focused on longtermist causes/worldview; it’s all stuff like “Reducing global catastrophic biological risks”, “The case for reducing existential risk”, and “The case for strong longtermism”.

I agree that the reading in these sections is more focused. Nonetheless, I still feel like there's a decent balance, for reasons that aren't obvious from the content alone:

- Most people have a better intuitive sense for neartermist causes and ideas. I found that longtermism (and AI specifically) required more explanation and discussion before people understood them, relative to the causes and ideas mentioned in the first three weeks. Population ethics alone took up most of a week.

- "Longtermist" causes sometimes aren't. I still don't quite understand how we decided to add pandemic prevention to the "longtermist" bucket. When that issue came up, people were intensely interested and found the subject relevant to their own lives/the lives of people they knew.

- I wouldn't be surprised if many people in EA (including people in my intro fellowships) saw many of Toby Ord's "policy and research ideas" as competitive with AMF just for saving people alive today.

- I assume there are also people who would see AMF as competitive with many longtermist orgs in terms of improving the future, but I'd guess they aren't nearly as common.

“Pascal’s mugging” is relevant to, but not specific to, longtermism

I don't think I've seen Pascal's Mugging discussed in any non-longtermist context, unless you count actual religion. Do you have an example on hand for where people have applied the idea to a neartermist cause?

"The case of the missing cause prioritization research” doesn’t criticize longtermist ideas per se, it more argues that the shift toward prioritizing longtermism hasn’t been informed by significant amounts of relevant research.

I agree. I wouldn't think of that piece as critical of longtermism.

As far as I can tell, no content in this whole section addresses the most frequent and intuitive criticism of longtermism I’ve heard (that it’s really really hard to influence the far future so we should be skeptical of our ability to do so).

I haven't gone back to check all the material, but I assume you're correct. I think it would be useful to add more content on this point.

This is another case where my experience as a facilitator warps my perspective; I think both of my groups discussed this, so it didn't occur to me that it wasn't an "official" topic.

Process-wise, I don’t think the use of test readers was an effective way of making sure the handbook was representative. Each test reader only saw a fraction of the content, so they’d be in no position to comment on the handbook as a whole.

I agree. That wasn't the purpose of selecting test readers; I mentioned them only because some of them happened to make useful suggestions on this front.

While I’m glad you approached members of the animal and global development communities for feedback, I think the fact that they didn’t respond is itself a form of (negative) feedback (which I would guess reflects the skepticism Michael expressed that his feedback would be incorporated).

I wrote to four people, two of whom (including Michael) sent useful feedback. The other two also responded; one said they were busy, the other seemed excited/interested but never wound up sending anything.

A 50% useful-response rate isn't bad, and makes me wish I'd sent more of those emails. My excuse is the dumb-but-true "I was busy, and this was one project among many".

(As an aside, if someone wanted to draft a near-term-focused version of the Handbook, I think they'd have a very good shot at getting a grant.)

I’d feel better about the process if, for example, you’d posted in poverty and animal focused Facebook groups and offered to pay people (like the test readers were paid) to weigh in on whether the handbook represented their cause appropriately.

I'd probably have asked "what else should we include?" rather than "is this current stuff good?", but I agree with this in spirit.

(As another aside, if you specifically have ideas for material you'd like to see included, I'd be happy to pass them along to CEA — or you could contact someone like Max or Lizka.)

AnonymousEAForumAccount @ 2022-06-01T15:14 (+4)

But it seems to me like anyone who starts the Handbook will get a very strong impression in those first three sections that EA cares a lot about near-term causes, helping people today, helping animals, and tackling measurable problems. That impression matters more to me than cause-specific knowledge (though again, some of that would still be nice!).

However, I may be biased here by my teaching experience. In the two introductory fellowships I've facilitated, participants who read these essays spent their first three weeks discussing almost exclusively near-term causes and examples.

That’s helpful anecdata about your teaching experience. I’d love to see a more rigorous and thorough study of how participants respond to the fellowships to see how representative your experience is.

I don't think I've seen Pascal's Mugging discussed in any non-longtermist context, unless you count actual religion. Do you have an example on hand for where people have applied the idea to a neartermist cause?

I’m pretty sure I’ve heard it used in the context of a scenario questioning whether torture is justified to stop the threat of a dirty bomb that’s about to go off in a city.

I wrote to four people, two of whom (including Michael) sent useful feedback. The other two also responded; one said they were busy, the other seemed excited/interested but never wound up sending anything.

A 50% useful-response rate isn't bad, and makes me wish I'd sent more of those emails. My excuse is the dumb-but-true "I was busy, and this was one project among many".

That’s a good excuse :) I misinterpreted Michael’s previous comment as saying his feedback didn’t get incorporated at all. This process seems better than I’d realized (though still short of what I’d have liked to see after the negative reaction to the 2nd edition).

if you specifically have ideas for material you'd like to see included, I'd be happy to pass them along to CEA — or you could contact someone like Max or Lizka.

GiveWell’s Giving 101 would be a great fit for global poverty. For animal welfare content, I’d suggest making the first chapter of Animal Liberation part of the essential content (or at least further reading), rather than part of the “more to explore” content. But my meta-suggestion would be to ask people who specialize in doing poverty/animal outreach for suggestions.

AnonymousEAForumAccount @ 2022-05-04T18:51 (+11)

EA Grants changed a lot after the first round and was closed down around 2019

Did subsequent rounds of EA Grants give non-trivial amounts to animal welfare and/or global poverty? What percentage of funding did these cause areas receive, and how much went to longtermist causes? Only the first round of grants was made public.

MaxDalton @ 2022-08-11T08:56 (+2)

I did more research here and talked to more people, and I think that 60% is closer to the right skew here. (I also think that there are some other important considerations that I don't go into here.) I still endorse the other parts of this comment (apart from the 70-80% bit).

Charles He @ 2022-05-04T05:52 (+36)

This account has some of the densest and most informative writing in the forum, here's another comment

(The comment describes CEA in a previous era. It seems the current CEA has different leadership and should be empowered and supported).

AnonymousEAForumAccount @ 2022-05-04T18:25 (+20)

Thank you! I really appreciate this comment, and I’m glad you find my writing helpful.

frances_lorenz @ 2022-05-04T22:08 (+9)

Thank you, I really appreciate the breadth of this list; it gives me a much stronger picture of the various ways a longtermist worldview is being promoted.

MaxDalton @ 2022-10-13T11:59 (+4)

We've now released a page on our website setting out our approach to moderation and content curation, which relates somewhat to this comment. Please feel free to share any feedback in comments or anonymously.

Habryka @ 2022-05-04T08:24 (+1)

- The EA Grants program granted ~16x more money to longtermist projects as global poverty and animal welfare projects combined

This seems wrong to me. The LTFF and the EAIF don't get 16x the money that the Animal Welfare and Global Health and Development funds get. Maybe you meant to say that the EAIF has granted 16x more money to longtermist projects?

Aryan @ 2022-05-04T09:29 (+17)

I think EA Grants is different from EA Funds. EA Grants was discontinued a while back - https://www.effectivealtruism.org/grants

Habryka @ 2022-05-05T07:39 (+25)

Oh, I get it now. That seems like a misleading summary, given that that program was primarily aimed at EA community infrastructure (which received 66% of the funding), the statistic cited here is only for a single grants round, and one of the five concrete examples listed seems to be a relatively big global poverty grant.

I still expect there to be some skew here, but I would take bets that the actual numbers for EA Grants look substantially less skewed than 1:16.

AnonymousEAForumAccount @ 2022-05-05T16:08 (+5)

IMO the share of grants going to community infrastructure isn’t particularly relevant to the relative shares received by longterm and nearterm projects. But I’ll edit my post to note that the stat I cite is only from the first round of EA Grants since that’s the only round for which data was ever published.

one of the five concrete examples listed seems to be a relatively big global poverty grant.

Could you please clarify what you mean by this? I linked to an analysis listing written descriptions of 6 EA Grants made after the initial round, for which grant sizes were never provided. One of those (to Charity Entrepreneurship) could arguably be construed as a global poverty grant, though I think it’d be more natural to categorize it as meta/community (much as how I think it was reasonable that the grant you received to work on LessWrong2.0, the largest grant of the first round, was classified as a community building grant rather than a longtermist grant.)

In any case, the question of what causes the EA Grant program supported is an empirical one that should be easy to answer. I’ve already asked for data on this, and hope that CEA publishes it so we don't have to speculate.

Habryka @ 2022-05-05T20:11 (+9)

Yeah, the Charity Entrepreneurship grant is what I was talking about. But yeah, classifying that one as meta isn't crazy to me, though I think I would classify it more as Global Poverty (since I don't think it involved any general EA community infrastructure).

Michael_Wiebe @ 2022-05-03T17:45 (+103)

I think it's important to frame longtermism as a particular subset of EA. We should be EAs first and longtermists second. EA says to follow the importance-tractability-crowdedness framework, and allocate funding to the most effective causes. This can mean funding longtermist interventions, if they are the most cost-effective. If longtermist interventions get a lot of funding and hit diminishing returns, then they won't be the most cost-effective anymore. The ITC framework is more general than the longtermist framing of "focus on the long-term future", and allows us to pivot as funding and tractability change.

Max Clarke @ 2022-05-03T23:59 (+15)

I'm really hoping we can get some better data on resource allocation and estimated effectiveness to make it clearer when funders or individuals should return to focusing on global poverty etc.

There are a few projects in the works for "EA epistemic infrastructure".

harrygietz@gmail.com @ 2022-05-05T12:39 (+2)

Do you have any more info on these "epistemic infrastructure" projects or the people working on them? I would be super curious to look into this more.

Max Clarke @ 2022-05-07T12:19 (+1)

For an overview of most of the current efforts into "epistemic infrastructure", see the comments on my recent post here https://forum.effectivealtruism.org/posts/qFPQYM4dfRnE8Cwfx/project-a-web-platform-for-crowdsourcing-impact-estimates-of

Leksu @ 2022-05-06T23:08 (+1)

Same here.

Max Clarke @ 2022-05-07T12:25 (+1)

For an overview of most of the current efforts into "epistemic infrastructure", see the comments on my recent post here https://forum.effectivealtruism.org/posts/qFPQYM4dfRnE8Cwfx/project-a-web-platform-for-crowdsourcing-impact-estimates-of

Guy Raveh @ 2022-05-04T17:57 (+13)

I basically agree with your comment, but wanted to emphasize the part I disagree with:

EA says to follow the importance-tractability-crowdedness framework, and allocate funding to the most effective causes.

EA is about prioritising in order to (try to) do the most good. The ITN framework is just a heuristic for that, which may very well be wrong in many places; and funding is just one of the resources we can use.

Michael_Wiebe @ 2022-05-04T18:09 (+3)

I claim that the ITC framework is not just a heuristic, but captures precisely what we mean by "do the most good". And I agree, 'funding' should be interpreted broadly to include all EA resources (e.g., money, labor hours, personal connections, etc.).

Peter Wildeford @ 2022-05-04T20:10 (+87)

On the one hand, it's clear that global poverty does get the most overall EA funding right now, but it's also clear that it's easier for me personally to get my 20th-best longtermism idea funded than to get my 3rd-best animal idea or 3rd-best global poverty idea funded, and this asymmetry seems important.

frances_lorenz @ 2022-05-04T22:11 (+3)

Do you think that's a factor of: how many places you could apply for longtermist vs. other cause area funding? How high the bar is for longtermist ideas vs. others? Something else?

Peter Wildeford @ 2022-05-04T23:57 (+17)

I think it's a factor of global health funding already being allocated to much more scalable opportunities than exist in longtermism, whereas the longtermists have a much smaller number of funding opportunities to compete for. EA individuals are the main source of longtermist opportunities and thus we get showered in longtermist money but not other kinds of money.

Animals is a bit more of a mix of the two.

PabloAMC @ 2022-05-03T16:59 (+64)

Thanks for posting! My current belief is that EA has not become purely about longtermism. In fact, recently it has been argued in the community that longtermism is not necessary to pursue the kind of things we currently do, as pandemics or AI Safety can also be justified in terms of preventing global catastrophes.

That being said, I'd very much prefer the EA community's bottom line to be about doing "the most good" rather than subscribing to longtermism or any other cool idea we might come up with. These are all subject to change and debate, whereas doing the most good really shouldn't be.

Additionally, it might be worth highlighting, especially when talking with people unfamiliar with EA, that we deeply care about the suffering of all people alive today. Quoting Nate Soares:

One day, we may slay the dragons that plague us. One day we, like the villagers in their early days, may have the luxury of going to any length in order to prevent a fellow sentient mind from being condemned to oblivion unwillingly. If we ever make it that far, the worth of a life will be measured not in dollars, but in stars. That is the value of a life. It will be the value of a life then, and it is the value of a life now.

Emma Abele @ 2022-05-31T20:40 (+37)

In general it doesn't seem logical to me to bucket cause areas as either "longtermist" or "neartermist".

I think this bucketing can paint an overly simplistic image of EA cause prioritization that is something like:

Are you longtermist?

- If so, prioritize AI safety, maybe other x-risks, and maybe global catastrophic risks

- If not, prioritize global health or factory farming depending on your view on how much non-human animals matter compared to humans

But really the situation is way more complicated than this, and I don't think the simplification is accurate enough to be worth spreading.

- There was a time when I thought ending factory farming was highest priority, motivated by a longtermist worldview

- There was also a time when I thought bio-risk reduction was highest priority, motivated by a neartermist worldview

- (now I think AI-risk reduction is highest priority regardless of what I think about longtermism)

When thinking through cause prioritization, I think most EAs (including me) over-emphasize the importance of philosophical considerations like longtermism or speciesism, and under-emphasize the importance of empirical considerations like AI timelines, how much effort it would take to make bio-weapons obsolete or what diseases cause the most intense suffering.

RyanCarey @ 2022-06-01T10:18 (+10)

Agreed! And we should hardly be surprised to see such a founder effect, given that EA was started by philosophers and philosophy fans.

MathiasKB @ 2022-05-03T19:35 (+35)

Open philanthropy is not the only grantmaker in the EA Space! If you add the FTX Community, FTX Future Fund, EA Funds etc. my guess would be that it recently made a large shift towards longtermism, primarily due to the Future fund being so massive.

I also want to emphasize that many central EA organisations are increasingly focused on longtermist concerns, and not as transparent about it as I would like them to be. People and organisations should not pretend to care about things they do not for the sake of optics. One of EA's most central tenets is to hold truth in very high regard. Being transparent about what we believe is necessary to do so.

I think there are a few pitfalls EA can fall into through its increasing focus on longtermism, but by and large people are noticing these and actively discussing them. This is a good sign! I'd be a bit worried if many longtermists entirely stopped caring about global poverty and animal suffering. Having a deep intuition that losing your parent to malaria is a horrible thing for a child to go through, and that you can prevent it, is a very healthy sanity check.

I think the best strategy for 'hardcore' longtermists may very well be to do a bit of both, not because of optics but because regularly working on things with tight feedback loops reminds you just how difficult even well-defined objectives can be to achieve. That said there is enormous value in specialisation, so I'm not sure what the exact optimal trade-off is.

Max_Daniel @ 2022-05-05T02:07 (+11)

If you add the FTX Community, FTX Future Fund, EA Funds etc. my guess would be that it recently made a large shift towards longtermism, primarily due to the Future fund being so massive.

I think starting in 2022 this will be true in aggregate – as you say largely because of the FTX Future Fund.

However, for EA Funds specifically, it might be worth keeping in mind that the Global Health and Development Fund has been the largest of the four funds by payout amount, and by donations received it is even about as big as all the other funds combined.

Marcel D @ 2022-05-04T04:19 (+3)

In my view, there is some defining tension in rationalist and EA thought regarding epistemic vs. instrumental emphasis on truth: adopting a mindset of rationality/honesty is probably a good mindset—especially to challenge biases and set community standards—but it’s ultimately for instrumental purposes (although, for instrumental purposes, it might be better to think of your mindset as one of honesty/rationality, recursivity problems aside). I don’t think there is much conflict at the level of “lie about what you support”: that’s obviously going to be bad overall. But there are valid questions at the level of “how straightforward/consistent should I be about the way all near-termist cause areas/effects pale in comparison to expected value from existential risk reduction?” It might be the case that it’s obvious that certain health and development causes fail to compare on a long-term scale, but that doesn’t mean heavily emphasizing that is necessarily a good idea, for community health and other reasons like you mention.

Domassoglia @ 2022-05-04T12:47 (+28)

Two points to add re: the cultural capture of longtermism in EA:

- A count of topics at EAG and EAGx's from this year show a roughly 3:1 AI/longtermist to anything else ratio

- All attendees of EAGx's received an official email asking them to pre-order Will MacAskill's "What We Owe The Future," a 350-page longtermist tome, with the urging: "Early pre-orders are important because they help the book reach more people!"

Aaron Gertler @ 2022-05-06T20:54 (+12)

A count of topics at EAG and EAGx's from this year show a roughly 3:1 AI/longtermist to anything else ratio

I'm not sure where to find agendas for past EAGx events I didn't attend. But looking at EAG London, I get a 4:3 ratio for LT/non-LT (not counting topics that fit neither "category", like founding startups):

LT

- "Countering weapons of mass destruction"