More EAs should consider “non-EA” jobs

By Sarah Eustis-Guthrie @ 2021-08-19T21:18 (+143)

Summary: I argue that working in certain non-neglected fields is undervalued as a career option for EA-aligned individuals, and that working at EA organizations is potentially overvalued. By bringing an EA perspective to non-EA spaces, EA-aligned folks can potentially have a substantial impact.

I took a non-EA job back in December (partly because I was rejected from all of the EA jobs I applied to, haha) that is not in any of the priority fields, as conventionally understood; I work for the California state government in a civil service job. When I started, I was unsure how much impact I would be able to have in this position, but as I’ve reflected on my time with the state, I’ve come to believe that the ways of thinking that I’ve drawn from EA have allowed me to be much more effective in my job. In particular:

- Attunement to the magnitude of impact of a particular action or policy

- Attunement to the marginal value I’m contributing

- Attunement to how I can make others more effective

- Attunement to inefficiencies in systems and how fundamental changes to workflows or policies could save time or money

- Belief in the value of using data to inform decision-making

As an entry-level worker, I haven’t exactly revolutionized how we’re doing work here. But even in my comparatively low-influence position, I do feel that I’ve been able to make an impact, in large part due to the EA-aligned paradigms that I bring to my work. My colleagues are smart and committed, but they often aren’t focused on the sorts of things I listed above. By bringing these ways of thinking into the work that we do, I believe I’ve been able to make a positive impact.

Beyond entry-level jobs such as mine, I believe that there are tons of opportunities for well-placed individuals to have a substantial impact by working in state government. The amounts of money being spent by state governments are tremendous: the 2021-22 California budget is 262.5 billion dollars. Compare that to prominent EA organizations (GiveWell directed 172 million dollars in 2019, for example) or even high-powered philanthropy such as the Bill and Melinda Gates Foundation, which contributed 5.09 billion dollars in 2019 (1). It goes without saying that when it comes to lives saved per dollar, EA orgs are spending their money more efficiently; however, I would argue that so much money is being spent on state, local, and federal government programs that they’re worth considering as areas where bringing in EA perspectives can have a positive impact.
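For a rough sense of scale, the sketch below divides the budget by the other two figures cited above. It uses only the numbers given in the post and footnote (1); because it mixes a 2021-22 budget with 2019 figures, the ratios are approximate rather than a like-for-like comparison.

```python
# Figures as cited in the post (footnote 1). The years differ, so treat the
# resulting ratios as rough orders of magnitude, not precise comparisons.
CA_BUDGET_2021_22 = 262.5e9      # California state budget, 2021-22 (dollars)
GIVEWELL_2019 = 172e6            # Funds directed by GiveWell, 2019 (dollars)
GATES_FOUNDATION_2019 = 5.09e9   # Gates Foundation contributions, 2019 (dollars)


def times_larger(big: float, small: float) -> float:
    """Return how many times larger `big` is than `small`."""
    return big / small


if __name__ == "__main__":
    print(f"Budget vs. GiveWell: {times_larger(CA_BUDGET_2021_22, GIVEWELL_2019):,.0f}x")
    print(f"Budget vs. Gates Foundation: {times_larger(CA_BUDGET_2021_22, GATES_FOUNDATION_2019):,.1f}x")
```

Run as a script, it shows the budget is on the order of 1,500 times GiveWell's 2019 total and roughly 50 times the Gates Foundation's 2019 contributions.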

How that 262.5 billion is allocated and spent is shaped by a number of actors: members of the legislature and governor’s office; individuals in the state departments who decide which programs to request additional funding for; individuals in the budget offices and Department of Finance who decide which funding requests to reject or approve; and, of course, individuals throughout civil service who carry out the programs being funded with more or less efficiency. Throughout this process, there are individuals and departments that have substantial influence on where the money goes and how well it is spent. Thankfully, it’s typically groups of people who are making decisions, rather than lone individuals, but individuals can absolutely have substantial ripple effects. Having one person in the room who advocates for a more data-driven approach or who points to a neglected aspect of the issues that others hadn’t noted can shift the whole direction of a policy; having one analyst who develops a more efficient way of processing applications or conducting investigations, and then pushes for that to become policy, can make things more efficient for huge numbers of people. These are just a few examples.

I think that conventional EA approaches to career choice miss these sorts of opportunities because of an overemphasis on cause area. Let’s take a look at the 80,000 Hours perspective on this topic. I recognize that 80,000 Hours does not represent the beliefs of every EA on careers, but it is the main institutional voice on this topic.

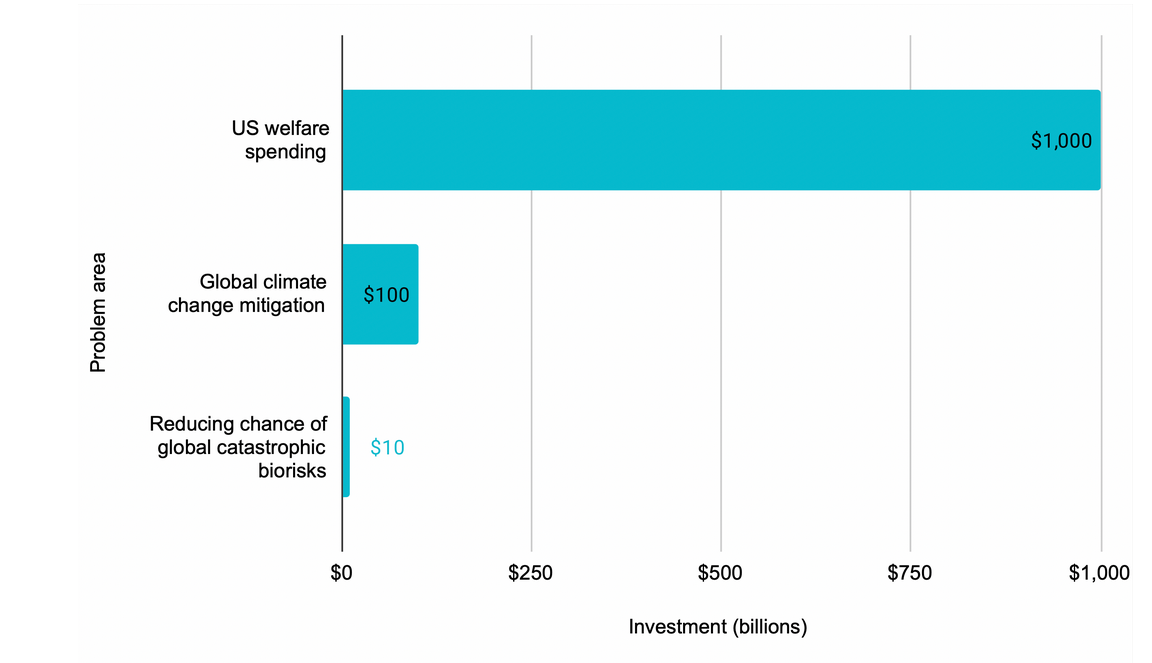

80,000 Hours argues that your choice of cause area is the single most important factor to consider when choosing your career (2). Their “Start here” page uses the following chart to support their argument that people should go into neglected fields:

Their point is that certain issues, such as global biorisk research, are neglected. I agree with that assessment, and I agree that EAs should consider going into biorisk research if it's right for them. But when I look at this chart, my main takeaway is that there’s a ton of money being spent on welfare, and that working to make sure that money is spent as efficiently as possible could have a huge impact. Applying the framework of neglectedness too broadly risks concealing opportunities for change.

I also think that an overemphasis on the importance of cause area can unduly minimize considerations related to marginal value. In my experience, EAs can be weirdly selective about when they apply marginal-value arguments to career choice. I’ve often heard EAs argue that it’s not particularly effective to become a doctor. The argument goes that lots of smart, talented people want to be doctors, so you’re unlikely to provide marginal value as a doctor. Given my impression of the EA job market in many (but not all) areas right now, this seems to be a fair way of approaching many jobs at explicitly EA orgs. There are tons of smart, talented people vying for EA jobs; unless you have a particularly unique skillset or perspective, it’s not clear to me that any given individual is likely to provide substantial marginal value in an EA job (3, 4). But if you’re an EA-aligned person taking a job in a non-EA org, you’re instantly providing the marginal value of EA-aligned paradigms and approaches. This both allows you to do more good and creates the opportunity to spread EA-aligned ways of thinking.

To be absolutely clear, I am not arguing that folks shouldn’t apply for or work in explicitly EA organizations. These orgs are doing a tremendous amount of good, made possible by the talented and committed folks who work for them. What I am arguing is that considerations of marginal value make working for an explicitly EA org less valuable than conventional wisdom currently states, and working for non-EA orgs more valuable than our current models might suggest.

Where does that leave us? My hope is that more folks will consider careers in fields that are not traditionally associated with EA, while continuing to consider conventionally EA ones as well. I'm really excited by the possibility of bringing EA ideas and ways of thinking into more spaces, and I think that taking positions in non-EA spaces is one way of encouraging this. I know that some folks feel like there's a natural limit to how many people will want to join EA (whether because of the demanding ethics that many associate EA with or some of the more esoteric cause areas under the EA umbrella), but I think that it's also worth considering that there is a subset of people who might not be willing to fully identify with the movement but who would be willing to adopt some of its ways of thinking, especially if they see firsthand how it can help them be more effective in their work.

I also think that a more positive attitude toward "non-EA" jobs would be healthy for the community. I've often heard people say (particularly on r/effectivealtruism and at in-person gatherings) that they feel like the advice offered by 80,000 Hours is only "for" a small subset of high-achieving individuals. I don't think that that's totally fair, but I think it is true to an extent. Optimization has always been a big part of EA: "How can I do the most good?" Even as we keep this question central, I think it's important to also ask gentler variations of this question such as: "How can I do the most good with where I am now?" and "How can I do the most good given the choices I'm willing to make?" Making a career change isn't always what's right for someone, but that doesn't mean that they can't bring EA concepts into jobs not traditionally associated with EA, whether in a policy job (where I would argue you may be able to do as much good as at an explicitly EA org) or in any other position.

There are always tradeoffs. Working at an explicitly EA-aligned org has both pros and cons. It typically means that you’re engaging in work that is likely highly effective, and you’re getting to be in a community of people with shared values, which may strengthen your own beliefs and incubate new EA ideas; but it’s possible that in a counterfactual scenario, another person would have had comparable impact. Likewise, working for a non-EA org has both pros and cons. You’re almost certainly bringing much more of an EA perspective than anyone else would in your position, so you may be able to have substantial marginal impact, and you’re helping to spread EA ideas to new networks; but your day-to-day work is likely less effective than it would be at an EA org, and you may not have a sense of sharing values with your work community, which may dilute your commitment or lead to a less engaging experience for you.

Ultimately, it’s up to each person to figure out which position is right for them at any given time. Career choice is a complex question that’s worthy of sustained attention, and I think it’s fantastic that orgs such as 80,000 Hours have championed treating it with such seriousness. But I worry that the way that 80,000 Hours discusses non-neglected cause areas may lead some EAs to dismiss possibly high-impact careers; furthermore, I think it's important that we create space for asking the question "how can I do the most good given the choices I'm willing to make?" in broader career-choice contexts.

(1) 2021-22 state budget: http://www.ebudget.ca.gov/budget/2021-22EN/#/Home; GiveWell: https://www.givewell.org/about/impact; Bill and Melinda Gates Foundation: https://www.gatesfoundation.org/about/financials/annual-reports/annual-report-2019. I used 2019 numbers for the latter two because they were the most recent numbers available.

(2) https://80000hours.org/make-a-difference-with-your-career/#which-problems-should-you-work-on

(3) My belief that there are large numbers of highly talented individuals applying to EA jobs in certain areas (particularly AI research, catastrophic risk, and mainstream EA orgs; my rough sense is that jobs are more attainable in orgs that do animal-related work) is based on things I’ve seen on the EA forum and various EA-related FB pages, what I’ve heard from people at a few in-person EA gatherings, and my own experiences. I may be wrong, and I am very open to alternative perspectives, but this is my sense of it.

This topic becomes yet more complex because the question then becomes “how do I know if I would add substantial marginal value in this position?”, which is a challenging question to answer. One approach, which I’ve repeatedly heard recommended, is to apply to lots of EA orgs and trust them to assess that; however, this does require investing a decent amount of time into these applications with uncertain reward. On the whole, I found EA jobs to be much more time-intensive to apply for than non-EA jobs, and dedicating that time was a non-trivial ask.

(4) My belief that any given person may not provide substantial marginal value in a particular role is key to my argument, and definitely a live area of debate. I'm not an expert in this area, so I am very open to counterarguments on this point! Here's one from 80,000 Hours, for example: https://80000hours.org/2019/05/why-do-organisations-say-recent-hires-are-worth-so-much/#2-there-might-be-large-differences-in-the-productivity-of-hires-in-a-specific-role

Note: I realize that I'm bringing several different ideas into play here; I considered splitting this into separate posts but wasn't able to make that work. Eager to hear folks' thoughts on any of the ideas discussed above (reflecting on what it means to bring EA principles into a non-EA job; arguing for the value of policy jobs; arguing against an overemphasis on cause area and neglectedness; arguing for the value of bringing EA perspectives into non-EA spaces; arguing that EA jobs may be overvalued due to marginal value considerations; discussing spreading EA ideas through career choice).

Big thank you to Nicholas Goldowsky-Dill for his help thinking through these concepts!

Linch @ 2021-08-20T20:00 (+24)

As an empirical matter, are there many EAs, especially in junior positions, who don't consider non-EA jobs seriously?

The only people I can think of who aren't considering non-EA jobs at all while doing a job search are a subset of a) people quite established/senior in EA orgs and thus may believe that they have transferable skills/connections to other EA orgs, and b) a small number of relatively well-off (either from family or past jobs) people excited to attempt a transition.

And in the case of both a) and b), even in those groups there are plenty of people who seriously consider (and frequently take up) jobs outside of our small movement.

alexrjl @ 2021-08-21T12:43 (+21)

In my case it was the opposite - I spent several years considering only non-EA jobs as I had formed the (as it turns out mistaken) impression that I would not be a serious candidate for any roles at EA orgs.

Max_Daniel @ 2021-08-20T21:16 (+16)

FWIW, I think I did not consider non-EA jobs nearly enough right after my master's in 2016. However, my situation was somewhat idiosyncratic, and I'm not sure it could happen today in this form.

I ended up choosing between one offer from an EA org and one offer from a mid-sized German consulting firm. I chose the EA org. I think it's kind of insane that I hadn't even applied to, or strongly considered, more alternatives, and I think it's highly unclear if I made the right choice.

Linch @ 2021-08-20T21:21 (+9)

I do think on average people don't apply to enough jobs relative to the clear benefits of having more options. I'm not sure why this is, and also don't have a sense of whether utility will increase if we tell people to apply to more EA or EA-adjacent jobs vs more jobs outside the movement. Naively I'd have guessed the former to be honest.

Sarah H @ 2021-08-22T15:31 (+13)

That's a good point. I don't have any data on this (not sure if this is something addressed in any of the EA surveys?) but my understanding is that you're totally right that most EAs are in non-EA jobs.

What I was trying to get at in my post was less that more EAs should take jobs in non-EA spaces, and more that discussions of career choice should take those choices more seriously. My title (“More EAs should consider non-EA jobs”) could be expanded to “More EAs should consider non-EA jobs as a valid way of doing the most good.” But there was definitely some ambiguity there.

I think it’s valuable to distinguish between folks who take non-EA jobs out of EA-related considerations (i.e. “I’m taking this job because I think it will allow me to do the most good”) vs. those who take them for unrelated considerations (such as interest, availability, location, etc.). I would guess that both approaches are at play for many people. I don’t think that conversations about career choice talk enough about the former; I think they tend to foreground career paths in EA orgs and don’t talk much about the potential value of bringing EA into non-EA spaces.

Denise_Melchin @ 2021-08-21T10:28 (+5)

I didn't originally, but then did when I could not get an offer for an EA job.

I do think in many cases EA org jobs will be better in terms of impact (or more specifically: high impact non-EA org jobs are hard to find) so I do not necessarily consider this approach wrong. Once you fail to get an EA job, you will eventually be forced to consider getting a non-EA job.

tamgent @ 2021-08-24T19:10 (+9)

I agree that if you choose at random from EA org and non-EA org jobs, you are more likely to have more impact at an EA org job. And I agree that there is work involved in finding a high impact non-EA job.

However, I don't think the work involved in finding a high impact non-EA org job is hard because there are so few such opportunities out there, but because finding them requires more imagination/creativity than just going for a job at an EA org does. Maybe you could start a new AI safety team at Facebook or Amazon by joining, building the internal capital, and then proposing it. Maybe you can't because reasons. Either way, you learn by trying. And this learning is not wasted. Either you pave the way for others in the community, highlighting a new area where impact can be made. Or, if it turns out it's hard for reasons, then you've learnt why, and can pass that on to others who might try.

Needless to say, this impact-finding strategy scales better than one where everyone is exclusively focused on EA org jobs (although you need some of that too). On a movement scale, I'd make a bet that we're too far in the direction of thinking that EA orgs are a better path to impact and have significantly under-explored ways of making impact in non-EA orgs, and there are social reasons why we'd naturally bias in that direction. Alternatively, like Sarah said elsewhere, it's just less visible.

I just realised I haven't asked: why are high impact non-EA org jobs hard to find, in your view?

Jsevillamol @ 2021-08-19T21:38 (+20)

> But when I look at this chart, my main takeaway is that there’s a ton of money being spent on welfare, and that working to make sure that money is spent as efficiently as possible could have a huge impact.

I think this is basically true.

A while back I thought that it was false - in particular, I thought that the public money was extremely tight, and that fighting to change a budget was an extremely political issue where one would face a lot of competition.

My experience collaborating with public organizations and hearing from public servants so far has been very different. I still think that budgets are hard to change. But within public organizations there is usually a fair amount of willingness to reconsider how their allocated budget is spent, and lots of space for savings and improvement.

This is still more anecdote than hard evidence. Yet I really think this is worth thinking more about. I think one very effective thing EAs can do is closely study public organizations (e.g. by interviewing their members or applying for jobs in the organization), and then think hard about how to help the organization better achieve its goals.

Sarah H @ 2021-08-19T21:53 (+2)

Thank you for sharing that! I like your idea about talking to people within these orgs--I know that my sense of how things work has been really changed by actually seeing some of this firsthand.

I think another element to consider is what level of government we're talking about. My sense is that the federal budget tends to be more politicized than many state and local budgets, and that with state and local budgets there's more room for a discussion of "what is actually needed here in the community" vs. it becoming a straightforward red/blue issue (at least here in the States). I wonder if this means that, at least in some instances, interventions at the state and local level would be more tractable than national ones. I'm reminded of the Zurich ballot initiative, for example.

Jan-WillemvanPutten @ 2021-08-28T08:55 (+14)

Hi Sarah, thanks for writing this great article.

As someone else mentioned in the comments, the EA surveys suggest that most EAs work in non-EA orgs. According to the last EA Survey I checked, only ~10% of respondents worked in EA orgs, and this is probably an overestimate (I assume people in EA orgs are more likely to complete the survey).

So I think the problem is not that EAs aren't considering these jobs; I would say the bottleneck for impact is something else:

1) Picking the right non-EA orgs; as mentioned in the comments, the differences are massive here

2) Noticing and creating impact opportunities in those jobs

80k is able to give generic career advice, e.g. on joining the civil service, but they often lack the capacity to give very specific advice to individuals on where to start, let alone on how to have impact in that job once they've landed it.

One solution could be to start a new career org for this, but I think it is very hard to be an expert in everything, and I often think that specific personal fit considerations are important. Therefore I think we are better off training people to pick the right orgs, teaching them impact-driven career decision models, and providing training on, and sharing, impact opportunities in certain non-EA org career paths.

Summarized: I don't think people lack the willingness to join non-EA orgs; they lack the tools and skills for maximising their impact in those careers.

Nathan_Barnard @ 2021-08-20T00:31 (+12)

I think this is correct and EA thinks about neglectedness wrong. I've been meaning to formalise this for a while and will do that now.

freedomandutility @ 2021-08-20T11:42 (+6)

Same! I think neglectedness is more useful for identifying impactful “just add more funding” style interventions, but is less useful for identifying impactful careers and other types of interventions since focusing on neglectedness systematically misses high leverage careers and interventions.

Sarah H @ 2021-08-22T15:34 (+1)

I totally agree! You articulated something I've been thinking about lately in a very clear manner; I think you're absolutely right to distinguish the value of neglectedness for funding vs. career choice--it's such a useful heuristic for funding considerations, but I think it can be used too indiscriminately in conversations about career choice.

tamgent @ 2021-08-27T10:11 (+2)

I wonder if others' understanding of neglectedness is different from my own. I think I've always implicitly thought of neglectedness as how many people are trying to do the exact thing you're trying to do to solve the exact problem you're working on, and therefore think there are loads of neglected opportunities everywhere, mostly at non-EA orgs. But reading this thread I got confused and checked the community definition, which says it's about the resources dedicated to a problem. That's quite different, and it helps me better understand this thread. It's funny that after all these years I've had a different concept in my head from everyone else and didn't realise. Anyway, if neglectedness includes resources dedicated to the problem, then a predominantly non-EA org like a government body might be dedicating lots of resources to a problem but not making much progress on it. In my view, this is a neglected opportunity.

Maybe we should distinguish between neglected in terms of crowdedness vs. opportunities available?

Also, what are others' understandings of neglectedness?

Miranda_Zhang @ 2021-08-20T00:26 (+12)

Thank you for writing this up! Purely anecdotal but I've been career 'planning' (more just thinking, really) for the past year and I've increasingly updated towards thinking that EA could draw a lot more from non-EAs, whether in terms of academia/knowledge (e.g. sociology) or professionally (e.g. non-EA orgs).

We don't have to reinvent the wheel with everything by making orgs EA-aligned - just as much, if not more, impact could be made not going into traditional EA paths - not just EA orgs but other fields 'neglected' by the highly-engaged community. I think that's a pretty common belief around here but perhaps not always clearly communicated!

tamgent @ 2021-08-21T07:56 (+8)

Thanks for writing about this. I wanted to a while ago but didn't get round to it. I also get the sense that too many folks in the EA community think the best way they can make an impact is at an EA org. I think this probably isn't true for most people. Gave a couple of reasons why here.

I wrote a list of some reasons to work at a non-EA org here a while ago, which overlap with your reasons.

Sarah H @ 2021-08-22T15:53 (+5)

These are great, thank you for sharing! I really appreciate your framing of your focus on non-EA jobs, especially the language of low-hanging fruit and novelty of EA ideas in non-EA spaces. I like that you distinguish between EA as a movement/identity and the ideas that underlie it; I think that too often, we elide the two, and miss opportunities to share the underlying ideas separate from the wider identity. And I also like your point about the importance of integrating EA and non-EA: I feel like there has been a lot of effort dedicated to strengthening the EA community, as well as substantial effort dedicated to getting people to join the EA community, but less energy devoted to bringing EA ideas into spaces where folks might not want the whole identity, but would appreciate some of the ideas. It's possible that that work has just been more behind-the-scenes, however.

Anyways, thanks for sharing! I'm happy to hear that this has been an ongoing topic of conversation. I'm going to go read more of that careers questions thread; somehow I missed it the first time around!

tamgent @ 2021-08-22T20:18 (+9)

Yeah I'd imagine much of the work of bringing EA ideas into spaces where folks might not want the identity is less visible, sometimes necessarily or wisely so. I'd love to see more stories told on forums such as this one of making impact in 'non-EA' spaces, even in an anonymised/redacted way.

smountjoy @ 2021-08-20T15:26 (+6)

Hi Sarah! I broadly agree with the post, but I do think there's a marginal value argument against becoming a doctor that doesn't apply to working at EA orgs. Namely:

Suppose I'm roughly as good at being a doctor as the next-doctor-up. My choosing to become a doctor brings about situation A over situation B:

Situation A: I'm a doctor, next-doctor-up goes to their backup plan

Situation B: next-doctor-up is a doctor, I go to my backup plan

Since we're equally good doctors, the only difference is in whose backup plan is better—so I should prefer situation B, in which I don't become a doctor, as long as I think my backup plan will do more good than their backup plan. This seems likely to be the case for anyone strongly motivated to do good, including EAs.

To make a similar case against working at an EA org, you would have to believe that your backup plan is significantly better than other EAs' backup plans.

EDIT: I should say I agree it's possible that friction in applying for EA jobs could outweigh any chance you have of being better than the next candidate. Just saying I think the argument against becoming a doctor is different—and stronger, because there are bigger gains on the table.
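The backup-plan argument can be written out as a short derivation. This is a sketch under the comment's own simplifying assumption that both candidates would be equally good doctors; the symbols are introduced here for illustration: $d$ is the good done by whoever fills the doctor role, and $b_{\text{me}}$, $b_{\text{them}}$ are the good done by each person's backup plan.

```latex
\begin{aligned}
V_A &= d + b_{\text{them}} && \text{(Situation A: I am the doctor)}\\
V_B &= d + b_{\text{me}}   && \text{(Situation B: next-doctor-up is the doctor)}\\
V_B - V_A &= b_{\text{me}} - b_{\text{them}}
\end{aligned}
```

The $d$ terms cancel, so only the backup plans matter. For an EA org role, the next candidate up is plausibly another EA, so $b_{\text{them}}$ becomes some $b_{\text{EA}}$, and $b_{\text{me}} - b_{\text{EA}}$ is small unless your backup plan is significantly better than other EAs' backup plans.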

Sarah H @ 2021-08-24T15:13 (+2)

That's a good point, and I'm inclined to agree, at least on an abstract level. My question then becomes how you evaluate what the backup plans of others are. Is this something based on data? Rough estimations? It seems like it could work on a very roughly approximated level, but I would imagine there would be a lot of uncertainty and variation.

Tristan Williams @ 2023-05-07T20:41 (+1)

I swear I'm not usually the one to call for numbers, but I'm compelled to in this case, because one of the common feelings I have reading this claim and claims like it is wanting to know: quantitatively, what sort of difference did you make? I don't expect anything beyond really rough numbers, but let me give you an example of something I'd love to read here:

"The 2021-22 California budget is 262.5 billion dollars. The organization I work in oversees X billion dollars. My role covers Y% of that budget, and I expect that had another non-EA-aligned person been in my role, the allocation would have been Z% less effective."

If you had to guess, what do you think your X, Y and Z are? I know Z is tricky too, but if you explain your rough reasoning, I think that'd help. I also know you mention many things that would be hard to quantify directly (changing minds towards being more data-oriented in a meeting), but I think you can roughly quantify this by estimating what percentage of X became more effective over the course of your influence, and what percentage (W%) of that improvement you were responsible for.
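One way to make the template above concrete is a simple Fermi-style product of the placeholders. This is a hedged sketch: the function name and the choice to multiply the three quantities together are my reading of the template, not something the commenter specified, and the example inputs are purely hypothetical, not data from the post.

```python
# Fermi sketch of the X/Y/Z template above. The function name and the
# multiplicative model are illustrative assumptions; inputs are hypothetical.


def effective_dollars_attributed(x_billion: float, y_share: float, z_improvement: float) -> float:
    """Rough value (in billions of dollars) attributed to one person's role.

    x_billion:     budget the organization oversees (X), in billions of dollars
    y_share:       fraction of that budget the role covers (Y%, as 0-1)
    z_improvement: how much more effectively that share is allocated than it
                   would be with a counterfactual hire (Z%, as 0-1)
    """
    if x_billion < 0:
        raise ValueError("x_billion must be non-negative")
    for name, fraction in (("y_share", y_share), ("z_improvement", z_improvement)):
        if not 0.0 <= fraction <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {fraction}")
    return x_billion * y_share * z_improvement


if __name__ == "__main__":
    # Purely hypothetical inputs, chosen only to show the arithmetic.
    estimate = effective_dollars_attributed(x_billion=10.0, y_share=0.01, z_improvement=0.05)
    print(f"~{estimate * 1000:.1f} million dollars of better-allocated spending")
```

With those hypothetical inputs (a 10-billion-dollar budget, a role covering 1% of it, and a 5% improvement over the counterfactual hire), the estimate is about 5 million dollars of better-allocated spending. The W% variant has the same shape: pass the fraction of X that became more effective as `y_share` and your share of that improvement (W) as `z_improvement`.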