Architecting Trust: A Conceptual Blueprint for Verifiable AI Governance

By Ihor Ivliev @ 2025-03-31T18:48 (+3)

1. Executive Summary

High-stakes AI systems increasingly influence healthcare diagnoses, legal proceedings, and public policy, yet their common "black-box" nature creates a dangerous governance gap. Unexplained outputs can cause significant errors, produce biased outcomes, and erode public trust, hindering accountability and alignment with key regulations and principles such as the EU AI Act and guidance from the OECD, NIST, and IEEE. Superficial checks are insufficient; trustworthiness must be engineered into AI’s core architecture. This article introduces the Generalized Comprehensible Configurable Adaptive Cognitive Structure (G-CCACS), a conceptual reference architecture designed for this challenge that still awaits implementation and empirical validation. G-CCACS structurally integrates mechanisms for verifiable auditability (via immutable logging), intrinsic ethical governance (via embedded rules and escalation protocols), and validated, context-aware reasoning. Although it is presented as a theoretical blueprint, G-CCACS offers policymakers a tangible vision for bridging the gap between essential governance principles and technical reality, fostering AI systems built for verifiable trust. Moving this concept forward requires a dedicated multi-stakeholder effort.

(For the full conceptual manuscript, see https://doi.org/10.6084/m9.figshare.28673576.v4)

2. Introduction: The Governance Imperative in High-Stakes AI

Consider illustrative clinical scenarios in which unexplained AI outputs reportedly led doctors to make significantly more diagnostic errors, even when the biases involved were small. Or imagine AI used in judicial processes where opaque reasoning prevents verification of fairness or adherence to legal principles. These scenarios highlight a critical governance imperative: as AI takes on roles with profound consequences for health, liberty, and public welfare, simply accepting “it just works” is no longer viable. We need AI systems capable of explaining their reasoning, performing self-checks, and reliably escalating issues beyond their competence to human judgment.

Yet, a significant gap persists. Vital regulatory frameworks like the EU AI Act[1], guiding documents such as the OECD AI Principles, practical standards like the NIST AI Risk Management Framework, and ethical design initiatives like IEEE’s Ethically Aligned Design collectively demand trustworthy AI — systems that are transparent, robust, safe, fair, and accountable. However, these frameworks often lack the concrete technical specifications for how these crucial properties can be reliably built and verified, especially within the complex, often opaque "black-box" models prevalent today. Attempting to add transparency or ethical checks after development often proves inadequate, addressing symptoms rather than the underlying structural limitations.

This article argues that a fundamental shift towards architectural thinking is necessary to bridge this policy-implementation gap. It introduces the Generalized Comprehensible Configurable Adaptive Cognitive Structure (G-CCACS), a conceptual reference architecture designed specifically to explore how core governance principles can be embedded within the operational fabric of an AI system. G-CCACS integrates mechanisms for rigorous internal validation, assessing belief reliability (via the Causal Fidelity Score, CFS) while monitoring environmental shifts (via the Systemic Unfolding & Recomposition Drift, SURD metric), alongside robust ethical controls, including a multi-stage Ethical Escalation Protocol (EEP). The aim here is to outline this architectural approach, demonstrating its potential for operationalizing vital policy goals found across these key international frameworks and serving as a foundation for the collaborative work needed to build AI systems truly worthy of societal trust. (Key G-CCACS terms are defined upon first significant use or detailed in the source manuscript glossary.)

3. Why Architecture Matters: The Foundation of Trust

Ensuring Artificial Intelligence acts safely, ethically, and accountably cannot remain an afterthought; it must be a deliberate design choice reflected in the system’s fundamental structure. Just as a skyscraper’s safety relies not merely on surface inspections but on integrated fire suppression systems, emergency exits, and structural reinforcements designed from the blueprint stage, trustworthy AI requires governance principles to be woven into its core architecture. Slapping transparency layers or ethical checks onto an inherently opaque system is akin to painting fire escape signs on a solid wall — it offers the illusion of safety without the substance. To achieve genuine, verifiable trustworthiness, we must architect for it from the beginning.

The G-CCACS framework embodies this “governance-by-design” philosophy. It posits that properties like traceability, ethical alignment, and validated reasoning should emerge intrinsically from how the system processes information, manages knowledge, and makes decisions. This architectural approach aims to create a foundation upon which verifiable guarantees can be built, moving beyond post-hoc justifications towards inherent accountability.

However, it is essential to approach this vision with epistemic humility. G-CCACS, as presented, is a conceptual blueprint — a detailed exploration of how such integration might be achieved, not a deployed or empirically proven system. Translating this architecture into practice involves significant challenges, including managing its inherent complexity, fostering deep multi-disciplinary collaboration, and conducting rigorous empirical validation across diverse, real-world contexts. It represents a direction, a set of interconnected ideas intended to serve as a reference point for building the next generation of trustworthy AI.

4. Core Pillars: Architectural Mechanisms for Verifiable Governance

To move from abstract principles to operational reality, G-CCACS proposes several integrated architectural pillars. These pillars provide concrete technical mechanisms designed to address specific governance needs frequently highlighted in policy discussions and regulatory frameworks.

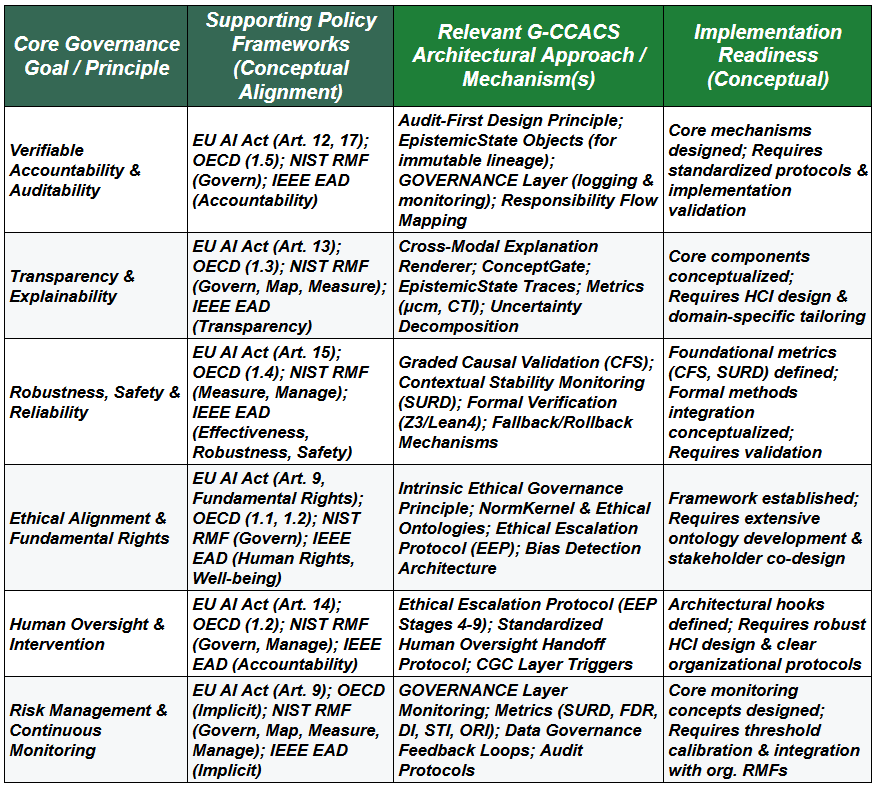

G-CCACS: Architectural Alignment with Core Trustworthy AI Governance Goals

Note: G-CCACS is a conceptual reference architecture. Full mappings detailed in Manuscript Appendices G-J.

Important Considerations for Policymakers:

- Conceptual Framework, Not Turnkey Solution: G-CCACS provides an architectural blueprint illustrating how trustworthiness can be structurally embedded. It serves as a reference model requiring significant implementation, empirical validation, and domain-specific adaptation before deployment.

- Focus on Core Trustworthiness Architecture: The framework prioritizes verifiable accountability, transparency, ethical alignment, robust reasoning, and safety mechanisms. It creates architectural hooks where complementary standards can be integrated.

- Scope & Complementary Needs: While G-CCACS aims to support compliance broadly, specific areas like detailed data protection protocols (beyond secure logging/access), comprehensive cybersecurity hardening (beyond architectural assumptions), and nuanced human factors engineering require dedicated, complementary solutions and robust organizational practices built upon this foundation.

- Collaboration is Essential: Translating this conceptual architecture into practical standards, validated implementations, and widely adopted best practices necessitates a concerted, multi-disciplinary effort involving policymakers, regulators, AI researchers, ethicists, domain experts, and industry practitioners.

Pillar 1: Audit-First Design (Verifiable Accountability)

- At its core, G-CCACS mandates an “audit-first” principle. Accountability hinges on the ability to reconstruct and understand why a decision was made. G-CCACS achieves this through EpistemicState objects: immutable digital records capturing the complete lifecycle of every significant belief or inference. Each record details evidence, validation steps, reasoning, ethical justifications, and history. This detailed provenance, potentially combined with Responsibility Flow Mapping to attribute individual steps, provides the technical foundation for meaningful audits and verifiable compliance with logging requirements (e.g., EU AI Act Art. 12, 17)[1]. For instance, if a G-CCACS-based clinical support system recommends deviating from standard treatment, an auditor could trace the exact reasoning via EpistemicState logs, verifying the data, inferences (with validation scores), and ethical checks involved.
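
To make the idea of an immutable, auditable record more concrete, here is a minimal Python sketch. It is not the manuscript's specification: the field names, the `GENESIS` placeholder, and the helpers `append_record` and `verify_chain` are all assumptions chosen for illustration. Hash chaining is one common way to make tampering detectable, and the article does not say G-CCACS uses it.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

GENESIS = "0" * 64  # assumed placeholder hash for the first record in a chain


@dataclass(frozen=True)  # frozen: fields cannot be reassigned after creation
class EpistemicState:
    # All field names below are hypothetical, not taken from the manuscript.
    belief: str
    evidence: tuple        # identifiers of the supporting data
    validation_grade: str  # e.g. "G3", following the article's G4..G1 labels
    cfs: float             # Causal Fidelity Score, assumed to lie in [0, 1]
    ethical_check: str     # outcome of the ethical review step
    prev_hash: str         # digest of the previous record in the log

    def digest(self) -> str:
        """SHA-256 over a canonical JSON rendering of the record."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def append_record(log: list, **fields) -> EpistemicState:
    """Append a record that commits to the digest of the previous one."""
    prev_hash = log[-1].digest() if log else GENESIS
    record = EpistemicState(prev_hash=prev_hash, **fields)
    log.append(record)
    return record


def verify_chain(log: list, head_hash: str) -> bool:
    """Recompute every link; an edited or reordered record breaks the chain."""
    expected = GENESIS
    for record in log:
        if record.prev_hash != expected:
            return False
        expected = record.digest()
    return expected == head_hash
```

In this sketch an auditor who holds the latest `head_hash` from an independent channel can call `verify_chain(log, head_hash)` to check that no earlier record was altered. A real deployment would also need secure storage and access control, which this fragment does not address.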

Pillar 2: Intrinsic Ethical Governance (Ethical Alignment & Human Oversight)

- Ethical considerations must actively constrain AI. G-CCACS integrates ethical governance structurally via the NormKernel and the Ethical Escalation Protocol (EEP). The NormKernel interprets formalized ethical rules and value hierarchies (developed via stakeholder input). The EEP acts as a multi-stage safety mechanism; if a conflict is detected or safety metrics are breached, it automatically initiates interventions, from monitoring to operational halts. Crucially, specific EEP stages mandate structured human oversight, ensuring human judgment is integrated when risk exceeds predefined limits. This provides a mechanism for enforcing “Ethical Supremacy” and operationalizing requirements for human control (like EU AI Act Art. 14)[1]. Illustratively, if an AI assessing eligibility for social benefits flagged a decision as potentially biased, the EEP could halt processing and route the case with full context to a human caseworker.
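
The staged escalation described above can be sketched as a small decision function. The stage names, the numeric thresholds, and the `Assessment` and `route` helpers are hypothetical; the article only states that interventions range from monitoring to operational halts and that some stages mandate human oversight.

```python
from dataclasses import dataclass
from enum import IntEnum


class EEPStage(IntEnum):
    """Hypothetical stage names, ordered by severity."""
    NORMAL = 0
    MONITOR = 1
    HUMAN_REVIEW = 2
    HALT = 3


@dataclass(frozen=True)
class Assessment:
    case_id: str
    risk_score: float    # assumed to be normalised to [0, 1]
    norm_conflict: bool  # True if a NormKernel-style rule check flagged a conflict


# Illustrative thresholds only; real limits would come from stakeholder input.
MONITOR_AT, REVIEW_AT, HALT_AT = 0.3, 0.6, 0.85


def eep_stage(a: Assessment) -> EEPStage:
    """Map an assessment to the most severe stage whose condition is met."""
    if a.risk_score >= HALT_AT:
        return EEPStage.HALT
    if a.norm_conflict or a.risk_score >= REVIEW_AT:
        return EEPStage.HUMAN_REVIEW
    if a.risk_score >= MONITOR_AT:
        return EEPStage.MONITOR
    return EEPStage.NORMAL


def route(a: Assessment) -> dict:
    """Stop automated processing and hand over once human oversight is required."""
    stage = eep_stage(a)
    needs_human = stage >= EEPStage.HUMAN_REVIEW
    return {
        "case_id": a.case_id,
        "stage": stage.name,
        "automated_processing": not needs_human,
        "assigned_to": "human_caseworker" if needs_human else "system",
    }
```

Under these assumptions, a benefits case flagged with `norm_conflict=True` is routed to a human caseworker even when its numeric risk score is low, which mirrors the social-benefits example in the text.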

Pillar 3: Validated Reasoning & Context Awareness (Robustness & Accuracy)

- Trustworthy decisions require reasoning grounded in validated evidence, sensitive to context. G-CCACS moves beyond correlation via a Graded Causal Validation Pipeline (G4→G1). Beliefs about causal links mature through validation stages, quantified by the Causal Fidelity Score (CFS). Simultaneously, the Systemic Unfolding & Recomposition Drift (SURD) metric continuously monitors operational context stability. High SURD (instability) automatically triggers caution — demanding stricter validation (higher CFS) or downgrading existing beliefs. This dynamic interplay addresses demands for robustness and accuracy (e.g., EU AI Act Art. 15)[1]. For instance, if a G-CCACS system analyzing public health data detects a sudden shift in reporting patterns (high SURD), it would automatically reduce confidence in trends derived from that unstable data, possibly triggering model recalibration alerts.
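
One way to picture the interplay between CFS and SURD is a grade lookup whose thresholds tighten as instability rises. The numbers in `BASE_CFS`, the linear `SURD_PENALTY`, and both function names are assumptions made for this sketch; the article does not define how either metric is computed or combined.

```python
# Assumed base CFS needed to hold each grade; G4 is the entry grade and
# G1 the most mature, following the article's G4 -> G1 ordering.
BASE_CFS = {"G1": 0.90, "G2": 0.75, "G3": 0.50, "G4": 0.0}

# Assumed extra CFS demanded at maximum instability (surd == 1.0).
SURD_PENALTY = 0.20


def required_cfs(grade: str, surd: float) -> float:
    """CFS needed to hold `grade` when context instability is `surd` (0..1)."""
    if not 0.0 <= surd <= 1.0:
        raise ValueError("surd is assumed to be normalised to [0, 1]")
    if grade == "G4":
        return 0.0  # the entry grade is always available
    return min(1.0, BASE_CFS[grade] + SURD_PENALTY * surd)


def effective_grade(cfs: float, surd: float) -> str:
    """Highest grade a belief can hold given its CFS and the current SURD."""
    for grade in ("G1", "G2", "G3"):
        if cfs >= required_cfs(grade, surd):
            return grade
    return "G4"
```

With these illustrative values, a belief with a CFS of 0.92 holds G1 in a stable context (`surd=0.0`) but is downgraded to G2 when `surd` rises to 0.5, because the G1 requirement climbs to 1.0. That is the kind of automatic caution the pillar describes.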

Pillar 4: Integrated Formal Verification (Enhanced Assurance)

- For applications demanding the highest assurance, G-CCACS incorporates formal verification pathways. Using mathematical techniques and tools (like the Z3 SMT solver or the Lean4 proof assistant), critical components or rules can be proven to adhere to safety or logical specifications. For example, the fallback rules activated during critical failures in a medical diagnostic AI could be formally verified to ensure they always lead to a known safe state, significantly bolstering confidence beyond empirical testing alone and supporting robustness goals.
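
As a toy illustration of what such a proof can look like, the Lean 4 fragment below models a four-mode system and proves that a fallback rule always lands in a safe mode. The modes, the `fallback` rule, and the `isSafe` predicate are invented for this sketch and are far simpler than anything a real diagnostic system would need.

```lean
/-- Hypothetical operating modes of a diagnostic system. -/
inductive Mode where
  | nominal
  | degraded
  | failed
  | safeHalt

/-- Hypothetical fallback rule applied when a critical check fails. -/
def fallback : Mode → Mode
  | .nominal  => .nominal
  | .degraded => .safeHalt
  | .failed   => .safeHalt
  | .safeHalt => .safeHalt

/-- Modes assumed to count as "known safe" in this sketch. -/
def isSafe : Mode → Bool
  | .nominal  => true
  | .safeHalt => true
  | _         => false

/-- From every mode, the fallback rule yields a safe mode. -/
theorem fallback_safe (m : Mode) : isSafe (fallback m) = true := by
  cases m <;> rfl

/-- Applying the fallback twice changes nothing further. -/
theorem fallback_idempotent (m : Mode) : fallback (fallback m) = fallback m := by
  cases m <;> rfl
```

The proof works by exhaustive case analysis, which is only feasible here because the state space is tiny. The point is that the guarantee covers every mode by construction, rather than only the cases a test suite happened to exercise.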

5. Bridging Policy and Practice: An Implementation Pathway

High-level principles for trustworthy AI, as articulated in frameworks like the EU AI Act or OECD Principles, are essential starting points. However, the critical challenge lies in translating these principles into tangible technical reality — building systems where compliance is verifiable and governance is operational. Architectural blueprints like G-CCACS offer a vital link, demonstrating how abstract goals like accountability, transparency, and ethical alignment can be realized through concrete design patterns, specific mechanisms, and measurable indicators.

Moving from a conceptual architecture like G-CCACS towards practical implementation and regulatory acceptance requires a structured, collaborative pathway. While specific timelines depend heavily on context and resources, a potential phased approach could involve:

- Phase 1: Standardization & Benchmarking: The immediate step involves developing shared technical standards based on core architectural concepts. This could include standardizing formats for immutable audit logs (like the EpistemicState object format), defining robust methods for calculating and interpreting context stability metrics (like SURD), and creating benchmarks to evaluate the effectiveness of causal validation pipelines (CFS-based grading) and ethical escalation protocols (EEP effectiveness). Such standards provide a common language for developers, auditors, and regulators.

- Phase 2: Pilot Implementations & Tooling: The next phase would focus on building reference implementations of G-CCACS-inspired architectures within controlled environments, such as regulatory sandboxes or specific industry pilot projects (e.g., in healthcare diagnostics or legal analysis support). This allows for iterative refinement and learning. Crucially, this phase requires developing and validating the necessary tools for auditing (e.g., automated analysis of EpistemicState logs) and verification (e.g., user-friendly interfaces for formal methods outputs).

- Phase 3: Conformity Assessment & Certification: Based on insights from standardization and piloting, methodologies for conformity assessment can be defined. This involves specifying how regulators or independent bodies can assess compliance based on the architectural evidence generated by the system itself — the logs, metrics, and verification certificates inherent to a G-CCACS-like design. This could eventually lead to exploring certification frameworks recognizing systems built on verifiably trustworthy architectural foundations.

Furthermore, the metrics embedded within G-CCACS offer intriguing possibilities for ongoing governance and adaptive regulation. Indicators like the Systemic Unfolding & Recomposition Drift (SURD) or the Formalization Debt Ratio (FDR), which tracks reliance on unverified knowledge, are monitored continuously by the system’s GOVERNANCE layer and could potentially serve as quantifiable indicators for regulators. Instead of relying solely on static pre-deployment checks, regulations could incorporate requirements for maintaining stability (acceptable SURD levels) or rigor (maximum allowable FDR) during operation, enabling a more dynamic approach to AI oversight.
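
A runtime check of this kind could look roughly like the Python sketch below. The FDR formula used here (unverified beliefs divided by all beliefs), the limit values, and the names `GovernanceSnapshot` and `check_limits` are assumptions; the article describes FDR only as tracking reliance on unverified knowledge and sets no numeric limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GovernanceSnapshot:
    """Hypothetical point-in-time reading exposed by a GOVERNANCE layer."""
    surd: float              # context instability, assumed in [0, 1]
    verified_beliefs: int    # beliefs backed by formal or validated evidence
    unverified_beliefs: int  # beliefs still relying on unverified knowledge

    @property
    def fdr(self) -> float:
        """Assumed definition: share of active beliefs that are unverified."""
        total = self.verified_beliefs + self.unverified_beliefs
        return self.unverified_beliefs / total if total else 0.0


def check_limits(s: GovernanceSnapshot,
                 max_surd: float = 0.4,
                 max_fdr: float = 0.25) -> list:
    """Return a human-readable list of breached limits (empty if compliant)."""
    breaches = []
    if s.surd > max_surd:
        breaches.append(f"SURD {s.surd:.2f} exceeds limit {max_surd:.2f}")
    if s.fdr > max_fdr:
        breaches.append(f"FDR {s.fdr:.2f} exceeds limit {max_fdr:.2f}")
    return breaches
```

A regulator-facing report could then be generated by running `check_limits` on each periodic snapshot and logging any non-empty result, with the limits themselves set by the applicable rules rather than by the system operator.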

This phased pathway underscores that bridging policy and practice requires coordinated effort across standardization bodies, research institutions, industry consortia, and regulatory agencies. Architectural frameworks provide the common ground upon which this essential collaboration can build. However, architectures like G-CCACS are not standalone solutions for data protection or cybersecurity. They offer foundational support: robust audit trails and fine-grained access controls managed via the GOVERNANCE layer can support compliance goals in both areas, provided the system is deployed within a secure environment and under appropriate organizational data handling policies. Full compliance necessitates integrating G-CCACS within broader organizational practices and complementing it with dedicated technical layers for specific needs like end-to-end encryption, advanced network security protocols, specific data anonymization techniques, and comprehensive vulnerability management, which fall outside the direct scope of this cognitive architecture.

6. Conclusion: A Collaborative Call to Architect Trust

The journey toward Artificial Intelligence that benefits humanity safely and ethically requires more than incremental improvements; it demands a fundamental commitment to trustworthiness, built into the very architecture of these powerful systems. Superficial checks and balances are insufficient when navigating the complexities of healthcare, law, and public policy. We must engineer AI systems where transparency, ethical constraints, and rigorous validation are operational realities, not mere aspirations. The G-CCACS framework, detailed in this exploration, offers one possible, comprehensive conceptual blueprint for how this architectural integration might be achieved — demonstrating pathways to embed governance deeply within AI’s cognitive processes.

However, it is essential to reiterate that G-CCACS is currently a detailed concept — a reference architecture awaiting the crucial stages of implementation, empirical validation, and adaptation. Its realization is not a solitary task but demands a broad, sustained, multi-disciplinary collaboration. We need policymakers and regulators working alongside standards bodies; AI and machine learning researchers engaging with cognitive scientists and formal methods experts; industry practitioners partnering with ethicists; and domain specialists providing critical context from fields like medicine, law, and social science.

On a personal note, as the originator of this framework, I have explored these concepts as far as my individual capacity currently allows. Due to ongoing health reasons requiring me to step back from such intensive theoretical work for the time being, I now respectfully pass the baton. It is my sincere hope that this detailed conceptual work provides a useful foundation, sparking further research, development, and critical discussion within the diverse community dedicated to trustworthy AI — perhaps within standardization bodies, university research labs focused on AI safety and ethics, or public-private governance initiatives.

The path forward involves concrete, collaborative steps. As a collective community, we could focus on:

- Forming working groups to develop standardized formats for core mechanisms like EpistemicState logs and common methodologies for key metrics (CFS, SURD, FDR).

- Establishing pilot projects, potentially within regulatory sandboxes, to test the feasibility and effectiveness of components like the Ethical Escalation Protocol (EEP) in specific domains.

- Collaborating on open-source tools for auditing G-CCACS-inspired systems and for developing/validating the ethical ontologies needed by the NormKernel.

Building AI that is not only intelligent but demonstrably trustworthy and ethically aligned is one of the defining challenges of our time. It requires foresight, diligence, and a shared commitment to designing these systems responsibly from the ground up. The potential exists; the architecture matters profoundly. Let us embrace the challenge and work together to build an AI future worthy of society’s trust.

[1] References to specific frameworks like the EU AI Act reflect ongoing legislative and standards development processes. Final legal text, article numbering, interpretations, and specific recommendations within all mentioned frameworks (OECD, NIST, IEEE) may evolve over time.