Arguments for utilitarianism are impossibility arguments under unbounded prospects

By Michael St Jules 🔸 @ 2023-10-07T21:09 (+40)

Summary

Most moral impact estimates and cost-effectiveness analyses in the effective altruism community use (differences in) expected total welfare. However, doing so generally is probably irrational, based on arguments related to St Petersburg game-like prospects. These are prospects that are strictly better than each of their infinitely many possible but finite actual value outcomes, with unbounded but finite value across these outcomes. The arguments I consider here are:

- Based on a money pump argument, the kind used to defend the axioms of expected utility theory, maximizing the expected value of an unbounded utility function is irrational. As a special case, expectational total utilitarianism, i.e. maximizing the expected value of total welfare, is also irrational.

- Two recent impossibility theorems demonstrate the incompatibility of Stochastic Dominance — a widely accepted requirement for instrumental rationality — with Impartiality and each of Anteriority and Separability. These last three principles are extensions of standard assumptions used in theorems to prove utilitarianism, e.g. Anteriority in Harsanyi's theorem.

Taken together, utilitarianism is either irrational or the kinds of arguments used to support it in fact undermine it instead when generalized. However, this doesn't give us any positive arguments for any other specific views.

I conclude with a discussion of responses.

EDIT: I've rewritten the summary and the title, and made various other edits for clarity and to better motivate. The original title of this post was "Utilitarianism is irrational or self-undermining".

Basic terminology

By utilitarianism, I include basically all views that are impartial and additive in deterministic fixed finite population cases. Some such views may not be vulnerable to all of the objections here, but they apply to most such views I’ve come across, including total utilitarianism. These problems also apply to non-consequentialists using utilitarian axiologies.

To avoid confusion, I prefer the term welfare as what your moral/social/impersonal preferences and therefore what your utility function should take into account.[1] In other words, your utility function can be a function of individuals’ welfare levels.

A prospect is a probability distribution over outcomes, e.g. over heads or tails from a coin toss, over possible futures, etc.

Motivation and outline

Many people in the effective altruism and rationality communities seem to be expectational total utilitarians or give substantial weight to expectational total utilitarianism. They take their utility function to just be total welfare across space and time, and so aim to maximize the expected value of total welfare (total individual utility), E[∑_{i=1}^{N} u_i]. Whether or not committed to expectational total utilitarianism, many in these communities also argue based on explicit estimates of differences in expected total welfare. Almost all impact and cost-effectiveness estimation in the communities is also done this way. These arguments and estimation procedures agree with and use expected total welfare, but if there are problems with expectational total utilitarianism in general, then there’s a problem with the argument form and we should worry about specific judgements using it.

And there are problems.

Total welfare, and differences in total welfare between prospects, may be unbounded, even if it were definitely finite. We shouldn't be 100% certain of any specified upper bound on how long our actions will affect value in the future, or even for how long a moral patient can exist and aggregate welfare over their existence. By this, I mean that you can't propose some finite number K such that your impact must, with 100% probability, be at most K. K doesn't have to be a tight upper bound. Here are some arguments for this:

- Given any proposed maximum value, we can always ask: isn't there at least some chance it could go on for 1 second more? Even if extremely tiny. By induction, we'll have to go past any K.[2]

- How would you justify your choice of K and 100% certainty in it? (Feel free to try, and I can try to poke holes in the argument.)

- You shouldn't be 100% certain of anything, except maybe logical necessities and/or some exceptions with continuous distributions (Cromwell's rule).

- If you grant any weight to the views of those who aren't 100% sure of any specific finite upper bound, then you also shouldn't be 100% sure of any, either. If you don't grant any weight to them, then this is objectionably epistemically arrogant. For a defense of epistemic modesty, see Lewis, 2017.

- There are some specific possibilities allowing this that aren't ruled out by models of the universe consistent with current observations, like creating more universes, from which other universes can be created, and so on (Tomasik, 2017).

We could also have no sure upper bound on the spatial size of the universe or the number of moral patients around now.[3] Now, you might say you can just ignore everything far enough away, because you won't affect it. If your decisions don't depend on what's far enough away and unaffected by your actions, then this means, by definition, satisfying a principle of Separability. But then you're forced to give up impartiality or one of the least controversial proposed requirements of rationality, Stochastic Dominance. I'll state and illustrate these definitions and restate the result later, in the section Anti-utilitarian theorems.

This post is concerned with the implications of prospects with infinitely many possible outcomes and unbounded but finite value, not actual infinities, infinite populations or infinite ethics generally. The problems arise due to St Petersburg-like prospects or heavy-tailed distributions (and generalizations[4]): prospects with infinitely many possible outcomes, infinite (or undefined) expected utility, but finite utility in each possible outcome. The requirements of rationality should apply to choices involving such possibilities, even if remote.

The papers I focus on are:

- Jeffrey Sanford Russell, and Yoaav Isaacs. “Infinite Prospects*.” Philosophy and Phenomenological Research, vol. 103, no. 1, Wiley, July 2020, pp. 178–98, https://doi.org/10.1111/phpr.12704, https://philarchive.org/rec/RUSINP-2

- Goodsell, Zachary. “A St Petersburg Paradox for Risky Welfare Aggregation.” Analysis, vol. 81, no. 3, Oxford University Press, May 2021, pp. 420–26, https://doi.org/10.1093/analys/anaa079, https://philpapers.org/rec/GOOASP-2

- Jeffrey Sanford Russell. “On Two Arguments for Fanaticism.” Noûs, Wiley-Blackwell, June 2023, https://doi.org/10.1111/nous.12461, https://philpapers.org/rec/RUSOTA-2, https://globalprioritiesinstitute.org/on-two-arguments-for-fanaticism-jeff-sanford-russell-university-of-southern-california/

Respectively, they:

1. Argue that unbounded utility functions (and generalizations) are irrational (or at least as irrational as violating Independence or the Sure-Thing Principle, crucial principles for expected utility theory).

2. Prove that Stochastic Dominance, Impartiality and Anteriority are jointly inconsistent.

3. Prove that Stochastic Dominance, Compensation (which implies Impartiality) and Separability are jointly inconsistent.

Again, respecting Stochastic Dominance is among the least controversial proposed requirements of instrumental rationality. Impartiality, Anteriority and Separability are principles (or similarly motivated extensions thereof) used to support and even prove utilitarianism.

I will explain what these results mean, including a money pump for 1 in the correspondingly named section, and definitions, motivation and background for the other two in the section Anti-utilitarian theorems. I won't include proofs for 2 or 3; see the papers instead. Along the way, I will argue based on them that all (or most standard) forms of utilitarianism are irrational, or the standard arguments used in defense of principles in support of utilitarianism actually extend to principles that undermine utilitarianism. Then, in the last section, Responses, I consider some responses and respond to them.

Unbounded utility functions are irrational

Expected utility maximization with an unbounded utility function is probably (instrumentally) irrational, because it recommends, in some hypothetical scenarios, choices leading to apparently irrational behaviour. This includes foreseeable sure losses (a money pump) and paying to avoid information, among others, which follow from the violation of extensions of the Independence axiom[5] and Sure-Thing Principle[6] (Russell and Isaacs, 2021, p.3-5).[7] The issue comes from St Petersburg game-like prospects: prospects with infinitely many possible outcomes, each of finite utility, but with overall infinite (or undefined) expected utility, as well as generalizations of such prospects.[4] Such a prospect is, counterintuitively, better than each of its possible outcomes.[8]

The original St Petersburg game is a prospect that with probability 1/2^n gives you $2^n, for each positive integer n (Peterson, 2023). The expected payout from this game is infinite,[9] even though each possible outcome is finite. But it's not money we care about in itself.
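To make the divergence concrete, here is a minimal Python sketch of the game as just described. The function names are hypothetical and chosen only for illustration. It computes the expected payout when only the first k outcomes are counted: each outcome contributes exactly $1, so the truncated expectation equals k and grows without bound, even though the probability not yet covered shrinks towards zero.

```python
from fractions import Fraction


def truncated_expected_payout(max_rounds: int) -> Fraction:
    """Expected payout counting only outcomes n = 1..max_rounds.

    Outcome n pays 2**n dollars with probability 1/2**n, so each term is 1.
    """
    return sum(Fraction(1, 2**n) * 2**n for n in range(1, max_rounds + 1))


def probability_covered(max_rounds: int) -> Fraction:
    """Total probability of the outcomes n = 1..max_rounds."""
    return sum(Fraction(1, 2**n) for n in range(1, max_rounds + 1))


if __name__ == "__main__":
    for k in (1, 10, 100):
        # The truncated expectation is k, while the uncovered probability is 1/2**k.
        print(k, truncated_expected_payout(k), 1 - probability_covered(k))
```

Exact fractions are used instead of floats so that the partial sums are not blurred by rounding.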

Suppose you have an unbounded real-valued utility function u.[4] Then it’s unbounded above or below. Assume it’s unbounded above, as a symmetric argument applies if it’s only unbounded below. Then, being unbounded above implies that it takes some utility value u(x) > 0, and for each utility value u(x) > 0, there’s some outcome x′ such that u(x′) ≥ 2u(x). Then we can construct a countable sequence of outcomes, x_1, x_2, …, x_n, …, with u(x_{n+1}) ≥ 2u(x_n) for each n ≥ 1, as follows:

- Choose an outcome x_1 such that u(x_1) > 0.

- Choose an outcome x_2 such that u(x_2) ≥ 2u(x_1).

- …

- Choose an outcome x_{n+1} such that u(x_{n+1}) ≥ 2u(x_n).

- …

Define a prospect X as follows: with probability 1/2^n, X = x_n. Then, E[u(X)] = ∞,[10] and X is better than any prospect with finite expected utility.[11]
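For readers who want the divergence spelled out, the following is a short worked derivation. It uses only the doubling condition from the construction above, with the sequence written as x_1, x_2, … and the probability of x_n being 1/2^n.

```latex
% Doubling at each step gives a geometric lower bound on the utilities:
u(x_n) \;\ge\; 2\,u(x_{n-1}) \;\ge\; \dots \;\ge\; 2^{\,n-1}\,u(x_1), \qquad u(x_1) > 0 .

% Each term of the expectation is therefore at least u(x_1)/2:
\mathbb{E}[u(X)]
  \;=\; \sum_{n=1}^{\infty} \frac{1}{2^{n}}\,u(x_n)
  \;\ge\; \sum_{n=1}^{\infty} \frac{1}{2^{n}}\,2^{\,n-1}\,u(x_1)
  \;=\; \sum_{n=1}^{\infty} \frac{u(x_1)}{2}
  \;=\; \infty .
```

Every term in the sum is positive, so the series cannot be rearranged into a finite value.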

St Petersburg game-like prospects lead to violations of generalizations of the Independence axiom and the Sure-Thing Principle to prospects over infinitely (countably) many possible outcomes (Russell and Isaacs, 2021).[12] The corresponding standard finitary versions are foundational principles used to establish expected utility representations of preferences in the von Neumann-Morgenstern utility theorem (von Neumann and Morgenstern, 1944) and Savage’s theorem (Savage, 1972), respectively. The arguments for the countable generalizations are essentially the same as those for the standard finitary versions (Russell and Isaacs, 2021), and in the following subsection, I will illustrate one: a money pump argument. So, if money pumps establish the irrationality of violations of the standard finitary Sure-Thing Principle, they should too for the countable version. Then maximizing the expected value of an unbounded utility function is irrational.

A money pump argument

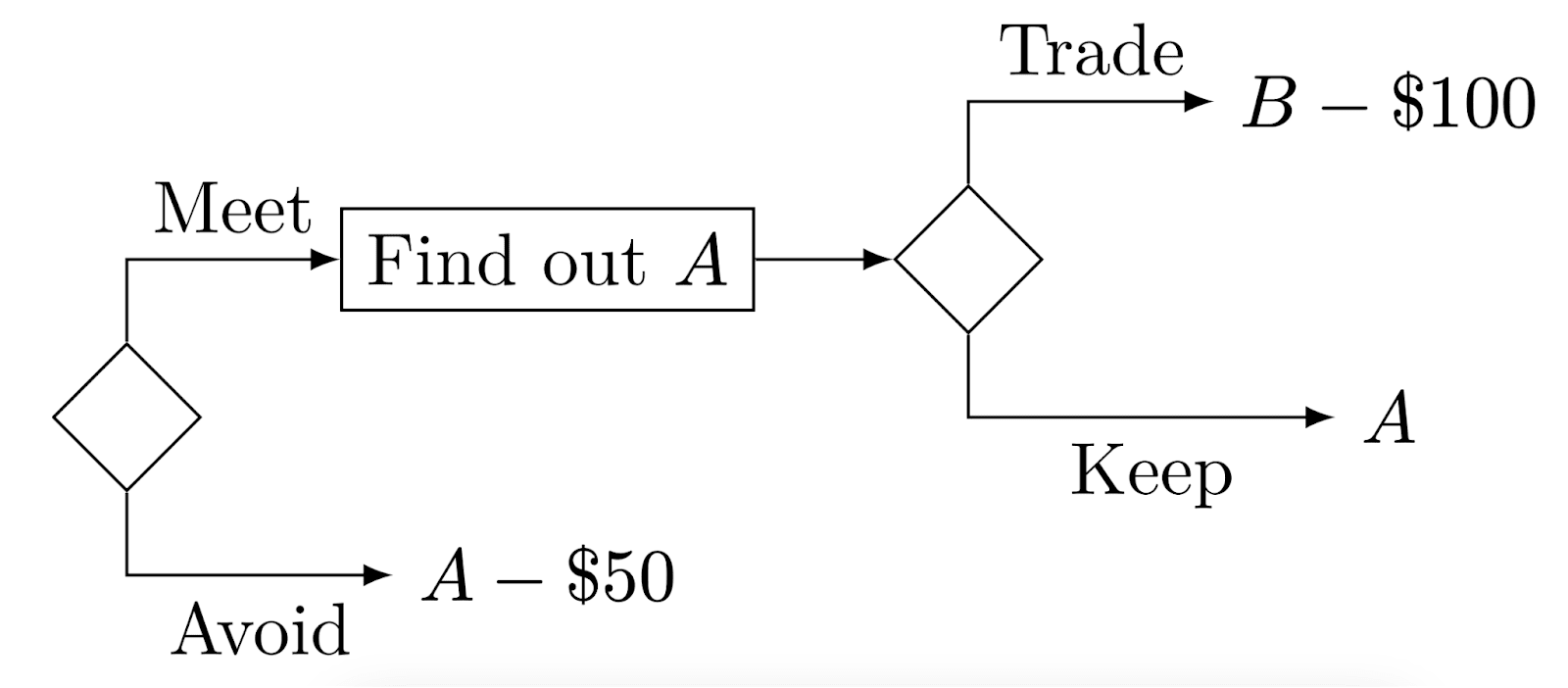

Consider the following hypothetical situation, adapted from Russell and Isaacs, 2021, but with a genie instead. It’s the same kind of money pump that would be used in support of the Sure-Thing Principle, and structurally nearly identical to the one used to defend Independence in Gustafsson, 2022.

You are facing a prospect A with infinite expected utility, but finite utility no matter what actually happens. Maybe A is your own future and you value your years of life linearly, and could live arbitrarily but finitely long, and so long under some possibilities that your life expectancy and corresponding expected utility is infinite. Or, you're an expectational total utilitarian, and thinking about the value in distant parts of the universe (or multiverse), with infinite expected value but almost certainly finite.[13]

Now, there’s an honest and accurate genie — or God or whoever’s simulating our world or an AI with extremely advanced predictive capabilities — that offers to tell you exactly how A will turn out.[14] Talking to them and finding out won’t affect A or its utility, they’ll just tell you what you’ll get. The genie will pester you unless you listen or you pay them $50 to go away. Since there’s no harm in finding out, and no matter what happens, being an extra $50 poorer is worse, because that $50 could be used for ice cream or bed nets,[15] you conclude it's better to find out.

However, once you do find out, the result is, as you were certain it would be, finite. The genie turns out to be very powerful, too, and feeling generous, offers you the option to metaphorically reroll the dice. You can trade the outcome of A for a new prospect B with the same distribution as you had for A from before you found out, but statistically independent from the outcome of A. B would have been equivalent, because the distributions would have been the same, but B now looks better because the outcome of A is only finite. But, you’d have to pay the genie $100 for B. Still, $100 isn’t enough to drop the expected utility into the finite, and this infinite expected utility is much better than the finite utility outcome of A. You could refuse, but it's a worthwhile trade to make, so you do it.

But then you step back and consider what you've just done. If you hadn't found out the value of A, you would have stuck with it, since A was better than B - $100 ahead of time: A was equivalent to a prospect, the prospect B, that's certainly better than B - $100. You would have traded the outcome of A away for B - $100 no matter what the outcome of A would be, even though A was better ahead of time than B - $100. It was equivalent to B, and B - $100 is strictly worse, because it's the same but $100 poorer no matter what.

Not only that, if you hadn't found out the value of A, you would have no reason to pay for B. Even A - $50 would have been better than B - $100. Ahead of time, if you knew what the genie was going to do, but not the value of A, ending up with B - $100 would be worse than each of A and A - $50.

Suppose you're back at the start before knowing A and with the genie pestering you to hear how it will turn out. Suppose you also know ahead of time that the genie will offer you B for $100 no matter the outcome of A, but you don't yet know how A will turn out. Predicting what you'd do to respect your own preferences, you reason that if you find out A, no matter what it is, you'd pay $100 for B. In other words, accepting the genie's offer to find out A actually means ending up with B - $100 no matter what. So, really, accepting to find out A from the genie just is B - $100. But B - $100 is also worse than A - $50 (you're guaranteed to be $50 poorer than with B - $50, which is equivalent to A - $50). It would have been better to pay the genie $50 to go away without telling you how A will go.

So this time, you pay the genie $50 to go away, to avoid finding out true information and making a foreseeably worse decision based on it. And now you're out $50, and definitely worse off than if you could have stuck through with A, finding out its value and refusing to pay $100 to switch to B. And you had the option to stick with A through the whole sequence and could have, if only you wouldn't trade it away for B at a cost of $100.

So, whatever strategy you follow, if constrained within the options I described, you will act irrationally. Specifically, either

- With nonzero probability, you will refuse to follow your own preferences when offered B - $100 after finding out A, which would be irrational then (Gustafsson, 2022 and Russell and Isaacs, 2021 argue similarly against resolute choice strategies). Or,

- You pay the genie $50 at the start, leaving you with a prospect that’s certainly worse than one you could have ended up with, i.e. A without paying, and so irrational. This also looks like paying $50 to not find out A.

You're forced to act irrationally either way.
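The two halves of the money pump can be checked numerically in a toy version. The sketch below makes assumptions that are not in the argument itself: A and B are each taken to be the original St Petersburg game (payout 2^n with probability 1/2^n), utility is treated as linear in dollars, and all function names are hypothetical. Under those assumptions it shows that (i) after learning any finite outcome a of A, a lower bound on the expected value of B - $100 already exceeds a, and (ii) ahead of time, A - $50 strictly stochastically dominates B - $100.

```python
from fractions import Fraction


def survival(threshold: int, cost: int) -> Fraction:
    """P(payout - cost >= threshold), where payout = 2**n with probability 1/2**n."""
    needed = threshold + cost  # we need 2**n >= needed
    n = 1
    while 2**n < needed:
        n += 1
    # P(outcome n or later) = 1/2**n + 1/2**(n+1) + ... = 1/2**(n-1)
    return Fraction(1, 2 ** (n - 1))


def lower_bound_b_minus_100(rounds: int) -> Fraction:
    """Lower bound on E[B - 100], counting only the first `rounds` outcomes of B.

    The omitted outcomes all have positive payout, so dropping them can only
    lower the sum.
    """
    return sum(Fraction(1, 2**n) * 2**n for n in range(1, rounds + 1)) - 100


def switching_looks_better_ex_post(a: int) -> bool:
    """After seeing A = a, is there a truncation of B - 100 whose value exceeds a?"""
    return lower_bound_b_minus_100(a + 101) > a


def a_minus_50_dominates_b_minus_100(thresholds) -> bool:
    """Ex ante check: A - 50 is at least as likely as B - 100 to clear every threshold,
    and strictly more likely to clear at least one."""
    thresholds = list(thresholds)
    weak = all(survival(t, 50) >= survival(t, 100) for t in thresholds)
    strict = any(survival(t, 50) > survival(t, 100) for t in thresholds)
    return weak and strict


if __name__ == "__main__":
    print(all(switching_looks_better_ex_post(2**n) for n in range(1, 11)))
    print(a_minus_50_dominates_b_minus_100(range(-100, 5000)))
```

The dominance check only scans a finite grid of thresholds. For this particular distribution the inequality holds at every threshold, because lowering the cost can only make a threshold easier to clear.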

Anti-utilitarian theorems

Harsanyi, 1955 proved that our social (or moral or impersonal) preferences over prospects should be to maximize the expected value of a weighted sum of individual utilities in fixed population cases, assuming our social preferences and each individual’s preferences (or betterness) satisfy the standard axioms of expected utility theory and assuming our social preferences satisfy Ex Ante Pareto. Ex Ante Pareto is defined as follows: if between two options, A and B, everyone is at least as well off ex ante with A as with B (i.e. A is at least as good as B for each individual), then A ⪰ B according to our social preferences. Under these assumptions, according to the theorem, each individual in the fixed population has a utility function, u_i, and our social preferences over prospects for each fixed population can be represented by the expected value of a utility function, this function equal to a linear combination of these individual utility functions, ∑_{i=1}^{N} a_i u_i. In other words,

A ⪰ B if and only if E[∑_{i=1}^{N} a_i u_i(A)] ≥ E[∑_{i=1}^{N} a_i u_i(B)].
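As an illustration of this representation in the simplest setting, here is a minimal Python sketch for prospects with finitely many outcomes. The data layout (a list of probability and utility-vector pairs) and the function names are assumptions made for the example, not anything taken from Harsanyi's paper.

```python
def expected_social_utility(prospect, weights):
    """Expected value of the weighted sum of individual utilities.

    `prospect` is a list of (probability, [u_1, ..., u_N]) pairs, one per outcome.
    `weights` is the list [a_1, ..., a_N].
    """
    return sum(
        p * sum(a * u for a, u in zip(weights, utilities))
        for p, utilities in prospect
    )


def weakly_preferred(prospect_a, prospect_b, weights):
    """A is at least as good as B iff its expected weighted sum is at least as large."""
    return expected_social_utility(prospect_a, weights) >= expected_social_utility(
        prospect_b, weights
    )


if __name__ == "__main__":
    # Two people. A is a fair coin flip over who gets 10; B gives both 4 for sure.
    A = [(0.5, [10, 0]), (0.5, [0, 10])]
    B = [(1.0, [4, 4])]
    equal_weights = [1, 1]
    print(expected_social_utility(A, equal_weights))
    print(expected_social_utility(B, equal_weights))
    print(weakly_preferred(A, B, equal_weights))
```

With equal weights this is expectational total utilitarianism for a fixed two-person population. The representation ranks A above B even though A is less equal in every outcome.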

Now, if each individual’s utility function in a fixed finite population is bounded, then our social welfare function for that population, from Harsanyi’s theorem, would also be bounded. One might expect the combination of total utilitarianism and Harsanyi’s theorem to support expectational total utilitarianism.[16] However, either the axioms themselves (e.g. the continuity/Archimedean axiom, or general versions of Independence or the Sure-Thing Principle) rule out expectational total utilitarianism, or the kinds of arguments used to defend the axioms do (Russell and Isaacs, 2021). For example, essentially the same money pump argument, as we just saw, can be made against it. So, in fact, rather than supporting total utilitarianism, the arguments supporting the axioms of Harsanyi’s theorem refute total utilitarianism.

Perhaps you’re unconvinced by money pump arguments (e.g. Halstead, 2015) or expected utility theory in general. Harsanyi’s theorem has since been generalized in multiple ways. Recent results, without relying on the Independence axiom or Sure-Thing Principle at all, effectively obtain expectational utilitarianism in finite population cases or views including it as a special case, and with some further assumptions, expectational total utilitarianism specifically (McCarthy et al., 2020, sections 4.3 and 5 of Thomas, 2022, Gustafsson et al., 2023). They therefore don’t depend on support from money pump arguments either. In deterministic finite population cases and principles constrained to those cases, arguments based on Separability have also been used to support utilitarianism or otherwise additive social welfare functions (e.g. Theorem 3 of Blackorby et al., 2002 and section 5 of Thomas, 2022). So, there are independent arguments for utilitarianism, other than Harsanyi's original theorem.

However, recent impossibility results undermine them all, too. Given a preorder over prospects[17]:

- Goodsell, 2021 shows Stochastic Dominance, Anteriority and Impartiality are jointly inconsistent. This follows from certain St Petersburg game-like prospects over the population size but constant welfare levels. It also requires an additional weak assumption that most impartial axiologies I’ve come across satisfy[18]: there's some finite population of equal welfare such that adding two more people with the same welfare is either strictly better or strictly worse. For example, if everyone has a hellish life, adding two more people with equally hellish lives should make things worse.

- Russell, 2023 (Theorem 4) shows “Stochastic Dominance, Separability, and Compensation are jointly inconsistent”. As a corollary, Stochastic Dominance, Separability and Impartiality are jointly inconsistent, because Impartiality implies Compensation.

Russell, 2023 has some other impossibility results of interest, but I’ll focus on Theorem 4. I will define and motivate the remaining conditions here. See the papers for the proofs, which are short but technical.

Stochastic Dominance is generally considered to be a requirement of instrumental rationality, and it is a combination of two fairly obvious principles, Stochastic Equivalence and Statewise Dominance (e.g. Tarsney, 2020, Russell, 2023[19]). Stochastic Equivalence requires us to treat two prospects as equivalent if for each set of outcomes, the two prospects are equally likely to have their outcome in that set, and we call such prospects stochastically equivalent. For example, if I win $10 if a coin lands heads, and lose $10 if it lands tails, that should be equivalent to me to winning $10 on tails and losing $10 on heads, with a perfectly 50-50 coin. It shouldn’t matter how the probabilities are arranged, as long as each outcome occurs with the same probability. Statewise Dominance requires us to treat a prospect A as at least as good as B if A is at least as good as B with probability 1, and we’d say A statewise dominates B in that case.[20] It further requires us to treat A as strictly better than B, if on top of being at least as good as B with probability 1, A is strictly better than B with some positive probability, and in this case A strictly statewise dominates B. Informally, A statewise dominates B if A is always at least as good as B, and A strictly statewise dominates B if on top of that, A can also be better than B.

If instrumental rationality requires anything at all, it’s hard to deny that it requires respecting Stochastic Equivalence and Statewise Dominance. And, you respect Stochastic Dominance if and only if you respect both Stochastic Equivalence and Statewise Dominance, assuming transitivity. We’ll say A stochastically dominates B if there are prospects A′ and B′ to which A and B are respectively stochastically equivalent and such that A′ statewise dominates B′ (we can in general take A′=A or B′=B, but not both), and A strictly stochastically dominates B if there are such A′ and B′ such that A′ strictly statewise dominates B′.
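For prospects with finitely many outcomes, these definitions can be checked mechanically. The sketch below assumes each outcome is summarized by a single real number, so that "at least as good" just means ≥. In that special case, A stochastically dominates B exactly when A is at least as likely as B to reach every threshold. The representation and function names are hypothetical.

```python
from fractions import Fraction


def survival(prospect, threshold):
    """P(value >= threshold) for a prospect given as a list of (probability, value)."""
    return sum(p for p, v in prospect if v >= threshold)


def stochastically_dominates(a, b):
    """True if a is at least as likely as b to reach every threshold.

    The survival functions only change at values in the supports, so checking
    those values is enough for finite prospects.
    """
    thresholds = {v for _, v in a} | {v for _, v in b}
    return all(survival(a, t) >= survival(b, t) for t in thresholds)


def strictly_stochastically_dominates(a, b):
    """a dominates b, but b does not dominate a."""
    return stochastically_dominates(a, b) and not stochastically_dominates(b, a)


if __name__ == "__main__":
    half = Fraction(1, 2)
    win_on_heads = [(half, 10), (half, -10)]  # listed as (heads, tails)
    win_on_tails = [(half, -10), (half, 10)]  # same distribution, rearranged
    slightly_better = [(half, 11), (half, -10)]

    # Stochastically equivalent prospects dominate each other, but not strictly.
    print(stochastically_dominates(win_on_heads, win_on_tails))
    print(strictly_stochastically_dominates(win_on_heads, win_on_tails))
    # Improving one outcome yields strict dominance.
    print(strictly_stochastically_dominates(slightly_better, win_on_heads))
```

The coin example from the text appears as `win_on_heads` and `win_on_tails`: only the arrangement of the probabilities differs, so each dominates the other.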

Impartiality can be stated in multiple equivalent ways for outcomes (deterministic cases) in finite populations:

- only the distribution of welfares (the number of individuals at each welfare level, or lifetime welfare profile) in a population matters, not who realizes them or where or when they are realized, or

- we can replace an individual in any outcome with another individual at the same welfare level (or lifetime welfare profile), and the two outcomes will be equivalent.

Compensation is roughly the principle “that we can always compensate somehow for making things worse nearby, by making things sufficiently better far away (and vice versa)” (Russell, 2023). It is satisfied pretty generally by theories that are impartial in deterministic finite cases, including total utilitarianism, average utilitarianism, variable value theories, prioritarianism, critical-level utilitarianism, egalitarianism and even person-affecting versions of any of these views. In particular, theoretically “moving” everyone to nearby or “moving” everyone to far away without changing their welfare levels suffices.

Anteriority is a weaker version of Ex Ante Pareto: our social preferences are indifferent between two prospects whenever each individual is indifferent. The version Goodsell, 2021 uses, however, is stronger than typical statements of Anteriority and requires its application across different number cases:

If each possible person is equally likely to exist in either of two prospects, and for each welfare level, each person is, conditional on their existence, equally likely to have a life at least that good on either prospect, then those prospects are equally good overall.

This version is satisfied by expectational total utilitarianism, at least when the sizes of the populations in the prospects being compared are bounded by some finite number.

Separability is roughly the condition that parts of the world unaffected in a choice between two prospects can be ignored for ranking those prospects. What’s better or permissible shouldn’t depend on how things went or go for those unaffected by the decision.[21] Or, following Russell, 2023, what we should do that only affects what’s happening nearby (in time and space) shouldn’t depend on what’s happening far away. In particular, in support of Separability and initially raised against average utilitarianism, there’s the Egyptology objection: the study of ancient Egypt and the welfare of ancient Egyptians “cannot be relevant to our decision whether to have children” (Parfit 1984, p. 420).[22]

Separability can be defined as follows: for all prospects X, Y and B concerning outcomes for entirely separate things from both X and Y,

X⪰Y if and only if X⊕B⪰Y⊕B,

where ⊕ means combining or concatenating the prospects. For example, B could be the welfare of ancient Egyptians, while X and Y are the welfare of people today; the two may not be statistically independent, but they are separate, concerning disjoint sets of people and welfare levels. Average utilitarianism, many variable value theories and versions of egalitarianism are incompatible with Separability.
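The contrast between views that satisfy Separability and views that violate it can be seen in a deterministic toy case. In the sketch below, an outcome is a list of welfare levels, ⊕ is list concatenation, and the particular numbers are invented for illustration. Total welfare ranks X and Y the same way whether or not the unaffected group B is included, while average welfare flips its ranking, which is the Egyptology problem.

```python
from fractions import Fraction


def total(welfares):
    """Total utilitarian value of a deterministic outcome."""
    return sum(welfares)


def average(welfares):
    """Average utilitarian value of a deterministic outcome (exact arithmetic)."""
    return Fraction(sum(welfares), len(welfares))


def respects_separability_here(value, x, y, b):
    """Does `value` rank x vs y the same way with and without the unaffected group b?"""
    return (value(x) >= value(y)) == (value(x + b) >= value(y + b))


if __name__ == "__main__":
    Y = [5, 5]           # people today, no extra child
    X = [5, 5, 3]        # people today plus a child with a good but lower welfare
    B = [1, 1, 1, 1]     # ancient Egyptians, unaffected by the choice

    print(respects_separability_here(total, X, Y, B))
    print(respects_separability_here(average, X, Y, B))
```

Without B, the average view ranks Y above X (5 versus 13/3). With B included, it ranks X above Y (17/7 versus 14/6), so the welfare of the unaffected group changes the verdict.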

Separability is closely related to Anteriority and Ex Ante Pareto. Of course, Harsanyi’s theorem establishes Separability based on Ex Ante Pareto (or Anteriority) and axioms of Expected Utility Theory in fixed finite population cases, but we don’t need all of Expected Utility Theory. Separability, at least in a subset of cases, follows from Anteriority (or Ex Ante Pareto) and some other modest assumptions, e.g. section 4.3 in Thomas, 2022. Conversely, a preorder that satisfies Separability and, in one-person cases, Anteriority or Ex Ante Pareto will also satisfy Anteriority or Ex Ante Pareto, respectively, in fixed finite population cases.

So, based on the two theorems, if we assume Stochastic Dominance and Impartiality,[23] then we can’t have Anteriority (unless it’s not worse to add more people to hell) or Separability. Anteriority and Separability are principles used to support utilitarianism, or at least natural generalizations of them defensible by essentially the same arguments. This substantially undermines all arguments for utilitarianism based on these principles. And my impression is that there aren’t really any other good arguments for utilitarianism, but I welcome readers to point any out!

Summary so far

To summarize the arguments so far (given some basic assumptions):

- Unbounded utility functions and expectational total utilitarianism in particular are irrational because of essentially the same arguments as those used to support expected utility theory in the first place, including money pumps.

- All plausible views either give up an even more basic requirement of rationality, Stochastic Dominance, or one of two other principles — or natural extensions that can be motivated the same way — used to defend utilitarianism, i.e. Impartiality or Anteriority.

- All plausible views either give up Stochastic Dominance, or one of two other principles — or natural extensions that can be motivated the same way — used to defend utilitarianism, i.e. Compensation (and so Impartiality) or Separability.

- Together, it seems like the major arguments for utilitarianism in the first place actually undermine utilitarianism.

Responses

Things look pretty bad for unbounded utility functions and utilitarianism. However, there are multiple responses someone might give in order to defend them, and I consider four here:

1. We only need to satisfy versions of the principles concerned with prospects with only finitely many outcomes, because infinities are too problematic generally.

2. Accept irrational behaviour (at least in some hypotheticals) or deny its irrationality.

3. Accept violating foundational principles for utilitarianism in the general cases, but this only somewhat undermines utilitarianism, as other theories may do even worse.

4. EDIT to add: The results undermine many other views, not just utilitarianism.

To summarize my opinion on these, I think 1 is a bad argument, but 2, 3 and 4 seem defensible, although 2 and 3 accept that expected utility maximization and utilitarianism are at least somewhat undermined, respectively. On 4, I still think utilitarianism takes the bigger hit, but that doesn't mean it's now less plausible than alternatives. I elaborate below.

Infinities are generally too problematic

First, one might claim the generalizations of axioms of expected utility theory, especially Independence or the Sure-Thing Principle, or even Separability, as well as money pumps and Dutch books in general, should count only for prospects over finitely many possible outcomes, given other problems and paradoxes with infinities for decision theory, even expected utility theory with bounded utilities, as discussed in Arntzenius et al., 2004, Peterson, 2016 and Bales, 2021. Expected utility theory with unbounded utilities is consistent with the finitary versions, and some extensions of finitary expected utility theory are also consistent with Stochastic Dominance applied over all prospects, including those with infinitely many possible outcomes (Goodsell, 2023, see also earlier extensions of finitary expected utility to satisfy statewise dominance in Colyvan, 2006, Colyvan, 2008, which can be further extended to satisfy Stochastic Dominance[24]). Stochastic Dominance, Compensation and the finitary version of Separability are also jointly consistent (Russell, 2023). However, I find this argument unpersuasive:

- Plausible and rational decision theories can accommodate infinitely many outcomes, e.g. with bounded utility functions. Not all uses of infinities are problematic for decision theory in general, so the argument from other problems with infinities doesn’t tell us much about these problems. Measure theory and probability theory work fine with these kinds of infinities. The argument proves too much.

- It’s reasonable to consider prospects with infinitely many possible outcomes in practice (e.g. for the “lifetime” of our universe, for sizes of the multiverse, the possibility of continuous spacetime, for the number of moral patients in our multiverse, Russell, 2023), and it’s plausible that all of our prospects have infinitely many possible outcomes, so our decision theory should handle them well. One might claim that we can uniformly bound the number of possible outcomes by a finite number across all prospects. But consider the maximum number across all prospects, and a maximally valuable (or maximally disvaluable) but finite value outcome. We should be able to consider another outcome not among the set. Add a bit more consciousness in a few places, or another universe in the multiverse, or extend the time that can support consciousness a little. So, the space of possibilities is infinite, and it’s reasonable to consider prospects with infinitely many possible outcomes. Furthermore, a probabilistic mixture of any prospect with a heavy-tailed prospect (St Petersburg-like, infinite or undefined expected utility) is heavy-tailed. If you think there's some nonzero chance that it's heavy-tailed, then you should believe now that it's heavy-tailed. If you think there's some nonzero chance that you'd come to believe there's some nonzero chance that it's heavy-tailed, then you should believe now that it's heavy-tailed. You'd need absolute certainty to deny this.

- It’s plausible that if we have an unbounded utility function (or similarly unbounded preferences), we are epistemically required to treat all of our prospects as involving St Petersburg game-like subdistributions, because we can’t justify ruling them out with certainty (see also Cromwell's rule). It would be objectionably dogmatic to rule them out.

- This doesn’t prevent irrational behaviour in theory. If we refuse to rank St Petersburg-like prospects as strictly preferable to each of their outcomes, we give up statewise (and stochastic) dominance or transitivity (see the previous footnote [11]), each of which is irrational. If we don’t (e.g. following Goodsell, 2023), the same arguments that support the finite versions of Independence and the Sure-Thing Principle can be made against the countable versions (e.g. Russell and Isaacs, 2021, the money pump argument earlier). And the Egyptology objection for Separability generalizes, too (as pointed out in Russell, 2023). If those arguments don’t have (much) force in the general cases, then they shouldn’t have (much) force in the finitary cases, because the arguments are the same.
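The claim in the second point above, that mixing any prospect with a heavy-tailed one yields a heavy-tailed prospect, can be spelled out in one line. The derivation below is a sketch under an added assumption: Y is heavy-tailed with infinite expected utility, X is any prospect whose expected utility is not negative infinity, and Z is the mixture that yields Y with probability p > 0 and X otherwise. The symbols X, Y, Z and p are introduced here only for illustration.

```latex
% Law of total expectation applied to the mixture Z:
\mathbb{E}[u(Z)]
  \;=\; p\,\mathbb{E}[u(Y)] \;+\; (1-p)\,\mathbb{E}[u(X)]
  \;=\; p \cdot \infty \;+\; (1-p)\,\mathbb{E}[u(X)]
  \;=\; \infty
  \qquad \text{for every } p > 0 .
```

However small p is, the mixture inherits the infinite expectation. If X instead has expected utility of negative infinity, the expectation of Z is undefined rather than infinite, which still counts as heavy-tailed in the sense used here.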

Accept irrational behaviour or deny its irrationality

A second response is to just bite the bullet and accept apparently irrational behaviour in some (at least hypothetical) circumstances, or deny that it is in fact irrational at all. However, this, too, weakens the strongest arguments for expected utility maximization. The hypothetical situations where irrational decisions would be forced could be unrealistic or very improbable, and so seemingly irrational behaviour in them doesn’t matter, or matters less. The money pump I considered doesn’t seem very realistic, and it’s hard to imagine very realistic versions. Finding out the actual value (or a finite upper bound on it) of a prospect with infinite expected utility conditional on finite actual utility would realistically require an unbounded amount of time and space to even represent. Furthermore, for utility functions that scale relatively continuously with events over space and time, with unbounded time, many of the events contributing utility will have happened, and events that have already happened can’t be traded away. That being said:

- The issues with the money pump argument don't apply to the impossibility theorems for Stochastic Dominance, Impartiality (or Compensation) and Anteriority or Separability. Those are arguments about the right kinds of views to hold. The proofs are finite, and at no point do we need to imagine someone or something with arbitrarily large representations of value (except the outcome being a representation of itself).

- I expect the issue about events already happening to be addressable in principle by just subtracting from B - $100 the value in A already accumulated in the time it took to estimate the actual value of A, assuming this can be done without all of A’s value having already been accumulated.

Still, let's grant that there's something to this, and we don't need to meet these requirements all of the time or at least in all hypotheticals. Then, other considerations, like Separability, can outweigh them. However, if expectational total utilitarianism is still plausible despite irrational behaviour in unrealistic or very improbable situations, then it seems irrational behaviour in unrealistic or very improbable situations shouldn’t count decisively against other theories or other normative intuitions. So, we open up the possibility to decision theories other than expected utility theory. Furthermore, the line for “unrealistic or very improbable” seems subjective, and if we draw a line to make an exception for utilitarianism, there doesn’t seem to be much reason why we shouldn’t draw more permissive lines to make more exceptions.

Indeed, I don’t think instrumental rationality or avoiding money pumps in all hypothetical cases is normatively required, and I weigh them with my other normative intuitions, e.g. epistemic rationality or justifiability (e.g. Schoenfield, 2012 on imprecise credences). I’d of course prefer to be money pumped or violate Stochastic Dominance less. However, a more general perspective is that foreseeably doing worse by your own lights is regrettable, but regrettable only to the extent of your actual losses from it. There are often more important things to worry about than such losses, like situations of asymmetric information, or just doing better by the lights of your other intuitions. Furthermore, having to abandon another principle or reason you find plausible or otherwise change your views just to be instrumentally rational can be seen as another way of foreseeably doing worse by your own lights. I'd rather hypothetically lose than definitely lose.

Sacrifice or weaken utilitarian principles

A third response is of course to just give up or weaken one or more of the principles used to support utilitarianism. We could approximate expectational total utilitarianism with bounded utility functions or just use stochastic dominance over total utility (Tarsney, 2020), even agreeing in all deterministic finite population cases, and possibly “approximately” satisfying these principles in general. We might claim that moral axiology should only be concerned with betterness per se and deterministic cases. On the other hand, risk and uncertainty are the domains of decision theory, instrumental rationality and practical deliberation, just aimed at ensuring we act consistently with our understanding of betterness. What you have most reason to do is whatever maximizes actual total welfare, regardless of your beliefs about what would achieve this. It’s not a matter of rationality that what you should do shouldn’t depend on things unaffected by your decisions even in uncertain cases or that we should aim to maximize each individual’s expected utility. Nor are these matters of axiology, if axiology is only concerned with deterministic cases. So, Separability and Pareto only need to apply in deterministic cases, and we have results that support total utilitarianism in finite deterministic cases based on them, like Theorem 3 of Blackorby et al., 2002 and section 5 of Thomas, 2022.

That the deterministic and finitary prospect versions of these principles are jointly consistent and support (extensions of) (expectational total) utilitarianism could mean arguments defending these principles provide some support for the view, just less than if the full principles were jointly satisfiable. Other views will tend to violate restricted or weaker versions or do so in worse ways, e.g. not just failing to preserve strict inequalities in Separability but actually reversing them. Beckstead and Thomas, 2023 (footnote 19) point to “the particular dramatic violations [of Separability] to which timidity leads.” If we find the arguments for the principles intuitively compelling, then it’s better, all else equal, for our views to be “more consistent” with them than otherwise, i.e. satisfy weaker or restricted versions, even if not perfectly consistent with the general principles. Other views could still just be worse. Don't let the perfect be the enemy of the good, and don't throw the baby out with the bathwater.

It's not just utilitarianism

EDIT: A final response is to point out that these results undermine much more than just utilitarianism. If we give up Anteriority, then we give up Strong Ex Ante Pareto, and if we give up Strong Ex Ante Pareto, we have much less reason to satisfy its restriction to deterministic cases, Strong Pareto, because similar arguments support both. Strong Pareto seems very basic and obvious: if we can make an individual or multiple individuals better off without making anyone worse off,[25] we should. Having to give up Impartiality or Anteriority, and therefore it seems, Impartiality or Strong Pareto, puts us in a similar situation as infinite ethics, where extensions of Impartiality and Pareto are incompatible in deterministic cases with infinite populations (Askell, 2018, Askell, Wiblin and Harris, 2018). However, in response, I do think there's at least one independent reason to satisfy Strong Pareto but not (Strong) Ex Ante Pareto or Anteriority: extra concern for those who end up worse off (ex post equity) like an (ex post) prioritarian, egalitarian or sufficientarian. Priority for the worse off doesn't give us a positive argument to have a bounded utility function in particular or avoid the sorts of problems here (even if not exactly the same ones). It just counts against some positive arguments to have an unbounded utility function, specifically the ones depending on Anteriority or Ex Ante Pareto. But that still takes away more from what favoured utilitarianism than from what favoured, say, (ex post) prioritarianism. It doesn't necessarily make prioritarianism or other views more plausible than utilitarianism, but utilitarianism takes the bigger hit to its plausibility, because what seemed to favour utilitarianism so much has turned out to not favour it as much as we thought. You might say utilitarianism had much more to lose, i.e. Harsanyi's theorem and generalizations.

Acknowledgements

Thanks to Jeffrey Sanford Russell for substantial feedback on a late draft, as well as Justis Mills and Hayden Wilkinson for helpful feedback on an earlier draft. All errors are my own.

- ^

An individual’s welfare can be the value of their own utility function, although preferences or utility functions defined in terms of each other can lead to contradictions through indirect self-reference (Bergstrom, 1989, Bergstrom, 1999, Vadasz, 2005, Yann, 2005 and Dave and Dodds, 2012). I set aside this issue here.

- ^

This argument works with a step size that's bounded below, even by a tiny value, like 1 millionth of a second or 1 millionth more (counterfactual) utility. If the step sizes have to keep getting smaller and smaller and converge to 0, then we may never reach K.

- ^

Although there are stronger arguments that it's actually infinite. It's one of the simplest and most natural models that fits with our observations of global flatness. See the Wikipedia article Shape of the Universe.

- ^

For generalizations without actual utility values, see violations of Limitedness in Russell and Isaacs, 2021 and reckless preferences in Beckstead and Thomas, 2023.

- ^

Independence: For any prospects X, Y and Z, and probability p,0<p<1, if X<Y, then pX+(1−p)Z<pY+(1−p)Z,

where pX+(1−p)Z is the prospect that's X with probability p, and Z with probability 1−p.

Russell and Isaacs, 2021 define Countable Independence as follows:

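For any prospects $X_1, X_2, \ldots$ and $Y_1, Y_2, \ldots$, and any probabilities $p_1, p_2, \ldots$ that sum to one, if $X_1 \lesssim Y_1, X_2 \lesssim Y_2, \ldots$, then

$\sum_i p_i X_i \lesssim \sum_i p_i Y_i$.

If furthermore $X_j < Y_j$ for some $j$ such that $p_j > 0$, then

$\sum_i p_i X_i < \sum_i p_i Y_i$.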

The standard finitary Independence axiom is a special case.

- ^

The Sure Thing Principle can be defined as follows:

Let A and B be prospects, and let E be some event with probability neither 0 nor 1. If A≲B conditional on each of E and not E, then A≲B. If furthermore, A<B conditional on E or A < B conditional on not E, then A<B.

In other words, if we weakly prefer B either way, then we should just weakly prefer B. And if, furthermore, we strictly prefer B on one of the two possibilities, then we should just strictly prefer B.

Russell and Isaacs, 2021 define the Countable Sure Thing Principle as follows:

Let A and B be prospects, and let $\mathcal{E}$ be a (countable) set of mutually exclusive and exhaustive events, each with non-zero probability. If A≲B conditional on each $E \in \mathcal{E}$, then A≲B. If furthermore, A<B conditional on some $E \in \mathcal{E}$, then A<B.

- ^

See also Christiano, 2022. Both depend on St Petersburg game-like prospects with infinitely many possible outcomes and, when defined, infinite expected utility. For more on the St Petersburg paradox, see Peterson, 2023. Some other foreseeable sure loss arguments require a finite but possibly unbounded number of choices, like McGee, 1999 and Pruss, 2022.

- ^

Or, as in Russell and Isaacs, 2021, each of the countably many prospects used to construct it.

- ^

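Note that the probabilities sum to 1, because $\sum_{n=1}^{\infty} 1/2^n = 1$, so this is in fact a proper probability distribution.

The expected value is $\sum_{n=1}^{\infty} 2^n \cdot \frac{1}{2^n} = \lim_{N \to \infty} \sum_{n=1}^{N} 1 = \lim_{N \to \infty} N = \infty$.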
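As a numerical illustration of the two sums in this footnote, here is a minimal sketch in Python. The function names are hypothetical and chosen only for this example; exact fractions are used so that no rounding affects the partial sums.

```python
from fractions import Fraction

def partial_probability(N):
    """Total probability of the first N outcomes: sum of 1/2^n for n = 1..N."""
    return sum(Fraction(1, 2**n) for n in range(1, N + 1))

def partial_expectation(N):
    """Contribution of the first N outcomes to the expected value: sum of 2^n * (1/2^n)."""
    return sum(2**n * Fraction(1, 2**n) for n in range(1, N + 1))

# The probability mass approaches 1 while the partial expectation grows without bound.
for N in (1, 10, 100):
    print(N, float(partial_probability(N)), partial_expectation(N))
```

Each outcome contributes exactly 1 to the expectation, so the partial expectation after N outcomes is N, while the covered probability is $1 - 1/2^N$.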

- ^

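From $u(x_{n+1}) \geq 2u(x_n)$ for each $n \geq 1$, we have, by induction, $u(x_{n+1}) \geq 2^n u(x_1)$. Then, for each $N \geq 1$,

$E[u(X)] = \sum_{n=1}^{\infty} u(x_n) p(x_n) = \sum_{n=1}^{\infty} u(x_n) \frac{1}{2^n} \geq \sum_{n=1}^{N} u(x_n)/2^n \geq \sum_{n=1}^{N} 2^{n-1} u(x_1)/2^n = N u(x_1)/2$,

which can be arbitrarily large when $u(x_1) > 0$, so $E[u(X)] = \infty$.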

- ^

This would follow either by extension to expected utilities over countable prospects, or assuming we respect Statewise Dominance and transitivity.

For the latter, we can modify the prospect to a truncated one with finitely many outcomes $X_N$ for each $N > 1$, by defining $X_N = X$ if $X < x_N$, and $X_N = x_N$ (or $x_1$) otherwise. Then $E[u(X_N)]$ is finite for each $N$, but $\lim_{N \to \infty} E[u(X_N)] = \infty$. Furthermore, for each $N$, not only is it the case that $E[u(X)] = \infty > E[u(X_N)]$, but $X$ also strictly statewise dominates $X_N$, i.e. $X$ is with certainty at least as good as $X_N$, and is, with nonzero probability, strictly better. So, given any prospect $Y$ with finite (expected) utility, there’s an $N$ such that $E[u(X_N)] > E[u(Y)]$, so $X_N \succ Y$, but since $X \succ X_N$, by transitivity, $X \succ Y$.
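The truncation step can be illustrated with a small Python sketch. It assumes the standard St Petersburg special case, $u(x_n) = 2^n$ with probability $1/2^n$, and the variant of the truncation that pays $x_N$ (not $x_1$) in the tail; the function names are hypothetical.

```python
from fractions import Fraction

def truncated_expected_utility(N):
    """E[u(X_N)], where X_N = X if X < x_N and x_N otherwise.

    Assumes u(x_n) = 2^n and P(X = x_n) = 1/2^n (an illustrative special case).
    """
    below = sum(Fraction(2**n, 2**n) for n in range(1, N))  # outcomes x_1 .. x_{N-1}
    tail_probability = Fraction(1, 2**(N - 1))              # P(X >= x_N) = sum_{n >= N} 1/2^n
    return below + tail_probability * 2**N

def first_truncation_beating(finite_value):
    """Smallest N > 1 with E[u(X_N)] strictly greater than a given finite expected utility."""
    N = 2
    while truncated_expected_utility(N) <= finite_value:
        N += 1
    return N

print(truncated_expected_utility(5))
print(first_truncation_beating(10))
```

In this special case the truncated expectation works out to $N + 1$, which is finite for every $N$ but exceeds any given finite value once $N$ is large enough, as the footnote's argument requires.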

- ^

For Countable Independence: We defined $X = \sum_{n=1}^{\infty} \frac{1}{2^n} x_n$. We can let $X_n = x_n$ in the definition of Countable Independence. However, it's also the case that $X = \sum_{n=1}^{\infty} \frac{1}{2^n} X$, so we can let $Y_n = X$ in the definition of Countable Independence. But $Y_n = X > x_n = X_n$ for each $n$, so by Countable Independence, $X > X$, contrary to reflexivity.

For the Countable Sure-Thing Principle: define $Y$ to be identically distributed to $X$ but independent from $X$. Let $\mathcal{E} = \{X = x_n \mid n \geq 1\}$. $Y > x_n$ for each $n$, so $Y > X$ conditional on $X = x_n$, for each $n$. By the Countable Sure-Thing Principle, this would imply $Y > X$. However, doing the same with $\mathcal{E} = \{Y = x_n \mid n \geq 1\}$ also gives us $X > Y$, violating transitivity.

These arguments extend to the more general kinds of improper prospects in Russell and Isaacs, 2021.

- ^

In practice, you should give weight to the possibility that it has infinite or undefined value. However, the argument that follows can be generalized to this case using stochastic dominance reasoning or, if you do break ties between actual infinities, any reasonable way of doing so.

- ^

Or give you an accurate finite upper bound on how it will turn out.

- ^

And the genie isn’t going to do anything good with it.

- ^

Interestingly, if expectational total utilitarianism is consistent with Harsanyi’s theorem, then it is not the only way for total utilitarianism to be consistent with Harsanyi’s theorem. Say individual welfare takes values in the interval [2,3]. Then the utility functions $\sum_{i=1}^{N} u_i + N$ and $N \sum_{i=1}^{N} u_i$ agree with both Harsanyi’s theorem and total utilitarianism. According to them, a larger population is always better than a smaller population, regardless of the welfare levels in each. However, some further modest assumptions give us expectational total utilitarianism, e.g. each individual can have welfare level 0.

- ^

So, assuming reflexivity, transitivity and the Independence of Irrelevant Alternatives. Also, we need the set of prospects to be rich enough to include some of the kinds of prospects used in the proofs.

- ^

Exceptions include average utilitarianism, symmetric person-affecting views, maximin and maximax.

- ^

Russell, 2023 writes:

Stochastic Dominance is a fairly uncontroversial principle of decision theory—even among those who reject other parts of standard expectational decision theory (such as Quiggin, 1993; Broome, 2004), and even in settings where other parts of standard expectational decision theory give out (see for example Easwaran, 2014).10 We should not utterly foreclose giving up Stochastic Dominance—we are facing paradoxes, so some plausible principles will have to go—but I do not think this is a very promising direction. In what follows, I will take Stochastic Dominance for granted.

and in footnote 10:

For other defenses of Stochastic Dominance, on which I here have drawn, see Tarsney (2020, 8); Wilkinson (2022, 10); Bader (2018).

- ^

There is some controversy here, because we might instead say that A statewise dominates B if and only if A is at least as good as B under every possibility, including each possibility with probability 0. Russell, 2023 writes:

For example, the probability of an ideally sharp dart hitting a particular point may be zero—but the prospect of sparing a child from malaria if the dart hits that point (and otherwise nothing) may still be better than the prospect of getting nothing no matter what. But these two prospects are stochastically equivalent. Perhaps what is best depends on what features of its outcomes are sure—where in general this can come apart from what is almost sure—that is, has probability one.

However, I don’t think this undermines the results of Russell, 2023, because the prospects considered don’t disagree on any outcomes of probability 0.

- ^

Insofar as it isn’t evidence for how well off moral patients today and in the future can or will be, and ignoring acausal influence.

- ^

The same objection is raised earlier in McMahan, 1981, p. 115 referring to past generations more generally. See also discussion of it and similar objections in Huemer, 2008, Wilkinson, 2022, Beckstead and Thomas, 2023, Wilkinson, 2023 and Russell, 2023.

- ^

And a single preorder over prospects, so transitivity, reflexivity and the independence of irrelevant alternatives, and a rich enough set of possible prospects.

- ^

These can be extended to satisfy Stochastic Dominance by making stochastically equivalent prospects equivalent and taking the transitive closure to get a new preorder.

- ^

Or, while keeping everyone at least as well off, in cases of incomparability.

MichaelStJules @ 2023-10-08T01:06 (+14)

I'd be happy to get constructive criticism, given downvotes I was getting soon after posting. I'll leave some comment replies here for people to agreevote/disagreevote with in case they want to stay anonymous. I also welcome feedback as comments here or private messages.

I've removed my own upvotes from these comments so this thread doesn't start at the top of the comment section. EDIT: Also, keep my comments in this thread at 0 karma if you want to avoid affecting my karma for making so many comments.

Arepo @ 2023-10-09T21:46 (+8)

I haven't downvoted it, and I'm sorry you're getting that response for a thoughtful and in-depth piece of work, but I can offer a couple of criticisms I had that have stopped me upvoting it yet because I don't feel like I understand it, mixed in with a couple of criticisms where I feel like I did:

- Too much work done by citations. Perhaps it's not possible to extract key arguments, but most philosophy papers IME have their core point in just a couple of paragraphs, which you could quote, summarise or refer to more precisely than a link to the whole paper. Most people on this forum just won't have the bandwidth to go digging through all the links.

- The arguments for infinite prospective utility didn't hold up for me. A spatially infinite universe doesn't give us infinite expectation from our action - even if the universe never ends, our light cone will always be finite. Re Oesterheld's paper, acausal influence seems an extremely controversial notion in which I personally see no reason to believe. Certainly if it's a choice between rejecting that or scrabbling for some alternative to an intuitive approach that in the real world has always yielded reasonable solutions, I'm happy to count that as a point against Oesterheld.

- Relatedly, some parts I felt like you didn't explain well enough for me to understand your case, eg:

- I don't see the argument in this post for this: 'So, based on the two theorems, if we assume Stochastic Dominance and Impartiality,[18] then we can’t have Anteriority (unless it’s not worse to add more people to hell) or Separability.' It seemed like you just attempted to define these things and then asserted this - maybe I missed something in the definition?

- 'You are facing a prospect with infinite expected utility, but finite utility no matter what actually happens. Maybe is your own future and you value your years of life linearly, and could live arbitrarily but finitely long, and so long under some possibilities that your life expectancy and corresponding expected utility is infinite.' I don't see how this makes sense. If all possible outcomes have me living a finite amount of time and generating finite utility per life-year, I don't see why expectation would be infinite.

- Too much emphasis on what you find 'plausible'. IMO philosophy arguments should just taboo that word.

MichaelStJules @ 2023-10-10T06:50 (+4)

Thanks for the feedback and criticism!

Too much work done by citations.

Hmm, I didn't expect or intend for people to dig through the links, but it looks like I misjudged what things people would find cruxy for the rest of the arguments but not defended well enough, e.g. your concerns with infinite expected utility.

EDIT: I've rewritten the arguments for possibly unbounded impacts.

The arguments for infinite prospective utility didn't hold up for me. A spatially infinite universe doesn't give us infinite expectation from our action - even if the universe never ends, our light cone will always be finite.

But can you produce a finite upper bound on our lightcone that you're 100% confident nothing can pass? (It doesn't have to be tight.) If not, then you could consider a St Petersburg-like prospect, which, for each $n \geq 1$, has probability $1/2^n$ of size (or impact) $2^n$, in whatever units you're using. That's finite under every possible outcome, but it has an infinite expected value.

Re Oesterheld's paper, acausal influence seems an extremely controversial notion in which I personally see no reason to believe.

Section II from Carlsmith, 2021 is one of the best arguments for acausal influence I'm aware of, in case you're interested in something more convincing. (FWIW, I also thought acausal influence was crazy for a long time, and I didn't find Newcomb's problem to be a compelling reason to reject causal decision theory.)

EDIT: I've now cut the acausal stuff and just focus on unbounded duration.

It seemed like you just attempted to define these things and then asserted this - maybe I missed something in the definition?

This follows from the theorems I cited, but I didn't include proofs of the theorems here. The proofs are technical and tricky,[1] and I didn't want to make my post much longer or spend so much more time on it. Explaining each proof in an intuitive way could probably be a post on its own.

I don't see how this makes sense. If all possible outcomes have me living a finite amount of time and generating finite utility per life-year, I don't see why expectation would be infinite.

How long you live could be distributed like a St Petersburg gamble, e.g. for each $n \geq 1$, with probability $1/2^n$, you could live $2^n$ years. The expected value of that is infinite, even though you'd definitely only live a finite amount of time.

Too much emphasis on what you find 'plausible'. IMO philosophy arguments should just taboo that word.

Ya, I got similar feedback on an earlier draft for making it harder to read, and tried to cut some uses of the word, but still left a bunch. I'll see if I can cut some more.

- ^

They work by producing some weird set of prospects. They then show that you can't order them in a way that satisfies the axioms, applying them one-by-one and then violating one of them or getting a contradiction.

Arepo @ 2023-10-10T09:14 (+2)

But can you produce a finite upper bound on our lightcone that you're 100% confident nothing can pass? (It doesn't have to be tight.)

I think Vasco already made this point elsewhere, but I don't see why you need certainty about any specific line to have finite expectation. If for the counterfactual payoff x, you think (perhaps after a certain point) xP(x) approaches 0 as x tends to infinity, it seems like you get finite expectation without ever having absolute confidence in any boundary (this applies to life expectancy, too).

Section II from Carlsmith, 2021 is one of the best arguments for acausal influence I'm aware of, in case you're interested in something more convincing. (FWIW, I also thought acausal influence was crazy for a long time, and I didn't find Newcomb's problem to be a compelling reason to reject causal decision theory.)

Thanks! I had a look, and it still doesn't persuade me, for much the reasons Newcomb's problem didn't. In roughly ascending importance

- Maybe this is just a technicality, but the claim 'you are exposed to exactly identical inputs' seems impossible to realise with perfect precision. The simulator itself must differ in the two cases. So in the same way that outputs of two instances of a software program being run, even on the same computer in the same environment can theoretically differ for various reasons (looking at a high enough zoom level they will differ), the two simulations can't be guaranteed identical (Carlsmith even admits this with 'absent some kind of computer malfunction', but just glosses over it). On the one hand, this might be too fine a distinction to matter in practice; on the other, if I'm supposed to believe a wildly counterintuitive proposition instead of a commonsense one that seems to work fine in the real world, based on supposed logical necessity that it turns out isn't logically necessary, I'm going to be very sceptical of the proposition even if I can't find a stronger reason to reject it.

- The thought experiment gives no reason why the AI system should actually believe it's in the scenario described, and that seems like a crucial element in its decision process. If in the real world, someone put me in a room with a chalkboard and told me this is what was happening, no matter what evidence they showed, I would have some element of doubt, both of their ability (cf point 1) but more importantly their motivations. If I discovered that the world was so bizarre as in this scenario, it would be at best a coinflip for me that I should take them at face value.

- It seems contradictory to frame decision theory as applying to 'a deterministic AI system' whose clones 'will make the same choice, as a matter of logical necessity'. There's a whole free will debate lurking underneath any decision theoretic discussion involving recognisable agents that I don't particularly want to get into - but if you're taking away all agency from the 'agent', it's hard to see what it means to advocate it adopting a particular decision theory. At that point the AI might as well be a rock, and I don't feel like anyone is concerned about which decision theory rocks 'should' adopt.

This follows from the theorems I cited, but I didn't include proofs of the theorems here. The proofs are technical and tricky,[1] and I didn't want to make my post much longer or spend so much more time on it. Explaining each proof in an intuitive way could probably be a post on its own.

I would be less interested to see a reconstruction of a proof of the theorems and more interested to see them stated formally and a proof of the claim that it follows from them.

MichaelStJules @ 2023-10-10T10:44 (+2)

On Carlsmith's example, we can just make it a logical necessity by assuming more. And, as you acknowledge the possibility, some distinctions can be too fine. Maybe you're only 5% sure your copy exists at all and the conditions are right for you to get $1 million from your copy sending it.

5%*$1 million = $50,000 > $1,000, so you still make more in expectation from sending a million dollars. You break even in expected money if your decision to send $1 million increases your copy's probability of sending $1 million by 1/1,000.
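The arithmetic here can be checked with a short Python sketch. It assumes, as the comparison above suggests, that the alternative to sending is a sure $1,000 and that the payout from your copy sending is $1 million; the constant and function names are hypothetical.

```python
from fractions import Fraction

PAYOUT_IF_COPY_SENDS = 1_000_000  # dollars you receive if your copy sends (from the example)
SURE_AMOUNT = 1_000               # dollars kept by not sending (assumed from the comparison above)

def expected_payout(probability_shift):
    """Expected dollars from sending, if sending raises your copy's chance of sending by probability_shift."""
    return Fraction(probability_shift) * PAYOUT_IF_COPY_SENDS

def break_even_shift():
    """Probability shift at which sending matches the sure amount in expected dollars."""
    return Fraction(SURE_AMOUNT, PAYOUT_IF_COPY_SENDS)

print(expected_payout(Fraction(5, 100)))
print(break_even_shift())
```

Under these assumptions, a 5% shift is worth $50,000 in expectation, and any shift above 1/1,000 makes sending better in expected money.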

I do find it confusing to think about decision-making under determinism, but I think 3 proves too much. I don't think quantum indeterminacy or randomness saves free will or agency if it weren't already saved, and we don't seem to have any other options, assuming physicalism and our current understanding of physics.

MichaelStJules @ 2023-10-10T10:01 (+2)

I think Vasco already made this point elsewhere, but I don't see why you need certainty about any specific line to have finite expectation. If for the counterfactual payoff x, you think (perhaps after a certain point) xP(x) approaches 0 as x tends to infinity, it seems like you get finite expectation without ever having absolute confidence in any boundary (this applies to life expectancy, too).

Ya, I agree you don't need certainty about the bound, but now you need certainty about the distribution not being heavy-tailed at all. Suppose your best guess is that it looks like some distribution $X$, with finite expected value. Now, I suggest that it might actually be $Y$, which is heavy-tailed (has infinite expected value). If you assign any nonzero probability to that being right, e.g. switch to $pY + (1-p)X$ for some $p > 0$, then your new distribution is heavy-tailed, too. In general, if you think there's some chance you'd come to believe it's heavy-tailed, then you should believe now that it's heavy-tailed, because a probabilistic mixture with a heavy-tailed distribution is heavy-tailed. Or, if you think there's some chance you'd come to believe there's some chance it's heavy-tailed, then you should believe now that it's heavy-tailed.

(Vasco's claim was stronger: the difference is exactly 0 past some point.)

I would be less interested to see a reconstruction of a proof of the theorems and more interested to see them stated formally and a proof of the claim that it follows from them.

Hmm, I might be misunderstanding.

I already have formal statements of the theorems in the post:

- Stochastic Dominance, Anteriority and Impartiality are jointly inconsistent.

- Stochastic Dominance, Separability and Impartiality are jointly inconsistent.

All of those terms are defined in the section Anti-utilitarian theorems. I guess I defined Impartiality a bit informally and might have hidden some background assumptions (preorder, so reflexivity + transitivity, and the set of prospects is every probability distribution over outcomes in the set of outcomes), but the rest were formally defined.

Then, from 1, assuming Stochastic Dominance and Impartiality, Anteriority must be false. From 2, assuming Stochastic Dominance and Impartiality, Separability must be false. Therefore assuming Stochastic Dominance and Impartiality, Anteriority and Separability must both be false.

MichaelStJules @ 2023-10-08T01:10 (+6)

The post is too long.

MichaelStJules @ 2023-10-08T01:08 (+2)

The title is bad, e.g. too provocative, clickbaity, overstates the claims or singles out utilitarianism too much (there are serious problems with other views).

EDIT: I've changed the title to "Arguments for utilitarianism are impossibility arguments under unbounded prospects". Previously, it was "Utilitarianism is irrational or self-undermining". Kind of long now, but descriptive and less provocative.

Vasco Grilo @ 2023-10-09T10:21 (+6)

Thanks for the post, Michael! I strongly endorse expectational total hedonistic utilitarianism, but strongly upvoted it for thoughtfully challenging the status-quo, and because one should be nice to other value systems.

However, total welfare, and differences in total welfare between prospects, may be unbounded, because the number of moral patients and their welfares may be unbounded. There are no 100% sure finite upper bounds on how many of them we could affect.

I agree total welfare may be unbounded for the reasons you mention, but I would say differences in total welfare between prospects have to be bounded. I think we have perfect evidential symmetry (simple cluelessness) between prospects beyond a sufficiently large (positive or negative) welfare, in which case the difference between their welfare probability density functions is exactly 0. So I believe the tails of the differences in total welfare between prospects are bounded, and so are the expected differences in total welfare between prospects. One does not know the exact points the tails of the differences in total welfare between prospects reach 0, but that only implies decisions will fall short of perfect, not that what one should do is undefined, right?

This post is concerned with the implications of prospects with infinitely many possible outcomes and unbounded but finite value, not actual infinities, infinite populations or infinite ethics generally.

I am glad you focussed on real outcomes.

One might claim that we can uniformly bound the number of possible outcomes by a finite number across all prospects. But consider the maximum number across all prospects, and a maximally valuable (or maximally disvaluable) but finite value outcome. We should be able to consider another outcome not among the set. Add a bit more consciousness in a few places, or another universe in the multiverse, or extend the time that can support consciousness a little. So, the space of possibilities is infinite, and it’s reasonable to consider prospects with infinitely many possible outcomes.

I would reply to this as follows. If "the maximum number across all prospects" is well chosen, one will have perfect evidential symmetry between any prospects for higher welfare levels than the maximum. Consequently, there will be no difference between the prospects for outcomes beyond the maximum, and the expected difference between prospects will be maintained when we "add a bit more consciousness".

MichaelStJules @ 2023-10-09T15:39 (+4)

Thanks for engaging!

On symmetry between options in the tails, if you think there's no upper bound with certainty on how long our descendants could last, then reducing extinction risk could have unbounded effects. Maybe other x-risks, too. I do think heavy tails like this are very unlikely, but it's hard to justifiably rule them out with certainty.

Or, you could have a heavy tail on the number of non-solipsist simulations, or on the number of universes in our multiverse (e.g. if it is spatially very large, or counting quantum branches or pocket universes, or if the universe will start over many times, as in a Big Bounce), and acausal influence over what happens in them.

Derek Shiller @ 2023-10-09T01:03 (+6)

The money pump argument is interesting, but it feels strange to take away a decision-theoretic conclusion from it because the issue seems centrally epistemic. You know that the genie will give you evidence that will lead you to come to believe B has a higher expected value than A. Despite knowing this, you're not willing to change your mind about A and B without that evidence. This is a failure of van Fraassen's principle of reflection, and it's weird even setting any choices you need to make aside. That failure of reflection is what is driving the money pump. Giving up on unbounded utilities or expected value maximization won't save you from the reflection failure, so it seems like the wrong solution. Either there is a purely epistemic solution that will save you or your practical irrationality is merely the proper response to an inescapable epistemic irrationality.

MichaelStJules @ 2023-10-09T17:14 (+2)

There's also a reflection argument in Wilkinson, 2022, in his Indology Objection. Russell, 2023 generalizes the argument with a theorem:

Theorem 5. Stochastic Dominance, Negative Reflection, Background Independence, and Positive and Negative Compensation together imply Fanaticism.

and Russell, 2023 defines Negative Reflection based on Wilkinson, 2022's more informal argument as follows:

Negative Reflection. For prospects X and Y and a question Q, if X is not better than Y conditional on any possible answer to Q, then X is not better than Y unconditionally.

Background Independence is a weaker version of Separability. I think someone who denies Separability doesn't have much reason to satisfy Background Independence, because I expect intuitive arguments for Background Independence (like the Egyptology objection) to generalize to arguments for Separability.

But still, either way, Russell, 2023 proves the following:

Theorem 6. Stochastic Dominance and Negative Reflection together imply that Fanaticism is false.

This rules out expected utility maximization with unbounded utility functions.

MichaelStJules @ 2023-10-09T02:42 (+2)

Satisfying the Countable Sure-Thing Principle (CSTP, which sounds a lot like the principle of reflection) and updating your credences about outcomes properly as a Bayesian and looking ahead as necessary should save you here. Expected utility maximization with a bounded utility function satisfies the CSTP so it should be safe. See Russell and Isaacs, 2021 for the definition of the CSTP and a theorem, but it should be quick to check that expected utility maximization with a bounded utility function satisfies the CSTP.

You can also preserve any preorder over outcomes from an unbounded real-valued utility function with a bounded utility function (e.g. apply arctan) and avoid these problems. So to me it does seem to be a problem with the attitudes towards risk involved with unbounded utility functions, and it seems appropriate to consider implications for decision theory.
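
As a rough illustration of the arctan point (a sketch only, not from Russell and Isaacs, 2021): assume a St Petersburg prospect that pays 2^n with probability 2^-n, and compare the partial sums of expected utility under the identity utility and under arctan. The function and variable names are hypothetical.

```python
import math

def partial_expected_utility(n_terms, utility):
    """Sum of 2^-n * utility(2^n) for n = 1..n_terms (assumed St Petersburg payoffs)."""
    total = 0.0
    for n in range(1, n_terms + 1):
        total += 0.5 ** n * utility(2.0 ** n)
    return total

def identity(x):
    # Unbounded utility: value equals payoff.
    return x

# Unbounded utility: every term contributes exactly 1, so partial sums grow without limit.
unbounded_10 = partial_expected_utility(10, identity)
unbounded_40 = partial_expected_utility(40, identity)

# Bounded utility: arctan is strictly increasing and stays below pi/2,
# so the partial sums settle down instead of diverging.
bounded_40 = partial_expected_utility(40, math.atan)
bounded_400 = partial_expected_utility(400, math.atan)

# arctan preserves the ranking of the outcomes themselves.
outcomes = [8.0, 2.0, 1024.0, 4.0]
order_preserved = sorted(outcomes, key=math.atan) == sorted(outcomes)

print(unbounded_10, unbounded_40)
print(bounded_40, bounded_400)
print(order_preserved)
```

The ranking of sure outcomes is unchanged, but the ranking of risky prospects can change, which is why this is a change in attitudes to risk and not in preferences over outcomes.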

Maybe it is also an epistemic issue, too, though. Like it means having somehow (dynamically?) inconsistent or epistemically irrational joint beliefs.

Are there other violations of the principle of reflection that aren't avoidable? I'm not familiar with it.

Derek Shiller @ 2023-10-10T17:14 (+3)

Are there other violations of the principle of reflection that aren't avoidable? I'm not familiar with it.

The case reminded me of one you get without countable additivity. Suppose you have two integers drawn with a fair chancy process that is equally likely to result in any integer. What’s the probability the second is greater than the first? 50-50. Now what if you find out the first is 2? Or 2 trillion? Or any finite number? You should then think the second is greater.

MichaelStJules @ 2023-10-10T18:22 (+3)

Ya, that is similar, but I think the implications are very different.

The uniform measure over the integers can't be normalized to a probability distribution with total measure 1. So it isn’t a real (or proper) probability distribution. Your options are, assuming you want to address the problem:

1. It's not a valid set of credences to hold.

2. The order on the integers (outcomes) is the problem and we have to give it up (at least for this distribution).

2 gives up a lot more than 1, and there’s no total order we can replace it with that will avoid the problem. Giving up the order also means giving up arithmetical statements about the outcomes of the distribution, because the order is definable from addition or the successor function.

If you give up the total order entirely (not just for the distribution or distributions in general), then you can't even form the standard set of natural numbers, because the total order is definable from addition or the successor function. So, you're forced to give up 1 (and the Axiom of Infinity from ZF) along with it, anyway. You also lose lots of proofs in measure theory.
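
To spell out the normalization failure, here is a short sketch in my own notation, assuming countable additivity and that every integer gets the same probability c ≥ 0:

```latex
\[
\sum_{k \in \mathbb{Z}} P(\{k\}) \;=\; \sum_{k \in \mathbb{Z}} c \;=\;
\begin{cases}
0 & \text{if } c = 0, \\
\infty & \text{if } c > 0,
\end{cases}
\]
```

so no choice of c makes the total measure equal to 1.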

OTOH, the distribution of outcomes in a St Petersburg prospect isn't improper. The probabilities sum to 1. It's the combination with your preferences and attitudes to risk that generates the problem. Still, you can respond nearly the same two ways:

1. It's not a valid set of credences (over outcomes) to hold.

2. Your preferences over prospects are the problem and we have to give them up.

However, 2 seems to give up less than 1 here, because:

- There's little independent argument for 1.

- You can hold such credences over outcomes without logical contradiction. You can still have non-trivial complete preferences and avoid the problem, e.g. with a bounded utility function.

- Your preferences aren't necessary to make sense of things in the way that the total order on the integers is.

Derek Shiller @ 2023-10-10T22:23 (+3)

The other unlisted option (here) is that we just accept that infinities are weird and can generate counter-intuitive results and that we shouldn't take too much from them because it is easier to blame them than all of the other things wrapped up with them. I think the ordering on integers is weird, but it's not a metaphysical problem. The weird fact is that every integer is unusually small. But that's just a fact, not a problem to solve.

Infinities generate paradoxes. There are plenty of examples. In decision theory, there is also stuff like Satan's apple and the expanding sphere of suffering / pleasure. Blaming them all on the weirdness of infinities just seems tidier than coming up with separate ad hoc resolutions.

MichaelStJules @ 2023-10-13T06:38 (+3)

I think there's something to this. I argue in Sacrifice or weaken utilitarian principles that it's better to satisfy the principles you find intuitive more than less (i.e. satisfy weaker versions, which could include the finitary or deterministic case versions, or approximate versions). So, it's kind of a matter of degree. Still, I think we should have some nuance about infinities rather than treat them all the same and paint their consequences as all easily dismissible. (I gather that this is compatible with your responses so far.)

In general, I take actual infinities (infinities in outcomes or infinitely many decisions or options) as more problematic for basically everyone (although perhaps with additional problems for those with impartial aggregative views), and so their problems are easier to dismiss and blame on infinities. Problems from probability distributions with infinitely many outcomes seem to apply much more narrowly, and so are harder to dismiss or blame on infinities.

(The rest of this comment goes through examples.)

And I don't think the resolutions are in general ad hoc. Arguments for the Sure-Thing Principle are arguments for bounded utility (well, something more general), and we can characterize the ways that avoid the problem as such (given other EUT axioms, e.g. Russell and Isaacs, 2021). Dutch book arguments for probabilism are arguments that your credences should satisfy certain properties not satisfied by improper distributions. And improper distributions are poorly behaved in other ways that make them implausible for use as credences. For example, how do you define expectations, medians and other quantiles over them, or even the expected value of a nonzero constant function or a two-valued step function over improper distributions, in a way that makes sense? Improper distributions just do very little of what credences are supposed to do.

There are also representation theorems in infinite ethics, specifically giving discounting and limit functions under some conditions in Asheim, 2010 (discussed in West, 2015), and average utilitarianism under others in Pivato (2021, and further discussed in 2022 and 2023).

Satan's apple would be a problem for basically everyone, and it results from an actual infinity, i.e. infinitely many actual decisions made. (I think how you should handle it in practice is to precommit to taking at most a specific finite number of pieces of the apple, or use a probability distribution, possibly one with infinite expected value but finite with certainty.)

Similarly, when you have infinitely many options to choose from, there may not be any undominated option. As long as you respect statewise dominance and have two outcomes A and B, with one strictly worse than the other, then there's no undominated option among the set pA + (1-p)B, for all p strictly between 0 and 1 (with p=1/n or 1-1/n for each n). These are cases where the argument for dismissal is strong, because "solving" these problems would mean giving up the most basic requirements of our theories. (And this fits well with scalar utilitarianism.)
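
A small sketch of why no option in that set is undominated. It assumes (my modelling choice, not something stated above) that all the lotteries are coupled to one shared state u in [0, 1), with the lottery pA + (1-p)B yielding A exactly when u < p. The names are hypothetical, and the dominance check only samples a finite grid of states.

```python
from fractions import Fraction

VALUE = {"A": 1, "B": 0}  # assumed ranking: A is strictly better than B

def outcome(p, u):
    """Outcome of the lottery pA + (1-p)B in state u, with u in [0, 1)."""
    return "A" if u < p else "B"

def statewise_dominates(p_hi, p_lo, states):
    """True if p_hi is at least as good in every sampled state and strictly better in some."""
    pairs = [(VALUE[outcome(p_hi, u)], VALUE[outcome(p_lo, u)]) for u in states]
    return all(hi >= lo for hi, lo in pairs) and any(hi > lo for hi, lo in pairs)

def improve(p):
    """For any p strictly below 1, return a strictly larger p that is still below 1."""
    return (p + 1) / 2

states = [Fraction(k, 1000) for k in range(1000)]  # finite sample of states

chain = [Fraction(1, 2)]
for _ in range(5):
    chain.append(improve(chain[-1]))

for lower, higher in zip(chain, chain[1:]):
    print(lower, "is dominated by", higher, statewise_dominates(higher, lower, states))
```

Since `improve` can be applied to any p below 1, every option in the open interval has a dominating alternative that is also in the interval.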

My inclination for the expanding sphere of suffering/pleasure is that there are principled solutions:

- If you can argue for the separateness of persons, then you should sum over each person's life before summing across lives. Or, if people's utility values are in fact utility functions, just preferences about how things go, then there may be nothing to aggregate within the person. There's no temporal aggregation over each person in Harsanyi's theorem.

- If we have to pick an order to sum in or take a value density over, there are more or less natural ones, e.g. using a sequence of nested compact convex sets whose union is the whole space. If we can't pick one, we can pick multiple or them all, either allowing incompleteness with a multi-utility representation (Shapley and Baucells, 1998, Dubra, Maccheroni, and Ok, 2004, McCarthy et al., 2017, McCarthy et al., 2021), or having normative uncertainty between them.

MichaelStJules @ 2023-10-09T03:27 (+2)

I think Parfit's Hitchhiker poses a similar problem for everyone, though.

You're outside town and hitchhiking with your debit card but no cash, and a driver offers to drive you if you pay him when you get to town. The driver can also tell if you'll pay (he's good at reading people), and will refuse to drive if he predicts that you won't. Assuming you'd rather keep your money than pay conditional on getting into town, it would be irrational to pay then (you wouldn't be following your own preferences). So, you predict that you won't pay. And then the driver refuses to drive you, and you lose.

So, the thing to do here is to somehow commit to paying and actually pay, despite it violating your later preference to not pay when you get into town.

And we might respond the same way for A vs B-$100 in the money pump in the post: just commit to sticking with A (at least for high enough value outcomes) and actually do it, even though you know you'll regret it when you find out the value of A.

So maybe (some) money pump arguments prove too much? Still, it seems better to avoid having these dilemmas when you can, and unbounded utility functions face more of them.

On the other hand, you can change your preferences so that you actually prefer to pay when you get into town. Doing the same for the post's money pump would mean actually not preferring B-$100 over some finite outcome of A. If you do this in response to all possible money pumps, you'll end up with a bounded utility function (possibly lexicographic, possibly multi-utility representation). Or, this could be extremely situation-specific preferences. You don't have to prefer to pay drivers all the time, just in Parfit's Hitchhiker situations. In general, you can just have preferences specific to every decision situation to avoid money pumps. This violates the Independence of Irrelevant Alternatives, at least in spirit.

CarlShulman @ 2023-10-08T03:35 (+5)