The Windfall Clause: How to Prevent AI-Induced Wealth Inequality

By Strad Slater @ 2025-11-11T09:18 (+12)

This is a linkpost to https://williamslater2003.medium.com/the-windfall-clause-how-to-prevent-ai-induced-wealth-inequality-08a702254886

Quick Intro: My name is Strad and I am a new grad working in tech wanting to learn and write more about AI safety and how tech will affect our future. I'm trying to challenge myself to write a short article a day to get back into writing. Would love any feedback on the article and any advice on writing in this field!

30,000 jobs were recently cut at Amazon. 9,000 at Microsoft. 4,000 at Salesforce. As of October 2025, over 184,000 tech jobs have been cut this year, with a reported 25% of them being related to “AI-driven restructuring.”

Seeing these stats has definitely made me more aware of my own place in the job market, as well as my current skill set and how it stacks up against a future with AI. To calm my worries, I tried to research what skills to learn in order to stay competitive. However, in doing this research, I couldn’t help but notice my anxiety about AI and job loss more broadly.

While AI continues to progress at tasks such as coding and driving, the end goal of a lot of leading AI companies is to create artificial general intelligence, which would do everything humans can do but better.

If this is the end goal, then will any skill help me keep a job? Better yet, will there even be jobs for humans at this point?

A world where no one can work stresses me out. Jobs are what give us the ability to survive and sustain our lives. How do we survive without the ability to provide for ourselves?

Of course, if AI reaches the point where it can take all our jobs, surely it has the ability to help sustain human lives in a post-work world. Everything we need (food, healthcare, housing) will be automated and done by AI. We won’t need jobs that make money to buy these things because AI will provide them for free.

This utopia could only comfort me for so long before I had to look at the current reality of the situation. Sure, many tech giants working towards AGI claim to do so in the hopes of expanding human flourishing. While this ideal is nice, it is by no means an inevitable outcome of reaching AGI.

Even if we succeed in creating a fully aligned and benevolent AI, it is not entirely clear that it will be leveraged in the most beneficial way for the public good.

The more human labor is replaced by AI, the more profits shift from human workers to the owners of AI companies. If not dealt with properly, this situation could result in massive wealth inequality between AI tech giants and… everyone else.

Such extreme inequality could lead to a whole multitude of other problems. People would suffer since they wouldn't be able to provide themselves with necessities such as food and housing. The perception of wealth disparities between companies and the rest of society would likely result in political unrest and social instability. Power over society would increasingly become concentrated in the hands of a few tech companies, eroding democratic ideals.

Given the nontrivial chance of severe, AI-induced wealth inequality in the near future, it’s important to think about how we can better guide the advancement of AI towards the public good.

This is exactly what Cullen O’Keefe, a research affiliate with the Centre for the Governance of AI, did with his team back in 2020 when they proposed “The Windfall Clause.”

The Windfall Clause

The Windfall Clause is an economic framework in which AI tech companies pledge to donate a portion of their profits to the public good once those profits exceed a specific percentage of the world’s total economic output.

The term “windfall” comes from the fact that this threshold is only reached if a company receives “windfall” profits, meaning an unexpectedly large amount of profits. The clause acts as a pledge that companies make before actually reaching this “windfall” threshold for donating. The low likelihood of reaching the threshold, along with the preemptive nature of the pledge, helps increase the likelihood of companies actually choosing to take the pledge.

The framework is a realistic strategy to combat inequality because there are not only moral reasons but also economic ones to expect companies would take the pledge. A concrete demonstration of a company using its product for the public good creates great publicity. This positive perception triggers downstream benefits such as getting more people to use the product, attracting and retaining highly skilled talent, and higher employee productivity due to the perceived meaning in their work.

By getting tech giants in AI to take this pledge, severe wealth inequality and its downstream consequences could be avoided. With the framework’s potential for positive impact, I wanted to give a brief overview of the various research directions one could go down in order to help build upon the idea. A complicated, global framework such as the Windfall Clause comes with a lot of difficult challenges, so the more people working on it, the better chance there is of it being effectively implemented.

The Properties of an Effective Windfall Clause

The desired properties of an effective Windfall Clause are discussed in O’Keefe’s 2020 report on the framework. These properties act as a good launch pad for further research directions.

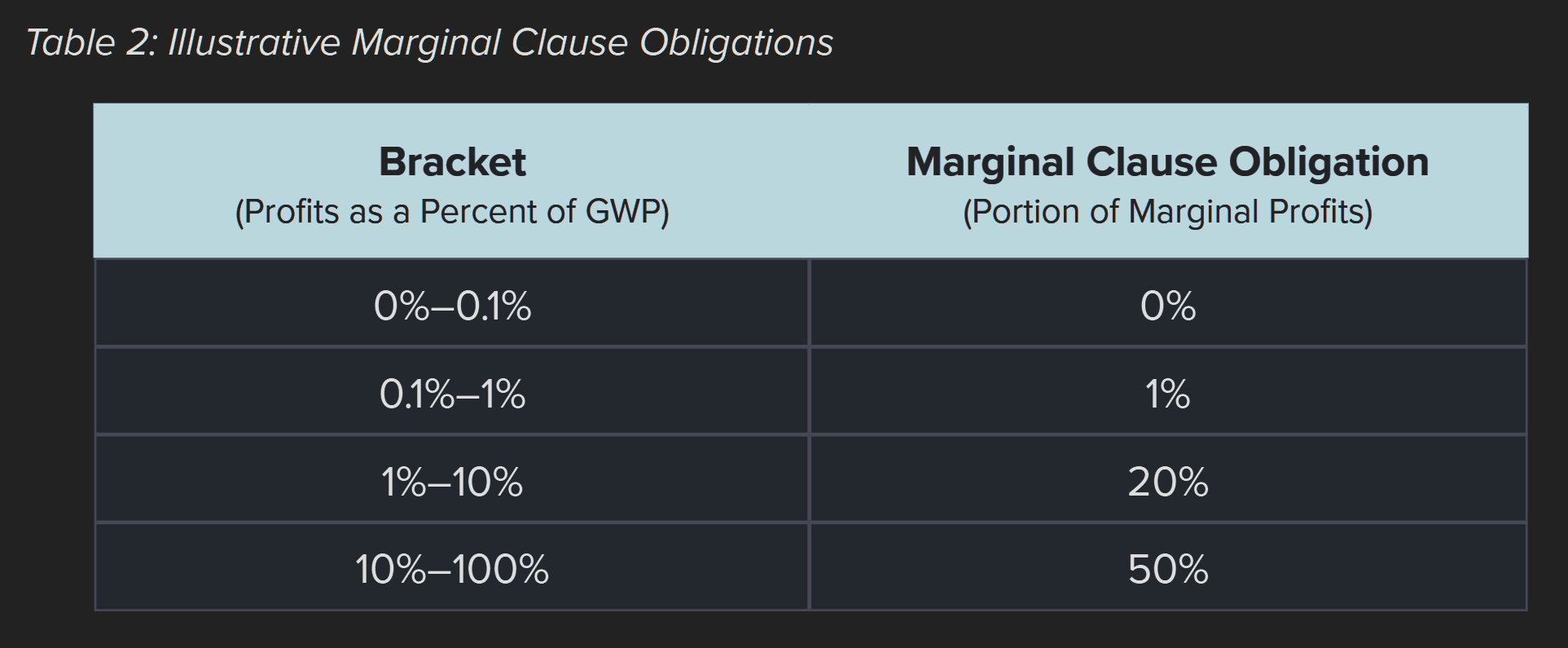

In order for the Windfall Clause to be effective, there are two major components that must be carefully crafted: the windfall function, which determines when and how much a company donates to the public, and the distribution strategy, which determines how the donated money is used to help the public.

Below are some of the properties of an effective windfall function mentioned in the report.

- Transparency — It must be easy for the public to tell that the company’s obligation to the pledge has been fulfilled. This helps the company get proper recognition and makes the pledge more enforceable. People interested in this property could research which donation structures are the most transparent (e.g. a smooth function of profits to donations vs a step function).

- Pre-Windfall Commitment — There should be a smaller version of the commitment within the pledge that applies before true windfall profits are reached. This would act as a test run to see whether the company truly sticks to the commitment. It would also help hold the company accountable if it does reach windfall profits, since it would already have set a precedent of adhering to the pledge. People interested in this property could research which strategies for Pre-Windfall commitment current AI companies would be most receptive to.

- Incentive Alignment — Companies that take the pledge should always have an incentive to make more profits. There should be nothing in the windfall function that might lead companies to hold back profits in order to avoid reaching the donation threshold. People interested in this property could research what types of metrics work best for determining the thresholds for donation while still encouraging growth (e.g. profits vs market cap).

- Competitiveness — The pledge must allow companies to stay competitive against those that don't take it. If this is not the case, then companies are unlikely to take the pledge. And even if some do, they will likely not reach windfall profits before the other, more competitive companies. People interested in this property could research windfall functions that maintain competitiveness (e.g. ones that allow for a pace of progress that matches or exceeds standards in the market).
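
To make the transparency and incentive alignment properties a bit more concrete, here is a minimal Python sketch comparing two possible shapes for a windfall function. Everything in it is an assumption made for illustration: the bracket thresholds and rates are hypothetical (they are not the figures from the report), profits are expressed as a fraction of world economic output, and the function names are my own. The "marginal" version only applies each rate to the profits that fall inside its bracket, much like income tax brackets, while the "step" version applies a single rate to all profits once a threshold is crossed.

```python
from typing import Callable, List, Tuple

# Hypothetical brackets, NOT taken from the report:
# (lower bound of profits as a fraction of world output, rate for that bracket)
BRACKETS: List[Tuple[float, float]] = [
    (0.001, 0.01),
    (0.01, 0.20),
    (0.10, 0.50),
]


def marginal_obligation(profit_share: float,
                        brackets: List[Tuple[float, float]] = BRACKETS) -> float:
    """Donation owed when each rate applies only to profits inside its bracket."""
    owed = 0.0
    for i, (lower, rate) in enumerate(brackets):
        # The bracket ends where the next one begins (the last one has no upper end).
        upper = brackets[i + 1][0] if i + 1 < len(brackets) else float("inf")
        if profit_share > lower:
            owed += (min(profit_share, upper) - lower) * rate
    return owed


def step_obligation(profit_share: float,
                    brackets: List[Tuple[float, float]] = BRACKETS) -> float:
    """Donation owed when the highest bracket reached applies to ALL profits."""
    rate = 0.0
    for lower, bracket_rate in brackets:
        if profit_share >= lower:
            rate = bracket_rate
    return profit_share * rate


def retained(profit_share: float, obligation: Callable[[float], float]) -> float:
    """Profits the company keeps after meeting its obligation."""
    return profit_share - obligation(profit_share)


if __name__ == "__main__":
    # Compare profits just below and just above the hypothetical 1% threshold.
    for share in (0.0099, 0.0101):
        print(share,
              retained(share, marginal_obligation),
              retained(share, step_obligation))
```

Under these made-up numbers, the marginal version always leaves the company better off when it earns more, while the step version makes a company that just crosses the 1% threshold keep less than one that stays just under it. That cliff is the kind of feature the incentive alignment property is meant to rule out.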

Below are some of the properties of an effective distribution strategy mentioned in the report.

- Effectiveness — The distribution strategy should be effective at doing whatever it aims to do. People interested in this property can help determine concretely what the aim of the framework should be, as well as the optimal distribution strategy to achieve this aim (e.g. since the effects of AI are likely to be global in nature, we should aim to help people globally and therefore use a distribution strategy that distributes globally).

- Accountability — The body distributing the wealth should be held accountable to ensure that it is following the agreed-upon strategy for distribution. People interested in this property can look into different structures or requirements for the distributing body that best hold it accountable (e.g. requiring the distributing body to release written rationales for each decision made).

- Legitimacy — Since the goal of the Windfall Clause is to benefit all people, it is important to find a way for everyone's voice to be fairly represented in decisions related to distribution. People interested in this property can research strategies to aggregate the vast and varying preferences of the global population in an effective way (e.g. holding popular vote elections for some of the decision makers involved in distribution).

- Firm Buy-in — The Windfall Clause is dependent on the cooperation of the companies that make the pledge. Therefore, an effective distribution strategy would likely benefit from some sort of promotion of those companies’ interests. People interested in this property can help determine a way to support donating companies’ interests while still holding to the values and goals of the Windfall Clause.

Takeaway

Given my anxieties the past few months about the potential consequences of an AI-induced, post-work future, learning about frameworks such as the Windfall Clause has been comforting. At least more comforting than just blindly hoping everything will be okay.

It is nice to know that people out there understand that the AI utopia so many people hope for is far from the only possible outcome and likely not the default outcome. The Windfall Clause is an example of researchers taking action based on this understanding.

Even if the Windfall Clause never gets implemented, research into its efficacy helps further the conversation about how to make AI work for the good of humanity. At a time when AI’s effect on the future seems so uncertain, fostering these discussions is becoming ever more important.

So if you happened to find The Windfall Clause interesting, feel free to read the full report here and contribute to the conversation!

Yarrow Bouchard 🔸 @ 2025-11-12T01:47 (+3)

Thanks for sharing this. I'm skeptical about near-term AGI, so I don't think this is a practical concern in the near term, but it's still really fun to think about.

This is the example O'Keefe et al. give in the report:

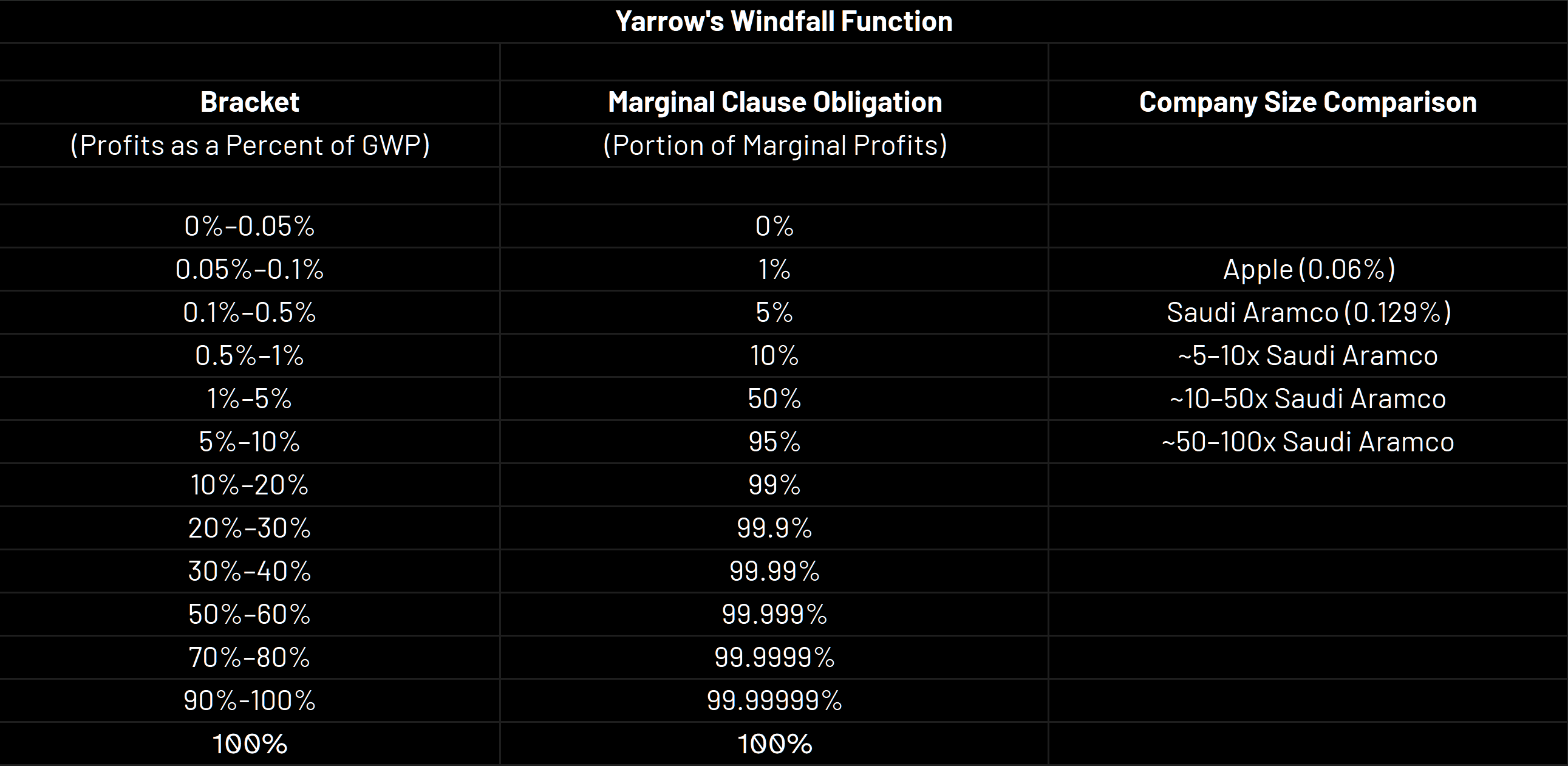

This is way too mild! I'd design it way more aggressively, something like this:

Particularly having it top out at 50% seems way too low.