A case against focusing on tail-end nuclear war risks

By Sarah Weiler @ 2022-11-16T06:08 (+32)

A case against focusing on tail-end nuclear war risks: Why I think that nuclear risk reduction efforts should prioritize preventing any kind of nuclear war over preventing or preparing for the worst case scenarios

Summary

- This is an essay written in the context of my participation in the Cambridge Existential Risk Initiative 2022, where I did research on nuclear risks and effective strategies for mitigating them. It partially takes on the following question (which is one of the cruxes I identified for the nuclear risk field): Which high-level outcomes should nuclear risk reduction efforts focus on?

- I argue against two approaches that seem quite prominent in current EA discourse on the nuclear risk cause area: preventing the worst types of nuclear war, and preparing for a post-nuclear war world.

- Instead, I advocate for a focus on preventing any kind of military deployment of nuclear weapons (with no differentiation between “small-” or “large-scale” war).

- I flesh out three arguments that inform my stance on this question:

- The possible consequences of a one-time deployment of nuclear weapons are potentially catastrophic.

- It seems virtually impossible to put a likelihood on which consequences will occur, how bad they will be, and whether interventions taken today to prevent or prepare for them will succeed.

- De-emphasizing the goal of preventing any nuclear war plausibly has adverse effects, in the form of opportunity costs, a weakening of norms against the deployment of nuclear weapons, and a general moral numbing of ourselves and our audiences.

Setting the stage

Context for this research project

This essay was written in the context of the Cambridge Existential Risk Initiative (CERI) 2022, a research fellowship in which I participated this summer. My fellowship project/goal was to disentangle “the nuclear risk cause area”, i.e. to figure out which specific risks it encompasses and to get a sense for what can and should be done about these risks. I took several stabs at the problem, which I will not go into in this document (they are compiled in this write-up).

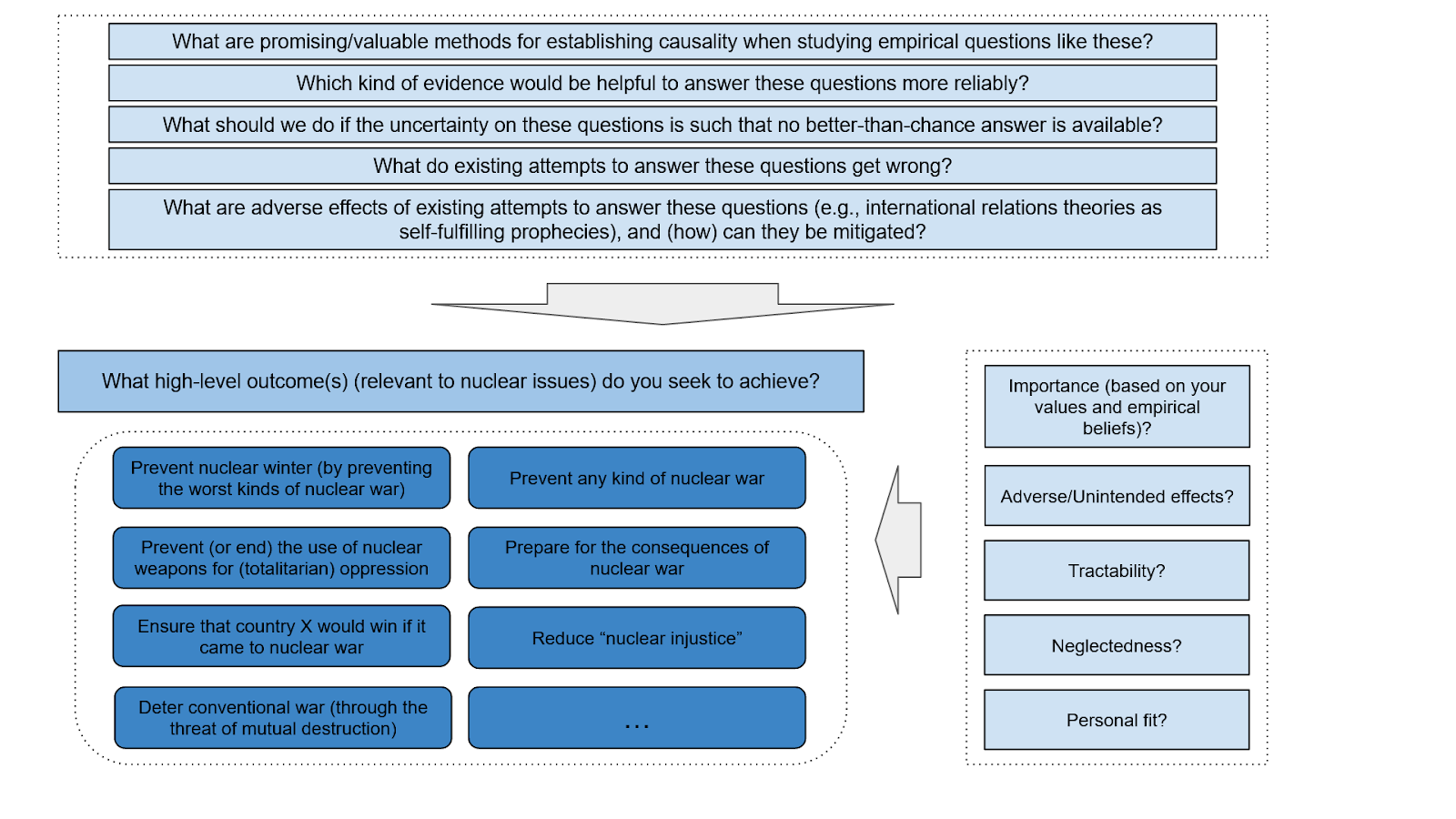

The methodology I settled on eventually was to collect a number of crucial questions (cruxes), which need to be answered to make an informed decision about how to approach nuclear risk mitigation. One of the cruxes I identified was about the “high-level outcomes” that people interested in nuclear risk reduction might aim for. I came up with a list of the nuclear-related outcomes that I think could conceivably constitute a high-level goal (it is likely that this list is incomplete and I welcome suggestions for additional items to put on it). These high-level outcomes are found in the dark blue rectangles in Figure 1.

For lack of time and skills, I didn't do an in-depth investigation to compare and prioritize between these goals. However, I did spend some time thinking about a subset of the goals. In conversations with others at the fellowship, I encountered the view[1] that nuclear weapons are relevant to a committed longtermist only insofar as they constitute an existential risk, and that only certain kinds of nuclear conflict fulfill that criterion, which is why work to reduce nuclear risk (in the EA community) should mainly be targeted at addressing the danger of these "tail-end outcomes" (i.e., the worst kinds of nuclear war, which may cause nuclear winter). I felt quite concerned by this stance and what I perceive to be its fragile foundations and dangerous implications. In particular, I was shaken by the claim that "small-scale nuclear conflict essentially doesn't matter to us". This essay is an attempt to spell out my disagreements with and concerns about this view[2], and to make the case that preventing any kind of nuclear war should be a core priority for effective altruists (or longtermists) seeking to work on nuclear risk reduction.

Terminology

- “Using” nuclear weapons: this is a broad phrase that refers to any purposive action involving nuclear weapons, which includes using the possession of nuclear weapons as a threat and deterrent

- “Detonating” nuclear weapons: this refers to any detonation of nuclear weapons, including accidental ones and those that happen during tests as these weapons are developed; the phrase by itself does not imply that nuclear weapons are used in an act of war and it says nothing about the place and target of the detonation

- “Deploying”/“Employing” nuclear weapons: this is the phrase that refers specifically to the purposive detonation of nuclear weapons against an enemy in war.[3][4]

Spelling out the argument

The main thrust of my argument is that I contest the viability/feasibility of present-day attempts to either prevent cascading consequences of the one-time deployment of nuclear weapons or to prepare for those consequences. Because of this, I argue that it is dangerously overconfident to focus on those two outcomes (preventing the worst case; preparing for the world after) while deprioritizing the goal of preventing any kind of nuclear war. I point to the unpredictable consequences of a one-time deployment of nuclear weapons, and to the potentially far-reaching damage/risk they may bring, to assert that "small-scale" nuclear conflict does matter from a longtermist perspective (as well as from many other ethical perspectives).

Below, I spell out my argument by breaking it up into three separate reasons, which together motivate my conclusion regarding which high-level outcome to prioritize in the nuclear risk reduction space.

Reason 1: The possible consequences of a one-time deployment of nuclear weapons are potentially catastrophic

While I would argue that the sheer destruction wrought by the detonation of a single nuclear weapon is sufficient grounds for caring about the risk and for making the choice to attempt to reduce it[5], I understand and accept that this is less obvious when taking on a scope-sensitive moral perspective (such as total utilitarianism), which recognizes/assumes that there are many incredibly important problems and risks in the world, that we cannot fix all of them at once, and that this obliges us to prioritize based on just how large the risk posed by each problem is (and on how good our chances to reduce it are). I will here make the case that the consequences of a one-time deployment of nuclear weapons are large enough in expectation to warrant the attention of a cause-prioritizing consequentialist, especially if that consequentialist accepts the ethical argument of longtermism. I assume that such a (longtermist) consequentialist considers a problem sufficiently important if it contributes significantly to existential risks[6].

There are several plausible ways in which a one-time use of nuclear weapons can precipitate existential risk:

- Escalation / Failure at containing nuclear war[7]

- A relatively immediate consequence of a first deployment of nuclear weapons, or of a "small-scale" nuclear conflict, is that it can escalate into a larger exchange of attacks, with no obvious or foolproof stopping point.

- The first deployment of nuclear weapons can also lead to large-scale nuclear war more indirectly, by setting a precedent, weakening strong normative anti-use inhibitions among decision-makers, and thus making the use of these weapons in future conflicts more likely.

- In either case, a first deployment of nuclear weapons would then be a significant causal factor for larger-scale deployment, as such contributing to the risk of nuclear winter (which in itself has some chance of constituting an existential catastrophe[8]).

- Civilizational collapse

- The one-time deployment of nuclear weapons could severely upset norms against violence in the global system (and/or in specific localities).

- It could thus be a significant causal factor in increasing the incidence and severity of violent conflict globally.

- In a worst case scenario, such conflict could occur at a scale that induces civilizational collapse even if no further nuclear weapons are deployed.

- Societal vulnerability

- The one-time deployment of nuclear weapons would plausibly lead to political, economic, social, and cultural/normative shocks that could cause turmoil, instability, and a breakdown/weakening of societies' abilities to deal with, prepare for, and respond to pressing and long-term challenges (such as runaway climate change, the development and control of dangerous technologies, etc.).

- One more specific example/scenario of these effects would be[9]: The one-time deployment of nuclear weapons makes people more suspicious and distrusting of other countries and of efforts for bi- and multilateral cooperation, thus exacerbating great power conflict, fueling technological arms race dynamics, decreasing the chance that revolutionary tech like AGI/TAI is developed safely, and increasing the chance of destructive conflict over the appropriation and use of such tech.

Reason 2: It seems virtually impossible to put a likelihood on any of these consequences, or on the chance that interventions taken today to prevent or prepare for them succeed

How would a one-time deployment of nuclear weapons today influence state leaders' decisions to acquire, modernize, and use nuclear weapons in the future? Would it lead to an immediate retaliation and escalation, or would there be restraint? Would the vivid demonstration of these weapons' destructive power reinforce emotions, norms, and reasons that deter their use in war, or would the precedent break a taboo that has hitherto prevented the intentional deployment of nuclear weapons? How would such an event impact deterrence dynamics (incl. the credibility of retaliatory threats, trust in each party's rational choice to avoid MAD, etc.)? How would it impact proliferation aspirations among current non-nuclear weapons states?

How would a deployment of nuclear weapons influence the current global order, the relations and interactions between nation-states and non-state actors, and the global economy? How would it affect cultural norms and values, as well as social movements and civil society across the world? How would all this affect efforts to tackle global challenges and risks? How would it affect the resilience of the global community and of regional communities, and their ability to respond to crises?

I consider these questions impossible to answer with any degree of certainty. Why? Because we have close to no relevant empirical data to infer the probability of different consequences of a one-time nuclear deployment, nor can these probabilities be derived logically from some reliable higher-order principles/theories.

Statements about the causal effects of interventions face similarly severe challenges: Even if we were able to make a decent guess at what the world after a one-time deployment of nuclear weapons would look like, I don't think we have much data or understanding to inform decisions meant to prepare for such a world. In addition, such preparatory or preventive interventions can't be assessed while or after they are implemented, since there is no feedback that would give us a sense of how effective the interventions are.[10]

Reason 3: De-emphasizing the goal of preventing any nuclear war plausibly has adverse effects

I'm thinking about two channels through which a focus on the tail-ends of nuclear war risk can have the adverse effect of making the deployment of nuclear weapons more likely: opportunity costs, and a weakening of the normative taboo against nuclear weapons employment. In an expansion of the latter point, I'm also concerned about the more general desensitizing effect that such a focus can bring.

Opportunity costs

The first channel is probably obvious and doesn't require much additional explanation. If resources (time, political capital, money) are spent narrowly on preventing or preparing for the worst-case outcome of a nuclear conflict, those same resources cannot be spent on reducing the probability of nuclear war per se[11]. Given that the resources to address nuclear risks are seriously limited in our present world (especially after the decision by one of the biggest funders in this space, the MacArthur Foundation, to phase out its support by the end of 2023; see Kimball 2021), such diversion of available means would arguably be quite significant and could seriously impact our overall efforts to prevent nuclear war.

Weakening of the nuclear taboo

The second channel is a little less direct and requires acceptance of a few assumptions:

- First, that "nuclear war is being and has been averted at least in part by norms that discourage leaders of nuclear weapons states from making (or from seriously contemplating) the decision to use nuclear weapons against 'enemy' targets, [...] either [because] decision-makers feel normative pressure from domestic and/or global public opinion, or [because] they have internalized these norms themselves, or both."[12]

- Second, that these norms are affected by the way in which authoritative voices talk about nuclear weapons, and that they are weakened when authoritative voices start to use diminutive language to describe some forms of nuclear war (describing them as minor, small-scale, or limited) as well as when they rank different types of nuclear war and downplay the danger of some types by contrasting their expected cost with the catastrophic consequences of the worst-case outcomes.[13]

- Third, that the discourse of the policy research community is an authoritative voice in this space[14], and

- fourth, that effective altruists and effective altruist organizations can contribute to and shape this authoritative discourse.

What follows from these assumptions is that research that makes the case for prioritizing worst-case outcomes (by "demonstrating" how working on them is orders of magnitude more important than working on "smaller nuclear war issues") could have the unintended negative side effect of increasing the probability of a nuclear first strike, because such research could weaken normative barriers to the deployment of nuclear weapons.

Desensitizing effect

In addition to the concern that focusing on worst case outcomes can end up increasing nuclear war risk (by increasing the probability of weapons deployment), I am worried that such a focus, and the language surrounding it, has a morally desensitizing effect. I feel a stark sense of unease (or, at times, terror) when I hear people talk nonchalantly about “unimportant small-scale nuclear war” or, to name a more specific example, “the likely irrelevance of India-Pakistan war scenarios”, when I read long articles that calculate the probability for different death counts in the event of nuclear escalation with the explicit intention of figuring out whether this is a risk worthy of our attention, or when I listen in on discussions about which conditions would make the deployment of nuclear weapons a “rational choice”. These experiences set off bright warning lights before my inner eye, and I feel strongly compelled to speak out against the use and promulgation of the types of arguments and reasoning just listed.

Maybe the reason for this intuitive response is that I’ve been taught - mostly implicitly, through the culture and art I encountered while growing up in a progressive, educated slice of Austrian society - that it is this kind of careless, trivializing language, and a collective failure to stand up and speak out against it, that have preceded and (at least partially) enabled the worst kinds of mass atrocity in history? I don’t have full clarity on my own views here; I find myself unable to pin down what I believe the size and probability of the problem (the desensitizing effect of this type of speech) to be; nor can I meticulously trace where my worry comes from and which sources, arguments, and evidence it is informed by. For the last months (all throughout and after the summer fellowship), I’ve been trying to get a better understanding of what I think and to mold my opposition into a watertight or at least comprehensible argument, and I largely failed. And yet - I continue to believe fairly strongly that there is harm and danger in the attitudes and conversations I witnessed. I’m not asking anyone to defer to that intuitive belief, of course; what I ask you to do is to reflect on the feelings I describe, and I’d also encourage you to not be instantly dismissive if you share similar concerns without being able to back them up with a rational argument.

But even if you think the last paragraph betrays some failing of my commitment to rational argument and reason, I hope that it doesn’t invalidate the entire post for you. Though I personally assign substantial weight to the costs (and risks) of “moral numbing”, I think that the overall case I make in this essay remains standing if you entirely discard the part about “desensitizing effects”.

Other pieces I wrote on this topic

- Disentanglement of nuclear security cause area_2022_Weiler (written prior to CERI, as part of a part-time and remote research fellowship in spring 2022)

- Cruxes for nuclear risk reduction efforts - A proposal

- What are the most promising strategies for preventing nuclear war?

- List of useful resources for learning about nuclear risk reduction efforts: This is a work-in-progress; if I ever manage to compile a decent list of resources, I will insert a link here.

- How to decide and act in the face of deep uncertainty?: This is a work-in-progress; if I ever manage to bring my thoughts on this thorny question into a coherent write-up, I will insert a link here.

References

Atkinson, Carol. 2010. “Using nuclear weapons.” Review of International Studies, 36(4), 839-851. doi:10.1017/S0260210510001312.

Bostrom, Nick. 2013. “Existential Risk Prevention as Global Priority.” Global Policy 4 (1): 15–31. https://doi.org/10.1111/1758-5899.12002.

CERI, (Cambridge Existential Risk Initiative). 2022. “CERI Fellowship.” CERI. 2022. https://www.cerifellowship.org/.

Clare, Stephen. 2022. “Modelling Great Power conflict as an existential risk factor”, subsection: “Will future weapons be even worse?” EA Forum, February 3. https://forum.effectivealtruism.org/posts/mBM4y2CjfYef4DGcd/modelling-great-power-conflict-as-an-existential-risk-factor#Will_future_weapons_be_even_worse_.

Cohn, Carol. 1987. “Sex and Death in the Rational World of Defense Intellectuals.” Signs 12 (4): 687-718. https://www.jstor.org/stable/3174209.

EA Forum. n.d. “Disentanglement Research.” In Effective Altruism Forum. Centre for Effective Altruism. Accessed October 9, 2022. https://forum.effectivealtruism.org/topics/disentanglement-research.

———. n.d. “Great Power Conflict.” In Effective Altruism Forum. Centre for Effective Altruism. Accessed October 9, 2022. https://forum.effectivealtruism.org/topics/great-power-conflict.

———. n.d. “Longtermism.” In Effective Altruism Forum. Centre for Effective Altruism. Accessed October 9, 2022. https://forum.effectivealtruism.org/topics/longtermism.

Hilton, Benjamin, and Peter McIntyre. 2022. “Nuclear War.” 80,000 Hours: Problem Profiles (blog). June 2022. https://80000hours.org/problem-profiles/nuclear-security/#top.

Hook, Glenn D. 1985. “Making Nuclear Weapons Easier to Live With: The Political Role of Language in Nuclearization.” Bulletin of Peace Proposals 16 (1), January 1985. https://doi.org/10.1177/096701068501600110.

Kaplan, Fred. 1991 (1983). The Wizards of Armageddon. Stanford, Calif: Stanford University Press. https://www.sup.org/books/title/?id=2805.

Ladish, Jeffrey. 2020. “Nuclear War Is Unlikely to Cause Human Extinction.” EA Forum (blog). November 7, 2020. https://forum.effectivealtruism.org/posts/mxKwP2PFtg8ABwzug/nuclear-war-is-unlikely-to-cause-human-extinction.

Larsen, Jeffrey A., and Kerry M. Kartchner. 2014. On Limited Nuclear War in the 21st Century. Stanford University Press. https://doi.org/10.1515/9780804790918.

LessWrong. n.d. “Motivated Reasoning.” In LessWrong. Accessed November 22, 2022. https://www.lesswrong.com/tag/motivated-reasoning.

Rodriguez, Luisa (Luisa_Rodriguez). 2019. “How Bad Would Nuclear Winter Caused by a US-Russia Nuclear Exchange Be?” EA Forum (blog). June 20, 2019. https://forum.effectivealtruism.org/posts/pMsnCieusmYqGW26W/how-bad-would-nuclear-winter-caused-by-a-us-russia-nuclear.

Todd, Benjamin. 2017 (2022). “The case for reducing existential risks.” 80,000 Hours, first published in October 2017, last updated in June 2022. https://80000hours.org/articles/existential-risks/.

[1]

I don’t know how common or prominent this view is in the EA community. Some info to get a tentative sense of its prominence: One person expressed the view explicitly in a conversation with me and suggested that many people on LessWrong (a proxy for the rationalist community, which has significant overlaps with EA?) share it. A second person expressed the view in more cautious terms in a conversation. In addition, over the summer several people asked me and other nuclear risk fellows about the probability that nuclear war leads to existential catastrophe, which might indicate some affinity to the view described above. I would also argue that articles like this one (Ladish 2020) on the EA Forum seem implicitly based on this view.

[2]

Note that the reasons I spell out in this post can, in some sense, be accused of motivated reasoning. I had an intuitive aversion against focusing on tail end nuclear risks (and against de-emphasizing the goal of preventing any kind of nuclear deployment) before I came up with the supporting arguments in concrete and well-formulated terms. The three reasons I present below thus came about as a result of me asking myself "Why do I think it's such a horrible idea to focus on the prevention of and preparation for the worst case of a nuclear confrontation?"

While this in itself is not (or should not be) sufficient grounds for readers to discount my arguments, I think it is good practice to be transparent about where our beliefs might come from (as far as that is possible), and I thank Oscar Delaney for raising the point in the comments and thus prompting me to add this footnote.

[3]

I owe the nuance to distinguish between “using”, “detonating”, and “deploying” nuclear weapons to conversations with academics working on nuclear issues. It is also recommended by Atkinson 2010: “Whether used or not in the material sense, the idea that a country either has or does not have nuclear weapons exerts political influence as a form of latent power, and thus represents an instance of the broader meaning of use in a full constructivist analysis.”

[4]

In a previous draft, I wrote “use nuclear weapons in war” to convey the same meaning; I tried to replace this throughout the essay, but the old formulation may still crop up and should be treated as synonymous with “deploying” or “employing nuclear weapons”.

[5]

The immediate and near-term consequences of a nuclear detonation are described in, for instance, Hilton and McIntyre 2022.

[6]

A defense of that assumption can be found in Bostrom 2013 and Todd 2017 (2022), among other places.

[7]

Containing nuclear war has been discussed as a strategic goal since the very early decades of the nuclear age (i.e., from the 1950s onwards). These discussions have always featured a standoff between the merits and necessity vs. futility and adverse consequences of thinking about “smart nuclear war-fighting” (i.e., keeping nuclear war contained to a “small scale”); my arguments above stand in the tradition of those who contend that such thinking and strategizing is creating more harm than good. For a more comprehensive treatment of all sides of the debate, see the first part of On limited nuclear war in the 21st century, edited by Larsen and Kartchner (2014), and for a historical account of the first time the discussion arose, see Chapter 14 of Kaplan’s Wizards of Armageddon (1991 (1983)).

[8]

Rodriguez (2019) and Ladish (2020) provide extensive analyses of the effects of nuclear wars of different magnitudes, admitting the possibility that nuclear winter could cause human extinction but ultimately assessing its probability as rather low. Both of these are posts on the EA Forum; I have not looked at the more academic literature on nuclear winter and won’t take a stance on exactly how likely it is to cause an existential catastrophe.

[9]

This is very similar to, and somewhat inspired by, Clare’s illustration of “Pathways from reduced cooperation and war to existential risk via technological disasters” in a post that discusses great power conflict as an existential risk factor (Clare 2022).

[10]

A possible objection here could be that a similar level of uncertainty exists for the goal of preventing a one-time deployment of nuclear weapons in the first place. I agree that we also face disconcertingly high uncertainty when attempting to prevent any kind of nuclear war (see the non-conclusive conclusions of my investigation into promising strategies to reduce the probability of nuclear war as evidence of how seriously I take that uncertainty). However, I would argue that interventions to reduce the likelihood of any nuclear war are at least somewhat more tractable than those that aim to foresee and prepare for the consequences of nuclear weapons deployment. This is because the former at least have the present world, which we have some evidence about and familiarity with, as their starting point, whereas the latter attempt to intervene in a world which is plausibly significantly different from the one we inhabit today. In other words: The knowledge that would be relevant for figuring out how to prevent the worst kinds of nuclear war, or to prepare for a post-nuclear war world, is particularly unattainable to us at the present moment, simply because we have so little experience with events that could give us insights into what the consequences of a break in the nuclear taboo would be.

[11]

Unless reducing the probability of any kind of nuclear war is viewed as the best available means for preventing or preparing for the worst-case outcome, which is what I argue for in this essay.

[12]

I put this in quotation marks because the lines are copied directly from this investigative report I wrote on reducing the probability of nuclear war (in other words, I’m shamelessly quoting myself here).

[13]

For a more extensive discussion and defense of the claim that language affects nuclear policy, I point the reader to the literature of “nukespeak”. I would especially recommend two articles on the topic from the 1980s: Hook 1985, “Making Nuclear Weapons Easier to Live With. The Political Role of Language in Nuclearization” (download a free version here: pdf), and Cohn 1987, “Sex and Death in the Rational World of Defense Intellectuals”.

[14]

In the case of discourses on nuclear weapons, this point has been made, for instance, by Cohn 1987. In addition, authors have argued that the ideas and discourse of the (policy) research community in the field of International Relations more broadly have a significant influence on the perceptions and actions of policymakers (e.g., Smith 2004 and his discussion of how International Relations scholars have been shaping dominant definitions of violence and, through that, have had an influence on policies to counter it).

AronM @ 2022-11-17T21:43 (+19)

Thank you for this work. I appreciate the high-level transparency throughout (e.g., what is an opinion, how many sources have been read/incorporated, reasons for assumptions, etc.)!

I have a few key (dis)agreements and considerations. Disclaimer: I work for ALLFED (Alliance to Feed the Earth in Disasters), where we look at preparedness and response to nuclear winter, among other things.

1) Opportunity Costs

I do not think that work on preventing the worst nuclear conflicts, or work on preparedness/response, has to be mutually exclusive with preventing nuclear conflict in general.

My intuition is that if you are working on preventing the worst nuclear conflicts then you (also) have to work on escalation steps. And an understanding of how wars escalate and what we can do about it seems very useful in general, no matter whether a war goes from 0 to ~10 nukes or escalates from 10 to 100 nukes. At each step we would want to intervene. I do not know how a specialization would look like that is only relevant at the 100 to 1000 nukes step. I know me not being able to imagine such a specialization is only a weak argument but I am also not aware of anyone only looking at such a niche problem.

Additionally, preparedness/response work has multiple uses. Nuclear winter is only one source of an abrupt sunlight reduction scenario (ASRS), the others being supervolcanic eruptions and asteroid impacts (though one can argue that nuclear winter is the most likely of the three). Having 'slack' in critical infrastructure (either through storage or the capacity to quickly scale up post-catastrophe) is also helpful in many scenarios. Examples: resilient communication tech is helpful if communication is disrupted, whether by war or by, say, a solar storm (the same goes for electricity and water supply). The ability to scale up food production is useful if we have Multiple Bread Basket Failures due to coinciding extreme weather events or if we have an agricultural shortfall due to a nuclear winter. In both cases we would want to know how feasible it is to quickly ramp up greenhouses (one example).

Lastly, I expect these different interventions to require different skillsets (e.g., civil engineers vs. policy scholars). (Not always; surely there will be overlap.) So the opportunity costs would be more on the funding side of the cause area, less so on the talent side.

2) Neglectedness

I agree that the cause area as a whole is neglected and share the concerns around reduced funding. But within the broader cause area of 'nuclear conflict', the tail risks and preparedness/response are even more neglected. Barely anyone is working on this, and I think it is one strength of the EA community to look into highly neglected areas and add more value per person working on the problem. I don't have numbers, but I would expect there to be at least 100 times more people working on preventing nuclear war and informing policy makers about the potential negative consequences, because, as you rightly stated, one does not need to be utilitarian, consequentialist, or longtermist to not want nukes to be used under any circumstances.

3) High uncertainty around interventions

Exactly because of the uncertainty you mentioned, I think we should not rely on a narrow set of interventions and should go broader. You can discount the likelihood, run your own numbers, and advocate your ideal funding distribution between interventions, but I think that we cannot rule out nuclear winter happening and therefore some funding should go to response.

For context: Some put the probability of nuclear war causing extinction at (only) 0.3% this century. Or here is ALLFED's cost-effectiveness model looking at 'agriculture shortfalls' and their longterm impact, making the case for the marginal dollar in this area being extremely valuable.

In general I strongly agree with your argument that more efforts should go into prevention of any kind of nuclear war. I do not agree that this should happen at the expense of other interventions (such as working on response/preparedness).

4) Premise 1 --> Civilizational Collapse (through escalation after a single nuke)

You write that a nuclear attack could cause a global conflict (agree) which could then escalate to civilizational collapse (and therefore pose an xrisk) even if no further nukes are being used (strong disagree).

I do not see a plausible pathway to that, even in an all-out physical/typical explosives kind of war (I would expect our supply chains to fail and us to run out of things to throw at each other way before civilization itself collapses). Am I missing something here?

Tongue in cheek related movie quote:

A: "Eye for an eye and the world goes blind."

B: "No it doesn't. There'll be one guy left with one eye."

But I do not think it changes much of what you write here even if you cut-out this one consideration. It is only a minor point. Not a crux. Agree on the aspect that a single nuke can cause significant escalation though.

5) Desensitizing / Language around size of events

I am also saddened to hear that someone was dismissive about an India/Pakistan nuclear exchange. I agree that that is worrisome.

I think that Nuclear Autumns (up to ~25 Tg (million tons) of soot entering the atmosphere) still pose a significant risk and could cause ~1 billion deaths through famines + cascading effects, that is, if we do not prepare. So dismissing such a scenario seems pretty bad to me.

Sarah Weiler @ 2022-11-19T10:11 (+10)

Thanks for taking the time to read through the whole thing and leaving this well-considered comment! :)

In response to your points:

1) Opportunity costs

- “I do not know how a specialization would look like that is only relevant at the 100 to 1000 nukes step. I know me not being able to imagine such a specialization is only a weak argument but I am also not aware of anyone only looking at such a niche problem.” - If this is true and if people who express concern mainly/only for the worst kinds of nuclear war are actually keen on interventions that are equally relevant for preventing any deployment of nuclear weapons, then I agree that the opportunity cost argument is largely moot. I hope your impressions of the (EA) field in this regard are more accurate than mine!

- My main concern with preparedness interventions is that they may give us a false sense of ameliorating the danger of nuclear escalation (i.e., “we’ve done all these things to prepare for nuclear winter, so now the prospect of nuclear escalation is not quite as scary and unthinkable anymore”). So I guess I’m less concerned about these interventions the more they are framed as attempts to increase general global resilience, because that seems to de-emphasize the idea that they are effective means to substantially reduce the harms incurred by nuclear escalation. Overall, this is a point that I keep debating in my own mind and where I haven’t come to a very strong conclusion yet: There is a tension in my mind between the value of system slack (which is large, imo) and the possible moral hazard of preparing for an event that we should simply never allow to occur in the first place (i.e.: preparation might reduce the urgency and fervor with which we try to prevent the bad outcome in the first place).

- I mostly disagree on the point about skillsets: I think both intervention targets (focus on tail risks vs. preventing any nuclear deployment) are big enough to require input from people with very diverse skillsets, so I think it will be relatively rare for a person to be able to only meaningfully contribute to either of the two. In particular, I believe that both problems are in need of policy scholars, activists, and policymakers and a focus on the preparation side might lead people in those fields to focus less on the preventing any kind of nuclear deployment goal.

2) Neglectedness:

- I think you’re empirically right about the relative neglectedness of tail-ends & preparedness within the nuclear risk field.

- (I’d argue that this becomes less pronounced as you look at neglectedness not just as “number of people-hours” or “amount of money” dedicated to a problem, but also factor in how capable those people are and how effectively the money is spent (I believe that epistemically rigorous work on nuclear issues is severely neglected and I have the hope that EA engagement in the field could help ameliorate that).)

- That said, I must admit that the matter of neglectedness is a very small factor in convincing me of my stance on the prioritization question here. As explained in the post, I think that a focus on the tail risks and/or on preparedness is plausibly net negative because of the intractability of working on them and because of the plausible adverse consequences. In that sense, I am glad that those two are neglected and my post is a plea for keeping things that way.

3) High uncertainty around interventions: Similar thoughts to those expressed above. I have an unresolved tension in my mind when it comes to the value of preparedness interventions. I’m sympathetic to the case you’re making (heck, I even advocated (as a co-author) for general resilience interventions in a different post a few months ago); but, at the moment, I’m not exactly sure I know how to square that sympathy with the concerns I simultaneously have about preparedness rhetoric and action (at least in the nuclear risk field, where the danger of such rhetoric being misused seems particularly acute, given vested interests in maintaining the system and status-quo).

4) Civilizational Collapse:

- My claim about civilization collapse in the absence of the deployment of multiple nukes is based on the belief that civilizations can collapse for reasons other than weapons-induced physical destruction.

- Some half-baked, very fuzzy ideas of how this could happen are: destruction of communities’ social fabric and breakdown of governance regimes; economic damage, breakdown of trade and financial systems, and attendant social and political consequences; cyber warfare, and attendant social, economic, and political consequences.

- I have not spent much time trying to map out the pathways to civilizational collapse and it could be that such a scenario is much less conceivable than I currently imagine. I think I’m currently working on the heuristic that societies and societal functioning are hyper-complex and that I have little ability to actually imagine how big disruptions (like a nuclear conflict) would affect them, which is why I shouldn’t rule out the chance that such disruptions cascade into collapse (through chains of events that I cannot anticipate now).

- (While writing this response, I just found myself staring at the screen for a solid 5 minutes and wondering whether using this heuristic is bad reasoning or a sound approach on my part; I lean towards the latter, but might come back to edit this comment if, upon reflection, I decide it’s actually more the former)

Denkenberger @ 2022-11-19T22:06 (+5)

On moral hazard, I did some analysis in a journal article of ours:

"Moral hazard would be if awareness of a food backup plan makes nuclear war more likely or more intense. It is unlikely that, in the heat of the moment, the decision to go to nuclear war (whether accidental, inadvertent, or intentional) would give much consideration to the nontarget countries. However, awareness of a backup plan could result in increased arsenals relative to business as usual, as awareness of the threat of nuclear winter likely contributed to the reduction in arsenals [74]. Mikhail Gorbachev stated that a reason for reducing the nuclear arsenal of the USSR was the studies predicting nuclear winter and therefore destruction outside of the target countries [75]. One can look at how much nuclear arsenals changed while the Cold War was still in effect (after the Cold War, reduced tensions were probably the main reason for reduction in stockpiles). This was ~20% [76]. The perceived consequences of nuclear war changed from hundreds of millions of dead to billions of dead, so roughly an order of magnitude. The reduction in damage from reducing the number of warheads by 20% is significantly lower than 20% because of marginal nuclear weapons targeting lower population and fuel loading density areas. Therefore, the reduction in impact might have been around 10%. Therefore, with an increase in damage with the perception of nuclear winter of approximately 1000% and a reduction in the damage potential due to a smaller arsenal of 10%, the elasticity would be roughly 0.01. Therefore, the moral hazard term of loss in net effectiveness of the interventions would be 1%."

Also, as Aron pointed out, resilience protects against other catastrophes, such as supervolcanic eruptions and asteroid/comet impacts. Similarly, there is some evidence that people drive less safely if they are wearing a seatbelt, but overall we are better off with a seatbelt. So I don't think moral hazard is a significant argument against resilience.
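For readers who want to retrace the arithmetic in the quoted passage, here is a minimal sketch. The two input figures come from the quote; the variable names are illustrative, and the last step (applying the elasticity to a 100% reduction in perceived damage) is one reading of how the 1% figure arises, not something the quote states explicitly.

```python
# Figures taken from the quoted passage; variable names are illustrative only.
perceived_damage_increase = 10.0    # "approximately 1000%" rise in perceived damage
damage_potential_reduction = 0.10   # "around 10%" lower damage potential from a smaller arsenal

# Elasticity: relative change in arsenal damage potential per relative
# change in perceived damage.
elasticity = damage_potential_reduction / perceived_damage_increase

# ASSUMPTION (not stated in the quote): a backup plan lowers the perceived
# damage of nuclear war by at most 100%.
assumed_perceived_damage_reduction = 1.0
moral_hazard_loss = elasticity * assumed_perceived_damage_reduction

print(f"elasticity: about {elasticity:.2f}")
print(f"moral hazard loss: about {moral_hazard_loss:.0%}")
```

The sketch only checks that the numbers are internally consistent; it says nothing about whether the inputs themselves are well founded.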

I think direct cost-effectiveness analyses like this journal article are more robust, especially for interventions, than Importance, Neglectedness and Tractability. But it is interesting to think about tractability separately. It is true that there is a lot of uncertainty of what the environment would be like post catastrophe. However, we have calculated that resilient foods would greatly improve the situation with and without global food trade, so I think they are a robust intervention. Also, I think if you look at the state of resilience to nuclear winter pre-2014, it was basically to store up more food, which would cost tens of trillions of dollars, would not protect you right away, and if you did it fast, it would raise prices and exacerbate current malnutrition. In 2014, we estimated that resilient foods could be scaled up to feed everyone technically. And then in the last eight years, we have done research estimating that it could also be done affordably for most people. So I think there has been a lot of progress with just a few million dollars spent, indicating tractability.

"I mostly disagree on the point about skillsets: I think both intervention targets (focus on tail risks vs. preventing any nuclear deployment) are big enough to require input from people with very diverse skillsets, so I think it will be relatively rare for a person to be able to only meaningfully contribute to either of the two. In particular, I believe that both problems are in need of policy scholars, activists, and policymakers and a focus on the preparation side might lead people in those fields to focus less on the preventing any kind of nuclear deployment goal."

I think that Aron was talking about prevention versus resilience. Resilience requires more engineering.

Sarah Weiler @ 2022-11-24T10:48 (+3)

Thanks for your comment and for adding to Aron’s response to my post!

Before reacting point-by-point, one more overarching warning/clarification/observation: My views on the disvalue of numerical reasoning and the use of BOTECs in deeply uncertain situations are quite unusual within the EA community (though not unheard of, see for instance this EA Forum post on "Potential downsides of using explicit probabilities" and this GiveWell blog post on "Why we can’t take expected value estimates literally (even when they’re unbiased)" which acknowledge some of the concerns that motivate my skeptical stance). I can imagine that this is a heavy crux between us and that it makes advances/convergence on more concrete questions (esp. through a forum comments discussion) rather difficult (which is not at all meant to discourage engagement or to suggest I find your comments unhelpful (quite the contrary); just noting this in an attempt to avoid us arguing past each other).

- On moral hazards:

- In general, my deep-seated worries about moral hazard and other normative adverse effects feel somewhat inaccessible to numerical/empirical reasoning (at least until we come up with much better empirical research strategies for studying complex situations). To be completely honest, I can’t really imagine arguments or evidence that would be able to substantially dissolve the worries I have. That is not because I’m consciously dogmatic and unwilling to budge from my conclusions, but rather because I don’t think we have the means to know empirically to what extent these adverse effects actually exist/occur. It thus seems that we are forced to rely on fundamental worldview-level beliefs (or intuitions) when deciding on our credences for their importance. This is a very frustrating situation, but I just don’t find attempts to escape it (through relatively arbitrary BOTECs or plausibility arguments) in any sense convincing; they usually seem to me to be trying to come up with elaborate cognitive schemes to defuse a level of deep empirical uncertainty that simply cannot be defused (given the structure of the world and the research methods we know of).

- To illustrate my thinking, here’s my response to your example:

- I don’t think that we really know anything about the moral hazard effects that interventions to prepare for nuclear winter would have had on nuclear policy and outcomes in the Cold War era.

- I don’t think we have a sufficiently strong reason to assign the 20% reduction in nuclear weapons to the difference in perceived costs of nuclear escalation after research on nuclear winter surfaced.

- I don’t think we have any defensible basis for making a guess about how this reduction in weapons stocks would have been different had there been efforts to prepare for nuclear winter in the 1980s.

- I don’t think it is legitimate to simply claim that fear of nuclear-winter-type events has no plausible effect on decision-making in crisis situations (either consciously or sub-consciously, through normative effects such as those of the nuclear taboo). At the same time, I don’t think we have a defensible basis for guessing the expected strength of this effect of fear (or “taking expected costs seriously”) on decision-making, nor for expected changes in the level of fear given interventions to prepare for the worst case.

- In short, I don’t think it is anywhere close to feasible or useful to attempt to calculate “the moral hazard term of loss in net effectiveness of the [nuclear winter preparation] interventions”.

- On the cost-benefit analysis and tractability of food resilience interventions:

- As a general reaction, I’m quite wary of cost-effectiveness analyses for interventions into complex systems. That is because such analyses require that we identify all relevant consequences (and assign value and probability estimates to each), which I believe is extremely hard once you take indirect/second-order effects seriously. (In addition, I’m worried that cost-effectiveness analyses distract analysts and readers from the difficult task of mapping out consequences comprehensively, instead focusing their attention on the quantification of a narrow set of direct consequences.)

- That said, I think there sometimes is informational value in cost-effectiveness analyses in such situations, if their results are very stark and robust to changes in the numbers used. I think the article you link is an example of such a case, and accept this as an argument in favor of food resilience interventions.

- I also accept your case for the tractability of food resilience interventions (in the US) as sound.

- As far as the core argument in my post is concerned, my concern is that the majority of post-nuclear war conditions gets ignored in your response. I.e., if we have sound reasons to think that we can cost-effectively/tractably prepare for post-nuclear war food shortages but don’t have good reasons to think that we know how to cost-effectively/tractably prepare for most of the other plausible consequences of nuclear deployment (many of which we might have thus far failed to identify in the first place), then I would still argue that the tractability of preparing for a post-nuclear war world is concerningly low. I would thus continue to maintain that preventing nuclear deployment should be the primary priority (in other words: your arguments in favor of preparation interventions don’t address the challenge of preparing for the full range of possible consequences, which is why I still think avoiding the consequences ought to be the first priority).

Vasco Grilo @ 2024-04-04T15:33 (+2)

Thanks for the detailed comment, Aron!

2) Neglectedness

"I agree that the cause area as a whole is neglected and share the concerns around reduced funding. But within the broader cause area of 'nuclear conflict' the tail-risks and the preparedness/response are even more neglected. Barely anyone is working on this, and I think it is one strength of the EA community to look into highly neglected areas and add more value per person working on the problem. I don't have numbers, but I would expect there to be at least 100 times more people working on preventing nuclear war and informing policy makers about the potential negative consequences because, as you rightly stated, one does not need to be utilitarian, consequentialist, or longtermist to not want nukes to be used under any circumstances."

I think nuclear tail risks may be fairly neglected because their higher severity may be more than outweighed by their lower likelihood. To illustrate, in the context of conventional wars:

- Deaths follow a power law whose tail index is “1.35 to 1.74, with a mean of 1.60”. So the probability density function (PDF) of the deaths is proportional to “deaths”^-2.6 (= “deaths”^-(“tail index” + 1)), which means a conventional war exactly 10 times as deadly is 0.251 % (= 10^-2.6) as likely[1].

- As a result, the expected value density of the deaths ("PDF of the deaths"*"deaths") is proportional to “deaths”^-1.6 (= “deaths”^-2.6*“deaths”).

- I think spending by war severity should a priori be proportional to expected deaths, i.e. to “deaths”^-1.6. If so, spending to save lives in wars exactly 1 k times as deadly should be 0.00158 % (= (10^3)^(-1.6)) as high.
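The percentages in the three bullets above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the tail index of 1.60 is the mean quoted above, and the function names are hypothetical helpers rather than part of any library.

```python
# Illustrative check of the power-law arithmetic above.
TAIL_INDEX = 1.60  # mean tail index quoted in the comment


def relative_pdf(severity_ratio: float, tail_index: float = TAIL_INDEX) -> float:
    """Relative likelihood of a war exactly `severity_ratio` times as deadly."""
    return severity_ratio ** -(tail_index + 1)


def relative_tail(severity_ratio: float, tail_index: float = TAIL_INDEX) -> float:
    """Relative likelihood of a war at least `severity_ratio` times as deadly."""
    return severity_ratio ** -tail_index


def relative_expected_deaths(severity_ratio: float, tail_index: float = TAIL_INDEX) -> float:
    """Relative expected-value density: the PDF multiplied by the deaths."""
    return relative_pdf(severity_ratio, tail_index) * severity_ratio


if __name__ == "__main__":
    print(f"{relative_pdf(10):.3%}")                # 0.251%
    print(f"{relative_tail(10):.2%}")               # 2.51%
    print(f"{relative_expected_deaths(1000):.5%}")  # 0.00158%
```

Changing `TAIL_INDEX` to 1.35 or 1.74 (the ends of the quoted range) shows how sensitive the suggested spending ratios are to that single parameter.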

Nuclear wars arguably scale much faster than conventional ones (i.e. have a lower tail index), so I guess spending on nuclear wars involving 1 k nuclear detonations should be higher than 0.00158 % of the spending on ones involving a single detonation. However, it is not obvious to me whether it should be higher than e.g. 1 % (respecting the multiplier you mentioned of 100). I estimated that the expected value densities of the 90th, 99th and 99.9th percentile famine deaths due to the climatic effects of a large nuclear war are 17.0 %, 2.19 %, and 0.309 % of that of the median deaths, which suggests spending on the 90th, 99th and 99.9th percentile large nuclear war should be 17.0 %, 2.19 %, and 0.309 % of that on the median large nuclear war.

[1] Note the tail distribution is proportional to "deaths"^-1.6 (= "deaths"^-"tail index"), so a conventional war at least 10 times as deadly is 2.51 % (= 10^-1.6) as likely.

Oscar Delaney @ 2022-11-19T04:15 (+9)

Thanks Sarah, important issues. I basically agree with Aron's comment and am interested in your thoughts on the various points raised there.

Like Aron, I think the route from a single nuclear deployment to an existential catastrophe runs mainly through nuclear escalation. I think you are arguing for a more expansive conclusion, but the smaller claim that the best way to prevent a large nuclear exchange is to prevent the first deployment seems very defensible to me, and is still big if true. I don't have the necessary object-level knowledge to judge how true it is. So I agree with P1.

For P2, I agree with Aron that in general being more uncertain leads to a more diversified portfolio of interventions being optimal. I think while the point you raise - the future of complex systems like global geopolitics is hard to predict - is pretty obviously true, I resist the next step you take to say that using probabilities is useless/bad. Not to the same degree perhaps, but predicting whether a startup will succeed is very complex and difficult: it involves the personalities and dynamics of the founders and employees, the possibility of unforeseen technological breakthroughs, the performance of competitors including competitors that don't yet exist, the wider economy and indeed geopolitical and trade relationships. And yet people make these predictions all the time, and some venture capitalists make more money than others and I think it is reasonable to believe we can make more and less informed guesses. Likewise with your questions, I think some probabilities we could use are more wrong than others. So I think like in any other domain, we make a best guess, think about how uncertain we are and use that to inform whether to spend more time thinking about it to narrow our uncertainty. My guess is you believe after making our initial probability guess based mainly on intuition it is hard to make progress and narrow our uncertainty. I think this could well be true. I suppose I just don't see a better competing method of making decisions that avoids assigning probabilities to theory questions. My claim is that people/institutions that foreswear the use of probabilities will do systematically worse than those that try their best to predict the future, acknowledging the inherent difficulty of the endeavour.

Plausibly we can reformulate this premise as claiming that preventing the first nuclear deployment is more tractable because preventing escalation has more unknowns. I would be on board with this. I think it is mainly the anti-probability stance I am pushing back against.

For P3, I share the intuition that being cavalier about the deaths of millions of people seems bad. I think as a minimum it is bad optics, and quite possibly more (corrosive to our empathy, and maybe empathy is useful as altruists?). It seems trivially true that taking resources that would be used to try to prevent a first deployment and incinerating those resources is negative EV (ie "de-emphasizing the goal ... has adverse effects"). It is a much stronger, more controversial claim that moving resources from escalation prevention to first-use prevention is good, and I feel ill-equipped to assess this claim.

Also, as a general point, I think these three premises are not actually premises in a unified logical structure (ie 'all forum posts are in English, this is a forum post, therefore it is in English') but rather quite independent arguments pointing to the same conclusion. Which is fine, just misleading to call them each premises in the one argument I think. Finally, I am not that sure of your internal history but one worry would be if you decided long ago intuitively based on the cultural milieu that the right answer is 'the best intervention in nuclear policy is to try to prevent first use' and then subconsciously sought out supporting arguments. I am not saying this is what happened or that you are any more guilty of this than me or anyone else, just that it is something I and we all should be wary of.

Sarah Weiler @ 2022-11-20T19:50 (+2)

Thanks for going through the "premises" and leaving your comments on each - very helpful for myself to further clarify and reflect upon my thoughts!

On P1 (that nuclear escalation is the main or only path to existential catastrophe):

- Yes, I do argue for the larger claim that a one-time deployment of nuclear weapons could be the start of a development that ends in existential catastrophe even if there is no nuclear escalation.

- I give a partial justification of that in the post and in my comment to Aron,

- but I accept that it's not completely illegitimate for people to continue to disagree with me; opinions on a question like this rest on quite foundational beliefs, intuitions, and heuristics, and two reasonable people can, imo, have different sets of these.

- (Would love to get into a more in-depth conversation on this question at some point though, so I'd suggest putting it on the agenda for the next time we happen to see each other in-person :)!)

On P2:

- Your suggested reformulation ("preventing the first nuclear deployment is more tractable because preventing escalation has more unknowns") is pretty much in line with what I meant this premise/proposition to say in the context of my overall argument. So, on a high-level, this doesn't seem like a crux that would lead the two of us to take a differing stance on my overall conclusion.

- You're right that I'm not very enthusiastic about the idea of putting actual probabilities on any of the event categories I mention in the post (event categories: possible consequences of a one-time deployment of nukes; conceivable effects of different types of interventions). We're not even close to sure that we/I have succeeded in identifying the range of possible consequences (pathways to existential catastrophe) and effects (of interventions), and those consequences and effects that I did identify aren't very specific or well-defined; both of these seem like prudent steps to precede the assignment of probabilities. I realize while writing that you will probably just once again disagree with that leap I made (from deep uncertainty to rejecting probability assignment), and that I'm not doing much to advance our discussion here. Apologies! On your specific points: correct, I don't think we can advance much beyond an intuitive, extremely uncertain assignment of probabilities; I think that the alternative (whose existence you deny) is to acknowledge our lack of reasonable certainty about these probabilities and to make decisions in the awareness that there are these unknowns (in our model of the world); and I (unsurprisingly) disagree that institutions or people that choose this alternative will do systematically worse than those that always assign probabilities.

- (I don't think the start-up analogy is a good one in this context, since venture capitalists get to make many bets and they receive reliable and repeated feedback on their bets. Neither of these seem particularly true in the nuclear risk field (whether we're talking about assigning probabilities to the consequences of nuclear weapons deployment or about the effects of interventions to reduce escalation risk / prepare for a post-nuclear war world).)

On P3: Thanks for flagging that, even after reading my post, you feel ill-equipped to assess my claim regarding the value of interventions for preventing first-use vs. interventions for preventing further escalation. Enabling readers to navigate, understand and form an opinion on claims like that one was one of the core goals that I started this summer's research fellowship with; I shall reflect on whether this post could have been different, or whether there could have been a complementary post, to better achieve this enabling function!

On P4: Haha yes, I see this now, thanks for pointing it out! I'm wondering whether renaming them "propositions" or "claims" would be more appropriate?

Oscar Delaney @ 2022-11-21T11:58 (+3)

Thanks!

Fair enough re the disanalogy between investing and nuclear predictions. My strong suspicion (from talking to some of them + reading write-ups + vibes) is that the people with the best Brier scores in various forecasting competitions use probabilities plenty, and probably more than the mediocre forecasters who just intuit a final number. But then I think you could reasonably respond that this is a selection effect that of course the people who love probabilities will spend lots of time forecasting and rise to the top.

I wonder what adversarial collaboration would resolve this probabilities issue? Perhaps something like: get a random group of a few hundred people and assign one group some calibration training and probability theory and practice and so forth a bit like what Nuno gave us, and assign the other group a curriculum of your choice that doesn't emphasise probabilities. Then get them all to make a bunch of forecasts, and a year or however long later see which group did better. This already seems skewed in my favour though as the forecasts are just probabilities. I am struggling to think of a way to test predictive skill nicely without using probability forecasts though.
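As a rough illustration of how "which group did better" could be scored in such an experiment, here is a minimal sketch of the Brier score (the mean squared difference between forecast probabilities and what actually happened; lower is better). The forecasts and outcomes below are made up for illustration, and the group names are hypothetical.

```python
from typing import Sequence


def brier_score(forecasts: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared difference between forecast probabilities and 0/1 outcomes.

    Lower is better; always answering 0.5 scores 0.25.
    """
    if len(forecasts) != len(outcomes) or len(forecasts) == 0:
        raise ValueError("need equally many forecasts and outcomes, at least one")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Made-up data: how four yes/no questions resolved (1 = yes, 0 = no).
outcomes = [1, 0, 0, 1]
group_a = [0.8, 0.1, 0.3, 0.6]  # hypothetical forecasts from one group
group_b = [0.5, 0.5, 0.5, 0.5]  # a group that always answers 50%

print(brier_score(group_a, outcomes))
print(brier_score(group_b, outcomes))
```

Note that this scoring rule presupposes probability forecasts on well-defined, resolvable questions, which is exactly the skew mentioned above.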

Re your goals for the summer project, oh no that certainly wasn't the vibe I was intending to convey. I think this post succeeded in making me understand some of the issues at stake here better, and get a sense for some of the arguments and tensions. I think if after reading a 3000-word article with minimal background knowledge I had come to form strong and well-founded inside takes on (as you say) a very complex issue like nuclear policy, this would be remarkable! I don't think it is a failing of you or the essay at all that this isn't (very much) the case. To have good inside views I think I would need to at least be familiar with the various nuclear research/policy agendas out there, and how they fit into the preventing first use/preventing 100-->1000 escalation, and how tractable they each seem.

I think I still like calling them each 'arguments' but I think anything is probably better than 'premise' given the very special meaning of that word in logic.

Sarah Weiler @ 2022-11-23T20:33 (+2)

Ah, I think maybe there is/was a misunderstanding here. I don't reject the claim that the forecasters are (much) better on average when using probabilities than when refusing to do so. I think my point here is that the questions we're talking about (what would be the full set of important* consequences of nuclear first-use or the full set of important* consequences of nuclear risk reduction interventions X and Z) are not your standard, well-defined and soon-to-be-resolved forecasting questions. So in a sense, the very fact that the questions at issue cannot be part of a forecasting experiment is one of the main reasons for why I think they are so deeply uncertain and hard to answer with more than intuitive guesswork (if they could be part of a forecasting experiment, people could test and train their skills at assigning probabilities by answering many such questions, in which case I guess I would be more amenable to the claim that assigning probabilities can be useful). The way I understood our disagreement, it was not about the predictive performance of actors who do vs don't (always) use probabilities, but rather about their decision quality. I think the actual disagreement may be that I think that there is a significant difference (for some decisions, high decision quality is not a neat function of explicit predictive ability), whereas you might be close to equating the two?

[*by "full set" I mean that this is supposed to include indirect/second-order consequences]

That said, I can't, unfortunately, think of any alternative ways to resolve the disagreement regarding the decision quality of people using vs. refusing to use probabilities in situations where assessing the effects of a decision/action after the fact is highly difficult... (While the comment added by Noah Scales contains some interesting ideas, I don't think it does anything to resolve this stalemate, since it is also focused on comparing & assessing predictive success for questions with a small set of known answer options)

One other thing, because I forgot about that in my last response:

"FInally, I am not that sure of your internal history but one worry would be if you decided long ago intuitively based on the cultural milieu that the right answer is 'the best intervention in nuclear policy is to try to prevent first use' and then subconsciously sought out supporting arguments. I am not saying this is what happened or that you are any more guilty of this than me or anyone else, just that it is something I and we all should be wary of."

-> I think this is a super important point, actually, and agree that it's a concern that should be kept in mind when reading my essay on this topic. I did have the intuitive aversion against focusing on tail end risks before I came up with all the supporting arguments; basically, this post came about as a result of me asking myself "Why do I think it's such a horrible idea to focus on the prevention of and preparation for the worst case of a nuclear confrontation?" I added a footnote to be more transparent about this towards the beginning of the post (fn. 2). Thanks for raising it!

Noah Scales @ 2022-12-02T06:50 (+1)

Sarah, you wrote:

(While the comment added by Noah Scales contains some interesting ideas, I don't think it does anything to resolve this stalemate, since it is also focused on comparing & assessing predictive success for questions with a small set of known answer options)

Yes, that's right that my suggestions let you assess predictive success, in some cases, for example, over a set of futures partitioning a space of possibilities. Since the futures partition the space, one of them will occur, the rest will not. A yes/no forecast works this way.

Actually, if you have any question about a future at a specific time about which you feel uncertain, you can phrase it as a yes/no question. You then partition the space of possibilities at that future time. Now you can answer the question, and test your predictive success. Whether your answer has any value is the concern.

However, one option I mentioned is to list contingencies that, if present, result in contingent situations (futures). That is not the same as predicting the future, since the contingencies don't have to be present or identified (EDIT: in the real world, ie as facts), and you do not expect their contingent futures otherwise.

If condition X, then important future Y happens.

Condition X could be present now or later, but I can't identify or infer its presence now.

Deep uncertainty is usually taken as responding to those contingent situations as meaningful anyway. As someone without predictive information, you can only start offering models, like:

If X, then Y

If Y, then Z

If W and G, then B

If B, then C

A

T

I'm worried that A because ...

You can talk about scenarios, but you don't know or haven't seen their predictive indicators.

You can discuss contingent situations, but you can't claim that they will occur.
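As a hedged illustration of the contingency model above (not a method proposed in this thread), the rules can be written down and chained forward from whatever facts are actually known. The letters are taken from the list above; the function name `forward_chain` and the rule encoding are assumptions made for this sketch. It shows that, with only A and T known, none of the contingent futures follows.

```python
def forward_chain(facts, rules):
    """Return every statement that follows from `facts` under `rules`.

    `rules` is a list of (conditions, consequence) pairs, read as
    "if all conditions hold, then the consequence holds".
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, consequence in rules:
            # Fire a rule only when all of its conditions are already known.
            if consequence not in known and set(conditions) <= known:
                known.add(consequence)
                changed = True
    return known


# The contingencies listed above, encoded as rules (hypothetical encoding).
RULES = [
    (("X",), "Y"),      # If X, then Y
    (("Y",), "Z"),      # If Y, then Z
    (("W", "G"), "B"),  # If W and G, then B
    (("B",), "C"),      # If B, then C
]

# Only A and T are known: no contingent future can be derived.
print(forward_chain({"A", "T"}, RULES))

# If condition X were identified, Y and Z would follow.
print(forward_chain({"A", "T", "X"}, RULES))
```

The point of the sketch is only that the rules stay meaningful as a model even when their conditions cannot be identified in the real world.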

You can still work to prevent those contingent situations, and that seems to be your intention in your area of research. For example, you can work to prevent current condition "A", whatever that is. Nuclear proliferation, maybe, or deployment of battlefield nukes. Nice!

You are not asking the question, "What will the future be?" without any idea of what some scenarios of the future depend on. After all, if the future is a nuclear holocaust, you can backtrack to at least some earlier point in time, for example, far enough to determine that nuclear weapons were detonated prior to the holocaust, and further to someone or something detonating them, and then maybe further to who had them, or why they detonated them, or that might be where gaps in knowledge appear.

Oscar Delaney @ 2022-11-24T00:35 (+1)

Yes, I think this captures our difference in views pretty well: I do indeed think predictive accuracy is very valuable for decision quality. Of course, there are other skills/attributes that are also useful for making good decisions. Predicting the future seems pretty key though.

Noah Scales @ 2022-11-23T10:36 (+1)

You wrote:

"I am struggling to think of a way to test predictive skill nicely without using probability forecasts though."

The list of forecasting methods that do not rely on subjective or unverifiable probability estimates includes:

- in response to a yes/no forecasting question, given what you believe now about the current situation and what it will cause, answer with whether the questioned future scenario is what the current situation leads to.

- in response to a multiple option (mutually exclusive choice) forecasting question, given what you believe now about the current situation and what it could cause, answer what alternative future scenarios the current situation could lead to.

- in response to any forecasting question, if you lack sufficient knowledge of the facts of the current situation, then list the contingencies and contingent situations that you would match to better knowledge of the facts in order to determine the future.

- in response to any forecasting question, if you lack sufficient knowledge of causal relations that lead to a scenario, backtrack necessary (and together sufficient) causes from the scenario (one of the alternative forecast answers) to as close to the present as you can. From there, decide whether the causal gap between the present situation and that near-future situation (backtracked from the forecast scenario) is plausible to causally bridge. In other words, is that near-future situation causally possible? If you don't believe so, reject the scenario in your forecast. If you do believe so, you have a starting point for research to close the information gap corresponding to the causal gap.

The list of forecasting methods that do not provide a final forecast probability includes:

- for a yes/no forecast, use whatever probability methods to reach a conclusion however you do. Then establish a probability floor below which the answer is "no", a ceiling above which the answer is "yes", and a range between the floor and ceiling an answer of "maybe". Work to reduce your uncertainty enough to move your forecast probability below or above the maybe zone.

- for an alternatives forecast, use whatever probability methods to reach a conclusion however you do. Then establish a floor of probability above which an option is selected. List all options above the floor as chosen alternatives (for example, "I forecast scenario A or B but not C or D."). Work to reduce your uncertainty enough to concentrate forecast probability within fewer options (for example, "I forecast scenario B only, not A or C or D.").
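The two threshold methods above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the function names and the default floor and ceiling values (0.3, 0.7, 0.25) are hypothetical choices for illustration and do not come from the comment.

```python
def yes_no_forecast(p, floor=0.3, ceiling=0.7):
    """Turn a probability into 'no', 'maybe', or 'yes'.

    Below the floor the answer is 'no', above the ceiling it is 'yes',
    and anything in between is 'maybe'. The thresholds are assumptions.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    if floor > ceiling:
        raise ValueError("floor must not exceed ceiling")
    if p < floor:
        return "no"
    if p > ceiling:
        return "yes"
    return "maybe"


def alternatives_forecast(probabilities, floor=0.25):
    """Return the options whose probability lies above the floor, sorted by name."""
    return sorted(name for name, p in probabilities.items() if p > floor)


print(yes_no_forecast(0.1))   # no
print(yes_no_forecast(0.5))   # maybe
print(yes_no_forecast(0.9))   # yes

# "I forecast scenario A or B but not C or D."
print(alternatives_forecast({"A": 0.45, "B": 0.40, "C": 0.10, "D": 0.05}))
```

Reducing uncertainty then corresponds to moving the probability out of the "maybe" zone, or to concentrating probability so that fewer options remain above the floor.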

The definition of scenario in the domain of forecasting (as I use it here) includes:

- an option among several that answer a forecast multiple-choice (single-answer) question.

- a proposed future about which a yes/no forecast is made.

Christopher Chan @ 2023-11-21T10:27 (+4)

Hi Sarah,

Many thanks for pointing me to this. I had a brief look at the content and the comments, but not yet at the preceding and successive posts. While I generally remain in agreement with the ALLFED team on the neglectedness of the tail end, and on how solving this also solves for other naturally occurring ASRS scenarios (albeit with lower probabilities), your argument reminds me of a perspective in animal welfare. If we improve the current condition of the billions of suffering animals, we have more of an excuse to slaughter them, in turn, empowering the meat companies, and thus it impedes our transition towards a cruelty-free world.

Now I don't think I have anything to add that was not covered by the others a year ago, but I want to take this opportunity to steelman your case: If the lower neglectedness and higher tractability of civil movement / policy in denuclearisation to less than 300 nuclear weapons (approximate number for not causing a nuclear winter) > higher neglectedness and lower tractability of physical intervention (resilience food and supply chain resilience plan), you might be correct!

The tractability can be assessed by looking at the civilian organisations (in and out of EA) that have been working on this and their success rate in terms of reduced stockpile per dollar spent.

But note that at least half of the nuclear weapons deployed are in the hands of authoritarian countries [Russia: 3859, China: 410, North Korea: 30], which do not have a good track record of listening to civil society. While you could argue that Russia drastically reduced its stockpile at the end of the cold war, many non-aligned countries [non-NATO, non-Russia bloc] have only increased their stockpiles in absolute terms. I suspect with low confidence that reducing stockpiles by a lot is tractable but that complete denuclearisation, squeezing out the last 10%, is an extremely hard up-hill battle if not impossible, as countries continue to look out for their interests. There has been a lot of recent talk that, in hindsight, Ukraine should not have given up its nuclear weapons, and Russia just lifted its ban on testing them.

Interested in your thoughts here :)

Chris

Sarah Weiler @ 2023-11-24T08:13 (+8)

Thanks a lot for taking the time to read & comment, Chris!

Main points

I want to take this opportunity to steelman your case: If the lower neglectedness and higher tractability of civil movement / policy in denuclearisation to less than 300 nuclear weapons (approximate number for not causing a nuclear winter) > higher neglectedness and lower tractability of physical intervention (resilience food and supply chain resilience plan), you might be correct!

I (honestly!) appreciate your willingness to steelman a case that (somewhat?) challenges your own views. However, I think I don't endorse/buy the steelmanned argument as you put it here. It seems to me like the kind of simplified evaluation/description that I don't think is very well-suited for assessing strategies to tackle societal problems. More specifically, I think the simple argument/relation you outline wrongfully ignores second- and third-order effects (incl. the adverse effects outlined in the post), which I believe are both extremely important and hard to simplify/formalize.

In a similar vein, I worry about the simplification in your comment on assessing the tractability of denuclearization efforts. I don't think it's appropriate to assess the impact of prior denuclearization efforts based simply on observed correlations, for two main reasons: first, there are numerous relevant factors aside from civil society's denuclearization efforts, and the evidence we have access to has a fairly small sample size of observations that are not independent from each other, which means that identifying causal impact reliably is challenging if not impossible. Second, this is likely a "threshold phenomenon" (not sure what the official term would be), where observable cause-and-effect relations are not linear but occur in jumps; in other words, it seems likely here that civil society activism needs to build up to a certain level to result in clearly visible effects in terms of denuclearization (and the level of movement mobilisation required at any given time in history depends on a number of other circumstances, such as geopolitical and economic events). I don't think that civil society activism for denuclearization is meaningless as long as it remains below that level, because I think it potentially has beneficial side-effects (on norms, culture, nuclear doctrine, decision-makers' inhibitions against nuclear use, etc) and because we will never get above the threshold level if we consider efforts below the level to be pointless and not worth pursuing; but I do think that its visible effects as revealed by the evidence may well appear meaningless because of this non-linear nature of the causal relationship.

squeezing out the last 10%, is an extremely hard up-hill battle if not impossible, as countries continue to look out for their interests

I completely agree that denuclearization is an extremely hard up-hill battle, and I would argue that this is true even before the last 10% are reached. But I don't think we have the evidence to say that it's an impossible battle, and since I'm not convinced by the alternatives on offer (interventions "to the right of boom", or simple nihilism/giving up/focusing on other things), I think it's worthwhile - vital, actually - to keep fighting that uphill battle.

Some further side-notes

But note that at least half of the nuclear weapons deployed are in the hands of authoritarian countries [Russia: 3859, China: 410, North Korea: 30], which do not have a good track record of listening to civil society. While you could argue that Russia drastically reduced its stockpile at the end of the cold war, many non-aligned countries [non-NATO, non-Russia bloc] have only increased their stockpiles in absolute terms.

A short comment on the point about authoritarian states: At least for Russia and China, I think civil society/public opinion is far from unimportant (dictators tend to care about public approval at least to some extent) but agree that it's a different situation from liberal democracies, which means that assessing the potential for denuclearization advocacy would require separate considerations for the different settings. On a different note, I think there is at least some case for claiming that changing attitudes/posture in the US has some influence on possibly shifting attitudes and debate in other nuclear-weapons states, especially when considered over a longer timeframe (just as examples: there are arguments that Putin's current bellicosity is partially informed by continued US hostility and militarism esp. during the 2000s; and China justifies its arms build-up mainly by arguing that the huge gap between its arsenal and that of the US is unacceptable). All of this would require a much larger discussion (which would probably lead to no clear conclusion, because uncertainty is immense), so I wouldn't be surprised nor blame you if the snippets of an argument presented above don't change your mind on this particular point ^^

(And a side-note to the side-note: I think it's worth pointing out that the biggest reduction in Russia's stockpiles occurred before the end of the cold war, when the Soviet Union still seriously considered itself a superpower.)

Your argument reminds me of a perspective in animal welfare. If we improve the current condition of the billions of suffering animals, we have more of an excuse to slaughter them, in turn, empowering the meat companies, and thus it impedes our transition towards a cruelty-free world.

The analogy makes sense to me, since both some of my claims and the animal advocates' claim you mention seem to fall into the moral hazards category. Without having looked closely at the animal case, I don't think I strongly share their concern in that case (or at least, I probably wouldn't make the tradeoff of giving up on interventions to reduce suffering).

Again, thanks a lot for your comment and thoughts! Looking forward to hearing if you have any further thoughts on the answers given above.

Vasco Grilo @ 2024-04-20T08:49 (+2)

Hi Sarah,

I have just published a post somewhat related to yours where I wonder whether saving lives in normal times is better to improve the longterm future than doing so in catastrophes.