Freddie DeBoer Is Wrong About Effective Altruism

By Bentham's Bulldog @ 2023-12-23T06:02 (+29)

Also posted on my blog.

There are some topics that cause smart people to lose their minds. I have a vivid memory of hearing someone of great intelligence, who could rattle off precise figures for the tax rates in particular years of the Reagan administration, talk about veganism. When he did, he just seemed to lose his mind; everything he said was false, and not in hard-to-figure-out ways. It was all the type of thing that about 5 seconds of critical thought would have easily disproven.

I’m reminded of this event whenever I read Freddie deBoer on effective altruism. DeBoer is a great writer and thinker; his books are great and he’s literally one of the best blog writers I’ve ever read. DeBoer’s devastating polemics are the stuff of legend: when I’m writing an article critical of something, I’ll often try to emulate him, given how sharp and clear his writing is. But when deBoer talks about effective altruism, what he says isn’t just wrong; it’s ill-thought-out and unpersuasive. It’s not just that he makes mistakes; it’s that he makes obvious errors, unbefitting a thinker of his caliber, with alarming frequency.

DeBoer has a recent article criticizing EA, describing it as a “shell game.” It begins by briefly mentioning the SBF scandal before explaining that his critique isn’t mostly about that. Still, it’s worth addressing this “objection” because it’s the most common objection to effective altruism and deBoer does make it, albeit only obliquely. If you want to read roughly 1 gazillion pages about why there is no good objection to EA based on the SBF scandal, read this excellent article by the wonderful Alex Strasser.

For those who don’t know, presumably on account of having severe amnesia or being young children, Sam Bankman-Fried committed fraud. Before this, he was touted as a major figure in EA, and he got into trying to earn lots of money in the first place in order to give it away. So maybe EA is causally responsible for SBF.

But if you look at what EAs actually recommend, they very much do not recommend defrauding lots of people in a way that totally tanks the reputation of EA and leaves lots of people short of money. If a person, in pursuit of a cause, does immoral things, that’s not a good objection to the cause. If some fraudster defrauded a bunch of people to donate to the Red Cross, even if he was touted by the Red Cross as a good person before they discovered the fraud, that wouldn’t be an objection to the Red Cross.

Maybe the claim made by critics of EA is that the SBF stuff means that EA’s net impact has been negative. It’s worth noting that even if this were true, one should still be an EA. In the Red Cross case, even if the Red Cross’s net impact were negative on account of inspiring a massive fraudster, it would still be good to donate to the Red Cross. It can be good to give money to charities saving lives at the margin even if those charities have, through no fault of their own, motivated people to do bad things.

But the bigger problem is that this claim is false. The overall impact of EA has been overwhelmingly positive. Scott Alexander lists some of the things EA has achieved:

Global Health And Development:

Saved about 200,000 lives total, mostly from malaria[1]

Treated 25 million cases of chronic parasite infection.[2]

Given 5 million people access to clean drinking water.[3]

Supported clinical trials for both the RTS,S malaria vaccine (currently approved!) and the R21/Matrix malaria vaccine (on track for approval)[4]

Supported additional research into vaccines for syphilis, malaria, helminths, and hepatitis C and E.[5]

Supported teams giving development economics advice in Ethiopia, India, Rwanda, and around the world.[6]

Animal Welfare:

Convinced farms to switch 400 million chickens from caged to cage-free.[7]

Things are now slightly better than this in some places! Source: https://www.vox.com/future-perfect/23724740/tyson-chicken-free-range-humanewashing-investigation-animal-cruelty

Freed 500,000 pigs from tiny crates where they weren’t able to move around[8]

Gotten 3,000 companies including Pepsi, Kellogg’s, CVS, and Whole Foods to commit to selling low-cruelty meat.

AI:

Developed RLHF, a technique for controlling AI output widely considered the key breakthrough behind ChatGPT.[9]

…and other major AI safety advances, including RLAIF and the foundations of AI interpretability.[10]

Founded the field of AI safety, and incubated it from nothing up to the point where Geoffrey Hinton, Yoshua Bengio, Demis Hassabis, Sam Altman, Bill Gates, and hundreds of others have endorsed it and urged policymakers to take it seriously.[11]

Helped convince OpenAI to dedicate 20% of company resources to a team working on aligning future superintelligences.

Gotten major AI companies including OpenAI to commit to the ARC Evals battery of tests to evaluate their models for dangerous behavior before releasing them.

Got two seats on the board of OpenAI, held majority control of OpenAI for one wild weekend, and still apparently might have some seats on the board of OpenAI, somehow?[12]

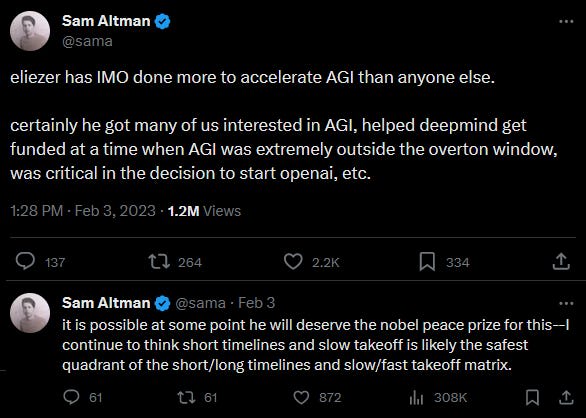

I don't exactly endorse this Tweet, but it is . . . a thing . . . someone has said.

Helped found, and continue to have majority control of, competing AI startup Anthropic, a $30 billion company widely considered the only group with technology comparable to OpenAI’s.[13]

I don't exactly endorse and so on.

Become so influential in AI-related legislation that Politico accuses effective altruists of having “[taken] over Washington” and “largely dominating the UK’s efforts to regulate advanced AI”.

Helped (probably, I have no secret knowledge) the Biden administration pass what they called “the strongest set of actions any government in the world has ever taken on AI safety, security, and trust.”

Helped the British government create its Frontier AI Taskforce.

Won the PR war: a recent poll shows that 70% of US voters believe that mitigating extinction risk from AI should be a “global priority”.

Other:

Helped organize the SecureDNA consortium, which helps DNA synthesis companies figure out what their customers are requesting and avoid accidentally selling bioweapons to terrorists.[14]

Provided a significant fraction of all funding for DC groups trying to lower the risk of nuclear war.[15]

Donated a few hundred kidneys.[16]

Sparked a renaissance in forecasting, including major roles in creating, funding, and/or staffing Metaculus, Manifold Markets, and the Forecasting Research Institute.

Donated tens of millions of dollars to pandemic preparedness causes years before COVID, and positively influenced some countries’ COVID policies.

Played a big part in creating the YIMBY movement - I’m as surprised by this one as you are, but see footnote for evidence.[17]

Scott further notes that EA, an incredibly small group, has saved as many lives as curing AIDS, stopping a 9/11 terror attack every year since its founding, and stopping all gun violence. Compared to this, SBF’s misbehavior is a drop in the bucket. If you found out that the U.S. cured AIDS, stopped another 9/11, and ended all gun violence, but that some major fraud occurred, that would be good news overall.

DeBoer quotes the Center for Effective Altruism’s definition of EA as:

both a research field, which aims to identify the world’s most pressing problems and the best solutions to them, and a practical community that aims to use those findings to do good.

This project matters because, while many attempts to do good fail, some are enormously effective. For instance, some charities help 100 or even 1,000 times as many people as others, when given the same amount of resources.

This means that by thinking carefully about the best ways to help, we can do far more to tackle the world’s biggest problems.

However, deBoer declares that this makes EA trivial. Who could object to doing good effectively? Thus, he claims, EA is just doing what everyone else does. But this is totally false. It’s true that most people (not all, mind you) who donate to charity are in favor of effectiveness. But many of them are in favor of effective charity the way that some liberal Christians are in favor of God: they think of it as a pleasant-sounding buzzword that they kind of abstractly like but do nothing about, and it isn’t a big part of their lives.

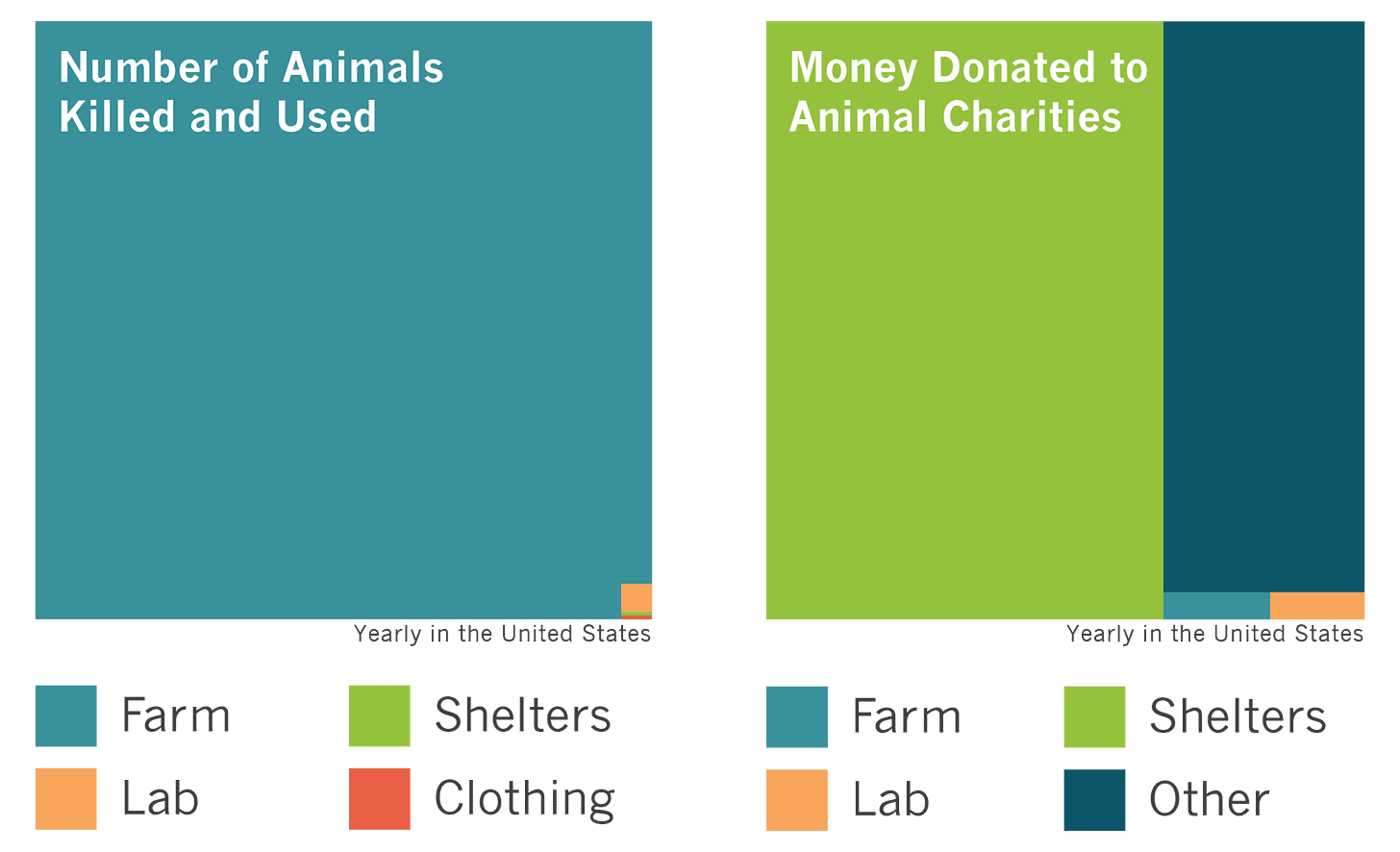

Virtually no one has spent any time looking at the most effective charities. There’s a reason that almost all money given to charities helping animals goes to the small number of animals in shelters rather than the billions being tortured in factory farms. When people donate to help animals, they give to the cute puppies they feel warmly toward because they saw ads of them looking sad, rather than trying to do good effectively.

If everyone supports effective charities, why does the Against Malaria Foundation get such a small percentage of charitable funding? Is it really plausible that huge numbers of people have looked into it and concluded that the GiveWell top charities are ineffective? EA is about not just abstractly saying that you like effectiveness but actually looking hard into which charities are effective and which careers do lots of good and then doing those things that are good. Virtually no one does either of those things!

Sufficiently confused, you naturally turn to the specifics, which are the actual program. But quickly you discover that those specifics are a series of tendentious perspectives on old questions, frequently expressed in needlessly-abstruse vocabulary and often derived from questionable philosophical reasoning that seems to delight in obscurity and novelty; the simplicity of the overall goal of the project is matched with a notoriously obscure (indeed, obscurantist) set of approaches to tackling that goal. This is why EA leads people to believe that hoarding money for interstellar colonization is more important than feeding the poor, why researching EA leads you to debates about how sentient termites are. In the past, I’ve pointed to the EA argument, which I assure you sincerely exists, that we should push all carnivorous species in the wild into extinction, in order to reduce the negative utility caused by the death of prey animals. (This would seem to require a belief that prey animals dying of disease and starvation is superior to dying from predation, but ah well.) I pick this, obviously, because it’s an idea that most people find self-evidently ludicrous; defenders of EA, in turn, criticize me for picking on it for that same reason. But those examples are essential because they demonstrate the problem with hitching a moral program to a social and intellectual culture that will inevitably reward the more extreme expressions of that culture. It’s not nut-picking if your entire project amounts to a machine for attracting nuts.

It’s true that if you hang out in EA circles you’ll disproportionately hear weird arguments about things that most normies scoff at. You’re much more likely to hear about space colonization and culling predators at an EA event than, say, at your local bar. But if you look at what EA actually does, it’s mostly just conventionally good stuff. About half of it goes to global health, while only around a third goes to stuff related to Longtermism. DeBoer paints a misleading picture in which EA talks a big game about doing good well, but is really about weird pet projects. In reality, most of what EA funds is just obviously good, while EAs are disproportionately nerds who like to talk about obscure philosophy, so they spend lots of time talking about stuff that sounds strange.

The stuff under the umbrella of Longtermism is mostly uncontroversially good. A lot of it is about making sure AI is safe and we don’t get into a nuclear war, which are both pretty popular.

It’s true that a small share of EA funding goes to weird stuff like shrimp welfare and preventing S-risks (risks of astronomical future suffering). But I think that’s a good thing! As Sam Atis notes:

And it’s true that many people, if asked, would probably agree that when trying to do good you should try to do the most good possible. But it’s insanely weird to actually put it into practice. It’s weird to donate 10% of your income to malaria charities. It’s weird to give your kidney to a stranger. It’s weird to spend a significant amount of your time thinking about how exactly you should structure your career in order to do as much good as possible. So while I’m sympathetic to the argument that the idea is banal, it’s extremely weird to actually go and do this stuff.

Couldn’t we have all the good stuff and get rid of the especially weird stuff? Why does donating 10% of your income to malaria charities have to be associated with people who take seriously the idea that we should eliminate some species of predators in the wild?

Well, if you’re weird enough to take seriously the idea that you should donate a hefty chunk of your income to help people who live on the other side of the planet, you might also be weird enough to think it’s worth considering spending a lot of time and effort trying to help wild animals or people who don’t yet exist. You don’t get the good stuff without the weird stuff.

If you’re trying to decide how to do good, and you only consider options that sound reasonable and normal to most people, you probably won't end up with the conclusion that we should donate huge amounts of our income to people abroad. You probably won’t end up with all the good things EA has done: no malaria nets, no pandemic preparedness funding, no huge campaigns to get animals out of cages. Effective Altruists are weird people doing good things, and long may they stay that way.

Furthermore, not only is it good that EAs are willing to consider weird things, it’s good that some of them are willing to do weird things. Take shrimp welfare, for example. Over 300 billion farmed shrimp, which feel pain, are killed each year. They spend their lives crowded into small tanks and get diseases constantly; breeding females often have their eyestalks cut or crushed; and they are killed painfully. I think it’s good that we have a few people (not everyone, but at least a few) thinking hard about how to keep a population of shrimp more than 35 times the human population of Earth from being so terribly and routinely mistreated.

Yes, it sounds weird. If I were dating someone and meeting her parents, I would not like to describe what I do professionally as campaigning on behalf of the shrimp. But that’s what makes EA wonderful. It scoffs at the absurdity heuristic and social desirability bias and instead pursues whatever does the most good.

To a lot of people, transgender rights and gay rights sounded weird. So did abolitionism and advocacy for the rights of women. Sometimes valuable things sound really weird because society’s moral priorities are often warped. When a group puts its reputation on the line to do good and has demonstrably saved about 200,000 lives, the correct response is to praise its willingness to be weird rather than condemn it.

DeBoer next takes a swipe at EA based on Longtermism, claiming that it’s another absurd view. DeBoer offers no argument for why Longtermism is bad, and he ignores the mountains of scholarship defending it. When our decisions can affect vast numbers of future centuries, potentially eliminating a glorious future that would contain trillions of happy people, it’s not hard to believe that we should take that into account, just as it would have been bad if people in the third century had caused human extinction for temporary benefits.

Even if you’re not on board with Longtermism, EA should still significantly impact how you live your life. You should donate to the Against Malaria Foundation even if you don’t think preventing extinction is valuable. Furthermore, even if you’re not a Longtermist, preventing everyone from dying in a nuclear war or AI cataclysm or plague more deadly than Covid is good, actually, and the fact that there are lots of smart people working on it is valuable.

DeBoer claims that EA “most often functions as a Trojan horse for utilitarianism, a hoary old moral philosophy that has received sustained and damning criticism for centuries.” But this is like claiming that soup kitchens function as a Trojan horse for socialism. What does it even mean?

Many EAs are utilitarians, but one certainly doesn’t have to be a utilitarian to be an EA. The ethics behind EA is Beneficentrist in nature: Beneficentrism is just the idea that helping others is important and that it’s better to do it more effectively rather than less. To think this, one certainly doesn’t have to believe any of the controversial things that utilitarianism implies.

DeBoer’s final point questions why one should align oneself with the movement. Why not just do charitable things effectively? This is, I think, less important than most of his critique. If you don’t call yourself an effective altruist but give 10% of your income to effective charities, take a high-impact career, are vegan, and donate a kidney, I don’t think you’re doing anything wrong. In fact, I’d consider you basically an EA in spirit, even if not in name.

The answer to deBoer’s question is that the movement is good and reflects my values. The movement is a bunch of people thinking hard and working hard to try to improve the world, and they’ve made great progress. This small group of nerds has done as much good as curing AIDS, ending gun violence, and stopping a 9/11 every year.

Whenever one aligns with a big movement, one won’t agree with everything it does. DeBoer is a socialist, yet he certainly doesn’t endorse everything that’s ever been done in the name of socialism. He calls himself a socialist because he shares the movement’s core ideas and likes a lot of what it does.

That is why I call myself an effective altruist. It’s because the movement does lots of good and I agree with most of what they do. When a small group of nerds saves 200,000 lives and contains some of the nicest, most virtuous, most charitable people working hard to save lives and improve the lives of animals being tortured on factory farms, I will always stand on their side.

JWS @ 2023-12-23T10:51 (+14)

Thanks for writing this. I was planning to, but as usual my Forum post reach exceeded my grasp. I also just found it to be a bad piece tbh, and DeBoer to be quite nasty (see Freddie's direct "response" for example).

But I think what I find most astounding about this, and about the wave of EA critiques in the wider blogosphere (inc. big media) over the last few months, is how often they make big claims that seem obviously false, or provide no evidence to back up their sweeping claims. Take this example:

If you’d like a more widely-held EA belief that amounts to angels dancing on the head of a pin, you could consider effective altruism’s turn to an obsessive focus on “longtermism,” in theory an embrace of future lives over present ones and in practice a fixation on the potential dangers of apocalyptic artificial intelligence.

Like, this paragraph isn't argued for. It just asserts, and provides no evidence for, the claims that EA focuses on longtermism, that this focus is obsessive, that in theory it leads one to embrace future lives over present ones, and that in practice it leads to a fixation on AI. Even if you think he's right, you have to provide some goddamn evidence.

Then later:

Still, utilitarianism has always been subject to simple hypotheticals that demonstrate its moral failure. Utilitarianism insists...

What follows, I'm afraid, is not a thorough literature review of Meta/Normative/Applied Ethics, or intellectual histories of arguments for Utilitarianism, or any recognition of the difference between Utilitarianism, Consequentialism, and Beneficentrism that you (and Richard Chappell) point out.

Or later:

I will, however, continue to oppose the tendentious insistence that any charitable dollars for the arts, culture, and beauty are misspent.

I have no idea what claim this is responding to, or why it is supposed to be representative of EA somehow.

All in all, Freddie seems to mix simply false empirical claims (in practice EAs do mostly X, or trying to do good impartially is super common behaviour for everyone around the world) with appeals to his own moral intuition (this set of stuff Y that EA does is good but you don't need EA, and all this other stuff is just self-evidently ridiculous and wrong).

Funnily enough, it's EA criticism that's the shell game here, rather than EA. In the comments of DeBoer's article and in Scott's reply, people are having tons of arguments about EA, but very few are litigating the merits of DeBoer's actual claims. It reminds me of the inconsistency between Silicon Valley e/acc critiques of EA and more academic/leftist critiques of EA. The former calls us communists and the latter calls us useful idiots of the exploitative capitalist class. We can't be both!

Anyway, just wanted to add some ammunition to your already good post. Keep on pushing back on rubbish critiques like this where you can, Omnizoid; I'll back you up where I can.

DPiepgrass @ 2023-12-23T17:04 (+5)

quickly you discover that [the specifics of the EA program] are a series of tendentious perspectives on old questions, frequently expressed in needlessly-abstruse vocabulary and often derived from questionable philosophical reasoning that seems to delight in obscurity and novelty

He doesn't discuss or quote specifics, as if to shield his claim from analysis. "Tendentious"? "Abstruse"? He's complaining that I, as an EA, am "abstruse", meaning obscure or difficult to understand, but I'm the one who has to look up his words in the dictionary. As for how EAs "seem": if one doesn't try to understand them, they may "seem" different than they are.

EA leads people to believe that hoarding money for interstellar colonization is more important than feeding the poor.

Hmm, I've been around here a while and I recall no suggestions to hoard money for interstellar colonization. Technically I haven't been feeding the poor; I just spent enough on AMF to statistically save one or two children from dying of malaria. But I'm also trying to figure out how AGI ruin might play out and whether there's a way to stop it, and I assume deBoer dislikes this for some reason. The implication of the title seems to be that because I'm interested in the second thing, I'm engaged in a "Shell Game"?

researching EA leads you to debates about how sentient termites are

I haven't seen any debates about that. Maybe deBoer doesn't want the question raised at all? Like, when he squishes a bug, it bothers him that anyone would wonder whether pain occurred? I've seen people who engage in "moral obvious-ism": "whatever my moral intuitions may be, they are obviously right and yours are obviously wrong". deBoer's anti-EA stance might be simply that.

I’ve pointed to the EA argument, which I assure you sincerely exists, that we should push all carnivorous species in the wild into extinction, in order to reduce the negative utility caused by the death of prey animals. (This would seem to require a belief that prey animals dying of disease and starvation is superior to dying from predation, but ah well.) I pick this, obviously, because it’s an idea that most people find self-evidently ludicrous

The second sentence there is surely inaccurate, but the third is the crux of the matter: he claims it's "self-evidently ludicrous" to think extinction of predators is preferable to the suffering and death of prey. It's an appeal-to-popularity fallacy: since the naturalistic fallacy is very popular, it is right. But also, deBoer implies, since one EA argues this, it's evidence that the entire movement is mad. Like, is debate not something intellectuals should be doing?

“what’s distinctive about EA is that… its whole purpose is to shine light on important problems and solutions in the world that are being neglected.” But that isn’t distinctive at all! Every do-gooder I have ever known has thought of themselves as shining a light on problems that are neglected. So what?

So maybe he's never met anyone who did mainstream things like giving to cancer research or volunteering locally. But it's a straw man anyway, since he simply ignores key elements of EA like tractability and comparing causes with each other via cost-effectiveness estimates and prioritization specialists.

“Let’s be effective in our altruism,” “let’s pursue charitable ends efficiently,” “let’s do good well” - however you want to phrase it, that’s not really an intellectual or political or moral project, because no one could object to it. There is no content there

Yet he is objecting to it, and there are huge websites filled with EA content, which... counts as "no content"?

But oh well, haters gonna hate. Wait a minute, didn't Scott Alexander respond to this already?

Mathieu Spillebeen @ 2023-12-23T09:07 (+1)

I don't know who DeBoer is, but I enjoyed reading about why being weird is practically inevitable even from a historical perspective if you really want to do good. Thanks!