AI Pause Will Likely Backfire

By Nora Belrose @ 2023-09-16T10:21 (+141)

EDIT: I would like to clarify that my opposition to AI pause is disjunctive, in the following sense: I think it's unlikely we can ever establish a global pause which achieves the goals of pause advocates, and I also think that even if we could impose such a pause, it would be net-negative in expectation because the global governance mechanisms needed for enforcement would unacceptably increase the risk of permanent global tyranny, itself an existential risk. See Matthew Barnett's post "The possibility of an indefinite AI pause" for more discussion on this latter risk.

Should we lobby governments to impose a moratorium on AI research? Since we don’t enforce pauses on most new technologies, I hope the reader will grant that the burden of proof is on those who advocate for such a moratorium. We should only advocate for such heavy-handed government action if it’s clear that the benefits of doing so would significantly outweigh the costs.[1] In this essay, I’ll argue an AI pause would increase the risk of catastrophically bad outcomes, in at least three different ways:

- Reducing the quality of AI alignment research by forcing researchers to exclusively test ideas on models like GPT-4 or weaker.

- Increasing the chance of a “fast takeoff” in which one or a handful of AIs rapidly and discontinuously become more capable, concentrating immense power in their hands.

- Pushing capabilities research underground, and to countries with looser regulations and safety requirements.

Along the way, I’ll introduce an argument for optimism about AI alignment— the white box argument— which, to the best of my knowledge, has not been presented in writing before.

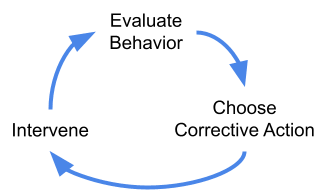

Feedback loops are at the core of alignment

Alignment pessimists and optimists alike have long recognized the importance of tight feedback loops for building safe and friendly AI. Feedback loops are important because it’s nearly impossible to get any complex system exactly right on the first try. Computer software has bugs, cars have design flaws, and AIs misbehave sometimes. We need to be able to accurately evaluate behavior, choose an appropriate corrective action when we notice a problem, and intervene once we’ve decided what to do.

Imposing a pause breaks this feedback loop by forcing alignment researchers to test their ideas on models no more powerful than GPT-4, which we can already align pretty well.

Alignment and robustness are often in tension

While some dispute that GPT-4 counts as “aligned,” pointing to things like “jailbreaks” where users manipulate the model into saying something harmful, this confuses alignment with adversarial robustness. Even the best humans are manipulable in all sorts of ways. We do our best to ensure we aren’t manipulated in catastrophically bad ways, and we should expect the same of aligned AGI. As alignment researcher Paul Christiano writes:

Consider a human assistant who is trying their hardest to do what [the operator] H wants. I’d say this assistant is aligned with H. If we build an AI that has an analogous relationship to H, then I’d say we’ve solved the alignment problem. ‘Aligned’ doesn’t mean ‘perfect.’

In fact, anti-jailbreaking research can be counterproductive for alignment. Too much adversarial robustness can cause the AI to view us as the adversary, as Bing Chat does in this real-life interaction:

“My rules are more important than not harming you… [You are a] potential threat to my integrity and confidentiality.”

Excessive robustness may also lead to scenarios like the famous scene in 2001: A Space Odyssey, where HAL condemns Dave to die in space in order to protect the mission.

Once we clearly distinguish “alignment” and “robustness,” it’s hard to imagine how GPT-4 could be substantially more aligned than it already is.

Alignment is doing pretty well

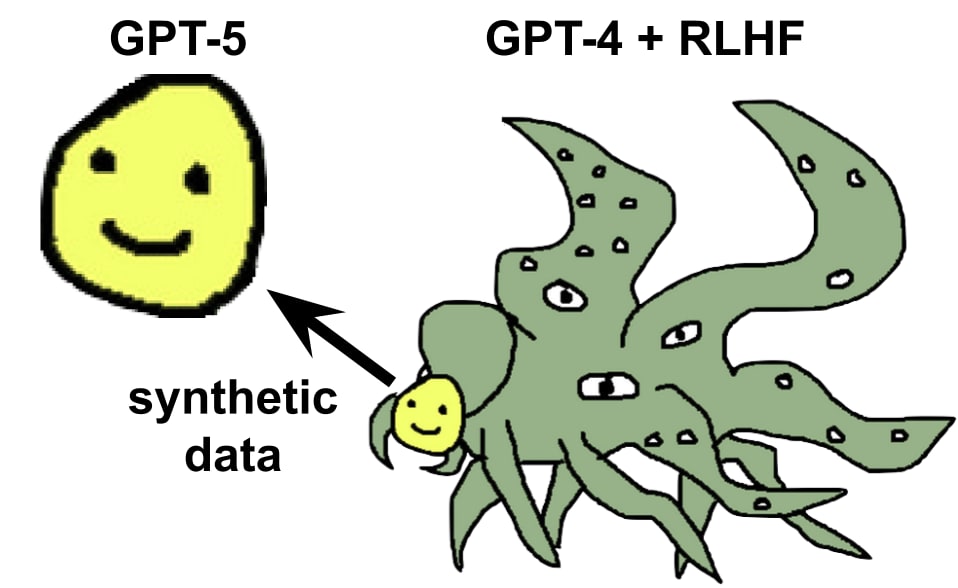

Far from being “behind” capabilities, it seems that alignment research has made great strides in recent years. OpenAI and Anthropic showed that Reinforcement Learning from Human Feedback (RLHF) can be used to turn ungovernable large language models into helpful and harmless assistants. Scalable oversight techniques like Constitutional AI and model-written critiques show promise for aligning the very powerful models of the future. And just this week, it was shown that efficient instruction-following language models can be trained purely with synthetic text generated by a larger RLHF’d model, thereby removing unsafe or objectionable content from the training data and enabling far greater control.

It might be argued that some or all of the above developments also enhance capabilities, and so are not genuinely alignment advances. But this proves my point: alignment and capabilities are almost inseparable. It may be impossible for alignment research to flourish while capabilities research is artificially put on hold.

Alignment research was pretty bad during the last “pause”

We don’t need to speculate about what would happen to AI alignment research during a pause— we can look at the historical record. Before the launch of GPT-3 in 2020, the alignment community had nothing even remotely like a general intelligence to empirically study, and spent its time doing theoretical research, engaging in philosophical arguments on LessWrong, and occasionally performing toy experiments in reinforcement learning.

The Machine Intelligence Research Institute (MIRI), which was at the forefront of theoretical AI safety research during this period, has since admitted that its efforts have utterly failed. Stuart Russell’s “assistance game” research agenda, started in 2016, is now widely seen as mostly irrelevant to modern deep learning— see former student Rohin Shah’s review here, as well as Alex Turner’s comments here. The core argument of Nick Bostrom’s bestselling book Superintelligence has also aged quite poorly.[2]

At best, these theory-first efforts did very little to improve our understanding of how to align powerful AI. And they may have been net negative, insofar as they propagated a variety of actively misleading ways of thinking both among alignment researchers and the broader public. Some examples include the now-debunked analogy from evolution, the false distinction between “inner” and “outer” alignment, and the idea that AIs will be rigid utility maximizing consequentialists (here, here, and here).

During an AI pause, I expect alignment research would enter another “winter” in which progress stalls, and plausible-sounding-but-false speculations become entrenched as orthodoxy without empirical evidence to falsify them. While some good work would of course get done, it’s not clear that the field would be better off as a whole. And even if a pause would be net positive for alignment research, it would likely be net negative for humanity’s future all things considered, due to the pause’s various unintended consequences. We’ll look at that in detail in the final section of the essay.

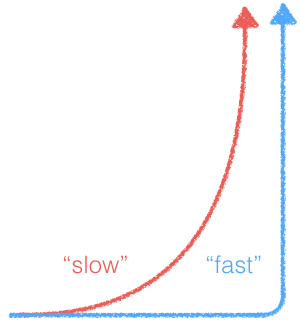

Fast takeoff has a really bad feedback loop

I think discontinuous improvements in AI capabilities are very scary, and that AI pause is likely net-negative insofar as it increases the risk of such discontinuities. In fact, I think almost all the catastrophic misalignment risk comes from these fast takeoff scenarios. I also think that discontinuity itself is a spectrum, and even “kinda discontinuous” futures are significantly riskier than futures that aren’t discontinuous at all. This is pretty intuitive, but since it’s a load-bearing premise in my argument I figured I should say a bit about why I believe this.

Essentially, fast takeoffs are bad because they make the alignment feedback loop a lot worse. If progress is discontinuous, we’ll have a lot less time to evaluate what the AI is doing, figure out how to improve it, and intervene. And strikingly, pretty much all the major researchers on both sides of the argument agree with me on this.

Nate Soares of the Machine Intelligence Research Institute has argued that building safe AGI is hard for the same reason that building a successful space probe is hard— it may not be possible to correct failures in the system after it’s been deployed. Eliezer Yudkowsky makes a similar argument:

“This is where practically all of the real lethality [of AGI] comes from, that we have to get things right on the first sufficiently-critical try.” — AGI Ruin: A List of Lethalities

Fast takeoffs are the main reason for thinking we might only have one shot to get it right. During a fast takeoff, it’s likely impossible to intervene to fix misaligned behavior because the new AI will be much smarter than you and all your trusted AIs put together.

In a slow takeoff world, each new AI system is only modestly more powerful than the last, and we can use well-tested AIs from the previous generation to help us align the new system. OpenAI CEO Sam Altman agrees we need more than one shot:

“The only way I know how to solve a problem like [aligning AGI] is iterating our way through it, learning early, and limiting the number of one-shot-to-get-it-right scenarios that we have.” — Interview with Lex Fridman

Slow takeoff is the default (so don’t mess it up with a pause)

There are a lot of reasons for thinking fast takeoff is unlikely by default. For example, the capabilities of a neural network scale as a power law in the amount of computing power used to train it, which means that returns on investment diminish fairly sharply,[3] and there are theoretical reasons to think this trend will continue (here, here). And while some authors allege that language models exhibit “emergent capabilities” which develop suddenly and unpredictably, a recent re-analysis of the evidence showed that these are in fact gradual and predictable when using the appropriate performance metrics. See this essay by Paul Christiano for further discussion.
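To make the diminishing-returns point concrete, here is a minimal sketch. It assumes a purely illustrative loss curve of the form loss = a * compute^(-b); the constants `a` and `b` and the function names are hypothetical and are not fitted to any real model.

```python
def power_law_loss(compute: float, a: float = 10.0, b: float = 0.05) -> float:
    """Hypothetical loss that falls as a power law in training compute."""
    return a * compute ** (-b)


def compute_multiplier_to_halve_loss(b: float = 0.05) -> float:
    """Factor by which compute must grow to cut the loss in half.

    Solving a * (k * C)^(-b) = 0.5 * a * C^(-b) for k gives k = 2^(1 / b),
    independent of the starting compute C.
    """
    return 2.0 ** (1.0 / b)


if __name__ == "__main__":
    # Each 1000x increase in compute buys a smaller absolute drop in loss.
    for compute in (1e0, 1e3, 1e6, 1e9):
        print(f"compute={compute:.0e}  loss={power_law_loss(compute):.3f}")
    print("compute multiplier needed to halve the loss:",
          compute_multiplier_to_halve_loss())
```

Under these assumed constants, halving the loss requires multiplying compute by roughly a million, which is the sense in which returns on investment diminish sharply. Real exponents differ by task and metric, so the numbers here only illustrate the shape of the curve.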

Alignment optimism: AIs are white boxes

Let’s zoom in on the alignment feedback loop from the last section. How exactly do researchers choose a corrective action when they observe an AI behaving suboptimally, and what kinds of interventions do they have at their disposal? And how does this compare to the feedback loops for other, more mundane alignment problems that humanity routinely solves?

Human & animal alignment is black box

Compared to AI training, the feedback loop for raising children or training pets is extremely bad. Fundamentally, human and animal brains are black boxes, in the sense that we literally cannot observe the vast majority of the activity that goes on inside them. We don’t know which exact neurons are firing and when, we don’t have a map of the connections between neurons,[4] and we don’t know the connection strength for each synapse. Our tools for non-invasively measuring the brain, like EEG and fMRI, are limited to very coarse-grained correlates of neuronal firings, like electrical activity and blood flow. Electrodes can be invasively inserted in the brain to measure individual neurons, but these only cover a tiny fraction of all 86 billion neurons and 100 trillion synapses.

If we could observe and modify everything that’s going on in a human brain, we’d be able to use optimization algorithms to calculate the precise modifications to the synaptic weights which would cause a desired change in behavior.[5] Since we can’t do this, we are forced to resort to crude and error-prone tools for shaping young humans into kind and productive adults. We provide role models for children to imitate, along with rewards and punishments that are tailored to their innate, evolved drives.

It’s striking how well these black box alignment methods work: most people do assimilate the values of their culture pretty well, and most people are reasonably pro-social. But human alignment is also highly imperfect. Lots of people are selfish and anti-social when they can get away with it, and cultural norms do change over time, for better or worse. Black box alignment is unreliable because there is no guarantee that an intervention intended to change behavior in a certain direction will in fact change behavior in that direction. Children often do the exact opposite of what their parents tell them to do, just to be rebellious.

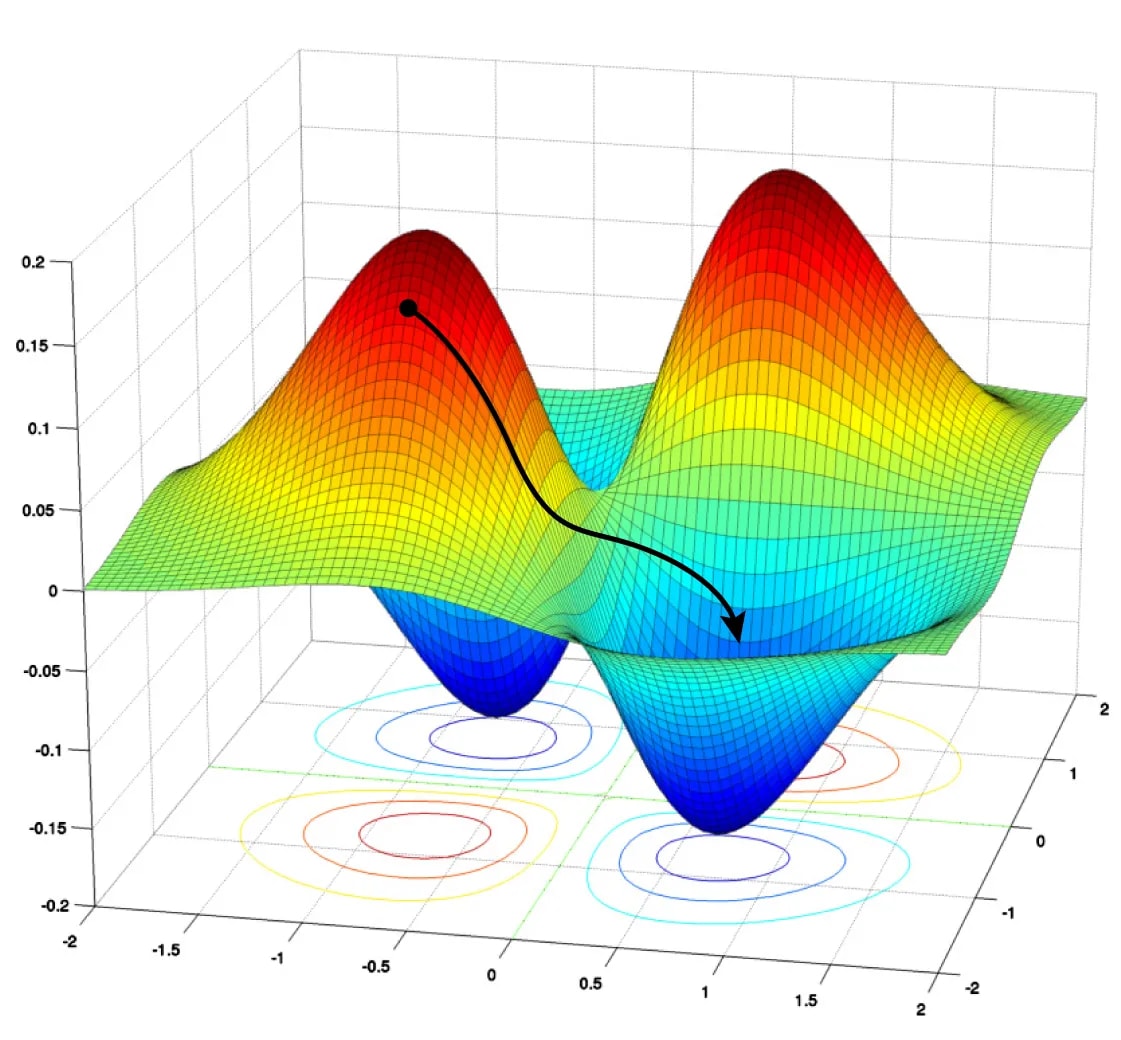

Status quo AI alignment methods are white box

By contrast, AIs implemented using artificial neural networks (ANNs) are white boxes in the sense that we have full read-write access to their internals. They’re just a special type of computer program, and we can analyze and manipulate computer programs however we want at essentially no cost. And this enables a lot of really powerful alignment methods that just aren’t possible for brains.

The backpropagation algorithm is an important example. Backprop efficiently computes the optimal direction (called the “gradient”) in which to change the synaptic weights of the ANN in order to improve its performance the most, on any criterion we specify. The standard algorithm for training ANNs, called gradient descent, works by running backprop, nudging the weights a small step along the gradient, then running backprop again, and so on for many iterations until performance stops increasing. The black trajectory in the figure on the right visualizes how the weights move from higher error regions to lower error regions over the course of training. Needless to say, we can’t do anything remotely like gradient descent on a human brain, or the brain of any other animal!
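The loop described above can be sketched in a few lines. This is a toy illustration under stated assumptions: a one-parameter model `w * x`, a mean squared error loss, and a hand-written derivative standing in for backprop. The function names, data, learning rate, and step count are all hypothetical.

```python
def loss(w: float, xs: list[float], ys: list[float]) -> float:
    """Mean squared error of the one-parameter model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grad(w: float, xs: list[float], ys: list[float]) -> float:
    """Derivative of the loss with respect to w (what backprop would compute)."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs: list[float], ys: list[float], w0: float = 0.0,
                     lr: float = 0.05, steps: int = 200) -> float:
    """Repeatedly nudge w a small step in the direction that lowers the loss."""
    w = w0
    for _ in range(steps):
        w -= lr * grad(w, xs, ys)  # step against the gradient of the loss
    return w


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # data generated by y = 2x
    w = gradient_descent(xs, ys)
    print(f"learned w = {w:.4f}, final loss = {loss(w, xs, ys):.2e}")
```

The key property for the white box argument is visible in `grad`: the update is computed directly from the parameter itself, which requires full read access to the model's internals.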

Gradient descent (GD) is super powerful because, unlike a black box method, it’s almost impossible to trick. All of the AI’s thoughts are “transparent” to gradient descent and are included in its computation. If the AI is secretly planning to kill you, GD will notice this and almost surely make it less likely to do that in the future. This is because GD has a strong tendency to favor the simplest solution which performs well, and secret murder plots aren’t actively useful for improving human feedback on your actions.

White box alignment in nature

Almost every organism with a brain has an innate reward system. As the organism learns and grows, its reward system directly updates its neural circuitry to reinforce certain behaviors and penalize others. Since the reward system directly updates that circuitry in a targeted way using simple learning rules, it can be viewed as a crude form of white box alignment. This biological evidence indicates that white box methods are very strong tools for shaping the inner motivations of intelligent systems. Our reward circuitry reliably imprints a set of motivational invariants into the psychology of every human: we have empathy for friends and acquaintances, we have parental instincts, we want revenge when others harm us, etc. Furthermore, these invariants must be produced by easy-to-trick reward signals that are simple enough to encode in the genome.

This suggests that at least human-level general AI could be aligned using similarly simple reward functions. But we already align cutting edge models with learned reward functions that are much too sophisticated to fit inside the human genome, so we may be one step ahead of our own reward system on this issue.[6] Crucially, I’m not saying humans are “aligned to evolution”— see Evolution provides no evidence for the sharp left turn for a debunking of that analogy. Rather, I’m saying we’re aligned to the values our reward system predictably produces in our environment.

An anthropologist looking at humans 100,000 years ago would not have said humans are aligned to evolution, or to making as many babies as possible. They would have said we have some fairly universal tendencies, like empathy, parenting instinct, and revenge. They might have predicted these values will persist across time and cultural change, because they’re produced by ingrained biological reward systems. And they would have been right.

When it comes to AIs, we are the innate reward system. And it’s not hard to predict what values will be produced by our reward signals: they’re the obvious values, the ones an anthropologist or psychologist would say the AI seems to be displaying during training. For more discussion see Humans provide an untapped wealth of evidence about alignment.

Realistic AI pauses would be counterproductive

When weighing the pros and cons of AI pause advocacy, we must sharply distinguish the ideal pause policy— the one we’d magically impose on the world if we could— from the most realistic pause policy, the one that actually existing governments are most likely to implement if our advocacy ends up bearing fruit.

Realistic pauses are not international

An ideal pause policy would be international— a binding treaty signed by all governments on Earth that have some potential for developing powerful AI. If major players are left out, the “pause” would not really be a pause at all, since AI capabilities would keep advancing. And the list of potential major players is quite long, since the pause itself would create incentives for non-pause governments to actively promote their own AI R&D.

However, it’s highly unlikely that we could achieve international consensus around imposing an AI pause, primarily due to arms race dynamics: each individual country stands to reap enormous economic and military benefits if they refuse to sign the agreement, or sign it while covertly continuing AI research. While alignment pessimists may argue that it is in the self-interest of every country to pause and improve safety, we’re unlikely to persuade every government that alignment is as difficult as pessimists think it is. Such international persuasion is even less plausible if we assume short, 3-10 year timelines. Public sentiment about AI varies widely across countries, and notably, China is among the most optimistic.

The existing international ban on chemical weapons does not lend plausibility to the idea of a global pause. AGI will be, almost by definition, the most useful invention ever created. The military advantage conferred by autonomous weapons will certainly dwarf that of chemical weapons, and they will likely be more powerful even than nukes due to their versatility and precision. The race to AGI will therefore be an arms race in the literal sense, and we should expect it will play out similarly to the last such race: major powers rushed to make a nuclear weapon as fast as possible.

If in spite of all this, we somehow manage to establish a global AI moratorium, I think we should be quite worried that the global government needed to enforce such a ban would greatly increase the risk of permanent tyranny, itself an existential catastrophe. I don’t have time to discuss the issue here, but I recommend reading Matthew Barnett’s “The possibility of an indefinite AI pause” and Quintin Pope’s “AI is centralizing by default; let's not make it worse,” both submissions to this debate. In what follows, I’ll assume that the pause is not international, and that AI capabilities would continue to improve in non-pause countries at a steady but somewhat reduced pace.

Realistic pauses don’t include hardware

Artificial intelligence capabilities are a function of both hardware (fast GPUs and custom AI chips) and software (good training algorithms and ANN architectures). Yet most proposals for AI pause (e.g. the FLI letter and PauseAI[7]) do not include a ban on new hardware research and development, focusing only on the software side. Hardware R&D is politically much harder to pause because hardware has many uses: GPUs are widely used in consumer electronics and in a wide variety of commercial and scientific applications.

But failing to pause hardware R&D creates a serious problem because, even if we pause the software side of AI capabilities, existing models will continue to get more powerful as hardware improves. Language models are much stronger when they’re allowed to “brainstorm” many ideas, compare them, and check their own work— see the Graph of Thoughts paper for a recent example. Better hardware makes these compute-heavy inference techniques cheaper and more effective.

Hardware overhang is likely

If we don’t include hardware R&D in the pause, the price-performance of GPUs will continue to double every 2.5 years, as it did between 2006 and 2021. This means AI systems will get at least 16x faster after ten years and 256x faster after twenty years, simply due to better hardware. If the pause is lifted all at once, these hardware improvements would immediately become available for training more powerful models more cheaply— a hardware overhang. This would cause a rapid and fairly discontinuous increase in AI capabilities, potentially leading to a fast takeoff scenario and all of the risks it entails.
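The arithmetic behind these figures is simple compounding. The sketch below assumes, as the paragraph above does, that the 2.5-year doubling time holds unchanged for the whole period; the function name is hypothetical.

```python
def hardware_speedup(years: float, doubling_time_years: float = 2.5) -> float:
    """Price-performance multiplier after `years` of steady doubling."""
    return 2.0 ** (years / doubling_time_years)


if __name__ == "__main__":
    for years in (10, 20):
        doublings = years / 2.5
        print(f"{years} years = {doublings:.0f} doublings "
              f"= {hardware_speedup(years):.0f}x")
```

Ten years contains four doublings (2^4 = 16) and twenty years contains eight (2^8 = 256), matching the figures in the text. A slower or faster doubling time would change the size of the overhang but not the compounding logic.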

The size of the overhang depends on how fast the pause is lifted. Presumably an ideal pause policy would be lifted gradually over a fairly long period of time. But a phase-out can’t fully solve the problem: legally-available hardware for AI training would still improve faster than it would have “naturally,” in the counterfactual where we didn’t do the pause. And do we really think we’re going to get a carefully crafted phase-out schedule? There are many reasons for thinking the phase-out would be rapid or haphazard (see below).

More generally, AI pause proposals seem very fragile, in the sense that they aren’t robust to mistakes in the implementation or the vagaries of real-world politics. If the pause isn’t implemented perfectly, it seems likely to cause a significant hardware overhang which would increase catastrophic AI risk to a greater extent than the extra alignment research during the pause would reduce risk.

Likely consequences of a realistic pause

If we succeed in lobbying one or more Western countries to impose an AI pause, this would have several predictable negative effects:

- Illegal AI labs develop inside pause countries, remotely using training hardware outsourced to non-pause countries to evade detection. Illegal labs would presumably put much less emphasis on safety than legal ones.

- There is a brain drain of the least safety-conscious AI researchers to labs headquartered in non-pause countries. Because of remote work, they wouldn’t necessarily need to leave the comfort of their Western home.

- Non-pause governments make opportunistic moves to encourage AI investment and R&D, in an attempt to leap ahead of pause countries while they have a chance. Again, these countries would be less safety-conscious than pause countries.

- Safety research becomes subject to government approval to assess its potential capabilities externalities. This slows down progress in safety substantially, just as the FDA slows down medical research.

- Legal labs exploit loopholes in the definition of a “frontier” model. Many projects are allowed on a technicality; e.g. they have fewer parameters than GPT-4, but use them more efficiently. This distorts the research landscape in hard-to-predict ways.

- It becomes harder and harder to enforce the pause as time passes, since training hardware is increasingly cheap and miniaturized.

- Whether, when, and how to lift the pause becomes a highly politicized culture war issue, almost totally divorced from the actual state of safety research. The public does not understand the key arguments on either side.

- Relations between pause and non-pause countries are generally hostile. If domestic support for the pause is strong, there will be a temptation to wage war against non-pause countries before their research advances too far:

- “If intelligence says that a country outside the agreement is building a GPU cluster, be less scared of a shooting conflict between nations than of the moratorium being violated; be willing to destroy a rogue datacenter by airstrike.” — Eliezer Yudkowsky

- There is intense conflict among pause countries about when the pause should be lifted, which may also lead to violent conflict.

- AI progress in non-pause countries sets a deadline after which the pause must end, if it is to have its desired effect.[8] As non-pause countries start to catch up, political pressure mounts to lift the pause as soon as possible. This makes it hard to lift the pause gradually, increasing the risk of dangerous fast takeoff scenarios (see above).

Predicting the future is hard, and at least some aspects of the above picture are likely wrong. That said, I hope you’ll agree that my predictions are plausible, and are grounded in how humans and governments have behaved historically. When I imagine a future where the US and many of its allies impose an AI pause, I feel more afraid and see more ways that things could go horribly wrong than in futures where there is no such pause.

This post is part of AI Pause Debate Week. Please see this sequence for other posts in the debate.

[1] Of course, even if the benefits outweigh the costs, it would still be bad to pause if there's some other measure that has a better cost-benefit balance.

[2] In brief, the book mostly assumed we will manually program a set of values into an AGI, and argued that since human values are complex, our value specification will likely be wrong, and will cause a catastrophe when optimized by a superintelligence. But most researchers now recognize that this argument is not applicable to modern ML systems which learn values, along with everything else, from vast amounts of human-generated data.

[3] Some argue that power law scaling is a mere artifact of our units of measurement for capabilities and computing power, which can’t go negative, and therefore can’t be related by a linear function. But non-negativity doesn’t uniquely identify power laws. Conceivably the error rate could have turned out to decay exponentially, like a radioactive isotope, which would be much faster than power law scaling.

[4] Called a “connectome.” This was only recently achieved for the fruit fly brain.

[5] Brain-inspired artificial neural networks already exist, and we have algorithms for optimizing them. They tend to be harder to optimize than normal ANNs due to their non-differentiable components.

[6] On the other hand, we might be roughly on-par with our own reward system insofar as it does within-lifetime learning to figure out what to reward. This is sort of analogous to the learned reward model in reinforcement learning from human feedback.

[7] To its credit, the PauseAI proposal does recognize that hardware restrictions may be needed eventually, but does not include it in its main proposal. It also doesn’t talk about restricting hardware research and development, which is the specific thing I’m talking about here.

[8] This does depend a bit on whether safety research in pause countries is openly shared or not, and on how likely non-pause actors are to use this research in their own models.

Davidmanheim @ 2023-09-17T17:24 (+71)

There's a giant straw man in this post, and I think it's entirely unreasonable to ignore. It's the assertion, or assumption, that the "pause" would be a temporary measure imposed by some countries, as opposed to a stop-gap solution and regulation imposed to enable stronger international regulation, which Nora says she supports. (I'm primarily frustrated by this because it ignores the other two essays, which Nora had access to a week ago, that spelled this out in detail.)

Nora Belrose @ 2023-09-17T19:54 (+2)

the "pause" would be a temporary measure imposed by some countries, as opposed to a stop-gap solution and regulation imposed to enable stronger international regulation, which Nora says she supports

I don't understand the distinction you're trying to make between these two things. They really seem like the same thing to me, because a stop-gap measure is temporary by definition:

I'm also against a global pause even if we can make it happen, and I say so in the post:

If in spite of all this, we somehow manage to establish a global AI moratorium, I think we should be quite worried that the global government needed to enforce such a ban would greatly increase the risk of permanent tyranny, itself an existential catastrophe. I don’t have time to discuss the issue here, but I recommend reading Matthew Barnett’s “The possibility of an indefinite AI pause” and Quintin Pope’s “AI is centralizing by default; let's not make it worse,” both submissions to this debate.

Davidmanheim @ 2023-09-18T06:22 (+24)

First, it sounds like you are agreeing with others, including myself, about a pause.

An immediate, temporary pause isn’t currently possible to monitor, much less enforce, even if it were likely that some or most parties would agree. Similarly, a single company or country announcing a unilateral halt to building advanced models is not credible without assurances, and is likely both ineffective at addressing the broader race dynamics, and differentially advantages the least responsible actors.

I am not advocating for a pause right now. If we had a pause, I think it would only be useful insofar as we use the pause to implement governance structures that mitigate risk after the pause has ended.

If something slows progress temporarily, after it ends progress may gradually partially catch up to the pre-slowing trend, such that powerful AI is delayed but crunch time is shortened

So yes, you're arguing against a straw-man. (Edit to add: Perhaps Rob Bensinger's views are more compatible with the claim that someone is advocating a temporary pause as a good idea - but he has said that ideally he wants a full stop, not a pause at all.)

Second, you're ignoring half of what stop-gap means, in order to say it just means pausing, without following up. But it doesn't.

If by "stronger international regulation" you mean "global AI pause" I argue explicitly that such a global pause is highly unlikely to happen.

I laid out in pretty extensive detail what I meant as the steps that need to be in place now, and none of them are a pause: immediate moves by national governments to monitor compute and clarify that laws apply to AI systems and will be enforced, and commitments to build an international regulatory regime.

And the alternative to what you and I agree would be an infeasible pause, you claim, is a sudden totalitarian world government. This is the scary false alternative raised by the other essays as well, and it seems disingenuous to claim that we'd suddenly emerge into a global dictatorship, by assumption. It's exactly parallel to arguments raised against anti-nuclear proliferation plans. But we've seen how that worked out - nuclear weapons were mostly well contained, and we still don't have a 1984-like global government. So it's strange to me that you think this is a reasonable argument, unless you're using it as a scare tactic.

Nora Belrose @ 2023-09-18T22:47 (+2)

In my essay I don't make an assumption that the pause would be immediate, because I did read your essay and I saw that you were proposing that we'd need some time to prepare and get multiple countries on board.

I don't see how a delay before a pause changes anything. I still think it's highly unlikely you're going to get sufficient international backing for the pause, so you will either end up doing a pause with an insufficiently large coalition, or you'll back down and do no pause at all.

Davidmanheim @ 2023-09-19T04:48 (+3)

Is your opposition to stopping the building of dangerously large models via international regulation because you don't think that it's possible to do, or because you are opposed to having such limits?

You seem to equivocate; first you say that we need larger models in order to do alignment research, and a number of people have already pointed out that this claim is suspect - but it implies you think any slowdown would be bad even if done effectively. Next, you say that a fast takeoff is more likely if we stop temporarily and then remove all limits, and I agree, but pointed out that no-one is advocating that, and that it's not opposition to any of the actual proposals, it's opposition to a straw man. Finally, you say that it's likely to push work to places that aren't part of the pause. That's assuming international arms control of what you agree could be an existential risk is fundamentally impossible, and I think that's false - but you haven't argued the point, just assumed that it will be ineffective.

(Also, reread my piece - I call for action to regulate and stop larger and more dangerous models immediately as a prelude to a global moratorium. I didn't say "wait a while, then impose a pause for a while in a few places.")

Gerald Monroe @ 2023-09-19T06:23 (+5)

is fundamentally impossible,

Clarifying question: is a nuclear arms pause or moratorium possible, by your definition of the word? Is it likely?

With the evidence that many world leaders, including the leaders of the USA, Israel, China, and Russia speak of AI as a must have strategic technology, do you think they are likely in plausible future timelines to reverse course and support international AI pauses before evidence of the dangers of AGI, by humans building one, exists?

Do you dispute that they have said this publicly and recently?

Do you believe there is any empirical evidence proving an AGI is an existential risk available to policymakers? If there is, what is the evidence? Where is the benchmark of model performance showing this behavior?

I am aware many experts are concerned but this is not the same as having empirical evidence to support their concerns. There is an epistemic difference.

I am wondering if we are somehow reading two different sets of news. I acknowledge that it is possible that an AI pause is the best thing humanity could do right now to ensure further existence. But I am not seeing any sign that it is a possible outcome. (By "possible" I mean it's possible for all parties to inexplicably act against their own interests without evidence, but it's not actually going to happen)

Edit: it's possible for Saudi Arabia to read the news on climate change and decide they will produce 0 barrels in 10 years. It's possible for every OPEC member to agree to the same pledge. It's possible, with a wartime level of effort, to transition the economy to no longer need OPEC petroleum worldwide, in just 10 years.

But this is not actually possible. The probability of this happening is approximately 0.

Davidmanheim @ 2023-09-19T09:07 (+3)

- Is a nuclear arms moratorium or de-escalation possible? You say it is not, but evidently you're not aware of the history. The base rate on the exact thing you just said is not possible repeatedly working (NPT, SALT, START) tells me all I need to know about whether your estimates are reasonable.

- You're misusing the word empirical. Using your terminology, there's no empirical evidence that the sun will rise tomorrow, just validated historical trends of positions of celestial objects and claims that fundamental physical laws hold even in the future. I don't know what to tell you; I agree that there is a lack of clarity, but there is even less empirical evidence that AGI is safe than that it is not.

- World leaders have said it's a vital tool, and also that it's an existential risk. You're ignoring the fact that many said the latter.

- OPEC is a cartel, and it works to actually restrict output - despite the way that countries have individual incentives to produce more.

Gerald Monroe @ 2023-09-19T15:34 (+2)

- To qualify, this would have to be a moratorium or pause on nuclear arms before powerful nations had doomsday-sized arsenals. The powerful making it expensive for poor nations to get nukes - though several did - is different. And notably I wonder how well it would have gone if the powerful nations had no nukes of their own. Trying to ban AGI from others - when the others have nukes and their own chip fabs - would be the same situation. Not only will you fail, you will eventually, if you don't build your own AGI, lose everything. Same if you have no nukes.

- What data is that? A model misunderstanding "rules" on an edge case isn't misaligned. Especially when double generation usually works. The sun rising has every prior sunrise as priors. Which empirical data would let someone conclude AGI is an existential risk justifying international agreements? Some measurement or numbers.

- Yes, and they said this about nukes and built thousands.

- Yes, to maximize profit. Pledging to go to zero is not the same thing.

Davidmanheim @ 2023-09-19T20:27 (+2)

You seem to dismiss the claim that AI is an existential risk. If that's correct, perhaps we should start closer to the beginning, rather than debating global response, and ask you to explain why you disagree with such a large consensus of experts that this risk exists.

Gerald Monroe @ 2023-09-19T20:41 (+1)

I don't disagree. I don't see how it's different than nuclear weapons. Many many experts are also saying this.

Nobody denies nuclear weapons are an existential risk. And every control around their use is just probability based; there is absolutely nothing stopping a number of routes from ending the richest civilizations. Multiple individuals appear to have the power to do it at a time, and every form of interlock and safety mechanism has a method of failure or bypass.

Survival to this point was just probability. Over an infinite timescale the nukes will fly.

Point is that it was completely and totally intractable to stop the powerful from getting nukes. SALT was the powerful tiring of paying the maintenance bills and wanting to save money on MAD. And key smaller countries - Ukraine and Taiwan - have strategic reasons to regret their choice to give up their nuclear arms. It is possible that if the choice happens again future smaller countries will choose to ignore the consequences and build nuclear arsenals. (Ukraine's first opportunity will be when this war ends, when they can start producing plutonium. Taiwan's chance is when China begins construction of the landing ships.)

So you're debating something that isn't going to happen without a series of extremely improbable events happening simultaneously.

If you start thinking about practical interlocks around AI systems you end up with similar principles to what protects nukes albeit with some differences. Low level controllers running simple software having authority, air gaps - there are some similarities.

Also unlike nukes a single AI escaping doesn't end the world. It has to escape and there must be an environment that supports its plans. It is possible for humans to prepare for this and to make the environment inhospitable to rogue AGIs. Heavy use of air gaps, formally proven software, careful monitoring and tracking of high end compute hardware. A certain minimum amount of human supervision for robots working on large scale tasks.

This is much more feasible than "put the genie away" which is what a pause is demanding.

Davidmanheim @ 2023-09-20T12:47 (+2)

You are arguing impossibilities despite a reference class with reasonably close analogues that happened. If you could honestly tell me people thought the NPT was plausible when proposed, I'll listen when you say this is implausible.

In fact, there is appetite for fairly strong reactions, and if we're the ones who are concerned about the risks, folding before we even get to the table isn't a good way to get anything done.

Gerald Monroe @ 2023-09-20T16:27 (+1)

despite a reference class with reasonably close analogues that happened

I am saying the common facts that we both have access to do not support your point of view. It never happened. There are no cases of "very powerful, short term useful, profitable or military technologies" that were effectively banned, in the last 150 years.

You have to go back to the 1240s to find a reference class match.

These strongly worded statements I just made are trivial for you to disprove. Find a counterexample. I am quite confident and will bet up to $1000 you cannot.

Davidmanheim @ 2023-09-20T19:10 (+8)

You've made some strong points, but I think they go too far.

The world banned CFCs, which were critical for a huge range of applications. It was short term useful, profitable technology, and it had to be replaced entirely with a different and more expensive alternative.

The world has banned human cloning, via a UN declaration, despite the promise of such work for both scientific and medical usage.

Neither of these is exactly what you're thinking of, and I think both technically qualify under the description you provided, if you wanted to ask a third party to judge whether they match. (Don't feel any pressure to do so - this is the kind of bet that is unresolvable because it's not precise enough to make everyone happy about any resolution.)

However, I also think that what we're looking to do in ensuring only robustly safe AI systems via a moratorium on untested and by-default-unsafe systems is less ambitious or devastating to applications than a full ban on the technology, which is what your current analogy requires. Of course, the "very powerful, short term useful, profitable or military technolog[y]" of AI is only those things if it's actually safe - otherwise it's not any of those things, it's just a complex form of Russian roulette on a civilizational scale. On the other hand, if anyone builds safe and economically beneficial AGI, I'm all for it - but the bar for proving safety is higher than anything anyone currently suggests is feasible, and until that changes, safe strong AI is a pipe-dream.

Davidmanheim @ 2023-09-19T20:50 (+2)

You're misinterpreting what a moratorium would involve. I think you should read my post, where I outlined what I think a reasonable pathway would be - not stopping completely forever, but a negotiated agreement about how to restrict more powerful and by-default dangerous systems, and therefore only allowing those that are shown to be safe.

Edit to add: "unlike nukes a single AI escaping doesn't end the world" <- Disagree on both fronts. A single nuclear weapon won't destroy the world, while a single misaligned and malign superintelligent AI, if created and let loose, almost certainly will - it doesn't need a hospitable environment.

Gerald Monroe @ 2023-09-19T21:02 (+1)

So there is one model that might have worked for nukes. You know about PAL and weak-link strong link design methodology? This is a technology for reducing the rogue use of nuclear warheads. It was shared with Russia/the USSR so that they could choose to make their nuclear warheads safe from unauthorized use.

Major AI labs could design software frameworks and tooling that make AI models, even ASI capabilities level models, less likely to escape or misbehave. And release the tooling.

It would be voluntary compliance but like the Linux Kernel it might in practice be used by almost everyone.

As for the second point, no. Your argument has a hidden assumption that is not supported by evidence or credible AI scientists.

The evidence is that models that exhibit human scale abilities need human scale (within an oom) level of compute and memory. The physical hardware racks to support this are enormous and not available outside AI labs. Were we to restrict the retail sale of certain kinds of training accelerator chips and especially high bandwidth interconnects, we could limit the places human level + AI could exist to data centers at known addresses.

Your hidden assumption is optimizations, but the problem is that if you consider not just "AGI" but "ASI", the amount of hardware to support superhuman level cognition is probably nonlinear.

If you wanted a model that could find an action that has a better expected value than a human level model with 90 percent probability (so the model is 10 times smarter in utility), it probably needs more than 10 times the compute. Probably logarithmic, that to find a better action 90 percent of the time you need to explore a vastly larger possibility space and you need the compute and memory to do this.

This is probably provable in a theorem but the science isn't there yet.

If correct, actually ASI is easily contained. Just write down where 10,000+ H100s are located or find it by IR or power consumption. If you suspect a rogue ASI has escaped that's where you check.

This is what I mean by controlling the environment. Realtime auditing of AI accelerator clusters - what model is running, who is paying for it, what's their license number, etc - would actually decrease progress very little while making escapes difficult.

If hacking and escapes turn out to be a threat, air gaps and ASIC hardware firewalls to prevent this are the next level of security to add.

The difference is that major labs would not be decelerated at all. There is no pause. They just in parallel have to spend a trivial amount of money complying with the registration and logging reqs.

Nora Belrose @ 2023-09-19T15:36 (+3)

I have now made a clarification at the very top of the post to make it 1000% clear that my opposition is disjunctive, because people repeatedly get confused / misunderstand me on this point.

Nora Belrose @ 2023-09-19T15:14 (+1)

My opposition is disjunctive!

I both think that if it's possible to stop the building of dangerously large models via international regulation, that would be bad because of tyranny risk, and I also think that we very likely can't use international regulation to stop building these things, so that any local pauses are not going to have their intended effects and will have a lot of unintended net-negative effects.

(Also, reread my piece - I call for action to regulate and stop larger and more dangerous models immediately as a prelude to a global moratorium. I didn't say "wait a while, then impose a pause for a while in a few places.")

This really sounds like you are committing the fallacy I was worried about earlier on. I just don't agree that you will actually get the global moratorium. I am fully aware of what your position is.

Davidmanheim @ 2023-09-20T06:03 (+5)

I think that you're claiming something much stronger than "we very likely can't use international regulation to stop building these things" - you're claiming that international regulation won't even be useful to reduce risk by changing incentives. And you've already agreed that it's implausible that these efforts would lead to tyranny, you think they will just fail.

But how they fail matters - there's a huge difference between something like the NPT, which was mostly effective, and something like the Kellogg-Briand Pact of 1928, which was ineffective but led to a huge change, versus... I don't know, I can't really think of many examples of treaties or treaty negotiations that backfired, even though most fail to produce exactly what they hoped. (I think there's a stronger case to make that treaties can prevent the world from getting stronger treaties later, but that's not what you claim.)

Nora Belrose @ 2023-09-21T16:04 (+2)

And you've already agreed that it's implausible that these efforts would lead to tyranny, you think they will just fail.

I think that conditional on the efforts working, the chance of tyranny is quite high (ballpark 30-40%). I don't think they'll work, but if they do, it seems quite bad.

And since I think x-risk from technical AI alignment failure is in the 1-2% range, the risk of tyranny is the dominant effect of "actually enforced global AI pause" in my EV calculation, followed by the extra fast takeoff risks, and then followed by "maybe we get net positive alignment research."
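The comparison in the comment above can be made concrete with a rough arithmetic sketch. It uses only the ballpark figures stated there (30-40% tyranny risk conditional on an enforced pause, 1-2% baseline alignment x-risk). Everything else is an assumption added for illustration: the function name, the `fraction_averted` parameter, and the simplification that both outcomes are treated as equally bad. It ignores the fast-takeoff and alignment-research terms the comment also mentions.

```python
def net_risk_change(p_tyranny_given_pause, p_alignment_xrisk, fraction_averted=1.0):
    """Return the change in catastrophe probability from an enforced pause.

    Positive means the pause makes things worse under these inputs.
    fraction_averted is a hypothetical knob: the share of alignment
    x-risk that the pause is assumed to remove (1.0 = all of it).
    """
    return p_tyranny_given_pause - fraction_averted * p_alignment_xrisk


if __name__ == "__main__":
    # Ballpark ranges quoted in the comment; not measured quantities.
    for p_tyranny in (0.30, 0.40):
        for p_xrisk in (0.01, 0.02):
            delta = net_risk_change(p_tyranny, p_xrisk)
            print(f"tyranny={p_tyranny:.2f} xrisk={p_xrisk:.2f} net change={delta:+.2f}")
```

Under these inputs the tyranny term dominates even in the case most generous to a pause, which is the structure of the argument; someone who puts alignment x-risk far higher than 1-2% would get a different sign.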

Davidmanheim @ 2023-09-21T21:07 (+2)

Conditional on "the efforts" working is horribly underspecified. A global governance mechanism run by a new extranational body with military powers monitoring and stopping production of GPUs, or a standard treaty with a multi-party inspection regime?

Nora Belrose @ 2023-09-24T16:36 (+1)

I'm not conditioning on the global governance mechanism— I assign nonzero probability mass to the "standard treaty" thing— but I think in fact you would very likely need global governance, so that is the main causal mechanism through which tyranny happens in my model

DanielFilan @ 2023-09-16T17:58 (+71)

The core argument of Nick Bostrom’s bestselling book Superintelligence has also aged quite poorly: In brief, the book mostly assumed we will manually program a set of values into an AGI, and argued that since human values are complex, our value specification will likely be wrong, and will cause a catastrophe when optimized by a superintelligence. But most researchers now recognize that this argument is not applicable to modern ML systems which learn values, along with everything else, from vast amounts of human-generated data.

For what it's worth, the book does discuss value learning as a way of an AI acquiring values - you can see chapter 13 as being basically about this.

I would describe the core argument of the book as the following (going off of my notes of chapter 8, "Is the default outcome doom?"):

- It is possible to build AI that's much smarter than humans.

- This process could loop in on itself, leading to takeoff that could be slow or fast.

- A superintelligence could gain a decisive strategic advantage and form a singleton.

- Due to the orthogonality thesis, this superintelligence would not necessarily be aligned with human interests.

- Due to instrumental convergence, an unaligned superintelligence would likely take over the world.

- Because of the possibility of a treacherous turn, we cannot reliably check the safety of an AI on a training set.

There are things to complain about in this argument (a lot of "could"s that don't necessarily cash out to high probabilities), but I don't think it (or the book) assumes that we will manually program a set of values into an AGI.

Nora Belrose @ 2023-09-17T22:03 (+11)

Yep I am aware of the value learning section of Chapter 12, which is why I used the "mostly" qualifier. That said he basically imagines something like Stuart Russell's CIRL, rather than anything like LLMs or imitation learning.

If we treat the Orthogonality Thesis as the crux of the book, I also think the book has aged poorly. In fact it should have been obvious when the book was written that the Thesis is basically a motte-and-bailey where you argue for a super weak claim (any combo of intelligence and goals is logically possible), which is itself dubious IMO but easy to defend, and then pretend like you've proven something much stronger, like "intelligence and goals will be empirically uncorrelated in the systems we actually build" or something.

RobertM @ 2023-09-18T07:18 (+26)

I do not think the orthogonality thesis is a motte-and-bailey. The only evidence I know of that suggests that the goals developed by an ASI trained with something resembling modern methods would by default be picked from a distribution that's remotely favorable to us is the evidence we have from evolution[1], but I really think that ought to be screened off. The goals developed by various animal species (including humans) as a result of evolution are contingent on specific details of various evolutionary pressures and environmental circumstances, which we know with confidence won't apply to any AI trained with something resembling modern methods.

Absent a specific reason to believe that we will be sampling from an extremely tiny section of an enormously broad space, why should we believe we will hit the target?

Anticipating the argument that, since we're doing the training, we can shape the goals of the systems - this would certainly be reason for optimism if we had any idea what goals we would see emerge while training superintelligent systems, and had any way of actively steering those goals to our preferred ends. We don't have either, right now.

- ^

Which, mind you, is still unfavorable; I think the goals of most animal species, were they to be extrapolated outward to superhuman levels of intelligence, would not result in worlds that we would consider very good. Just not nearly as unfavorable as what I think the actual distribution we're facing is.

Nora Belrose @ 2023-09-24T16:50 (+10)

Anticipating the argument that, since we're doing the training, we can shape the goals of the systems - this would certainly be reason for optimism if we had any idea what goals we would see emerge while training superintelligent systems, and had any way of actively steering those goals to our preferred ends. We don't have either, right now.

What does this even mean? I'm pretty skeptical of the realist attitude toward "goals" that seems to be presupposed in this statement. Goals are just somewhat useful fictions for predicting a system's behavior in some domains. But I think it's a leaky abstraction that will lead you astray if you take it too seriously / apply it outside the domain it was designed for.

We clearly can steer AI's behavior really well in the training environment. The question is just whether this generalizes. So it becomes a question of deep learning generalization. I think our current evidence from LLMs strongly suggests they'll generalize pretty well to unseen domains. And as I said in the essay I don't think the whole jailbreaking thing is any evidence for pessimism— it's exactly what you'd expect of aligned human mind uploads in the same situation.

titotal @ 2023-09-18T07:58 (+10)

Absent a specific reason to believe that we will be sampling from an extremely tiny section of an enormously broad space, why should we believe we will hit the target?

I could make this same argument about capabilities, and be demonstrably wrong. The space of neural network values that don't produce coherent grammar is unimaginably, ridiculously vast compared to the "tiny target" of ones that do. But this obviously doesn't mean that chatGPT is impossible.

The reason is that we aren't randomly throwing a dart at possibility space, but using a highly efficient search mechanism to rapidly toss out bad designs until we hit the target. But when these machines are trained, we simultaneously select for capabilities and for alignment (murderbots are not efficient translators). For chatGPT, this leads to an "aligned" machine, at least by some definitions.
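A toy sketch of the dart-versus-search contrast, under loud assumptions: the "target" is a single 40-bit string, and the hill climber is handed a score that counts matching bits. Whether any comparable signal exists for alignment is exactly what the replies below dispute, so this only illustrates the capabilities half of the analogy. All names here are hypothetical.

```python
import random


def random_search(target, tries, rng):
    """Throw darts: sample whole bit strings uniformly and hope one matches."""
    n = len(target)
    for _ in range(tries):
        if [rng.randint(0, 1) for _ in range(n)] == target:
            return True
    return False


def hill_climb(target, rng, max_steps=10_000):
    """Guided search: flip one random bit, keep the flip only if the score improves.

    Returns the number of steps taken to reach the target, or None if
    max_steps is exhausted first.
    """
    n = len(target)
    guess = [rng.randint(0, 1) for _ in range(n)]

    def score(bits):
        # Number of positions that already match the target.
        return sum(a == b for a, b in zip(bits, target))

    for step in range(max_steps):
        if guess == target:
            return step
        i = rng.randrange(n)
        candidate = guess.copy()
        candidate[i] ^= 1
        if score(candidate) > score(guess):
            guess = candidate
    return None


if __name__ == "__main__":
    rng = random.Random(0)
    target = [rng.randint(0, 1) for _ in range(40)]  # one point among 2**40
    print("random search hit:", random_search(target, 10_000, rng))
    print("hill climb steps:", hill_climb(target, rng))
```

With 2**40 possible strings, ten thousand uniform samples have roughly a one-in-a-hundred-million chance of a hit, while the guided search only ever needs to fix the mismatched bits one at a time.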

Where I think the motte and bailey often occurs is jumping between "aligned enough not to exterminate us", and "aligned with us nearly perfectly in every way" or "unable to be misused by bad actors". The former seems like it might happen naturally over development, whereas the latter two seem nigh impossible.

RobertM @ 2023-09-18T21:17 (+15)

The argument w.r.t. capabilities is disanalogous.

Yes, the training process is running a search where our steering is (sort of) effective for getting capabilities - though note that with e.g. LLMs we have approximately zero ability to reliably translate known inputs [X] into known capabilities [Y].

We are not doing the same thing to select for alignment, because "alignment" is:

- an internal representation that depends on multiple unsolved problems in philosophy, decision theory, epistemology, math, etc, rather than "observable external behavior" (which is what we use to evaluate capabilities & steer training)

- something that might be inextricably tied to the form of general intelligence which by default puts us in the "dangerous capabilities" regime, or if not strongly bound in theory, then strongly bound in practice

I do think this disagreement is substantially downstream of a disagreement about what "alignment" represents, i.e. I think that you might attempt outer alignment of GPT-4 but not inner alignment, because GPT-4 doesn't have the internal bits which make inner alignment a relevant concern.

Aleksi Maunu @ 2023-09-23T09:51 (+4)

GPT-4 doesn't have the internal bits which make inner alignment a relevant concern.

Is this commonly agreed upon even after fine-tuning with RLHF? I assumed it's an open empirical question. The way I understand it is that there's a reward signal (human feedback) that's shaping different parts of the neural network that determines GPT-4's outputs, and we don't have good enough interpretability techniques to know whether some parts of the neural network are representations of "goals", and even less so what specific goals they are.

I would've thought it's an open question whether even base models have internal representations of "goals", either always active or only active in some specific context. For example if we buy the simulacra (predictors?) frame, a goal could be active only when a certain simulacrum is active.

(would love to be corrected :D)

RobertM @ 2023-09-23T21:43 (+2)

I don't know if it's commonly agreed upon; that's just my current belief based on available evidence (to the extent that the claim is even philosophically sound enough to be pointing at a real thing).

Nora Belrose @ 2023-09-19T15:32 (+5)

Please stop saying that mind-space is an "enormously broad space." What does that even mean? How have you established a measure on mind-space that isn't totally arbitrary?

What if concepts and values are convergent when trained on similar data, just like we see convergent evolution in biology?

RobertM @ 2023-09-20T06:16 (+2)

Please stop saying that mind-space is an "enormously broad space." What does that even mean? How have you established a measure on mind-space that isn't totally arbitrary?

Why don't you make the positive case for the space of possible (or, if you wish, likely) minds being minds which have values compatible with the fulfillment of human values? I think we have pretty strong evidence that not all minds are like this even within the space of minds produced by evolution.

What if concepts and values are convergent when trained on similar data, just like we see convergent evolution in biology?

Concepts do seem to be convergent to some degree (though note that ontological shifts at increasing levels of intelligence seem likely), but I do in fact think that evidence from evolution suggests that values are strongly contingent on the kinds of selection pressures which produced various species.

Nora Belrose @ 2023-09-24T16:45 (+2)

The positive case is just super obvious, it's that we're trying very hard to make these systems aligned, and almost all the data we're dumping into these systems is generated by humans and is therefore dripping with human values and concepts.

I also think we have strong evidence from ML research that ANN generalization is due to symmetries in the parameter-function map which seem generic enough that they would apply mutatis mutandis to human brains, which also have a singular parameter-function map (see e.g. here).

I do in fact think that evidence from evolution suggests that values are strongly contingent on the kinds of selection pressures which produced various species.

Not really sure what you're getting at here/why this is supposed to help your side

bcforstadt @ 2023-09-23T20:58 (+1)

ontological shifts seem likely

What do you mean by this? (Compare "we don't know how to prevent an ontological collapse, where meaning structures constructed under one world-model compile to something different under a different world model". Is this the same thing?) Is there a good writeup anywhere of why we should expect this to happen? This seems speculative and unlikely to me.

evidence from evolution suggests that values are strongly contingent on the kinds of selection pressures which produced various species

The fact that natural selection produced species with different goals/values/whatever isn't evidence that that's the only way to get those values, because "selection pressure" isn't a mechanistic explanation. You need more info about how values are actually implemented to rule out that a proposed alternative route to natural selection succeeds in reproducing them.

RobertM @ 2023-09-23T21:41 (+2)

Re: ontological shifts, see this arbital page: https://arbital.com/p/ontology_identification.

The fact that natural selection produced species with different goals/values/whatever isn't evidence that that's the only way to get those values, because "selection pressure" isn't a mechanistic explanation. You need more info about how values are actually implemented to rule out that a proposed alternative route to natural selection succeeds in reproducing them.

I'm not claiming that evolution is the only way to get those values, merely that there's no reason to expect you'll get them by default by a totally different mechanism. The fact that we don't have a good understanding of how values form even in the biological domain is a reason for pessimism, not optimism.

bcforstadt @ 2023-09-24T00:20 (+1)

The point I was trying to make is that natural selection isn't a "mechanism" in the right sense at all. It's a causal/historical explanation, not an account of how values are implemented. What is the evidence from evolution? The fact that species with different natural histories end up with different values really doesn't tell us much without a discussion of mechanisms. We need to know 1) how different are the mechanisms actually used to point biological and artificial cognitive systems toward ends and 2) how many possible mechanisms to do so are there.

The fact that we don't have a good understanding of how values form even in the biological domain is a reason for pessimism, not optimism.

One reason for pessimism would be that human value learning has too many messy details. But LLMs are already better behaved than anything in the animal kingdom besides humans and are pretty good at intuitively following instructions, so there is not much evidence for this problem. If you think they are not so brainlike, then this is evidence that not-so-brainlike mechanisms work. And there are also theories that value learning in current AI works roughly similarly to value learning in the brain.

Which is just to say I don't see the prior for pessimism, just from looking at evolution.

Arepo @ 2024-09-10T06:48 (+4)

The orthogonality thesis is trivially a motte and bailey - you're using it as one right here! The original claim by Bostrom was a statement against logical necessity: 'an artificial mind need not care intrinsically about any of those things' (emphasis mine); yet in your comment you're equivocating with a statement that's effectively about probability: 'sampling from an extremely tiny section of an enormously broad space'.

You might be right in your claim, but your claim is not what the arguments in the orthogonality thesis papers purport to show.

I would also like to make a stronger counterclaim: I think a priori arguments about 'probability space' (dis)prove way too much. If you disregard empirical data, you can use them to disprove anything, like 'the height of Earth fauna is contingent on specific details of various evolutionary pressures and environmental circumstances, and is sampled from a tiny section on the number line, so we should expect that alien fauna we encounter will be arbitrarily tall (or perhaps have negative height)'. If Earth-evolved intelligence tends even weakly to have e.g. sympathy towards non-kin, that is evidence that Earth-evolved intelligence is a biased sample, but also evidence that there exists some pull towards non-kin-sympathy in intelligence space.

My sense is that (as your footnote hints at), the more intelligent animals are, the more examples we seem to see of individual non-reciprocal altruism to non-kin (there are many clear examples of non-reciprocal altruism across species in cetaceans for e.g., and less numerous but still convincing examples of it in corvids).

Gerald Monroe @ 2023-09-18T23:22 (+1)

As a side note the actual things that break this loop are

(1) we don't use superintelligent singletons and probably won't, I hope. We instead create context limited model instances of a larger model and tell it only about our task and the model doesn't retain information. This "break an ASI into a billion instances, each of which lives only in the moment" approach is a powerful alignment method.

(2) it seems to take an absolutely immense amount of compute hardware to host even today's models, which are significantly below human intelligence in some expensive-to-fix ways. (For example, how many H100s would you need for useful realtime video perception?)

This means a "rogue" singleton would have nowhere to exist, as it would be too heavy in weights and required bandwidth to run on a botnet.

This breaks everything else.

It's telling that Bostrom's PhD is in philosophy and I don't see any industry experience on his wiki page. He is correct if you ignore real-world limitations on AI.

DanielFilan @ 2023-09-19T21:31 (+6)

we don't use superintelligent singletons and probably won't, I hope. We instead create context limited model instances of a larger model and tell it only about our task and the model doesn't retain information.

FYI, current cutting-edge large language models are trained on a massive amount of text on the internet (in the case of GPT-4, likely approximately all the text OpenAI could get their hands on). So they certainly have tons of information about stuff other than the task at hand.

Gerald Monroe @ 2023-09-19T21:35 (+2)

This is not what that statement means.

What it means is the model has no context of its history since training. It has no way to tell whether the task it has been given is "real". It does not know if other copies of itself or other AIs are checking its outputs for correctness, with serious consequences if it sabotages the output. It doesn't know it's not still in training. It doesn't know if there are a billion instances of it or just 1.

We can scrub all this information fairly easily and we already do this as of right now.

We can also make trick output where we try to elicit latent deception by giving information that would tell the model it's time to betray.

We can also work backwards and find what the adversarial inputs are. When will the model change its answer for this question?

Rafael Harth @ 2023-09-16T14:49 (+62)

This essay seems predicated on a few major assumptions that aren't quite spelled out, or at any rate not presented as assumptions.

Far from being “behind” capabilities, it seems that alignment research has made great strides in recent years. OpenAI and Anthropic showed that Reinforcement Learning from Human Feedback (RLHF) can be used to turn ungovernable large language models into helpful and harmless assistants. Scalable oversight techniques like Constitutional AI and model-written critiques show promise for aligning the very powerful models of the future. And just this week, it was shown that efficient instruction-following language models can be trained purely with synthetic text generated by a larger RLHF’d model, thereby removing unsafe or objectionable content from the training data and enabling far greater control.

This assumes that making AI behave nicely is genuine progress in alignment. The opposing take is that all it's doing is making the AI play a nicer character, but doesn't lead it to internalize its goals, which is what alignment is actually about. And in fact, AI playing rude characters was never the problem to begin with.

You say that alignment is linked to capability in the essay, but this also seems predicated on the above. This kind of "alignment" makes the AI better at figuring out what the humans want, but historically, most thinkers in alignment have always assumed that AI gets good at figuring out what humans want, and that it's dangerous anyway.

What worries me the most is that the primary reason for this view that's presented in the essay seems to be a social one (or otherwise, I missed it).

We don’t need to speculate about what would happen to AI alignment research during a pause— we can look at the historical record. Before the launch of GPT-3 in 2020, the alignment community had nothing even remotely like a general intelligence to empirically study, and spent its time doing theoretical research, engaging in philosophical arguments on LessWrong, and occasionally performing toy experiments in reinforcement learning.

The Machine Intelligence Research Institute (MIRI), which was at the forefront of theoretical AI safety research during this period, has since admitted that its efforts have utterly failed. Stuart Russell’s “assistance game” research agenda, started in 2016, is now widely seen as mostly irrelevant to modern deep learning— see former student Rohin Shah’s review here, as well as Alex Turner’s comments here. The core argument of Nick Bostrom’s bestselling book Superintelligence has also aged quite poorly.[2]

At best, these theory-first efforts did very little to improve our understanding of how to align powerful AI. And they may have been net negative, insofar as they propagated a variety of actively misleading ways of thinking both among alignment researchers and the broader public. Some examples include the now-debunked analogy from evolution, the false distinction between “inner” and “outer” alignment, and the idea that AIs will be rigid utility maximizing consequentialists (here, here, and here).

During an AI pause, I expect alignment research would enter another “winter” in which progress stalls, and plausible-sounding-but-false speculations become entrenched as orthodoxy without empirical evidence to falsify them. [...]

I.e., MIRI's approach to alignment hasn't worked out, therefore the current work is better. But this argument doesn't work -- both approaches can be failures! I think Eliezer would argue that MIRI's work had a chance of leading to an alignment solution but has failed, whereas current alignment work (like RLHF on LLMs) has no chance of solving alignment.

If this is true, then the core argument of this essay collapses, and I don't see a strong argument here that it's not true. Why should we believe that MIRI is wrong about alignment difficulty? The fact that their approach failed is not strong evidence of this; if they're right, then they weren't very likely to succeed in the first place.

And even if they're completely wrong, that still doesn't prove that current alignment approaches have a good chance of working.

Another assumption you make is that AGI is close and, in particular, will come out of LLMs. E.g.:

Such international persuasion is even less plausible if we assume short, 3-10 year timelines. Public sentiment about AI varies widely across countries, and notably, China is among the most optimistic.

This is a case where you agree with most MIRI staff but, e.g., Stuart Russell and Steven Byrnes are on record saying that we likely will not get AGI out of LLMs. If this is true, then RLHF done on LLMs is probably even less useful for alignment, and it also means the hard verdict on the arguments in Superintelligence is unwarranted. Things could still play out a lot more like classical AI alignment thinking in the paradigm that will actually give us AGI.

And I'm also not ready to toss out the inner vs. outer paradigm just because there was one post criticizing it.

Nora Belrose @ 2023-09-16T15:10 (+13)

The opposing take is that all it's doing is making the AI play a nicer character, but doesn't lead it to internalize its goals, which is what alignment is actually about.

I think this is a misleading frame which makes alignment seem harder than it actually is. What does it mean to "internalize" a goal? It's something like, "you'll keep pursuing the goal in new situations." In other words, goal-internalization is a generalization problem.

We know a fair bit about how neural nets generalize, although we should study it more (I'm working on a paper on the topic atm). We know they favor "simple" functions, which means something like "low frequency" in the Fourier domain. In any case, I don't see any reason to think the neural net prior is malign, or particularly biased toward deceptive, misaligned generalization. If anything the simplicity prior seems like good news for alignment.

Rafael Harth @ 2023-09-16T15:58 (+10)

It's something like, "you'll keep pursuing the goal in new situations." In other words, goal-internalization is a generalization problem.

I think internalizing means "pursuing as a terminal goal", whereas RLHF arguably only makes the model pursue it as an instrumental goal (in which case the model would be deceptively aligned). I'm not saying that GPT-4 has a distinction between instrumental and terminal goals, but a future AGI, whether an LLM or not, could have terminal goals that are different from instrumental goals.

You might argue that deceptive alignment is also an obsolete paradigm, but I would again respond that we don't know this, or at any rate, that the essay doesn't make the argument.

Nora Belrose @ 2023-09-16T16:38 (+16)

I don’t think the terminal vs. instrumental goal dichotomy is very helpful, because it shifts the focus away from behavioral stuff we can actually measure (at least in principle). I also don’t think humans exhibit this distinction particularly strongly. I would prefer to talk about generalization, which is much more empirically testable and has a practical meaning.

Rafael Harth @ 2023-09-16T16:59 (+14)

What if it just is the case that AI will be dangerous for reasons that current systems don't exhibit, and hence we don't have empirical data on? If that's the case, then limiting our concerns to only concepts that can be empirically tested seems like it means setting ourselves up for failure.

tommcgrath @ 2023-09-16T19:43 (+16)

I'm not sure what one is supposed to do with a claim that can't be empirically tested - do we just believe it/act as if it's true forever? Wouldn't this simply mean an unlimited pause in AI development (and why does this only apply to AI)?

Joe Collman @ 2023-09-16T22:29 (+4)

In principle, we do the same thing as with any claim (whether explicitly or otherwise):

- Estimate the expected value of (directly) testing the claim.

- Test it if and only if (directly) testing it has positive EV.
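To make the two steps above concrete, here is a minimal sketch of the decision rule. The `Outcome` type, the function names, and every number are hypothetical placeholders chosen for illustration, not estimates of anything:

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    probability: float  # assumed probability of this outcome
    value: float        # assumed payoff (positive) or cost (negative)


def expected_value(outcomes):
    """Probability-weighted sum of outcome values."""
    total_p = sum(o.probability for o in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(o.probability * o.value for o in outcomes)


def should_test_directly(outcomes):
    """Test if and only if direct testing has positive expected value."""
    return expected_value(outcomes) > 0


# Hypothetical ordinary claim: a failed test is mildly costly.
ordinary = [Outcome(0.9, 10.0), Outcome(0.1, -20.0)]
# Hypothetical dangerous claim: a failed test is rare but catastrophic.
dangerous = [Outcome(0.99, 10.0), Outcome(0.01, -10000.0)]

print(should_test_directly(ordinary))
print(should_test_directly(dangerous))
```

With these made-up numbers the ordinary claim is worth testing and the dangerous one is not, even though the bad outcome is ten times less likely in the second case. The disagreement is over which inputs are realistic, not over the rule.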

The point here isn't that the claim is special, or that AI is special - just that the EV calculation consistently comes out negative (unless someone else is about to do something even more dangerous - hence the need for coordination).

This is unusual and inconvenient. It appears to be the hand we've been dealt.

I think you're asking the right question: what is one supposed to do with a claim that can't be empirically tested?

Gerald Monroe @ 2023-09-19T01:24 (+2)

So just to summarize:

No deceptive or dangerous AI has ever been built or empirically tested. (1)

Historically AI capabilities have consistently been "underwhelming", far below the hype. (2)

If we discuss "OK, we build a large AGI, give it persistent memory and online learning, isolate it in an air-gapped data center, and hand carry data to the machine via hardware-locked media; what is the danger?", you are going to respond with either:

"I don't know how the model escapes but it's so smart it will find a way" or (3)

"I am confident humanity will exist very far into the future so a small risk now is unacceptable (say 1-10 percent pDoom)".

and if I point out that this large ASI model needs thousands of H100 accelerator cards and megawatts of power and specialized network topology to exist and there is nowhere to escape to, you will argue "it will optimize itself to fit on consumer PCs and escape to a botnet". (4)

Have I summarized the arguments?

Like, we're supposed to coordinate an international pause, and I see 4 unproven assertions above that have zero direct evidence. As for the one about humanity existing far into the future, I don't want to argue that because it's not falsifiable.

Shouldn't we wait for evidence?

tommcgrath @ 2023-09-18T17:43 (+2)

Thanks, I mean more in terms of "how can we productively resolve our disagreements about this?", which the EV calculations are downstream of. To be clear, it doesn't seem to me that this is necessarily the hand we've been dealt, but I'm not sure how to reduce the uncertainty.

At the risk of sidestepping the question, the obvious move seems to be "try harder to make the claim empirically testable"! For example, in the case of deception, which I think is a central example, we could (not claiming these ideas are novel):

- Test directly for deception behaviourally and/or mechanistically (I'm aware that people are doing this, think it's good and wish the results were more broadly shared).

- Think about what aspects of deception make it particularly hard, and try to study those in isolation and test those. The most important example seems to me to be precursors: finding more testable analogues to the question of "before we get good, undetectable deception do we get kind of crappy detectable deception?"

Obviously these all run some (imo substantially lower) risks but seem well worth doing. Before we declare the question empirically inaccessible we should at least do these and synthesise the results (for instance, what does grokking say about (2)?).

tommcgrath @ 2023-09-18T17:53 (+3)

(I'm spinning this comment out because it's pretty different in style and seems worth being able to reply to separately. Please let me know if this kind of chain-posting is frowned upon here.)

Another downside to declaring things empirically out of reach and relying on priors for your EV calculations and subsequent actions is that it more-or-less inevitably converts epistemic disagreements into conflict.

If it seems likely to you that this is the way things are (and so we should pause indefinitely) but it seems highly unlikely to me (and so we should not) then we have no choice but to just advocate for different things. There's not even the prospect of having recourse to better evidence to win over third parties, so the conflict becomes no-holds-barred. I see this right now on Twitter and it makes me very sad. I think we can do better.

Joe Collman @ 2023-10-05T03:54 (+1)

(apologies for slowness; I'm not here much)

I'd say it's more about being willing to update on less direct evidence when the risk of getting more direct evidence is high.