Cause Plurality vs Cause Prioritization

By Joey🔸 @ 2024-11-11T09:59 (+86)

TLDR:

Prioritization is one of Effective Altruism's most fundamental values. In fact, almost all the EA principles outlined on Wikipedia (Impartiality, Cause Prioritization, Cost-Effectiveness, Counterfactual Reasoning) point towards the concept that some ways of doing good are better than others. However, there is another factor we need to balance: plurality. The EA movement is young; it is still learning and evolving. We do not have all the answers (and even if we had all the facts, there would still be variation in values). We should expect that new causes, career paths, and ways to do good will emerge. Some of the debates that divide the EA movement might not be resolved in our lifetime, due to deep ethical or epistemic differences. If our long-term goal is to have the most impact, it would therefore likely be wise to keep a somewhat open mind, rather than putting all of our focus and energy on the one area we currently consider the most effective. I feel that there is an optimal balance between plurality and prioritization that leads to the most impact, and that most of the charity movement does far too little prioritization while the EA movement often does too much. I think having something closer to a top-five list of cause areas, career paths, etc. will result in the most impact long term and also fits the level of understanding we have about the world.

The CE/AIM team and I have a somewhat unique view on how to achieve balance in this regard. Broadly, it boils down to not focusing exclusively on one cause area, but instead viewing causes and careers in different tiers of impact. There are four questions one could ask of this approach: 1) Why not prioritize more? 2) Why not be more pluralistic? 3) What are the common objections to our approach? 4) How does this work in practice?

Why do we not lean harder into prioritization?

Doing so could mean, for example, focusing exclusively on the area we think is most likely to be the most promising. Overall, we think this would lead to less impact. To explain why, we'll first discuss some advantages of having multiple cause areas on the docket, and then break down some examples and examine key assumptions behind these cause areas on which we feel it would be very difficult to reach a single robust, definitive conclusion.

Epistemic modesty

In short, we generally believe it is almost impossible to reach a definitive, robust consensus on which area is the single top cause. The factors differentiating cause priorities are very complex, and we think they are pretty intractable because prioritizing between the top areas requires complex value judgements and epistemic calls. A huge number of such calls are involved, and we generally favor multi-tool epistemology and looking for convergence. Among the very top areas there is relatively little convergence: a naive cost-effectiveness estimate might favor cause A (at least until a single number in it is changed), while a synthesis of expert views might favor cause B (at least until you ask another set of experts).

Example: The one true top charity

If our goal is to have the most impact, it might seem fruitful to find the single best charity, yet both charity evaluators and grantmakers whose stated goal is having the most impact (such as GiveWell and Open Philanthropy) still support multiple charities. When we think about why this occurs, the answer is both simple and applicable to the question of cause areas. The differences between the GiveWell (GW) top charities are too small to make a definite claim as to which is best, and there are additional factors, such as room for funding, which make it unwise to double down on a single charity rather than several.

Example: Mental Health vs. Global Health

If we look at a specific cause area example, there have been many recent and historical blog posts debating the value of mental health. Some of the questions debated are empirical, and significant progress has been made on them (e.g., how long the effects of a Cognitive Behavioral Therapy (CBT) treatment last after completion). However, others are ethical or otherwise very difficult to make progress on, and even thoughtful actors have reached different conclusions. An example of this might be the question “when is a life worth bringing into existence?”. I do not expect substantial progress to be made on this question in the near future, and yet it is a pivotal factor in the debate of how important a life-saving intervention is, relative to a happiness-focused one. This is just one consideration, but you don’t have to spend long looking into an area to find many more such examples.

Limiting factors

Engaging with multiple cause areas deemed comparable in impact offers many benefits. Take our organization, AIM: ecosystem limitations are a significant factor for us. When launching a charity, we need 1) founders, 2) ideas, and 3) funding. Each of these elements is fairly limited within any given cause area (and I believe limiting factors are often more important than the total scale). For example, if we aimed to launch ten animal charities a year (rather than ten charities across all the cause areas we currently focus on), I do not think the weakest two would be anywhere near as impactful as the top two, and only a small minority of them would secure long-term funding. With animal charities making up around a third of those we have launched, it’s likely we're already approaching some of these limitations. This means that even if we thought animal charities were, on average, more impactful than human ones, the difference would have to be substantial for us to think that adding a ninth or tenth animal charity into the ecosystem would be more impactful than adding the first or second human-focused charity. I believe this consideration can apply to other cause areas too. Given the current ecosystem, I suspect that founding more than three to five charities per year within any given area would lead to a situation where charities would have to compete with each other for talent, funding, and mentorship, potentially undermining their overall effectiveness. I believe these sorts of considerations apply far beyond just AIM. Many funders only consider charities within a narrow scope, and many individuals choosing career paths will find they're a much better natural fit for some areas than for others. These assumptions are only valid if the cause areas are within a comparable range of impact.
If we were to consider one cause area to be significantly superior to others by a factor of thousands, it is possible that the tenth charity within that area, despite having less impact, could still outperform the highest impact charity of another cause area. However, we find this assertion to be bold and unsupported, as we will discover when we look at the examples.
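The tradeoff between "the tenth charity in a much better area" and "the first charity in a comparable area" can be made concrete with a toy model. The sketch below is not AIM's method; it simply assumes (hypothetically) that each additional charity launched in an area achieves a fixed fraction `decay` of the previous one's impact, and asks how many times better area A's first charity must be for A's tenth charity to match area B's first. The `decay` value of 0.7 is made up for illustration.

```python
# Toy model, not AIM's method: each additional charity launched in an area is
# assumed to achieve a fixed fraction ("decay") of the previous one's impact.


def marginal_impact(first_impact: float, n: int, decay: float) -> float:
    """Impact of the n-th charity in an area (n = 1 is the first one)."""
    if n < 1 or not 0 < decay <= 1:
        raise ValueError("n must be >= 1 and decay must be in (0, 1]")
    return first_impact * decay ** (n - 1)


def break_even_multiplier(n: int, decay: float) -> float:
    """How many times better area A's first charity must be for A's n-th
    charity to match the first charity in area B (whose first impact is 1)."""
    return 1 / marginal_impact(1.0, n, decay)


if __name__ == "__main__":
    decay = 0.7  # hypothetical rate of diminishing returns
    b_first = marginal_impact(1.0, 1, decay)
    for a_multiplier in (2, 1000):
        tenth = marginal_impact(a_multiplier, 10, decay)
        print(f"A {a_multiplier}x better: 10th A charity = {tenth:.2f}, "
              f"1st B charity = {b_first:.2f}")
    print(f"break-even for the 10th charity: {break_even_multiplier(10, decay):.1f}x")
```

Under these made-up numbers, an area that is 2x better loses at the tenth charity, while one that is 1000x better still wins, which is the shape of the argument above; the real answer depends entirely on how fast returns actually diminish in each area.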

Talent and resource absorbency

Closely connected to limiting factors, having more cause areas gives considerably more room for high-absorbency career paths and thus better utilization of talent. Just as limited room for more funding stops a single charity from being the best donation target indefinitely, limited talent absorbency can affect cause areas more broadly. Some people will likely be much better personal fits for, and more motivated by, certain jobs and cause areas. Having a movement or programs that are broader (such as education programs, in AIM's case) allows us to capture more of this talent and these resources.

Cause X

It's very possible we have not found all the areas that are most impactful to work on, and having a more open view (e.g. multiple top cause areas) makes it much more likely for another one to be added to the list. Sometimes the EA movement forgets that we did not arrive at our current cause areas using a very thorough methodology; the process was circumstantial and historical. EA has not gone through every problem that causes over 10 million DALYs and considered whether it is significant enough to warrant more time. Even if there is in fact a single best cause area, there could still be a strong argument that we have yet to find it and need to keep ourselves open to new areas. It is pretty rare for a movement that has been highly invested in one cause area for years to make a full pivot, whereas it seems much more likely that an already pluralistic movement could find and adopt new areas.

Cross applicable lessons

We have found that quite a lot of lessons can be cross-applied between cause areas, which happens more often in a pluralistic movement (e.g. animal charities in the AIM cohort have benefited a lot from iterating with global health charities). Even if we thought a single cause area was best, there would be a case for having deep interactions with other cause areas to reduce echo chambers and learn best practices from other fields.

Why do we not lean harder into plurality?

When prioritizing, judgment calls can be challenging; they are often subjective and frequently unclear, but we still have to make them, since doing the most good implies making such calls. Not all cases are equally complex. The number of assumptions you have to make when comparing the absolute peaks of two highly promising cause areas is much larger than when comparing the weakest areas to the strongest.

One analogy: if you are comparing the heights of two mountains from far away, it might be hard to tell which is taller, but it is not as hard to tell a mountain from a hill, even in imperfect weather conditions.

A closer-to-home analogy is how we compare things in non-charitable domains. Which technology company is the best might be hotly debated, with different people arguing for Apple or Tesla, but Uncle Bob's Toasters isn’t on anyone's top list. It might be hard to know what the best blender per price point is, but it's pretty easy to identify some that are clearly not going to be at the top of the list. When looking for “the best” in different domains, it's often pretty easy to eliminate 90% of the contenders without making controversial assumptions.

So if we recognize that comparisons can and should happen at some level, but that in other cases comparison is extremely difficult, how do we know when to lean toward plurality and when toward prioritization? Below are several examples, ordered from easiest to hardest in terms of how viable it is to prioritize.

Examples at different levels of comparability

Let's consider the simplest example: charities that work towards similar endline outcomes. If two charities work on malaria with the same ultimate goal of reducing child deaths, comparing them is much more straightforward. Sure, there may be some ethical assumptions to be made (maybe the two charities operate in different countries, and you have to assume that saving a life in each country is roughly equivalent), but these are relatively clean assumptions that most people would agree on (particularly if they were behind the veil of ignorance), even if they come from varying backgrounds and subscribe to a wide range of ethical perspectives. It seems quite reasonable that you could select 1-3 of the best malaria charities and fairly confidently claim that a marginal dollar would have the most impact donated to one of those three.

To pick a fairly easy example of a comparison that seems robust even across cause areas: donkey sanctuaries get a surprisingly large amount of funding, but it's hard to find a consequentialist, impact-focused reason to prioritize them over many other charities. Could donkeys be worth orders of magnitude more moral weight than cows, or other animals, that could be helped for a fraction of the cost? I feel pretty confident rejecting this assumption, and I think few people with EA-ish mindsets would want to defend it. Similarly, when it comes to epistemics (e.g. evidence base and theories of change), it does not seem that we are much more certain about how to help donkeys in a sanctuary than about how to help cats, dogs, farmed animals, or other well-understood animals, so it seems equally hard to make a case for focusing on donkeys on epistemic grounds.

Let's look at a slightly more complex case: comparing health charities that all use DALY-based outcomes. DALYs have many potential flaws, but they do enable cross-comparison in a relatively clear way, and they are generated by a large number of surveys (broad enough that an individual's intuition generally should not sway them). This enables cross-comparison across numerous charities. There is still open debate, but a lot of progress can also be made with relatively clear and widely agreed-on ethical assumptions. The end result of this is something like the GiveWell recommendations (they tend to recommend 4-8 charities), which I believe are a fairly safe bet for a top charity within that given worldview.

A more challenging case is comparing interventions that focus on different metrics, such as income vs. health. GiveWell tackles this by using a combination of donor, recipient, and staff surveys to gather an average of moral weights from a large number of people. Is this trade-off objective, or set in stone? Not really, but it does provide some insight into how a broad group of people would prioritize. This type of data and comparison can take us a long way, but not all the way to a single "true" answer, and you could pretty easily imagine a GW-like charity evaluator that ranks income as x4 as important as GW does coming to pretty different but still highly compelling top charities. In my view, the data are just too noisy to give a clear, correct answer to this sort of question. GiveWell’s current trade-offs result in no income charities being recommended, but I think many would be hard-pressed to make a knockdown case, with our current values and epistemic understanding, that the top income charities would never compete with the top health ones. Given how soft the assumptions are, this starts to get into territory where I would prefer there to be two lists, one focused on income and one on health.
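To illustrate how sensitive a ranking can be to the income-vs-health moral weight, here is a minimal sketch with two made-up programs. The program names, benefit numbers, and the linear scoring rule (health units count 1, income units count `income_weight`) are all hypothetical assumptions, not GiveWell's actual model or any real charity's data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Program:
    name: str
    health_units: float  # hypothetical health benefit per $1,000 (arbitrary units)
    income_units: float  # hypothetical income benefit per $1,000 (arbitrary units)


def value(p: Program, income_weight: float) -> float:
    """Linear score: health units count 1, income units count income_weight."""
    return p.health_units + income_weight * p.income_units


def top_pick(programs: list[Program], income_weight: float) -> str:
    """Name of the highest-scoring program under the given income weight."""
    return max(programs, key=lambda p: value(p, income_weight)).name


def flip_weight(a: Program, b: Program) -> float:
    """Income weight at which programs a and b score equally."""
    income_gap = b.income_units - a.income_units
    if income_gap == 0:
        raise ValueError("programs differ only in health; no flip point")
    return (a.health_units - b.health_units) / income_gap


PROGRAMS = [
    Program("health-focused", health_units=10.0, income_units=0.0),
    Program("income-focused", health_units=0.0, income_units=3.0),
]

for w in (1.0, 4.0):
    print(f"income weight {w}: top pick = {top_pick(PROGRAMS, w)}")
print(f"ranking flips at income weight {flip_weight(*PROGRAMS):.2f}")
```

With these invented inputs, quadrupling the income weight is enough to change which program comes out on top; whether a real flip happens depends on the actual benefit estimates, which is exactly where the noise lies.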

You can imagine what the next case looks like: comparing radically different outcomes across different populations, say shrimp welfare vs malaria bed nets. With this sort of comparison, the number of assumptions and epistemic states you need to include to come to a definitive answer is an order of magnitude larger than even in the fairly difficult case of income vs health. You start getting into ethical arguments about sentience and epistemic arguments about cost-effectiveness vs evidence base. Coming to a definitive answer on this sort of case requires a lot of assumptions, each of which one could spend a lifetime diving deeper into without ending up confident in the outcome. I think this sort of case sits fairly safely in the “really hard to near impossible to compare” category. A worldview diversification approach supporting the peaks of both areas seems like the clearest way forward.

Common Objections

There are a few objections that arise quite often, and I'll briefly address a couple of them here.

- Why not just pick your best guess (e.g., something you have 51% confidence in), as that will result in a better expected value (EV)?

Aside from the fact that I'm not sure it does (due to the practical reasons above), I also think that unless you've devoted significant time to it, favoring your own ethical theory/epistemic stance is hard to justify. One could envision a veil-of-ignorance-type reasoning underlying a charitable donation: How would I desire the world to behave if I could have been any individual within it, thereby possessing diverse ethical and epistemic assumptions? Even if you believe morals are all subjective, you could still quite easily be wrong about your own assessment (for example, consider how your values have changed in the past).

- If you descend into cluelessness so quickly, should you really take any action?

I think this is also an extreme interpretation. If the evidence is sufficiently robust between areas, it's not a stretch to compare them. There are often comparisons that require few ethical assumptions, and actions that look robustly beneficial. No utopias involve children eating lead paint.

How does this work in practice?

In practice, I believe this aligns well with frameworks of epistemic and moral modesty and with cluster thinking, but with a more applied angle (e.g., using hedging words doesn't solve the problem, but diversifying the set of people making an important decision does).

Tier-Based Cause Area Support

One way to bring balance is through a tier-based approach. For instance, having a top-five list of cause areas or career paths can mitigate the negative consequences that arise from narrow perspectives or exclusionary viewpoints. I even think drawing a fairly arbitrary line in the sand (e.g. top 5) is better than having no plurality and better than having no prioritization.

Favor Diversification of Power/Funding

The way funding is divided can either lead to more diverse perspectives, or it can narrow them down. For example, more diverse calls will come from a funding circle where each funder makes their own decisions with shared information than if those same funders donated to a single program officer who makes the decisions. In practice, the single-program-officer method tends to favor a few charities and creates single points of control over large shares of the funding. The same principle applies to less tangible aspects, such as only ever having one person give all the talks or handle the bulk of public outreach for a movement. Having 10 heroes rather than one results in a more pluralistic approach.

Not Burning Bridges or Using Polarizing Techniques

This topic became so extensive that I wrote a separate blog post about it. The TL;DR version is: the EA movement should not use techniques that alienate people from the EA community as a whole if they do not align with a particular subgroup within the community. These approaches not only have an immediate negative impact on the EA community but also carry long-term repercussions for the sub-community employing them.

Overall, I feel that there is an optimal balance between plurality and prioritization that leads to the most impact, and that most of the charity movement does far too little prioritization while the EA movement often does too much. I think having something closer to a top-five list of cause areas, career paths, etc. will result in the most impact long term and also fits the level of understanding we have about the world.

James Snowden🔸 @ 2024-11-12T17:57 (+15)

Nice post Joey, thanks for laying it out so clearly.

I agree with almost all of this. I find it interesting to think more about which domains / dimensions I'd prefer to push towards prioritization vs. pluralism:

- Speaking loosely, I think EA could push more towards pluralism for career decisions (where personal fit, talent absorbency and specialization are important), and FAW/GHD/GCR cause prioritization (where I at least feel swamped by uncertainty). But I'm pretty unsure on where to shift on the margin in other domains like GiveWell-style GHD direct delivery (where money is ~fungible, and comparisons can be meaningful).

- e.g. I suspect I'm more willing to prioritize than you on bednets vs. therapy. I think you / AIM are more positive than me about therapy (as currently delivered) on the merits. Sure, there's a lot of uncertainty, but having spent a bit of time with the CEAs, I just find it real hard to get to therapy being more cost-effective than bednets in high burden areas.

you could pretty easily imagine a GW-like charity evaluator that ranks income as x4 as important as GW does coming to pretty different but still highly compelling top charities

- I agree moral weights are one of the more uncertain parameters, though I think the range of reasonable disagreement given current evidence is a bit less wide than implied here. I'd love to see someone dive deep on the question and actually make the case that we should be using moral weights for income 4x higher vs. health, rather than just that such weights are plausible.

I guess a general theme is that I worry about a tendency to string together lots of "plausible" assumptions without defending them as their best guess, and that eroding a prioritization mindset. I think you'd probably agree with that in general, but suspect we have different practical views on some specifics.

Wayne_Chang @ 2024-11-16T03:08 (+15)

Hi, James! When it comes to assessing bednets vs therapy or more generally, saving a life vs happiness improvements for people, the meat eater problem looms large for me. This immediately complicates the trade-off, but I don't think dismissing it is justifiable on most moral theories given our current understanding that farm animals are likely conscious, feel pain, and thus deserve moral consideration. Once we include this second-order consideration, it's hard to know the magnitude of the impact given animal consumption, income, economic growth, wild animal, etc. effects. You've done a lot of work evaluating mental health vs life-saving interventions (thanks for that!), how does including animals impact your thinking? Do you think it's better that we should just ignore it (like GiveWell does)?

I think this goes back to Joey's case for a more pluralistic perspective, but I take your point that in some cases, we may be doing too much of that. It's just hard to know how wide a range of arguments to include when assessing this balance...

James Snowden🔸 @ 2024-11-16T04:22 (+7)

Hi Wayne, that’s fair. I hadn’t been including farmed animal welfare in the comparison because I don’t think people donating to therapy organizations are doing it for animal welfare reasons.

I don’t think it would be practical for GiveWell to include animal welfare in its evaluations. I think donors who care about both animal and human welfare would have more impact giving to separate projects optimising for each of those goals.

Vasco Grilo🔸 @ 2024-12-27T12:36 (+2)

Hi James.

I think donors who care about both animal and human welfare would have more impact giving to separate projects optimising for each of those goals

I think donors who care about human and animal welfare, in the sense of valuing 1 unit of welfare the same regardless of species, had better support animal welfare interventions roughly exclusively, instead of supporting interventions optimised for human welfare as well as ones optimised for animal welfare. I estimate:

NickLaing @ 2024-11-16T12:06 (+6)

Small thing: I think phrasing it as the "meat-eating" problem is better here; I will continue to plug this.

Vasco Grilo🔸 @ 2024-12-27T12:31 (+1)

Thanks for having in mind the meat-eating problem, Wayne. You may be interested in my post GiveWell may have made 1 billion dollars of harmful grants, and Ambitious Impact incubated 8 harmful organisations via increasing factory-farming?.

tlevin @ 2024-11-13T03:33 (+11)

I hope to eventually/maybe soon write a longer post about this, but I feel pretty strongly that people underrate specialization at the personal level, even as there are lots of benefits to pluralization at the movement level and large-funder level. There are just really high returns to being at the frontier of a field. You can be epistemically modest about what cause or particular opportunity is the best, not burn bridges, etc, while still "making your bet" and specializing; in the limit, it seems really unlikely that e.g. having two 20 hr/wk jobs in different causes is a better path to impact than a single 40 hr/wk job.

I think this applies to individual donations as well; if you work in a field, you are a much better judge of giving opportunities in that field than if you don't, and you're more likely to come across such opportunities in the first place. I think this is a chronically underrated argument when it comes to allocating personal donations.

NickLaing @ 2024-11-11T10:20 (+6)

I love this a lot. Something that often gets my hackles/Spidey sense up is when someone seems very confident about a particular cause being the "best" or "better", especially when making difficult (arguably even impossible like you say) comparisons between animals/current humans/future humans. I think it is helpful to make these difficult comparisons but only with deep humility and huge acknowledged uncertainty.

Another benefit of plurality is that it's easier to have a "bigger EA tent" both in human resource and funding. Us humans will always have different opinions not only about impact, but also the kind of things that we lean towards naturally and also where our competitive advantages are. The more we prioritize the more we may exclude.

DavidNash @ 2024-11-11T13:13 (+6)

I'm not sure having a "bigger EA tent" leads to more funding/interest, if anything, people may be less likely to fund/support/be interested in a group that supports many different areas rather than the cause they mainly care about. At least it seems like cause specific orgs get much more funding than multi-cause/EA orgs.

NickLaing @ 2024-11-11T16:02 (+6)

Yeah, I'm not sure we're really disagreeing here? I agree people are less interested in a group that supports different areas and that orgs should mostly be cause-specific. I'm talking about having a lot of grace for a wide range of "high-impact" causes under the broader EA tent, depending on people's epistemics and cost-effectiveness calculation methods. I think this is more helpful than doubling down on prioritisation and leaving groups or causes feeling like they might be on the "outer" edge of EA or excluded completely.

Maybe I'm being too vague here though...

Vasco Grilo🔸 @ 2024-12-27T13:28 (+1)

Hi Nick.

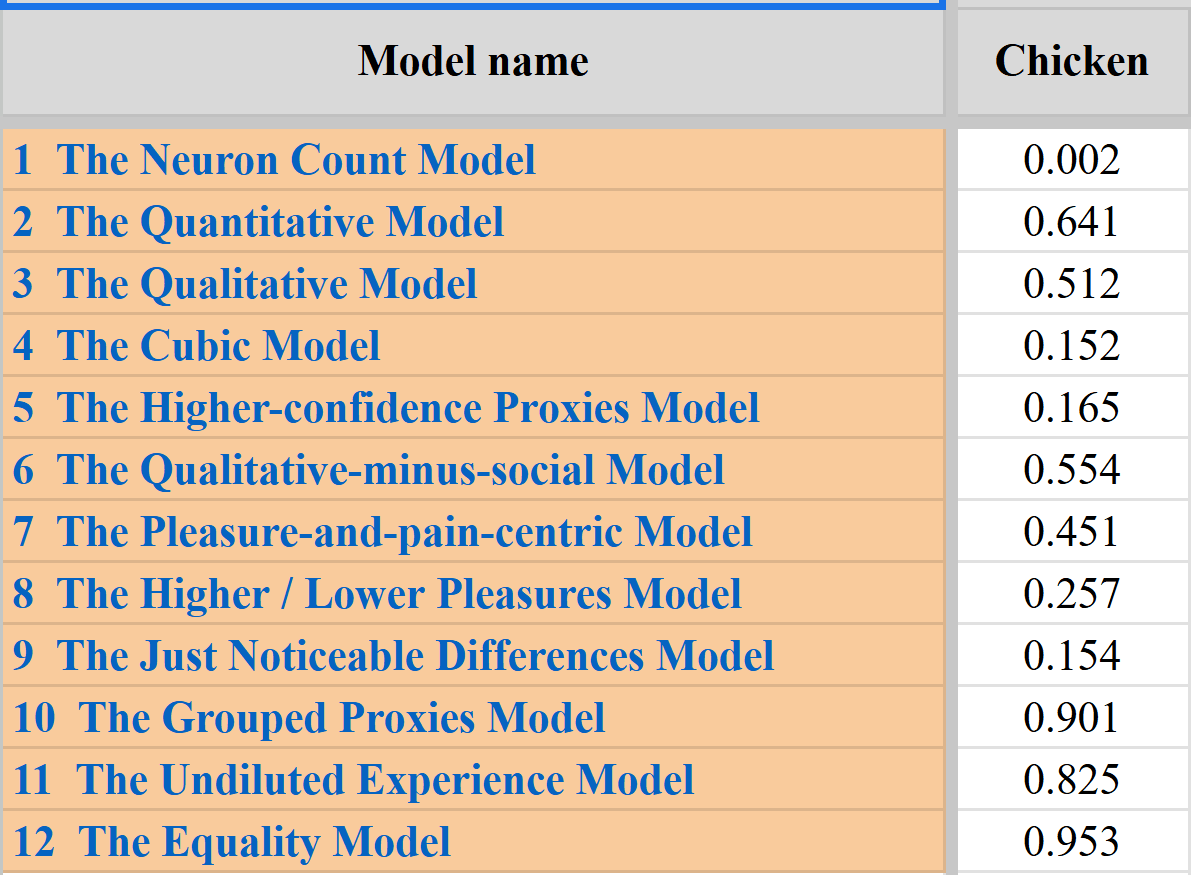

I think it is helpful to make these difficult comparisons but only with deep humility and huge acknowledged uncertainty.

I think acknowledging uncertainty implies that the best animal welfare interventions are way more cost-effective than the best human welfare interventions. The figure above shows Rethink Priorities' (RP's) welfare ranges of chickens conditional on sentience for the 12 models RP has considered. I estimate broiler welfare and cage-free campaigns are 168 and 462 times as cost-effective as GiveWell’s top charities using RP's median welfare range of chickens of 0.332. The welfare range of chickens under the neuron count model, the one leading to the lowest welfare range, is 0.528 % (= 0.002*0.876/0.332) as large as the one I assumed, in which case I would estimate cage-free campaigns to be 2.44 (= 462*5.28*10^-3) times as cost-effective as GiveWell's top charities. The welfare range of chickens under the cubic model, the one leading to the 2nd lowest welfare range, is 40.1 % (= 0.152*0.876/0.332) as large as the one I assumed, in which case I would estimate cage-free campaigns to be 185 (= 462*0.401) times as cost-effective as GiveWell's top charities. As a result, the best animal welfare interventions are way more cost-effective than the best human welfare interventions under 11 of the 12 models RP considered. I think one has to be super confident that the neuron count model, or another model outputting a similarly low welfare range, is right for the best human welfare interventions to be competitive. I suspect people supporting human welfare interventions would not be that confident in such models on reflection.
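The rescaling arithmetic in this comment can be reproduced with a short sketch. It takes the quoted figures as given: RP's median chicken welfare range of 0.332, the 0.876 multiplier the comment applies (treated here as a fixed constant; reading it as a sentience adjustment is an assumption), and the 462x cage-free estimate. Like the comment's own arithmetic, it assumes the cost-effectiveness multiple scales linearly with the welfare range.

```python
# Figures quoted in the comment. SCALE is the 0.876 multiplier it applies,
# taken as given (assumed, not confirmed, to be a sentience adjustment).
RP_MEDIAN = 0.332          # RP's median welfare range of chickens
SCALE = 0.876
CAGE_FREE_MULTIPLE = 462   # cage-free campaigns vs GiveWell's top charities at RP's median


def relative_range(model_range: float) -> float:
    """A model's welfare range, after the 0.876 multiplier, as a fraction of RP's median."""
    return model_range * SCALE / RP_MEDIAN


def rescaled_multiple(model_range: float, base_multiple: float = CAGE_FREE_MULTIPLE) -> float:
    """Cost-effectiveness multiple, assuming it scales linearly with the welfare range."""
    return base_multiple * relative_range(model_range)


for name, model_range in {"neuron count": 0.002, "cubic": 0.152}.items():
    print(f"{name}: {100 * relative_range(model_range):.3g} % of median, "
          f"{rescaled_multiple(model_range):.3g}x GiveWell's top charities")
# neuron count: 0.528 % of median, 2.44x GiveWell's top charities
# cubic: 40.1 % of median, 185x GiveWell's top charities
```

The linearity assumption is the key design choice: if cost-effectiveness did not scale proportionally with welfare range, these rescaled multiples would not follow.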

NickLaing @ 2024-12-28T03:38 (+7)

I don't really understand how you're acknowledging the uncertainty here? Basing it on RP's weights is one way of making these comparisons, and even those have enormous uncertainty. I was just saying I don't like it when someone is very confident that one cause or set of causes is better than others. I think there's still enormous uncertainty that animal welfare interventions are better than human ones. Are you saying that's not the case?

As a side note, I don't think there really is a wide range of meaningfully different RP "models", at least in the way I think of models. A separate "model" for me implies genuinely different inputs and assumptions, which are basically all the same for RP's models; that is why they differ far less than even an order of magnitude.

As I said in my post here

"After the project decided to assume hedonism and dismiss neuron count, the cumulative percent of these 90 behavioral proxies became the basis for their welfare range estimates. Although the team used a number of models in their final analysis, these models were mostly based on different weightings of these same behavioral proxies.[12]. Median final welfare ranges are therefore fairly well approximated by the simple formula.

(Behavioral proxy percent) x (Probability of Sentience) = Median Welfare range"

To me, then, it becomes a question of whether or not you agree with the assumptions RP makes along the way (and how strongly).
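The quoted approximation is simple enough to write as a function. This is a minimal sketch of the formula as stated, not RP's methodology; reading "behavioral proxy percent" as the share of the 90 proxies found present is my interpretation, and the inputs in the usage line are hypothetical placeholders, not RP's figures for any species.

```python
TOTAL_PROXIES = 90  # number of behavioural proxies mentioned in the quoted post


def approx_median_welfare_range(proxies_present: float, p_sentience: float,
                                total_proxies: int = TOTAL_PROXIES) -> float:
    """(Behavioral proxy percent) x (Probability of sentience), as a fraction.

    proxies_present may be fractional if proxies are partially credited.
    """
    if not 0 <= proxies_present <= total_proxies:
        raise ValueError("proxies_present must be between 0 and total_proxies")
    if not 0 <= p_sentience <= 1:
        raise ValueError("p_sentience must be a probability")
    return (proxies_present / total_proxies) * p_sentience


# Hypothetical inputs for illustration only (not RP's figures):
print(approx_median_welfare_range(45, 0.8))
```

Written this way, it is easy to see that disagreement must come from either which proxies count (and how they are weighted) or the sentience probability, which is the point about assumptions above.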

Vasco Grilo🔸 @ 2024-12-28T11:49 (+3)

I think there's still enormous uncertainty that animal welfare interventions are better than human ones. Are you saying that's not the case?

I think it is clear that the best animal welfare interventions are much more cost-effective than the best human welfare interventions.

After the project decided to assume hedonism and dismiss neuron count, the cumulative percent of these 90 behavioral proxies became the basis for their welfare range estimates. Although the team used a number of models in their final analysis, these models were mostly based on different weightings of these same behavioral proxies.

The welfare range of chickens is higher than RP's median under the 2 models besides the neuron count one which do not rely on behaviour:

- For the quantitative model, it is 1.69 (= 0.641*0.876/0.332) times as high.

- For the equality model, it is 2.51 (= 0.953*0.876/0.332) times as high.

If one puts at least 10 % weight on the quantitative model, which "aggregates several quantifiably characterizable physiological measurements related to activity in the pain processing system", the welfare range of chickens will be at least 16.9 % (= 0.1*1.69) of RP's median.
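The two ratios and the 10 % lower bound can be checked with a short sketch. It uses the same quoted constants (RP's median of 0.332 and the 0.876 multiplier, taken as given) and assumes the overall welfare range is a weighted average of the models' outputs, so that every other model contributes a non-negative amount; under that assumption, a model with weight w and ratio r guarantees at least w*r.

```python
RP_MEDIAN = 0.332  # RP's median welfare range of chickens
SCALE = 0.876      # multiplier used in the comment, taken as given

model_ranges = {"quantitative": 0.641, "equality": 0.953}
relative = {name: r * SCALE / RP_MEDIAN for name, r in model_ranges.items()}


def mixture_lower_bound(weight: float, relative_range: float) -> float:
    """Lower bound on a weighted average of the models' relative welfare ranges,
    given that one model gets `weight` and all other models output values >= 0."""
    if not 0 <= weight <= 1:
        raise ValueError("weight must be in [0, 1]")
    return weight * relative_range


for name, ratio in relative.items():
    print(f"{name}: {ratio:.3g}x RP's median")
bound = mixture_lower_bound(0.1, relative["quantitative"])
print(f"10 % weight on the quantitative model -> at least {bound:.1%} of RP's median")
```

Note the bound only holds for a linear (weighted-average) aggregation of models; other aggregation rules could give different results.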

Vasco Grilo🔸 @ 2024-12-27T13:52 (+2)

I liked this post, Joey.

For example, if we aimed to launch ten animal charities a year (rather than ten charities across all the cause areas we currently focus on), I do not think the weakest two would be anywhere near as impactful as the top two, and only a small minority of them would secure long-term funding. With animal charities making up around a third of those we have launched, it’s likely we're already approaching some of these limitations. This means that even if we thought animal charities were, on average, more impactful than human ones, the difference would have to be substantial for us to think that adding a ninth or tenth animal charity into the ecosystem would be more impactful than adding the first or second human-focused charity.

I do not know whether Ambitious Impact (AIM) should be starting more or fewer animal welfare organisations due to them competing for funding. However, how about starting animal welfare organisations with more seed funding (instead of starting more of them)?

If we were to consider one cause area to be significantly superior to others by a factor of thousands, it is possible that the tenth charity within that area, despite having less impact, could still outperform the highest impact charity of another cause area. However, we find this assertion to be bold and unsupported, as we will discover when we look at the examples.

I did not find your examples convincing. I estimate:

- Broiler welfare and cage-free campaigns are 168 and 462 times as cost-effective as GiveWell’s top charities.

- The Shrimp Welfare Project is 64.3 k times as cost-effective as GiveWell’s top charities.

How would you concretely modify these analyses to conclude that the best animal welfare interventions are less than 3 times as cost-effective as GiveWell's top charities?
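One way to read the size of this question is to compute how much each quoted estimate would have to shrink. The sketch below just divides each multiple by the threshold of 3; it assumes the required adjustment can be expressed as a single multiplicative discount, which real changes to the analyses need not be.

```python
THRESHOLD = 3  # "less than 3 times as cost-effective as GiveWell's top charities"

estimates = {
    "broiler welfare campaigns": 168,
    "cage-free campaigns": 462,
    "Shrimp Welfare Project": 64_300,
}


def required_discount(multiple: float, threshold: float = THRESHOLD) -> float:
    """Factor the estimate must be divided by (strictly more than) to fall below threshold."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    return multiple / threshold


for name, multiple in estimates.items():
    print(f"{name}: would need to shrink by a factor of more than "
          f"{required_discount(multiple):,.0f}")
```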