Introducing AI Lab Watch

By Zach Stein-Perlman @ 2024-04-30T17:00 (+128)

This is a linkpost to https://ailabwatch.org

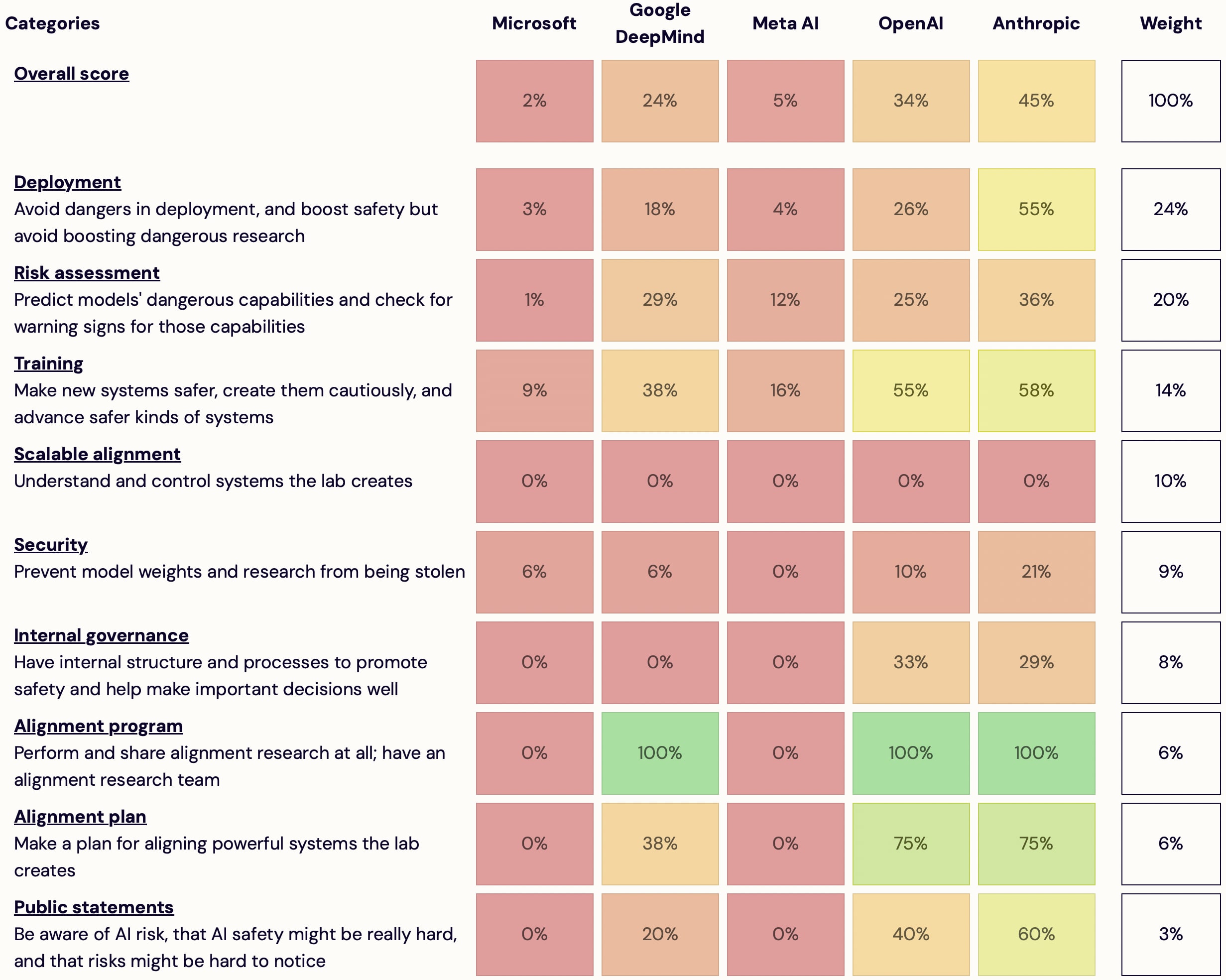

I'm launching AI Lab Watch. I collected actions for frontier AI labs to improve AI safety, then evaluated some frontier labs accordingly.

It's a collection of information on what labs should do and what labs are doing. It also has some adjacent resources, including a list of other safety-ish scorecard-ish stuff.

(It's much better on desktop than mobile — don't read it on mobile.)

It's in beta—leave feedback here or comment or DM me—but I basically endorse the content and you're welcome to share and discuss it publicly.

It's unincorporated, unfunded, not affiliated with any orgs/people, and is just me.

Some clarifications and disclaimers.

How you can help:

- Give feedback on how this project is helpful or how it could be different to be much more helpful

- Tell me what's wrong/missing; point me to sources on what labs should do or what they are doing

- Suggest better evaluation criteria

- Share this

- Help me find an institutional home for the project

- Offer expertise on a relevant topic

- Offer to collaborate

- Volunteer your webdev skills

- (Pitch me on new projects or offer me a job)

- (Want to help and aren't sure how to? Get in touch!)

I think this project is the best existing resource for several kinds of questions, but I think it could be a lot better. I'm hoping to receive advice (and ideally collaboration) on taking it in a more specific direction. I'm also interested in finding an institutional home. Regardless, I plan to keep it up to date. Again, I'm interested in help but not sure what help I need.

I could expand the project (more categories, more criteria per category, more labs), but I currently expect that improving the presentation is more important; I just don't know how to do that. Feedback will determine what I prioritize. It will also determine whether I continue spending most of my time on this or mostly drop it.

I just made a Twitter account. I might use it to comment on stuff labs do.

Thanks to many friends for advice and encouragement. Thanks to Michael Keenan for doing most of the webdev. These people don't necessarily endorse this project.

yanni kyriacos @ 2024-05-01T05:46 (+15)

I think this is a great idea! Is there a way to have two versions:

- The detailed version (with %'s, etc)

- And the meme-able version (which links to the detailed version)

Content like this is only as good as the number of people that see it, and while its detail would necessarily be reduced in the meme-able version, I think it is still worth doing.

The Alliance for Animals does this in the lead up to elections and it gets spread widely: https://www.allianceforanimals.org.au/nsw-election-2023

Zach Stein-Perlman @ 2024-05-01T07:33 (+3)

Yep. But in addition to being simpler, the version of this project optimized for getting attention has other differences:

- Criteria are better justified, more widely agreeable, and less focused on x-risk

- It's done—or at least endorsed and promoted—by a credible org

- The scoring is done by legible experts and ideally according to a specific process

Even if I could do this, it would be effortful and costly and imperfect and there would be tradeoffs. I expect someone else will soon fill this niche pretty well.

yanni kyriacos @ 2024-05-01T23:24 (+4)

Hi Zach! To clarify, are you basically saying you don't want to improve the project much more than where you've got it to? I think it is possible you've tripped over a highly impactful thing here!

Zach Stein-Perlman @ 2024-05-02T00:05 (+2)

Not necessarily. But:

- There are opportunity costs and other tradeoffs involved in making the project better along public-attention dimensions.

- The current version is bad at getting public attention; even improving it enough to get 1000x as much public attention would still leave it with little. It's likely better to wait for a different project that's better positioned and more focused on getting public attention. And as I said, I expect such a project to appear soon.

yanni kyriacos @ 2024-05-02T00:18 (+3)

"And as I said, I expect such a project to appear soon."

I don't know whether to read this as "Zach has some inside information that gives him high confidence it will exist" or "Zach is doing wishful thinking" or something else!

ixex @ 2024-05-02T17:58 (+1)

What do you consider the purpose or theory of change of this project? I assumed it was to put pressure on the AI labs to improve along these criteria, which presumably requires some level of public attention. Do you see it more as a way for AI safety people to keep tabs on the status of these labs?

Zach Stein-Perlman @ 2024-05-02T19:02 (+2)

The original goal involved getting attention. Weeks ago, I realized I was not on track to get attention. I launched without a sharp object-level goal but largely to get feedback to figure out whether to continue working on this project and what goals it should have.

ixex @ 2024-05-02T19:41 (+1)

I think getting attention would increase the impact of this project a lot and is probably pretty doable if you are able to find an institutional home for it. I agree with Yanni's sentiment that it is probably better to improve on this project than to wait for another one that is more optimized for public attention to come along (though am curious why you think the latter is better).

Dan H @ 2024-05-02T06:45 (+13)

To my understanding, Google has better infosec than OpenAI and Anthropic. They have much more experience protecting assets.

Zach Stein-Perlman @ 2024-05-02T06:52 (+7)

I share this impression. Unfortunately it's hard to capture the quality of labs' security with objective criteria based on public information. (I have disclaimers about this in 4-6 different places, including the homepage.) I'm extremely interested in suggestions for criteria that would capture the ways Google's security is good.

Dan H @ 2024-05-03T05:16 (+4)

I mean Google does basic things like use YubiKeys where other places don't even reliably do that. Unclear what a good checklist would look like, but maybe one could be created.

Linch @ 2024-05-02T23:52 (+3)

The broader question I'm confused about is how much to update on the local/object-level question of whether the labs are doing "kind of reasonable" stuff, vs. what their overall incentives and positions in the ecosystem point them toward doing.

E.g., your site ranks OpenAI and Anthropic as the least-bad options based on their activities, but from an incentives/organizational perspective, their place in the ecosystem is just really bad for safety. Contrast with, e.g., being situated within a large tech company[1] where having an AI scaling lab is just one revenue source among many, or Meta's alleged "scorched Earth" strategy where they are trying very hard to commoditize the component of LLMs.

[1] E.g., GDM employees have Google/Alphabet stock; most of the variance in their earnings isn't going to come from AI, at least in the short term.

Ben_West @ 2024-05-01T01:39 (+12)

Thanks for doing this! This is one of those ideas that I've heard discussed for a while but nobody was willing to go through the pain of actually making the site; kudos for doing so.

DrGunn @ 2024-05-02T01:54 (+1)

Agreed! Safer AI was supposed to launch their rankings site in April, but nothing public so far.

Nathan Young @ 2024-05-01T12:50 (+11)

Very easy to read. Props on the design.

Larks @ 2024-05-01T03:21 (+8)

Cool idea, thanks for working on it.

According to this article, only DeepMind gave the UK AI Safety Institute (partial?) access to their model before release. This seems like a pro-social thing to do, so maybe it could be worth tracking in some way if possible.

Zach Stein-Perlman @ 2024-05-01T04:49 (+6)

- Yep, that's related to my "Give some third parties access to models to do model evals for dangerous capabilities" criterion. See here and here.

- As I discuss here, it seems DeepMind shared super limited access with UKAISI (only access to a system with safety training + safety filters), so don't give them too much credit.

- I suspect Politico is wrong and the labs never committed to give early access to UKAISI. (I know you didn't assert that they committed that.)

Chris Leong @ 2024-04-30T17:43 (+4)

This is a great project idea!

Dan H @ 2024-05-04T21:04 (+3)

OpenAI has made a hard commitment to safety by allocating 20% of its compute (~20% of budget) to the superalignment team. That is a huge commitment which isn't reflected in this.

Zach Stein-Perlman @ 2024-05-04T21:28 (+7)

I agree such commitments are worth noticing and I hope OpenAI and other labs make such commitments in the future. But this commitment is not huge: it's just "20% of the compute we've secured to date" (in July 2023), to be used "over the next four years." It's unclear how much compute this is, and with compute use increasing exponentially it may be quite little in 2027. Possibly you have private information, but based on public information the minimum consistent with the commitment is quite little.

It would be great if OpenAI or others committed 20% of their compute to safety! Even 5% would be nice.

Dan H @ 2024-05-05T15:31 (+7)

I've heard OpenAI employees talk about the relatively high amount of compute superalignment has (complaining superalignment has too much and they, employees outside superalignment, don't have enough). In conversations with superalignment people, I noticed they talk about it as a real strategic asset ("make sure we're ready to use our compute on automated AI R&D for safety") rather than just an example of safety washing. This was something Ilya pushed for back when he was there.

Rebecca @ 2024-05-05T15:54 (+2)

Ilya is no longer on the Superalignment team?

DrGunn @ 2024-05-02T01:52 (+1)

Great to see the progress you've made on this.