Ask (Everyone) Anything — “EA 101”

By Lizka @ 2022-10-05T10:17 (+110)

I invite you to ask anything you’re wondering about that’s remotely related to effective altruism. There’s no such thing as a question too basic.

Try to ask your first batch of questions by Monday, October 17 (so that people who want to answer questions can know to make some time around then).

Everyone is encouraged to answer (see more on this below). There’s a small prize for questions and answers. [Edit: prize-winning questions and answers are announced here.]

This is a test thread — we might try variations on it later.[1]

How to ask questions

Ask anything you’re wondering about that has anything to do with effective altruism.

More guidelines:

- Try to post each question as a separate "Answer"-style comment on the post.

- There’s no such thing as a question too basic (or too niche!).

- Follow the Forum norms.[2]

I encourage everyone to view asking questions that you think might be “too basic” as a public service; if you’re wondering about something, others might, too.

Example questions

- I’m confused about Bayesianism; does anyone have a good explainer?

- Is everyone in EA a utilitarian?

- Why would we care about neglectedness?

- Why do people work on farmed animal welfare specifically vs just working on animal welfare?

- Is EA an organization?

- How do people justify working on things that will happen in the future when there’s suffering happening today?

- Why do people think that forecasting or prediction markets work? (Or, do they?)

How to answer questions

Anyone can answer questions, and there can (and should) be multiple answers to many of the questions. I encourage you to point people to relevant resources — you don’t have to write everything from scratch!

Norms and guides:

- Be generous and welcoming (no patronizing).

- Honestly share your uncertainty about your answer.

- Feel free to give partial answers or point people to relevant resources if you can’t or don’t have time to give a full answer.

- Don’t represent your answer as an official answer on behalf of effective altruism.

- Keep to the Forum norms.

You should feel free and welcome to vote on the answers (upvote the ones you like!). You can also give answers to questions that already have an answer, or reply to existing answers, especially if you disagree.

The (small) prize

This isn’t a competition, but just to help kick-start this thing (and to celebrate excellent discussion at the end), the Forum team will award $100 each to my 5 favorite questions, and $100 each to my 5 favorite answers (questions posted before Monday, October 17, answers posted before October 24).

I’ll post a comment on this post with the results, and edit the post itself to list the winners. [Edit: prize-winning questions and answers are announced here.]

- ^

Your feedback is very welcome! We’re considering trying out themed versions in the future; e.g. “Ask anything about cause prioritization” or “Ask anything about AI safety.”

We’re hoping this thread will help get clarity and good answers, counter some impostor syndrome that exists in the community (see 1 and 2), potentially rediscover some good resources, and generally make us collectively more willing to ask about things that confuse us.

- ^

If I think something is rude or otherwise norm-breaking, I’ll delete it.

Clifford @ 2022-10-05T11:09 (+72)

Does anyone know why the Gates Foundation doesn't fill the GiveWell top charities' funding gaps?

Hauke Hillebrandt @ 2022-10-08T15:37 (+14)

One recent paper suggests that an estimated additional $200–328 billion per year is required for the various measures of primary care and public health interventions from 2020 to 2030 in 67 low-income and middle-income countries and this will save 60 million lives. But if you look at just the amount needed in low-income countries for health care - $396B - and divide by the total 16.2 million deaths averted by that, it suggests an average cost-effectiveness of ~$25k/death averted.

Other global health interventions can be similarly or more effective: a 2014 Lancet article estimates that, in low-income countries, it costs $4,205 to avert a death through extra spending on health[22]. Another analysis suggests that this trend will continue and from 2015-2030 additional spending in low-income countries will avert a death for $4,000-11,000[23].

For comparison, in high-income countries, governments spend $6.4 million to prevent a death (a measure called “value of a statistical life”)[24]. This is not surprising given that the poorest countries spend less than $100 per person per year on health on average, while high-income countries spend almost $10,000 per person per year[25].
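The arithmetic behind these figures can be checked in a few lines. This is only a sketch that re-derives the ratios from the numbers quoted above; the constant and function names are illustrative, and nothing here comes from the cited papers beyond those quoted figures.

```python
# Figures as quoted in the comment above (not independently verified).
LOW_INCOME_COST_USD = 396e9        # amount needed in low-income countries
DEATHS_AVERTED = 16.2e6            # deaths averted by that spending
LANCET_COST_PER_DEATH_USD = 4_205  # 2014 Lancet estimate, low-income countries
HIGH_INCOME_VSL_USD = 6.4e6        # high-income "value of a statistical life"


def cost_per_death_averted(total_cost_usd: float, deaths_averted: float) -> float:
    """Average cost-effectiveness: total spending divided by deaths averted."""
    return total_cost_usd / deaths_averted


average_cost = cost_per_death_averted(LOW_INCOME_COST_USD, DEATHS_AVERTED)
vsl_ratio = HIGH_INCOME_VSL_USD / LANCET_COST_PER_DEATH_USD

print(f"Average cost per death averted: ${average_cost:,.0f}")
print(f"High-income spending per death vs. Lancet estimate: {vsl_ratio:,.0f}x")
```

The first figure comes out a little under $25k, matching the rounded estimate above, and the second shows that the high-income figure is roughly 1,500 times the Lancet estimate.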

GiveDirectly is a charity that can productively absorb very large amounts of donations at scale, because they give unconditional cash transfers to extremely poor people in low-income countries. A Cochrane review suggests that such unconditional cash transfers “probably or may improve some health outcomes.”[21] One analysis suggests that cash transfers are roughly as effective as averting a death for on the order of $10k.

So essentially, cost-effectiveness doesn't drop off sharply after GiveWell's top charities are 'fully funded', and one could spend billions and billions at similar cost-effectiveness. Gates only has ~$100B and only spends ~$5B a year.

Hauke Hillebrandt @ 2022-10-05T13:30 (+14)

I wrote a post about this 7 years ago! Still roughly valid.

DavidNash @ 2022-10-05T14:29 (+12)

Could you post this as a new forum post rather than a link to a Google doc? I think it's a question that gets asked a lot, and it would be good to have an easy-to-read post to link to.

EdoArad @ 2022-10-07T06:20 (+5)

Agree! Hauke, let me know if you'd want me to do that on your behalf (say, using admin permissions to edit that previous post to add the doc content) if it'll help :)

Hauke Hillebrandt @ 2022-10-08T13:54 (+2)

Yes, that's fine.

EdoArad @ 2022-10-11T08:49 (+7)

Edited to include the text. Did only a little bit of formatting, and added the appendix as is, so it's not perfect. Let me know if you have any issues, requests, or what not :)

Henry Howard @ 2022-10-26T21:52 (+4)

This is a great question, and the same should be asked of governments (as in: "why doesn't the UK aid budget simply all go to mosquito nets?")

A likely explanation for why the Gates Foundation doesn't give to GiveWell's top charities is that those charities don't currently have much room for more funding (GiveWell had to roll over funding last year because they couldn't spend it all. A recent blog post suggests they may have more room for funding soon: https://blog.givewell.org/2022/07/05/update-on-givewells-funding-projections/)

A likely explanation for why the Gates Foundation doesn't give to GiveDirectly is that they don't see strong enough evidence yet for the effectiveness (particularly in the long-term or at the societal level) of unconditional cash transfers (A Cochrane review from this year suggests slight short-term benefits: https://www.cochranelibrary.com/cdsr/doi/10.1002/14651858.CD011135.pub3/full)

pseudonym @ 2022-10-05T23:32 (+42)

When I read Critiques of EA that I want to read, one very concerning section seemed to be "People are pretty justified in their fears of critiquing EA leadership/community norms."

1) How seriously is this concern taken by those that are considered EA leadership, major/public facing organizations, or those working on community health? (say, CEA, OpenPhil, GiveWell, 80,000 Hours, Forethought, GWWC, FHI, FTX)

2a) What plans and actions have been taken or considered?

2b) Do any of these solutions interact with the current EA funding situation and distribution? Why/why not?

3) Are there publicly available compilations of times where EA leadership or major/public facing organizations have made meaningful changes as a result of public or private feedback?

(Additional note: there were a lot of publicly supportive comments [1] on the Democratising Risk - or how EA deals with critics post, yet it seems like the overall impression was that despite these public comments, she was disappointed by what came out of it. It's unclear whether the recent Criticism/Red-teaming contest was a result of these events, though it would be useful to know which organizations considered or adopted any of the suggestions listed[2] or alternate strategies to mitigate concerns raised, and the process behind this consideration. I use this as an example primarily because it was a higher-profile post that involved engagement from many who would be considered "EA Leaders".)

- ^

- ^

"EA needs to diversify funding sources by breaking up big funding bodies and by reducing each orgs’ reliance on EA funding and tech billionaire funding, it needs to produce academically credible work, set up whistle-blower protection, actively fund critical work, allow for bottom-up control over how funding is distributed, diversify academic fields represented in EA, make the leaders' forum and funding decisions transparent, stop glorifying individual thought-leaders, stop classifying everything as info hazards…amongst other structural changes."

Lizka @ 2022-10-11T14:14 (+10)

Thanks for asking this. I can chime in, although obviously I can't speak for all the organizations listed, or for "EA leadership." Also, I'm writing as myself — not a representative of my organization (although I mention the work that my team does).

- I think the Forum team takes this worry seriously, and we hope that the Forum contributes to making the EA community more truth-seeking in a way that disregards status or similar phenomena (as much as possible). One of the goals for the Forum is to improve community norms and epistemics, and this (criticism of established ideas and entities) is a relevant dimension; we want to find out the truth, regardless of whether it's inconvenient to leadership. We also try to make it easy for people to share concerns anonymously, which I think makes it easier to overcome these barriers.

- I personally haven't encountered this problem (that there are reasons to be afraid of criticizing leadership or established norms) — no one ever hinted at this, and I've never encountered repercussions for encouraging criticism, writing some myself, etc. I think it's possible that this happens, though, and I also think it's a problem even if people in the community only think it's a problem, as that can still silence useful criticism.

- I can't speak about funding structures, but we did run the criticism contest in part to encourage criticism of the most established organizations and norms, and we explicitly encouraged criticism of the most important of those.

- I think lots of organizations have "mistakes" pages, and Ben linked to a question asking for examples of this kind of thing. Off the top of my head, I don't know of much else — this could be a good project for someone to undertake!

Ben_West @ 2022-10-07T18:23 (+4)

Are there publicly available compilations of times where EA leadership or major/public facing organizations have made meaningful changes as a result of public or private feedback?

Some examples here: Examples of someone admitting an error or changing a key conclusion.

pseudonym @ 2022-10-08T05:12 (+4)

Thanks for the link!

I think most examples in the post do not include the part about "as a result of public or private feedback", though I think I communicated this poorly.

My thought process behind going beyond a list of mistakes and changes to including a description of how they discovered this issue or the feedback that prompted it,[1] is that doing so may be more effective at allaying people's fears of critiquing EA leadership.

For example, while mistakes and updates are documented, if you were concerned about, say, gender diversity (~75% men in senior roles) in the organization,[2] but you were an OpenPhil employee or someone receiving money from OpenPhil, would the contents of the post [3] you linked actually make you feel comfortable raising these concerns?[4] Or would you feel better if there was an explicit acknowledgement that someone in a similar situation had previously spoken up and contributed to positive change?

I also think curating something like this could be beneficial not just for the EA community, but also for leaders and organizations who have a large influence in this space. I'll leave the rest of my thoughts in a footnote to minimize derailing the thread, but would be happy to discuss further elsewhere with anyone who has thoughts or pushbacks about this.[5]

- ^

Anonymized as necessary

- ^

I am not saying that I think OpenPhil in fact has a gender diversity problem (is 3/4 men too much? what about 2/3? what about 3/5? Is this even the right way of thinking about this question?), nor am I saying that people working in OpenPhil or receiving their funding don't feel comfortable voicing concerns.

I am not using OpenPhil as an example because I believe they are bad, but because they seem especially important as both a major funder of EA and as folks who are influential in object-level discussions on a range of EA cause areas.

- ^

Specifically, this would be Holden's Three Key Issues I've Changed My Mind About

- ^

This also applies to CEA's "Our Mistakes" page, which includes a line "downplaying critical feedback from the community". Since the page does not talk about why the feedback was downplayed or steps taken specifically to address the causes of the downplaying, one might even update away from providing feedback after reading this.

(In this hypothetical, I am starting from the place where the original concern: "People are pretty justified in their fears of critiquing EA leadership/community norms." is true. This is an assumption, not because I personally know this is the case.)

- ^

Among the many bad criticisms of EA, I have heard some good-faith criticisms of major organizations in the EA community (some by people directly involved) that I would consider fairly serious. I think having these criticisms circulating in the community through word of mouth might be worse than having a public compilation, because:

1) it contributes to distrust in EA leaders or their ability to steer the EA movement (which might make it harder for them to do so);

2) it means there are fewer opportunities for this to be fixed if EA leaders aren't getting an accurate sense of the severity of mistakes they might be making, which might also further exacerbate this problem; and

3) it might mean an increased difficulty in attracting or retaining the best people in the EA space.

I think it could be in the interest of organizations who play a large role in steering the EA movement to make compilations of all the good-faith pieces of feedback and criticisms they've received, as well as a response that includes points of (dis)agreement, and any updates as a result of the feedback (possibly even a reward, if it has contributed to a meaningfully positive change).

If the criticism is misplaced, it provides an opportunity to provide a justification that might have been overlooked, and to minimize rumors or speculation about these concerns. To the extent that the criticism is not misplaced, it provides an opportunity for accountability and responsiveness that builds and maintains the trust of the community. It also means that those who disagree at a fundamental level with the direction the movement is being steered can make better-informed decisions about the extent of their involvement with the movement.

This also means other organizations who might be subject to similar criticisms can benefit from the feedback and responses, without having to make the same mistakes themselves.

One final benefit of including responses to substantial pieces of feedback and not just "mistakes", is that feedback can be relevant even if not in response to a mistake. For example, the post Red Teaming CEA’s Community Building Work claims that CEA's mistakes page has "routinely omitted major problems, significantly downplayed the problems that are discussed, and regularly suggests problems have been resolved when that has not been the case". Part of Max's response here suggests some of these weren't considered "mistakes" but were "suboptimal". While I agree it would be unrealistic to include every inefficiency in every project, I can imagine two classes of scenarios where responses to feedback could capture important things that responses to mistakes do not.

The first class is when there's no clear seriously concerning event that one can point to, but the status quo is detrimental in the long run if not changed.

For example, if a leader of a research organization is a great researcher but bad at running a research organization, at what stage does this count as a "mistake"? If an organization lacks diversity, to whatever extent this is harmful, at what stage does this count as a "mistake"?

The second class is when the organization itself is perpetuating harm in the community but isn't subject to any formal accountability mechanisms. If an organization funded by CEA does harm, they can have their funding pulled. If an individual is harmful to the community, they can be banned. While there has been some form of accountability in what appears to be an informal, crowd-sourced list of concerns, this seemed to be prompted by egregious and obvious cases of alleged misconduct, and might not work for all organizations. Imagine an alternate universe where OpenPhil started actively contributing to harm in the EA community, and this harm grew slowly over time. How much harm would they need to be doing for internal or external feedback to be made public to the rest of the EA community? How much harm would they need to do for a similar crowd-sourced list of concerns to arise? How much harm would they need to do for the EA community to disavow them and their funding? Do we have accountability mechanisms and systems in place to reduce the risk here, or notice it early?

P @ 2022-10-05T17:25 (+39)

Why is scope insensitivity considered a bias instead of just the way human values work?

MichaelDickens @ 2022-10-07T20:02 (+14)

Quoting Kelsey Piper:

If I tell you “I’m torturing an animal in my apartment,” do you go “well, if there are no other animals being tortured anywhere in the world, then that’s really terrible! But there are some, so it’s probably not as terrible. Let me go check how many animals are being tortured.”

(a minute later)

“Oh, like ten billion. In that case you’re not doing anything morally bad, carry on.”

I can’t see why a person’s suffering would be less morally significant depending on how many other people are suffering. And as a general principle, arbitrarily bounding variables because you’re distressed by their behavior at the limits seems risky.

Thomas Kwa @ 2022-10-06T09:05 (+9)

Not a philosopher, but scope sensitivity follows from consistency (either in the sense of acting similarly in similar situations, or maximizing a utility function). Suppose you're willing to pay $1 to save 100 birds from oil; if you would do the same trade again at a roughly similar rate (assuming you don't run out of money) your willingness to pay is roughly linear in the number of birds you save.

Scope insensitivity in practice is relatively extreme; in the original study, people were willing to pay $80 for 2,000 birds and $88 for 200,000 birds. So if you think this represents their true values, people were willing to pay $0.04 per bird for the first 2,000 birds but only $0.00004 per bird for the next 198,000 birds. This is a factor of 1000 difference; most of the time when people have this variance in price they are either being irrational, or there are huge diminishing returns and they really value something else that we can identify. For example, if someone values the first 2 movie tickets at $1000 each but further movie tickets at only $1, maybe they really enjoy the experience of going with a companion, and the feeling of happiness is not increased by a third ticket. So in the birds example it seems plausible that most people value the feeling of having saved some birds.
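The per-bird prices above can be re-derived directly from the study figures. A minimal sketch, assuming the $80 and $88 answers are read as one person's willingness to pay at two scales (the function name is just for illustration):

```python
def per_bird_prices(wtp_small: float, n_small: int,
                    wtp_large: float, n_large: int) -> tuple[float, float]:
    """Return (price per bird for the first n_small birds,
    price per bird for the additional n_large - n_small birds)."""
    first = wtp_small / n_small
    additional = (wtp_large - wtp_small) / (n_large - n_small)
    return first, additional


# Study figures quoted above: $80 for 2,000 birds, $88 for 200,000 birds.
first, additional = per_bird_prices(80, 2_000, 88, 200_000)
ratio = first / additional

print(f"First 2,000 birds:   ${first:.5f} per bird")
print(f"Next 198,000 birds:  ${additional:.7f} per bird")
print(f"Ratio between the two: about {ratio:.0f}x")
```

The ratio comes out at 990, which is where the "factor of 1000" comes from.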

Why should you be consistent? One reason is the triage framing, which is given in Replacing Guilt. Another reason is the money-pump; if you value birds at $1 per 100 and $2 per 1000, and are willing to make trades in either direction, there is a series of trades that causes you to lose both $ and birds.
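One way to spell out such a series of trades is sketched below. It assumes, purely for illustration, that the stated prices apply regardless of context and that the agent accepts any trade at exactly those prices; the `Agent` class, the starting holdings, and the particular sequence are hypothetical and not taken from any source.

```python
from dataclasses import dataclass

# Assumed context-free valuations from the example: $1 per 100 birds, $2 per 1000 birds.
PRICE_USD = {100: 1.0, 1000: 2.0}


@dataclass
class Agent:
    dollars: float
    birds_saved: int


def give_up_birds(agent: Agent, lot: int) -> None:
    """Agent accepts PRICE_USD[lot] in exchange for giving up `lot` saved birds."""
    agent.birds_saved -= lot
    agent.dollars += PRICE_USD[lot]


def save_birds(agent: Agent, lot: int) -> None:
    """Agent pays PRICE_USD[lot] to save `lot` birds."""
    agent.birds_saved += lot
    agent.dollars -= PRICE_USD[lot]


agent = Agent(dollars=10.0, birds_saved=1000)

give_up_birds(agent, 1000)   # receives $2, gives up 1000 birds
for _ in range(5):
    save_birds(agent, 100)   # pays $1 per lot of 100 birds, five times

print(agent)  # ends with less money and fewer birds than at the start
```

Every individual trade is acceptable at the agent's own stated prices, yet the agent ends with $7 and 500 birds after starting with $10 and 1,000 birds. The whole construction depends on the context-free pricing assumption.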

All of this relies on you caring about consequences somewhat. If your morality is entirely duty-based or has some other foundation, there are other arguments but they probably aren't as strong and I don't know them.

P @ 2022-10-06T18:31 (+1)

I think the money-pump argument is wrong. You are practically assuming the conclusion. A scope insensitive person would negatively value the total number of bird deaths, or maybe positively value the number of birds alive. So that each death is less bad if other birds also die. In this case it doesn't make sense to talk about $1 per 100 avoided deaths in isolation.

Thomas Kwa @ 2022-10-06T19:05 (+3)

A scope insensitive person would negatively value the total number of bird deaths, or maybe positively value the number of birds alive. So that each death is less bad if other birds also die.

This doesn't follow for me. I agree that you can construct some set of preferences or utility function such that being scope-insensitive is rational, but you can do that for any policy.

Dan_Keys @ 2022-10-14T22:53 (+7)

Two empirical reasons not to take the extreme scope neglect in studies like the 2,000 vs 200,000 birds one as directly reflecting people's values.

First, the results of studies like this depend on how you ask the question. A simple variation which generally leads to more scope sensitivity is to present the two options side by side, so that the same people would be asked both about 2,000 birds and about the 200,000 birds (some call this "joint evaluation" in contrast to "separate evaluation"). Other variations also generally produce more scope sensitive results (this Wikipedia article seems uneven in quality but gives a flavor for some of those variations). The fact that this variation exists means that just taking people's answers at face value does not work as a straightforward approach to understanding people's values, and I think the studies which find more scope sensitivity often have a strong case for being better designed.

Second, there are variants of scope insensitivity which involve things other than people's values. Christopher Hsee has done a number of studies in the context of consumer choice, where the quantity is something like the amount of ice cream that you get or the number of entries in a dictionary, which find scope insensitivity under separate evaluation (but not under joint evaluation), and there is good reason to think that people do prefer more ice cream and more comprehensive dictionaries. Daniel Kahneman has argued that several different kinds of extension neglect all reflect similar cognitive processes, including scope neglect in the bird study, base rate neglect in the Tom W problem, and duration neglect in studies of colonoscopies. And superforecasting researchers have found that ordinary forecasters neglect scope in questions like (in 2012) "How likely is it that the Assad regime will fall in the next three months?" vs. "How likely is it that the Assad regime will fall in the next six months?"; superforecasters' forecasts are more sensitive to the 3 month vs. 6 month quantity (there's a passage in Superforecasting about this which I'll leave as a reply, and a paper by Mellers & colleagues with more examples). These results suggest that people's answers to questions about values-at-scale have a lot to do with how people think about quantities, that "how people think about quantities" is a fairly messy empirical matter, and that it's fairly common for people's thinking about quantities to involve errors/biases/limitations which make their answers less sensitive to the size of the quantity.

This does not imply that the extreme scope sensitivity common in effective altruism matches people's values; I think that claim requires more of a philosophical argument rather than an empirical one. It implies only that the extreme scope insensitivity found in some studies probably doesn't match people's values.

Dan_Keys @ 2022-10-14T22:57 (+2)

A passage from Superforecasting:

Flash back to early 2012. How likely is the Assad regime to fall? Arguments against a fall include (1) the regime has well-armed core supporters; (2) it has powerful regional allies. Arguments in favor of a fall include (1) the Syrian army is suffering massive defections; (2) the rebels have some momentum, with fighting reaching the capital. Suppose you weight the strength of these arguments, they feel roughly equal, and you settle on a probability of roughly 50%.

But notice what’s missing? The time frame. It obviously matters. To use an extreme illustration, the probability of the regime falling in the next twenty-four hours must be less—likely a lot less—than the probability that it will fall in the next twenty-four months. To put this in Kahneman’s terms, the time frame is the “scope” of the forecast.

So we asked one randomly selected group of superforecasters, “How likely is it that the Assad regime will fall in the next three months?” Another group was asked how likely it was in the next six months. We did the same experiment with regular forecasters.

Kahneman predicted widespread “scope insensitivity.” Unconsciously, they would do a bait and switch, ducking the hard question that requires calibrating the probability to the time frame and tackling the easier question about the relative weight of the arguments for and against the regime’s downfall. The time frame would make no difference to the final answers, just as it made no difference whether 2,000, 20,000, or 200,000 migratory birds died. Mellers ran several studies and found that, exactly as Kahneman expected, the vast majority of forecasters were scope insensitive. Regular forecasters said there was a 40% chance Assad’s regime would fall over three months and a 41% chance it would fall over six months.

But the superforecasters did much better: They put the probability of Assad’s fall at 15% over three months and 24% over six months. That’s not perfect scope sensitivity (a tricky thing to define), but it was good enough to surprise Kahneman. If we bear in mind that no one was asked both the three- and six-month version of the question, that’s quite an accomplishment. It suggests that the superforecasters not only paid attention to the time frame in the question but also thought about other possible time frames—and thereby shook off a hard-to-shake bias.

Note: in the other examples studied by Mellers & colleagues (2015), regular forecasters were less sensitive to scope than they should've been, but they were not completely insensitive to scope, so the Assad example here (40% vs. 41%) is unusually extreme.
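To get a feel for how far these answers are from a scope-sensitive pair, here is one possible benchmark. It rests on an assumption of my own rather than anything in the book or the paper: that the chance of the regime falling is the same in each three-month period (a constant hazard rate). The passage itself notes that perfect scope sensitivity is tricky to define, so this is a rough reference point and not the correct answer.

```python
def six_month_probability(p_three_months: float) -> float:
    """Six-month probability implied by a three-month probability,
    assuming the same chance of the event in each three-month period."""
    p_survive_three = 1.0 - p_three_months
    return 1.0 - p_survive_three ** 2


# (three-month answer, six-month answer) as quoted in the passage above.
answers = {
    "regular forecasters": (0.40, 0.41),
    "superforecasters": (0.15, 0.24),
}

for group, (p3, p6_stated) in answers.items():
    p6_benchmark = six_month_probability(p3)
    print(f"{group}: stated {p6_stated:.0%}, "
          f"constant-hazard benchmark {p6_benchmark:.1%}")
```

Under this assumption the benchmark is 64% for the regular forecasters (who said 41%) and about 28% for the superforecasters (who said 24%), so the superforecasters' answers sit much closer to it.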

Lumpyproletariat @ 2022-10-06T09:07 (+7)

Hm, I think that most of the people who participated in this experiment:

three groups of subjects were asked how much they would pay to save 2,000 / 20,000 / 200,000 migrating birds from drowning in uncovered oil ponds. The groups respectively answered $80, $78, and $88. This is scope insensitivity or scope neglect: the number of birds saved—the scope of the altruistic action—had little effect on willingness to pay.

would agree after the results were shown to them that they were doing something irrational that they wouldn't endorse if aware of it. (Example taken from here: https://www.lesswrong.com/posts/2ftJ38y9SRBCBsCzy/scope-insensitivity )

There's also an essay from 2008 about the intuitions behind utilitarianism that you might find helpful for understanding why someone could consider scope insensitivity a bias instead of just the way human values work:

https://www.lesswrong.com/posts/r5MSQ83gtbjWRBDWJ/the-intuitions-behind-utilitarianism

Charlotte @ 2022-10-06T18:18 (+4)

I think scope insensitivity could be a form of risk aversion over the difference you make in the world (difference-making), or is at least related to it. I explain here why I think that risk aversion over the difference you make is irrational even though risk aversion over states of the world is not.

niplav @ 2022-10-06T00:22 (+3)

I think it is basically not a bias in the way confirmation bias is, and anyone claiming otherwise is pre-supposing linear aggregation of welfare already. From a thing I wrote recently:

Scope neglect is not a cognitive bias like confirmation bias. I can want there to be ≥80 birds saved, but be indifferent about larger numbers: this does not violate the von Neumann-Morgenstern axioms (nor any other axiomatic systems that underlie alternatives to utility theory that I know of). Similarly, I can most highly value there being exactly 3 flowers in the vase on the table (less being too sparse, and more being too busy). The pebble-sorters of course go the extra mile.

Calling scope neglect a bias pre-supposes that we ought to value certain things linearly (or at least monotonically). This does not follow from any mathematics I know of. Instead it tries to sneak in utilitarian assumptions by calling their violation "biased".

Thomas Kwa @ 2022-10-06T09:11 (+4)

Anything is VNM-consistent if your utility function is allowed to take universe-histories or sequences of actions. So you will have to make some assumptions.

Ben_West @ 2022-10-07T22:50 (+2)

Various social aggregation theorems (e.g. Harsanyi's) show that "rational" people must aggregate welfare additively.

(I think this is a technical version of Thomas Kwa's comment.)

Mayank Modi @ 2022-10-17T19:16 (+1)

To answer this question in short: It is so because it's innate. Like any other bias, scope insensitivity comes from within, in the case of an individual as well as an organization run by individuals. We may generalize it as the product of human values because of the long-running history of constant 'Self-Value' teachings (not the spiritual ones). But there will always be a disparity when considering the ever-evolving nature of human values, especially in the current era.

--------

On the contrary, most of the time, I do consider scope insensitivity to be the typical human way. One absurd reason I identified is the outward negligence towards the scope of sensitive issues. There's always a demand for grand and attractive persuasion whenever there are multiple issues at hand. And the ones with the ability to convince often get listened to. The result: insensitivity towards the scope of an issue posed by a commoner (someone less talented at persuasion).

This is just one case. If we somehow refrain from pinning blame, we can say that there is a very real imbalance between the scope identifiers and the scope rectifiers.

Geoffrey Miller @ 2022-10-05T21:31 (+1)

There's a lot of interesting writing about the evolutionary biology and evolutionary psychology of genetic selfishness, nepotism, and tribalism, and why human values descriptively focus on the sentient beings that are more directly relevant to our survival and reproductive fitness -- but that doesn't mean our normative or prescriptive values should follow whatever natural selection and sexual selection programmed us to value.

P @ 2022-10-06T05:24 (+2)

Then what does scope sensitivity follow from?

Geoffrey Miller @ 2022-10-06T18:06 (+1)

Scope sensitivity, I guess, is the triumph of 'rational compassion' (as Paul Bloom talks about it in his book Against Empathy), quantitative thinking, and moral imagination, over human moral instincts that are much more focused on small-scope, tribal concerns.

But this is an empirical question in human psychology, and I don't think there's much research on it yet. (I hope to do some in the next couple of years though).

Stephen Clare @ 2022-10-06T09:21 (+32)

Why does most AI risk research and writing focus on artificial general intelligence? Are there AI risk scenarios which involve narrow AIs?

zeshen @ 2022-10-07T12:40 (+5)

Looking at your profile I think you have a good idea of answers already, but for the benefit of everyone else who upvoted this question looking for an answer, here's my take:

Are there AI risk scenarios which involve narrow AIs?

Yes, a notable one being military AI i.e. autonomous weapons (there are plenty of related posts on the EA forum). There are also multipolar failure modes on risks from multiple AI-enabled superpowers instead of a single superintelligent AGI.

Why does most AI risk research and writing focus on artificial general intelligence?

A misaligned AGI is a very direct pathway to x-risk, where an AGI that pursues some goal in an extremely powerful way without having any notion of human values could easily lead to human extinction. The question is how to make an AI that's more powerful than us do what we want it to do. Many other failure modes, like bad actors using tool (narrow) AIs, seem less likely to lead directly to x-risk, and are also more of a coordination problem than a technical problem.

Marc Wong @ 2022-10-11T04:07 (+2)

What happens when we create AI companions for children that are more “engaging” than humans? Would children stop making friends and prefer AI companions?

What happens when we create AI avatars of mothers that are as or more “engaging” to babies than real mothers, and people start using them to babysit? How might that affect a baby’s development?

What happens when AI becomes as good as an average judge at examining evidence, arguments, and reaching a verdict?

Zach Stein-Perlman @ 2022-10-10T03:32 (+2)

- "AGI" is largely an imprecisely-used initialism: when people talk about AGI, we usually don't care about generality and instead just mean roughly human-level AI. It's usually correct to implicitly substitute "human-level AI" for "AGI" outside of discussions of generality. (Caveat: "AGI" has some connotations of agency.)

- There are risk scenarios with narrow AI, including catastrophic misuse, conflict (caused or exacerbated by narrow AI), and alignment failure. On alignment failure, there are some good stories. Each of these possibilities is considered reasonably likely by AI safety & governance researchers.

Nathan Ashby @ 2022-10-06T01:07 (+25)

Who is Phil, and why does everyone talk about how open he is?

Emrik @ 2022-10-06T10:29 (+24)

What are the most ambitious EA projects that failed?

If we're encouraged to be more ambitious, it would be nice to have a very rough idea of how cost-effective ambition is itself. Essentially, I'd love to find or arrive at an intuitive/quantitative estimate of the following variables:

- [total # of particularly 'ambitious' past EA projects[1]]

- [total # (or value) of successful projects in the same reference class]

In other words, is the reason why we don't see more big wins in EA that people aren't ambitious enough, or are big wins just really unlikely? Are we bottlenecked by ambition?

For this reason, I think it could be personally[2] valuable to see a list,[3] one that tries hard to be comprehensive, of failed, successful, and abandoned projects. Failing that, I'd love to just hear anecdotes.

- ^

Carrick Flynn's political campaign is a prototypical example. Others include CFAR, Arbital, RAISE. Other ideas include published EA-inspired books that went under the radar, papers that intended to persuade academics but failed, or even just earning-to-give-motivated failed entrepreneurs, etc.

- ^

I currently seem to have a disproportionately high prior on the "hit rate" for really high ambition, just because I know some success stories (e.g. Sam Bankman-Fried), and this is despite the fact that I don't see much extreme ambition in the water generally.

- ^

Such a list could also be useful for publicly celebrating failure and communicating that we're appreciative of people who risked trying. : )

m(E)t(A) @ 2022-10-06T04:52 (+22)

Why hasn't there been a consensus/debate between people with contradicting views on the AGI timelines/safety topic?

I know almost nothing about ML/AI and I don't think I can form an opinion on my own, so I try to base my opinion on the opinions of more knowledgeable people that I trust and respect. However, what I find problematic is that those opinions vary dramatically, while it is not clear why those people hold their beliefs. I also don't think I have enough knowledge in the area to be able to extract that information from people myself, e.g. if I talk to a knowledgeable 'AGI soon and bad' person they would very likely convince me of their view, and the same would happen if I talk to a knowledgeable 'AGI not soon and good' person. Wouldn't it be a good idea to have debates between people with those contradicting views, figure out what the cruxes are, and write them down? I understand that some people have vested interests in one side of the question; for example, a CEO of an AI company may not gain much from such a debate and thus refuse to participate in it. But I think there are many reasonable people who would be willing to share their opinion and hear other people's arguments. Forgive me if this has already been done and I have missed it (but I would appreciate it if you can point me to it).

Ben_West @ 2022-10-09T19:36 (+7)

- OpenPhil has commissioned various reviews of its work, e.g. on power-seeking AI.

- Less formal, but there was this Facebook debate between some big names in AI.

Overall, I think a) this would be cool to see more of and b) it would be a service to the community if someone collected all the existing examples together.

Thomas Kwa @ 2022-10-06T09:44 (+4)

Not exactly what you're describing, but MIRI and other safety researchers did the MIRI conversations and also sort of debated at events. They were helpful and I would be excited about having more, but I think there are at least three obstacles to identifying cruxes:

- Yudkowsky just has the pessimism dial set way higher than anyone else (it's not clear that this is wrong, but this makes it hard to debate whether a plan will work)

- Often two research agendas are built in different ontologies, and this causes a lot of friction especially when researcher A's ontology is unnatural to researcher B. See the comments to this for a long discussion on what counts as inner vs outer alignment.

- Much of the disagreement comes down to research taste; see my comment here for an example of differences in opinion driven by taste.

That said, I'd be excited about debates between people with totally different views, e.g. Yudkowsky and Yann LeCun, if it could happen...

Grant Fleming @ 2022-10-14T20:04 (+2)

The debate on this subject has been ongoing between individuals who are within or adjacent to the EA/LessWrong communities (see posts that other comments have linked and other links that are sure to follow). However, these debates often are highly insular and primarily are between people who share core assumptions about:

- AGI being an existential risk with a high probability of occurring

- Extinction via AGI having a significant probability of occurring within our lifetimes (next 10-50 years)

- Other extinction risks (e.g. pandemics or nuclear war) not likely manifesting prior to AGI and curtailing AI development such that AGI risk is no longer of relevance in any near-term timeline as a result

- AGI being a more deadly existential risk than other existential risks (e.g. pandemics or nuclear war)

- AI alignment research being neglected and/or tractable

- Current work on fairness and transparency improving methods for AI models not being particularly useful towards solving AI alignment

There are many other AI researchers and individuals from other relevant, adjacent disciplines who would disagree with all or most of these assumptions. Debates between that group and people within the EA/LessWrong community who would mostly agree with the above assumptions are sorely lacking, save for some mud-flinging on Twitter between AI ethicists and AI alignment researchers.

Lumpyproletariat @ 2022-10-06T09:20 (+1)

There was a prominent debate between Eliezer Yudkowsky and Robin Hanson back in 2008 which is a part of the EA/rationalist communities' origin story, link here: https://wiki.lesswrong.com/index.php?title=The_Hanson-Yudkowsky_AI-Foom_Debate

Prediction is hard, and reading the debate from the vantage point of 14 years in the future it's clear that in many ways the science and the argument have moved on, but it's also clear that Eliezer made better predictions than Robin Hanson did, in a way that inclines me to try and learn as much of his worldview as possible so I can analyze other arguments through that frame.

leosn @ 2022-10-16T16:50 (+2)

This link could also be useful for learning how Yudkowsky & Hanson think about the issue: https://intelligence.org/ai-foom-debate

Essentially, Yudkowsky is very worried about AGI ('we're dead in 20-30 years' worried) because he thinks that progress on AI overall will rapidly accelerate as AI helps us make further progress. Hanson was (is?) less worried.

pseudonym @ 2022-10-05T23:35 (+20)

What level of existential risk would we need to achieve for existential risk reduction to no longer be seen as "important"?

Zach Stein-Perlman @ 2022-10-10T03:44 (+5)

What's directly relevant is not the level of existential risk, but how much we can affect it. (If existential risk was high but there was essentially nothing we could do about it, it would make sense to prioritize other issues.) Also relevant is how effectively we can do good in other ways. I'm pretty sure it costs less than 10 billion times as much (in expectation, on the margin) to save the world as to save a human life, which seems like a great deal. (I actually think it costs substantially less.) If it cost much more, x-risk reduction would be less appealing; the exact ratio depends on your moral beliefs about the future and your empirical beliefs about how big the future could be.

pseudonym @ 2022-10-10T05:05 (+1)

Thanks!

Presumably both are relevant, or are you suggesting if we were at existential risk levels 50 orders of magnitude below today and it was still as cost-effective as it is today to reduce existential risk by 0.1% you'd still do it?

Zach Stein-Perlman @ 2022-10-10T05:39 (+2)

I meant risk reduction in the absolute sense, where reducing it from 50% to 49.9% or from 0.1% to 0% is a reduction of 0.1%. If x-risk was astronomically smaller, reducing it in absolute terms would presumably be much more expensive (and if not, it would only be able to absorb a tiny amount of money before risk hit zero).

pseudonym @ 2022-10-10T05:51 (+2)

I'm not sure I follow the rationale of using absolute risk reduction here. If you drop existential risk from 50% to 49.9% for 1 trillion dollars, that's less cost-effective than if you drop existential risk from 1% to 0.997% for 1 trillion dollars, even though one is a 0.1% absolute reduction and the other is a 0.003% absolute reduction. So if you're happy to do a 50% to 49.9% reduction at 1 trillion dollars, would you not be similarly happy to go from 1% to 0.997% for 1 trillion dollars? (If yes, what about 1e-50 to 9.97e-51?)
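To make the two notions of "reduction" in this exchange concrete, here is a minimal Python sketch that computes both for the three cases mentioned above. The function name `reductions` is just an illustrative choice, and the sketch takes no side on which measure is the right one to optimize.

```python
def reductions(before: float, after: float) -> tuple[float, float]:
    """Return (absolute, relative) risk reduction for a move from `before` to `after`.

    Both risks are probabilities in [0, 1]. The absolute reduction is the
    difference in probability; the relative reduction is that difference
    as a share of the starting risk.
    """
    absolute = before - after
    relative = absolute / before
    return absolute, relative


# The three scenarios from the comment above.
scenarios = [(0.50, 0.499), (0.01, 0.00997), (1e-50, 9.97e-51)]

for before, after in scenarios:
    absolute, relative = reductions(before, after)
    print(f"{before:g} -> {after:g}: absolute {absolute:.3g}, relative {relative:.2%}")
```

The first case has the largest absolute reduction but the smallest relative one; the second and third share the same relative reduction even though their absolute reductions differ by dozens of orders of magnitude.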

Ula @ 2022-10-05T14:41 (+20)

What is the strongest ethical argument you know for prioritizing AI over other cause areas?

jwpieters @ 2022-10-06T17:29 (+16)

I'd also be very interested in the reverse of this. Is there anyone who has thought very hard about AI risk and decided to de-prioritise it?

Linch @ 2022-10-06T17:27 (+8)

I think Transformative AI is unusually powerful and dangerous relative to other things that can plausibly kill us or otherwise drastically affect human trajectories, and many of us believe AI doom is not inevitable.

I think it's probably correct for EAs to focus on AI more than other things.

Other plausible contenders (some of which I've worked on) include global priorities research, biorisk mitigation, and moral circle expansion. But broadly a) I think they're less important or tractable than AI, b) many of them are entangled with AI (e.g. global priorities research that ignores AI is completely missing the most important thing).

Lizka @ 2022-10-11T18:56 (+8)

I largely agree with Linch's answer (primarily: that AI is really likely very dangerous), and want to point out a couple of relevant resources in case a reader is less familiar with some foundations for these claims:

- The 80,000 Hours problem profile for AI is pretty good, and has lots of other useful links

- This post is also really helpful, I think: Without specific countermeasures, the easiest path to transformative AI likely leads to AI takeover

- More broadly, you can explore a lot of discussion on the AI risk topic page in the EA Forum Wiki

MariellaVee @ 2022-10-13T18:35 (+4)

Thank you for asking this! Some fascinating replies!

A related question:

Considering other existential risks like engineered pandemics, etc., is there an ethical case for continuing to escalate the advancement of AI development despite the possibly-pressing risk of unaligned AGI for addressing/mitigating other risks, such as developing better vaccines, increasing the rate of progress in climate technology research, etc.?

Alexander de Vries @ 2022-10-06T09:34 (+4)

[I'll be assuming a consequentialist moral framework in this response, since most EAs are in fact consequentialists. I'm sure other moral systems have their own arguments for (de)prioritizing AI.]

Almost all the disputes on prioritizing AI safety are really epistemological, rather than ethical; the two big exceptions being a disagreement about how to value future persons, and one on ethics with very high numbers of people (Pascal's Mugging-adjacent situations).

I'll use the importance-tractability-neglectedness (ITN) framework to explain what I mean. The ITN framework is meant to figure out whether an extra marginal dollar to cause 1 will have more positive impact than a dollar to cause 2; in any consequentialist ethical system, that's enough reason to prefer cause 1. Importance is the scale of the problem, the (negative) expected value in the counterfactual world where nothing is done about it - I'll note this as CEV, for counterfactual expected value. Tractability is the share of the problem which can be solved with a given amount of resources; percent-solved-per-dollar, which I'll note as %/$. Neglectedness is comparing cause 1 to other causes with similar importance-times-tractability, and seeing which cause currently has more funding. In an equation:

$$\mathrm{CEV}_1 \times (\%/\$)_1 > \mathrm{CEV}_2 \times (\%/\$)_2$$

Now let's do ITN for AI risk specifically:

Tractability - This is entirely an epistemological issue, and one which changes the result of any calculations done by a lot. If AI safety is 15% more likely to be solved with a billion more dollars to hire more grad students (or maybe Terence Tao), few people who are really worried about AI risk would object to throwing that amount of money at it. But there are other models under which throwing an extra billion dollars at the problem would barely increase AI safety progress at all, and many are skeptical of using vast amounts of money which could otherwise help alleviate global poverty on an issue with so much uncertainty.

Neglectedness - Just about everyone agrees that if AI safety is indeed as important and tractable as safety advocates say, it currently gets less resources than other issues on the same or smaller scales, like climate change and nuclear war prevention.

Importance - Essentially, importance is probability-of-doom [p(doom)] multiplied by how-bad-doom-actually-is [v(doom)], which gives us expected-(negative)-value [CEV] in the counterfactual universe where we don't do anything about AI risk.

The obvious first issue here is an epistemological one; what is p(doom)? 10% chance of everyone dying is a lot different from 1%, which is in turn very different from 0.1%. And some people think p(doom) is over 50%, or almost nonexistent! All of these numbers have very different implications regarding how much money we should put into AI safety.

Alright, let's briefly take a step back before looking at how to calculate v(doom). Our equation now looks like this:

$$p(\mathrm{doom}) \times v(\mathrm{doom}) \times (\%/\$)_1 > \mathrm{CEV}_2 \times (\%/\$)_2$$

Assuming that the right side of the equation is constant, we now have 3 variables that can move around: %/$, p(doom), and v(doom). I've shown that the first two have a lot of variability, which can lead to multiple orders of magnitude difference in the results.

The 'longtermist' argument for AI risk is, plainly, that v(doom) is so unbelievably large that the variations in %/$ and p(doom) are too small to matter. This is based on an epistemological claim and two ethical claims.

Epistemological claim: the expected amount of people (/sentient beings) in the future is huge. An OWID article estimates it at between 800 trillion and 625 quadrillion given a stable population of 11 billion on Earth, while some longtermists, assuming things like space colonization and uploaded minds, go up to 10^53 or something like that. This is the Astronomical Value Thesis.

This claim, at its core, is based on an expectation that existential risk will effectively cease to exist soon (or at least drop to very very low levels), because of something like singularity-level technology. If x-risk stays at something like 1% per century, after all, it's very unlikely that we ever reach anything like 800 trillion people, let alone some of the higher numbers. This EA Forum post does a great job of explaining the math behind it.
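The point about a constant 1% per-century risk can be checked with a few lines of arithmetic. The sketch below assumes the risk is constant and independent from century to century, which is a simplification for illustration rather than a forecast; the function names are hypothetical.

```python
def survival_probability(risk_per_century: float, centuries: int) -> float:
    """Chance of no existential catastrophe over `centuries` centuries,
    assuming a constant, independent risk in each century."""
    return (1.0 - risk_per_century) ** centuries


def expected_centuries_until_catastrophe(risk_per_century: float) -> float:
    """Mean waiting time (in centuries) until catastrophe under the same
    assumption, counting the century in which it occurs (geometric mean 1/p)."""
    return 1.0 / risk_per_century


risk = 0.01  # 1% per century, the figure used in the text

for centuries in (10, 100, 1_000, 10_000):
    print(f"{centuries:>6} centuries: {survival_probability(risk, centuries):.3g}")

print("expected centuries until catastrophe:",
      expected_centuries_until_catastrophe(risk))
```

Under this assumption, survival odds shrink geometrically with the time horizon, which is why very large future-population estimates depend on risk eventually falling to far lower levels.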

Moral claim 1: We should care about potential future sentient beings; 800 trillion humans existing is 100,000 times better than 8 billion, and the loss of 800 trillion future potential lives should be counted as 100,000 times as bad as the loss of today's 8 billion lives. This is a very non-intuitive moral claim, but many total utilitarians will agree with it.

If we combine the Astronomical Value Thesis with moral claim 1, we get to the conclusion that v(doom) is so massive that it overwhelms nearly everything else in the equation. To illustrate, I'll use the lowball estimate of 800 trillion lives:

$$p(\mathrm{doom}) \times 800{,}000{,}000{,}000{,}000 \times (\%/\$)_1 > \mathrm{CEV}_2 \times (\%/\$)_2$$

You don't need advanced math to know that the side with that many zeroes is probably larger. But valid math is not always valid philosophy, and it has often been said that ethics gets weird around very large numbers. Some people say that this is in fact invalid reasoning, and that it resembles the case of Pascal's mugging, which infamously 'proves' things like that you should exchange $10 for a one-in-a-quadrillion chance of getting 50 quadrillion dollars (after all, the expected value is $50).
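The expected-value arithmetic behind that Pascal's mugging example can be written out exactly. This is only a sketch of the calculation as stated in the text, using Python's `fractions` module to avoid rounding; the variable names are illustrative.

```python
from fractions import Fraction

# Figures taken from the example in the text.
probability_of_payout = Fraction(1, 10**15)  # one in a quadrillion
payout_dollars = 50 * 10**15                 # 50 quadrillion dollars
cost_dollars = 10                            # what you hand over

# Expected payout = probability * payout.
expected_payout = probability_of_payout * payout_dollars

# Naive expected net gain from accepting the trade.
expected_net_gain = expected_payout - cost_dollars

print(f"expected payout:   ${expected_payout}")
print(f"expected net gain: ${expected_net_gain}")
```

The naive calculation says to take the trade, which is exactly what makes the case feel like a reductio for unbounded expected-value reasoning.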

So, to finish, moral claim 2: at least in this case, reasoning like this with very large numbers is ethically valid.

And there you have it! If you accept the Astronomical Value Thesis and both moral claims, just about any spending which decreases x-risk at all will be worth prioritizing. If you reject any of those three claims, it can still be entirely reasonable to prioritize AI risk, if your p(doom) and tractability estimates are high enough. Plugging in the current 8 billion people on the planet:

$$p(\mathrm{doom}) \times 8{,}000{,}000{,}000 \times (\%/\$)_1 > \mathrm{CEV}_2 \times (\%/\$)_2$$

That's still a lot of zeroes!
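As a rough illustration of how the choice of v(doom) drives the comparison, the sketch below evaluates importance times tractability, i.e. p(doom) × v(doom) × %/$, for the two population figures used above. The values chosen for p(doom) and the share solved per dollar are purely hypothetical placeholders, not estimates from this answer or anywhere else.

```python
def expected_lives_per_dollar(p_doom: float, v_doom: float,
                              share_solved_per_dollar: float) -> float:
    """Importance times tractability: p(doom) * v(doom) * (%/$)."""
    return p_doom * v_doom * share_solved_per_dollar


# Hypothetical placeholder inputs, for illustration only.
P_DOOM = 0.01                    # assumed 1% chance of doom
SHARE_SOLVED_PER_DOLLAR = 1e-12  # assumed share of the problem solved per dollar

# Population figures taken from the text.
present_lives = 8e9   # current population
future_lives = 8e14   # lowball estimate of 800 trillion

present_view = expected_lives_per_dollar(P_DOOM, present_lives, SHARE_SOLVED_PER_DOLLAR)
future_view = expected_lives_per_dollar(P_DOOM, future_lives, SHARE_SOLVED_PER_DOLLAR)

print(f"counting present lives only:  {present_view:.3g} expected lives per dollar")
print(f"counting 800 trillion lives:  {future_view:.3g} expected lives per dollar")
print(f"ratio: {future_view / present_view:,.0f}x")
```

Whatever placeholder values are used for the other two inputs, switching v(doom) from 8 billion to 800 trillion multiplies the result by 100,000, so disagreements of an order of magnitude or two about p(doom) or tractability get swamped.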

Nathan Young @ 2022-10-05T16:33 (+1)

Reasonable people think it has the most chance of killing all of us and ending future conscious life. Compared to other risks it is bigger, compared to other cause areas it will extinguish more lives.

Ula @ 2022-10-06T11:08 (+3)

"Reasonable people think" - this sounds like a very weak way to start an argument. Who are those people - would be the next question. So let's skip the deferring to authority argument. Then we have "the most chance" - what are the probabilities and how soon in the future? Because when we talk about deprioritizing other cause areas for the next X years, we need to have pretty good probabilities and timelines, right? So yeah, I would not consider deferring to authorities a strong argument. But thanks for taking the time to reply.

Nathan Young @ 2022-10-06T14:58 (+4)

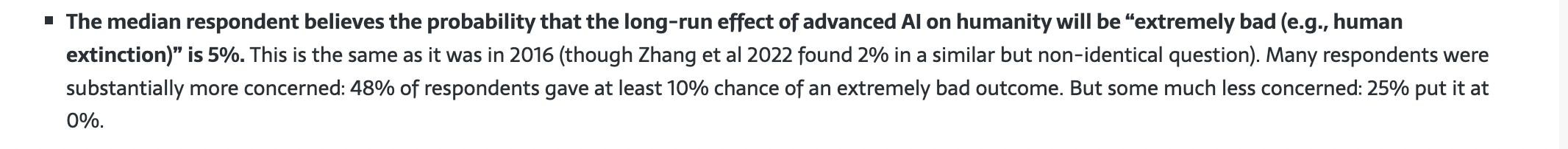

A survey of ML researchers (not necessarily AI Safety, or EA) gave the following

That seems much higher than the corresponding sample in any other field I can think of.

I think that an "extremely bad outcome" is probably equivalent to 1Bn or more people dying.

Do a near majority of those who work in green technology (what feels like the right comparison class) feel that climate change has a 10% chance of 1 Bn deaths?

Personally, I think there is like a 7% chance of extinction before 2050, which is waaay higher than anything else.

HowieL @ 2022-10-13T05:27 (+4)

FYI - subsamples of that survey were asked about this in other ways, which gave some evidence that "extremely bad outcome" was ~equivalent to extinction.

Explicit P(doom)=5-10%

The levels of badness involved in that last question seemed ambiguous in retrospect, so I added two new questions about human extinction explicitly. The median respondent’s probability of x-risk from humans failing to control AI[1] was 10%, weirdly more than median chance of human extinction from AI in general,[2] at 5%. This might just be because different people got these questions and the median is quite near the divide between 5% and 10%. The most interesting thing here is probably that these are both very high - it seems the ‘extremely bad outcome’ numbers in the old question were not just catastrophizing merely disastrous AI outcomes.

Jonathan Yan @ 2022-10-06T01:16 (+1)

There is a big gap between killing all of us and ending future conscious life (on earth, in our galaxy, entire universe/multiverse?)

Nathan Young @ 2022-10-06T09:29 (+3)

Yes, but it's a much smaller gap than any other cause doing this.

You're right, conscious life will probably be fine. But it might not be.

Amber Dawn @ 2022-10-06T11:45 (+18)

What's the best way to talk about EA in brief, casual contexts?

Recently I've been doing EA-related writing and copyediting, which means that I've had to talk about EA a lot more to strangers and acquaintances, because 'what do you do for work?' is a very common ice-breaker question. I always feel kind of awkward and like I'm not doing the worldview justice or explaining it well. I think the heart of the awkwardness is that 'it's a movement that wants to do the most good possible/do good effectively' seems tautologous (does anyone want to do less good than possible?); and because EA is kind of a mixture of philosophy and career choice and charity evaluating and [misc], I basically find it hard to find legible concepts to hang it on.

For context, I used to be doing a PhD in Greek and Roman philosophy - not exactly the most "normal" job - and I found that way easier to explain XD

Amber Dawn @ 2022-10-06T11:50 (+7)

Related questions:

-what's the best way to talk about EA on your personal social media?

-what's the best way to talk about it if you go viral on Twitter? (this happened to me today)

-what's the best way to talk about it to your parents and older family members?

etc.

I think, kind of, 'templates' about how to approach these situations risk seeming manipulative and being cringey, as 'scripts' always are if you don't make them your own, but I'd really enjoy reading a post collecting advice from EA community builders, communicators, marketers, content writers...etc on their experiences trying to talk about EA in various contexts, and what tends to make those conversations go well or badly.

(If you'd be interested to produce something like this, I'd be happy to collaborate with you on it)

SeanFre @ 2022-10-06T20:32 (+2)

I think a collection like the one you're proposing would be an incredibly valuable resource for growing the EA community.

Rona Tobolsky @ 2022-10-08T00:29 (+6)

Here's an excellent resource for different ways of pitching EA (and sub-parts of EA). Disclaimer - I do not know who is the owner of this remarkable document. I hope sharing it here is acceptable! As far as I know, this is a public document.

My contingently-favorite option:

Effective Altruism is a global movement that spreads practical tools and advice about prioritizing social action to help others the most. We aim that when individuals are about to invest time or money in helping others, they will examine their options and choose the one with the highest impact - whether they are looking to help through donations, career decisions, social entrepreneurship, and so on.

The movement mainly promotes these tools through fellowships, courses, lectures, one-on-one consultations, and discussion groups. It is also a thriving community where we research how we can help others the most, and then do it!

Amber Dawn @ 2022-10-13T11:22 (+2)

Thanks! This looks extremely comprehensive.

Rona Tobolsky @ 2022-10-08T00:36 (+2)

This resource is also robust (and beautifully outlined).

Ula @ 2022-10-05T14:47 (+15)

What would convince you to start a new effective animal charity?

booritney @ 2022-10-06T03:10 (+14)

Has anyone produced writing on being pro-choice and placing a high value on future lives at the same time? I’d love to read about how these perspectives interact!

Amber Dawn @ 2022-10-06T11:25 (+6)

FYI I'm also interested in this.

I do think it's consistent to be pro-choice and place a high value on future lives (both because people might be able to create more future lives by (e.g.) working on longtermist causes than by having kids themselves, and because you can place a high value on lives but say that it is outweighed by the harm done by forcing someone to give birth). But I think that pro-natalist and non-person-affecting views do have implications for reproductive rights and the ethics of reproduction that are seldom noticed or made explicit.

Larks @ 2022-10-06T03:20 (+6)

Richard Chappell wrote this piece, though IMHO it doesn't really get to the heart of the tension.

Trish @ 2022-11-12T09:37 (+1)

I've only just stumbled upon this question and I'm not sure if you'll see this, but I wrote up some of my thoughts on the problems with the Total View of population ethics (see "Abortion and Contraception" heading specifically).

Personally, I think there is a tension there which does not seem to have been discussed much in the EA forum.

Parrhesia @ 2022-10-17T23:45 (+1)

Here is a good post on the side of being pro-life: https://forum.effectivealtruism.org/posts/ADuroAEX5mJMxY5sG/blind-spots-compartmentalizing

I have thought about this a lot, and I think pro-life might actually win out in terms of utility maximization if it doesn't increase existential risk.

pseudonym @ 2022-10-05T23:40 (+14)

1) What level of funding or attention (or other metrics) would longtermism or AI safety need to receive for it to no longer be considered "neglected"?

2) Does OpenPhil or other EA funders still fund OpenAI? If so, how much of this goes towards capabilities research? How is this justified if we think AI safety is a major risk for humanity? How much EA money is going into capabilities research generally?

(This seems like something that would have been discussed a fair amount, but I would love a distillation of the major cruxes/considerations, as well as what would need to change for OpenAI to be no longer worth funding in future).

Zach Stein-Perlman @ 2022-10-10T03:47 (+2)

- See here. (Separating importance and neglectedness is often not useful; just thinking about cost-effectiveness is often better.)

- No.

pseudonym @ 2022-10-10T05:17 (+1)

Thanks!

This makes sense. In my head AI safety feels like a cause area that can just have room for a lot of funding etc, but unlike nuclear war or engineered pandemics which seem to have clearer milestones for success, I don't know what this looks like in the AI safety space.

I'm imagining a hypothetical scenario where AI safety is overprioritized by EAs, and wondering if or how we will discover this and respond appropriately.

RedStateBlueState @ 2022-10-06T18:12 (+13)

I’ve asked this question on the forum before to no reply, but do the people doing grant evaluations consult experts in their choices? Like do global development grant-makers consult economists before giving grants? Or are these grant-makers just supposed to have up-to-date knowledge of research in the field?

I’m confused about the relationship between traditional topic expertise (usually attributed to academics) and EA cause evaluation.

HowieL @ 2022-10-13T05:50 (+9)

[My impression. I haven't worked on grantmaking for a long time.] I think this depends on the topic, size of the grant, technicality of the grant, etc. Some grantmakers are themselves experts. Some grantmakers have experts in house. For technical/complicated grants, I think non-expert grantmakers will usually talk to at least some experts before pulling the trigger but it depends on how clearcut the case for the grant is, how big the grant is, etc.

Geoffrey Miller @ 2022-10-05T20:32 (+13)

'If I take EA thinking, ethics, and cause areas more seriously from now on, how can I cope with the guilt and shame of having been so ethically misguided in my previous life?'

or, another way to put this:

'I worry that if I learn more about animal welfare, global poverty, and existential risks, then all of my previous meat-eating, consumerist status-seeking, and political virtue-signaling will make me feel like a bad person'

(This is a common 'pain point' among students when I teach my 'Psychology of Effective Altruism' class)

ryancbriggs @ 2022-10-06T14:28 (+10)

I might be missing the part of my brain that makes these concerns make sense, but this would roughly be my answer: Imagine that you and everyone in your household consume water with lead in it every day. You have the chance to learn if there is lead in the water. If you learn that there is, you'll feel very bad, but you'll also be able to change your source of water going forward. If you learn that there is not, you'll no longer have this nagging doubt about the water quality. I think learning about EA is kind of like this. It will be right or wrong to eat animals regardless of whether you think about it, but only if you learn about it can you change for the better. The only truly shameful stance, at least to me, is to intentionally put your head in the sand.

My secondary approach would be to say that you can't change your past but you can change your future. There is no use feeling guilt and shame about past mistakes if you've already fixed them going forward. Focus your time and attention on what you can control.

Lorenzo Buonanno @ 2022-10-09T06:50 (+6)

My two cents: I view EA as supererogatory, so I don't feel bad about my previous lack of donations, but feel good about my current giving.

Changing the "moral baseline" does not really change decisions: seeing "not donating" as bad and "donating" as neutral leads to the same choices as seeing "not donating" as neutral and "donating" as good.

Geoffrey Miller @ 2022-10-09T16:26 (+4)

In principle, changing the moral baseline shouldn't change decisions -- if we were fully rational utility maximizers. But for typical humans with human psychology, moral baselines matter greatly, in terms of social signaling, self-signaling, self-esteem, self-image, mental health, etc.

Lorenzo Buonanno @ 2022-10-09T16:30 (+5)

I agree! That's why I'm happy that I can set it wherever it helps me the most in practice (e.g. makes me feel the "optimal" amount of guilt, potentially 0)

Yonatan Cale @ 2022-10-05T21:37 (+6)

Meta:

- Seems like a more complicated question than [I could] solve with a comment

- Seems like something I'd try doing one on one, talking with (and/or about) a real person with a specific worry, before trying to solve it "at scale" for an entire class

- I assume my understanding of the problem from these few lines will be wrong and my advice (which I still will write) will be misguided

- Maybe record a lesson for us and we can watch it?

Tools I like, from the CFAR handbook, which I'd consider using for this situation:

- IDC (maybe listen to the part of you that's afraid you'll think of yourself as a bad person; maybe it is trying to protect you from something that matters. I wouldn't just push that feeling away)

- homunculus (imagine you're waking up in your own body for the first time, in a brain that just discovered this EA stuff, and you check the memories of this body and discover it has been what you consider "bad" for all its life. You get to decide what to do from here, it's just the starting position for your "game", instead of starting from birth)

Geoffrey Miller @ 2022-10-06T18:03 (+5)

Yonatan -- I like your homunculus-waking-up thought experiment. It might not resonate with all students, but everybody's seen The Matrix, so it'll probably resonate with many.

HowieL @ 2022-10-13T05:34 (+4)

If you haven't come across it, a lot of EAs have found Nate Soares' Replacing Guilt series useful for this. (I personally didn't click with it but have lots of friends who did).

I like the way some of Joe Carlsmith's essays touch on this.

HowieL @ 2022-10-13T05:39 (+4)

A much narrower recommendation, for nearby problems, is Overcoming Perfectionism (~a CBT workbook).

I'd recommend it to some EAs who are already struggling with these feelings (and I know some who've really benefitted from it). (It's not precisely aimed at this but I think it can be repurposed for a subset of people.)

I wouldn't recommend it to students recently exposed to EA who are worried about having these feelings in future.

Jay Bailey @ 2022-10-07T07:17 (+4)

What has helped me most is this quote from Seneca:

Even this, the fact that it [the mind] perceives the failings it was unaware of in itself before, is evidence for a change for the better in one's character.

That helped me feel a lot better about finding unnoticed flaws and problems in myself, which always felt like a step backwards before.

I also sometimes tell myself a slightly shortened Litany of Gendlin:

What is true is already so.

Owning up to it doesn't make it worse.

Not being open about it doesn't make it go away.

People can stand what is true,

for they are already enduring it.

If X and Y make me a bad person, then...I'm already being a bad person. Owning up to it doesn't make it worse, and ignoring it doesn't make it disappear. Owning up to it is, in fact, a sign of moral progress - as bad as it feels, it means I'm actually a better person than I was previously.

Babel @ 2022-10-07T06:45 (+4)

My personal approach:

- I no longer think of myself as "a good person" or "a bad person", which may have something to do with my leaning towards moral anti-realism. I recognize that I did bad things in the past and even now, but refuse to label myself "morally bad" because of them; similarly, I refuse to label myself "morally good" because of my good deeds.

- Despite this, sometimes I still feel like I'm a bad person. When this happens, I tell myself: "I may have been a bad person, so what? Nobody should stop me from doing good, even if I'm the worst person in the world. So accept that you have been bad, and move on to do good stuff." (note that the "so what" here doesn't mean I'm okay with being bad; just that being bad has no implication on what I should do from now on)

- It doesn't mean it's okay to do bad things. I ask myself to do good things and not to do bad things, not because this makes me a better person, but because the things themselves are good or bad.

- The past doesn't matter (except in teaching you lessons), because it is already set in stone. The past is a constant term in your (metaphorical) objective function; go optimize for the rest (i.e. the present and the future).

Lizka @ 2022-10-27T16:17 (+2)

I think You Don't Need To Justify Everything is a somewhat less related post (than others that have been shared in this thread already) that is nevertheless on point (and great).

Kiu Hwa @ 2022-10-06T16:56 (+1)

I think it's okay to feel guilt, shame, remorse, rage, or even hopelessness about our past "mistakes". These are normal emotions, and we can't, or rather shouldn't, purposely avoid or bury them. It's analogous to someone being dumped by a beloved partner and feeling like the whole world is crumbling. No matter how much we try to comfort such a person, he or she will feel heartbroken.

In fact, feeling bad about our past is a great sign of personal development because it means we realize our mistakes! We can't improve ourselves if we don't even know what we did wrong in the first place. Hence, we should burn these memories hard into our minds and apologize to ourselves for making such mistakes. Then we should promise to ourselves (or even better, make concrete plans) to prevent repeating the same mistakes or to repair the damages (e.g., eat less or no meat, be more prudent in spending or donate more to EA causes, etc.)

Nobody is born a saint, so keep learning and growing into a better person :)

Geoffrey Miller @ 2022-10-06T18:01 (+4)

Kiu -- I agree. It reminds me of the old quote from Rabbi Nachman of Breslov (1772-1810):

“If you won’t be better tomorrow than you were today, then what do you need tomorrow for?”

https://en.wikipedia.org/wiki/Nachman_of_Breslov

rodeo_flagellum @ 2022-10-05T14:58 (+12)

Does anyone have a good list of books related to existential and global catastrophic risk? This doesn't have to just include books on X-risk / GCRs in general, but can also include books on individual catastrophic events, such as nuclear war.

Here is my current resource landscape (these are books that I have personally looked at and can vouch for; the entries came to my mind as I wrote them - I do not have a list of GCR / X-risk books at the moment; I have not read some of them in full):

General

- Global Catastrophic Risks (2008)

- X-Risk (2020)

- Anticipation, Sustainability, Futures, and Human Extinction (2021)

- The Precipice (2019)

AI Safety

- Superintelligence (2014)

- The Alignment Problem (2020)

- Human Compatible (2019)

Nuclear risk

General / space

- Dark Skies (2020)

Biosecurity

finm @ 2022-10-05T15:38 (+10)

In no particular order. I'll add to this if I think of extra books.

Precursors to thinking about existential risks and GCRs

- Reasons and Persons (1984) and On What Matters, Vol. 1 (2011)

- The End of the World: The Science and Ethics of Human Extinction (1996)

- The Fate of the Earth (1982)

Nuclear risk

- The Making of the Atomic Bomb (1986)

- The Doomsday Machine: Confessions of a Nuclear War Planner (2017)

- Command and Control: Nuclear Weapons, the Damascus Accident, and the Illusion of Safety (2013)

- A longer list is available here

Biosecurity

- Biosecurity Dilemmas: Dreaded Diseases, Ethical Responses, and the Health of Nations (2017)

- Biohazard: The Chilling True Story of the Largest Covert Biological Weapons Program in the World—Told from the Inside by the Man Who Ran It (2000)

- The Dead Hand: The Untold Story of the Cold War Arms Race and its Dangerous Legacy (2009)[1]

AI safety

- Smarter Than Us (2014)

- The AI Does Not Hate You: Superintelligence, Rationality and the Race to Save the World (2019)

Recovery

- Feeding Everyone No Matter What: Managing Food Security After Global Catastrophe (2014)

- The Knowledge: How to Rebuild Our World from Scratch (2014)

Space / big-picture thinking

- Pale Blue Dot (1994), Cosmos (1980), and Billions and Billions[2] (1997)

- Maps of Time: An Introduction to Big History (2004)

Fiction

- The Three-Body Problem (2006)

- The Andromeda Strain (1969)

- The Ministry for the Future (2020)

- Cat's Cradle (1963)

- ^

This covers both nuclear security and biosecurity topics.

- ^

Especially Chapter 8: 'The Environment: Where Does Prudence Lie?', which contains some remarkable precursors to metaphors and arguments in The Precipice etc.

HowieL @ 2022-10-13T05:47 (+3)

Others, most of which I haven't fully read and not always fully on topic:

- Richard Posner. Catastrophe: Risk and Response. (Precursor)

- Richard A Clarke and RP Eddy. Warnings: Finding Cassandras to Stop Catastrophes

- General Leslie Groves. Now It Can Be Told: The Story of the Manhattan Project (nukes)

evakat @ 2022-10-05T23:11 (+2)

Small remark: The Goodreads list on nuclear risk you linked to is private

Charles He @ 2022-10-15T19:54 (+11)

Of all the "decision theory"-style discussions in EA, I think anthropics (e.g. the idea that the fact we exist tells us something about the nature of successful intelligence and x-risk) seems like one of the most useful that could arrive just from pure thought. This is sort of amazing.

The blog posts I've seen written in 2021 or 2020 seem sort of unclear and tangled (e.g. there are two competing theories and empirical arguments are unclear).

Is there a good summary of anthropic ideas? Are there updates on this work? Is there someone working on this? Do they need help (e.g. from senior philosophers or cognitive scientists)?

pseudonym @ 2022-10-05T22:04 (+10)

A set of related questions RE: longtermism/neartermism and community building.

1a) What is the ideal theory of change for Effective Altruism as a movement in the next 5-10 years? What exactly does EA look like, in the scenarios that community builders or groups doing EA outreach are aiming for? This may have implications for outreach strategies as well as cause prioritization.[1]

1b) What are the views of various community builders and community building funders in the space on the above? Do funders communicate and collaborate on a shared theory of change, or are there competing views? If so, which organizations best characterize these differences, what are the main cruxes/where are the main sources of tension?

2a) A commonly discussed tension on this forum seems to relate to neartermism versus longtermism, or AI safety versus more publicly friendly cause areas in the global health space. How much of the value of the latter is because it's inherently a valuable cause area, and how much is because it's intended as an onramp to longtermism/AI safety?

2b) What are the views of folks doing outreach and funders of community builders in EA on the above? If there are different approaches, which organizations best characterize these differences, what are the main cruxes/where are the main sources of tension? I would be particularly interested in responses from people who know what CEA's views are on this, given they explicitly state they are not doing cause-area specific work or research. [2]

3) Are there equivalents [3] of Longview Philanthropy who are EA aligned but do not focus on longtermism? For example, what EA-aligned organization do I contact if I'm a very wealthy individual donor who isn't interested in AI safety/longtermism but is interested in animal welfare and global health? Have there been donors (individual or organizational) who fit this category, and if so, who have they been referred to/how have they been managed?

- ^

"Big tent" effective altruism is very important (particularly right now) is one example of a proposed model, but if folks think AI timelines are <10 years away and p(doom) is very high, then they might argue EA should just aggressively recruit for AI safety folks in elite unis.

- ^

Under Where we are not focusing: "Cause-specific work (such as community building specifically for effective animal advocacy, AI safety, biosecurity, etc.)"

- ^

"designs and executes bespoke giving strategies for major donors"

Julia_Wise @ 2022-10-11T19:11 (+6)

3. I'm not sure they do as much bespoke advising as Longview, but I'd say GiveWell and Farmed Animal Funders. I think you could contact either one with the amount you're thinking of giving and they could tell you what kind of advising they can provide.

Lizka @ 2022-10-05T10:28 (+10)

I also think that the first person to post a question will be performing a public service by breaking the ice!

Jordan Arel @ 2022-10-09T18:24 (+9)

I really want to learn more about broad longtermism. In 2019, Ben Todd said that, in a survey, EAs said it was the most underinvested cause area by something like a factor of 5. Where can I learn more about broad longtermism? What are the best resources, organizations, and advocates for ideas and projects related to it?

HowieL @ 2022-10-13T05:48 (+3)

I think parts of What We Owe the Future by Will MacAskill discuss this approach a bit.

Jordan Arel @ 2022-10-13T20:01 (+1)

Mm, good point! I seem to remember something... Do you remember which chapter(s), by chance?

HowieL @ 2022-10-14T11:04 (+2)

My guess is that Part II (trajectory changes) will have a bunch of relevant stuff. Maybe also a bit of Part 5. But unfortunately I don't remember too clearly.

HowieL @ 2022-10-14T11:09 (+2)

The 80k podcast also has some potentially relevant episodes though they're prob not directly what you most want.

- https://80000hours.org/podcast/episodes/phil-trammell-patient-philanthropy/

- https://80000hours.org/podcast/episodes/will-macaskill-ambition-longtermism-mental-health/

- Maybe especially the section on patient philanthropy.

- https://80000hours.org/podcast/episodes/will-macaskill-what-we-owe-the-future/

- https://80000hours.org/podcast/episodes/sam-bankman-fried-high-risk-approach-to-crypto-and-doing-good/

- Some bits of this. E.g. some of the bits on political donations.

Lizka @ 2022-10-31T20:10 (+8)

Winners of the small prize