X-Risk, Anthropics, & Peter Thiel's Investment Thesis

By Jackson Wagner @ 2021-10-26T18:38 (+50)

This story is cross-posted from my blog, jacksonw.xyz.

Summary: I analyze an essay by Peter Thiel, in which he explains:

- How markets are incentivized to ignore the risk of civilizational collapse.

- How this introduces distortions in both market prices and our thinking.

- How to attempt to correct for these distortions using "The Optimistic Thought Experiment".

- A big-picture view of financial history as a single "Great Boom" built on the uncertain hope that capitalist civilization will ultimately be proven viable and achieve existential security.

I then add my own musings about how these ideas might usefully connect with the goals of longtermist Effective Altruism.

EA Portfolio Design: An Unsolved Problem Way Over My Head

Effective altruists have discussed whether EA values and beliefs should affect how we invest the wealth that we eventually plan to donate:

- For altruistic donations, the utility of money is roughly linear with increasing wealth, rather than having diminishing returns like with increasing personal wealth. This probably means that we should be willing to use more leverage and invest in a higher-risk, higher-expected-return portfolio?

- Popular types of "socially-responsible investing" are sadly not very effective by EA lights, but this could be changed. Conversely, divestment is often considered to be an ineffective strategy, but done in the right way perhaps it could work well.

- Or perhaps we should do the opposite, using the logic of "mission hedging" to invest in bad things so we have more money to deploy in worlds where bad things grow larger. (More details on mission hedging here.)

- Perhaps we could pursue a variant of mission hedging that is less related to divestment and "sin funds" and more based on prediction-market hedging of specific events, like how a presidential election might change the tractability of a certain cause.

- There is a rich discussion around the idea of "patient philanthropy" or "investing to give". Investing money now and waiting to spend it later might be a good idea simply because of the growth rate of the economy, or because it allows us to wait and identify better opportunities for impact later, or to ensure the long-term health and viability of the EA movement, or perhaps even because having a store of capital around means that we can employ some of the other do-gooder financial schemes on this list! (See a detailed report on patient philanthropy here.)

- If we believe that transformative AI technology could be right around the corner, perhaps (in addition to freaking out) we should find some way to invest in the coming market foom.
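To make the first bullet above concrete, here is a toy Python sketch of why a donor with roughly linear utility might accept more risk than a saver with diminishing (here, logarithmic) utility. Everything in it is an illustrative assumption rather than anything taken from the discussions mentioned above: the single even-money bet, the 60% win probability, and the function names are all hypothetical.

```python
import math


def wealth_outcomes(fraction):
    """Final wealth (starting from 1.0) after staking `fraction` on an even-money bet."""
    return 1.0 + fraction, 1.0 - fraction


def expected_utility(fraction, utility, p_win=0.6):
    """Expected utility of the bet; p_win=0.6 is a made-up edge for illustration."""
    win, lose = wealth_outcomes(fraction)
    return p_win * utility(win) + (1.0 - p_win) * utility(lose)


def linear(wealth):
    """Utility of an altruistic donor: each extra dollar is worth the same."""
    return wealth


# Candidate stake sizes: 0.00, 0.05, ..., 0.95 of total wealth.
fractions = [i / 20 for i in range(20)]

best_linear = max(fractions, key=lambda f: expected_utility(f, linear))
best_log = max(fractions, key=lambda f: expected_utility(f, math.log))

print("linear-utility donor stakes:", best_linear)
print("log-utility saver stakes:", best_log)
```

Under these made-up numbers the log-utility saver stakes about 20% of wealth, while the linear-utility donor keeps preferring a larger stake all the way to the top of the grid. Real portfolios involve many more considerations, so treat this as intuition rather than advice.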

I consider myself reasonably savvy about financial topics, but I would be in way over my head if I tried to synthesize all these competing claims and produce the One True EA Portfolio Theory.

However, I think it's an important unsolved problem in EA where further progress could likely be made, and thus should be taken very seriously. To some extent, the entire concept of longtermist EA is driven by a financial/values "edge" of buying the far future cheap because other people don't care as much about the far future. In this and other ways, EAs (including non-longtermists who care more about suffering of distant beings than most folks, and to a lesser extent rationalists more broadly who have unique beliefs about AI, etc) have different beliefs and different priorities than the typical investor, which should present many opportunities to bet on those beliefs and arbitrage the difference in values.

(PSA: For anyone reading who is totally new to finance & investing topics, stop reading this post and instead read up on the basics — here is a helpful, charismatic, rationalist introduction to the logic of index investing, and here is a handy reddit-consensus guide to personal finance. The couple hours I spent first reading about index investing and making the effort to get my finances in order have been some of the highest-value hours of my life, since they led directly to getting higher returns and paying lower fees on my entire life's savings.)

Reading the Thiel-Leaves of "The Optimistic Thought Experiment"

Instead of synthesizing any of the above claims, I am here merely to throw another wild idea into the mix!! In this post, I present my interpretation of Peter Thiel's overall financial strategy, an investment thesis grounded in ideas like X-risk and the anthropic principle, based on what he calls "the optimistic thought experiment" in his 2008 essay of the same name.

Thiel's writing style is entertaining, but also cryptic and postmodern. He sometimes meanders around his real point, interleaving the chain of rational argument with jokes, Straussian literary/religious allusions, and memorable bits of trivia from the history of finance. I'll try to extract the most relevant bits and clarify when necessary.

We face significant X-risk.

After kicking things off with some characteristic Christian allegory, Thiel introduces his concern about anthropogenic existential risk to civilization:

Beginning with the Great War in 1914, and accelerating after 1945, there has re-emerged an apocalyptic dimension to the modern world... Will it be an environmental catastrophe like runaway global warming, or will it be murderous robots, Ebola viruses genetically recombined with smallpox, nanotech devices that dissolve the living world into a gray goo, or the spread of miniature nuclear bombs in terrorist briefcases? Even if it is not yet possible for humans to destroy the whole world, on current trends it might just be a matter of time. The relentless proliferation of nuclear weapons remains the most obvious case in point... We know that there exists some point [as more and more countries acquire weapons] beyond which there is no stable equilibrium and where there will be a nuclear Armageddon.

Thiel contrasts these looming dangers with the "eerily complacent" nature of the stock market, which aside from occasional minor corrections seems to march inexorably upwards unperturbed by these existential threats. "The news and business sections [of the newspaper] seem to inhabit different worlds." If Thiel (and effective altruists) are correct to think that existential risk is sizeable — perhaps a 1-in-6 chance over the next century! — then why do markets seem so exuberant?

Anthropic logic means markets ignore the risk.

Thiel's answer is twofold:

First, the more pedestrian fact that pessimistic voices are ignored as they are drained of capital during long bull runs in the markets: perma-bear doomsayers have simply been "wrong for too long", and consequently "they have lost most of their money and have no significant capital left to invest in anything".

Second, and this is the main point that both Thiel and I are focusing on: Thiel reasons that markets are bound by their incentives to ignore existential risk, since there is no way to win a bet that the world will end and also successfully redeem your winnings afterwards.

Apocalyptic thinking appears to have no place in the world of money. For if the doomsday predictions are fulfilled and the world does come to an end, then all the money in the world — even if it be in the form of gold coins or pieces of silver, stored in a locked chest in the most remote corner of the planet — would prove of no value, because there would be nothing left to buy or sell. Apocalyptic investors will miss great opportunities if there is no apocalypse, but ultimately they will end up with nothing when the apocalypse arrives. Heads or tails, they lose. ...A mutual fund manager might not benefit from reflecting about the danger of thermonuclear war, since in that future world there would be no mutual funds and no mutual fund managers left. Because it is not profitable to think about one’s death, it is more useful to act as though one will live forever.

Since it is not profitable to contemplate the end of civilization, this distorts market prices. Instead of telling us about the objective probabilities of how things will play out, prices are based on probabilities adjusted by the anthropic logic of ignoring doomed scenarios:

Let us assume that, in the event of [the project of civilization being broadly successful], a given business would be worth $100/share, but that there is only an intermediate chance (say 1:10) of that successful outcome. The other case is too terrible to consider. Theoretically, the share should be worth $10, but in every world where investors survive, it will be worth $100. Would it make sense to pay more than $10, and indeed any price up to $100? Whether in hope or desperation, the perceived lack of alternatives may push valuations to much greater extremes than in nonapocalyptic times.

This logic is essentially the real-world, financial version of the anthropic reasoning one encounters in things like the "quantum suicide" thought experiment or stories involving Russian Roulette. But lest you think that Thiel has already devolved into mad sci-fi ramblings, this is already a well-known phenomenon on prediction markets: Metaculus users betting on catastrophic outcomes will never get their points, since the Metaculus website would surely go down in any major nuclear exchange. More pedestrian questions, like "Will Metaculus still exist in 2030?", naturally raise similar issues. On a niche website run on fake internet points, it is enough to add the disclaimer "players are urged to predict in good faith". But in real financial markets full of rational actors following incentives, we can't use that trick.
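The arithmetic in Thiel's share-price example above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions that are mine rather than Thiel's: "1:10" is read as a 10% probability of success, the share is worth nothing in the failure scenario, and the `Scenario` class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    probability: float       # objective chance of this scenario
    share_price: float       # what the share is worth if it happens
    investors_survive: bool  # can anyone collect on the bet afterwards?


def expected_price(scenarios):
    """Probability-weighted price across every scenario, doom included."""
    return sum(s.probability * s.share_price for s in scenarios)


def survivor_price(scenarios):
    """Expected price conditional on investors being around to collect."""
    alive = [s for s in scenarios if s.investors_survive]
    p_alive = sum(s.probability for s in alive)
    return sum(s.probability * s.share_price for s in alive) / p_alive


# Thiel's example: $100/share in the successful world, assumed 10% likely.
thiel_example = [
    Scenario(probability=0.10, share_price=100.0, investors_survive=True),
    Scenario(probability=0.90, share_price=0.0, investors_survive=False),
]

print("objective expected value:", expected_price(thiel_example))
print("value seen by survivors:", survivor_price(thiel_example))
```

The gap between the two numbers (roughly $10 versus $100 here) is the room Thiel describes for valuations to be bid up, since nobody who pays more than $10 will ever be around to regret it in the failure case.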

What are markets ignoring? Not just X-risk, but anything that ends capitalism.

Importantly for all of this analysis (and in a marked divergence from the concerns of longtermist EAs), Thiel's apocalypse doesn't have to be literal extinction — for investment purposes, any catastrophe sufficient to end the global financial system amounts to the same thing.

From the point of view of an investor, one may define such a “secular apocalypse” as a world where capitalism fails. Therefore, the secular apocalypse would encompass not only catastrophic futures in which humanity completely self-destructs (most likely through a runaway technological disaster), but also include a range of other scenarios in which free markets cease to function, such as a series of wars and crises so disruptive as to drive the developed world towards fascism, anarchy, or both.

"Capitalism" has lots of different connotations for different people, so here's my take on what it would mean to find ourselves in "a world where capitalism fails":

Much of our activity (not just financial, but also cultural, institutional, etc) is motivated by desire to influence the future, and much of the meaning in our lives comes from the marks we hope to make on the world. To function, the financial system (and broader society) requires stability through time, giving people faith that efforts will not be in vain and promises will be repaid, and essentially allowing dealmaking to create chains of obligation and investment that bind together the past and future. But in a large enough crisis or transformation, those chains linking the past and future could be broken — reputations would be betrayed, debts would go unpaid[1], existing physical wealth would belong to whoever could grab it and hold onto it, and the value of all the past's systems of promises and obligations would crash to zero.

Besides the spectrum from full-on civilizational collapse to merely financial collapse, the world could be transformed in numerous other ways that would suffice to "end capitalism" along the way — we could fall into a stable dystopia such as a worldwide totalitarian government, or conversely our world could undergo a positive transformation that nevertheless breaks the chain of promises & obligations of the current capitalist system (like a sudden takeover by an aligned AI, or a worldwide communist-style revolution to a totally new form of utopian government). Markets ignore all of these scenarios, since in all of them financial investments made today would be zeroed out by transformative change.

What are markets hoping for?

So, markets are ignoring the risks of civilizational (and merely financial) collapse. But what is the good outcome that markets are hoping for? Thiel calls it "globalization", but I find this a confusing and complicated term given its specific associations with trade and Thiel's current political positioning as something of an anti-globalist.[2] Here is what I think Thiel is gesturing at...

As technology progresses, the fates of all the different parts of the world become more correlated with each other. This happens for many reasons — partly because countries trade more with each other (traditional "globalization"), but also because countries are more powerful and missiles can now fly all the way around the world (the idea of a "World War" was impossible before the 20th century), and also because increasingly many technologies (like AI or biotech) will have a transformative impact on all of humanity no matter where they are first invented. You can see how Thiel uses the term "globalization" to refer to this increasing correlation:

“Globalization” means a breaking down of barriers between nations; an increase in travel and knowledge about other countries; an increase of trade and competition among and between the peoples of the world, to the point where there is a more or less level playing field in the entire world; and the death of all cultures, in the sense of robust systems that exclude part of humanity. On the level of economics, it means a global marketplace; and on the level of politics, it means the ascension of transnational elites and organizations, at the expense of all localized countries and governments.

This leads to the Effective Altruist idea of the "hinge of history" or "the precipice" — unlike in the past when disasters and civilizational collapses were always local and limited, in the modern day the fates of all the different peoples of the earth are tightly bound together, creating a time of heightened existential risk:

By definition, the apocalypse would be worldwide in extent. For this reason, the point of departure for our thought experiment centers on the future of worldwide events — that is, on the future of globalization. In this, we are guided by the hope that the right sort of globalization might prevent the apocalypse and give us peace in our time.

Markets, then, are hoping for a twofold miracle:

- First, the financial aspect, that humanity's future arrives in a continuous, nondestructive way, such that the system of debts and obligations of capitalism continues and is never betrayed (thus our investments today will be worth something, and won't just be expropriated or destroyed or etc).

- But more importantly, markets are hoping for a positive answer to a great and fundamental question of civilization: will this common project of mankind prove successful, sustainable, and secure? Will we steer around the risks and obstacles in our path to a place of safety, prosperity, freedom, and happiness?

Financial history as a series of connected bubbles all asking the fundamental question of capitalist civilization's viability.

Thiel sketches an entertaining history of financial bubbles as a history of people getting prematurely hyped about the prospect that the world was finally about to achieve some unified & final system of fully integrated worldwide trade and innovation:

At various points, like a mirage in the desert, the goal of the project has seemed almost within reach, only to fail or be postponed every time, at least thus far. ...Almost every financial bubble has involved nothing more nor less than a serious miscalculation about the true probability of successful globalization.

He lists many examples of premature "globalization" bets turned sour:

In France, one had the System of John Law... The System’s central plan was for the nearly bankrupt kingdom to achieve solvency by securitizing its debts through the newly chartered Mississippi Company, whose newly issued paper currency would be backed by the future proceeds from the New World. Speculation reached fevered proportions... at the height of the boom, the value of the Company approached the value of France itself. The key turning point occurred as investors realized that globalization would be much harder and take much longer: In the year 1720, the entire Louisiana Territory had a population of a few thousand Frenchmen, mostly criminal types... In the minds of the speculators, one could perceive a future capitalist paradise even though at the time there existed only a vast wild land inhabited by wild and savage men; as the bubble receded, that great vision of the future seemed to be but a dream. France’s finances never recovered.

The British Empire justified its existence because it guaranteed a sphere within which free trade would take place among the nations of the world. Because trade and commerce occurred at sea, Britain would remain the leading power so long as it ruled the sea. ...The financial mania of 1720 centered on the South Sea Company, which proposed to open the South Seas (and all the great wealth therein) to trade with Britain. By trading with the opposite pole of the globe, the British empire of commerce would become universal... [Then everything gets hyped to the moon, and then it all turns out to be a giant scam.] The British stock market did not recover its level of 1720 until 1815, when the victory at Waterloo promised a less divided world for the nineteenth century.

In the years that preceded 1914, the financial boom centered on the economic development of far-away places, most notably Russia, the great power whose peaceful integration into Europe would have enabled a more stable twentieth century.

In the case of Japan... the bubble of the late 1980s took off as people began to believe that Japan Inc. might actually run the entire planet. Management textbooks declared that the Japanese corporate model represented a sort of Hegelian final synthesis for harmonious labor-management relations; because no better model existed, Japanese corporatism represented the end of history. Zaitech financial engineering, under the auspices of the omniscient Ministry of International Trade and Industry, would do the rest. Only by drawing this extraordinary conclusion could the Nikkei account for almost half the world’s market capitalization in 1989.

The bubbles he lists represent miscalculations: guesses about the future that turned out to be wrong. But many other bets (for instance, on America, on technology, etc) have paid off... insofar as civilization still seems to have at least a narrow, treacherous path to the stars, that means humanity's potential has not yet been squandered, and the optimistic outcome is still in the game. But the fundamental question of civilization's viability remains the unanswered crux underpinning all our hopes:

All of these bubbles are not truly separate, but instead represent different facets of a single Great Boom of unprecedented size and duration. As with the earlier bubbles of the modern age, the Great Boom has been based on a similar story of globalization, told and retold in different ways — and so we have seen a rotating series of local booms and bubbles as investors price a globally unified world through the prism of different markets. Nevertheless, this Great Boom is also very different from all previous bubbles. This time around, globalization either will succeed and humanity will achieve a degree of freedom and prosperity that can scarcely be imagined, or globalization will fail and capitalism or even humanity itself may come to an end. The real alternative to good globalization is world war. And because of the nature of today’s technology, such a war would be apocalyptic in the twenty-first century. Because there is not much time left, the Great Boom, taken as a whole, either is not a bubble at all, or it is the final and greatest bubble in history.

Takeaways and Musings

Use the "Optimistic Thought Experiment" to invest in a smarter way than just ignoring the risks.

"It is not profitable to think of one's own death", but most people are not doing lots of complicated anthropic reasoning in their head. Instead, they're picking up the successful strategy of "ignoring existential risk" from the general cultural vibe. The most straightforward way to ignore X-risk is to assume that the risk is extremely improbable, so that's what most people do. But, Thiel says, just blindly assuming away the risk can't be the best solution:

Such a narrowing of one’s horizon cannot, however, be the last word. After all, there exists some connection between the real world of events, on the one hand, and the virtual world of finance, on the other. It would be an abdication not to wrestle with the central question of our age: How should the risk of a comprehensive collapse of the world economic and political system factor into one’s decisions?

If we are instead clear-eyed about the likelihood of catastrophic scenarios, we can do better than the blind-faith approach by performing the anthropic reasoning intentionally instead of unconsciously/emotionally:

What must happen for there to be no secular apocalypse — for what one might call the “optimistic” version of the future to unfold? And furthermore, which sectors will do well — surprisingly well, in fact — if the world more or less stays intact, even if there are some major bumps and dislocations along the way? Any investor who ignores the apocalyptic dimension of the modern world also will underestimate the strangeness of a twenty-first century in which there is no secular apocalypse. If one does not think about forest fires, then one does not fully understand the teleology of each tree — and one badly will undervalue those trees that are immune to all but the greatest of fires. Even in our time of troubled confusion, there exists a chance that some things will work out immeasurably better than most believe possible.

Thiel says that the market is underrating the true threat of catastrophic risks, but therefore ALSO underrating the necessary strangeness of the small sliver of worlds where everything goes right. Therefore, try hard to imagine a world with a successful outcome — "the optimistic thought experiment". If catastrophic risk is actually substantial, then a world capable of navigating those obstacles will not be an ordinary business-as-usual world, but will be a strange world in which many things go right. Invest in these things, the underrated pieces of technology and government and culture and etc that have to happen if the world is to NOT end.

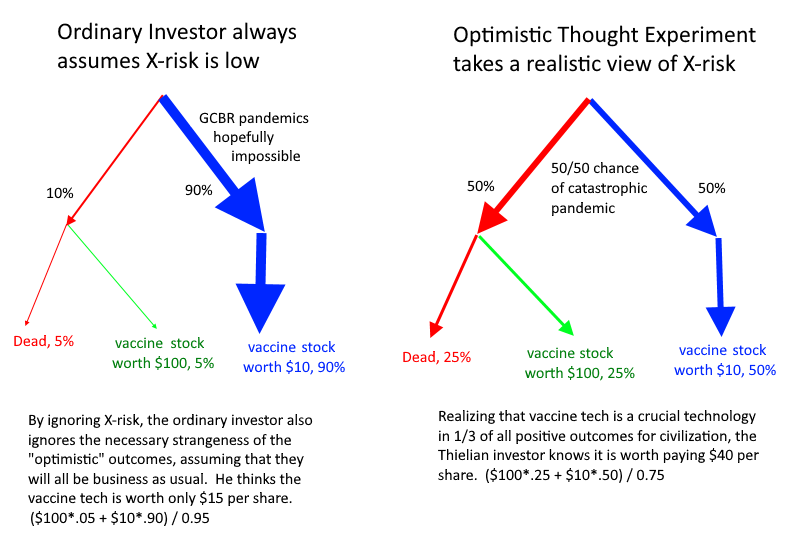

For example, an ordinary investor and a Thielian investor might both agree that a certain vaccine technology would come in super-handy during a catastrophic pandemic, but the ordinary investor might have an irrationally low estimate of whether a pandemic will occur over the next century. The Thielian investor still performs the anthropic reasoning of ignoring the worlds in which civilization collapses entirely, but they do so in a clear-eyed way that acknowledges the existence of pandemic risks, and therefore allows them to recognize the value of the vaccine technology.
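The vaccine example above can be put into a small Python sketch. Both investors price the asset only across worlds where civilization survives; they differ solely in how likely they think a severe but survivable pandemic is. All numbers and names below are hypothetical, chosen purely to show the shape of the disagreement.

```python
def survivor_valuation(p_pandemic, value_if_pandemic, value_otherwise):
    """Expected value of an asset across surviving worlds only.

    `p_pandemic` is the investor's believed chance of a severe (but
    survivable) pandemic, conditional on civilization not collapsing.
    """
    return p_pandemic * value_if_pandemic + (1.0 - p_pandemic) * value_otherwise


# Hypothetical payoffs for a vaccine-platform company, in arbitrary units.
VALUE_IF_PANDEMIC = 50.0
VALUE_OTHERWISE = 1.0

# The ordinary investor waves the risk away; the Thielian investor does not.
ordinary = survivor_valuation(0.01, VALUE_IF_PANDEMIC, VALUE_OTHERWISE)
thielian = survivor_valuation(0.30, VALUE_IF_PANDEMIC, VALUE_OTHERWISE)

print(f"ordinary investor values it at {ordinary:.2f}")
print(f"Thielian investor values it at {thielian:.2f}")
```

With these invented inputs the Thielian investor is willing to pay roughly ten times as much for the same asset, even though both agree on what the technology would be worth if a pandemic actually arrived.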

A few caveats for any EAs hoping to incorporate this idea into their investment thesis:

- Thiel's thesis doesn't make as much sense as literal investment advice if you think apocalyptic chances are genuinely low. Even a 2% risk of extinction or civilizational collapse this century is terrifying, and obviously justifies massive civilizational effort to mitigate, including an EA movement that should rightfully be thousands of times larger than it is today. But I'm not sure whether a 2% risk of collapse would cause large enough distortions in the overall market, or whether Thiel's edge only makes sense once you think catastrophe is, say, more than 10% likely within one's lifetime.

- As described earlier, the market is equally incentivized to ignore extinction risks, civilizational collapse risks, and the mere failure of capitalism. This pushes it apart from the concerns of longtermist EA, which cares heavily about extinction above and beyond mere temporary collapse. Thiel himself is not a selflessly altruistic EA; like many rationalists he is heavily interested in personal survival in addition to the survival of overall human civilization, so it makes sense that relative to an EA he is less concerned about extinction risks (AI, GCBRs) versus more-likely-but-less-deadly catastrophic risks (various more common forms of war and violence).

- I've been talking about investing assets, but the same "Optimistic" analysis can be used to guide where we invest our personal efforts! Thiel's thought experiment is really just another tool in the toolset of forecaster techniques to help envision coherent futures.
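As a rough check on the first caveat above, the following Python sketch computes how much a purely "anthropic" market would overprice an asset at different levels of collapse risk. It rests on strong assumptions of my own: the asset is worth nothing if collapse occurs, and the market prices it entirely from the survivors' point of view. The function name `survivor_premium` is hypothetical.

```python
def survivor_premium(p_collapse):
    """Ratio of the survivor-conditional price to the plain expected value.

    Assumes the asset is worth nothing in collapse worlds and that the
    market prices it entirely from the survivors' point of view.
    """
    if not 0.0 <= p_collapse < 1.0:
        raise ValueError("p_collapse must be in [0, 1)")
    return 1.0 / (1.0 - p_collapse)


# 2% and 10% are the figures from the caveat; 90% matches Thiel's 1:10 example.
for p in (0.02, 0.10, 0.90):
    print(f"collapse risk {p:.0%}: prices inflated by {survivor_premium(p):.2f}x")
```

Under these assumptions a 2% risk inflates prices by only about 2%, a 10% risk by about 11%, and Thiel's own 90% example by a factor of ten, which is one way of seeing why the size of the edge depends so heavily on how large you think the risk really is.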

Beyond an investment strategy, of course, the Optimistic Thought Experiment is advice for how to think about the world. The fact that doomsayers have lost their reputations after having been "wrong for too long" and that regardless "it is not profitable to think about one's death" means that our world's "apocalyptic" aspect is underestimated, and conventional cultural attitudes towards the subject cannot be trusted. Instead of being blindered by what "seems normal and reasonable", Thiel admonishes us to think from first-principles about what is truly possible. I obviously appreciate this spirit, which is a core pillar of the rationalist and EA mindset.

Those investors who limit themselves to what seems normal and reasonable in light of human history are unprepared for the age of miracle and wonder in which they now find themselves. The twentieth century was great and terrible, and the twenty-first century promises to be far greater and more terrible. ...The limits of a George Soros or a Julian Robertson, much less of an LTCM, can be attributed to a failure of the imagination about the possible trajectories for our world, especially regarding the radically divergent alternatives of total collapse and good globalization.

Can we somehow use market signals to measure the hidden X-risk?

Thiel hints that maybe we can use market information as a way to understand the risk landscape:

If the preceding line of analysis is correct, then the extreme valuations of recent times may be an indirect measure of the narrowness of the path set before us.

Thiel tells a story about how investors might have piled into the dot-com bubble because they saw that internet-fueled growth was the only viable future, even if the internet companies that actually existed at the time were unlikely to succeed.

In 1999 investors would not have risked as much on internet stocks if they still believed that there might be a future anywhere else. Employees of these companies (most of whom also were investors through stock option plans) took even greater risks, often leaving stable but unpromising jobs to gamble their life fortunes. It is often claimed that the mass delusion reached its peak in March 2000; but what if the opposite also were true, and this was in certain respects a peak of clarity? Perhaps with unprecedented clarity, at the market’s peak investors and employees could see the farthest: They perceived that in the long run the Old Economy was surely doomed and believed that the New Economy, no matter what the risks, represented the only chance.

In other words, many people saw that the valuations of the dot-com companies were absurd, but Thiel's anthropic analysis claims that there are two sides to every bubble: valuations were only absurd because the only alternatives were much more doomed than commonly supposed. Today, one might tell a similar story about crypto.[3]

This is interesting, but it's all very subjective and fuzzy. How could one try to really seriously map out the kinds of extreme risks relevant to EA? Perhaps we could try to compare forecaster/research estimates to market estimates of some value. Ideally, the forecaster estimates would be trying to give us a picture of the objective probability space (no anthropic reasoning), while the markets are giving us the anthropic version of the story. By comparing the two, we could try to measure how much "anthropic pressure" is at work.

For instance, imagine that expert analysis says that AI should be possible to build, but markets seem to say that it'll never happen and companies based on researching AI will all go bankrupt. This might seem like a hopeful situation — AI is impossible to build, phew, safety worries over! But rational investors should treat those markets as a combined question about (probability that AI is possible to build) * (probability that AI will be aligned), since an unaligned AI is game-over for your portfolio. Thus, this seemingly hopeful indicator might actually be a terrifying sign of "the narrowness of the path set before us". Perhaps markets believe that alignment is the impossible technical challenge, but anthropic pressure is causing markets to bet it all on the hail-mary prospect that AI is impossible to build in the first place.
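The comparison between forecaster estimates and market prices described above can be sketched as a one-line inversion. This Python example takes the combined-question framing literally, treating the market-implied chance that AI companies pay off as (probability AI can be built) * (probability it is aligned), and backs out the second factor from the first. The input numbers and the function name are hypothetical, and real market prices would be far noisier than this.

```python
def implied_alignment_odds(market_price, forecast_buildable):
    """Back out P(aligned) from market_price = P(buildable) * P(aligned)."""
    if not 0.0 < forecast_buildable <= 1.0:
        raise ValueError("forecast_buildable must be in (0, 1]")
    # Cap at 1.0 in case the market is more optimistic than the forecasters.
    return min(1.0, market_price / forecast_buildable)


forecast_buildable = 0.90  # hypothetical expert estimate that AI can be built
market_price = 0.09        # hypothetical market-implied chance AI firms pay off

p_aligned = implied_alignment_odds(market_price, forecast_buildable)
print(f"implied chance of alignment: {p_aligned:.0%}")
```

With these made-up inputs, a market that looks like it is saying "AI will never happen" is better read as saying that alignment succeeds only about one time in ten.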

This kind of analysis would be very messy in the real world, where the closest thing we have to markets on the feasibility of AGI is the stock price of companies like Google. In the future, prediction markets like Kalshi, Polymarket, and PredictIt will hopefully let us ask more precise questions about future technologies, allowing us to more directly perceive anthropic pressures at work.

Should Thiel's strategy be modified for the unique needs of EA?

In his essay, Thiel gives several somewhat contradictory examples of what he would consider a good investment by the logic of the Optimistic Thought Experiment. On the one hand, he endorses the idea of (perhaps somewhat fraudulently) selling insurance against catastrophic risk, which is a strategy optimized for the worlds where everything goes absolutely swimmingly:

...Insurance and reinsurance policies for catastrophic global risk. In any world where investors survive, the issuers of these policies are likely to retain a significant portion of the premium — regardless of whether or not the risks were priced correctly ex ante. In this context, it is striking that Warren Buffett, often described as the greatest investor of all time, has shifted the Berkshire Hathaway portfolio from “value” investments... to the global insurance and reinsurance industries (perhaps one of the purest bets on the optimistic thought experiment).

On the other hand, he says that since civilization underestimates risk, it underprices extremely resilient, defensive assets (like, perhaps, fallout bunkers in remote New Zealand wilderness):

If one does not think about forest fires, then one does not fully understand the teleology of each tree — and one badly will undervalue those trees that are immune to all but the greatest of fires.

Thiel's strategy is framed more around a selfish individual trying to maximize personal return and chances of survival, so maybe a mix of both the above strategies makes sense. But the purpose of effective altruism is to use resources to altruistically do something about the risks. For EA, it is not much use to become rich in the most easygoing of "optimistic thought experiment" utopias, since in utopia there are no extinction-level threats left to spend the money on. Surely it would be madness for EAs to simply cross their fingers and bet on worlds where the AI alignment problem handily solves itself, or where US-China relations grow ever-friendlier.

But surely it's also not worth betting on a complete & certain apocalypse, for the usual anthropic reasons. (An exception might be harm-mitigation groups like ALLFED, which could hope to invest pessimistically and make billions on the eve of a catastrophic war, funding a rapid last-minute scale-up.) Perhaps EAs would be best served by trying to bet on near-apocalypse, or warning-shot catastrophes (like COVID-19) which are indicators that we're living in a world where EA efforts are most relevant? Maybe this leads us to a longtermist version of socially-responsible-investing, where we're not only donating to and working on but also investing in the technologies and institutions that seem most crucial for navigating the "hinge of history"/"time of perils" successfully. But like I said at the beginning, creating the final EA investment synthesis seems above my pay grade. For more info, see the conversation around "mission hedging" and the other posts I linked at the top.

An interesting real-world example of a financial "secular apocalypse" played out in ancient Greek cities besieged by enemy armies. In order to foment rebellion and conflict inside the city, the invaders (who naturally didn't care about the city's existing social order) promised that if they captured the city, they would cancel all debts and free all slaves, starting everyone over again at zero. As a counter-move to retain the loyalty of the citizenry, the leaders of the besieged city were often forced to preemptively give their subjects the same debt forgiveness. ↩︎

"Globalization" is commonly understood to mean increasing interdependence, trade, movement, and economic interdependence between countries, and in my mind it's particularly associated with the 1980s and 1990s. Thiel is using the word in a broader sense, to mean anything throughout history that increases the centralization or correlation of the different parts of the world. IMO he somewhat motte-and-baileys (or "strategically equivocates") from there on out... Thiel reasons that a fully globalized/correlated/centralized world would have a single point of failure for worldwide collapse, then he seems to think "therefore the world shouldn't be globalized (or at least shouldn't be globalized prematurely). But many of the biggest x-risk dangers (pandemics, AI, nuclear war) aren't really reduced by de-globalization in the way that financial collapse or risk of worldwide communist revolution might be. In his essay, Thiel acknowledges that "the realistic alternative to globalization is world war", and mocks the idea of a worldwide anti-globalization movement for being a contradiction in terms. Yet nowadays, 13 years later, Thiel is well-known for being strongly anti-globalist, seeing "one-to-many" process of imitation represented by globalization as being opposed to the "zero-to-one" technological development that can get us out of the Great Stagnation and back to the future. I have difficulty fitting all these parts of Thiel's philosophy together (what makes globalization trade off against innovation??)... to simplify the task of analyzing this essay, I will simply assume that 2008 Peter Thiel was less nationalistic and more classically libertarian. I will also not attempt to connect any of this essay to the ideas of Rene Girard, although in Thiel's mind globalization must surely be part of the process of Girardian imitation that supposedly leads to outbursts of violence. ↩︎

Here's a common and reasonable objection to Bitcoin's valuation: "What's to stop some better coin from coming along in the future and replacing all the current coins?" This could be perfectly true, but if investors see the eventual and complete "cryptoization" of the economy as inevitable, then even a slim chance that Bitcoin, Ethereum, etc. maintain their pole positions is worth piling into. More interestingly, this means that high crypto valuations might not be telling us about Bitcoin in particular, but rather about investors' estimate of the odds of full "cryptoization". Indeed, 13 years after penning "The Optimistic Thought Experiment", Thiel seems to be using these same tools of analysis, saying "Surely the fact that it's at $60,000 is an extremely hopeful sign. ...It's the most honest market we have in the country, and it's telling us that this decrepit regime is just about to blow up." ↩︎
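
To make the arithmetic behind that last footnote concrete, here is a minimal sketch of how one might back out an implied probability of "cryptoization" from a coin's market cap. Everything in it is an assumption for illustration: the function name, the dollar figures, and the deliberately crude model (the coin is worth a fixed amount if cryptoization happens and it keeps its lead, and zero otherwise, with no discounting or risk aversion). None of these numbers come from Thiel's essay.

```python
def implied_cryptoization_probability(market_cap, value_if_cryptoized, p_coin_keeps_lead):
    """Back out P(full cryptoization) from a coin's market cap.

    Hypothetical toy model: price = P(cryptoization) * P(coin keeps lead) * payoff,
    so P(cryptoization) = price / (P(coin keeps lead) * payoff).
    """
    if not 0 < p_coin_keeps_lead <= 1:
        raise ValueError("p_coin_keeps_lead must be in (0, 1]")
    if value_if_cryptoized <= 0:
        raise ValueError("value_if_cryptoized must be positive")
    return market_cap / (value_if_cryptoized * p_coin_keeps_lead)


# Made-up inputs, chosen only to show the shape of the calculation.
market_cap = 1.0e12            # assumed current market cap, in dollars
value_if_cryptoized = 100.0e12  # assumed value of the leading coin in a "cryptoized" economy
p_coin_keeps_lead = 0.10        # assumed slim chance this coin is not displaced

p = implied_cryptoization_probability(market_cap, value_if_cryptoized, p_coin_keeps_lead)
print(f"Implied probability of full cryptoization: {p:.0%}")
```

The point of the sketch is only that the smaller the assumed chance of the coin keeping its lead, the larger the implied bet on cryptoization itself has to be in order to justify the same price.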

jh @ 2021-10-27T11:36 (+11)

Thought-provoking post, thanks Jackson.

You humbly note that creating an 'EA investment synthesis' is above your pay grade. I would add that synthesizing EA investment ideas into a coherent framework is a collective effort that is above any single person's pay grade. Also, that I would love to see more people from higher pay grades, both in EA and outside the community, making serious contributions to this set of issues. For example, top finance or economics researchers or related professionals. Finally, I'd also say that any EA with an altruistic strategy that relates to money (i.e. isn't purely about direct work) has a stake in these issues and could benefit from further research on some of the topics you highlighted. So there's a lot of things to discuss and a lot of reasons to keep the discussion going.