From Comfort Zone to Frontiers of Impact: Pursuing A Late-Career Shift to Existential Risk Reduction

By Jim Chapman @ 2025-03-04T21:28 (+239)

By Jim Chapman, LinkedIn.

TL;DR: In 2023, I was a 57-year-old urban planning consultant and non-profit professional with 30 years of leadership experience. After talking with my son about rationality, effective altruism, and AI risks, I decided to pursue a pivot to existential risk reduction work. The last time I had to apply for a job was in 1994. By the end of 2024, I had spent ~740 hours on courses, conferences, meetings with ~140 people, and 21 job applications. I hope that sharing my experiences offers practical insights, inspiration, and resources to anyone navigating a career transition, especially those later in their careers who are interested in making an impact in similar fields. I share my experience in 6 sections: sparks, take stock, start, do, meta-learnings, and next steps. [Note: as of 03/05/2025, I am still pursuing my career shift.]

Sparks – 2022

During a Saturday bike ride, I admitted to my son, “No, I haven’t heard of effective altruism.” On another ride, I told him, “I'm glad you’re attending the EAGx Berkeley conference." Another time, I said, "Harry Potter and the Methods of Rationality sounds interesting. I'll check it out." While playing table tennis, I asked, "What do you mean ChatGPT can't do math? No calculator? Next token prediction?" Around tax-filing time, I responded, "You really think retirement planning is out the window? That only 1 of 2 artificial intelligence futures occurs: humans flourish in a post-scarcity world, or humans lose?" These conversations intrigued and concerned me.

After many more conversations about rationality, EA, and AI risks, and feeling ready for something new and more impactful, I decided to pivot my career to address my growing concerns about existential risk, particularly AI-related risk. I am very grateful for those conversations, because without them I am highly confident I would not have spent the last year+ doing this.

Take Stock - 2023

I am very concerned about existential risk cause areas in general and AI specifically.

I was getting tired of just being concerned.

In 2023, I was 57 and could retire in a year.

The last time I had to apply for a job was in 1994.

I have:

- degrees in mechanical and civil engineering,

- a 30-year work history related to creating healthy, environmentally sustainable, and equitable communities,

- worked for non-governmental organizations (board vice president, policy analyst, and executive director), a university (co-directing a multi-million-dollar, multi-year research project), and, for the past 20 years, an urban planning consulting firm (managing principal), and

- resources, including a current job (no immediate need to find something), time (due to a reduced workload at that job), a supportive and encouraging family, and money for learning, conferences, and travel.[1]

Start

Over about a year, I developed 4 ideas of how to address my concerns:

- Learn about achieving a career pivot and about impactful cause areas.

- Find a job:

  - directing philanthropic giving, using my research and analytical background, or

  - primarily at AI safety organizations, using my generalist operations experience.

- Create a job, using my leadership experience in a start-up-like environment to help build a new organization.

I tested these ideas in sequence, except for learning, which I did continuously.

Do – 2023 & 2024

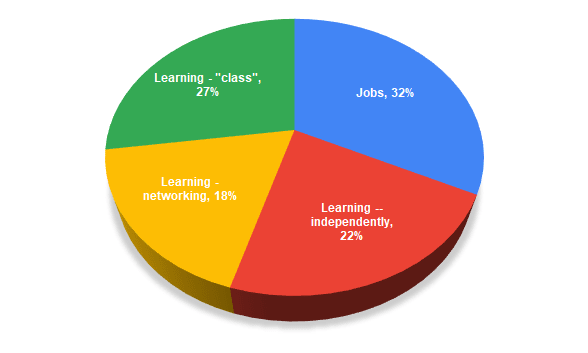

From late 2023 to December 2024, I spent ~740 hours on my late-stage career pivot. About two-thirds of that time went to learning, either with others (27% in classes and 18% networking) or on my own (22%). The remainder (32%) was split between applying for jobs, creating a job, and doing operations-related paid work as a contractor.

| Activity | Type | Count | % of Hours |
| --- | --- | --- | --- |
| Learning – class* | Career coaching | 5 | 11% |
| | Courses | 3 | 16% |
| Learning – networking* | EA conferences | 4 | 9% |
| | 1:1s | 136 | 9% |
| Learning – on my own | Podcasts | 100 | 17% |
| | Papers | 12 | 2% |
| | Video lectures | 15 | 4% |
| Jobs | Applied for | 21 | 11% |
| | Created | 1 | 10% |
| | Contractor | 3 | 11% |

Each row in the table is described next.

Learn

Career Coaching

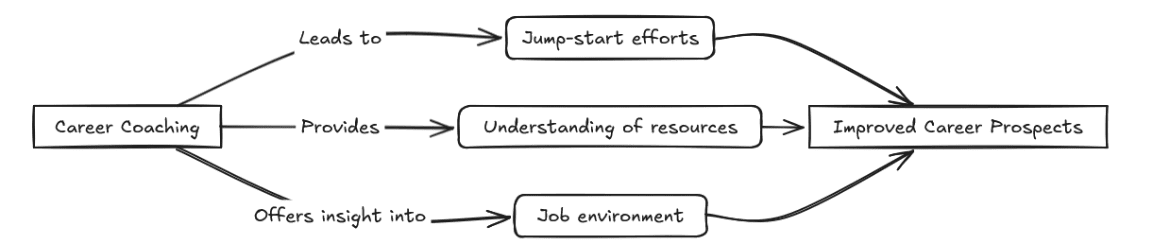

I benefited a great deal from the career coaching services of 4 EA-aligned organizations.[2],[3],[4],[5],[6] The services were free but had to be applied for. They ranged from a single 30-minute conversation to a 6-week course, and from a weekend retreat to ongoing regular contact and assistance. I found all the resources invaluable (e.g., self-paced exercises exploring my values, networking opportunities, webinars with topic experts, job and opportunity postings, referrals to people who were hiring, connections to their alums, and contacts through Slack channels).

But even without all that, I would have benefited just from speaking with someone about my goals and about what I knew and had tried. Everyone I spoke with understood how to search for a more impactful career. I left every interaction with new ideas (e.g., people to talk with, organizations to investigate, classes to take, and conferences to attend) and renewed energy.

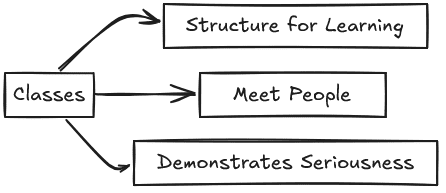

Classes

I learned about other educational resources in many ways: from my coaches, networking, and reading. My approach was to stay open to anything interesting that could help me have more positive impact. I applied for and completed 3 courses covering AI safety[7] and S-risks.[8] I benefited greatly from the thoughtful curation of materials to read, exercises to do, and weekly facilitated small-group discussions. This format held me accountable (I always did the readings because I didn't want to let my cohort down during discussion), tested my comprehension as I explained and discussed the readings with my peers, and created a supportive, welcoming, and encouraging environment for discussing challenging material. These classes also connected me with new networks and with information- and opportunity-sharing spaces, usually on Slack. In addition to all those personal benefits, I was able to add the courses to my resume, signaling that I was serious about acting on my new career goal.

Network

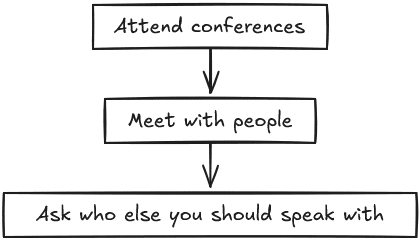

I participated in about 140 1-on-1s. These came from the coaching sessions, the classes, the conferences, and recommendations made by others. On average, they were 20- to 30-minute chats. I don't think I have ever met and talked with that many people in 1 year, even during my first year at university. It was incredibly fun, interesting, educational, supportive, and encouraging to engage with people who shared my interests and came from different parts of the world, stages of life, and perspectives.

At the recommendation of Irina (at Successif), I attended my first EA Global conference (London, 2024). She suggested it for the networking and learning opportunities. It was great and hugely valuable: a fun, efficient, and focused way to learn more about the EA community, critical cause areas in general, and AI specifically, and to meet people. I have since gone to 3 more (EAGx Toronto, EAG Boston, and EAG Bay Area) and lightly participated in a virtual one. At the conferences (where 1-on-1s are prioritized and facilitated), I spoke with every person in an operations role I could find and was encouraged to keep seeking a new role.[9]

On My Own

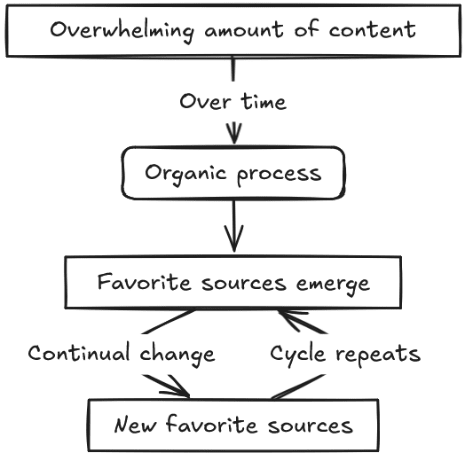

I was quickly overwhelmed by the sheer volume of interesting things to read, listen to, and watch. One thing would lead to the next, but here are the resources that I found I would frequently use:

- My son, who inspired me to learn more.

- Podcasts: many, including 80,000 Hours, The Foresight Institute, Future of Life, AI Safety Fundamentals, AI Safety Fundamentals Alignment, Hard Fork, Dwarkesh, Lex Fridman, and Y Combinator.

- Videos: for excellent explainers on how AI works and on many mathematical concepts, I highly recommend Grant Sanderson's 3Blue1Brown YouTube channel.

- Newsletters (occasionally read, plus others): Zvi Mowshowitz's Don't Worry About the Vase, The Rundown AI, Jack Clark, The EU AI Act.

- Background and trending topics: sources like LessWrong, the EA Forum, and research papers on arXiv.

Get a Job

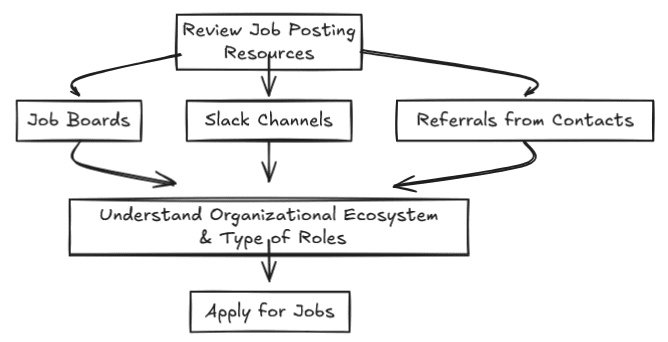

It was hard to start applying for jobs, but it got easier. I used job boards,[10] opportunities posted on the many Slack channels I ended up on, and referrals from people I met. Regularly reviewing job postings helped me better understand the organizational ecosystem[11] and the various roles I might consider. Within the theme of EA, AI, and wanting to do good effectively, I was (am) very open to organizations' various approaches and focus areas.

As of about January 2025, I had applied for and been rejected from 21 jobs. Each rejection was disappointing, but I never hesitated to apply for the next one.

Not all jobs were solely focused on AI safety. I applied for employment as an analyst directing funding at 2 philanthropic organizations. These organizations' good work and strong emphasis on data, analysis, and transparent reasoning resonated with my engineering and research background. Over time, I became more focused on the many AI safety organizations but occasionally applied to other cause areas (e.g., meta-EA, community building, and other existential risk areas).

It took me until about April 2024 to realize I should also look into using the generalist skills I already have. I think it took so long because I assumed that, since I was making such a huge topical shift, little of my past experience would be relevant. It also took me a while to understand the organization and job landscape. In hindsight, this seems silly, but I overcame my self-imposed limitations with the help of many people and resources. I have applied for 19 senior-level positions with titles like chief of operations, head of operations, or chief of staff. All the positions were with EA-aligned organizations, and all but a couple had a direct focus on helping AI go well.

I spent a lot of time updating my resume, getting into the right "apply for a job" mindset,[12] and completing applications. It got easier as time passed, because I had more pre-written material to adapt for new roles. I always ticked the box at the end of the application saying, “Yes, please share my contact information and interests with other hiring organizations.” For the jobs where I advanced to the next hiring stage, it was a pleasant surprise to see that my time was valued. I completed 5 work tests and 1 recorded interview, and I was paid for 4 of the tests (9 hours, $540 in total[13]). The rejection emails I received were kind. Most were brief; one was incredibly thoughtful (Appendix A). Some told me how many other applicants there were (372 for Operations Manager, 130 for Chief of Staff, and 170[14] for Operations Lead), which was disheartening. But it didn't surprise me: many people at EA Global conferences also seemed to be looking for jobs. So, what else could I do? I wanted a job, and so did many other people.

Create a Job

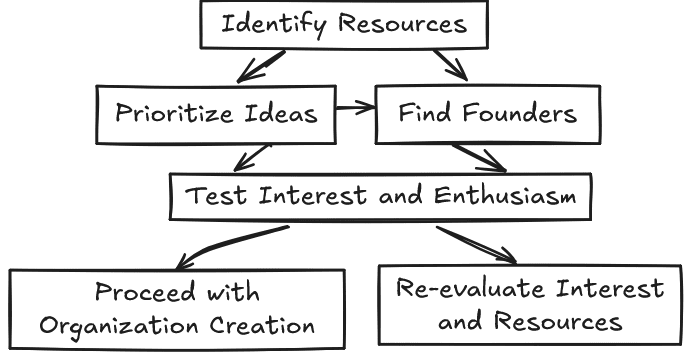

My main effort to create a job resulted from attending a dinner and talk hosted by Catalyze Impact during the London EA Global conference. Their first AI safety incubator program wanted both generalists and AI researchers. I was reluctant to consider starting something new because of the uncertainty of success, the stereotype of founders working crazy hours, and the overall challenge. I was also highly uncertain about my chances of being selected. Nonetheless, I applied, and I was selected! I later learned that the selected cohort was about 10% of roughly 250 applicants.

I was excited and threw myself into the 5-week program (about 15 hours per week). I met with 10 AI researchers who wanted to start a new for-profit or non-profit organization. They ranged from being unsure where to focus to having a few ideas to having worked on a specific research area for a while.

We collaborated on real-world tasks to start a new organization (e.g., idea prioritization, theory of change, market analysis, 1-year plan, founders' agreement, and pitch deck creation). These tasks accomplished useful work and also, importantly, provided an opportunity to get to know each other (structured speed-dating).

I looked forward to each session. Engaging with so many innovative, passionate, and driven people, and with the work tasks, made me realize that I am interested in creating a start-up organization and can see myself doing it. The program was a success for me because of that confirmation, and I continue working with Cadenza Labs. We are refining our strategic plan and seeking philanthropic funding for research initially focused on detecting and benchmarking dishonesty in large language models (LLMs).

Other related activities I have done:

- I listened to the information sessions and presentations provided as part of Apart Research's hackathons. I have not (yet) participated in a hackathon. I would like to.

- I attended Radical Ventures' 2024 AI Founders Masterclass, where speakers shared their experiences building tech-first start-ups and scale-ups.

Contractor

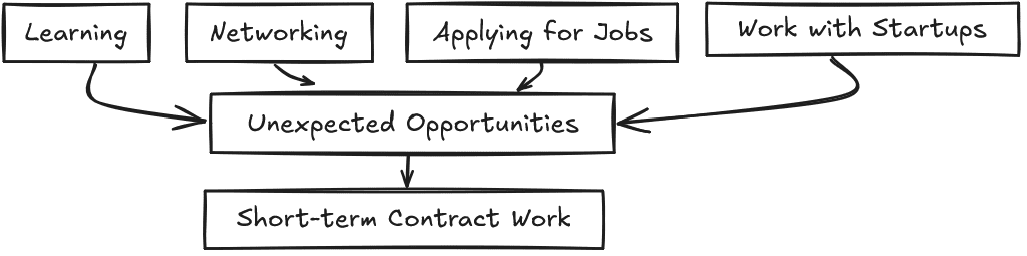

I had reasons for doing each class, conference, or job application, but I couldn't have foreseen what some would lead to, including 2 paid contractor positions.

Before I attended the 2024 EA Global conference in London, the folks at Successif told me about the Centre for Effective Altruism's head of operations job posting. I met with Zach Robinson, CEA CEO, and Howie Oscar, CEA Chief of Staff. Because I had that meeting and subsequently applied for the position, I was known to CEA, and months later I was asked to help with a temporary part-time project to create CEA's first operations budget for 2025 (needed because CEA was spinning out of Effective Ventures). In the end, I was not selected for the operations job, but I was glad to have been able to help, demonstrate my abilities, and make more connections.

As part of Catalyze Impact's AI safety incubator program, I met an AI researcher who needed help with a US government filing. I assisted with that paid task. Another founder asked if I could help develop funding proposals for his current employer. While that has not yet turned into a paid task, it might.

Meta-Learnings

- I still enjoy learning.

- I am not ready to retire.

- I am willing to be uncomfortable and uncertain and try something new and challenging.

- I am willing to put in the work and be someone who does stuff (thank you, Neel Nanda, for writing about that).

- An amazing amount of thoughtful support and resources are available for what I was trying to do. They exist only because of the foresight of the many people who funded, created, and did the work for years. Thank you to everyone who did that.

- I should look for quick, cheap tests that connect me with people. Independent learning and upskilling are essential, but on their own they won't lead to a job offer or a recommendation to speak with someone. I needed to talk to people and ask for help.

- I was glad I widely shared my "origin story" about how I came to explore a career change. I probably had a couple dozen people tell me something like, "oh, I wish my parents were interested, weren't hostile/indifferent, didn't think EA was a cult, were thinking about AI, would take existential risks to heart, would consider making such a big change, etc." I greatly appreciate my relationship with my son.

- What "TL;DR" means, and that it can be both a noun and a verb.

- To be aware of my priors and to test and update them as needed (Bayes' theorem).

Next Steps

- Keep talking with my son.

- Be a resource for anyone attempting a similarly big career pivot. This document is part of that. Feel free to reach out to me on LinkedIn.

- Keep learning, exploring, opening doors, and making connections.

- As a result of the Catalyze Impact incubator, I continue working with Cadenza Labs. I am keeping in touch with some of the other founders who were part of that program.

- Look and apply for more jobs.

- Explore other start-up opportunities.

- Attend more EA Global conferences in 2025.

- Check in with some of the great people I met: learn from their experiences, and try to support and encourage them.

Note – I used Excalidraw to create the flow diagrams.

Appendix A - Helpful Feedback

Below is an email from Cillian Crosson, Executive Director, Tarbell Fellowship,[15] informing me that I was not selected for the Operations Manager / Associate position. I was very grateful for his helpful feedback and resource suggestions.

While we cannot provide detailed feedback due to the volume of applications received, we wanted to share some common characteristics of successful candidates who progressed to the next stage:

- A track record of leading complex projects autonomously with little oversight. Top candidates at this stage often had 3+ years of project management experience and were able to draw upon this in their answers during the automated interview.

- Ability to prioritise & manage high-task volume. Top candidates recognised that it would not be possible to complete all three work tasks in the allocated time and correctly triaged what they should prioritise (e.g. using an Eisenhower Matrix). They also could draw on past experiences thriving in work environments with high task volumes (e.g. working in a small team / start-up environment).

- Strong attention to detail (e.g. identifying most of the major errors in task 3, few mistakes in their copy, fully addressing all aspects of questions in the automated interview and work task).

- Financial & legal fluency (e.g. competency navigating visa processes, as evidenced by their answers to the interview question about a student on an F1 visa).

- Dedication to our mission. Top candidates often had a track record of prioritising reducing risks from advanced AI. They were also able to clearly articulate their passion for our mission during the interview.

We'd like to share some resources that we think many might find helpful for identifying other opportunities, planning their careers, and/or building career capital.

- AI Safety Fundamentals run ~8-week courses in AI Governance and AI Alignment. Their programmes are virtual and completely free. We'd encourage you to sign up if you're interested in learning more about the topic.

- 2025 Tarbell Fellowship. Please complete this short form to be notified when applications for the 2025 Tarbell Fellowship open in September 2025.

- 80,000 Hours Job Board often lists roles for those interested in using their career to reduce risks from AI. Some specific roles listed there that may be of interest:

  - Winter Fellowship 2025 - Centre for the Governance of AI

  - Head of Operations - AI & Democracy Foundation

  - Horizon Fellowship 2025 - Horizon Institute for Public Service

- 80,000 Hours have a lot of valuable guidance on having more social impact with your career. In particular, we often suggest people check out their in-depth process for career planning and apply for their free career advising.

  - Specifically, this career review on journalism and this problem profile on preventing an AI-related catastrophe may be of interest.

- NYU maintains a list of journalism fellowships & annual programmes. Many programmes listed here will likely be suitable for those who applied for the Tarbell Fellowship.

- The International Journalists Network regularly lists opportunities for early-career journalists on their opportunities page.

[1] But I found that many resources are free, pay-what-you-can, or offer financial assistance.

[2] Successif offers a range of services, including coaching, career mentoring, opportunity-matching, and training. They also conduct market research to understand the most impactful jobs of tomorrow and to inform their advising. I applied and was accepted to complete their well-curated set of self-paced readings and exercises exploring my values, cause areas, and how to be more impactful. I took part in information webinars and cohort discussions and had several check-ins with Irina Gueorguiev. As a result, I was referred to multiple jobs and have received help reviewing my job application materials and preparing for interviews.

[3] 80,000 Hours: personalised, impact-focused career advising. I had one 30-minute call, with the option for more. Thank you, Abigail Novick Hoskin.

[4] Consultants for Impact: doing good strategically. I participated in multiple virtual and in-person coaching sessions. Thank you, Emily Dardaman and Sarah Pomeranz. I also took part in a NYC weekend retreat with ~25 people exploring how to make their careers more impactful.

[5] High Impact Professionals: "enabl[ing] working professionals to maximize their positive impact." I completed the Impact Accelerator Program, which helps experienced professionals take action toward high-impact careers through six weeks of facilitated cohort discussions, a global network, and a career framework. Thank you, Nina Friedrich.

[6] AISafety.com’s list of advisors. Note: this is provided as a resource; it includes each of the ones I mentioned, plus several others.

[7] Blue Dot AI Safety Fundamentals courses: Alignment (covering technical AI safety research to reduce risks from advanced AI systems) and Governance (covering a range of policy levers for steering AI development). 12 weeks of readings, exercises, facilitated small-group discussions, and a project.

[8] Center for Reducing Suffering course: an introductory course looking at issues around reduc[ing] severe suffering, taking all sentient beings into account. 6 weeks of readings and facilitated small-group discussions.

[9] Many said, “It seems useful and important for someone with your experience to get involved. Look around this conference hall; the vast majority are young and have less experience than you in managing things.”

[10] 80,000 Hours’ job board. Consultants for Impact’s list of 9 more job boards. High Impact Professionals has a talent board where employers can look for people to hire.

[11] AISafety.com’s map of the “land” of AI existential safety.

[12] To me, this means reflecting on what I have done and what is transferable, and becoming comfortable telling someone I don’t know why I am great, in a non-humble, boastful, but truthful way.

[13] $200 for 3 hours, $50 for 1 hour, $80 for 2 hours, and $210 for 3 hours.

[14] ~20 of which advanced to the work test stage, and I was one of them.

[15] Thank you, Cillian, for letting me share this.

gergo @ 2025-03-05T07:53 (+18)

It was really amazing to read your post, thank you for writing it up. I will make sure to share it with experienced professionals that I talk to! I think you are doing all the right things, so I hope you land a full-time role or start your own org soon!

Jim Chapman @ 2025-05-02T18:59 (+1)

Thank you for reading it and sharing it in your post.

Mitchell Laughlin🔸 @ 2025-03-08T10:08 (+14)

This post was really inspiring, (+1ing gergo) thank you for writing it. I'm glad you exist.

Ben_West🔸 @ 2025-03-13T16:34 (+5)

This was a great post, thanks for writing it up

kevinj @ 2025-03-13T14:45 (+3)

Someone who is 57 years old with decades of specific industry knowledge seems like a perfect candidate for earning to give. Have you considered that route? You can still stay informed on EA and participate in events and groups, while working in your current career.

Mo Putera @ 2025-03-14T09:01 (+4)

The original post implicitly answers this I think — Jim was ready to retire in 2024 from his then-current career, and only changed his mind about retirement because he's worried about x-risk and wanted to do something about it; he also seems to be a doer by disposition (so EtG would be complementary not substitutive to direct work if he did it). I also think mid- to late-career professionals with extensive leadership experience are undersupplied vs early-stage in the movement, although I'm guessing this is slowly shifting. His ops/generalist background highlights his org-building/running/boosting aptitude too, although I'm less sure how undersupplied this still is vs the other aptitudes Holden mentioned.

kevinj @ 2025-03-14T13:48 (+5)

I'd agree that his experience would be useful to many orgs, but staying in his current career and earning to give would have more impact than job searching for year upon year, with lower likelihood of success as he ages - if his ultimate goal is impact.

Jim Chapman @ 2025-04-26T20:09 (+2)

@kevinj I agree that earning to give could result in more impact (more quickly), but at the moment, I don't seem ready to be fully rational or selfless. Some burnout from my existing job and wanting to be personally involved in the work to address my concerns were part of my decision to explore this pivot.

Jim Chapman @ 2025-04-26T20:10 (+1)

Thanks for your comments and the link.

Jim Chapman @ 2025-04-26T20:14 (+1)

Thank you to everyone for taking the time to read my post, comment, and contact me. I have enjoyed meeting several new people during 1-on-1s. I was a bit nervous to share my story as my first post. I am glad people have found it helpful. The reception has been all positive and supportive. This is another example consistent with every new and uncomfortable position I put myself in over the past couple of years -- everyone has welcomed me in, listened to me, shared their experiences, and encouraged me. Thank you.

Ed M @ 2025-04-25T18:33 (+1)

Hey, Jim. Thanks for your article and candor. At one point you note, "It seemed many people at EA Global conferences were also looking for jobs." How does that affect the tenor of the conferences?

Jim Chapman @ 2025-04-26T19:59 (+1)

Not sure what you are thinking about -- but if it is a concern about it all being transactional and everyone focused on just themselves ("must get job"), I didn't feel that. Going to the EA conferences was the best thing to do to better understand the community, feel the positive energy of 200 to 1400+ smart, passionate people working to improve the world, and to talk with people who could help me test my fit to pivot into the space. I feel we are not fully tapping into all the interest out there. So that made me more open to the entrepreneurial route through Catalyze Impact. I am also still applying for jobs.