Good altruistic decision-making as a deep basin of attraction in meme-space

By Owen Cotton-Barratt @ 2021-01-01T17:11 (+31)

Epistemic status: this is a model I've been playing around with that I think captures some important dynamics, but I haven't yet paid much attention to how well it lines up with messy empirics.

In memetic selection, there's an evolutionary benefit to belief systems that include a directive to spread that system of beliefs further. This can attract others to the belief system and support its growth, at least for a while, until the easy growth has been taken or the belief system mutates.

I want to describe such belief systems as "explicit attractors" in meme-space, in the sense of attractors in dynamical systems, although I'm using this as a slightly imprecise metaphor:

- We can't really consider one person's beliefs as a dynamical system, because there is too much dependence upon others' beliefs

- Indeed, it is this dependence upon others' beliefs which provides most of what I want to refer to when I say "attractors"

- If we consider the space of all global beliefs, there could be poles where everyone buys into the same belief system

- If one of these was an attractor, it would follow that if we started in the right basin we would converge towards this global agreement

- I want to be able to refer to a weaker form of "attractor", where being in some proximity to one of these poles exerts pressure towards the pole, while admitting the possibility that the trajectory gets derailed or the pressure is eventually counteracted, without giving up on calling the thing an attractor
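For readers less used to the dynamical-systems vocabulary, here is a minimal toy illustration of an attractor and its basin. It is purely an assumption for exposition, not a model of real belief dynamics: a single number `x` in [0, 1] loosely stands for how far someone has bought into a belief system, and the flow `dx/dt = x(1 - x)(x - boundary)`, the `boundary` value of 0.3, and the step size are all hypothetical choices.

```python
# Toy illustration only: the flow, the boundary value, and the step size are
# hypothetical choices made for exposition, not a model of real belief dynamics.


def step(x: float, boundary: float, dt: float) -> float:
    """One forward-Euler step of dx/dt = x * (1 - x) * (x - boundary)."""
    return x + dt * x * (1.0 - x) * (x - boundary)


def simulate(x0: float, boundary: float = 0.3, dt: float = 0.1, steps: int = 2000) -> float:
    """Return the state after `steps` Euler steps starting from x0 in [0, 1].

    The fixed points are 0, `boundary`, and 1. Starting points above
    `boundary` flow towards the attractor at 1 (the "pole"); starting points
    below it flow back towards 0. `boundary` is the edge of the basin.
    """
    x = x0
    for _ in range(steps):
        x = step(x, boundary, dt)
    return x


if __name__ == "__main__":
    for x0 in (0.05, 0.29, 0.31, 0.9):
        print(f"start {x0:.2f} -> end {simulate(x0):.3f}")
```

Starting points just either side of 0.3 end up near 0 and near 1 respectively. In this toy, a "derailment" corresponds to an external shock that pushes `x` back below the boundary, after which the same flow carries it away from the pole.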

I think for some explicit attractors, the directive to spread the beliefs further is very natural (perhaps it could even be derived as a consequence of other parts of the beliefs); for others it might feel more arbitrary or bolted-on. I guess we should expect the cases where it's relatively natural to be a bit more robust (and hence perhaps more memetically successful): they can't easily lose the attractor property. This is an instance of a self-correction mechanism, which helps to prevent drift.

I think we see another kind of self-correction mechanism in the belief system of science. It provides tools for recognising truth and discarding falsehood, as well as cultural impetus to do so; this leads not just to the propagation of existing scientific beliefs, but to the systematic upgrading of those beliefs; this isn't drift, but going deeper into the well of truth.

It seems to me that, beyond a certain level of sophistication of thinking, good altruistic decision-making has a very natural version of the explicit attractor property: when there's enough self-awareness, the decision-makers will realise that more good altruistic decision-making would be a very good thing on altruistic grounds, so it becomes valuable to spread that perspective. And beyond a certain level of truth-tracking, it will realise that having true beliefs is important in the pursuit of the good, and so also strive to have more true beliefs about which decisions lead to good outcomes. This gives it something like the deep self-correction/improvement mechanism that science has.

I think that this is a very good place to be in terms of memetic fundamentals. There's a lot of texture to what ultimately makes a good meme (what people find attractive, memorable, etc.), and there are important details to be honed there; but it's certainly helpful if your idea set comes with natural drives towards self-propagation and self-improvement.

I believe that this should make us optimistic about the future potential of the idea set around effective altruism: not necessarily under that brand, and not necessarily with particular current beliefs (as they may be proven wrong), but the fundamentals of seeking to help others, and doing this by seeking truer beliefs and building a larger coalition of people bought into the same basic goals. (Indeed, I started this train of thought by wondering "what is the kernel of effective altruism?", in the sense of looking for a minimal memeplex which would be self-propagating and self-improving, and would eventually capture the goods that EA is achieving.)

So what? I think this gives pretty strong reason to favour the spread of truth-seeking self-aware altruistic decision-making (whether or not it's attached to the "EA" brand). In particular, it gives some reason to make sure that we keep all of those elements: altruistic, truth-seeking, and self-aware (rather than dropping one for the sake of expedience).

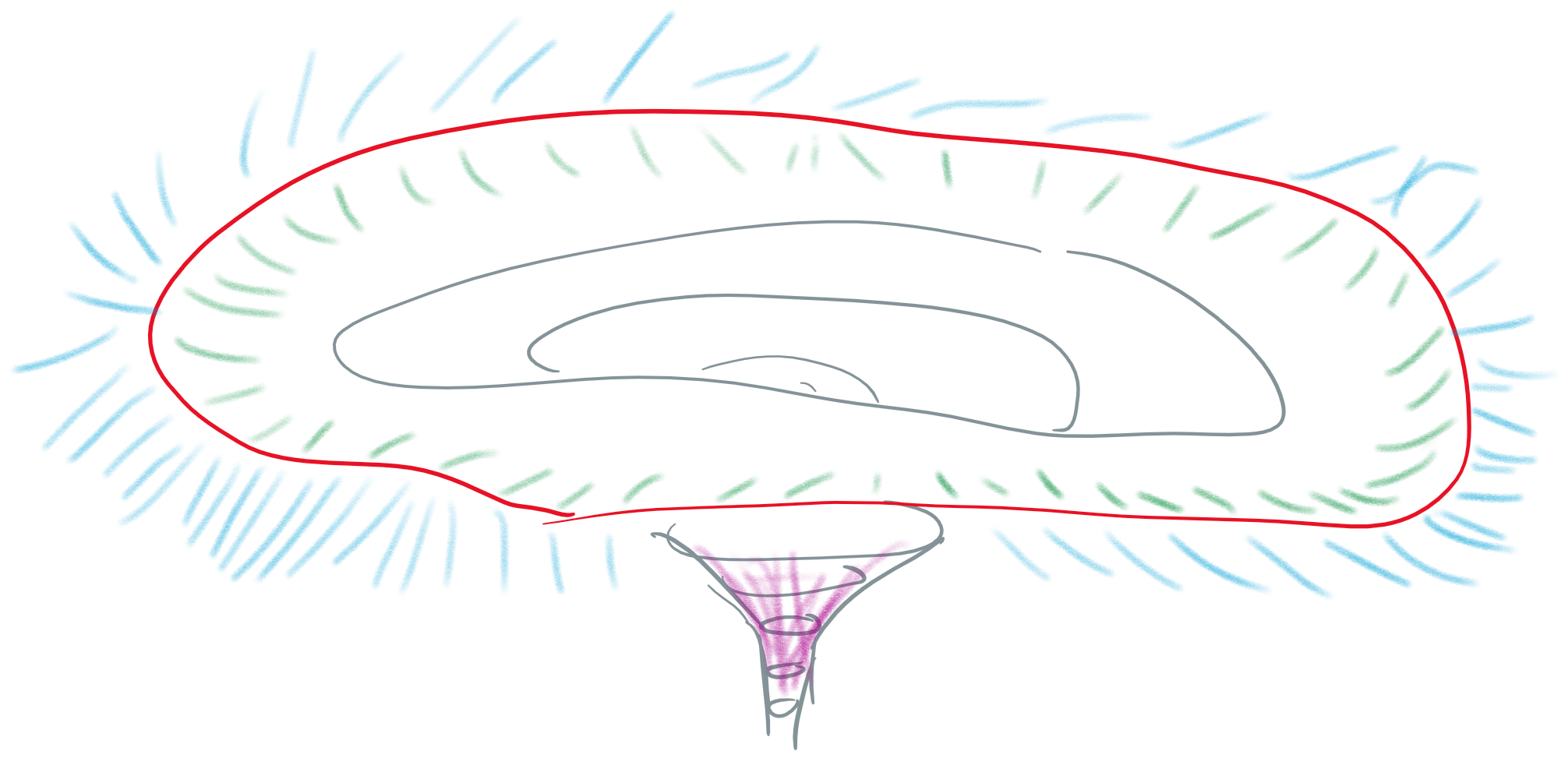

It might then seem particularly important to know when people are on the right side of the boundary: just self-aware and just truth-seeking enough that they will eventually end up descending into the well (on the green side of the red boundary in my sketch).

I think there's something to that, but it's unclear how important the precise location of the boundary is (at least after we have enough people solidly within the basin). Pulling people deeper in could help to accelerate their useful work (e.g. suppose you need to undertake a certain amount of successful truth-seeking before you can make your best altruistic decisions, as denoted by the purple region in the sketch). And if there is a substantial amount of work from people within the basin aiming to bring others in, then moving people in the blue zone just a bit closer to the boundary could be helpful for their subsequent passage over it, even if they don't get there now. (It also seems quite possible that people closer to being in the basin will tend to do more useful work than people further from it, although I'm not sure about that.)

MichaelA @ 2021-01-04T06:43 (+4)

Thanks, I found this post interesting.

> (It also seems quite possible that people closer to being in the basin will tend to do more useful work than people further from it, although I'm not sure about that.)

Is part of why you're not sure about that related to your observation in this post that someone becoming more "strategic" without becoming more "virtuous" could substantially increase the expected harm they do, since it might move them towards working in higher-leverage domains? If so, the idea might be one of the following:

- People might move closer to the basin via becoming more truth-seeking and/or self-aware, but without becoming more altruistic. If so, they might then start acting in higher-leverage domains, but act in overly self-interested, short-sighted, uncooperative, etc. ways, causing problems.

- People might move closer to the basin via becoming more altruistic, but without becoming more truth-seeking or self-aware. Such people might have had a decent chance of making blunders regardless of what domains they worked in. But their increased altruism means those people are more likely to be acting in high-leverage domains or acting in a way perceived as "representative" of some important ideas/movement, so the blunders are now more harmful.

Owen_Cotton-Barratt @ 2021-01-04T09:59 (+4)

Yes, that's the kind of thing I had in the back of my mind as I wrote that.

I guess I actually think:

- On average moving people further into the basin should lead to more useful work

- Probably we can identify some regions/interventions where this is predictably not the case

- It's unclear how common such regions are

kokotajlod @ 2021-01-03T02:32 (+4)

Interesting post! I'm excited to see more thinking about memetics, for reasons sketched here and here. Some thoughts:

--In my words, what you've done is point out that approximate-consequentialism + large-scale preferences is an attractor. People with small-scale preferences (such as just caring about what happens to their village, or their family, or themselves, or a particular business) don't have much to gain by spreading their memeplex to others. And people who aren't anywhere close to being consequentialists might intellectually agree that spreading their memeplex to others would result in their preferences being satisfied to a greater extent, but this isn't particularly likely to motivate them to do it. But people who are approximately consequentialist and who have large-scale preferences will be strongly motivated to spread their memeplex, because doing so is a convergent instrumental goal for people with large-scale preferences. Does this seem like a fair summary to you?

--I guess it leaves out the "truth-seeking" bit, maybe that should be bundled up with consequentialism. But I think that's not super necessary. It's not hard for people to come to believe that spreading their memeplex will be good by their lights; that is, you don't have to be a rationalist to come to believe this. It's pretty obvious.

--I think it's not obvious this is the strongest attractor, in a world full of memetic attractors. Most major religions are memetic attractors, and they often rely on things other than convergent instrumental goals to motivate their members to spread the memeplex. And they've been extremely successful, far more so than "truth-seeking self-aware altruistic decision-making," even though that memeplex has been around for millennia too.

--On the other hand, maybe truth-seeking self-aware altruistic decision-making has actually been even more successful than every major religion and ideology, and we just don't realize it because, as a result of being truth-seeking, the memeplex morphs constantly, and thus isn't recognized as a single memeplex. (By contrast with religions and ideologies, which enforce conformity and dogma and thus maintain obvious continuity over many years and much territory.)

Owen_Cotton-Barratt @ 2021-01-03T14:28 (+3)

> In my words, what you've done is point out that approximate-consequentialism + large-scale preferences is an attractor.

I think that this is a fair summary of my first point (it also needs enough truth-seeking to realise that spreading the approach is valuable). It doesn't really speak to the point about being self-correcting/improving.

I'm not trying to claim that it's obviously the strongest memeplex in the long term. I'm saying that it has some particular strengths (which make me more optimistic than before I was aware of those strengths).

I think another part of my thinking there is that actually quite a lot of people have altruistic preferences already, so it's not like trying to get buy-in for a totally arbitrary goal.

Matt_Lerner @ 2021-01-02T21:37 (+1)

I read this post with a lot of interest; it has started to seem more likely to me lately that spreading productive, resilient norms about decision-making and altruism is a more effective means of improving decisions in the long run than any set of particular institutional structures. The knock-on effects of such a phenomenon would, on a long time scale, seem to dwarf the effects of many other ostensibly effective interventions.

So I get excited about this idea. It seems promising.

But some reflection about what is commonly considered precedent for something like this makes me a little bit more skeptical.

> I think we see another kind of self-correction mechanism in the belief system of science. It provides tools for recognising truth and discarding falsehood, as well as cultural impetus to do so; this leads not just to the propagation of existing scientific beliefs, but to the systematic upgrading of those beliefs; this isn't drift, but going deeper into the well of truth.

I have a sense that a large part of the success of scientific norms comes down to their utility being immediately visible. Children can conduct and repeat simple experiments (e.g. baking soda volcano); undergraduates can repeat famous projects with the same results (e.g. the double slit experiment), and even non-experimentalists can see the logic at the core of contemporary theory (e.g. in middle school geometry, or at the upper level in real analysis). What's more, the norms seem to be cemented most effectively by precisely this kind of training, and not to spread freely without direct inculcation: scientific thinking is widespread among the trained, and (anecdotally) not so common among the untrained. For many Western non-scientists, science is just another source of formal authority, not a process that derives legitimacy from its robust efficacy.

I can see a way to broaden scientific norms to include what you've characterized as "truth-seeking self-aware altruistic decision-making." But I'm having trouble imagining how it could be self-propagating. It would seem, at the very least, to require active cultivation in exactly the way that scientific norms do; in other words, it would require a lot of infrastructure and investment so that proto-truth-seeking-altruists can see the value of the norms. Or perhaps I am having a semantic confusion: is science self-propagating in that scientists, once cultivated, go on to cultivate others?

Owen_Cotton-Barratt @ 2021-01-03T14:32 (+2)

> I have a sense that a large part of the success of scientific norms comes down to their utility being immediately visible.

I agree with this. I don't think science has the attractor property I was discussing, but it has this other attraction of being visibly useful (which is even better). I was trying to use science as an example of the self-correction mechanism.

> Or perhaps I am having a semantic confusion: is science self-propagating in that scientists, once cultivated, go on to cultivate others?

Yes, this is the sense of self-propagating that I intended.

Will Kirkpatrick @ 2021-01-01T20:53 (+1)

Thanks for sharing your thoughts.

I don't know if altruistic, truth-seeking, and self-aware are all necessary requirements, though. It seems very likely to me that we're never going to be able to convince the vast majority of people to have the excited attitude about EA that most of us do now. Maybe the right focus for an "altruism" meme like this should be on spreading the first two, altruistic and truth-seeking.

Self-awareness seems almost contrary to the idea of a meme like this, given that it relies on spreading without too much questioning. Ideas with altruistic frameworks have done well in the past, e.g. the ALS ice bucket challenge, but I don't know how you would go about including a second idea into the existing matrix of a meme like that.

A scientific approach to memetics, I love our weird ideas!

Keep up the good posts Owen!

Owen_Cotton-Barratt @ 2021-01-01T20:59 (+4)

Why does it need to rely on spreading without too much questioning?

(BTW I'm using "meme" in the original general sense not the more specific "internet meme" usage; was that obvious enough?)