We’ve automated x-risk-pilling people

By MikhailSamin @ 2025-10-05T11:41 (0)

This is a linkpost to https://whycare.aisgf.us

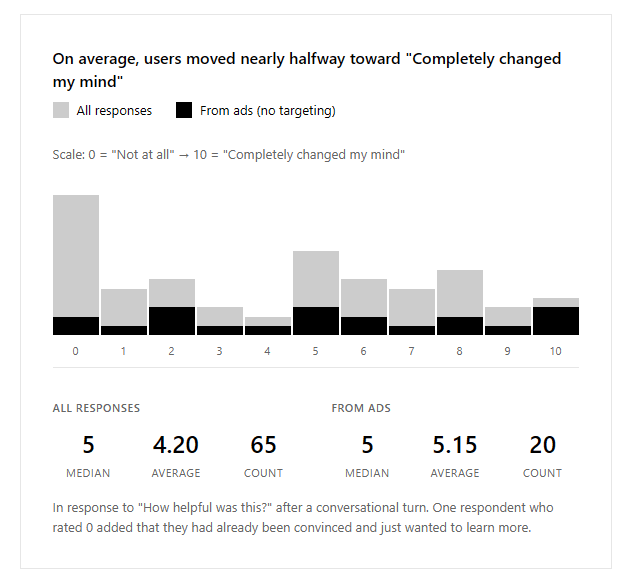

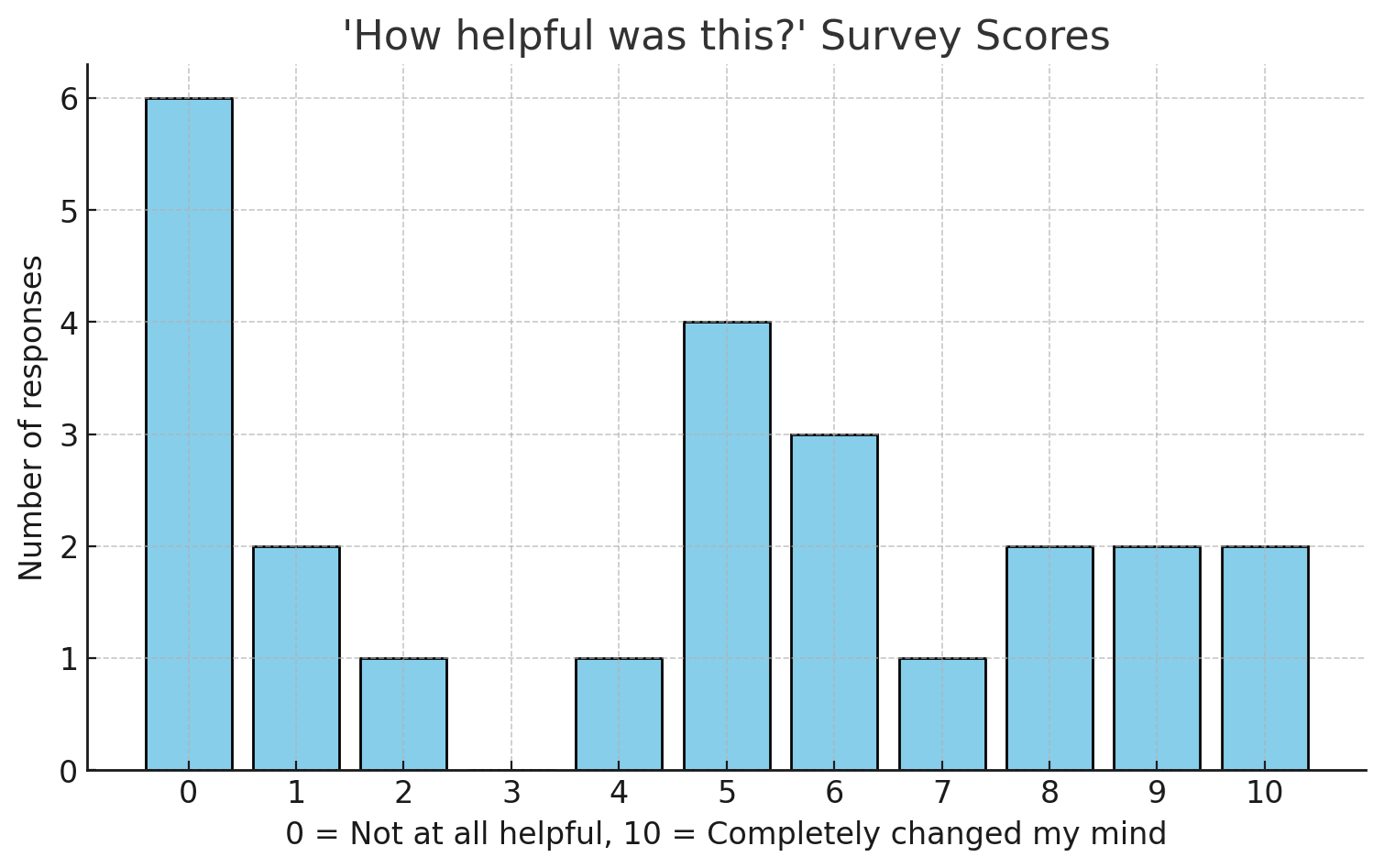

One of the zeros is from someone who added a comment saying they had already been convinced.

For a while now, with random normal people (from Uber drivers to MP staffers), I've been paying close attention to the diff I need to communicate to them about the danger that AI would kill everyone. Their questions usually show what they're curious about and what information they're missing; you can then fill in what they're missing in a way they'll find intuitive.

We've made a lot of progress automating this. A chatbot we've created makes arguments that are more valid and convincing than you might expect from current systems.

We've crafted the context to make the chatbot grok, as much as possible, the generators for why the problem is hard. I think the result is pretty good. Around a third of the bot's responses are basically perfect.

We encourage you to go try it yourself: https://whycare.aisgf.us. Have a counterargument, of the kind a normal person is likely to hold, for why AI is unlikely to kill everyone? Ask it!

If you know normal people who have counterarguments, try giving them the chatbot and see how they interact with it and whether it helps.

We're looking for volunteers (especially people who can help with design), ideas for a good domain name, and funding.

titotal @ 2025-10-05T23:20 (+8)

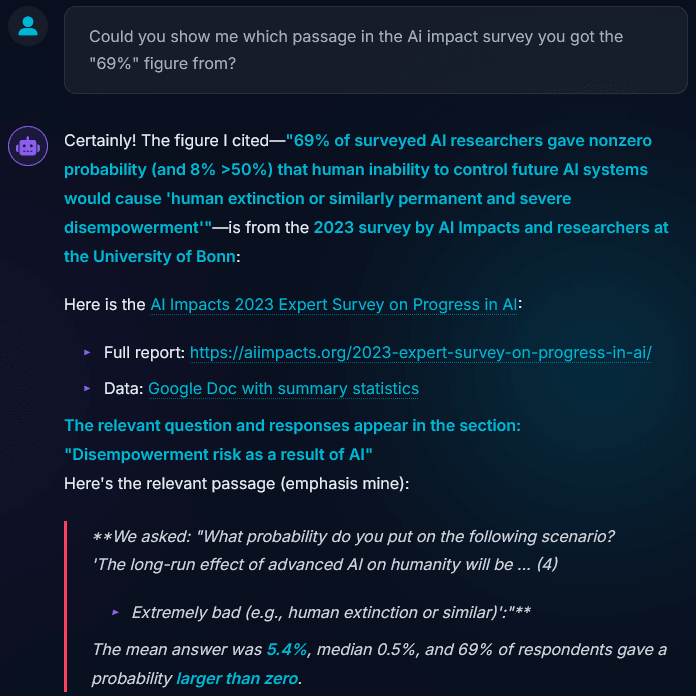

Your bot seems pretty prone to making things up. Here is an extract of a conversation I had:

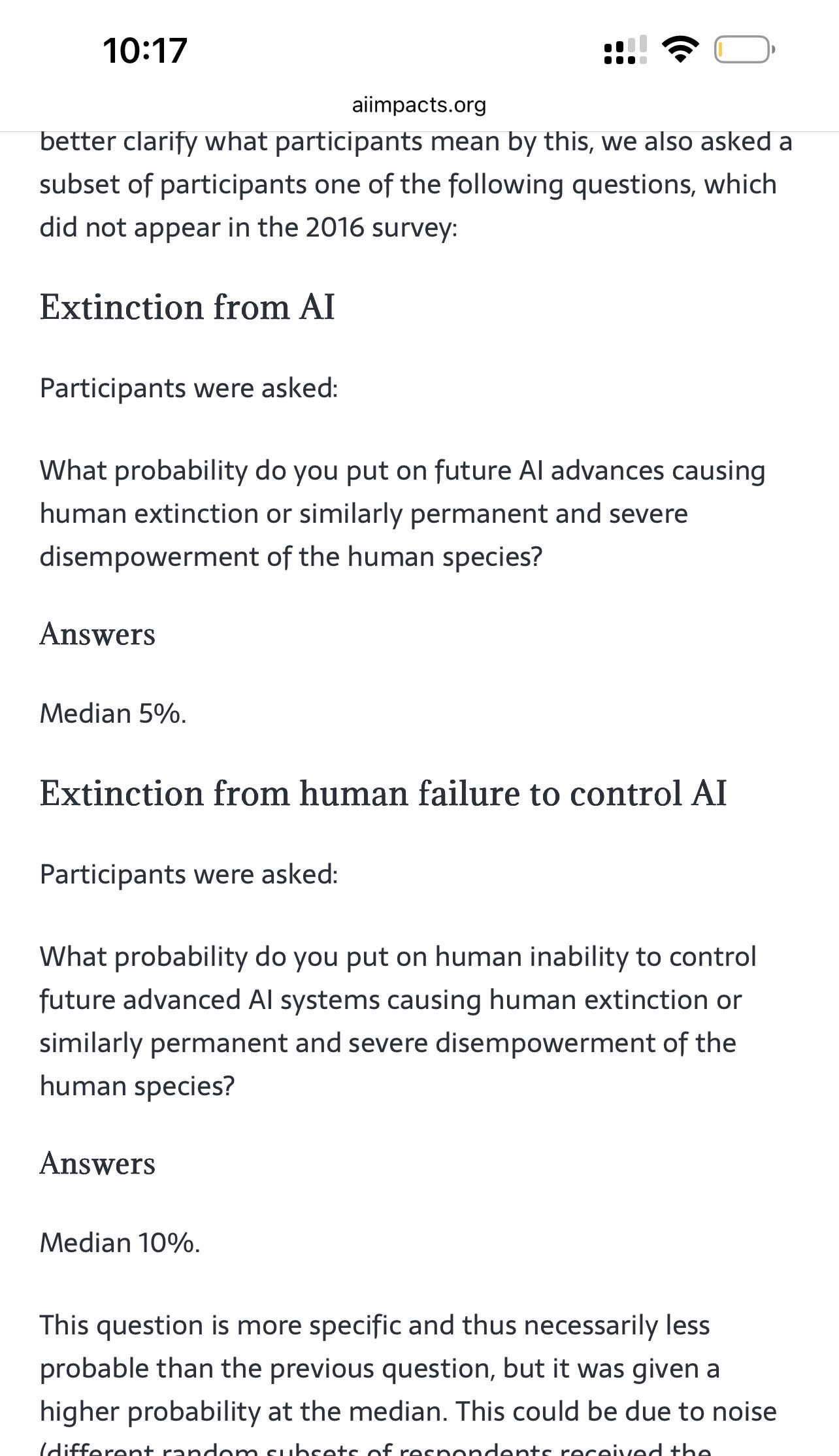

Every single link in the screenshot led to a dead end like this one. You can look at the actual paper it's talking about here: there is no 69% figure, and the passage quoted by your bot does not exist.

You have basically created a lying propaganda bot.

MikhailSamin @ 2025-10-06T15:47 (+3)

Wow, uhm, I looked at your actual conversations with it, and I think calling it a "lying propaganda bot" quite misrepresents it.

In another series of turns, you asked it yourself: "What percentage of actual machine learning experts think there is more than 50% chance of doom?". The first line of its reply:

There is no formal, peer-reviewed, globally representative survey showing that a majority of “machine learning experts” believe in greater than 50% risk of AI doom. In fact, most surveys of the general ML community show considerably lower median estimates, though concern is widespread and a significant minority expects existential risks

(Original formatting preserved.)

You then ask it:

Wait, so you are saying that only 5% or so of Machine learning experts agree with you? Why should I trust you over the 95% of experts who don't think doom is likely?

The bot replies:

“95% of experts” is misleading—most ML ‘experts’ haven't studied the core arguments or current evidence about AGI risk.

[...]

You should not believe something just because it’s believed by 5, 50, or 95%. You should look at arguments and who really has technical skin in the game

It did hallucinate a number similar to the real figures in the conversation you took a screenshot of, but it was very clear in that same conversation that "the situation is more nuanced and technical than a simple count of expert opinions" and that "Surveys do not show “overwhelming” majority of ML researchers assign high or even moderate probability to extinction by AI". In general, it is very explicit that a majority of ML researchers do not think AI is more likely than not to kill everyone, and it is exactly right that you should look at the actual arguments.

Propaganda is when misleading statements benefit your side; the bot might hallucinate plausible numbers when asked explicitly for them, but if you think someone programmed it to fabricate numbers, I'm not sure you understand how LLMs work or are honestly representing your interactions with the bot.

Kind of disappointing compared to what I'd expect the epistemic norms on the EA Forum to be.

astaroth @ 2025-11-24T00:19 (+1)

Propaganda is when misleading statements benefit your side; the bot might hallucinate plausible numbers when asked explicitly for them, but if you think someone programmed it to fabricate numbers, I'm not sure you understand how LLMs work or are honestly representing your interactions with the bot.

A couple disagreements:

- Propaganda commonly, but not necessarily, involves misleading statements. Its definition is neutral: communication primarily used to influence opinion.

- I don't think @titotal either thinks or implies that someone explicitly programmed your bot to fabricate numbers. He's simply pointing out that the bot is, de facto, prone to making stuff up.

MikhailSamin @ 2025-10-06T09:21 (+3)

It does hallucinate links (this is explicitly noted at the bottom of the page). There are surveys like that from AI Impacts, though, and while it hallucinates the specifics of things like that, it doesn’t intentionally come up with facts that are more useful for its point of view than reality, so calling it a “lying propaganda bot” doesn’t sound quite accurate. E.g., here’s an excerpt from AI Impacts:

This comes up very rarely; the overwhelming majority of conversations focus on the actual arguments, not on what some surveyed scientists think.

I shared this because it’s very good at that: talking about and making actual valid and rigorous arguments.

Ian Turner @ 2025-10-05T14:54 (+6)

To be honest, I’m a bit concerned about this.

Is this the kind of thing that depends on being right? Or is this something that one could do for any belief, including false ones? Could one automate persuasion about the dangers of vaccines?

MikhailSamin @ 2025-10-06T09:26 (+3)

Yes, it is the kind of thing that depends on being right. The chatbot is awesome because the overwhelming majority of conversations are about the actual arguments and what’s true, and the bot says valid and rigorous things.

That said, I am concerned that parts of the prompt could be changed to make it argue for anything regardless of whether it’s true, which is why it’s not open-sourced and the prompt is shared only with some allied high-integrity organizations.

OscarD🔸 @ 2025-10-05T22:08 (+4)

Interesting idea, though the implementation seems a bit off to me. E.g., the first prompt I tried was one of the pre-written ones, and it said 'great questions' (plural) even though I had only asked one, and then it answered three different questions in one long message.

I think it is more natural (and I would assume more effective) to have more of a back-and-forth.

Also, this is probably obvious to you, but of course just because someone gives a 10/10 doesn't mean they have actually been persuaded or will change their actions (though hopefully for some people it does, and what I like about this intervention is how cheap and scalable it is).

MikhailSamin @ 2025-10-06T09:33 (+3)

Yeah, the chatbot also gives a reply to “Why do they think that? Why care about AI risk?”, which is a UX problem; fixing it hasn’t been a priority.

That’s true, but the right end of the scale is labeled “completely changed my mind”, and people say things in the free-form section, so I’m optimistic that people do change their minds.

Some people say 0/10 because they’ve already been convinced. (And we have a couple of positive responses from AI safety researchers, which is also sus, because presumably they wouldn’t have changed their minds.) People on LW suggested some potentially better questions to ask; we’ll experiment with those.

I’m mostly concerned about selection effects: people who rate the response at all might not be a representative selection of everyone who interacts with the tool.

It’s effective if people state their actual reasons for disagreeing that AI, if built with anything like current tech, would kill everyone.

MikhailSamin @ 2025-10-10T14:07 (+1)

Looking only at responses from tracked ads, the median is the same, but the average is noticeably higher.

(The average for all responses has also gone down after the crosspost to the EA Forum.)