GWWC's 2020–2022 Impact evaluation (executive summary)

By Michael Townsend🔸, Sjir Hoeijmakers🔸, Giving What We Can🔸 @ 2023-03-31T07:34 (+181)

This is a linkpost to https://www.givingwhatwecan.org/impact

Giving What We Can (GWWC) is on a mission to create a world in which giving effectively and significantly is a cultural norm. Our research recommendations and donation platform help people find and donate to effective charities, and our community — in particular, our pledgers — help foster a culture that inspires others to give.

In this impact evaluation, we examine GWWC's[1] cost-effectiveness from 2020 to 2022 in terms of how much money is directed to highly effective charities due to our work.

We have several reasons for doing this:

- To provide potential donors with information about our past cost-effectiveness.

- To hold ourselves accountable and ensure that our activities are providing enough value to others.

- To determine which of our activities are most successful, so we can make more informed strategic decisions about where we should focus our efforts.

- To provide an example impact evaluation framework which other effective giving organisations can draw from for their own evaluations.

This evaluation reflects two months of work by the GWWC research team, including conducting multiple surveys and analysing the data in our existing database. There are several limitations to our approach — some of which we discuss below. We did not aim for a comprehensive or “academically” correct answer to the question of “What is Giving What We Can’s impact?” Rather, in our analyses we are aiming for usefulness, justifiability, and transparency: we aim to practise what we preach and for this evaluation to meet the same standards of cost-effectiveness as we have for our other activities.

Below, we share our key results, some guidance and caveats on how to interpret them, and our own takeaways from this evaluation. GWWC has historically derived a lot of value from our community’s feedback and input, so we invite readers to share any comments or takeaways they may have on the basis of reviewing this evaluation and its results, either by directly commenting or by reaching out to sjir@givingwhatwecan.org.

Key results

Our primary goal was to identify our overall cost-effectiveness as a giving multiplier: the ratio of our net benefits (additional money directed to highly effective charities, accounting for the opportunity costs of GWWC staff) to our operating costs.[2]
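The definition above comes down to a one-line calculation. The sketch below is a minimal illustration. It assumes net benefits are simply the counterfactual donations caused minus the opportunity cost of staff. The function name and all input figures are hypothetical and are not taken from the working sheet.

```python
def giving_multiplier(donations_caused: float,
                      staff_opportunity_cost: float,
                      operating_costs: float) -> float:
    """Net benefits divided by operating costs (hypothetical helper).

    donations_caused: extra money directed to highly effective charities
        because the organisation existed (counterfactual-adjusted).
    staff_opportunity_cost: value staff would plausibly have generated
        in their next-best roles.
    operating_costs: what it cost to run the organisation.
    """
    if operating_costs <= 0:
        raise ValueError("operating_costs must be positive")
    net_benefit = donations_caused - staff_opportunity_cost
    return net_benefit / operating_costs


# Hypothetical inputs in 2022-equivalent USD, chosen only to illustrate a 30x result.
print(giving_multiplier(31_000_000, 1_000_000, 1_000_000))  # 30.0
```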

We estimate our giving multiplier for 2020–2022 is 30x, and that we counterfactually generated $62 million of value for highly effective charities.

We were also particularly interested in the average lifetime value that GWWC contributes per pledge, as this can inform our future priorities.

We estimate we counterfactually generate $22,000 of value for highly effective charities per GWWC Pledge, and $2,000 per Trial Pledge.

We used these estimates to help inform our answer to the following question: In 2020–2022, did we generate more value through our pledges or through our non-pledge work?

We estimate that pledgers donated $26 million in 2020–2022 because of GWWC. We also estimate GWWC will have caused $83 million of value from the new pledges taken in 2020–2022.[3]

We estimate GWWC caused $19 million in donations to highly effective charities from non-pledge donors in 2020–2022.
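Footnote 3 explains that the two pledge figures above are blended rather than added. Here is a minimal sketch of that blending, assuming a simple linear weighted average. The weight is purely illustrative, because this summary does not state the weight the full report uses.

```python
def blended_pledge_value(retrospective: float,
                         prospective: float,
                         weight_on_retrospective: float) -> float:
    """Weighted average of two pledge-value approaches (not their sum).

    retrospective: donations existing pledgers made in the period because of GWWC.
    prospective: lifetime value expected from new pledges taken in the period.
    weight_on_retrospective: illustrative weight between 0 and 1.
    """
    if not 0.0 <= weight_on_retrospective <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    w = weight_on_retrospective
    return w * retrospective + (1 - w) * prospective


# Headline figures from this summary, in millions of USD; 0.5 is a made-up weight.
print(blended_pledge_value(26, 83, 0.5))  # 54.5
```

Adding the two figures would count much of the same giving twice. A weighted average keeps the result on the scale of a single approach. The $62 million headline figure also nets out staff opportunity costs, so this sketch alone cannot reconstruct it.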

These key results are arrived at through dozens of constituent estimates, many of which are independently interesting and inform our takeaways below. We also provide alternative conservative estimates for each of our best-guess estimates.

How to interpret our results

This section provides several high-level caveats to help readers better understand what the results of our impact evaluation do and don’t communicate about our impact.

We generally looked at average rather than marginal cost-effectiveness

Most of our models are estimating our average cost-effectiveness: in other words, we are dividing all of our benefits by all of our costs. We expect that this will not be directly indicative of our marginal cost-effectiveness — the benefits generated by each extra dollar we spend — and that our marginal cost-effectiveness will be considerably lower for reasons of diminishing returns.
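To see why average and marginal cost-effectiveness can diverge, consider a toy model that assumes (hypothetically) benefits grow with the square root of spending. The curve and its scale factor `K` are made up for illustration. With this particular shape, the marginal multiplier is always half the average one.

```python
from math import sqrt

K = 30_000  # hypothetical scale factor for the toy benefit curve


def benefit(spend: float) -> float:
    """Toy diminishing-returns curve: benefits grow with the square root of spend."""
    return K * sqrt(spend)


def average_multiplier(spend: float) -> float:
    """All benefits divided by all costs."""
    return benefit(spend) / spend


def marginal_multiplier(spend: float, step: float = 1.0) -> float:
    """Extra benefit per dollar from the next `step` dollars of spending."""
    return (benefit(spend + step) - benefit(spend)) / step


spend = 1_000_000
print(average_multiplier(spend))             # 30.0
print(round(marginal_multiplier(spend), 2))  # 15.0
```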

We try to account for the counterfactual

This evaluation reports on the value generated by GWWC specifically. To do this, we estimate the difference between what has happened given that GWWC existed and our best guess of what would have happened had we never existed (the “counterfactual” scenario we are considering).

We did not model our indirect impact

For the purpose of this impact evaluation, we focused on Giving What We Can as a giving multiplier. Our models assumed our only value was in increasing the amount of donations going to highly effective charities or funds. While this is core to our theory of impact, it ignores our indirect impact (for example, improving and growing the effective altruism community), which is another important part of that theory.

Our analysis is retrospective

Our cost-effectiveness models are retrospective, whereas our team, strategy, and the world as a whole shift over time. For example, in our plans for 2023 we focus on building infrastructure for long-term growth and supporting the broader effective giving ecosystem. We think such work is less likely to pay off in terms of short-term direct impact, so we expect our giving multiplier to be somewhat lower in 2023 than it was in 2020–2022.

A large part of our analysis is based on self-reported data

To arrive at our estimates, we rely a lot on self-reported data, and the usual caveats for using self-reported data apply. We acknowledge and try to account for the associated risks of biases throughout the report — but we think it is worth keeping this in mind as a general limitation as well.

The way we account for uncertainty has strong limitations

We arrived at our best-guess and conservative multiplier estimates by using all of our individual best-guess and conservative input estimates in our models, respectively. Among other things, this means that our overall conservative estimates underestimate our impact, as they rely on many separate conservative inputs being correct at the same time, which is highly unlikely.
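A small calculation makes the point about combining conservative inputs concrete. For simplicity, the sketch below assumes the model multiplies independent, lognormally distributed inputs and that each conservative input sits at its own 20th percentile. Real models also add and subtract terms, and the spreads (`log_sds`) used here are hypothetical. The function returns the probability that the true product falls below the product of those conservative inputs.

```python
from math import sqrt
from statistics import NormalDist


def prob_below_combined_conservative(log_sds: list[float], quantile: float = 0.2) -> float:
    """Chance that the true product of independent lognormal inputs falls
    below the product of each input's own `quantile` estimate.

    log_sds: standard deviation of log(input) for each input (assumed values).
    """
    z = NormalDist().inv_cdf(quantile)  # about -0.84 for the 20th percentile
    # Multiplying per-input quantiles adds their offsets in log space: z * sum(sd).
    combined_offset = z * sum(log_sds)
    # The log of the true product is normal with sd = sqrt(sum(sd^2)).
    true_sd = sqrt(sum(sd * sd for sd in log_sds))
    return NormalDist().cdf(combined_offset / true_sd)


for n in (1, 4, 10):
    print(n, round(prob_below_combined_conservative([0.5] * n), 3))
```

With four inputs of equal spread, this probability is already below 5%. With ten, it is below 1%. A figure built entirely from conservative inputs therefore sits well below a "20% chance of being lower" estimate.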

We treated large donors’ donations differently

For various reasons, we decided to treat large donors differently in our analysis, including by fully excluding our top 10 largest GWWC Pledge donors from our model estimating the value of a new GWWC Pledge. We think this causes our impact estimates to err slightly conservatively.

We made many simplifying assumptions

Our models are sensitive to an array of simplifying assumptions people could disagree with. For instance, for pragmatic reasons we categorised recipient charities into only two groups: charities where we are relatively confident they are “highly effective,” and charities where we aren’t.

We documented our approach, data, and decisions

In line with our aims of transparency and justifiability, we did our best to record all relevant methodology, data, and decisions, and to share what we could in our full report, working sheet, and survey documentation. We invite readers to reach out with any requests for further information, which we will aim to fulfil insofar as we can, taking into account practicality and data privacy considerations.

Our takeaways

Below is a selection of our takeaways from this impact evaluation, including implications that could potentially result in concrete changes to our strategy. Please note that the implications — in most cases — only represent updates on our views in a certain strategic direction, and may not represent our all-things-considered view on the subject in question. As mentioned above, we invite readers who have comments or suggestions for further useful takeaways to reach out.

Our giving multiplier robustly exceeds 9x

- Our conservative estimate for our multiplier — which combines multiple conservative inputs — is 9x, suggesting that every $1 spent on GWWC in 2020–2022 is highly likely to have caused more than $9 to go to highly effective charities — and our best guess is much higher, at $30.

- This doesn’t imply funding GWWC on the margin will always yield such a high multiplier, but it does provide a strong case for scaling up some of GWWC’s most cost-effective current activities — or at least for making sure we are able to keep doing what we do well.

New GWWC Pledges likely account for most of our impact

- We estimate we generated 1.5-4.5x[4] more value through the GWWC Pledge than through non-pledge donations.

- This can inform our work going forward — for example, on our marketing strategy and on whether we choose to support other effective giving organisations with pledge-related products.
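As a rough check on the 1.5–4.5x range, the ratios can be recomputed from the rounded headline figures in the key results. This is back-of-envelope arithmetic only. The endpoints in the text presumably rest on unrounded figures from the full report, so the results land near the stated range rather than exactly on it.

```python
# Headline figures from the key results above, in millions of 2022 USD.
new_pledge_value = 83         # value expected from pledges taken in 2020–2022
existing_pledger_giving = 26  # 2020–2022 donations by pledgers caused by GWWC
non_pledge_giving = 19        # 2020–2022 non-pledge donations caused by GWWC

prospective_ratio = new_pledge_value / non_pledge_giving
retrospective_ratio = existing_pledger_giving / non_pledge_giving
print(f"{retrospective_ratio:.1f}x to {prospective_ratio:.1f}x")  # 1.4x to 4.4x
```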

We found an increase in recorded GWWC Pledge donations with time

- One of our most surprising findings was that the average recorded yearly donations of GWWC Pledge donors seems to increase with Pledge age rather than decrease: the increase in average donations among the Pledge donors who keep recording their giving more than compensates for any drop-out of Pledge donors. We have several hypotheses for why this may be and are not confident it will replicate in future evaluations.

- This is weak evidence in favour of promoting pledges among younger audiences with high earning potential, as retention is less of an issue than one might expect.
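The finding above rests on a simple decomposition. If non-recorders are counted as giving $0, average giving per pledger equals the share who record donations times the average among those who do. A minimal sketch with hypothetical numbers (not GWWC data) shows how a falling reporting rate can coexist with rising average giving:

```python
def average_per_pledger(reporting_rate: float, mean_if_reporting: float) -> float:
    """Average recorded giving per pledger, counting non-recorders as giving $0."""
    return reporting_rate * mean_if_reporting


# Hypothetical cohort: fewer members record donations in a later year,
# but those who do record give more.
earlier = average_per_pledger(0.60, 5_000)
later = average_per_pledger(0.45, 7_000)
print(round(earlier), round(later))  # 3000 3150
```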

A small but significant percentage (~9%) of our Trial Pledgers have gone on to take the GWWC Pledge, and this plausibly[5] represents the bulk of the value we add through the Trial Pledge

- This is evidence that our Trial Pledge is working as a step towards the GWWC Pledge, but there is also room to improve.

- Given our $22,000 estimate of the value we cause per GWWC Pledge, and the fact that we see a similar number of Trial Pledges and GWWC Pledges each year, this suggests there is value in experimenting with activities that could increase the proportion of Trial Pledgers who take the GWWC Pledge.
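Here is a back-of-envelope version of this reasoning. Like footnote 5, it assumes that Trial Pledgers who later take the GWWC Pledge do so because of the Trial Pledge. The ~9% conversion rate and the $22,000 per-Pledge figure come from this summary. The cohort of 1,000 Trial Pledgers is hypothetical.

```python
VALUE_PER_GWWC_PLEDGE = 22_000  # best-guess estimate from the key results
trial_conversion_rate = 0.09    # ~9% of Trial Pledgers later take the GWWC Pledge

# Value a Trial Pledge adds through conversion alone.
conversion_value = trial_conversion_rate * VALUE_PER_GWWC_PLEDGE
print(round(conversion_value))  # 1980

# Hypothetical: 1,000 Trial Pledgers, conversion raised by one percentage point.
extra_value = 1_000 * 0.01 * VALUE_PER_GWWC_PLEDGE
print(round(extra_value))  # 220000
```

Nine percent of $22,000 comes to about $1,980. That is close to the $2,000 estimated per Trial Pledge, which is consistent with conversion accounting for the bulk of a Trial Pledge's value.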

The vast majority of our donors give to charities that we expect are highly effective

- We estimate that more than 70% of all pledge and non-pledge donations we recorded went to organisations that would meet the criteria to be one of our top-rated charities and funds at the time.

- This suggests a lot of our value comes from highlighting and facilitating donations to these charities and funds in particular, which is a relevant consideration for — among other things — updating our inclusion criteria.

Our donations follow a heavy-tailed distribution

- Less than 1% of our donors account for 50% of our recorded donations. This amounts to dozens of people, while the next 40% of donations (from both pledge donors and non-pledge donors) is distributed among hundreds. This suggests that most of our impact comes from a small-to-medium-size group of large donors (rather than from a very small group of very large donors, or from a large group of small donors).[6]

- This information can be relevant in deciding which groups we could put extra resources into reaching to increase our giving multiplier — though it’s worth noting that our short-term giving multiplier isn’t our only consideration for deciding which groups we target.
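A statistic like "fewer than 1% of donors give 50% of donations" comes from ranking donors by amount and counting how many it takes to reach half the total. Here is a minimal sketch with a hypothetical function name and made-up donation data:

```python
def share_of_donors_reaching(donations: list[float], target_share: float = 0.5) -> float:
    """Smallest fraction of donors whose combined giving reaches target_share of the total."""
    if not donations:
        raise ValueError("need at least one donation")
    ranked = sorted(donations, reverse=True)  # largest donors first
    threshold = target_share * sum(ranked)
    running = 0.0
    for count, amount in enumerate(ranked, start=1):
        running += amount
        if running >= threshold:
            return count / len(ranked)
    return 1.0


# Made-up data: two large donors and 798 donors giving $1,000 each.
donations = [500_000, 300_000] + [1_000] * 798
print(share_of_donors_reaching(donations))  # 0.0025
```

In this example, two of 800 donors (0.25%) account for just over half of the total.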

Nearly 60% of our donors’ recorded donations go to the cause area of improving human wellbeing

- For the remaining cause areas, slightly below 10% of recorded donations go to improving animal welfare, slightly above 10% to creating a better future, and another ~20% to unknown or multiple cause areas.

- We don’t currently have a clear view of what (if anything) this finding implies we should do — as we generally don’t prioritise any of these cause areas over one another — but we think it can inform discussions on how much we highlight each area in our communications, and how we prioritise our time when investigating our recommendations.

We plan to report on how we have used these takeaways in our next impact evaluation, both to hold ourselves accountable to using them and to test how useful they turned out to be.

Where you can learn more

- Our full impact evaluation report — this includes a much more detailed description of our assumptions, considerations, methodology, and results.

- Our working sheet — this contains all of our estimates and how we arrived at them.

- Our survey documentation and results — this documents how we conducted the 2023 surveys that informed our evaluation, and summarises our findings.

How you can help

If you are interested in supporting GWWC’s work, we are currently fundraising! We have ambitious plans, and we’re looking to diversify our funding and to extend our runway (which is currently only about one year). For all of this, we are looking to raise ~£2.2 million by June 2023, so we would be very grateful for your support. You can read our draft strategy for 2023, make a direct donation, or reach out to our executive director for more information.

Acknowledgements

We’d like to thank the many people who provided valuable feedback — including Anne Schulze, Federico Speziali, Basti Schwiecker, and Jona Glade — and in particular Joey Savoie, Callum Calvert, and Josh Kim for their extensive comments on an earlier draft of this evaluation. Thank you also to Vasco Amaral Grilo for providing feedback on our methodology early on in our process, and for conducting his own analysis of GWWC's impact.

We’d also like to give a special thank you to our colleagues Fabio Kuhn and Luke Freeman for their extensive support on navigating our database, to Luke also for support on our survey design, to Katy Moore for high-quality review and copy editing, to Nathan Sherburn for helping us compile and analyse our survey results, and to Bradley Tjandra for spending multiple working days (!) supporting the data analysis for this evaluation. Both Nathan and Bradley conducted this work as part of The Good Ancestors Project.

- ^

Throughout the impact evaluation, unless otherwise specified, we are including our donation platform as part of GWWC. This includes the period before we acquired it from EA Funds in 2022. We consider it “GWWC” for that period because the platform’s cost-effectiveness at the time is still relevant to its current and projected cost-effectiveness.

- ^

All of our results are adjusted for inflation and reported in 2022-equivalent USD.

- ^

We distinguish between the “funding we caused to go to effective charities from existing pledgers” and the “value we will have generated for effective charities from new pledges.” The former is purely retrospective (only looking at donations that have been made) whereas the latter also includes donations we expect will be made in the future. We do not add these two estimates together, but take a weighted average of the two to avoid double-counting our impact.

- ^

Specifically, we had 4.5x as much impact if we look at the value of the GWWC Pledge in terms of the number of new pledges taken in that period multiplied by our estimated value generated per Pledge. We had 1.5x as much impact if we instead just look at the amount of money existing GWWC Pledgers donated because of us in 2020–2022.

- ^

This assumes causality from correlation, which we think is plausible in this case (we don’t assume this causality for our overall impact estimates).

- ^

Note that these figures do not account for non-recorded donations and so could exaggerate how heavy-tailed the distribution is.

Jeff Kaufman @ 2023-04-02T12:14 (+17)

Thanks for sharing this!

One large worry I have in evaluating GWWC's impact is that I'd expect the longer someone has been a GWWC member the more likely they are to drift away and stop keeping their pledge, and people who aren't active anymore are hard to survey. I've skimmed through the documents trying to understand how you handled this, and found discussion of related issues in a few places:

Pledgers seem to be giving more on average each year after taking the Pledge, even when you include all the Pledgers for whom we don’t have recorded donations after some point. We want to emphasise that this data surprised us and caused us to reevaluate a key assumption we had when we began our impact evaluation. Specifically, we went into this impact evaluation expecting to see some kind of decay per year of giving. In our 2015 impact evaluation, we assumed a decay of 5% (and even this was criticised for seeming optimistic compared to EA Survey data — a criticism we agreed with at the time). Yet, what we in fact seem to be seeing is an increase in average giving per year since taking the Pledge, even when adjusting for inflation.

How does this handle members who aren't reporting any donations? How does reporting rate vary by tenure?

We ran one quick check: the average total 2021 donations on record for our full 250-person sample for the GWWC Pledge survey on reporting accuracy was $7,619, and among the 82 respondents it was $8,124. This may indicate some nonresponse bias, though a bit less than we had expected.

Was the $7,619 the average among members who recorded any donations, or counting ones who didn't record donations as having donated $0? What fraction of members in the 250-person sample recorded any donations?

We are not assuming perfect Pledge retention. Instead, we are aiming to extrapolate from what we have seen so far (which is a decline in the proportion of Pledgers who give, but an increase in the average amount given when they do).

Where does the decline in the proportion of people giving fit into the model?

Michael Townsend @ 2023-04-03T08:40 (+15)

Thanks for your questions Jeff!

To answer point by point:

How does [the evaluation’s finding that Pledgers seem to be giving more on average each year after taking the Pledge] handle members who aren't reporting any donations?

The (tentative) finding that Pledgers’ giving increases more each year after taking the Pledge assumes that members who aren’t reporting any donations are not donating.

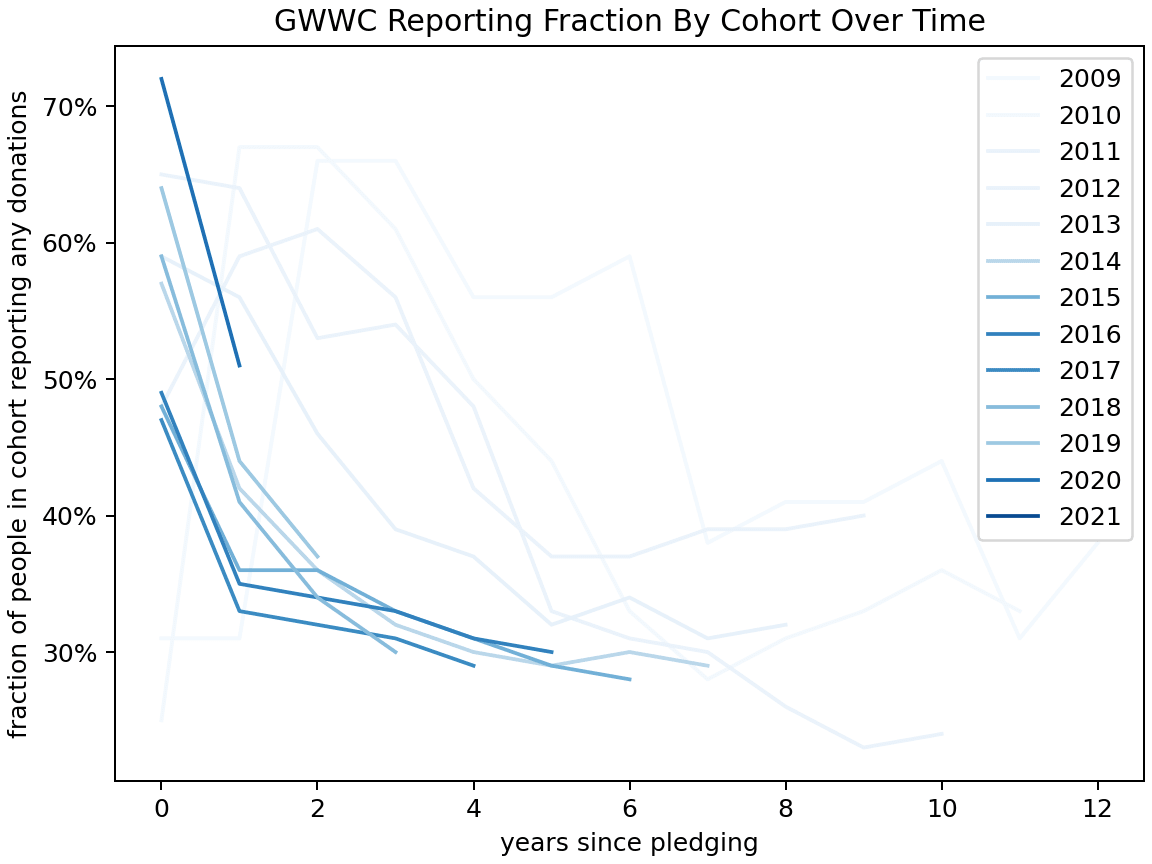

How does reporting rate vary by tenure?

We include a table “Proportion of GWWC Pledgers who record any donations by Pledge year (per cohort)” on page 48. In sum: reporting declines in the years after the Pledge, but that decline seems to plateau at a reporting rate of ~30%.

Was the $7,619 the average among [the 250-person sample we used for GWWC reporting accuracy survey] who recorded any donations, or counting ones who didn't record donations as having donated $0? What fraction of members in the 250-person sample recorded any donations?

The $7,619 figure is the average if you count those not recording a donation as having donated $0. Unfortunately, I don’t have the fraction of the 250-person sample who recorded donations at all on hand. However, I can give an informed guess: the sample was a randomly selected group of people who had taken the GWWC Pledge before 2021, and eyeballing the table I linked above, ~40-50% of pre-2021 Pledgers record a donation each year.

Where does the decline in the proportion of people giving fit into the model?

The model does not directly incorporate the decrease in proportion of people recording/giving, and neither does it directly incorporate the increase in the donation sizes for people who record/give. The motivation here is that — at least in the data so far — we see these effects cancel out (indeed, we see that the increase in donation size slightly outweighs the decrease in recording rates — but we’re not sure that trend will persist). We go into much more depth on this in our appendix section “Why we did not assume a decay in the average amount given per year”.

Jeff Kaufman @ 2023-04-03T14:28 (+18)

Thanks! I think your "Proportion of GWWC Pledgers who record any donations by Pledge year (per cohort)" link is pointing a bit too early in the doc, but I do see the table now, and it's good.

reporting declines in the years after the Pledge, but that decline seems to plateau at a reporting rate of ~30%

Here's a version of that table with lines colored by how many people there are in that cohort:

It doesn't look like it stops at a reporting rate of 30%, and the more recent (high cohort size) lines are still decreasing at maybe 5% annually as they get close to 30%.

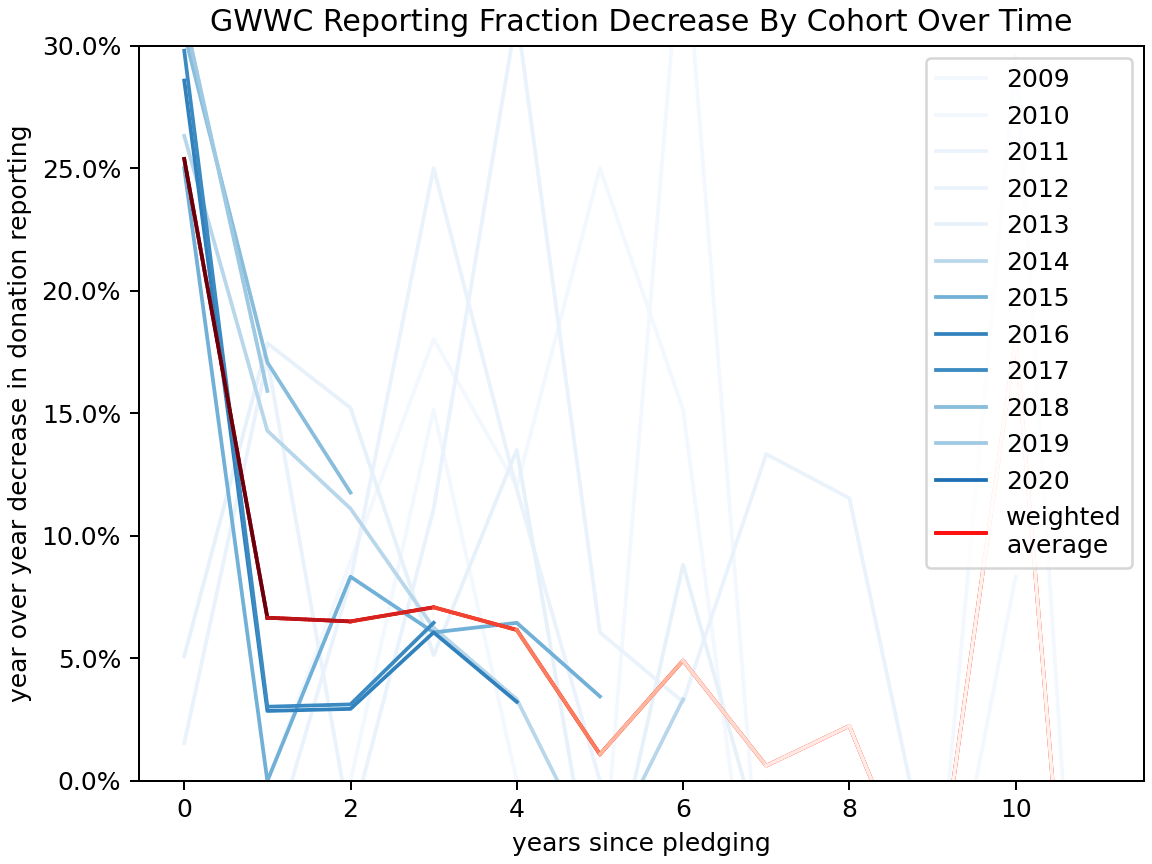

And here are the year/year decreases:

Looking at the chart, it's clear that decay slows down over time, and maybe it slows enough that it's fine to ignore it, but it doesn't look like it goes to zero. A cohort-size-weighted average year/year decay where we ignore the first six years (starting with 5y since pledging, and so ignoring all cohorts since 2015) is 2%.

(code)

But that's probably too optimistic, since looking at more recent cohorts decay doesn't seem to be slowing, and the reason ignoring the first six years looks good is mostly that it drops those more recent cohorts.
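The calculation Jeff describes can be sketched as follows. His actual script sits behind the "(code)" link and is not reproduced here. This is a hypothetical reconstruction with made-up rates, and `min_years` (the first years-since-pledge index whose change from the previous year is counted) is an assumed parameterisation, not his.

```python
def weighted_yoy_decay(cohorts: dict[int, tuple[int, list[float]]], min_years: int = 5) -> float:
    """Cohort-size-weighted mean of year-over-year declines in reporting rate.

    cohorts: pledge year -> (cohort size, reporting rate by years since pledge).
    min_years: first years-since-pledge index whose change from the previous
        year is counted (earlier changes are ignored).
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for size, rates in cohorts.values():
        for t in range(max(min_years, 1), len(rates)):
            previous, current = rates[t - 1], rates[t]
            if previous <= 0:
                continue  # decay is undefined once nobody reports
            weighted_sum += size * (1 - current / previous)
            total_weight += size
    if total_weight == 0:
        raise ValueError("no year-over-year changes at or beyond min_years")
    return weighted_sum / total_weight


# Made-up reporting rates (fraction of the cohort recording any donation).
cohorts = {
    2012: (100, [1.0, 0.8, 0.7, 0.6, 0.5, 0.45, 0.405]),
    2013: (200, [1.0, 0.6, 0.5, 0.45, 0.4, 0.3]),
}
print(round(weighted_yoy_decay(cohorts, min_years=5), 3))  # 0.175
```

Cohorts too young to have data at or beyond `min_years` contribute nothing. That is how raising `min_years` can silently drop the large recent cohorts.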

Separately, I think it would be pretty reasonable to drop the pre-2011 reporting data. I think this probably represents something weird about starting up, like not collecting data thoroughly at first, and not about user behavior? I haven't done this in my analysis above, though, because since I'm weighting by cohort size it doesn't do very much.

Michael Townsend @ 2023-04-04T00:56 (+7)

Really appreciate this analysis, Jeff.

Point taken that there is no clear plateau at 30% -- it'll be interesting to see what future data shows.

Part of the reason we have less analysis of the change in reporting rates over time is that we did not directly incorporate this rate of change into our model. For example, the table of reporting rates was primarily used in our evaluation to test a hypothesis for why we see an increase in average giving (even assuming people who are not reporting are not giving at all). Our model does not assume reporting rates don't decline, nor does it assume the decline in reporting rates plateaus.

Instead, we investigated how average giving (which is a product of both reporting rates and the average amount given conditional on reporting) changes over time. We saw that the decline in reporting rates is (more than) compensated for by the increase in giving conditional on reporting. It could be that this will no longer remain true beyond a certain time horizon (though perhaps it will!), but there are other arguably conservative assumptions for these long time horizons (e.g., that giving stops at pension age, and that we don't include any legacy giving). Some of these considerations come up as we discuss why we did not assume a decay in our influence, and in the limitations of our Pledge model (at the bottom of this section, right above this one).

On your final point:

Separately, I think it would be pretty reasonable to drop the pre-2011 reporting data. I think this probably represents something weird about starting up, like not collecting data thoroughly at first, and not about user behavior? I haven't done this in my analysis above, though, because since I'm weighting by cohort size it doesn't do very much.

Do you mean excluding it just for the purpose of analysing reporting rates over time? If so, that could well be right, and if we investigate this directly in future impact evaluations we'll need to look into what the quality/relevance of that data was and make a call here.

Jeff Kaufman @ 2023-04-04T01:02 (+6)

That makes sense, thanks! I think your text makes it sound like you disagree with the earlier attrition discussion, when actually it's that giving increasing over time makes up for the attrition?

just for the purpose of analysing reporting rates over time?

Sorry, yes. I think it's probably heavily underreported, since the very early reporting system was probably worse?

Michael Townsend @ 2023-04-04T01:39 (+4)

Ah, I can see what you mean regarding our text, I assume in this passage:

We want to emphasise that this data surprised us and caused us to reevaluate a key assumption we had when we began our impact evaluation. Specifically, we went into this impact evaluation expecting to see some kind of decay per year of giving. In our 2015 impact evaluation, we assumed a decay of 5% (and even this was criticised for seeming optimistic compared to EA Survey data — a criticism we agreed with at the time). Yet, what we in fact seem to be seeing is an increase in average giving per year since taking the Pledge, even when adjusting for inflation.

What you say is right: we agree there seems to be a decay in fulfilment / reporting rates (which is what the earlier attrition discussion was mostly about) but we just add the additional observation that giving increasing over time makes up for this.

There is a sense in which we do disagree with that earlier discussion, which is that we think the kind of decay that would be relevant to modelling the value of the Pledge is the decay in average giving over time, and at least here, we do not see a decay. But we could've been clearer about this; at least on my reading, I think the paragraph I quoted above conflates different sorts of 'decay'.

MatthewDahlhausen @ 2023-04-05T15:21 (+3)

I'd be interested in a chart similar to "Proportion of GWWC Pledgers who record any donations by Pledge year (per cohort)", but with 4 versions (median / average donation in $) x (inclusive / exclusive of those that didn't record data, assuming no record is $0). From the data it seems that both things are true: "most people give less over time and stop giving" and "on average, pledge donations increase over time", driven entirely by ~5-10% of extremely wealthy donors that increase their pledge.
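The four summaries requested above are straightforward to compute side by side. Here is a minimal sketch with a made-up cohort (not GWWC data), where `None` marks a pledger with no recorded donations:

```python
from statistics import mean, median
from typing import Optional


def four_summaries(records: list[Optional[float]]) -> dict[str, float]:
    """Mean and median donation, with non-recorders either counted as $0 or excluded."""
    recorded = [r for r in records if r is not None]
    inclusive = [0.0 if r is None else r for r in records]
    return {
        "mean_inclusive": mean(inclusive),
        "median_inclusive": median(inclusive),
        "mean_recorders_only": mean(recorded),
        "median_recorders_only": median(recorded),
    }


# Hypothetical cohort in a later year: 4 of 10 stopped recording, one very large donor.
later_year = [None, None, None, None, 2_000, 2_500, 3_000, 3_000, 4_000, 200_000]
for name, value in four_summaries(later_year).items():
    print(name, value)
```

In this example, one large donor lifts the recorders-only mean to $35,750, while the median among recorders is $3,000. It shows how a rising average can coexist with most pledgers giving modest amounts or no longer recording donations.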

MichaelStJules @ 2023-05-05T07:59 (+15)

In the report, you write:

But there is another sense in which some readers may judge we do not avoid double-counting. Suppose there was someone else for whom GWWC and GiveWell were both necessary for them to give to charity (i.e., if either did not exist, they would not give anything). In this instance, we would fully count their donations towards our impact, as in the counterfactual scenario of GWWC not existing (but GiveWell still existing), this donor would not have given at all. We think this is the right way of counting impact for our purposes, as our goal here is usefulness over “correct” attribution: we think we should be incentivised to work with this donor for the full extent of their donations (given GiveWell’s existence). However, we know there is disagreement about this and we want to be upfront about our approach here.

For example, some readers may hold the view that in such a case GiveWell and GWWC should each only attribute a percentage of the impact to themselves, with the two percentages summing to 100%.

Because what we're really interested in is marginal cost-effectiveness, GiveWell not existing is probably too extreme of a scenario anyway, and we should be thinking in terms of GiveWell's marginal work, too. But there can still be a problem for marginal work. From Triple counting impact in EA:

It would be very easy for an EA to find out about EA from Charity Science, to read blog posts from both GWWC and TLYCS, sign up for both pledges, and then donate directly to GiveWell (who would count this impact again). This person would become quadruple counted in EA, with each organization using their donations as impact to justify their running. The problem is that, at the end of the day, if the person donated $1000, TLYCS, GWWC, GiveWell, and Charity Science may each have spent $500 on programs for getting this person into the movement/donating. Each organization would proudly report they have 2:1 ratios and give themselves a pat on the back, when really the EA movement as a whole just spent $2000 for $1000 worth of donations.

So, we'd want to ask: if, together and across charities, we give

- $X1 more to GWWC,

- $X2 more to GiveWell,

- $X3 more to OFTW,

- $X4 more to Founders Pledge,

- $X5 more to TLYCS,

- $X6 more to ACE,

- $X7 more for EA community building (e.g. local groups),

- etc.,

how much more in donations all together (not just from GWWC pledges) do we get for direct work (orgs)? If it ended up being that $X1 +... + $Xn was greater than the counterfactual raised (including possibly lower donations from staff at these orgs that could donate more if they worked elsewhere with higher incomes), then we'd actually be bringing in less than we're spending.

However, we might still allocate funding more effectively, because some of these orgs do more cost-effectiveness research with marginal funding. And there are non-funding benefits to consider, e.g. more/better direct work from getting people more engaged.

(And direct work orgs also do their own fundraising, too.)

We're interested in increasing the counterfactual donated to direct work (orgs) - ($X1 + ... + $Xn).[1]

If/when GWWC's own marginal multiplier (fixing the funding to other orgs) is only a few times greater than 1, then I'd worry about spending more than we're raising as a community through marginal donations to GWWC.
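The arithmetic of the quoted triple-counting example is easy to reproduce. The function names are illustrative, and the figures come from the quote. The sketch ignores counterfactuals and any cost-effectiveness weighting of the direct work:

```python
def per_org_ratio(donations: float, org_spend: float) -> float:
    """Donations credited to one organisation per dollar it spent."""
    return donations / org_spend


def movement_ratio(donations: float, spend_by_org: dict[str, float]) -> float:
    """The same donations per dollar spent across all organisations combined."""
    return donations / sum(spend_by_org.values())


spend = {"Charity Science": 500, "GWWC": 500, "TLYCS": 500, "GiveWell": 500}
donated = 1_000
print(per_org_ratio(donated, spend["GWWC"]))  # 2.0
print(movement_ratio(donated, spend))         # 0.5
```

Each organisation can report a 2:1 ratio, while the movement as a whole spends $2 for every $1 raised.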

- ^

Maybe after weighing by cost-effectiveness of the direct work, e.g. something like the quality factor in https://founderspledge.com/stories/giving-multipliers.

NunoSempere @ 2023-05-05T15:28 (+3)

Oh man

Stan van Wingerden @ 2023-04-01T08:56 (+12)

Thanks, Michael & Sjir, for writing this exhaustive report! The donation distribution is fascinating, and I loved reading your reasoning about & estimation of quantities I didn't know were important. I'm glad GWWC has such a great giving multiplier!

After reading the summary & skimming the full report & working sheet, I've got three questions:

1. Does the dropping of the top 10 donors influence the decision on which groups to target? I think the reasoning to drop their 27% of donations from the giving multiplier is clear, but it's not obvious to me that they should also be dropped when prioritizing, and I could not find this in the report. (You mention the multiplier is not the only thing that influences which groups to target, of course. To me the overall distribution seems to imply running a top-100 or top-200 survey could be useful in increasing the multiplier somehow, and this argument would be about twice as strong with the top 10 included vs without.)

2. Why did you choose the counterfactual as "what would have happened had GWWC never existed"? I think it's somewhat plausible that the pre-2020 pledgers would still have donated 10% of their income from 2020 onward, had GWWC existed from 2020 onward in a very minimal form. This is ~35% of the total donations, so this also seems a relevant counterfactual.

3. Similar to 2, I also didn't quite get how you dealt with which GWWC year gets to count the impact from past pledges. It would seem off to me if both GWWC 2020-2022 and GWWC 2017-2019 get to fully count the donations a 2018 pledge-taker made in 2022 for their multipliers, although both of them definitely contributed to the donations, of course. How did you deal with this? Maybe this is addressed by the survey responses already?

Sjir Hoeijmakers @ 2023-04-01T11:46 (+7)

Thanks for asking these in-depth questions, Stan!

-

The donation distribution statistics do not exclude our top 10 donors (neither on the pledge nor on the non-pledge side), so our takeaways from those aren't influenced. I should also clarify that we do not exclude all top 10 donors (on either the pledge or non-pledge side) from all of our donation estimates that influence the giving multiplier: we only exclude all top 10 pledge donors from our estimates of the value of the pledge (for more detail on how we treated large donors differently and why, see this appendix). Please also note that we haven't (yet) made any decisions on which groups to target more or less on the basis of these results. Our takeaways will inform our organisation-wide strategic discussions going forward, but we haven't had any of those yet, as we have only just finished this evaluation. And, as we also emphasize in the report, the takeaways provide updates on our views but not our all-things-considered views.

2. This is a great question, and I think it points at the importance of considering the difference between marginal and average cost-effectiveness when interpreting our findings: as you say, many people might still have donated "had GWWC existed from 2020 in a very minimal form", i.e. the first few dollars spent on GWWC may be worth a lot more than the last few dollars spent on it. As we note in the plans for future evaluations section, we are interested in making further estimates like the one you suggest (i.e. considering only part of our activities) and will consider doing so in the future. We just didn't get to it in this evaluation, and chose to first estimate our total impact (i.e. using GWWC never having existed as the counterfactual).

3. We explain how we deal with this in this appendix. In short, we use two approaches: one counts all the impact of a pledge in the year the pledge is taken, and the other counts it in the years donations are made against that pledge. We take a weighted average of these two approaches to avoid double-counting our impact across different years.

Stan van Wingerden @ 2023-04-04T11:43 (+5)

Thank you for the explanation & references, all three points make sense to me!

Joel Tan (CEARCH) @ 2023-04-05T01:11 (+7)

Hi Sjir, after quickly reading through the relevant parts in the detailed report, and following your conversation with Jeff, can I clarify that:

(1) Non-reporters are classified as donating $0, so that when you calculate the average donation per cohort it's basically (sum of what reporters donate)/(cohort size including both reporters and non-reporters)?

(2) And the upshot of (1) would be that the average cohort donation each year is in fact an underestimate, insofar as some non-reporters are in fact donating? I can speak for myself, at least: I'm part of the 2014 cohort and am obviously still donating, but I have never reported anything to GWWC since that's a hassle.

(3) Nonresponse bias is really hard to get around (as political science and polling have shown us these last few years), but you could try to address it either by relying on the empirical literature on pledge attrition (e.g. https://www.sciencedirect.com/science/article/pii/S0167268122002992) or by making a concerted push to reach out to non-reporters and finding the proportion who are still giving. That push would itself be subject to nonresponse bias, insofar as the non-reporters who still don't respond differ from the non-reporters who do respond to the concerted push (call them semi-reporters). So you would want to apply a further discount to your findings, perhaps based on the headline reporter/semi-reporter difference, if you assume that the reporter/semi-reporter donation difference equals the semi-reporter/total non-reporter difference.

(4) My other main concern beyond nonresponse bias is counterfactuals, but looking at the report, it's clearly been very well thought out, and I'm really impressed by the thoroughness and robustness. All I would add is that I would probably put more weight on the population base rate (even if you have to source US numbers), and even revise that downwards, given that the pool EA draws from is distinctively non-religious, a group with lower charitable donation rates.
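A minimal sketch of the extrapolation suggested in point (3), reading the "difference" additively (a ratio would be an equally defensible reading). All names are hypothetical. For simplicity, the non-reporters, semi-reporters included, get a single extrapolated mean instead of $0.

```python
def estimate_nonreporter_mean(reporter_mean: float, semi_reporter_mean: float) -> float:
    """Assume non-reporters as a whole trail semi-reporters by the same gap
    by which semi-reporters trail reporters."""
    gap = reporter_mean - semi_reporter_mean
    # An average donation cannot be negative, so floor the estimate at zero.
    return max(0.0, semi_reporter_mean - gap)


def adjusted_cohort_mean(
    n_reporters: int,
    reporter_mean: float,
    n_nonreporters: int,
    semi_reporter_mean: float,
) -> float:
    """Cohort average with non-reporters given an extrapolated mean instead of $0."""
    if n_reporters + n_nonreporters == 0:
        raise ValueError("cohort must not be empty")
    nonreporter_mean = estimate_nonreporter_mean(reporter_mean, semi_reporter_mean)
    total = n_reporters * reporter_mean + n_nonreporters * nonreporter_mean
    return total / (n_reporters + n_nonreporters)
```

For example, if reporters average $1,000 and semi-reporters $700, non-reporters are assumed to average $400. A cohort of 10 reporters and 30 non-reporters then averages $550 rather than the $250 implied by counting non-reporters as $0.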

Michael Townsend @ 2023-04-05T07:52 (+4)

Hi Joel — great questions!

(1) Are non-reporters counted as giving $0?

Yes — at least for recorded donations (i.e., the donations that are within our database). For example, in cell C41 of our working sheet, we provide the average recorded donations of a GWWC Pledger in 2022-USD ($4,132), and this average assumes non-reporters are giving $0. Similarly, in our "pledge statistics" sheet, which provides the average amount we record being given per Pledger per cohort, and by year, we also assumed non-reporters are giving $0.
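A minimal sketch of the averaging convention described above, using hypothetical names: every pledger in the cohort is in the denominator, and anyone without a recorded donation contributes $0 to the numerator.

```python
def average_recorded_donation(recorded: dict[str, float], cohort: list[str]) -> float:
    """Mean recorded donation per pledger; pledgers with no record count as $0."""
    if not cohort:
        raise ValueError("cohort must not be empty")
    return sum(recorded.get(pledger, 0.0) for pledger in cohort) / len(cohort)
```

With two reporters giving $300 and $100 in a four-person cohort, this returns $100, half the $200 you would get by averaging over reporters only.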

(2) Does this mean we are underestimating the amount given by Pledgers?

Only for recorded donations: we also tried to account for donations that were made but are not in our records. We discuss this more here, but in sum, for our best-guess estimates, we estimated that our records account for only 79% of all pledge donations, so we need to make an upwards adjustment of 1.27 (≈ 1/0.79) to go from recorded donations to all donations made. We discuss how we arrived at this estimate pretty extensively in our appendix (our methodology here is similar to how we analysed our counterfactual influence). For our conservative estimates, we did not make any recording adjustment, and we think this does underestimate the amount given by Pledgers.
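A minimal sketch of the recording adjustment, assuming it is simply the reciprocal of the share of donations that appear in the records (hypothetical function names). A recorded share of 0.79 gives the 1.27 quoted above, and the conservative case corresponds to a share of 1.0, i.e. no adjustment.

```python
def recording_adjustment(recorded_share: float) -> float:
    """Multiplier from recorded donations to all donations, given the share recorded."""
    if not 0.0 < recorded_share <= 1.0:
        raise ValueError("recorded_share must be in (0, 1]")
    return 1.0 / recorded_share


def estimated_total_donations(recorded_total: float, recorded_share: float) -> float:
    """Scale recorded donations up to an estimate of all donations made."""
    return recorded_total * recording_adjustment(recorded_share)
```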

(3) How did we handle nonresponse bias and could we handle it better?

When estimating our counterfactual influence, we explicitly accounted for nonresponse bias. To do so, we treated respondents and nonrespondents separately, assuming for all surveys that our influence on nonrespondents was a fixed fraction of our influence on respondents:

- 50% for our best-guess estimates.

- 25% for our conservative estimates.

We actually did consider adjusting this fraction depending on the survey we were looking at, and in our appendix we explain why we chose not to in each case. Could we handle this better? Definitely! I really appreciate your suggestions here — we explicitly outline handling nonresponse bias as one of the ways we would like to improve future evaluations.
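A minimal sketch of how the nonrespondent fractions listed above (50% best guess, 25% conservative) could combine with survey results. It assumes respondents and nonrespondents are weighted by the response rate. That weighting and the function name are hypothetical; the report's exact weighting may differ.

```python
NONRESPONDENT_FRACTION = {"best_guess": 0.50, "conservative": 0.25}


def overall_influence(respondent_influence: float, response_rate: float, scenario: str) -> float:
    """Blend measured influence on respondents with a discounted influence on nonrespondents."""
    if not 0.0 <= response_rate <= 1.0:
        raise ValueError("response_rate must be between 0 and 1")
    fraction = NONRESPONDENT_FRACTION[scenario]
    nonrespondent_influence = fraction * respondent_influence
    return (
        response_rate * respondent_influence
        + (1 - response_rate) * nonrespondent_influence
    )
```

With illustrative inputs of 40% influence among respondents and a 20% response rate, this gives 24% overall for the best guess and 16% for the conservative estimate.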

(4) Could we incorporate population base rates of giving when considering our counterfactual influence?

I'd love to hear more about this suggestion; it's not obvious to me how we could do this. For example, one interpretation here would be to look at how much Pledgers are giving compared to the population base rate. Presumably, we'd find they are giving more. But I'm not sure how we could use that to inform our counterfactual influence, because there are at least two competing explanations for why they are giving more:

- One explanation is that we are simply causing them to give more (so we should increase our estimated counterfactual influence).

- Another is that we are just selecting for people who are already giving a lot more than the average population (in which case, we shouldn't increase our estimated counterfactual influence).

But perhaps I'm missing the mark here, and this kind of reasoning/analysis is not really what you were thinking of. As I said, would love to hear more on this idea.

(Also, appreciate your kind words on the thoroughness/robustness)

Joel Tan (CEARCH) @ 2023-04-05T08:31 (+4)

Thanks for the clarifications, Michael, especially on non-reporters and non-response bias!

On base rates, my prior is that people who self-select into GWWC pledges are naturally altruistic, and so it's right (as GWWC does) to use the more conservative estimate. Against this, though, is a concern that self-reported counterfactual donations aren't that accurate.

It's really great that GWWC noted the issue of social desirability bias, but I suspect it works to overestimate counterfactual giving tendencies (rather than overestimating GWWC's impact), since the desire to look generous almost certainly outweighs the desire to please GWWC (see research on donor overreporting: https://researchportal.bath.ac.uk/en/publications/dealing-with-social-desirability-bias-an-application-to-charitabl). I don't have a good solution to this, insofar as standard list experiments aren't great at handling quantities as opposed to yes/no answers. I'd be interested in hearing how your team plans to deal with this!

Arepo @ 2023-04-04T15:52 (+6)

Congrats Michael and Sjir. I love to see meta-orgs take self-evaluation seriously :)

My main open question from having read the summary (and briefly glanced at the full report) is how the picture would look if you split by approximate cause area rather than effective/non-effective. Both in the sense of which cause areas you would guess your donors would have given to otherwise, and which cause areas your pledgers would have given to. It would be interesting to see, e.g., a separate giving multiplier for animal welfare/global development/longtermist donations.

Is that something you thought about?

Michael Townsend @ 2023-04-04T23:58 (+6)

Thanks :)!

You can see in the donations by cause area a breakdown of the causes pledge and non-pledge donors give to. This could potentially inform a multiplier for particular cause areas. I don't think we considered doing this, and am not sure it's something we'll do in future, but we'd be happy to see others do this using the information we provide.

Unfortunately, we don't have a strong sense of how we influenced which causes donors gave to; the only thing that comes to mind is our question: "Please list your best guess of up to three organisations you likely would *not* have donated to if Giving What We Can, or its donation platform, did not exist (i.e. donations where you think GWWC has affected your decision)", the results of which you can find on page 19 of our survey documentation here. Only an extremely small sample of non-pledge donors responded to the question, though. Getting a better sense of our influence here, as well as generally analysing trends in which cause areas our donors give to, is something we'd like to explore in our future impact evaluations.
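A minimal sketch of what a per-cause multiplier could look like, assuming the giving multiplier is counterfactual donations divided by GWWC's costs. The counterfactual shares per donation are hypothetical inputs. Dividing every cause by the whole cost base makes the per-cause figures sum to the overall multiplier. Allocating costs to individual causes would be an alternative, but it needs extra assumptions.

```python
from collections import defaultdict
from collections.abc import Iterable


def multipliers_by_cause(
    donations: Iterable[tuple[str, float, float]], total_cost: float
) -> dict[str, float]:
    """donations: (cause, amount, counterfactual_share) tuples; returns cause -> multiplier."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    counterfactual: defaultdict[str, float] = defaultdict(float)
    for cause, amount, share in donations:
        # Only the share of each donation attributed to GWWC counts towards its cause.
        counterfactual[cause] += amount * share
    return {cause: value / total_cost for cause, value in counterfactual.items()}
```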

Rasool @ 2023-04-05T11:31 (+5)

Thanks for the summary!

You mention that ~9% of Trial Pledgers have gone on to take the GWWC Pledge. Do you know what the other 91% did (e.g. extend their trial, stop entirely, or commit to a smaller pledge like OFTW)?

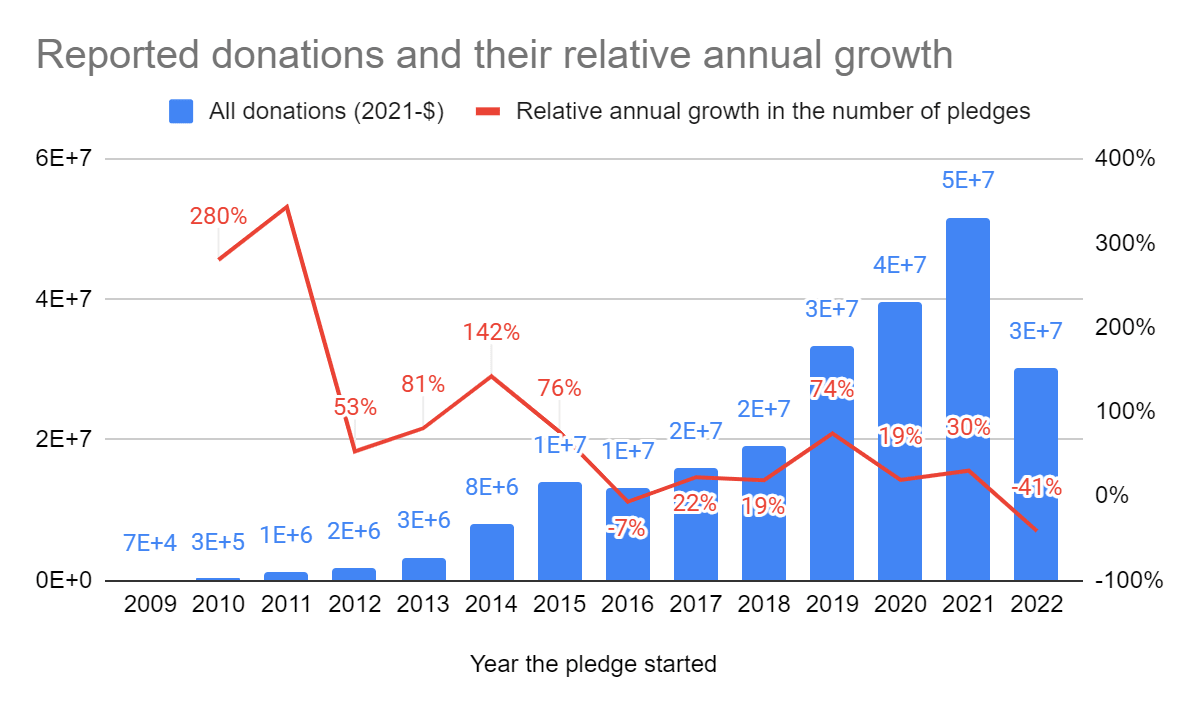

Vasco Grilo @ 2024-02-25T19:22 (+4)

Hi Michael and Sjir,

I have plotted the reported donations and their relative annual growth.

The overall downwards trend of the growth is not encouraging. On the other hand, donations grew every year except for 2016 and 2022.

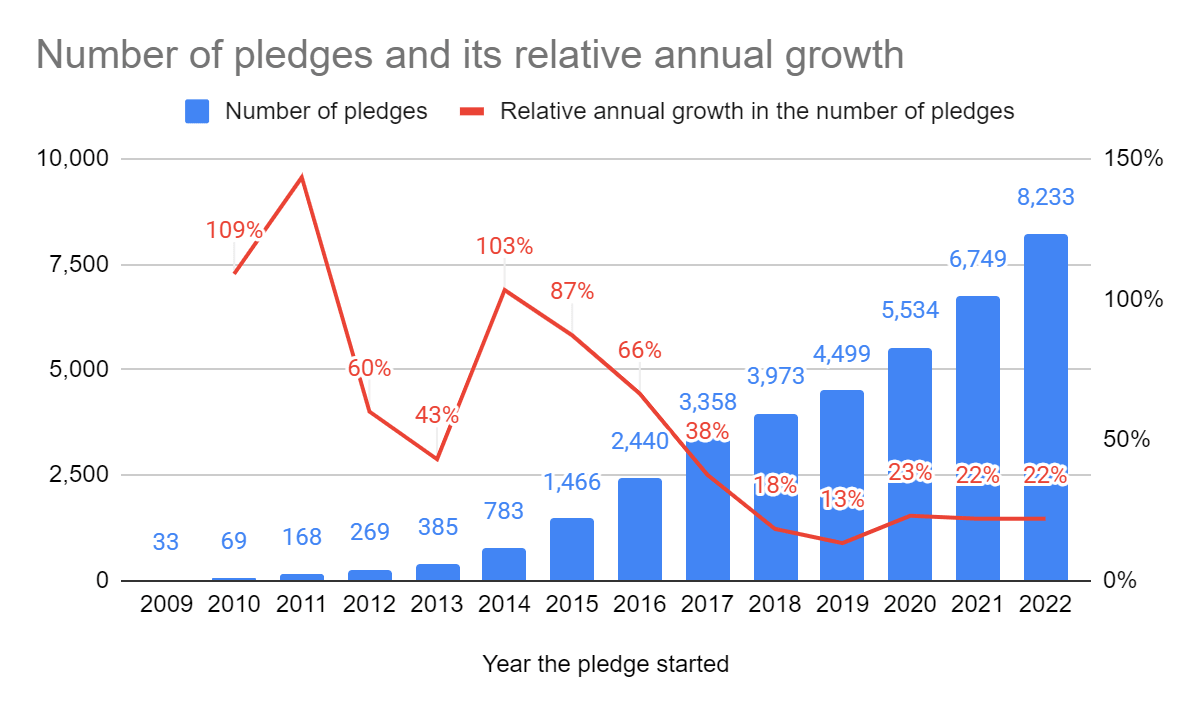
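A minimal sketch of the growth figures discussed here: relative annual growth is each year's value divided by the previous year's, minus one, and the summary figure is the arithmetic mean of the most recent rates (per the footnote, the 5 most recent). Function names and the toy data in the check are hypothetical, not the plotted values.

```python
def relative_annual_growth(values_by_year: dict[int, float]) -> dict[int, float]:
    """Growth of each year relative to the previous one (0.2 means +20%)."""
    years = sorted(values_by_year)
    growth: dict[int, float] = {}
    for prev, year in zip(years, years[1:]):
        if year != prev + 1:
            raise ValueError(f"missing year between {prev} and {year}")
        growth[year] = values_by_year[year] / values_by_year[prev] - 1
    return growth


def mean_recent_growth(growth: dict[int, float], n: int = 5) -> float:
    """Arithmetic mean of the n most recent annual growth rates."""
    recent = [growth[year] for year in sorted(growth)[-n:]]
    if not recent:
        raise ValueError("no growth rates available")
    return sum(recent) / len(recent)
```

Years with negative entries in the returned dictionary are the years in which the series shrank, such as 2016 and 2022 for reported donations.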

I have also plotted the number of GWWC Pledges and its relative annual growth.

There was an initial downwards trend, but it looks like it stabilised in the last few years. The mean annual growth rate between 2017 and 2022 was 19.7 %[1], for which:

- The doubling time is 3.85 years (= LN(2)/LN(1 + 0.197)).

- The time to grow by a factor of 10 is 12.8 years (= LN(10)/LN(1 + 0.197)).
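The two formulas above are instances of one calculation: the time to grow by a factor F at a constant annual rate r is ln(F)/ln(1 + r). A minimal sketch (hypothetical function name) that reproduces both figures:

```python
import math


def years_to_grow(factor: float, annual_rate: float) -> float:
    """Years needed to grow by `factor` at a constant `annual_rate` (0.197 = 19.7%)."""
    if factor <= 1 or annual_rate <= 0:
        raise ValueError("factor must exceed 1 and annual_rate must be positive")
    # math.log1p(r) computes ln(1 + r).
    return math.log(factor) / math.log1p(annual_rate)
```

Here `years_to_grow(2, 0.197)` rounds to 3.85 and `years_to_grow(10, 0.197)` to 12.8, matching the bullets.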

[1] Mean of the 5 most recent annual growth rates in the graph.