ITN 201: pitfalls in ITN BOTECs

By Lizka @ 2025-08-13T04:01 (+135)

The fact that the ITN framework[1] can help us prioritize between problems feels almost magical to me.

But when I see ITN BOTECs in the wild, I’m often very skeptical. It seems really easy for these estimates to be inadvertent “conclusion-laundering” — regurgitating the author’s opinions in a quantitative or more robust-seeming form without actually providing any independent signal. And even when that’s not the case, the bottom-line estimates can seem so noisy and ungrounded that I trust them less than my fuzzy, un-BOTECed intuitions.

So I'm[2] sharing notes on some pitfalls that seem especially pernicious and common to (i) help BOTEC authors avoid these issues, (ii) nudge readers to be somewhat cautious about deferring to such estimates, and (iii) encourage people to supplement (and/or to replace) ITN BOTECs with other approaches.

Note: I expect the post will make more sense to people who are already somewhat familiar with ITN and estimation. (See other content for background on the ITN framework or on BOTECs/fermi estimates.) It's also a fairly informal post — more like “research/field notes” than “article”. As always, expect mistakes.

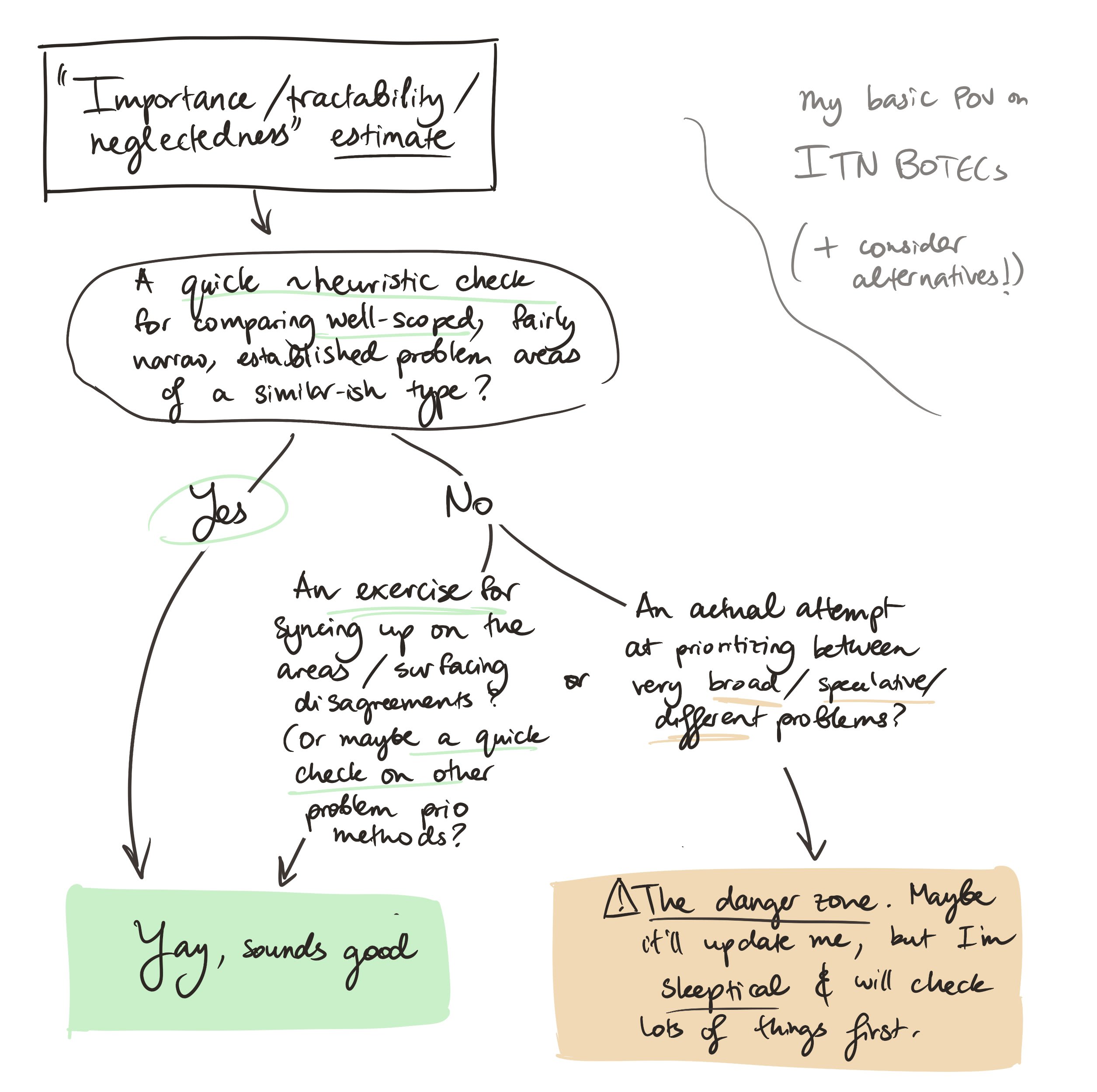

Brief outline of my views

ITN BOTECs are useful for quick comparisons of well-defined, reasonably narrow problems that are of a similar kind (and that we can picture solving), like malaria and diabetes.

The further we stray from this “safe zone” — e.g. if the problems we're evaluating are loosely scoped, very broad, or speculative/hard to picture, or if there are significant structural differences between them — the less we should update on the results.

(Although the BOTECs can still be useful as exercises for prompting richer discussions and clarifying the problems.)

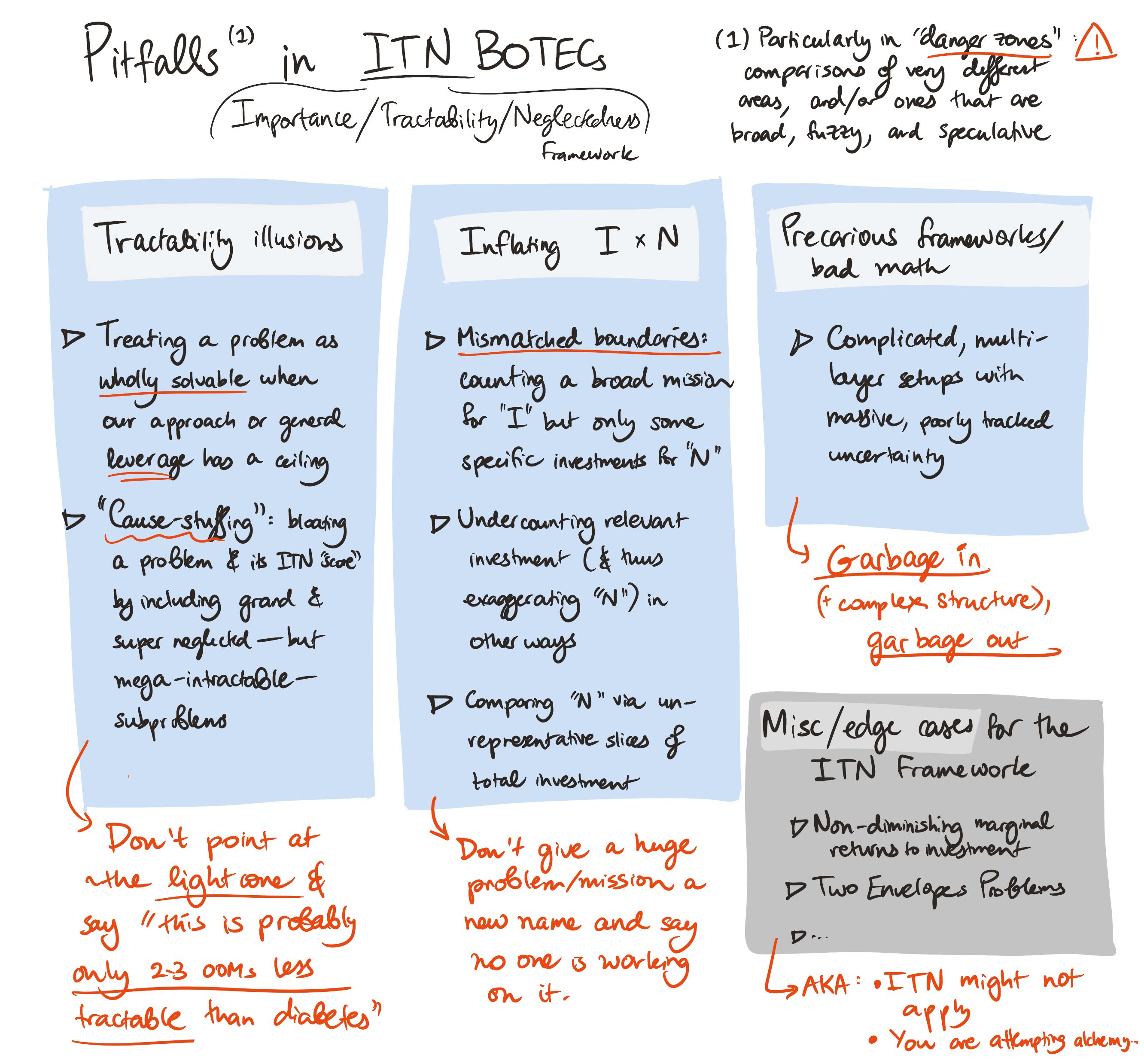

Specific pitfalls (outside the safe zone) include:

Illusions of tractability: ITN BOTECs may rely on (and obscure) unwarranted optimism about the fraction of a problem that we can realistically make progress on

→ Make sure the way you define the “problem” only covers things that contemporary humans can predictably affect or that the approach (implicitly) considered has leverage over, and take care when applying the "tractability doesn't differ that much" heuristic

Inflated neglectedness*importance: ITN estimates sometimes consider broad versions of the problem when estimating importance and narrow versions when estimating total investment for the neglectedness factor (or otherwise exaggerate neglectedness), which inflates the overall results

→ Be more explicit when outlining the problem areas & make sure to use consistent boundaries for different parts of the estimate, double check that you're not missing relevant work (including over time) for your estimates of the amount of investment in the space, and if you're estimating "neglectedness" via "slices" of investment instead of trying to account for everything, check how consistently representative those slices seem to be (particularly if the problems you're comparing are structurally quite different)

Notes on a few other ITN-specific pitfalls

(This collects links on (i) cases when the returns to investment are not our standard log-ish curves, (ii) the two envelopes problem, as it shows up in ITN estimates, and (iii) complications when using point estimates of problem difficulty.)

Precarious frameworks: ITN BOTECs suffer from all of the issues that plague any quantitative framework

→ Avoid complex, "spindly" models, do some sensitivity checks, sanity check your results by trying to approach the problem from a very different direction — including via non-ITN BOTECs — and generally try to update cautiously and only after poking at the model

Finally, I think ITN is simply not the best tool for every prioritization job, and I worry it's become a strong default or authority even when other approaches are more appropriate. For instance, it's sometimes better to just weigh the expected effects (and costs) of a potential intervention. (Or to sketch out what exactly an influx of resources in an area might accomplish and evaluate those outcomes.) Then we can find conversion factors to compare between our exciting options. Alternatively, we could focus more on heuristics like “relative to society, how much do I value this cause?” Or we could shift somewhat away from EV-focused calculations (for discussion see e.g., and more notes here). Etc.

1. Illusions of tractability

There's a useful rule of thumb that “Most problems fall within a 100x tractability range”. (See the footnote for a summary of the basic argument.[3])

But this kind of heuristic can get applied where it shouldn't be, sometimes in ways that are very hard to notice.

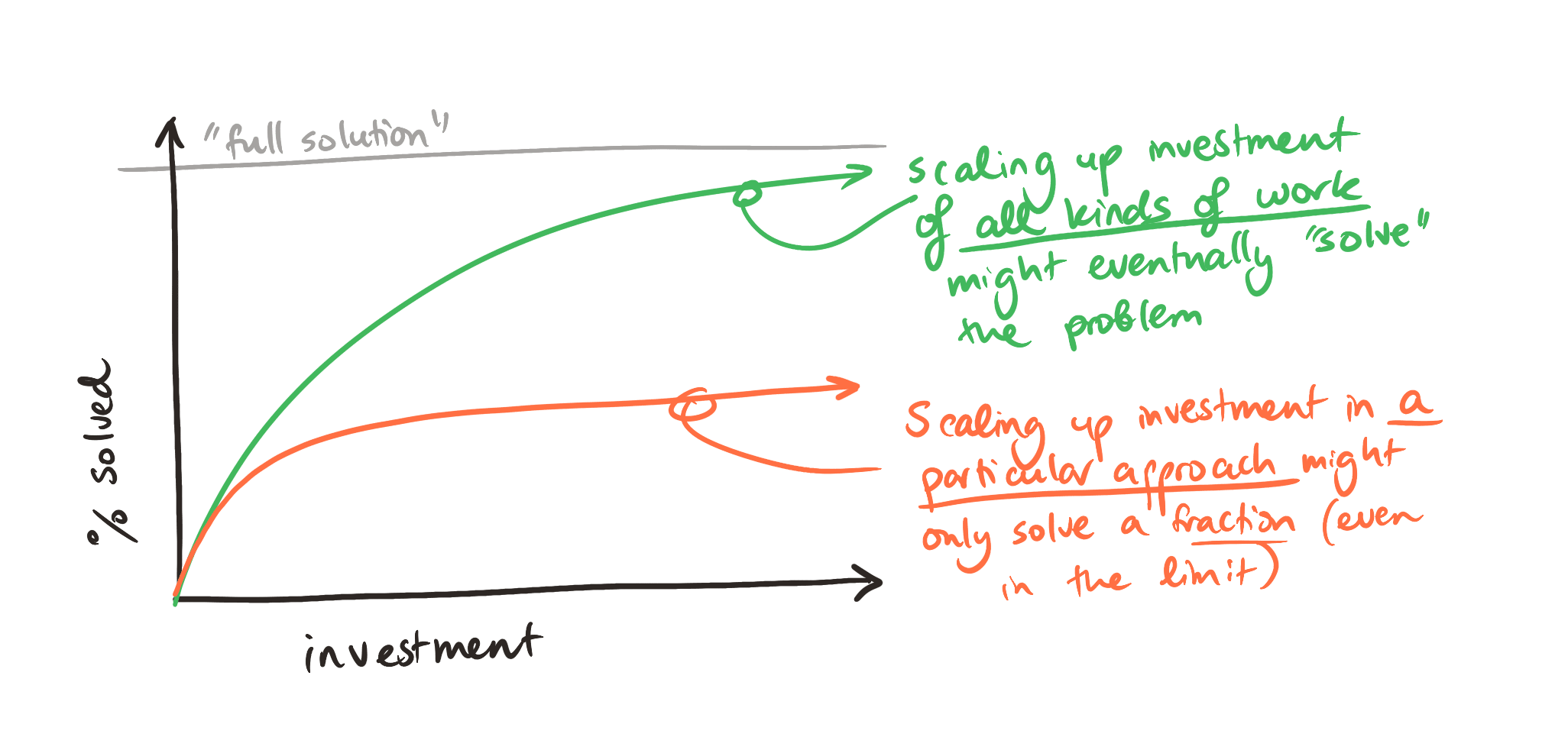

a. Hard upper limits; our leverage may only cover a small fraction of the problem we're hoping to solve

We sometimes use ITN to evaluate approaches (e.g. vaccines, alternative proteins, IIDM, AI alignment) instead of problems (people getting sick, farmed animal welfare, ...). This requires some care, as the approach we're considering may only be able to solve a small fraction of the underlying problem — even with unlimited investment. (For instance, I think no amount of investment in technical alignment research could reduce the risks of rogue AI agents making the Earth uninhabitable to humans to ~0.) In extreme cases, our approach could be a total dud.[4]

And simply sticking to “approach-agnostic” ITN BOTECs wouldn’t get us very far; the whole point of these estimates is directing additional resources to real-world areas of work.[5] So even if the BOTEC focuses exclusively on the underlying problems, whatever area will get extra investment may only ever be able to help with a small fraction of the problem and we’ve merely shifted the concern to a different point in the story.

This gets even trickier when we're dealing with a broad, speculative problem area. Even if we can list some interventions that could help, we (modern-day humans) may have no real ways of predictably helping with more than a tiny fraction of the problem space.[6] Any progress we manage to achieve might be limited in reach, making a difference only for specific sub-areas (e.g. in pockets of predictability). So although the problem may be solvable in theory, we really shouldn't expect that doubling investment would solve a nontrivial portion[7] of the overall space (as the tractability heuristic might suggest).

For instance, ITN BOTECs of problems like “(optimal) allocation of space resources” or “post-AGI non-human-animal suffering” might gesture to the sheer scale of the issue — the potential of many galaxies, an astronomical number of animals — and then appeal to the tractability-range heuristic and the causes' (real!) neglectedness to argue that we should invest more in them today. But the practical area of work that we can currently pursue may be fundamentally limited.

We can try to resolve this “hard upper limits” issue by zooming in on the accessible subset of the problem space (and penalizing our “importance” score to account for the change): i.e. redrawing the boundaries of the problem to count only the (expected) subset that, in the limit, seems solvable via modern methods / a chosen approach. Bypassing ITN entirely and trying to directly estimate the cost-effectiveness of an exciting-seeming project could also help, especially when the extent of our leverage is very uncertain.
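A toy version of the "hard upper limits" adjustment, as a minimal sketch: assume (hypothetically) that each doubling of investment solves a fixed fraction of whatever part of the problem our approach can reach, and that the rest stays out of reach however much we spend. The function name and all numbers below are made up for illustration; the point is only how the naive and boundary-corrected comparisons can flip.

```python
def value_per_doubling(importance: float, accessible_fraction: float,
                       fraction_per_doubling: float = 0.1) -> float:
    """Value gained from one doubling of investment (toy model).

    Hypothetical assumption: each doubling solves `fraction_per_doubling` of the
    *accessible* part of the problem; the inaccessible part never moves.
    """
    return importance * accessible_fraction * fraction_per_doubling

# A narrow, crisp problem: modest importance, fully within reach.
narrow = value_per_doubling(importance=100.0, accessible_fraction=1.0)

# A vast, speculative problem: astronomical importance, but (we assume) only
# 1 part in 100,000 of it is reachable with today's methods.
broad_true = value_per_doubling(importance=1e6, accessible_fraction=1e-5)

# Naive BOTEC: treat the whole problem as reachable and apply the
# "at most 100x less tractable" heuristic instead.
broad_naive = value_per_doubling(importance=1e6, accessible_fraction=1.0) / 100

print(broad_naive / narrow)  # naive: the broad problem looks ~100x better
print(narrow / broad_true)   # corrected: the narrow problem is ~10x better
```

Redrawing the boundaries here amounts to multiplying importance by the accessible fraction before applying the usual tractability reasoning.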

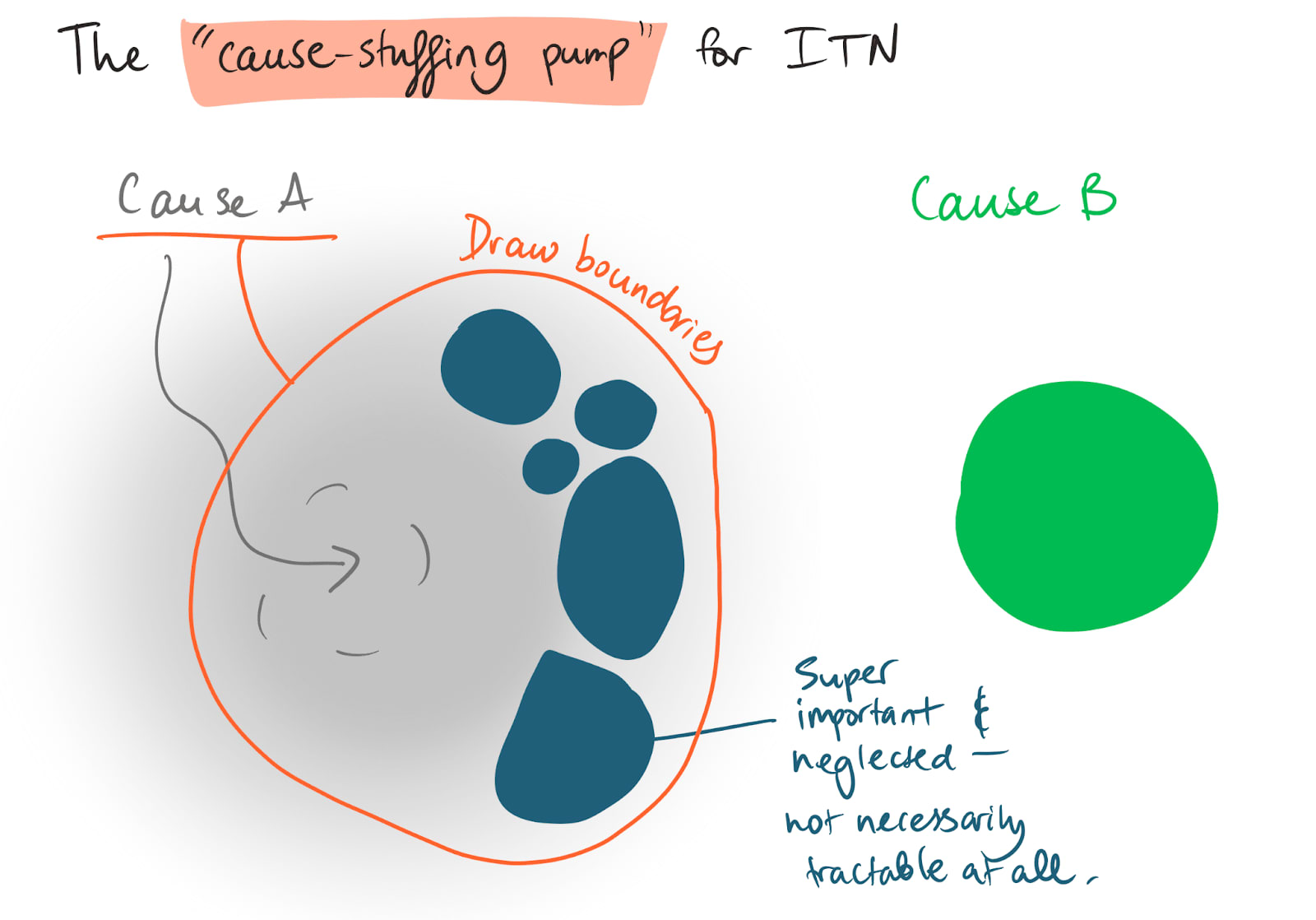

b. The "cause-stuffing pump" — or why the 100x heuristic can bias us towards wider (and/or vaguely defined) problem areas

A related issue can be illustrated by demonstrating how it can be exploited.

Let’s say I want to argue for Cause A, comparing it to Cause B. And let's assume that my initial ITN estimates come out roughly similar for A and B. Then I can pump A’s “ITN score” up by expanding its boundaries and applying the tractability-range heuristic:

The cause-stuffing pump - instructions

1. Find a bunch of problems in A’s vicinity — ideally ones that could arguably fall under A's broad mission — that are extremely neglected and collectively extremely high on “importance”
   - Let’s say that if we managed to solve them all, it’d be 1000x as valuable as solving A
   - Crucially, these problems don’t have to be at all tractable[8], and (unless we've stumbled on a gold mine) they'll probably be worse targets for extra investment than what we were originally considering in A
2. Now redraw the boundaries of A, making sure to include all those extra problems
   - For instance, if “A” was originally “vaccinate these 100 people”, then in Step 1 we might have identified 100,000 additional unvaccinated people who are extremely difficult to reach (e.g. they're in an active war zone), and in Step 2 we redefined A to include vaccinating the new group of people, too
3. Reap the benefits:
   - A quick-and-dirty ITN BOTEC for A would find that:
     - Its importance has gone up by ~1000x
     - We chose extremely neglected problems to add, so we didn’t really move A’s neglectedness
     - And we can apply our tractability heuristic and say that we’ve cut down tractability by, at most, 100x
   - → So marginal investment in A suddenly looks (at least) 10x better than it did before — despite the fact that it'd be going to the exact same target
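The arithmetic of the pump fits in a few lines. This is a minimal illustration, assuming the quick-and-dirty BOTEC scores a cause as the plain product importance × tractability × neglectedness; the function name `itn_score` and the numbers are hypothetical, chosen to mirror the steps above.

```python
def itn_score(importance: float, tractability: float, neglectedness: float) -> float:
    """Quick-and-dirty ITN score: the product of the three factors."""
    return importance * tractability * neglectedness

# Cause A as originally scoped (arbitrary units).
before = itn_score(importance=1.0, tractability=1.0, neglectedness=1.0)

# After stuffing: importance ~1000x; the added problems were extremely
# neglected, so neglectedness barely moves; and the 100x heuristic caps the
# assumed tractability penalty at 1/100.
after = itn_score(importance=1000.0, tractability=1 / 100, neglectedness=1.0)

print(after / before)  # 10.0, for marginal money going to the exact same target
```

Nothing about the marginal project changed; only the boundaries (and hence the importance multiplier) did.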

Is this just a theoretical concern?

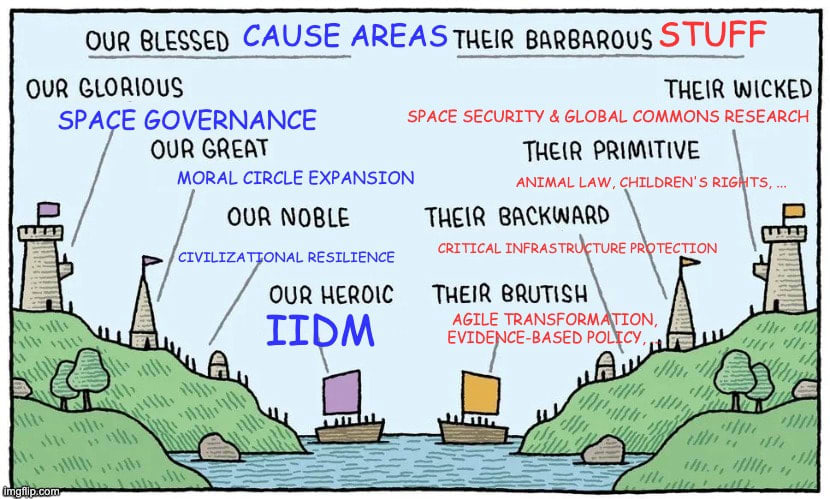

The setup above may seem contrived and adversarial, but the underlying dynamic shows up naturally (and in sneakier forms!) when we compare crisp, narrow problems with neglected-but-broad problems with blurry boundaries.

For instance, it’s easy to accidentally inflate ITN results for a field like “preparing for the intelligence explosion”, by imagining that a “solution” would ensure that non-Earth resources are allocated ideally from an impartial point of view (and forgetting to ask whether we have any plan of attack on that portion of the overall problem, or to fully account for the hit to tractability). There’s no need to assume manipulation to worry about the dynamic; the natural description of what “success” looks like for areas like “preparing for the IE” could easily include low-leverage, high-importance issues as sub-goals.[9]

(And we should be careful about responses here that amount to: “Sure, it's unlikely that we can affect the overall problem. But we might be able to, and the resulting EV is really high.” See links on two-envelopes-problem-like issues, below, and various discussions of Pascal's mugging.)

Some specific recommendations for avoiding the cause-stuffing pump:

- Be very careful with the 100x heuristic when we’re comparing problems of radically different breadth
  - Where possible, replace broader problem areas with sub-problems[10] that are more similar in scope to the other problem(s) in the ITN comparison
- More generally, try to always focus on the leverage we have
  - In particular, sometimes focusing on broader problems actually makes sense. The key question here is whether zooming out and focusing on the broader problem gives us an unusual amount of leverage, making it possible to make faster progress on the whole thing than we’d be able to do by focusing on a sub-domain. (More in this monster-footnote.[11])

(Related discussion in Why we look at the limiting factor instead of the problem scale, and parts of this recent post.)

2. Inflating neglectedness*importance

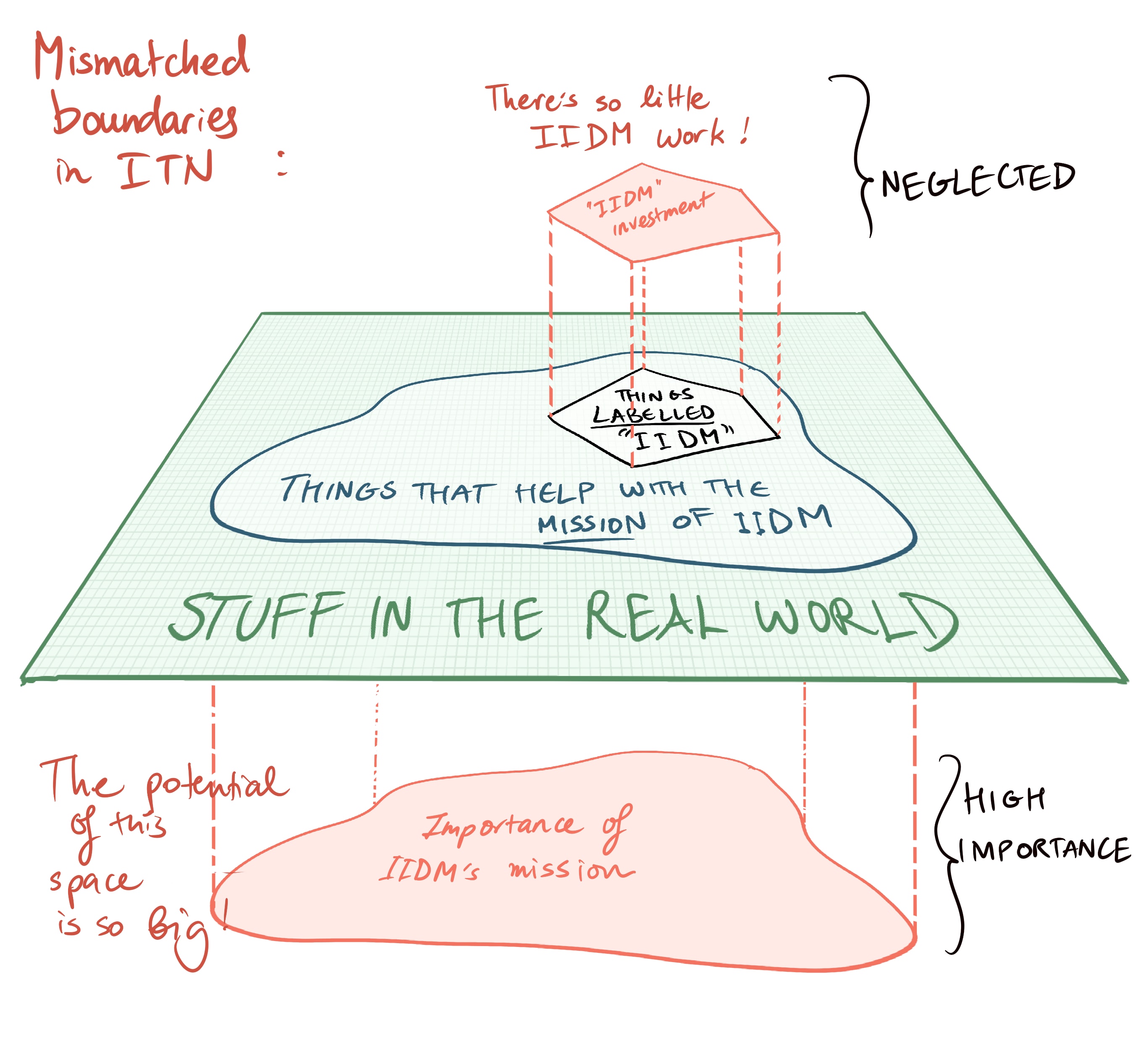

a. Mismatched boundaries

It's easy to artificially exaggerate “how I*T*N an area is” by considering a broad mission for its “importance”[12] while counting only specific kinds of work as “relevant investment” for the “neglectedness” factor.

Let's take “improving institutional decision-making” (IIDM) as an example. We might try to estimate a (baseline for) “importance” by asking how valuable it would be if key institutions were more sane (by some measure). We might then be tempted to estimate “neglectedness” in terms of the number of people working explicitly on “IIDM.”

But the resulting estimate would be very off. We’re ignoring all the other investment that helps improve the sanity of those institutions, so our guesses about the amount of low-hanging fruit left in the space will be overly optimistic. (You can picture this as noticing that we're further along the diminishing-returns curve than we initially thought we were, so the value of an extra unit of investment here will be lower than we expected.)
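To make the diminishing-returns picture concrete: under (assumed) logarithmic returns, the value of one extra unit of investment is roughly proportional to 1 / (total investment so far). So if we count only work explicitly labelled "IIDM" when the true total is much larger, we overestimate the marginal value by about the ratio of the two totals. The function and the numbers below are hypothetical.

```python
import math

def marginal_value(total_investment: float, extra: float = 1.0, scale: float = 1.0) -> float:
    """Value of `extra` more units, assuming log returns V(R) = scale * ln(R)."""
    return scale * (math.log(total_investment + extra) - math.log(total_investment))

# Hypothetical: 100 units of work explicitly labelled "IIDM", plus 9,900 units
# of other work that also makes the same institutions saner.
counted_only_iidm = 100.0
all_relevant_work = 100.0 + 9_900.0

naive = marginal_value(counted_only_iidm)
corrected = marginal_value(all_relevant_work)
print(f"naive estimate is ~{naive / corrected:.0f}x too optimistic")
```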

(Like before, this pitfall is harder to avoid when the problem’s boundaries are blurrier.)

To guard against this issue, I recommend indexing the problem's definition on the thing you want to count as “relevant investment”; take whatever method/approach you want to consider for “neglectedness” and use that to specify the problem's overall boundaries (which in turn will get used to estimate the area's potential impact). For instance, we could define the mission of preparing for the intelligence explosion as “better understand the issues a potential intelligence explosion would bring and advance AI macrostrategy research” instead of “prepare for the intelligence explosion” — and then penalize “importance” to account for the narrowed focus. (This is related to the “hard upper limits” discussion above.)

But we can basically just choose which of I, T, or N we want to try to adjust to fit with the other two.[13] The point is consistency.

And simply explicitly describing the problem's boundaries can help a lot.

b. Other ways “neglectedness” gets exaggerated or skewed

An undercurrent in the above is that it’s quite easy to think a problem area is more neglected than it is, because of things like:

- Methodology myopia: Counting only a specific kind of approach to making progress on the problem (often the approach that falls under the sticker label we give the problem)

- Insider’s curse: Being ignorant of investment from sources that we’re less familiar with

- Presentism:

These biases are more potent for certain kinds of problems. We should take extra care when, for instance:

- Blurry boundaries — many very different kinds of work could be contributing non-trivially, but it's kinda unclear

- The network/population you're familiar with accounts for a smaller fraction of the relevant investment

- You're exploring what seems like a niche, relatively new methodology (and you wouldn't immediately notice if other types of work contribute a lot)

- The problem is a globally relevant future challenge that appears to be gaining traction/attention, or one that'll likely get automated as quickly as it can be

- ...so it'll likely get a lot of future investment — although be careful with your windows of opportunity

(See also this list of ways in which cost estimates can be misleading.)

Note: We can also underappreciate the “neglectedness” of an area.

Some popular areas of work might have highly neglected (and important) subareas. To evaluate such cases, we can simply introduce the subarea as a standalone problem and focus on that for both “importance” and “neglectedness”.[15]

Alternatively, you might be exploring a new angle/approach on an established problem. If we treated investments from the new angle as “more of the same”, we'd effectively be ignoring the possibility of newly-unlocked low-hanging opportunities. But we can't just pretend like investment from the old angles didn't exist and act as if we're dealing with a green field; some of the fruit have in fact been picked. Indexing the problem (or “opportunity”) on the specific angle and its potential could help. For instance, an ITN-style BOTEC of fracking could focus on newly accessible deposits of oil/natural gas.[16]

(For more, see this post on highly neglected solutions to less-neglected problems.)

Finally, it's also worth remembering that a problem that's getting a large amount of discussion may not be getting significant amounts of investment.

c. Using unrepresentative “slices” of total investment for comparisons of problems' overall “neglectedness”

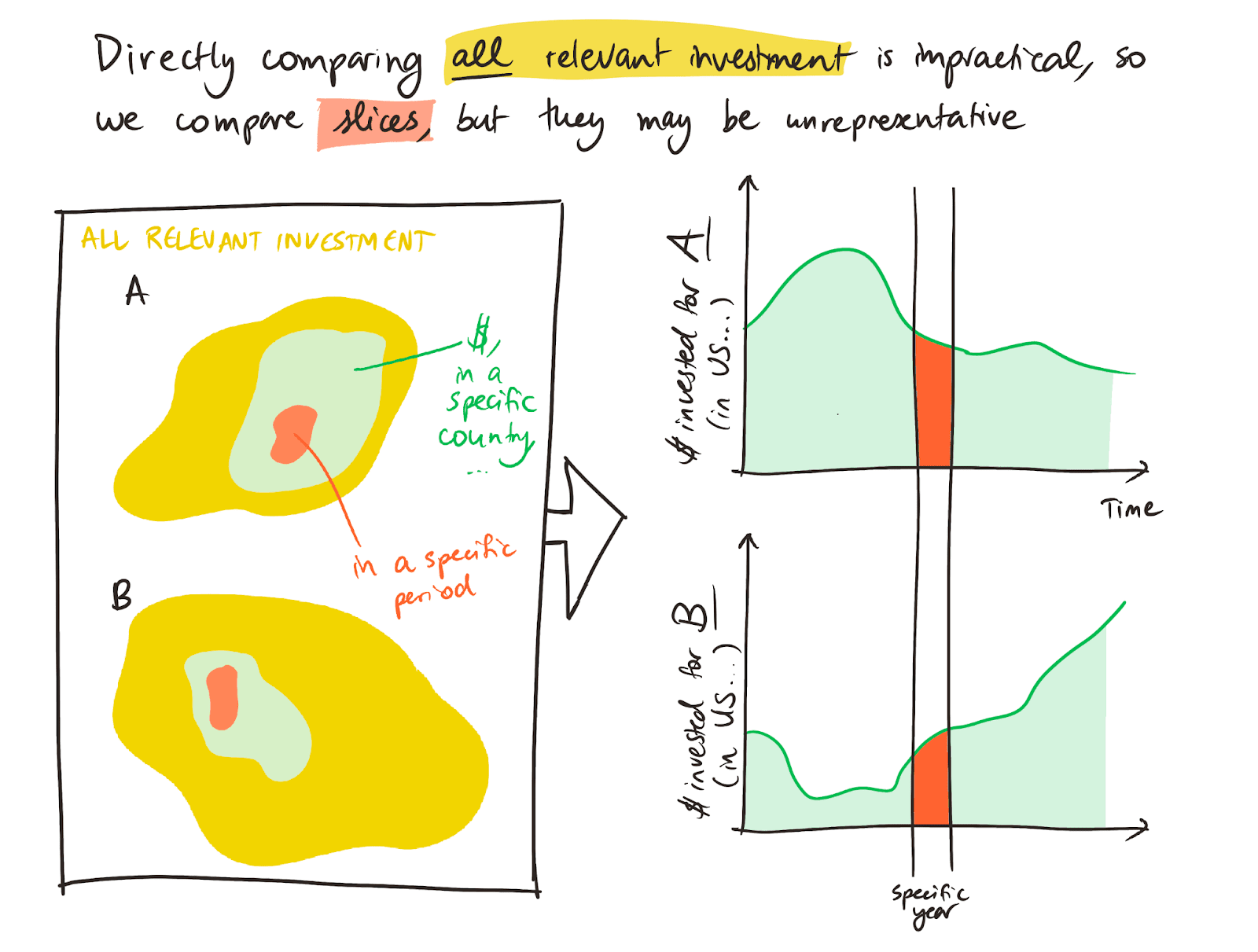

A variant on the “mismatched boundaries” issue shows up in relative ITN BOTECs. (“How much more ‘ITN’ is A than B? Well, A is 2x more ‘important’ than B ...”)

Instead of comparing total relevant investment (of all kinds, across the world, etc.) to get the relative “neglectedness” of some problems, we often look at slices of investment, like money invested in a particular year, from a particular country. (E.g. “in 2023 in the US, there was [N] times less money spent on farmed animal welfare than there was on wildlife conservation”.) This often makes sense, since accounting for literally all relevant investment everywhere and across all time is not feasible.

But naively extrapolating from comparisons of such slices to overall “neglectedness” ratios can skew our results if the slices aren't similarly representative. In particular, the slicing we choose may account for a much smaller fraction of “all relevant investment” for one of the areas in question than for the other(s).

For instance, it might be the case that:

- ...the US is an outlier for one of the problems (e.g. because Americans care an unusual amount about one of the issues), so shifting to a global view would change the ratio

- ...one of the problems gets a lot more non-financial investment (e.g. relevant volunteering)

- ...investment is growing or falling rapidly for one of the areas (or otherwise fluctuating by year, such that e.g. 2023 isn’t representative)

Extrapolating from slice-based comparisons is generally safer for problem areas that are of a similar type (since investment will fluctuate similarly across time, vary less idiosyncratically by country or type of investment, etc.).
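A minimal sketch of why unrepresentative slices skew the comparison: if a slice captures a different share of each area's total investment, the slice ratio equals the true ratio multiplied by the ratio of those shares. The function name and the shares below are made up for illustration.

```python
def slice_ratio(total_a: float, total_b: float, share_a: float, share_b: float) -> float:
    """Investment ratio A:B as seen through one slice (e.g. one country, one year).

    share_a and share_b are the fractions of each area's *total* relevant
    investment that the slice captures. The result equals the true ratio
    total_a / total_b multiplied by share_a / share_b.
    """
    return (total_a * share_a) / (total_b * share_b)

# Hypothetical: equal total investment worldwide, but the US slice captures
# 60% of area A's investment and only 10% of area B's.
true_ratio = 1_000.0 / 1_000.0
us_ratio = slice_ratio(1_000.0, 1_000.0, share_a=0.6, share_b=0.1)
print(true_ratio, us_ratio)  # the slice makes A look ~6x less neglected than B
```

When the two areas are of a similar type, share_a and share_b are more likely to be close, so the distortion factor stays near 1.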

3. “Edge cases” & other issues with ITN

Applying the ITN framework can mislead us in other ways, too. Here are quick notes and links on a few additional ones (this is still not exhaustive):

- i) ITN assumes diminishing returns to investment, and sometimes that’s not actually appropriate. See e.g.:

- Concave and convex altruism

- Additionally, even if we expect that returns to investment are generally log-ish, returns might be different on the scale on which you’ll realistically operate

- Related: A cause can be too neglected

- ii) The "two envelopes problem" can show up in ITN estimates when we're trying to evaluate “importance” via ratios/relative values

- See this recent post, more discussion from Brian Tomasik here, and this thread from Carl Shulman

- (This post on argmax & content linked in it might also be useful, as might this one)

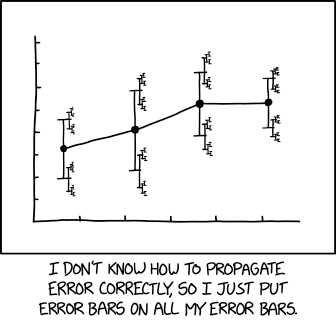

- iii) And using point estimates instead of distributions can also get messy

- See, for instance, Beware point estimates of problem difficulty
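For item ii), a minimal illustration of how the two-envelopes problem can appear when "importance" is estimated as a ratio. Suppose (hypothetically) we put equal credence on two symmetric hypotheses: A is 10x as important as B, or B is 10x as important as A. Taking the expectation of the ratio then makes whichever area sits in the numerator look about 5x as important as the other.

```python
from fractions import Fraction

# Two equally likely hypotheses about relative importance (hypothetical):
# H1: A is 10x as important as B;  H2: B is 10x as important as A.
ratios_a_over_b = [Fraction(10), Fraction(1, 10)]
p = Fraction(1, 2)

expected_a_over_b = sum(p * r for r in ratios_a_over_b)
expected_b_over_a = sum(p * (1 / r) for r in ratios_a_over_b)

print(expected_a_over_b, expected_b_over_a)  # 101/20 101/20 (i.e. 5.05 each)
```

Both expectations exceed 1, so the answer depends on which area we treat as the unit; the linked discussions cover ways of handling this.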

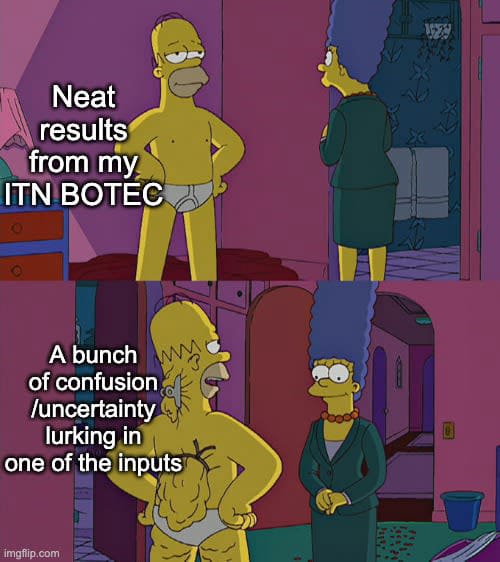
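For item iii), one way to see the concern (a sketch, not necessarily the linked post's exact argument): with a single point estimate of difficulty, extra resources do nothing until they cross the threshold, whereas wide uncertainty over difficulty (here assumed log-uniform) makes each doubling add a roughly constant amount of probability, i.e. roughly log returns. The functions and the range are hypothetical.

```python
import math

def p_solved_point(resources: float, difficulty: float) -> float:
    """Point estimate: solved if and only if resources reach the assumed difficulty."""
    return 1.0 if resources >= difficulty else 0.0

def p_solved_loguniform(resources: float, low: float, high: float) -> float:
    """P(solved) if difficulty is log-uniformly distributed on [low, high]."""
    if resources <= low:
        return 0.0
    if resources >= high:
        return 1.0
    return (math.log(resources) - math.log(low)) / (math.log(high) - math.log(low))

# Hypothetical: best guess for difficulty is 1e6 units, but we are unsure
# across six orders of magnitude (1e3 to 1e9).
for r in (1e4, 2e4, 4e4):
    print(f"{r:>8.0f}  point: {p_solved_point(r, 1e6):.2f}  "
          f"distribution: {p_solved_loguniform(r, 1e3, 1e9):.3f}")
```

Under the point estimate, going from 1e4 to 4e4 changes nothing; under the distribution, each doubling adds ln 2 / ln 1e6 (about 0.05) to the probability of success.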

4. Precarious frameworks & the garbage-in, garbage-out effect

A final type of pitfall is not specific to ITN estimates, but seems to show up a lot in that context: over-updating on the results of precarious, complicated, and untested models.

Any quantitative framework suffers from the “garbage in, garbage out” problem.[17] It’s really easy to rely on a chain of biased inputs and decisions about how to combine them, such that the ultimate result is systematically skewed (or extremely noisy). Even when we try to track uncertainty, model uncertainty can dwarf the estimated uncertainty in the model — which is especially likely when we're dealing with low probabilities and high stakes.

As a general rule, the less grounded an input (or a decision about how to combine inputs) is, the more wiggle room our biases will have to fudge the process and skew the results. Moreover, BOTEC results can be very sensitive to a single input or logical step.

We should worry more if our result depends on the product of many sub-estimates. The uncertainty/noise around each will ~multiply, it’s easy for the inputs to be off in a correlated way, and the way we've set up the model might imply something about the distributions they're drawn from that we do not endorse.[18] This suggests we should, all else equal, favor simpler models with fewer layers.
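A small Monte Carlo sketch of how noise compounds in a multiplicative chain. It assumes (hypothetically) that each factor is off by a lognormal error with a standard deviation of 0.5 orders of magnitude; with independent errors the spread of the product grows like the square root of the number of factors, and in the extreme case where all factors share the same error it grows linearly.

```python
import random
import statistics

def log10_spread_of_product(n_factors: int, sigma: float, correlated: bool,
                            trials: int = 20_000, seed: int = 0) -> float:
    """Std. dev. (in orders of magnitude) of a product of n noisy factors.

    Each factor's error is lognormal: log10(error) ~ Normal(0, sigma).
    If `correlated`, every factor shares one error draw (the extreme case
    of, e.g., one bias pushing all inputs the same way).
    """
    rng = random.Random(seed)
    logs = []
    for _ in range(trials):
        if correlated:
            total = n_factors * rng.gauss(0.0, sigma)
        else:
            total = sum(rng.gauss(0.0, sigma) for _ in range(n_factors))
        logs.append(total)
    return statistics.stdev(logs)

for n in (1, 3, 6):
    indep = log10_spread_of_product(n, sigma=0.5, correlated=False)
    corr = log10_spread_of_product(n, sigma=0.5, correlated=True)
    print(f"{n} factors: ~{indep:.2f} OOM (independent), ~{corr:.2f} OOM (correlated)")
```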

And the more complex the framework, the harder it is for us or others to notice the weakest links and stay calibrated about the results' strength.

So if you're relying on an ITN BOTEC, I fairly strongly suggest that you:

- Check how trustworthy the BOTEC setup seems, understand its assumptions

- Do some stress tests & sensitivity checks — e.g. see what happens when you tweak your setup or inputs

- Don't just vary parameters randomly; think about what perspectives or worldviews would systematically push multiple parameters in the same direction

- Check how tethered the concepts/inputs that feed into it are

- (Can you actually imagine success? Can you picture what "doubling" would mean, where that effort would go?)

- Sanity check the conclusion by approaching it from a very different direction — e.g. by constructing a very different kind of estimate (see footnote[19])
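A minimal sketch of the sensitivity checks above, for a hypothetical relative BOTEC ("how many times better is A than B?") whose inputs are made up. Varying one input at a time leaves the conclusion looking robust, while a worldview that pushes all three inputs the same way at once turns it into roughly a wash.

```python
def botec_ratio(inputs: dict) -> float:
    """Hypothetical relative ITN BOTEC: how many times better is A than B?"""
    return (inputs["importance_ratio"] * inputs["tractability_ratio"]
            * inputs["neglectedness_ratio"])

baseline = {"importance_ratio": 20.0, "tractability_ratio": 0.5,
            "neglectedness_ratio": 3.0}

def one_at_a_time(inputs: dict, factor: float = 3.0) -> dict:
    """Scale each input down and up by `factor`, holding the others fixed."""
    results = {}
    for name, value in inputs.items():
        low = botec_ratio({**inputs, name: value / factor})
        high = botec_ratio({**inputs, name: value * factor})
        results[name] = (low, high)
    return results

# A more skeptical worldview might push all three inputs the same way at once.
skeptic = {name: value / 3.0 for name, value in baseline.items()}

print(botec_ratio(baseline))    # baseline: A looks ~30x better
print(one_at_a_time(baseline))  # each single tweak still leaves A >= ~10x better
print(botec_ratio(skeptic))     # correlated shift: roughly a wash (~1.1x)
```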

See also

Why We Can't Take Expected Value Estimates Literally (Even When They're Unbiased), an older post on ways in which cost-effectiveness estimates can be misleading, Many Weak Arguments vs. One Relatively Strong Argument, Potential downsides of using explicit probabilities,[20] and Do The Math, Then Burn The Math and Go With Your Gut.

Less related to "garbage-in, garbage-out", but still very relevant for this post: On category I and category II problems.

Thanks!

Thanks to Owen Cotton-Barratt, Max Dalton, Will MacAskill, Fin Moorhouse, Toby Ord, and various others for useful comments and feedback on an earlier draft and conversations that led to this post. (Any mistakes are mine, and these folks may disagree with some of what I've written here.)

[1]

ITN is a framework for estimating the value of allocating marginal resources to a given problem area, based on the area's importance (how valuable making some amount of progress is), tractability (how much progress we’d make on solving this problem by increasing resources devoted to it), and neglectedness (a factor that corresponds to the reciprocal of total investment, or: "if we add one unit of resources, how much, in relative terms, will total investment in this area grow?").

The ITN framework is basically a way to leverage the phenomenon of diminishing returns to investment — the fact that making the same amount of progress on solving a problem tends to be harder further down the line, once we’ve invested more resources in the problem. This shouldn’t be too surprising; if we assume that earlier waves of investment have been picking the lower-hanging fruit, then what's left should be harder to pursue/solve. Specifically, it turns out we can roughly model the “return on investment” via a log-like curve. (Although you can generally use whatever diminishing-returns function you think is most appropriate given your context. See isoelastic utility.)

Visually, you can imagine that we’ve got some general shape for a curve that models our diminishing returns to further investment. Then you can picture ITN as asking us to pick & input: (1) for importance, something like a scalar/bound/point that tells us how high up the Y-axis this curve should reach [at a given point in time], (2) tractability can either be seen as determined by the curve itself or considered as an optional ~penalty/adjustment on the ~slope for unusually slow/rapid progress [around a specific point, perhaps], and (3) neglectedness is determined by the total investment that’s gone into this area so far; i.e. where we are on the X-axis.

(I’ll flag that I think this way of thinking about “tractability” is somewhat messy, and generally prefer to mostly just consider the shape of the curve.)

[2]

I should flag that while I've spent a decent amount of time thinking about ITN (especially relative to the average person), most of that has been in the form of "side-quests" and I would not call myself an ITN / cause prioritization expert.

(Honestly, in many ways this post was written as a document recent-past-Lizka would have appreciated and benefited from.)

[3]

My rough summary of the core argument in the post (which seems sound) is:

Let's assume roughly logarithmic returns to investment; i.e. each doubling of resources solves approximately the same additional fraction of a given problem in expectation (a significant but pretty reasonable assumption).

Then notice that a problem (A) being an order of magnitude less tractable than some "baseline" means it takes 10 doublings (a ~1000x scale-up of resources) to make as much (relative) progress on that problem as we'd make by merely doubling resources for a normal-tractability problem.

That already seems like a very unusually low level of tractability; scaling up investment on a given problem by 1000x seems like it would usually give us good odds of solving the problem (i.e. would solve more than half the problem in expectation). (This ~claim will also be used below.) (The post references examples like starting a Mars colony or solving the Riemann hypothesis to suggest that most problems that are solvable in theory seem at least as tractable as this.)

To get more extreme differences (two OOMs or more) in tractability, then, we can either find even more intractable problems (that are still solvable in theory; this seems hard), or look for unusually tractable problems on the other end of the comparison. But for a problem (B) to be >10x more tractable than is "normal", a single doubling of investment in B would have to accomplish more than a ~1000x scale-up does for a baseline problem. Since a 1000x scale-up usually solves most of a baseline problem (see the above note), that means doubling investment in B would solve most of B in expectation. As the post notes: "It's rare that we find an opportunity more tractable than this that also has reasonably good [importance] and neglectedness."
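The doubling arithmetic in this footnote, as a small sketch under the same log-returns assumption (the function name is hypothetical): if a problem is k times less tractable, each doubling achieves 1/k as much, so matching one baseline doubling takes k doublings, i.e. a 2^k scale-up of resources.

```python
def scale_up_to_match_one_doubling(tractability_gap: float) -> float:
    """Resource multiplier a `tractability_gap`-times-less-tractable problem needs
    to make the same relative progress as one doubling of a baseline problem.

    Assumes log returns: each doubling solves a fixed fraction of a problem, and
    a problem that is k times less tractable solves 1/k as much per doubling,
    so it needs k doublings.
    """
    return 2.0 ** tractability_gap

print(scale_up_to_match_one_doubling(10))   # 1024.0, the "~1000x" above
print(scale_up_to_match_one_doubling(100))  # ~1.3e30
```

The second line shows why gaps of 100x or more are hard to find among problems that are solvable at all: matching a single baseline doubling would take on the order of 10^30 times the resources.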

[4]

or be insanely inefficient, and thus violate the tractability-range heuristic above

[5]

These areas of work might be fuzzier and more portfolio-like (diverse) than the "methodologies" listed as examples above, but they're still ultimately practical approaches whose viability depends on more than whether the underlying problems are, in theory, solvable.

[6]

See some discussion of this kind of concern here: A_Note_on_Pascalian_Wagers

[7]

if we weren't worried about these dynamics and quickly applied the tractability heuristic, we'd expect each doubling to be at least 1/100th as good at solving the overall problem as doubling investment in a space like farmed animal welfare

[8]

(It’s hard to find extremely important, tractable, neglected problems! We can pick stuff like “perfectly allocate all resources past the Earth” without worrying about whether we have any way of making progress on those issues.)

[9]

I personally have done this kind of thing in some docs in the past, for this exact field (inadvertently!)

[10]

(ideally your best guess at the sub-area within the broad problem that’s actually most promising / where you would actually direct resources if you were scaling investment)

[11]

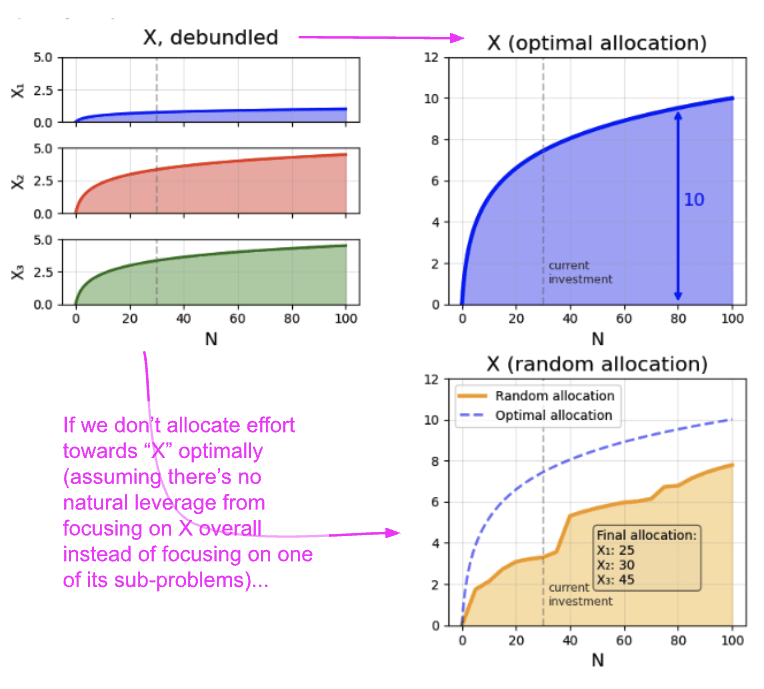

In particular, let’s start by assuming that our broad area X (e.g. “preparing for the IE”) does not have synergies / cross-cutting interventions — such that we can basically just treat it as a bundle of distinct sub-domains. Then for the assumptions underpinning ITN to hold, we need to believe that ~each additional unit of investment in the overall bundle will be allocated optimally across the sub-domains. If we assumed, instead, that additional units would be allocated randomly across the areas, then even if investment in each of those areas follows a standard log curve, overall progress would look a fair bit worse (see the yellow curve in the figure above).

(If we knew investment would go towards the single most promising sub-area, I think we should just focus on that sub-area.)
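A minimal simulation of the optimal-versus-random allocation point, under made-up assumptions: each sub-domain i yields values[i] × ln(1 + investment) (log returns, with zero investment giving zero progress), and the bundle mixes a few valuable sub-domains with many low-value ones. Greedy marginal allocation is optimal for this kind of separable concave setup, so it always does at least as well as random allocation of the same budget.

```python
import math
import random

def progress(values, investments):
    """Total progress when sub-domain i yields values[i] * ln(1 + investments[i])."""
    return sum(v * math.log1p(x) for v, x in zip(values, investments))

def allocate_greedy(values, budget):
    """Give each unit to the sub-domain with the highest marginal gain."""
    inv = [0] * len(values)
    for _ in range(budget):
        gains = [v * (math.log1p(x + 1) - math.log1p(x)) for v, x in zip(values, inv)]
        inv[gains.index(max(gains))] += 1
    return inv

def allocate_random(values, budget, seed=0):
    """Give each unit to a uniformly random sub-domain."""
    rng = random.Random(seed)
    inv = [0] * len(values)
    for _ in range(budget):
        inv[rng.randrange(len(values))] += 1
    return inv

# Hypothetical bundle: two high-value sub-domains and 18 low-value ones.
values = [10.0, 5.0] + [0.1] * 18
for budget in (10, 100, 1000):
    best = progress(values, allocate_greedy(values, budget))
    rand = progress(values, allocate_random(values, budget))
    print(f"budget {budget:>4}: optimal {best:.1f} vs random {rand:.1f}")
```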

However, sometimes there’s real leverage; zooming out and focusing on a broader problem allows us to make progress faster than we could by focusing on any of the sub-problems (even if we allocated investment perfectly). For “preparing for the IE” I do think there are a number of strategies/issues that the sub-problem-focused approach would miss (and I in fact think “preparing for the IE” is more pressing right now, although mostly for non-ITN-BOTEC reasons). (But I think it’d be better to pull that synergy-oriented cluster of work — a small fraction of all work relevant to preparing for the IE — out specifically and use that for ITN-style comparisons.)

We can think of this in terms of what’s happening under the hood of the ITN framework. ITN leverages the general pattern of “diminishing returns” of investment in any given area — solving the same fraction of a given problem tends to be harder further down the line, since earlier investment likely already took care of the lowest-hanging fruit. Conversely, we’re quite likely to find unusually good opportunities (gold) to help when exploring a new area or new approach. (And it turns out we can roughly model the “return on investment” via a log-ish curve.)

When zooming out gives us more leverage, we can basically treat the zoomed-out version of the problem as a new, distinct area in which we may encounter gold others have missed. (Or a way to get a good vantage point on a massive field in which others are digging, to notice patterns, etc. that could help uncover better strategies...)

[12]

As one specific example of this in the wild (picked randomly, not because it’s unusually bad on this front, tbc!): gesturing at the potential astronomical scale of what space governance could affect

[13]

The other two:

Adjust our neglectedness estimate to account for all investment that helps the problem’s broad mission (and use standard heuristics for “tractability”)

Try to massively penalize tractability — viewing it as the ability of the specific methodology/reference class we considered for “neglectedness” to make progress on solving the overall mission of the problem

[14]

which, for problems that will manifest in the future, could ramp up and arrive early enough to make a real difference...

(Thanks to Will for this point!)

[15]

For example, if you think that overall, there's a reasonable amount of investment in “nuclear risk” (given its total importance), but that nuclear winter specifically is unusually neglected, you can just define your problem as “mitigating the harms of a potential nuclear winter, assuming a significant exchange occurs” and focus on that.

[16]

focusing specifically on the extraction of natural gas

[17]

Every input in a BOTEC is a noisy estimate of some “true” value, and basically every “step” in the calculation (how you put “inputs” together) will further distort the true signal carried by the inputs (or lose some of it).

[18]

See Dissolving the Fermi Paradox for a demonstration of how much can change when a model like this is replaced by probability distributions.

[19]

One way to get more stable estimates is to take an “input-search-first” approach to setting up the BOTEC framework, instead of starting with a general framework and looking for ways to estimate the inputs it demands. I.e. cast around to notice “foothold” inputs that are harder to mess with, or for which we have better intuitions and taste. Then see if you can chart a path through those via logical steps you basically trust to produce an estimate for the question you’re interested in. (Related discussion/advice/tips here.)

This type of approach means each input will generally be more grounded. It also often makes it easier to avoid long multiplicative chains; when we don’t take the input-first approach we often end up having to sneak in “sub-BOTECs” for the supposedly bottom-line inputs like “amount of investment” (adding extra layers and injecting more noise), or sometimes reuse inputs in multiple places (making the bottom-line results more sensitive to mistakes in those inputs).

The input-first approach might involve being flexible about the kind of end-estimate you hope to produce, and might not always look like an ITN BOTEC.

For instance, if we started out hoping to produce one ITN BOTEC for marginal investment in “preparing for the intelligence explosion” and a separate one for something like “alignment”, we might find that we have more footholds for comparing projects in this space to some intermediate thing, or for pursuing a non-ITN BOTEC for something like “if we were to try setting up a research group focused on preparing for the intelligence explosion, how much value would it produce within the next three years relative to [some comparable project], in expectation?”

- ^

Edited the post to add this when I came across it (again). Might add other links opportunistically again in the future, without adding new footnotes.

OllieBase @ 2025-08-13T08:08 (+8)

This is a great post!

> ITN estimates sometimes consider broad versions of the problem when estimating importance and narrow versions when estimating total investment for the neglectedness factor (or otherwise exaggerate neglectedness), which inflates the overall results

I really like this framing. It isn't an ITN estimate, but a related claim I think I've seen a few times in EA spaces is:

"billions/trillions of dollars are being invested in AI development, but very few people are working on AI safety"

I think this claim:

- Seems to ignore large swathes of work geared towards safety-adjacent things like robustness and reliability.

- Discounts other types of AI safety "investments" (e.g., public support, regulatory efforts).

- Smuggles in a version of "AI safety" that actually means something like "technical research focused on catastrophic risks motivated by a fairly specific worldview".

I still think technical AI safety research is probably neglected, and I expect there's an argument here that does hold up. I'd love to see a more thorough ITN on this.

Lizka @ 2025-08-20T13:39 (+4)

Yeah, this sort of thing is partly why I tend to feel better about BOTECs like (writing very quickly, tbc!):

What could we actually accomplish if we (e.g.) doubled (the total stock/flow of) investment in ~technical AIS work (specifically the stuff focused on catastrophic risks, in this general worldview)? (You could broaden if you wanted to, obviously.)

Well, let's see:

- That might look like:

- adding maybe ~400(??) FTEs similar (in ~aggregate) to the folks working here now, distributed roughly in proportion to current efforts / profiles, plus the funding/AIS-specific infrastructure (e.g. institutional homes) needed to accommodate them

- E.g. across intent alignment stuff, interpretability, evals, AI control, ~safeguarded AI, AI-for-AIS, etc., across non-profit/private/govt (but in fact aimed at loss of control stuff).

- How good would this be?

- Maybe (per year of doubling) we'd then get something like a similar-ish value from this as we get from a year of the current space (or something like 2x less, if we want to eyeball diminishing returns)

- Then maybe we can look at what this space has accomplished in the past year and see how much we'd pay for that / how valuable that seems...

- (What other ~costs might we be missing here?)
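The arithmetic in this sketch can be written out as below. The ~400 FTEs and the "similar-ish to 2x less" range come from the comment; normalizing the current field's past-year output to 1 is a hypothetical choice, so the results read as "fractions of what the field produced last year", which you'd then compare against what you'd pay for that output and against the costs of the added people and infrastructure.

```python
def doubling_value_range(current_year_value, returns_discounts=(0.5, 1.0)):
    """Value from one year of doubling the field, for each diminishing-returns discount.

    A discount of 1.0 means the added people produce about as much as the current
    field did last year; 0.5 means "something like 2x less".
    """
    return tuple(current_year_value * d for d in returns_discounts)


def value_per_added_fte(current_year_value, added_ftes, returns_discounts=(0.5, 1.0)):
    """The same range, divided by the number of added FTEs."""
    return tuple(
        v / added_ftes for v in doubling_value_range(current_year_value, returns_discounts)
    )


if __name__ == "__main__":
    current_year_value = 1.0  # hypothetical normalization: last year's output = 1 unit
    added_ftes = 400          # the comment's rough "~400(??)" guess
    print("value per year of doubling:", doubling_value_range(current_year_value))
    print("value per added FTE-year:", value_per_added_fte(current_year_value, added_ftes))
```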

You might also decide that you have much better intuitions for how much we'd accomplish (and how valuable that'd be) on a different scale (e.g. adding one project like Redwood/Goodfire/Safeguarded AI/..., i.e. more like 30 FTEs than 400 — although you'd probably want to account for considerations like "for each 'successful' project we'd likely need to invest in a bunch of attempts/ surrounding infrastructure..."), or intuitions about what amount of investment is required to get to some particular desired outcome...
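One way to account for needing several attempts (plus surrounding infrastructure) per successful project is to divide by a success rate. A minimal sketch, assuming attempts succeed independently with the same probability p, so we expect 1/p attempts per success. The ~30 FTEs per project is the comment's rough figure; the 1-in-4 success rate and the overhead are hypothetical placeholders.

```python
def cost_per_success(cost_per_attempt, success_rate, overhead_per_attempt=0.0):
    """Expected cost of getting one successful project.

    Assumes independent attempts with the same success probability, so we expect
    1 / success_rate attempts per success. `overhead_per_attempt` is a hypothetical
    stand-in for surrounding infrastructure costs.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return (cost_per_attempt + overhead_per_attempt) / success_rate


if __name__ == "__main__":
    # ~30 FTEs per attempt (from the comment), hypothetical 1-in-4 success rate.
    print(cost_per_success(30, 0.25))
    # Same, with a hypothetical 5 FTEs of supporting infrastructure per attempt.
    print(cost_per_success(30, 0.25, overhead_per_attempt=5))
```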

Or if you took the more ITN-style approach, you could try to approach the BOTEC via something like (1) how much investment has there been so far in this broad ~POV/portfolio, and then either (2a) how much value/progress has this portfolio made, plus something like "how much has been made in the second half?" (to get a sense of how much we're facing diminishing returns at the moment; fwiw, without thinking too much about it, I think "not super diminishing returns at the mo"), or (2b) what fraction of the overall "AI safety problem" is "this-sort-of-safety-work-affectable" (i.e. something like "if we scaled up this kind of work, and only this kind of work, to an insane degree, how much of the problem would be fixed?"), plus how big/important the problem is overall... Etc. (Again, for all of this my main question is often "what are the sources of signal or taste / heuristics / etc. that you're happier basing your estimates on?")
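The "how much has been made in the second half?" check could be sketched as below. This reads "second half" as the second half of cumulative investment (one possible reading) and assumes you have aligned, hypothetical series of cumulative investment and cumulative progress. Under constant returns, about half the progress comes from the second half of the investment; a much smaller share suggests diminishing returns.

```python
def second_half_share(cumulative_investment, cumulative_progress):
    """Share of total progress made while the second half of total investment was spent.

    Both sequences are cumulative, aligned in time, non-decreasing, and should
    start at zero. Progress at the halfway point of investment is found by
    linear interpolation. About 0.5 suggests constant returns; much less than
    0.5 suggests diminishing returns.
    """
    if len(cumulative_investment) != len(cumulative_progress) or len(cumulative_investment) < 2:
        raise ValueError("need two aligned series with at least two points each")
    total_progress = cumulative_progress[-1]
    if total_progress <= 0:
        raise ValueError("total progress must be positive")
    halfway = cumulative_investment[-1] / 2
    for i in range(1, len(cumulative_investment)):
        inv_prev, inv_next = cumulative_investment[i - 1], cumulative_investment[i]
        if inv_prev <= halfway <= inv_next:
            prog_prev, prog_next = cumulative_progress[i - 1], cumulative_progress[i]
            fraction = 0.0 if inv_next == inv_prev else (halfway - inv_prev) / (inv_next - inv_prev)
            progress_at_halfway = prog_prev + fraction * (prog_next - prog_prev)
            return (total_progress - progress_at_halfway) / total_progress
    raise ValueError("investment series must be non-decreasing and start at zero")


if __name__ == "__main__":
    # Hypothetical series: constant returns vs progress growing like sqrt(investment).
    print(second_half_share([0, 1, 2, 3, 4], [0, 1, 2, 3, 4]))
    print(second_half_share([0, 1, 4, 9, 16], [0, 1, 2, 3, 4]))
```

For the square-root series the sketch gives about 0.3; the exact square-root curve would give 1 − 1/√2 ≈ 0.29.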

SummaryBot @ 2025-08-13T15:16 (+4)

Executive summary: This exploratory post outlines common pitfalls in using Importance–Tractability–Neglectedness (ITN) back-of-the-envelope calculations (BOTECs), arguing that while ITN can be valuable for comparing similar, well-defined problems, it is often misapplied in ways that produce misleadingly precise or inflated results—especially for broad, speculative, or structurally different causes—and offering recommendations for more careful, context-appropriate use or alternative approaches.

Key points:

- Safe zone for ITN BOTECs: They work best for narrow, well-defined, and comparable problems; results become less reliable when problems are broad, speculative, loosely scoped, or structurally different.

- Illusions of tractability: BOTECs may overestimate how much of a problem is solvable with available approaches, misuse the “100x tractability range” heuristic, or inflate scores by expanding problem boundaries (“cause-stuffing pump”).

- Inflating neglectedness×importance: Results can be skewed by mismatched problem boundaries, narrow definitions of relevant investment, undercounting existing or past work, or using unrepresentative “slices” of investment data for comparisons.

- Additional ITN-specific issues: Misapplied assumptions about diminishing returns, “two envelopes problem” dynamics, and reliance on single point estimates instead of distributions can all distort conclusions.

- Precarious frameworks: Complex or untested quantitative models amplify biases and noise; authors should favor simpler setups, stress-test assumptions, and sanity-check results using alternative estimation methods.

- Alternatives to ITN: In some cases, directly estimating intervention cost-effectiveness, exploring resource influx scenarios, or applying heuristic comparisons may yield more reliable prioritization guidance.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

Vasco Grilo🔸 @ 2025-08-15T18:32 (+3)

Thanks for the post, Lizka! I have thought for a while that estimating the cost-effectiveness of (concrete) interventions is strictly better than assessing (abstract) sets of interventions with the ITN framework.

The product of importance, tractability, and neglectedness is supposed to equal the cost-effectiveness. So I prefer to model cost-effectiveness using whichever parameters are better defined and easier to quantify accurately for the intervention under assessment, instead of always relying on the same three ITN factors.
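The "product equals cost-effectiveness" point relies on one common way of defining the three factors (the one popularized by 80,000 Hours): importance as good done per % of the problem solved, tractability as % solved per % increase in resources, and neglectedness as % increase in resources per extra dollar. The units then cancel to good done per dollar. A minimal sketch with hypothetical numbers, assuming tractability stays constant over the range considered:

```python
def itn_cost_effectiveness(importance, tractability, neglectedness):
    """Good done per extra dollar, as the product of the three ITN factors.

    importance:    good done per % of the problem solved
    tractability:  % of the problem solved per % increase in resources
    neglectedness: % increase in resources per extra dollar
    """
    return importance * tractability * neglectedness


# Hypothetical problem, for illustration only.
good_if_fully_solved = 1_000_000   # units of good
current_resources = 10_000_000     # dollars
share_solved_by_doubling = 0.10    # doubling resources solves 10% of the problem

importance = good_if_fully_solved / 100                # good per % solved
tractability = (share_solved_by_doubling * 100) / 100  # % solved per % more resources
neglectedness = 100 / current_resources                # % more resources per dollar

via_itn = itn_cost_effectiveness(importance, tractability, neglectedness)

# Direct calculation: spending current_resources again (a doubling) solves 10%.
direct = good_if_fully_solved * share_solved_by_doubling / current_resources

if __name__ == "__main__":
    print(f"via ITN: {via_itn:.4f} good per dollar, direct: {direct:.4f} good per dollar")
```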

I also like that cost-effectiveness analyses focus on concrete interventions. The ITN framework is often applied to get a sense of the cost-effectiveness of sets of interventions, but there is often significant variation, so the results are not necessarily informative even if they are accurate. To learn more about the cost-effectiveness of a set of interventions, I prefer estimating the cost-effectiveness of interventions which I think are representative, account for a large share of the overall funding, or have the chance to be the most cost-effective.
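A small illustration of why an accurate set-level estimate can still be uninformative, using hypothetical interventions and numbers. The funding-weighted cost-effectiveness of the set (total good divided by total funding, roughly what a set-level estimate targets) can sit far from both the best intervention and the one that absorbs most of the funding.

```python
def set_level_cost_effectiveness(interventions):
    """Total good divided by total funding for a set of interventions.

    `interventions` maps a name to (funding, cost_effectiveness), where
    cost_effectiveness is good done per unit of funding.
    """
    total_funding = sum(funding for funding, _ in interventions.values())
    total_good = sum(funding * ce for funding, ce in interventions.values())
    return total_good / total_funding


# Hypothetical set: most funding goes to A, but C is far more cost-effective.
interventions = {
    "A": (80.0, 1.0),
    "B": (15.0, 5.0),
    "C": (5.0, 50.0),
}

if __name__ == "__main__":
    average = set_level_cost_effectiveness(interventions)
    best = max(ce for _, ce in interventions.values())
    print(f"set-level: {average:.2f}, best: {best:.1f}, best / set-level: {best / average:.1f}x")
```

Here the set-level figure (4.05) is about 12x below the best intervention and about 4x above the one receiving most of the funding.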

JamesÖz 🔸 @ 2025-08-18T10:27 (+2)

This is great, Lizka! Thanks for writing it up and beautifully illustrating it. Relatedly, what do you use for your diagrams?

Lizka @ 2025-08-20T13:09 (+7)

Thank you! I used Procreate for these (on an iPad).[1]

(I also love Excalidraw for quick diagrams, have used & liked Whimsical before, and now also semi-grudgingly appreciate Canva.)

Relatedly, I wrote a quick summary of the post in a Twitter thread a few days ago and added two extra sketches there. Posting here too in case anyone finds them useful:

- ^

(And a meme generator for the memes.)