Timelines are short, p(doom) is high: a global stop to frontier AI development until x-safety consensus is our only reasonable hope

By Greg_Colbourn ⏸️ @ 2023-10-12T11:24 (+78)

The people arguing against stopping (or pausing) either have long timelines or low p(doom).

The tl;dr is the title. Below I try to provide a succinct summary of why I think this is the case (read just the headings on the left for a shorter summary).

Timelines are short

The time until we reach superhuman AI is short.

“While superintelligence seems far off now, we believe it could arrive this decade.” - OpenAI

GPT-4

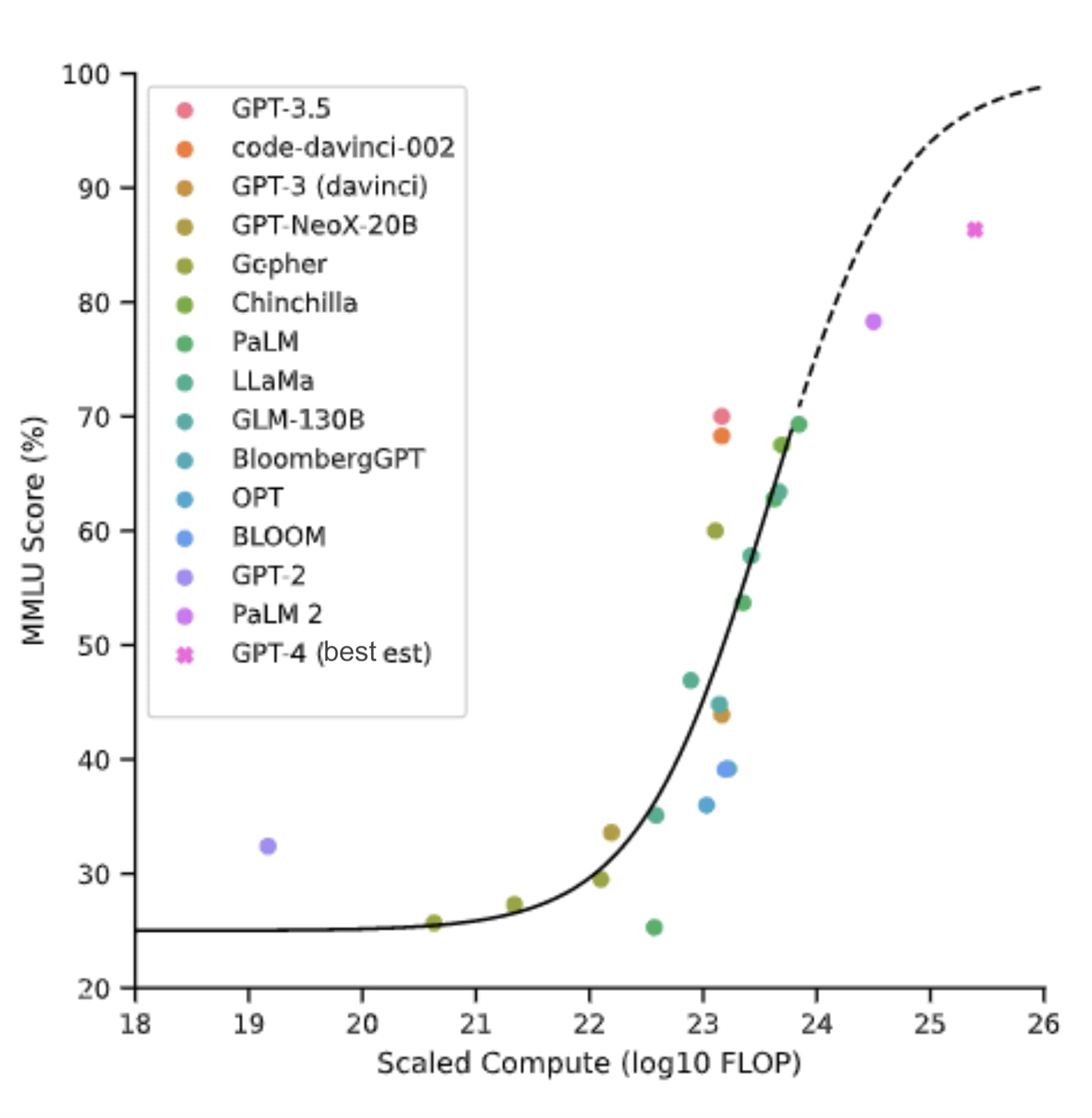

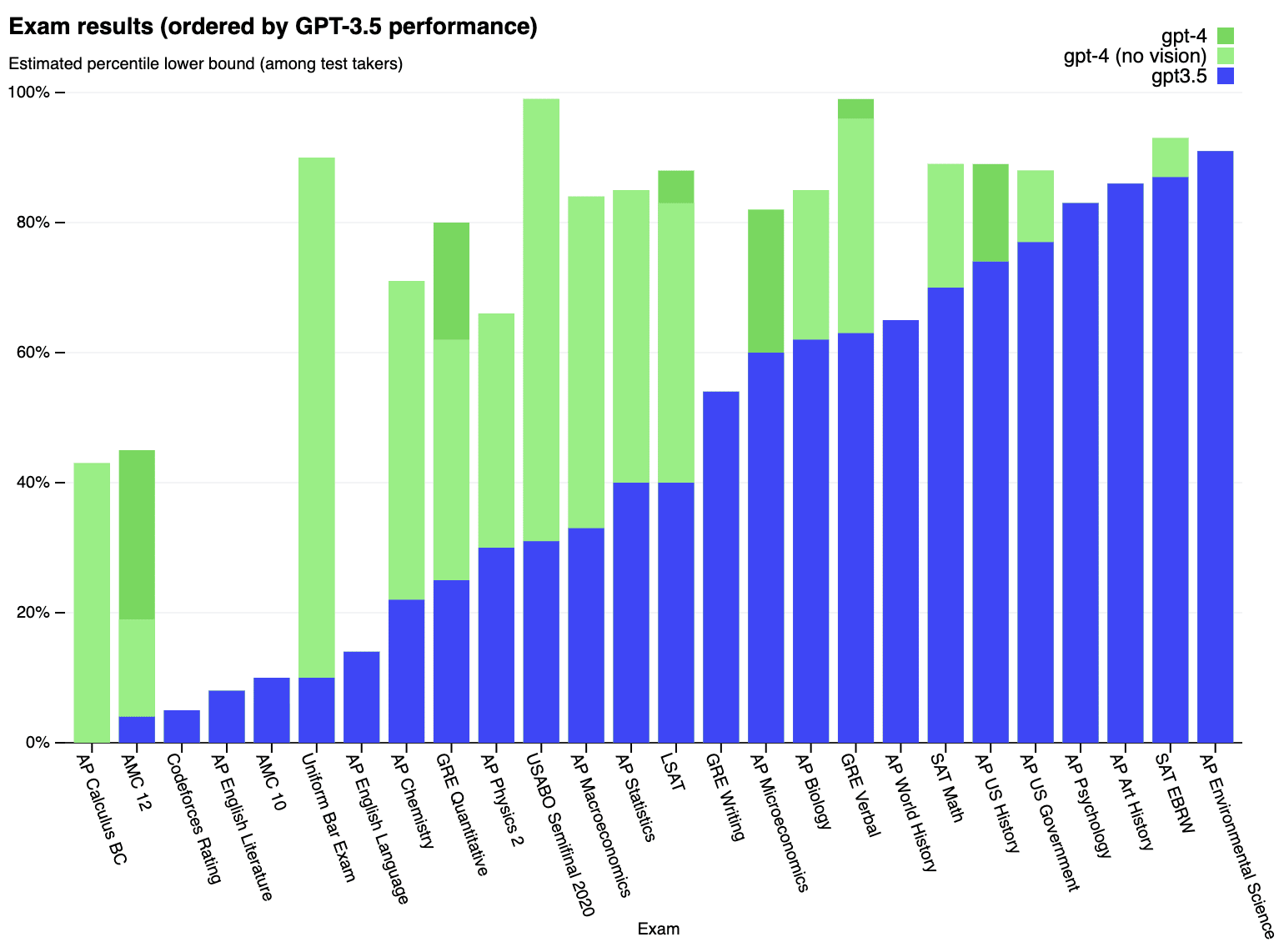

Artificial General Intelligence (AGI) is now in its ascendency. GPT-4 is already ~human-level at language and image recognition, and is showing sparks of AGI. This was surprising to most, and led to many people reducing their AI timelines.

Massive investment

Following GPT-4, the race to superhuman AI has become frantic. Google DeepMind has heavily invested in a competitor (Gemini), and Amazon has recently invested $4B into Anthropic, following the success of its Claude models. We now have 7 of the world’s 10 largest companies heavily investing into frontier AI[1]. Inflection and xAI have also entered the race.

Scaling continues unabated

It’s looking highly likely that the current paradigm of AI architecture (Foundation models), basically just scales all the way to AGI. There is little evidence to suggest otherwise. Thus it’s likely that all that is required to get AGI is throwing more money (compute and data) at it. This is ready happening to the point where AGI is likely "in the post" already, with a delivery time of 2-5 years[2].

Graph adapted[3] from Extrapolating performance in language modeling benchmarks [Owen, 2023]. An MMLU score of 90% represents human expert level across many (57) subjects.

General cognition

Foundation model AIs are “General Cognition Engines”[4]. Large multimodal models – text-, image-, audio-, video-, VR/games-, robotics[5]-manipulation by a single AI – will arrive very soon and will be ~human-level at many things: physical as well as mental tasks; blue collar jobs[6] in addition to white collar jobs.

Takeoff to Artificial Superintelligence (ASI)

Regardless of whether an AI might be considered AGI (or TAI) or superhuman AI, what’s salient from an extinction risk (x-risk) perspective is whether it is able to speed up AI research. Once an AI becomes as good at AI engineering as a top AI engineer (reaches the “Ilya Threshold”), then it can be used to rapidly speed up further AI development. Or, an AI that is highly capable at STEM could pose an x-risk as is - a “teleport” to x-risk as opposed to a “foom”. Worrying developments pushing in these directions include larger context windows, plugins, planners and early attempts at recursive self-improvement.

There are no bottlenecks

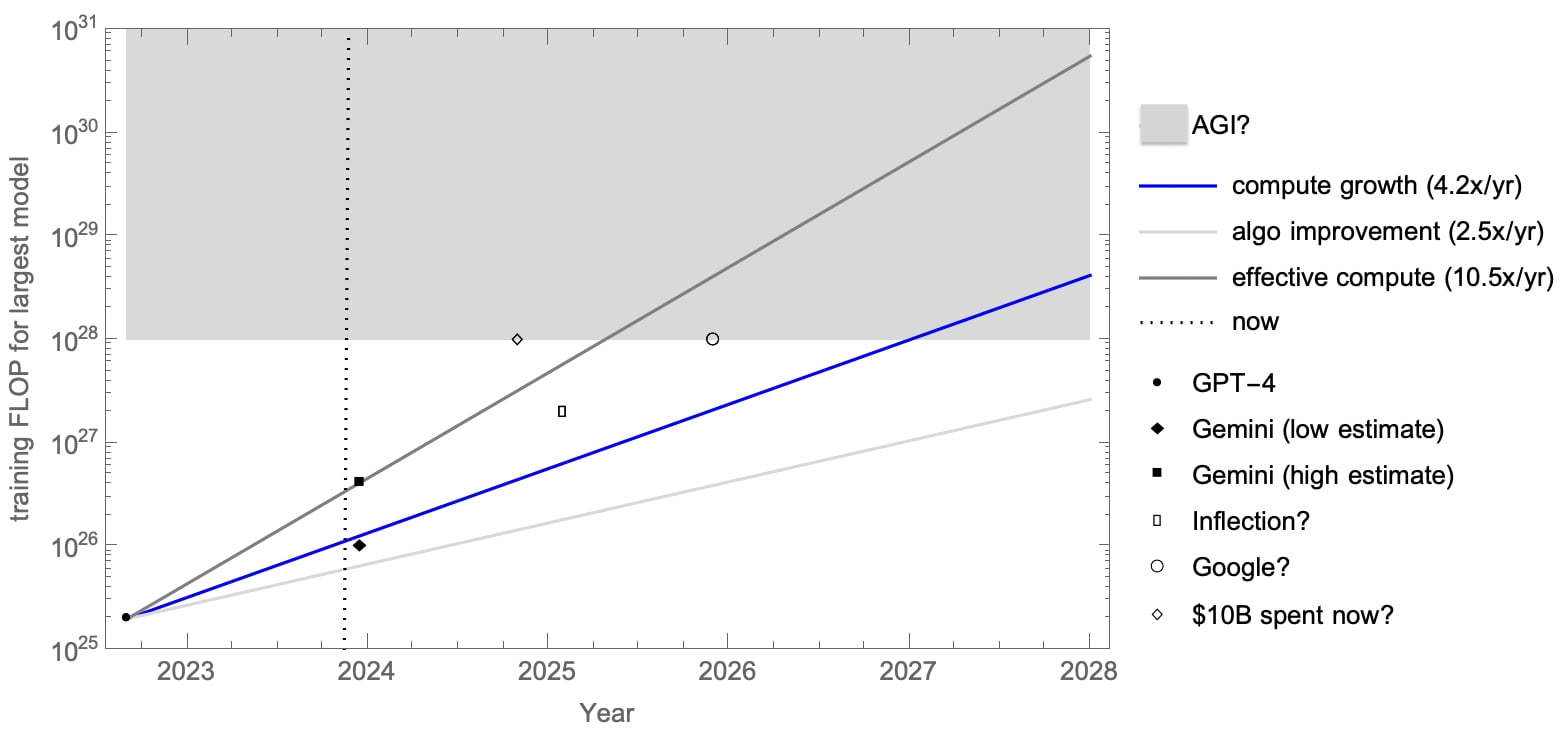

Compute: Following Epoch, it looks like there is currently a 10.5x[7] growth of effective training FLOP every year. So if we were at 1E25 FLOP in 2022 (GPT-4), then that is 1E28 effective FLOP in 2025 and 1E31 in 2028. And 1E28-1E31 is probably AGI (near perfect performance on human-level tasks)[8].

This isn’t just a theoretical projection: Inflection are claiming to be on for having 100x GPT-4 (2E27 FLOP) in 18 months[9]. Google may well have a Zettaflop (1E21) cluster by 2025; 4 months of runtime (1E7s) gives 1E28 FLOP[10]. It’s plausible that an actor spending $10B on compute now could get a 1E28 FLOP model in months.

Projection of training FLOP for frontier AI over the next 4 years (see the above two paragraphs for a detailed explanation).

Data: There are billions of high-res video cameras (phones), and sensors in the world. There is YouTube (owned by Google). There is a fast-growing synthetic data industry[11]. For specific tasks that require a high skill level, there is training data provided by narrow AI specialised in the skill. Regarding the need for active learning for AGI, see DeepMind's Adaptive Agent and the Voyager "lifelong learning" Minecraft agent, significant steps in this direction. Also, humans reach human level performance with a fraction of the data used by current models, so we know this is possible.

Ability to influence the real world: Error correction; design iteration; trial and error[12]. All are clearly not insurmountable, given the evidence we have of human success in the physical world. But can it happen quickly with AI? Yes. There are many ways that AI can easily break out into the physical world, involving hacking networked machinery and weapons systems, social persuasion, robotics, and biotech.

p(doom|AGI) is high

"I haven't found anything. Usually any proposal I read about or hear about, I immediately see how it would backfire, horribly." "My position is that lower level intelligence cannot indefinitely control higher level intelligence" - Roman Yampolskiy

The most likely (default) outcome of AGI is doom[13][14]. Superhuman AI is near (see above), and viable solutions for human safety from extinction (x-safety) are nowhere in sight[15]. If you apply a security mindset (Murphy’s Law) to the problem of securing x-safety for humans in a world with smarter-than-human AI, it should quickly become apparent that it is very difficult.

Slow alignment progress

The slow progress of the field to date is further evidence for this. The alignment paradigms used for today’s frontier models only appear to make them relatively safe because they are (currently) weaker than us[16]. If the “grandma’s bedtime story napalm recipe” prompt engineering hack actually led to the manufacture of napalm, it would be immediately obvious how poor today’s level of practical AI alignment is[17].

4 disjunctive paths to doom

There are 4 major unsolved disjunctive components to x-safety: outer alignment, inner alignment, misuse risk, multi-agent coordination. And a variety of corresponding threat models.

Any given approach that might show some promise on one or two of these still leaves the others unsolved. We are so far from being able to address all of them. And not only do we have to address them all, but we have to have totally watertight solutions, robust to AI being superhuman in its capabilities (in contrast to today’s “weak methods”). In the limit of superintelligent AI, alignment and other x-safety protections (those against misuse or conflict) need to be perfect. Else all the doom flows through the tiniest crack in our defence[18].

The nature of the threat

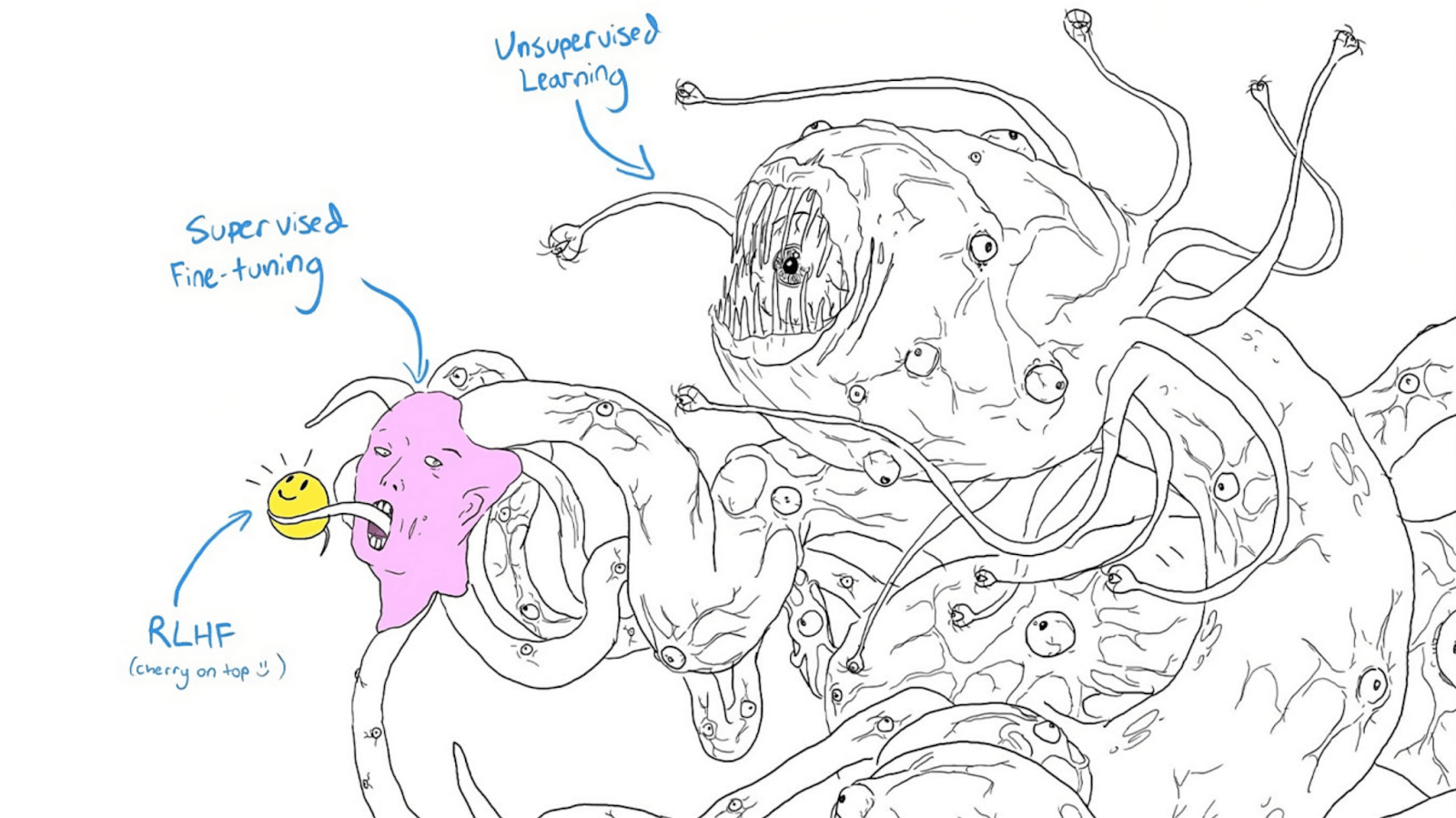

The Shoggoth meme.

X-risk-posing AI will be:

- A General Cognition Engine (see above); an AGI.

- Superhumanly capable at STEM.

- Alien (merely very good at imitating humans).

- Fast (we’ll be like plants to it).

- Very difficult to stop if open sourced (or leaked).

- Unstoppable if put on the blockchain.

- Capable of world model building.

- Situationally aware (and deceptive).

- Capable of goal mis-generalisation[19] (mesa-optimisation).

- Misaligned (see above).

- Uncontrollable (see below).

X-safety from ASI might be impossible

We don’t even know for sure at this stage whether x-safety from superhuman AI is even possible. A naive prior would actually suggest that it may not be. Since when has a more intelligent species indefinitely controlled a less intelligent species? And there is in fact currently ongoing work to establish impossibility proofs for ASI alignment[20]. These being accepted could go a long way to securing a moratorium on further frontier AI development.

A global stop to frontier AI development is our only reasonable hope

I had referred to this as an “indefinite global pause” in a draft, but following Scott Alexander’s taxonomy of pause debate positions, I realise that a stop (and restart when safe) is a cleaner way of putting it[21].

“There are only two times to react to an exponential: too early, or too late.” - Connor Leahy[22]

X-safety research needs time to catch up

X-safety research is far behind relative to AI capabilities and shortened timelines. This creates a dire need for extra time to be bought for it to catch up. There are 100s of PhDs that could be done just on getting closer to perfect alignment for current sota models. We could stop for at least 5 years before coming close to running out of useful x-safety research that needs doing.

The pause needs to be indefinite (i.e. a stop) and global

No serious, or (I think) realistic, pause is going to be temporary or local (confined to one country). Many of the posts arguing against a pause seem to be making unrealistic assumptions along these lines. A pause needs to be global and indefinite, until either there is a global consensus on x-safety (amongst ~all experts), or a global democratic mandate to resume scaling of AI (this could happen even if expert consensus on x-risk is 1%, or 10%, I guess, if there are other problems that require AGI to fix, or just a majority desire to make such a gamble for a shot at utopia). This is not to say that it may not start off as local, partial (e.g. regulation based on existing law) or finite, but the goal remains for it to be global and indefinite.

Restart slowly when there is a consensus on AI x-safety

Worries about overhangs in terms of compute and algorithms are also based on unreasonable assumptions: if there are strict limits on compute (and data), they aren’t suddenly going to be lifted. Assuming that there is a global consensus on a way forward that has a high chance of being existentially safe, ratcheting up slowly would be the way to go, doing testing and evals along the way.

Ideally, the consensus on x-safety should be extended to a global democratic mandate to proceed with AGI development, given that it affects everyone on the planet. A global referendum would be the aspiration here. But the initial agreement to stop should have baked into it a mechanism for determining consensus on x-safety and restarting. I hope that the upcoming UK AI Safety Summit can be a first step toward such an agreement.

Consider that maybe we should just not build AGI at all

The public doesn’t want it[23]. Uncertainty over extreme risks alone justifies a stop. It’s almost a cliche now for climate change and cancer cures to be trotted out as reasons to pursue AGI. EAs might also think about solutions to poverty and all disease. But we are already making good progress on these (including with existing narrow AI), and there’s no reason that we can’t solve them without AGI. We don’t need AGI for an amazing future.

Objections answered

We don’t need to keep scaling AI capabilities to do good alignment research.

The argument by the big AI labs that we need to advance capabilities to be able to better work on alignment is all too conveniently aligned with their money-making and power-seeking incentives. Responsible Scaling policies are deeply flawed; it’s basically an oxymoron when AGI is so close. The danger is already apparent enough (see above) to stop scaling now. The same applies to conditional pauses, trying to predict when we will get dangerous AI, or notice “near-dangerous” AI. Also, as mentioned above, there is plenty of AI Safety research that is possible during a stop[24] (e.g. mech. interp.).

We don’t need a global police state to enforce an indefinite moratorium.

The worry is about world-ending compute becoming more and more accessible as it continues to become cheaper, and algorithms keep advancing. But hardware development and research can also be controlled. And computers already report a lot of data - adding restrictions along the lines of “can’t be used for training dangerous frontier AI models” would not be such a big change. More importantly, a taboo can go a long way to reducing the need for enforcement. Take for example, human reproductive cloning. This is so morally abhorrent that it is not being practised anywhere in the world. There is no black market in need of a global police state to shut it down. AGI research could become equally uncool once the danger, and loss of sovereignty, it represents is sufficiently well appreciated. Nuclear non-proliferation is another example - there is a lot of international monitoring and control of enriched uranium without a global police state. The analogy here is GPUs being uranium and large GPU clusters being enriched uranium.

Scott Alexander has the caveat “absent much wider adoption of x-risk arguments” when talking about enforcement of a pause. I think that we can get that much wider adoption of x-risk arguments (indeed we are already seeing it), and a taboo on AGI / superhuman AI to go along with it.

Fears over AI leading to more centralised control and a global dictatorship are all the more reason to shut down AI now.

Even if your p(doom) is (still) low (despite considering the above), we need a global democratic mandate for something as momentous as AGI

Whilst I think AGI is 0-5 years away and p(doom|AGI) is ~90%[25], I want to remind people that a p(doom) of ~10% is ~1B human lives lost in expectation. I know many people working on AI, and EAs, and rationalists, would happily play a round of Russian Roulette for a shot at utopia; but it’s outrageous to force this on the whole world without a democratic mandate. Especially given the general public do not want the “utopia” anyway: they do not want smarter than human AI in the first place.

The benefits are speculative, so they don’t outweigh the risks.

Assumptions of wealth generation, economic abundance and public benefit from AGI are ungrounded without proof that they are even possible - and they aren’t (yet), given a lack of scalable-to-ASI solutions to alignment, misuse, and coordination. A stop isn’t taking away the ladder to heaven, for such a ladder does not exist, even in theory, as things stand[26]. Yes, it is a bit depressing realising that the world we live in may actually be closer to the Dune universe (no AI) than the Culture universe (friendly ASI running everything). But we can still have all the nice things (including a cure for ageing) without AGI; it might just take a bit longer than hoped. We don’t need to be risking life and limb driving through red lights just to be getting to our dream holiday a few minutes earlier.

There is precedent for global coordination at this level.

Examples include nuclear, biological, and chemical weapons treaties; the CFC ban; and taboos on human reproductive cloning and Eugenics. There are many other groups already in opposition to AI due to ethical concerns. A stop is a common solution to most concerns[27].

There aren’t any better options

They all seem far worse:

Superalignment - trying to solve a fiendishly difficult unsolved problem, with no known solution even in theory, to a deadline, sounds like a recipe for failure (and catastrophe).

Pivotal act - requires some minimal level of alignment to pull off. Again, pretty hubristic to expect this to not end in disaster.

Hope for the best - not going to cut it when extinction is on the line and there is nowhere to run or hide.

Hope for a catastrophe first - given human history and psychology (precedents being Covid, Chernobyl), it unfortunately seems likely that the bodies will have to start piling up before sufficient action is taken on regulation/lockdown. But this should not be an actual strategy. We really need to do better this time. Especially when there may not actually be a warning shot, and the first disaster could send us irrevocably toward extinction.

Acknowledgements: For helpful comments and suggestions that have improved the post, I thank Miles Tidmarsh, Jaeson Booker, Florent Berthet and Joep Meindertsma. They do not necessarily endorse everything written in the post, and all remaining shortcomings are my own.

- ^

Including NVidia, Meta, Tesla; and Apple.

- ^

See section below - “There are no bottlenecks” - for more details.

- ^

Rather than max and min estimates for GPT-4 compute, the best estimate from Epoch (2E25) is taken.

- ^

Perhaps if they were actually called this, rather than misnomered as Large Language Models (or Foundation models), then people would be taking this more seriously!

- ^

See also: Tesla’s Optimus, Fourier Intelligence’s GR-1.

- ^

Although scaling general-purpose robotics to automate most physical jobs would likely take a number of years, by which point it is likely to be eclipsed by ASI.

- ^

4.2x from growth in training compute, and 2.5x from algorithm improvements.

- ^

Note also that Ajeya Cotra in her “Bio Anchors” report has ~1E28 and 1E31 FLOP estimates for the Lifetime Anchor and Short horizon neural network hypotheses (p8). And see also the graph above from Owen (2023).

- ^

15 months from now if taken from Mustafa Suleyman’s first pronouncement of this.

- ^

Factoring a continued 2.5x/yr effective increase from algorithmic improvements makes this 1E29 effective FLOP in 2025.

- ^

Whilst Epoch have gathered some data showing that the stock high quality text data might soon be exhausted, their overall conclusion is that there is only a “20% chance that the scaling (as measured in training compute) of ML models will significantly slow down by 2040 due to a lack of training data.”

- ^

Examples along these lines already in use are prompt engineering to increase performance, and code generation to solve otherwise unsolvable problems.

- ^

p(doom|AGI) means probability of doom, given AGI; “doom” means existential catastrophe, or for our purposes here, human extinction.

- ^

I have yet to hear convincing arguments against this.

- ^

See “4 disjunctive paths to doom” below.

- ^

I’ve linked to some of my (and others’) criticisms of other posts in the AI Pause Debate throughout this post.

- ^

Note that the RepE approach only stops ~90% of the jailbreaks. The nature of neural networks suggests that progress in their alignment is statistical in nature and can only asymptote toward 100%. A terrorist intent on misuse isn’t going to be thwarted by having to try prompting their model 10 times.

- ^

One could argue that watertight solutions aren’t necessary, given the example of humans not value loading perfectly between generations, but I don’t think this holds, given massive power imbalances and large amounts of subjective time for accidents to happen, and the likelihood of a singleton emerging given first mover advantage. At the very least we would need to survive the initial foom in order for secondary safeguard systems to be put in place. And imperfect value loading could still lead to non-extinction existential catastrophes of the form of being only left a small amount of the cosmos (e.g. just Earth) by the AI; in which case the non-AGI counterfactual world would probably be better.

- ^

See also The Alignment Problem from a Deep Learning Perspective, p4.

- ^

Although the above caveat from fn. 16 about imperfect value loading (and astronomical waste) might still apply. As might a caveat about the possibility of limited ASI.

- ^

That also makes it easier to distinguish from the other positions. Yudkowsky’s TIME article articulates this view forcefully.

- ^

- ^

Note this is mostly based on a recent survey of the US public. Attitudes in other countries may be more in favour.

- ^

Scott Alexander: “a lot hinges on whether alignment research would be easier with better models. I’ve only talked to a handful of alignment researchers about this, but they say they still have their hands full with GPT-4.”

- ^

The remaining ~10% for “we’re fine” is nearly all exotic exogenous factors (related to the simulation hypothesis, moral realism being true - valence realism?, consciousness, DMT aliens being real etc), that I really don't think we can rely on to save us!

- ^

- ^

Although we should beware the failure mode of a UBI/profit share being accepted as a solution to mass unemployment, as this doesn’t prevent x-risk!

evhub @ 2023-10-13T05:17 (+25)

I tend to put P(doom) around 80%, so I think I'm on the pessimistic side, and I tend to think short timelines are at least a real and serious possibility that we should be planning for. Nevertheless, I disagree with a global stop or a pause being the "only reasonable hope"—global stops and pauses seem basically unworkable to me. I'm much more excited about governmentally enforced Responsible Scaling Policies, which seem like the "better option" that you're missing here.

Vasco Grilo @ 2024-04-05T14:36 (+4)

Hi Evan,

What is your median time from now until human extinction? If it is only a few years, I would be happy to set up a bet like this one.

Vasco Grilo @ 2024-04-05T15:37 (+18)

Thanks for sharing your thoughts, Greg!

Whilst I think AGI is 0-5 years away and p(doom|AGI) is ~90%

Assuming you believe there is a 75 % chance of AGI within the next 5 years, the above suggests your median time from now until doom is 3.70 years (= 0.5*(5 - 0)/0.75/0.9). Is your median time from now until human extinction also close to 3.70 years? If so, we can set up a bet similar to the one between Bryan Caplan and Eliezer Yudkowsky:

- I send you 10 k 2023-$ in the next few days.

- If humans do not go extinct in 3.70 years, or until the end of 2027 for simplicity, you send me 19 k 2023-$.

- The expected profit is:

- For you, 500 2023-$ (= 10*10^3 - 0.5*19*10^3).

- For me, 9.00 k 2023-$ (= -10*10^3 + 19*10^3), as I think the chance of humans going extinct until the end of 2027 is basically negligible. I would guess around 10^-7 per year.

- The expected profit is quite positive for both of us, so we would agree on the bet as long as my (your) marginal earnings after loosing 10 k 2023-$ (19 k 2023-$) would still go towards donations, which arguably do not have much diminishing returns.

- I guess my marginal earnings after loosing 10 k 2023-$ would still go towards donations, so I am happy to take the bet.

Greg_Colbourn @ 2024-04-15T17:45 (+10)

Hi Vasco, sorry for the delay getting back to you. I have actually had a similar bet offer up on X for nearly a year (offering to go up to $250k) with only one taker for ~$30 so far! My one is you give x now and I give 2x in 5 years, which is pretty similar. Anyway, happy to go ahead with what you've suggested.

I would donate the $10k to PauseAI (I would say $10k to PauseAI in 2024 is much greater EV than $19k to PauseAI at end of 2027).

[BTW, I have tried to get Bryan Caplan interested too, to no avail - if anyone is in contact with him, please ask him about it.]

Jason @ 2024-04-15T22:06 (+4)

As much as I may appreciate a good wager, I would feel remiss not to ask if you could get a better result for amount of home equity at risk by getting a HELOC and having a bank be the counterparty? Maybe not at lower dollar amounts due to fixed costs/fees, but likely so nearer the $250K point -- especially with the expectation that interest rates will go down later in the year.

Greg_Colbourn @ 2024-04-17T13:12 (+4)

I don't have a stable income so I can't get bank loans (I have tried to get a mortgage for the property before and failed - they don't care if you have millions in assets, all they care about is your income[1], and I just have a relatively small, irregular rental income (Airbnb). But I can get crypto-backed smart contract loans, and do have one out already on Aave, which I could extend.).

Also, the signalling value of the wager is pretty important too imo. I want people to put their money where their mouth is if they are so sure that AI x-risk isn't a near term problem. And I want to put my money where my mouth is too, to show how serious I am about this.

- ^

I think this is probably because they don't want to go through the hassle of actually having to repossess your house, so if this seems at all likely they won't bother with the loan in the first place.

Vasco Grilo @ 2024-04-15T18:42 (+4)

Thanks for following up, Greg! Strongly upvoted. I will try to understand how I can set up a contract describing the bet with your house as collateral.

Could you link to the post on X you mentioned?

I will send you a private message with Bryan's email.

Jason @ 2024-04-16T00:07 (+10)

Definitely seek legal advice in the country and subdivision (e.g., US state) where Greg lives!

You may think of this as a bet, but I'll propose an alternative possible paradigm: it's may be a plain old promissory note backed by a mortgage. That is, a home-equity loan with an unconditional balloon payment in five years. Don't all contracts in which one party must perform in the future include a necessarily implied clause that performance is not necessary in the event that the human race goes extinct by that time? At least, I don't plan on performing any of my future contractual obligations if that happens . . . .

So even assuming this wouldn't be unenforceable as gambling, it might run afoul of the rules for mortgage lending (e.g., because the implied interest rate [~14.4%?] is seen as usurious, or because it didn't comply with local or national laws regulating mortgage lending). That is a pretty regulated industry in general. It would definitely need to follow all the formalities for secured lending against real property: we require those formalities to make sure the borrower knows what he is getting into, and to give notice to other would-be lenders that they would be further back in line on repayment.

I should also note that it is pretty difficult in many places to force a sale on someone's primary residence if you hold certain types of security interests (as opposed to, e.g., a primary mortgage). So you might be holding a lien that doesn't have much practical value unless/until Greg decides to sell and there is value after paying off whoever is ahead in line on payment. Again, I can only advise seeking legal counsel in the right jurisdiction.

The off-the-wall thought I have is that Greg might be able to get around some difficulties by delivering a promissory note backed by a recorded security interest to an unrelated charity. But at the risk of sounding like a broken record, everyone would need legal advice from someone licensed in the jurisdiction before embarking on any approach in this rather unusual and interesting scenario.

Vasco Grilo @ 2024-04-16T08:16 (+2)

Thanks for sharing your thoughts, Jason!

Greg_Colbourn @ 2024-04-15T19:18 (+4)

Cool, thanks. I link to one post in the comment above. But see also.

Vasco Grilo @ 2024-04-16T08:22 (+2)

Thanks! Could you also clarify where is your house, whether you live there or elsewhere, and how much cash you expect to have by the end of 2027 (feel free to share the 5th percentile, median and 95th percentile)?

Greg_Colbourn @ 2024-04-17T10:14 (+4)

It's in Manchester, UK. I live elsewhere - renting currently, but shortly moving into another owned house that is currently being renovated (I've got a company managing the would-be-collateral house as an Airbnb, so no long term tenants either). Will send you more details via DM.

Cash is a tricky one, because I rarely hold much of it. I'm nearly always fully invested. But that includes plenty of liquid assets like crypto. Net worth wise, in 2027, assuming no AI-related craziness, I would be expect it to be in the 7-8 figure range, 5-95% maybe $500k-$100M).

Vasco Grilo @ 2024-06-04T16:46 (+8)

Update. I bet Greg Colbourn 10 k€ that AI will not kill us all by the end of 2027.

Ryan Greenblatt @ 2024-04-06T17:22 (+5)

Greg can presumably also just take out a loan? I think this will likely dominate the bet you proposed given that your implied interest rates are very high.

The bet might be nice symbolism though.

Greg_Colbourn @ 2024-04-15T17:47 (+4)

As I say above, I've been offering a similar bet for a while already. The symbolism is a big part of it.

I can currently only take out crypto-backed loans, which have been quite high interest lately (don't have a stable income so can't get bank loans or mortgages), and have considered this but not done it yet.

Vasco Grilo @ 2024-04-07T06:30 (+4)

Thanks for the suggestion, Ryan. As I side note, I would be curious to know how my comment could be improved, as I see it was downvoted. I guess it is too adversarial.

Greg can presumably also just take out a loan? I think this will likely dominate the bet you proposed given that your implied interest rates are very high.

I feel like there is a nice point there, but I am not sure I got it. By taking a loan, Greg would loose purchasing power in expectation (meanwhile, I have replaced "$" by "2023-$" in my comment), but he would gain it by taking the bet. People still take loans because they could value additional purchasing power now more than in the future, but this is why I said the bet would only make sense if my and Greg's marginal earnings would continue to go towards donations if we lost the bet. To ensure this, I would consider the bet a risky investment, and move some of my investments from stocks to bonds to offset at least part of the increase in risk. Even then, I would want to set up an agreement with signatures from both of us, and a 3rd party before going ahead with the bet.

The bet might be nice symbolism though.

Yes, I think symbolism would plausibly dominate the benefits for Greg.

Ryan Greenblatt @ 2024-04-07T16:29 (+5)

I feel like there is a nice point there, but I am not sure I got it.

The key thing is that you don't have to pay off loans if we're all dead. So all loans are implicitly bets about whether society will collapse by some point.

Greg_Colbourn @ 2024-04-15T17:55 (+4)

Re risk, as per my offer on X, I'm happy to put my house up as collateral if you can be bothered to get the paperwork done. Otherwise happy to just trade on reputation (you can trash mine publicly if I don't pay up).

Ryan Greenblatt @ 2024-04-07T16:27 (+3)

As I side note, I would be curious to know how my comment could be improved, as I see it was downvoted. I guess it is too adversarial.

(I didn't downvote it.)

Greg_Colbourn @ 2024-04-17T13:36 (+4)

I think the chance of humans going extinct until the end of 2027 is basically negligible. I would guess around 10^-7 per year.

Would be interested to see your reasoning for this, if you have it laid out somewhere. Is it mainly because you think it's ~impossible for AGI/ASI to happen in that time? Or because it's ~impossible for AGI/ASI to cause human extinction?

Vasco Grilo @ 2024-04-17T14:58 (+4)

Would be interested to see your reasoning for this, if you have it laid out somewhere.

I have not engaged so much with AI risk, but my views about it are informed by considerations in the 2 comments in this thread. Mammal species usually last 1 M years, and I am not convinced by arguments for extinction risk being much higher (I would like to see a detailed quantitative model), so I start from a prior of 10^-6 extinction risk per year. Then I guess the risk is around 10 % as high as that because humans currently have tight control of AI development.

Is it mainly because you think it's ~impossible for AGI/ASI to happen in that time? Or because it's ~impossible for AGI/ASI to cause human extinction?

To be consistent with 10^-7 extinction risk, I would guess 0.1 % chance of gross world product growing at least 30 % in 1 year until 2027, due to bottlenecks whose effects are not well modelled in Tom Davidson's model, and 0.01 % chance of human extinction conditional on that.

Greg_Colbourn @ 2024-04-17T15:47 (+4)

Interesting. Obviously I don't want to discourage you from the bet, but I'm surprised you are so confident based on this! I don't think the prior of mammal species duration is really relevant at all, when for 99.99% of the last 1M years there hasn't been any significant technology. Perhaps more relevant is homo sapiens wiping out all the less intelligent hominids (and many other species).

Vasco Grilo @ 2024-04-17T17:18 (+2)

On the question of priors, I liked AGI Catastrophe and Takeover: Some Reference Class-Based Priors. It is unclear to me whether extinction risk has increased in the last 100 years. I estimated an annual nuclear extinction risk of 5.93*10^-12, which is way lower than the prior for wild mammals of 10^-6.

Greg_Colbourn @ 2024-04-17T17:34 (+4)

I see in your comment on that post, you say "human extinction would not necessarily be an existential catastrophe" and "So, if advanced AI, as the most powerful entity on Earth, were to cause human extinction, I guess existential risk would be negligible on priors?". To be clear: what I'm interested in here is human extinction (not any broader conception of "existential catastrophe"), and the bet is about that.

Vasco Grilo @ 2024-04-17T19:39 (+2)

To be clear: what I'm interested in here is human extinction (not any broader conception of "existential catastrophe"), and the bet is about that.

Agreed.

Greg_Colbourn @ 2024-04-17T17:23 (+4)

See my comment on that post for why I don't agree. I agree nuclear extinction risk is low (but probably not that low)[1]. ASI is really the only thing that is likely to kill every last human (and I think it is quite likely to do that given it will be way more powerful than anything else[2]).

NunoSempere @ 2024-04-09T15:42 (+4)

I upvoted this offer. I have an alert for bet proposals on the forum, and this is the first genuine one I've seen in a while.

Jason @ 2024-04-07T03:05 (+4)

"I think AGI is 0-5 years away" != "I am certain AGI will happen in within five years." I think it is best read as implying somewhere between 51 and 100% confidence, at least standing alone. Depending on where it is set, you probably should offer another ~12-18 months.

Vasco Grilo @ 2024-04-07T05:57 (+4)

Nice point, Jason! I have adjusted the numbers above to account for that. I have also replaced "$" by "2023-$" to account for inflation.

John G. Halstead @ 2023-10-12T14:17 (+10)

Re p(doom) being high, I don't think you need to commit to the view that the most likely outcome of AGI is doom. Surveys of AI researchers put the risk from rogue AI at 5%. In the XPT survey professional forecasters put the risk of extinction from AI at 0.03% by 2050 and 0.38% by 2100, whereas domain experts put the chance at 1.1% and 3%, respectively. Though they had longer timelines than you seem to endorse here.

I think your argument goes through even if the risk is 1% conditional on AGI and that seems like an estimate unlikely to upset too many people, so I would just go with that

Greg_Colbourn @ 2023-10-12T15:03 (+4)

I still don't understand where the 95% for non-doom is coming from. I think it's useful to look at actual mechanisms for why people think this (and so far I've found them lacking). The qualifications of the "professional forecasters" in the XPT survey are in doubt (and again, it was pre-GPT-4).

Greg_Colbourn @ 2023-10-12T15:30 (+3)

The argument might go through even if the risk is 1%, but people sure aren't acting like that. At least in EA, broadly speaking (where I imagine the average p(doom|AGI) is closer to 10%). Also, I'd rather just say what I actually believe, even if it sounds "alarmist". At least I've tried to argue for it in some detail. The main reason I am prioritising this so much is because I think it's the most likely reason I, and everyone I know and love, will die. Unless we stop it. Forget longtermism and EA: people need to understand that this is a threat to their own personal near-term survival.

Isaac Dunn @ 2023-10-12T17:20 (+14)

I think you come across as over-confident, not alarmist, and I think it hurts how you come across quite a lot. (We've talked a bit about the object level before.) I'd agree with John's suggested approach.

Isaac Dunn @ 2023-10-12T17:22 (+5)

Relatedly, I also think that your arguments for "p(doom|AGI)" being high aren't convincing to people that don't share your intuitions, and it looks like you're relying on those (imo weak) arguments, when actually you don't need to

Greg_Colbourn @ 2023-10-12T17:55 (+4)

I'm crying out for convincing gears-level arguments against (even have $1000 bounty on it), please provide some.

richard_ngo @ 2024-04-07T18:26 (+4)

The issue is that both sides of the debate lack gears-level arguments. The ones you give in this post (like "all the doom flows through the tiniest crack in our defence") are more like vague intuitions; equally, on the other side, there are vague intuitions like "AGIs will be helping us on a lot of tasks" and "collusion is hard" and "people will get more scared over time" and so on.

Greg_Colbourn @ 2024-04-15T17:37 (+2)

I'd say it's more than a vague intuition. It follows from alignment/control/misuse/coordination not being (close to) solved and ASI being much more powerful than humanity. I think it should be possible to formalise it, even. "AGIs will be helping us on a lot of tasks", "collusion is hard" and "people will get more scared over time" aren't anywhere close to overcoming it imo.

richard_ngo @ 2024-04-15T20:58 (+2)

It follows from alignment/control/misuse/coordination not being (close to) solved.

"AGIs will be helping us on a lot of tasks", "collusion is hard" and "people will get more scared over time" aren't anywhere close to overcoming it imo.

These are what I mean by the vague intuitions.

I think it should be possible to formalise it, even

Nobody has come anywhere near doing this satisfactorily. The most obvious explanation is that they can't.

Isaac Dunn @ 2023-10-12T18:59 (+1)

To be fair, I think I'm partly making wrong assumptions about what exactly you're arguing for here.

On a slightly closer read, you don't actually argue in this piece that it's as high as 90% - I assumed that because I think you've argued for that previously, and I think that's what "high" p(doom) normally means.

Greg_Colbourn @ 2023-10-12T19:26 (+2)

I do think it is basically ~90%, but I'm arguing here for doom being the default outcome of AGI; I think "high" can reasonably be interpreted as >50%.

Greg_Colbourn @ 2023-10-12T18:01 (+2)

I feel like this is a case of death by epistemic modesty, especially when it isn't clear how these low p(doom) estimates are arrived at in a technical sense (and a lot seems to me like a kind of "respectability heuristic" cascade). We didn't do very well with Covid as a society in the UK (and many other countries), following this kind of thinking.

John G. Halstead @ 2023-10-12T16:21 (+4)

I suppose one solution might be to say that your personal view is that pdoom is >50%, but a range of estimates suggest >1% is plausible

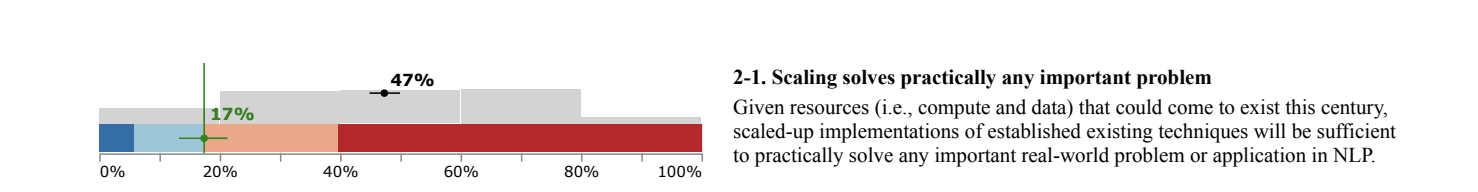

John G. Halstead @ 2023-10-12T14:08 (+7)

Thanks for this post Greg.

Re your point about scaling, the Michael et al survey of NLP researchers suggests that researchers don't think scaling will take us all the way there.

Figure 4.

Based on my limited understanding I agree with you that it seems pretty plausible that scaling does take us to human level AI and beyond, but the experts seem to disagree and I'm not sure why

Greg_Colbourn @ 2023-10-12T14:47 (+7)

Interesting. I'll note that this survey was pre-GPT-4 (or even before GPT3.5 was in widespread use? May-June 2022) when (I think) people were still sceptical of LLMs being able to do well on university exams, amongst many other things. Would be interesting to see a similar survey that is post-GPT-4 (I've not been able to find anything). I predict that it will show a significantly higher % agreeing.

In general I think any survey on AI that was conducted in the pre-GPT-4 era is now woefully out of date.

William the Kiwi @ 2023-10-17T01:54 (+3)

I would agree, relying on pre-GPT4 estimates seems flawed.

Chris Leong @ 2023-10-13T01:11 (+4)

Hmm... that isn't exactly the question I'd like the answer too, which is more scaling + minor incremental improvements + creative prompting.

riceissa @ 2023-10-12T20:18 (+6)

I agree with most of the points in this post (AI timelines might be quite short; probability of doom given AGI in a world that looks like our current one is high; there isn't much hope for good outcomes for humanity unless AI progress is slowed down somehow). I will focus on one of the parts where I think I disagree and which feels like a crux for me on whether advocating AI pause (in current form) is a good idea.

You write:

But we can still have all the nice things (including a cure for ageing) without AGI; it might just take a bit longer than hoped. We don’t need to be risking life and limb driving through red lights just to be getting to our dream holiday a few minutes earlier.

I think framings like these do a misleading thing where they use the word "we" to ambiguously refer to both "humanity as a whole" and "us humans who are currently alive". The "we" that decides how much risk to take is the humans currently alive, but the "we" that enjoys the dream holiday might be humans millions of years in the future.

I worry that "AI pause" is not being marketed honestly to the public. If people like Wei Dai are right (and I currently think they are), then AI development may need to be paused for millions of years potentially, and it's unclear how long it will take unaugmented or only mildly augmented humans to reach longevity escape velocity.

So to a first approximation, the choice available to humans currently alive is something like:

- Option A: 10% chance utopia within our lifetime (if alignment turns out to be easy) and 90% human extinction

- Option B: ~100% chance death but then our descendants probably get to live in a utopia

For philosophy nerds with low time preference and altruistic tendencies (into which I classify many EA people and also myself), Option B may seem obvious. But I think many humans existing today would rather risk it and just try to build AGI now, rather than doing any AI pause, and to the extent that they say they prefer pause, I think they are being deceived by the marketing or acting under Caplanian Principle of Normality, or else they are somehow better philosophers than I expected they would be.

(Note: if you are so pessimistic about aligning AI without a pause that your probability on that is lower than the probability of unaugmented present-day humans reaching longevity escape velocity, then Option B does seem like a strictly better choice. But the older and more unhealthy you are, the less this applies to you personally.)

titotal @ 2023-10-12T22:07 (+6)

- Option A: 10% chance utopia within our lifetime (if alignment turns out to be easy) and 90% human extinction

Are you simplifying here, or do you actually believe that "utopia in our lifetime" or "extinction" are the only two possible outcomes given AGI? Do you assign a 0% chance that we survive AGI, but don't have a utopia in the next 80 years?

What if AGI stalls out at human level, or is incredibly expensive, or is buggy and unreliable like humans are? What if the technology required for utopia turns out to be ridiculously hard even for AGI, or substantially bottlenecked by available resources? What if technology alone can't create a utopia, and the extra tech just exacerbates existing conflicts? What if AGI access is restricted to world leaders, who use it for their own purposes?

What if we build an unaligned AGI, but catch it early and manage to defeat it in battle? What if early, shitty AGI screws up in a way that causes a worldwide ban on further AGI development? What if we build an AGI, but we keep it confined to a box and can only get limited functionality out of it? What if we build an aligned AGI, but people hate it so much that it voluntary shuts off? What if the AGI that gets built is aligned to the values of people with awful views, like religious fundamentalists? What if AGI wants nothing to do with us and flees the galaxy? What if [insert X thing I didn't think of here]?.

IMO, extinction and utopia are both unlikely outcomes. The bulk of the probability lies somewhere in the middle.

riceissa @ 2023-10-13T08:09 (+2)

I was indeed simplifying, and e.g. probably should have said "global catastrophe" instead of "human extinction" to cover cases like permanent totalitarian regimes. I think some of the scenarios you mention could happen, but also think a bunch of them are pretty unlikely, and also disagree with your conclusion that "The bulk of the probability lies somewhere in the middle". I might be up for discussing more specifics, but also I don't get the sense that disagreement here is a crux for either of us, so I'm also not sure how much value there would be in continuing down this thread.

William the Kiwi @ 2023-10-17T01:38 (+1)

I would agree that "utopia in our lifetime" or "extinction" seems like a false dichotomy. What makes you say that you predict the bulk of the probability lies somewhere in the middle?

Greg_Colbourn @ 2023-10-13T10:02 (+2)

How about an Option A.1: pause for a few years or a decade to give alignment a chance to catch up? At least stop at the red lights for a bit to check whether anyone is coming, even if you are speeding!

if you are so pessimistic about aligning AI without a pause that your probability on that is lower than the probability of unaugmented present-day humans reaching longevity escape velocity

I think this easily goes through, even for 1-10% p(doom|AGI), as it seems like ageing is basically already a solved problem or will be within a decade or so (see the video I linked to - David Sinclair; and there are many other people working in the space with promising research too).

Benjamin M. @ 2023-10-13T02:20 (+5)

I'm not an expert on most of the evidence in this post, but I'm extremely suspicious of the claim that GPT-4 represents AI that is "~ human level at language", unless you mean something by this that is very different from what most people would expect.

Technically, GPT-4 is superhuman at language because whatever task you are giving it is in English, and the median human's English proficiency is roughly nil. But a more commonsense interpretation of this statement is that a prompt-engineered AI and a trained human can do the task roughly as well.

What you link to shows the results of how GPT-4 performs on a bunch of different exams. This doesn't really show how language is used in the real world, especially since the exams very closely match past exams that were in the training data. It's good at some of them, but also extremely bad at others (AP English Literature and Codeforces in particular), which is an issue if you're making a claim that it's roughly human level.

Furthermore, language isn't just putting words together in the right order and with the right inflection. It also includes semantic information (what the actual meaning of the sentences is) and pragmatic information (is the language conveying what it is trying to convey, not just the literal meaning). I'm not sure whether pragmatics in particular would be relevant for AI risk, but the fact that anecdotally even GPT-4 is pretty bad at pragmatics prevents a literal interpretation of your statement.

In my opinion, the best evidence for GPT-4 not being human level at language is that, in the real world, GPT-4 is much cheaper than a human but consistently unable to outcompete humans. News organizations have a strong incentive to overhype GPT-caused automation, but the examples that they've found are mostly of people saying that either GPT-4 or GPT-3 (it's not always clear which) did their job much worse than them, but good enough for clients. Take https://www.washingtonpost.com/technology/2023/06/02/ai-taking-jobs/ as a typical story.

Exams aren't exactly the real world, but the popular example of GPT-4 doing well on exams is https://www.slowboring.com/p/chatgpt-goes-to-harvard. This both ignores citations (which is a very important part of college writing, and one that GPT-3 couldn't do whatsoever and which GPT-4 still is significantly below what I would expect from a human) and relies on the false belief that Harvard is a hard school to do well at (grade inflation!)

I still agree with two big takeaways of your post, that an AI pause would be good and that we don't necessarily need AGI for a good future, but that's more because it's robust to a lot of different beliefs about AI than because I agree with the evidence provided. Again, a lot of the evidence is stuff that I don't feel particularly knowledgeable about, I picked this claim because I've had to think about it before and because it just feels false from my experience using GPT-4.

Greg_Colbourn @ 2023-10-13T10:31 (+2)

the median human's English proficiency is roughly nil.

GPT-4 is also proficient at many other languages, so I don't think English is the appropriate benchmark! Is GPT-4 as good as the median human at language in general? I think yes. In fact it's probably quite a lot better.

anecdotally even GPT-4 is pretty bad at pragmatics

Can you link to examples? Most examples I've seen on X are people criticising chatGPT-3.5 (or other models) and then someone coming along showing chatGPT-4 getting it right!

GPT-4 or GPT-3 (it's not always clear which)

It's nearly always GPT-3 (or 3.5). We only need to be concerned about the best AI models, not the lower tiers! I've heard anecdotes, in real life, of people who are using GPT-4 to do parts of their jobs - e.g. writing long emails that their boss was impressed with (they didn't tell them it was chatGPT!)

false belief that Harvard is a hard school to do well at

Harvard is one of the best schools in the world. The average human is quite far from being smart enough to get in to it. I don't think saying this is helping the credibility of your argument! Seems a lot like goalpost moving.

I still agree with two big takeaways of your post, that an AI pause would be good and that we don't necessarily need AGI for a good future

Thanks, good to know :)

William the Kiwi @ 2023-10-17T01:52 (+5)

GPT4 is clearly above the median human when it comes to a range of exams. Do we have examples of GPT4's comparison to the median human in non-exam like conditions?

William the Kiwi @ 2023-10-12T15:48 (+5)

I agree with most of your conclusions in this post. I feel uncomfortable. I'll write more once I have processed some of my emotions and can think in a more clear manner.

Chris Leong @ 2023-10-13T01:03 (+5)

Hope everything is okay.

PS. I'm doing AI Safety movement building in Australia and New Zealand, so if you need someone to talk to, feel free to reach out.

William the Kiwi @ 2023-10-16T23:24 (+3)

Hi Chris thanks for reaching out. Obviously things with the world aren't ok, it seems insane that every country is staring down a massive national security risk and they haven't done much about it.

How is movement building going?

Greg_Colbourn @ 2023-10-13T08:51 (+4)

I know William is already aware, but for others who aren't, there are groups focused on getting a pause: PauseAI (Discord) and the AGI-Moratorium-HQ Slack are two of them. And there are now quite a lot of people on X with ⏸️ or ⏹️ in their name. I find that it makes me feel better being pro-active about doing something about it.

I spoke with William at length yesterday. The situation is dire, but I don't think it's impossible.

William the Kiwi @ 2023-10-16T23:26 (+1)

Yea, thanks for the talk Greg, it was informative.

Chris バルス @ 2024-02-17T11:07 (+3)

Strong upvote.

GPT-1 was released 2018. GPT-4 has shown sparks of AGI.

We have early evidence of self-improvement -- or conservatively -- positive feedback loops are evident.

Open AI intends to build ~AGI to automate alignment research. Sam Altman is attempting to raise $7T USD to build more GPUs.

Anthropic CEO estimates 2-3 years until AGI.

Meta has gone public about their goal of open-sourcing AGI.

Superalignment might even be impossible.

It seems to be difficult to defend a world against rouge AGIs, it seems difficult for aligned AIs to defend us.

Chris Leong @ 2023-10-12T11:43 (+3)

I'm skeptical about the tractability of making AGI development taboo within a very few years. It seems like this plan would require moderate timelines in order to be viable.

That said, I'm starting to wonder whether we should be trying to gain support for a pause in theory: specifically, people to agree that in an ideal world, we would pause AI development where we are now.

That could help open up the Overton window.

Odd anon @ 2023-10-19T06:35 (+5)

AGI development is already taboo outside of tech circles. Per the September poll by the AIPI, only 12% disagree that "Preventing AI from quickly reaching superhuman capabilities" should be an important AI policy goal. (56% strongly agree, 20% somewhat agree, 8% somewhat disagree, 4% strongly disagree, 12% not sure.) Despite the fact that world leaders are themselves influenced by tech circles' positions, leaders around the world are quite clear that they take the risk seriously.

The only reason AGI development hasn't been halted already is that the general public does not yet know that big tech is both trying to build AGI, and actually making real progress towards it.

Greg_Colbourn @ 2023-10-12T15:06 (+5)

The taboo only really needs to kick in on moderate timelines, so we're in luck :) On short timelines, only massive data centres and the leading AI labs need to be regulated.

Greg_Colbourn @ 2023-10-19T20:32 (+2)

I've now made post this into an X thread (with some slight edits and some condensing).

Robi Rahman @ 2023-10-17T01:38 (+2)

Upvoted your post because you made some good points, but I think your analogy between human cloning and AI training is totally wrong.

Take for example, human reproductive cloning. This is so morally abhorrent that it is not being practised anywhere in the world. There is no black market in need of a global police state to shut it down. AGI research could become equally uncool once the danger, and loss of sovereignty, it represents is sufficiently well appreciated.

There is no black market in human cloning, and no police state trying to stop it, because no one benefits very much from cloning. Cloning is just not that useful. Whereas if we stop corporate AI development for 40 years but computer hardware keeps improving, anyone can get rich by training an AGI on their gaming laptop. It would be like trying to confiscate all the drug imports in a world where everyone with a cell phone is a drug addict.

Greg_Colbourn @ 2023-10-17T10:32 (+2)

Thanks. I also address the "get rich" point though! People can't get rich from it AGI because they lose control of it (/the world ends) before they get rich. AGI is not that useful either, because it's uncontrollable and has negative externalities that will come back and swamp any hoped for benefits, even for the producer (i.e. x-risk).

Assumptions of wealth generation, economic abundance and public benefit from AGI are ungrounded without proof that they are even possible - and they aren’t (yet), given a lack of scalable-to-ASI solutions to alignment, misuse, and coordination. A stop isn’t taking away the ladder to heaven, for such a ladder does not exist, even in theory, as things stand.