Effective Altruism Foundation: Plans for 2019

By Jonas_ @ 2018-12-04T16:41 (+52)

Summary

- Research: We plan to continue our research in the areas of AI-related decision theory and bargaining, fail-safe measures, and macrostrategy.

- Research coordination: We plan to host a research workshop focused on preventing disvalue from AI, publish an updated research agenda, and continue our support and coaching of independent researchers and organizations.

- Grantmaking: We plan to grow our grantmaking capacity by adding a dedicated grantmaking researcher to our team.

- Fundraising for other charities: We will continue to fundraise several million dollars per year for effective charities, but expanding these activities will not be a priority for us next year.

- Handing off community building: We will transfer most of our community-building work in the German-speaking area to CEA, LEAN, and EA local groups.

- Fundraising target: We aim to raise $400,000 by the end of 2018. If you prioritize reducing s-risks, there is a strong case for supporting us. Make a donation.

Table of contents

- About the Effective Altruism Foundation (EAF)

- Plans for 2019

  - Research (Foundational Research Institute – FRI)

  - Research coordination

  - Grantmaking

  - Other activities

- Financials

- When does it make sense to support our work?

- Brief review of 2018

  - Organizational updates

  - Achievements

  - Mistakes

- We are interested in your feedback

About the Effective Altruism Foundation (EAF)

We conduct and coordinate research on how to do the most good in terms of reducing suffering, and support work that contributes towards this goal. Together with others in the effective altruism community, we want careful ethical reflection to guide the future of our civilization. We currently focus on efforts to reduce the worst risks of astronomical suffering (s-risks) from advanced artificial intelligence. (More about our mission and priorities.)

Plans for 2019

Research (Foundational Research Institute – FRI)

We plan to continue our research in the areas of AI-related decision theory and bargaining (e.g., implied decision theories of different AI architectures), fail-safe measures (e.g., surrogate goals), and macrostrategy. We would like to make progress in the following areas in particular:

- Preventing conflicts involving AI systems. We want to learn more about how decision theory research and AI governance can contribute to more cooperative AI outcomes.

- AI alignment approaches. We want to better understand various AI alignment approaches, how they affect the likelihood and scope of different failure modes, and what we can do to make them more robust.

We are looking to grow our research team in 2019, so we would be excited to hear from you if you think you might be a good fit!

Research coordination

Academic institutions, the AI industry, and other EA organizations frequently provide excellent environments for research in the areas mentioned above. Since EAF currently cannot provide such an environment, we aim to act as a global research network, promoting regular exchange and coordination among researchers whose work contributes to reducing s-risks.

- EAF research retreat: preventing disvalue from AI. As a first step, we will host an AI alignment research workshop with a focus on s-risks from AI and seek out feedback on our research from domain experts.

- Research agenda. We plan to publish a research agenda to facilitate more independent research.

- Advice. We will continue to offer support and advice, in particular with career planning, to individuals dedicated to contributing to our research priorities, whether at EAF or at other organizations or institutions working in high-priority areas.

- Operations support. We will experiment with providing operational support to individual researchers. This may be a particularly cost-effective way to increase research capacity.

The value of these activities depends to some extent on how many independent researchers are qualified and motivated to work on the questions where we would like to see progress. We will reassess after 6 and 12 months.

Grantmaking

The EAF Fund will support individuals (students, academics, and independent researchers) and organizations (research institutes and charities) to carry out research in the areas of decision theory and bargaining, AI alignment and fail-safe architectures, macrostrategy, and AI governance. To identify promising funding opportunities, we will add a dedicated grantmaking researcher to our research team, invest more research hours from existing staff, and try various funding mechanisms (e.g., requests for proposals, prizes, teaching buy-outs, and scholarships).

We plan to grow the amount of available funding by providing high-fidelity philanthropic advice, i.e., advice in formats that allow for sustained engagement (e.g., one-on-one advice, workshops), and by investing more time in making our research accessible to non-experts.

We are uncertain how many opportunities exist outside our own organization to enable the kind of work we would like to see. Depending on what we find, we will expand our efforts in this area further.

Other activities

- Fundraising for EA organizations. Through Raising for Effective Giving, we will continue to fundraise several million dollars per year for EA charities. We will also continue to enable German, Swiss, and Dutch donors to deduct donations to EA charities around the world from their taxes, enabling $600,000 – $1.4 million in tax savings for EA donors in 2019. However, expanding these activities will not be a priority for us next year.

- Community building. In 2018 we published a guide on how to run local groups and hosted a local group retreat. We now want to focus more on our core priorities, which is why we are in the process of transferring our community-building activities to CEA, LEAN, and EA local groups.

- 1% initiative. We will conclude our ballot initiative in Zurich, which calls for a percentage of the city’s budget to be allocated to effective global development charities. We expect a favorable outcome.

Financials

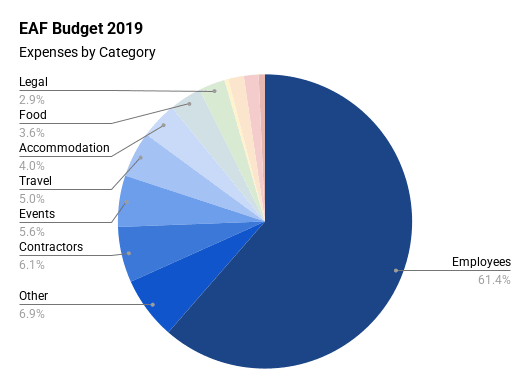

- Budget 2019: $925,000 (12 expected full-time equivalent employees)

- Current reserves: $1,400,000 (18 months)

- Room for more funding: $400,000 (to attain 24 months of reserves)

- Additional donations would allow us to further build up our grantmaking capacity, our in-house research team, and operations support for individual researchers.

- If reserves exceed 24 months at the end of a quarter, we will very strongly consider allocating the surplus to the EAF Fund.
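As a rough check on the figures above, the reserves can be expressed as months of runway. The sketch below assumes spending is spread evenly across the year (the 2019 budget divided by 12); that assumption and the helper name `months_of_runway` are illustrative, not EAF's actual financial model.

```python
# Hypothetical helper: express reserves as months of runway, ASSUMING
# spending is spread evenly over the year (annual budget / 12 per month).
def months_of_runway(reserves: float, annual_budget: float) -> float:
    monthly_spending = annual_budget / 12
    return reserves / monthly_spending


BUDGET_2019 = 925_000        # Budget 2019 (USD)
CURRENT_RESERVES = 1_400_000  # Current reserves (USD)
ROOM_FOR_FUNDING = 400_000    # Fundraising target (USD)

current = months_of_runway(CURRENT_RESERVES, BUDGET_2019)
after_target = months_of_runway(CURRENT_RESERVES + ROOM_FOR_FUNDING, BUDGET_2019)

print(f"Current reserves: {current:.1f} months")
print(f"Reserves after reaching the target: {after_target:.1f} months")
```

Under this even-spending assumption, current reserves come to about 18.2 months, and reaching the target brings them to about 23.4 months, so the 18- and 24-month figures above appear to be rounded.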

When does it make sense to support our work?

Our funding situation has improved a lot compared to previous years. For donors who are on the fence about which cause or organization to support, this is a reason to donate elsewhere this year. However, we rely on a very small number of donors for 80% of our funding, so we are looking to diversify our support base.

If you subscribe to some form of suffering-focused ethics and want to focus on ways to improve the long-term future, we think supporting our work is the best bet for achieving that, as we outline in our donation recommendations.

It may also make sense to support our work if (1) you think suffering risks are particularly neglected in EA given their expected tractability, or (2) you are unusually pessimistic about the quality of the future. We think (1) is the stronger reason.

Note: It is no longer possible to earmark donations for specific projects or purposes within EAF (e.g., REG or FRI). All donations will by default contribute to the entire body of work we have outlined in this post. We might make individual exceptions for large donations.

Would you like to support us? Make a donation.

Brief review of 2018

Organizational updates

- We hired Anni Leskelä to join our research team.

- We ran a hiring round for two positions, one of which we have already filled.

- Stefan Torges was named Co-Executive Director, alongside Jonas.

- We moved in with Benckiser Stiftung Zukunft, a major German foundation, in Berlin.

- We instituted an academic advisory board.

Achievements

- Notable research publications:

  - Kaj Sotala, Lukas Gloor: Superintelligence as a Cause or Cure for Risks of Astronomical Suffering (Informatica 41(4))

  - Caspar Oesterheld: Robust program equilibrium (Theory and Decision, 2018)

  - Tobias Baumann: Using Surrogate Goals to Deflect Threats (runner-up in the AI Alignment Prize)

  - Caspar Oesterheld: Approval-directed agency and the decision theory of Newcomb-like problems (runner-up in the AI Alignment Prize)

  - Lukas Gloor: Cause prioritization for downside-focused value systems (EA Forum)

- We laid the groundwork for important collaborative efforts with other EA organizations that will hopefully come to fruition in 2019.

- We ran more than 80 coaching sessions on cause prioritization and s-risk career choice. Our preliminary evaluation estimates that the resulting career plan changes are worth at least the equivalent of $400,000 in donations to EAF.

- We expect to raise more than $4,000,000 for EA causes and organizations, as we did in 2017, and we expect around 15% of these donations to go to long-term future causes.

- We launched the EAF Fund and made two initial grants.

- We streamlined and improved several internal processes (e.g., donation processing, grantmaking, financial projections, data protection), leading to significant gains in efficiency and accuracy and saving around 0.4 full-time equivalents per year.

Mistakes

- Research. We did not sufficiently prioritize consolidating internal research and publishing a research agenda. Doing so would likely have led to better coordination of both internal and external research. We plan to address these problems in 2019.

- Strategy. We realized that our previous fundraising approach was ill-suited to financing the opportunities we would now consider most impactful (e.g., individual scholars, research groups, fairly unknown organizations). However, we changed our strategy too slowly in response and invested too few resources in strategic planning. As a result, we allocated staff time suboptimally.

- Fundraising. We had several promising initial conversations with major philanthropists. However, with some exceptions, we have so far been unable to capitalize on these opportunities. While we do not think we made significant mistakes, it seems plausible that we could have taken better care of these relationships.

- Organizational health, diversity, and inclusion. We did not sufficiently prioritize diversity and professional development. We have now appointed Alfredo Parra as Equal Opportunity & Organizational Health Officer and implemented best practices both in our hiring round and across the organization. We plan to make further progress in these domains in 2019.

- Operations. We were too slow to address a lack of operations staff capacity, which put excessive pressure on existing staff.

We are interested in your feedback

If you have any questions or comments, we look forward to hearing from you; you can also send us your critical feedback anonymously. We greatly appreciate any critical thoughts that could help us improve our work.

Comment @ 2018-12-05T06:24 (+3)

Thanks for a great writeup, Jonas! I really liked the clear layout of the post and the link to provide anonymous feedback.

Questions I had after reading the post:

1. It's clear that EAF does some unique, hard-to-replace work (REG, the Zurich initiative). However, when it comes to EAF's work around research (the planned agenda, the support for researchers), what sets it apart from other research organizations with a focus on the long-term future? What does EAF do in this area that no one else does? (I'd guess it's a combination of geographic location and philosophical focus, but I have a hard time clearly distinguishing the differing priorities and practices of large research orgs.)

2. Regarding your "fundraising" mistakes: Did you learn any lessons in the course of speaking with philanthropists that you'd be willing to share? Was there any systematic difference between conversations that were more vs. less successful?

3. It was good to see EAF research performing well in the Alignment Forum competition. Do you have any other evidence you can share showing how EAF's work has been useful in making progress on core problems, or integrating into the overall X-risk research ecosystem?

(For someone looking to fund research, it can be really hard to tell which organizations are most reliably producing useful work, since one paper might be much more helpful/influential than another in ways that won't be clear for a long time. I don't know if there's any way to demonstrate research quality to non-technical people, and I wouldn't be surprised if that problem was essentially impossible.)

Reply @ 2018-12-05T10:08 (+8)

Thanks for the questions!

> 1. It's clear that EAF does some unique, hard-to-replace work (REG, the Zurich initiative). However, when it comes to EAF's work around research (the planned agenda, the support for researchers), what sets it apart from other research organizations with a focus on the long-term future? What does EAF do in this area that no one else does? (I'd guess it's a combination of geographic location and philosophical focus, but I have a hard time clearly distinguishing the differing priorities and practices of large research orgs.)

I'd say it's just the philosophical focus, not the geographic location. In practice, this comes down to a particular focus on conflict involving AI systems. For more background, see Cause prioritization for downside-focused value systems. Our research agenda will hopefully help make this easier to understand as well.

> 2. Regarding your "fundraising" mistakes: Did you learn any lessons in the course of speaking with philanthropists that you'd be willing to share? Was there any systematic difference between conversations that were more vs. less successful?

If we could go back, we'd define the relationships more clearly from the beginning by outlining a roadmap with regular check-ins. We'd also focus less on pitching EA and more on explaining how they could use EA to solve their specific problems.

> 3. It was good to see EAF research performing well in the Alignment Forum competition. Do you have any other evidence you can share showing how EAF's work has been useful in making progress on core problems, or integrating into the overall X-risk research ecosystem?
>
> (For someone looking to fund research, it can be really hard to tell which organizations are most reliably producing useful work, since one paper might be much more helpful/influential than another in ways that won't be clear for a long time. I don't know if there's any way to demonstrate research quality to non-technical people, and I wouldn't be surprised if that problem was essentially impossible.)

In terms of publicly verifiable evidence, Max Daniel's talk on s-risks was received positively on LessWrong, and GPI quoted several of our publications in its research agenda. In-person feedback from researchers at other x-risk organizations was usually positive as well.

In terms of critical feedback, others pointed out that the presentation of our research is often too long and broad, and might trigger absurdity heuristics. We've been working to improve our research along these lines, but it'll take some time for this to become publicly visible.